OpenDataScience and Mail.Ru Group open machine learning course: materials and a new launch

Recently, OpenDataScience and Mail.Ru Group ran an open machine learning course. Our previous announcement said a lot about the course. In this article, we share the course materials and announce a new launch.

UPD: the course is now in English under the brand name mlcourse.ai, with articles on Medium and materials on Kaggle (Dataset) and GitHub.

For those who can't wait: the new launch of the course is on February 5. Registration is not required, but to make sure we remember you and send you a personal invitation, fill out the form. The course consists of a series of articles on Habré (Initial data analysis with Pandas is the first of them), supplemented by lectures on the YouTube channel, reproducible materials (Jupyter notebooks in the course's GitHub repository), homework, Kaggle Inclass competitions, tutorials and individual data analysis projects. The main news will be posted in the VKontakte group, and day-to-day course life happens in the OpenDataScience Slack (join) in the #mlcourse_ai channel.

Outline of the article

- How our course differs from others

- Course Materials

- Read more about the new launch

How our course differs from others

1. Not for beginners

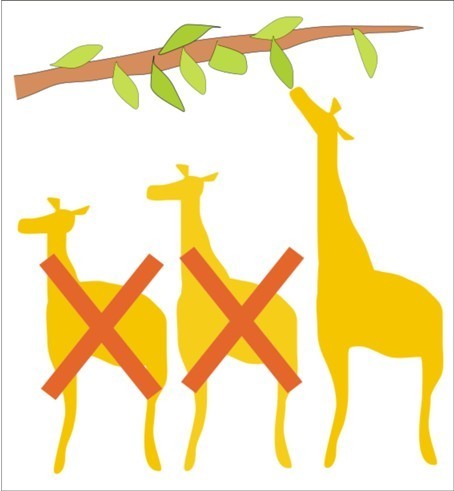

You will often hear that nothing is required of you and that in a couple of months you will become a data analysis expert. I still remember Andrew Ng's phrase from his introductory "Machine Learning" course: "you don't have to know what a derivative is, and now you'll understand how optimization algorithms work in machine learning". Or "you are already almost an expert in data analysis", and so on. With all due respect to the professor, this is aggressive marketing and sensationalism. You won't understand optimization without knowing derivatives and the basics of calculus and linear algebra! Most likely you will not even become a mid-level Data Scientist after completing a couple of courses (including ours). It will not be easy, and more than half of you will drop out in about 3-4 weeks. If you are a wannabe who is not ready to immerse yourself in mathematics and programming, to see the beauty of machine learning in formulas and to get results by typing tens and hundreds of lines of code, this course is not for you. But we hope you are here all the same.

Given all this, we set the entry requirements: knowledge of higher mathematics at a basic (but solid) level and a grasp of Python basics. How to prepare if you don't have these yet is described in detail in the VKontakte group and in the section just below. In principle, you can take the course without mathematics, but it will be much harder. Of course, how much math a Data Scientist needs to know is a holy war, but here we side with Andrej Karpathy: "Yes you should understand backprop". Without mathematics at all, Data Science is a bit like bubble sort: it solves the problem, but it can be done better, faster and smarter. And without mathematics you will certainly never reach the state of the art, which is very exciting to follow.

Maths

- If you are in a hurry, you can go through the lecture notes from the Yandex and MIPT specialization on Coursera (shared with permission).

- If you want a thorough approach, a single link to MIT OpenCourseWare is really all you need. In Russian, a great source is the wiki page of the HSE Faculty of Computer Science courses. But I would take the MIPT second-year curriculum and work through the main problem books: minimal theory and lots of practice.

- And of course, nothing replaces good books (the Yandex School of Data Analysis curriculum is worth mentioning here as well):

- Calculus: Kudryavtsev;

- Linear algebra: Kostrikin;

- Optimization: Boyd (in English);

- Probability theory and mathematical statistics: Kibzun.

Python

- The quick option: browser-based tutorials such as Codecademy, Datacamp and Dataquest; I can also point to my own repository here.

- The thorough option: for example, the Mail.Ru course on Coursera or MIT's course "Introduction to Computer Science and Programming Using Python".

- The advanced level: the course from the St. Petersburg Computer Science Center.

2. Theory and practice

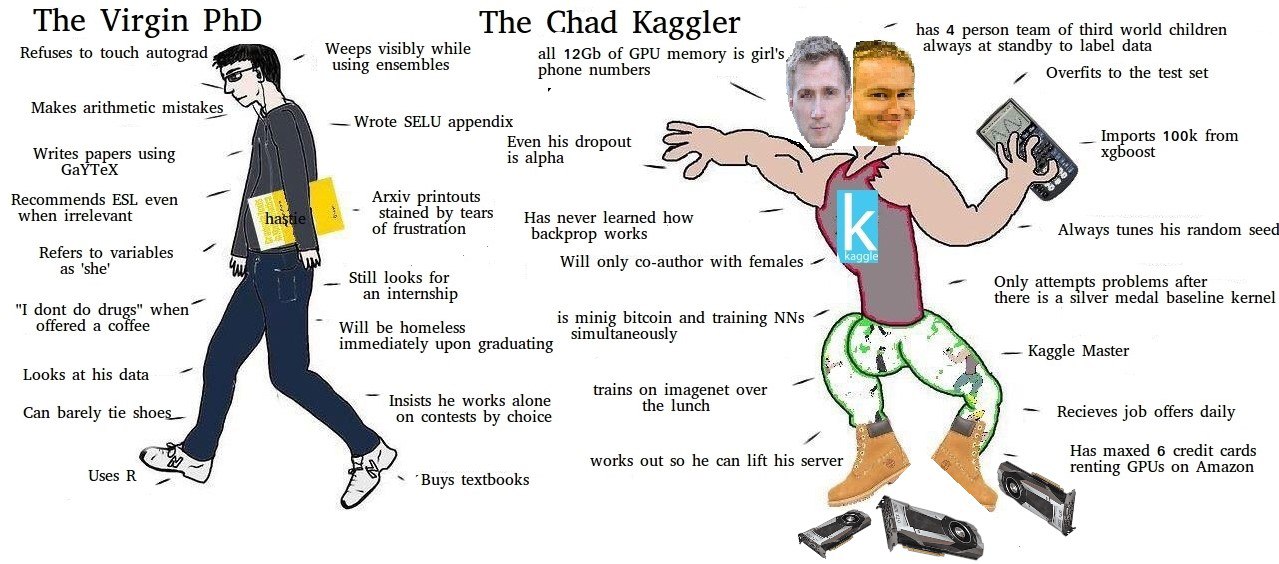

There are a lot of machine learning courses, and some are really great (like the "Machine Learning and Data Analysis" specialization), but many fall into one of two extremes: either too much theory (PhD guy) or, conversely, practice without understanding the basics (data monkey).

We aim for the optimal balance: there is a lot of theory in the articles on Habré (the 4th article, on linear models, is a good example), which we try to present as clearly as possible, and in the lectures we present it in an even more accessible way. And there is a sea of practice: homework, 4 Kaggle competitions, projects... and that's not all.

3. Live communication

What most courses lack is live communication. Sometimes a beginner needs just one short piece of advice to get unstuck and save hours, or even tens of hours. Coursera forums usually die out at some point. What makes our course unique is active communication and an atmosphere of mutual support. During the course, the OpenDataScience Slack will help with any question: the chat lives and thrives, it has its own humor, people troll each other... And most importantly, the authors of the homework and articles are in the same chat and always ready to help.

4. Kaggle in action

(Image from the VKontakte public page "Memes about machine learning for adult men".)

Kaggle competitions are a great way to quickly get hands-on practice in data analysis. People usually start taking part in them after a basic machine learning course (typically Andrew Ng's; he is certainly charismatic and a great speaker, but the course is quite outdated by now). During our course, you will be invited to take part in 4 competitions: 2 of them are part of the homework, where you just need your model to reach a certain score, and the other 2 are full-fledged competitions where you need to get creative (engineer features, choose models) and beat your fellow participants.

5. Free

Last but not least, an important factor. Now, with machine learning spreading everywhere, you will find many courses offering to educate you for a hefty fee. Here everything is free and, without false modesty, at a very decent level.

Course Materials

Here we briefly describe the 10 topics of the course: what each one covers, why a basic machine learning course cannot do without it, and what is new.

Topic 1. Initial data analysis with Pandas. Article on Habré

You want to jump straight into machine learning and see the math in action. But 70-80% of the time on a real project is spent fussing with data, and Pandas is very good at this; I use it at work almost every day. This article describes the basic Pandas methods for primary data analysis. Then we analyze a dataset on customer churn at a telecom operator and try to "predict" churn without any training, relying only on common sense. You should never underestimate this approach.
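A minimal sketch of the kind of primary analysis described above, on a tiny hypothetical churn table (the column names and values are made up for illustration and are not the course dataset): inspect the data, compute churn rates by group, and apply a common-sense rule with no training at all.

```python
import pandas as pd

# Hypothetical toy churn table; columns and values are illustrative only.
df = pd.DataFrame({
    "total_day_minutes": [120.5, 300.2, 180.0, 310.7, 95.3, 280.9],
    "customer_service_calls": [1, 5, 2, 4, 0, 6],
    "international_plan": ["no", "yes", "no", "yes", "no", "no"],
    "churn": [False, True, False, False, False, True],
})

# Basic inspection: size and summary statistics of numeric columns.
print(df.shape)
print(df.describe())

# Overall churn rate (the mean of a boolean column is the share of True).
print(df["churn"].mean())

# Churn rate broken down by a categorical feature.
print(df.groupby("international_plan")["churn"].mean())

# Common-sense rule, no training: customers who call support often are likely to leave.
df["predicted_churn"] = df["customer_service_calls"] > 3
accuracy = (df["predicted_churn"] == df["churn"]).mean()
print(f"rule accuracy: {accuracy:.2f}")
```

On real data, the threshold in such a rule would be chosen by looking at `groupby` or `pd.crosstab` output rather than guessed.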

Topic 2. Visual data analysis with Python. Article on Habré

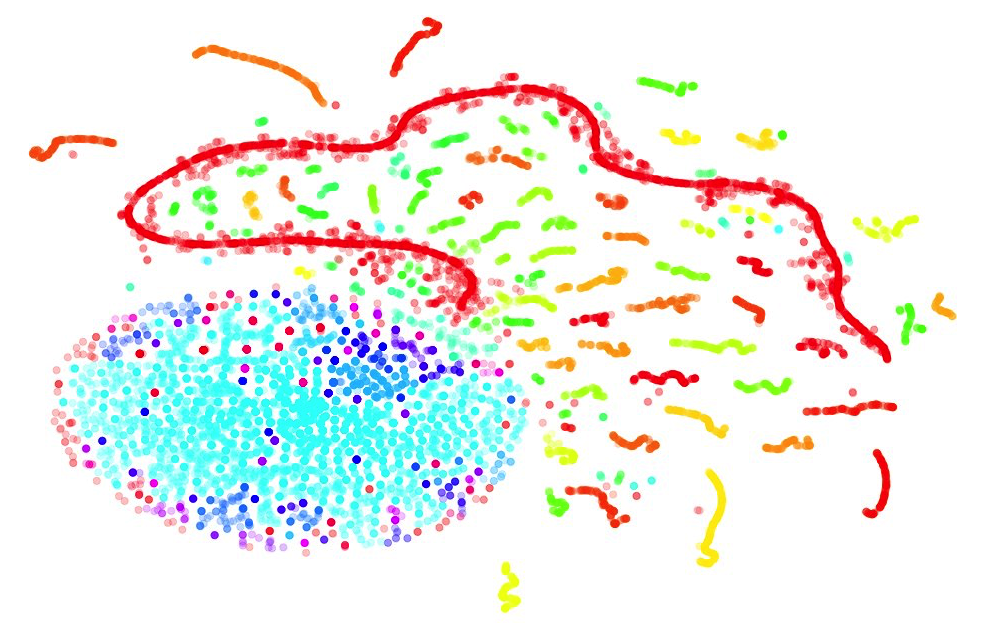

It is hard to overestimate the role of visual data analysis: this is how new features are created and how patterns and insights are found in the data. K.V. Vorontsov gives an example: it was thanks to visualization that researchers noticed that in boosting the classes keep "moving apart" as trees are added, and this fact was later proved theoretically. In the lecture, we will look at the main types of plots usually built to analyze features. We will also discuss how to peek into multidimensional space using the t-SNE algorithm, which sometimes produces pictures resembling Christmas tree ornaments, like the one below.

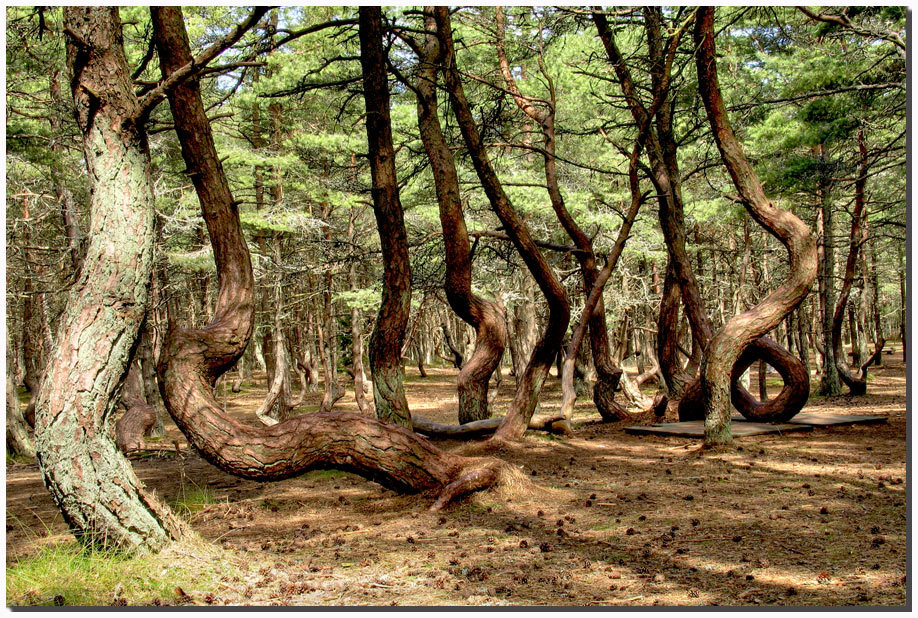
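For a rough idea of what a t-SNE embedding involves in code, here is a sketch using scikit-learn's `TSNE` on a subset of the bundled handwritten digits dataset. The dataset, the subset size and the `perplexity` value are assumptions for illustration, not necessarily what the lecture uses.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.manifold import TSNE

# Small subset of the bundled digits dataset (64 pixel features per image).
digits = load_digits()
X, y = digits.data[:300], digits.target[:300]

# Embed the 64-dimensional points into 2D; random_state makes the run reproducible.
tsne = TSNE(n_components=2, perplexity=30, random_state=17)
X_2d = tsne.fit_transform(X)

print(X_2d.shape)
print(np.isfinite(X_2d).all())

# To plot (requires matplotlib):
# import matplotlib.pyplot as plt
# plt.scatter(X_2d[:, 0], X_2d[:, 1], c=y, cmap="tab10", s=10)
# plt.show()
```

Coloring the points by `y` shows whether the digit classes form separate islands; t-SNE only gives a picture, so the distances between clusters should not be read too literally.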

Topic 3. Classification, decision trees and the nearest neighbors method. Article on Habré

Here we finally get to machine learning proper, starting with two simple approaches to classification. Again, in a real project you should start with the simplest approaches, and decision trees and the nearest neighbors method (along with linear models, the next topic) are what you should try first after heuristics. We will touch upon the important issue of model quality assessment and cross-validation, and discuss the pros and cons of trees and nearest neighbors in detail. The article is long, but decision trees in particular deserve attention: random forests and boosting, the algorithms you will most likely use in practice, are built on top of them.
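To make the cross-validation point concrete, here is a sketch comparing a decision tree and k nearest neighbors with 5-fold cross-validation in scikit-learn. The dataset (the bundled breast cancer data) and the hyperparameters (`max_depth=5`, `n_neighbors=10`) are arbitrary choices for this example.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)
# Stratified folds keep the class proportions similar in every fold.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=17)

models = {
    # A depth limit keeps the tree from memorizing the training set.
    "tree": DecisionTreeClassifier(max_depth=5, random_state=17),
    # kNN is distance-based, so features are standardized first.
    "knn": make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=10)),
}

scores = {}
for name, model in models.items():
    fold_scores = cross_val_score(model, X, y, cv=cv, scoring="accuracy")
    scores[name] = fold_scores.mean()
    print(f"{name}: mean CV accuracy {fold_scores.mean():.3f} (+/- {fold_scores.std():.3f})")
```

Putting the scaler inside the pipeline matters: it is refit on each training fold, so no information leaks from the validation fold into the model.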

Topic 4. Linear models for classification and regression. Article on Habré

This article is the size of a small brochure, and for good reason: linear models are the most widely used forecasting approach in practice. The article is like a miniature version of our course: a lot of theory and a lot of practice. We will discuss the theoretical background of the least squares method and logistic regression, as well as the practical advantages of linear models. There will be no excessive theorizing, though: the machine learning approach to linear models differs from the statistical and econometric one. In practice, we will apply logistic regression to a very real task: identifying a user by the sequence of websites they visit. After the fourth homework many people drop out, but if you make it through, you will already have a very good idea of which algorithms are used in production systems.
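The two models discussed above can be sketched in plain NumPy on synthetic data: least squares solved directly with `np.linalg.lstsq`, and logistic regression fitted by batch gradient descent on the mean log-loss. The data, learning rate and iteration count are assumptions for this illustration; in practice you would use a library implementation with regularization.

```python
import numpy as np

rng = np.random.default_rng(17)

# --- Least squares: minimize ||Xw - y||^2 ---
n = 200
X = np.column_stack([np.ones(n), rng.normal(size=(n, 2))])  # first column is the intercept
true_w = np.array([1.0, 2.0, -3.0])
y = X @ true_w + rng.normal(scale=0.1, size=n)
w_ols, *_ = np.linalg.lstsq(X, y, rcond=None)
print("OLS weights:", np.round(w_ols, 2))

# --- Logistic regression: minimize the mean log-loss by gradient descent ---
def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

labels = (X @ true_w + rng.normal(scale=0.5, size=n) > 0).astype(float)
w = np.zeros(X.shape[1])
lr = 0.5
for _ in range(2000):
    p = sigmoid(X @ w)
    grad = X.T @ (p - labels) / n  # gradient of the mean log-loss w.r.t. w
    w -= lr * grad

accuracy = ((sigmoid(X @ w) > 0.5) == labels).mean()
print(f"logistic regression train accuracy: {accuracy:.3f}")
```

The gradient `X.T @ (p - labels) / n` has the same form as the least squares gradient with predictions passed through the sigmoid, which is one reason the two models are usually taught together.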

Topic 5. Ensembles: bagging, random forest. Article on Habré

Here, too, both the theory and the practice are interesting. We will discuss why the "wisdom of the crowd" works for machine learning models and why an ensemble of many models works better than a single one, even the best. In practice, we will study a random forest (an ensemble of many decision trees) closely: it is worth trying when you don't know which algorithm to choose. We will discuss in detail the many advantages of random forests and where they apply. And, as always, there are drawbacks: in some situations linear models still work better and faster.
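A sketch of the "wisdom of the crowd" idea: hand-rolled bagging (bootstrap samples plus majority vote) over scikit-learn decision trees, compared with a single tree and with `RandomForestClassifier`, which additionally picks a random subset of features at each split. The synthetic dataset and ensemble sizes are illustrative assumptions.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=1000, n_features=20, n_informative=5, random_state=17)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3, random_state=17)
rng = np.random.default_rng(17)

# Hand-rolled bagging: each tree is trained on a bootstrap sample (drawn with replacement).
votes = []
for i in range(50):
    idx = rng.integers(0, len(X_tr), size=len(X_tr))
    tree = DecisionTreeClassifier(random_state=i).fit(X_tr[idx], y_tr[idx])
    votes.append(tree.predict(X_te))
# Majority vote for binary 0/1 labels (an exact tie goes to class 0).
bagged_pred = (np.mean(votes, axis=0) > 0.5).astype(int)

single = DecisionTreeClassifier(random_state=17).fit(X_tr, y_tr)
forest = RandomForestClassifier(n_estimators=200, random_state=17).fit(X_tr, y_tr)

acc_single = (single.predict(X_te) == y_te).mean()
acc_bagged = (bagged_pred == y_te).mean()
acc_forest = forest.score(X_te, y_te)
print(f"single tree: {acc_single:.3f}, bagging: {acc_bagged:.3f}, random forest: {acc_forest:.3f}")
```

Averaging many high-variance trees reduces variance; the random feature subsets in a forest further decorrelate the trees, which is why it usually adds a bit on top of plain bagging.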

Topic 6. Feature engineering and feature selection. Applications to text, image and geodata processing. Article on Habré, lecture on regression and regularization.

This is the only place where the plan of the articles and the lectures diverges slightly: the fourth topic, linear models, is too big for one lecture. The article describes the main approaches to extracting, transforming and constructing features for machine learning models. Feature engineering is, in general, the most creative part of a Data Scientist's work. And of course, it is important to know how to work with different kinds of data (texts, images, geodata), not just with a ready-made Pandas DataFrame.
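As a small illustration of turning raw data into features, here is a standard-library sketch of two common transformations: a great-circle (haversine) distance computed from coordinates, and bag-of-words counts from text. The function names and example coordinates are our own, not from the article.

```python
import math
import re
from collections import Counter


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two (lat, lon) points, in kilometres."""
    r = 6371.0  # mean Earth radius, km
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def bag_of_words(text):
    """Lowercase the text, split it into word tokens and count occurrences."""
    return Counter(re.findall(r"\w+", text.lower()))


# Geodata: raw coordinates -> one numeric feature (distance to a reference point).
moscow_center = (55.7558, 37.6173)
spb_center = (59.9343, 30.3351)
dist = haversine_km(*moscow_center, *spb_center)
print(f"distance feature: {dist:.0f} km")

# Text: raw string -> sparse word-count features.
print(bag_of_words("Machine learning is fun; learning never stops"))
```

Raw latitude and longitude are rarely useful to a linear model as-is, while a distance to a meaningful point often is; the same logic applies to word counts versus raw strings.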

In the lecture, we will return to linear models and discuss the main technique for controlling the complexity of ML models: regularization. The "Deep Learning" book even cites a well-known researcher (I am too lazy to dig up the reference) who claims that "all of machine learning is essentially regularization." This is, of course, an exaggeration, but in practice, for models to work well they have to be tuned, which means using regularization properly.
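Regularization as complexity control can be seen directly in ridge regression (an L2 penalty on the weights). The sketch below uses synthetic data and, for simplicity, no intercept (an assumption); it solves the closed form `(X^T X + alpha * I)^(-1) X^T y` and shows the norm of the weight vector shrinking as `alpha` grows.

```python
import numpy as np

rng = np.random.default_rng(17)
n, d = 50, 10
X = rng.normal(size=(n, d))
# Only the first two features matter; the rest are noise for the model to ignore.
y = X[:, 0] - 2 * X[:, 1] + rng.normal(scale=0.5, size=n)


def ridge_weights(X, y, alpha):
    """Closed-form L2-regularized least squares: solve (X^T X + alpha*I) w = X^T y."""
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(d), X.T @ y)


alphas = [0.0, 1.0, 10.0, 100.0, 1000.0]
norms = [np.linalg.norm(ridge_weights(X, y, a)) for a in alphas]
for a, nrm in zip(alphas, norms):
    print(f"alpha={a:>7}: ||w|| = {nrm:.3f}")
```

`alpha=0` gives ordinary least squares; larger values pull all weights toward zero, trading a little bias for lower variance. In practice `alpha` is chosen by cross-validation.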

Topic 7. Unsupervised learning: PCA, clustering. Article on Habré

Here we turn to the vast topic of unsupervised learning: the setting where there is data but no target variable to predict. Unlabeled data like this is a dime a dozen, and we must be able to make use of it. We will discuss only 2 types of tasks: clustering and dimensionality reduction. In your homework, you will analyze data from the accelerometers and gyroscopes of mobile phones and try to cluster the phone owners based on it, identifying types of physical activity.
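Both unsupervised tasks mentioned above fit in a short NumPy sketch: PCA via the SVD of centered data, followed by plain k-means (Lloyd's algorithm) with farthest-first initialization in the reduced space. The two synthetic "activity" blobs are a stand-in for the accelerometer data, which we do not reproduce here.

```python
import numpy as np

rng = np.random.default_rng(17)
# Two hypothetical "activity" groups in 5D, well separated along every axis.
X = np.vstack([rng.normal(0.0, 1.0, size=(100, 5)),
               rng.normal(6.0, 1.0, size=(100, 5))])

# PCA via SVD of the centered data matrix.
Xc = X - X.mean(axis=0)
U, S, Vt = np.linalg.svd(Xc, full_matrices=False)
explained_ratio = S**2 / np.sum(S**2)
X_pca = Xc @ Vt[:2].T  # project onto the first two principal components
print("explained variance ratio:", np.round(explained_ratio, 3))


def kmeans(X, k, n_iter=100, seed=0):
    """Lloyd's algorithm: assign points to the nearest centroid, then recompute centroids."""
    rng = np.random.default_rng(seed)
    centroids = [X[rng.integers(len(X))]]
    for _ in range(1, k):
        # Farthest-first init: the next centroid is the point farthest from existing ones.
        dist_to_nearest = np.min([np.linalg.norm(X - c, axis=1) for c in centroids], axis=0)
        centroids.append(X[dist_to_nearest.argmax()])
    centroids = np.array(centroids)
    for _ in range(n_iter):
        dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Keep the old centroid if a cluster ends up empty.
        new_centroids = np.array([X[labels == j].mean(axis=0) if np.any(labels == j)
                                  else centroids[j] for j in range(k)])
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return labels, centroids


labels, centroids = kmeans(X_pca, k=2)
print("cluster sizes:", np.bincount(labels))
```

Here the first principal component alone carries most of the variance, because the two groups differ along it; on real sensor data the picture is much less clean, and the number of clusters has to be chosen rather than known in advance.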

Topic 8. Learning from gigabytes of data with Vowpal Wabbit. Article on Habré

The theory here is an analysis of stochastic gradient descent: this optimization method is what made it possible to successfully train both neural networks and linear models on large training sets. We will also discuss what to do when there are too many features (the trick of hashing feature values) and move on to Vowpal Wabbit, a tool that can train a model on gigabytes of data in a matter of minutes, sometimes with quite acceptable quality. We will look at applications to various tasks, such as short text classification and categorizing questions on StackOverflow. For now, the English translation of this particular article (as a Kaggle Kernel) serves as an example of how we will present material in English on Medium.
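The hashing trick and online SGD, the two ideas behind Vowpal Wabbit, can be sketched in pure Python. This is not Vowpal Wabbit itself or its API: tokens are hashed into a fixed number of buckets with `zlib.crc32` (a stable hash, unlike Python's salted `hash`), and a logistic regression without a bias term is updated one example at a time. The bucket count, learning rate and the tiny question-tagging data set are hypothetical.

```python
import math
import zlib

N_BUCKETS = 2 ** 18  # size of the hashed feature space (arbitrary for this sketch)


def hashed_features(text):
    """Hashing trick: map each token straight to a bucket index, no vocabulary needed."""
    return [zlib.crc32(tok.encode("utf-8")) % N_BUCKETS for tok in text.lower().split()]


def predict_proba(weights, idx):
    """Sigmoid of the sum of weights of the active buckets (sparse dot product)."""
    z = sum(weights.get(i, 0.0) for i in idx)
    return 1.0 / (1.0 + math.exp(-z))


def sgd_logreg(examples, lr=0.5, epochs=10):
    """Online logistic regression: one gradient step per example, sparse weights in a dict."""
    weights = {}
    for _ in range(epochs):
        for text, label in examples:
            idx = hashed_features(text)
            grad = predict_proba(weights, idx) - label  # d(log-loss)/dz
            for i in idx:
                weights[i] = weights.get(i, 0.0) - lr * grad
    return weights


# Hypothetical data: 1 = pandas question, 0 = C question.
train = [
    ("how to merge two pandas dataframes", 1),
    ("pandas groupby mean of column", 1),
    ("segfault in c pointer arithmetic", 0),
    ("c malloc returns null pointer", 0),
]
w = sgd_logreg(train)
p_pos = predict_proba(w, hashed_features("pandas dataframe groupby"))
p_neg = predict_proba(w, hashed_features("null pointer in c"))
print(f"P(pandas | 'pandas dataframe groupby') = {p_pos:.3f}")
print(f"P(pandas | 'null pointer in c') = {p_neg:.3f}")
```

Memory stays bounded by the number of buckets no matter how many distinct tokens the stream contains, at the cost of occasional collisions; and since each example is seen once per pass, the data never has to fit in RAM.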

Topic 9. Time series analysis using Python. Article on Habré

Here we discuss various methods of working with time series: which data preparation steps models require and how to obtain short-term and long-term forecasts. We will walk through various types of models, from simple moving averages to gradient boosting. We will also look at ways to detect anomalies in time series and discuss the advantages and disadvantages of these methods.
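Here is a sketch of the simplest tools from this topic on a synthetic hourly series: a moving average used as a naive forecast, and a band around the rolling mean that flags points more than three standard deviations away. The series, the 24-hour window and the 3-sigma threshold are assumptions for illustration.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(17)
# Hypothetical hourly series: daily seasonality plus noise, with one injected spike.
t = np.arange(24 * 14)
values = 100 + 10 * np.sin(2 * np.pi * t / 24) + rng.normal(scale=2.0, size=t.size)
values[200] += 40  # the anomaly we want to catch
series = pd.Series(values, index=pd.date_range("2018-02-05", periods=t.size, freq="60min"))

window = 24
rolling_mean = series.rolling(window).mean()
# Naive forecast: the next value equals the mean of the last `window` observations.
forecast_next = rolling_mean.iloc[-1]

# Anomaly band: residuals from the rolling mean beyond 3 standard deviations.
residuals = series - rolling_mean
band = 3 * residuals.std()  # NaNs from the first window are skipped by std()
anomalies = series[residuals.abs() > band]

print(f"next-hour forecast: {forecast_next:.1f}")
print(anomalies)
```

With a window equal to the seasonal period, the rolling mean averages out the daily cycle, but the residuals still contain it, which is why the band is wide; a model that accounts for seasonality would give a tighter band and catch smaller anomalies.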

Topic 10. Gradient boosting. Article on Habré

And of course, there is no getting around gradient boosting: it is Matrixnet (the Yandex search engine), Catboost (the new generation of boosting at Yandex), and the Mail.Ru search engine. Boosting handles all three basic supervised learning tasks: classification, regression and ranking. I am tempted to call it the best algorithm overall, and that is close to the truth, but there is no single best algorithm. Still, if you do not have too much data (it fits into RAM), not too many features (up to several thousand), and the features are heterogeneous (categorical, quantitative, binary, etc.), then, as the experience of Kaggle competitions shows, gradient boosting will most likely perform best on your task. It is no accident that so many great implementations have appeared: Xgboost, LightGBM, Catboost, H2O...

Again, we will not limit ourselves to a "how to tune XGBoost" manual: we will study the theory of boosting in detail and then apply it in practice, and in the lecture we will get as far as Catboost. The task here will be to beat the baseline in a competition, which gives a good idea of the methods that work for many practical problems.
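The core of gradient boosting for squared loss fits in a few lines: start from a constant, then repeatedly fit a shallow tree to the residuals (the negative gradient of the loss) and add it with a shrinkage factor. This sketch uses scikit-learn's `DecisionTreeRegressor` as the base learner on synthetic data; it is a teaching illustration, not how Xgboost, LightGBM or Catboost are implemented internally.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

rng = np.random.default_rng(17)
X = rng.uniform(-3, 3, size=(300, 1))
y = np.sin(X[:, 0]) + rng.normal(scale=0.1, size=300)


def fit_gradient_boosting(X, y, n_rounds=100, learning_rate=0.1, max_depth=2):
    """Squared-loss boosting: each tree fits the current residuals y - F,
    which are the negative gradient of 0.5 * (y - F)^2 with respect to F."""
    f0 = y.mean()  # the best constant prediction under squared loss
    pred = np.full(len(y), f0)
    trees, train_mse = [], []
    for _ in range(n_rounds):
        residuals = y - pred
        tree = DecisionTreeRegressor(max_depth=max_depth, random_state=0).fit(X, residuals)
        pred += learning_rate * tree.predict(X)
        trees.append(tree)
        train_mse.append(np.mean((y - pred) ** 2))
    return f0, trees, train_mse


def predict(f0, trees, X, learning_rate=0.1):
    """Sum of the initial constant and all shrunken tree predictions."""
    return f0 + learning_rate * sum(tree.predict(X) for tree in trees)


f0, trees, train_mse = fit_gradient_boosting(X, y)
print(f"train MSE after 1 round: {train_mse[0]:.4f}, after {len(trees)}: {train_mse[-1]:.4f}")
```

The small learning rate means each tree corrects only part of the remaining error, so more rounds are needed but the ensemble generalizes better; other losses (log-loss for classification, ranking losses) change only what the trees are fitted to.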

Read more about the new launch

The course starts on February 5, 2018. It will include:

- live lectures at the Mail.Ru Group Moscow office on Mondays starting February 5, 19:00-22:00. The lectures will cover the same material as the video recordings on YouTube, but with comments, additions and improvements;

- the same articles on Habr (here is the first one). The articles will announce the current homework and its deadlines, and this information will be duplicated in the VKontakte group and in the #mlcourse_ai channel of the OpenDataScience Slack;

- competitions, projects, tutorials and other activities, described in this article and in the course repository;

- weekly articles in English on Medium, similar to this Kaggle Kernel about Vowpal Wabbit;

- from April 23 to July 15, we plan to go through the Stanford cs231n course on neural networks together (for details, see the pinned items in the #class_cs231n channel of the ODS Slack). This will be the second run; we are going through the first one right now. The course is magnificent, and the homework is difficult, interesting and very useful.

How to join the course

Formal registration is not required. Just do the homework and take part in the competitions, and we will include you in the rating. Still, please fill out this survey: the e-mail you leave will be your ID during the course, and we will use it to remind you about the start closer to the date.

Discussion platforms

- the #mlcourse_ai channel in the OpenDataScience Slack. This is where most of the communication happens, and you can ask any question. The big advantage is that the authors of the articles and homework are in this channel too and are ready to answer and help. But there is a lot of off-topic chatter, so check the pinned items before asking a question;

- the VKontakte group. Its wall will be a convenient place for official announcements.

Good luck! Finally, I want to say that you will succeed; the main thing is not to quit! You probably just skimmed over that "do not quit" without even noticing it. But think about it: this is the most important thing.