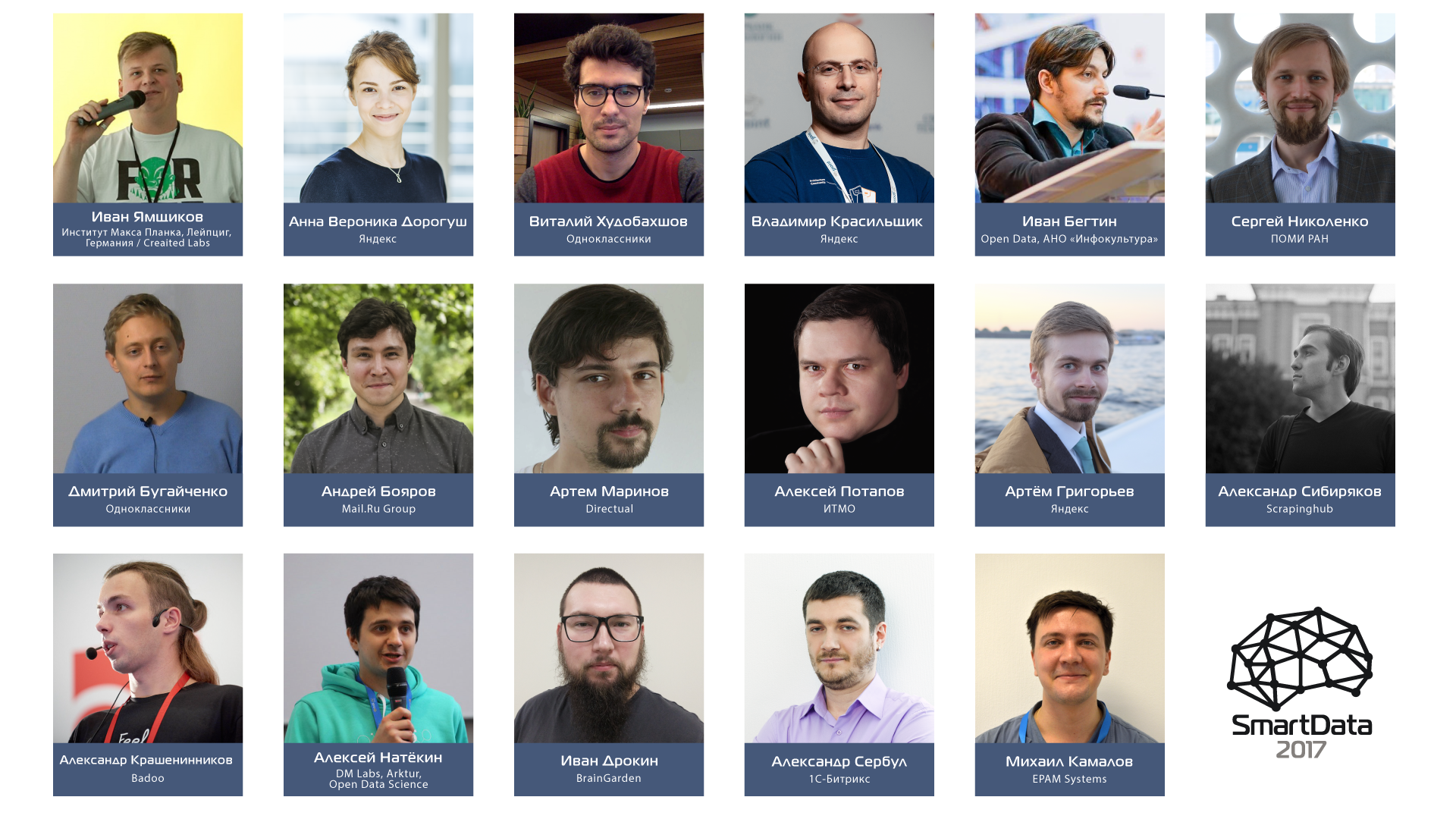

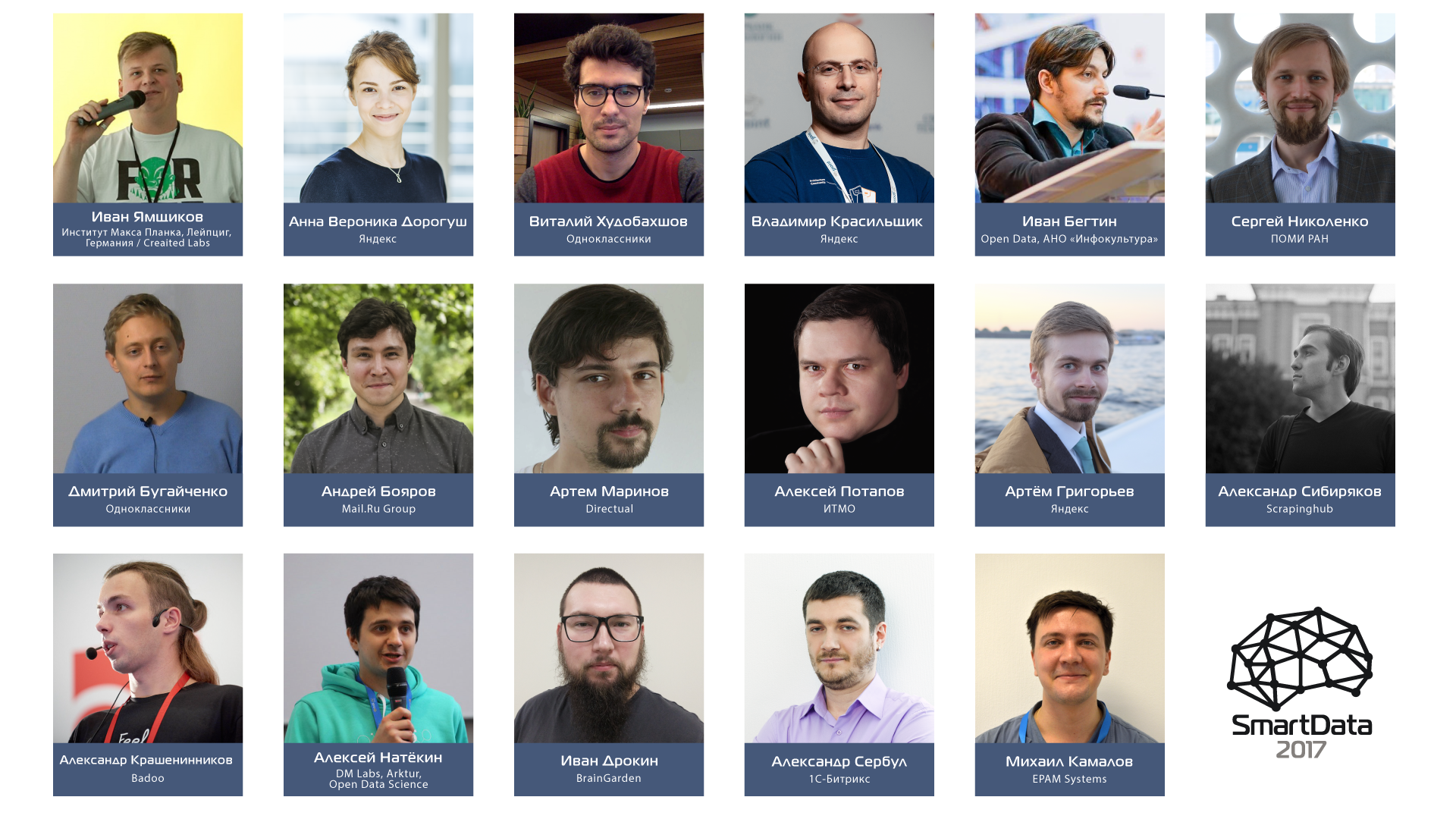

News from the fields of big and smart data: SmartData 2017 Piter conference program

In 2016/2017, we found that at each of our conferences there are 1-3 reports on Big Data, neural networks, artificial intelligence, or machine learning. It became clear that a good conference could be convened for this topic, which I will tell you about today.

Tasty : we decided to bring together scientists, practical engineers, architects under one roof and focus on technology - it would seem to be a common thing, but no.

Difficult : digging deeper, you can see that everyone does not work on separate issues together, but separately.

Scientists build neural networks in theory, architects make distributed systems for corporations with the goal of processing huge data streams in real time, unify access to them without the ultimate goal, practical engineers write all this software for purely narrow tasks, which then can not be transferred to something something else. In general, everyone digs his bed and does not climb to the neighbor ... So? No!

In fact : Everyone is engaged in part of the general. Just as Smart Data itself (and “smart data” is a very narrow translation) is inherently the same, so those who work with it, in fact, make a distributed network of various developments that can sometimes create unexpected combinations. This forms the foundation of Smart Data in its beauty and practical significance.

So, what are these pieces of the puzzle and who creates them, you can see and even discuss with the creators at the SmartData 2017 Piter conference on October 21, 2017. Details under the cut. Then there will be many letters, we are for big and smart data, although historically the announcement implies a quick and capacious text, short and accurate, like a sniper shot on a clear summer night. Usually we divide all reports into three or four categories, but this will not work out, each report is an independent story.

The ambiguity and clichés of the name (even we are exploiting this turnover a second time in a month), it would seem, beyond good and evil. But this does not even closely concern the contents of the opening keynote of Vitaliy Khudobakhshov.

Keynote: Vitaliy khud Khudobakhshov - “A name is a feature”

Keynote: Vitaliy khud Khudobakhshov - “A name is a feature”

No matter how strange it may seem to an educated person, the probability of being lonely / lonely “depends” on the name. We will talk about love and relationships, or rather, what exactly can the data of a social network tell about this. It’s about the same as saying: “The probability of being hit by a car if your name is Seryozha is higher than if you were called Kostya!” Sounds pretty wild, doesn't it? Well, at least unscientific.

Thus, we will talk about the most unexpected and counterintuitive observations that can be made using data analysis in social networks. Of course, we will not ignore the issues of statistical significance of such observations, the influence of bots and false correlations.

In general, Vitaly Khudobakhshov has one of the largest analytics databases in our country. Vitaliy has access to it since 2015 as a leading analyst at Odnoklassniki, where he deals with various aspects of data analysis.

Dmitry Bugaychenko - “From click to forecast and vice versa: Data Science pipeline in OK”

Dmitry Bugaychenko - “From click to forecast and vice versa: Data Science pipeline in OK”

Vitaly’s clever data and Dmitry’s machine learning are connected, even parts of their life paths are somewhat similar. The analysis of big data in Odnoklassniki was a unique chance for Dmitry to combine theoretical training and the scientific foundation with the development of real, popular products. And he gladly took this chance, having come there five years ago.

During the report, we will talk about the technologies for processing and storing data of the Hadoop ecosystem, as well as about many other things. This report will be useful to those who are engaged in machine learning, not only for entertainment, but also for profit.

As an example, consider one difficult task - personalizing the OK news feed. Without going into details, we will discuss data collection (batch and real-time), ETL, as well as the processing necessary to obtain the model.

But just getting a model is not enough, so we’ll also talk about how to get model-based forecasts in a complex, highly loaded distributed environment and how to use them for decision making.

And if you still want to completely immerse yourself in machine learning algorithmization in practice and working with big data, then it is better to conduct a dialogue with a person who has gone through one of the toughest schools on algorithms in the country (School of Data Analysis - SHAD). Meet: Anna Veronika Dorogush.

Anna Veronika Dorogush - “CatBoost - the next generation of gradient boosting”

Anna Veronika Dorogush - “CatBoost - the next generation of gradient boosting”

Double name, as a double direction of the report on the features of the new open gradient gradient boosting algorithm CatBoost from Yandex. The report will discuss how a technology was developed that can work with categorical characteristics, and why put it in the public domain. Also about CatBoost there will be answers to the eternal: what (to apply)? where (works)? to whom (should pay attention)?

The report will be useful for machine learning and data specialists: after listening to it, they will gain an understanding of how to use CatBoost most effectively and where it can be useful right now.

Artyom ortemij Grigoryev - “Crowdsourcing: how to tame the crowd?”

Artyom ortemij Grigoryev - “Crowdsourcing: how to tame the crowd?”

A couple of specialists from OK, a couple from Yandex are already a crowd, and with it a fourth, “other” task in the report, this time even more applied. In the tasks of machine learning and data analysis, it is often required to collect a large amount of manual markup. With a small number of performers, work can take months. Artyom is going to show how this can be done quickly and cheaply! And this experience can be transferred to other tasks (and they definitely are), where many performers are required for a limited period. The report, based on the experience of creating and using Toloka, Yandex crowdsourcing platform, will address quality control issues, motivation of performers, as well as various models of aggregation of markup results.

Artyom in Yandex since 2010, the head of the group, is responsible for developing the infrastructure for collecting expert assessments, developing services for assessors and the crowdsourcing platform Yandex.Tolok.

Alexander alexkrash Krasheninnikov - “Hadoop high availability: Badoo experience”

Alexander alexkrash Krasheninnikov - “Hadoop high availability: Badoo experience”

Big and smart data requires a certain infrastructure. We will be honest - very demanding. What to choose?

Hadoop infrastructure is a popular solution for tasks such as distributed storage and data processing. Good scalability and a developed ecosystem bribe and provide Hadoop with a solid place in the infrastructure of various information systems. But the more responsibility is assigned to this component, the more important it is to ensure its fault tolerance and high availability.

Alexander's report will be about ensuring the high availability of the components of the Hadoop cluster. In addition, let's talk:

The report will be most useful to those who already use Hadoop (to deepen their knowledge). The report will be of interest to another part of the audience in terms of a review of the architectural solutions used in this software package.

* Alexander, like no one else, understands what a "zoo" is.

He himself is the head of DataTeam at Badoo. He is engaged in the development of tools for data processing within the framework of ETL and the processing of various kinds of statistics, the Hadoop infrastructure. Web-dev experience over 10 years. For best results, do not disdain to use explosive mixtures from programming languages (Java, PHP, Go), databases (MySQL, Exasol) and distributed computing technologies (Hadoop, Spark, Hive, Presto).

Alexander asibiryakovSibiryakov - “Automatic Search for Contact Information on the Internet”

Alexander asibiryakovSibiryakov - “Automatic Search for Contact Information on the Internet”

The more data, the more “information garbage”. After working for five years at Yandex and two years at Avast! as an architect, Alexander built an automatic resolution of false positives. After that, the interest in large-scale data processing and information retrieval only intensified, as it usually happens with a case that you really like.

Alexander’s report will focus on a distributed robot for crawling the web, searching for and extracting contact information from corporate websites. In fact, these are two components: a web robot for receiving content and a separate application for analysis and extraction.

The main focus of the report will be on extraction itself, on the search for a working architecture, a sequence of algorithms and methods for collecting training data. The report will be useful to everyone who works with processing web data or provides solutions for processing large amounts of data.

Aleksey natekin Natekin - “Maps, Boosting, 2 Chairs”

Aleksey natekin Natekin - “Maps, Boosting, 2 Chairs”

According to the common opinion of my colleagues, Aleksey is quite charismatic, which is well linked to the fact that he is a dictator and coordinator of Open Data Science, the largest online community of Data Scientists in Eastern Europe . In addition to the above, Alexei is a producer of serious projects on machine learning and data analysis.

And now about the report. Everyone loves gradient boosting. It shows excellent results in most practical applications, and the phrase “stack xgboosts” has become a meme. As a rule, we are talking about boosting decision trees, and for training they use CPUs and machines with a bunch of RAM. Recently, for various reasons, many have bought video cards and decided: why don’t we start boosting them, neural networks on the GPU are significantly accelerated. Unfortunately, not everything is so simple: there are boost implementations on the GPU, but there are many nuances in their usefulness and meaningfulness. Let's try to figure it out together on Alexey's report - do you need a video card in 2017-2018 to train gradient boosting.

PS By the way, information has its own expiration date, moreover, each data type has a different one. So, in the case of Alexei another photo was provided, it can be viewed on the conference website to still correctly identify it.

Sergey snikolenko Nikolenko -

Sergey snikolenko Nikolenko - Another victory of robots: AlphaGo and deep learning with reinforcement “Deep convolution networks for object detection and image segmentation”

And this is a clear illustration of another expiration date of information: while preparing this post, the whole concept of the report managed to change.

As a result, in Sergey’s report we will discuss how networks that recognize individual objects turn into networks that distinguish objects from the mass of others. We will talk about the famous YoLo, and about single-shot detectors, and about the line of models from R-CNN to the recently appeared Mask R-CNN. And in principle, convolutional neural networks have long become the main class of models for image processing, and we have to live with it.

By the way, this is not all the data that Sergey Nikolenko, a specialist in machine learning (deep learning, Bayesian methods, word processing and much more) and algorithm analysis (network algorithms, competitive analysis), can get. The author of more than 100 scientific publications, several books, and author courses "Machine Learning", "Deep Networking Training", etc.

Vladimir vlkrasilDyer - “Back to the future of the modern banking system”

Vladimir vlkrasilDyer - “Back to the future of the modern banking system”

Historical note : “the first distributed online banking information exchange system in the Russian Federation began its life in 1993, and it was not Sberbank”

Modern banking Big Data systems are not only processing and storage of hundreds of millions of transactions per day and interaction with world trading platforms at space speeds, but also tight control and reporting by auditors and regulators. In the report, we will consider what Audit-Driven Development is and where it came from, we will show you how to organize a bitemporal fact store, so as not to mess up with the controlling authorities, and prove that a time machine must be built into any modern distributed system. Also during the presentation, the “universal formula of fact” will be revealed and the more often the tasks of the so-called “analytics” turn out to be.

A little about the speaker, or rather, a lot: Vladimir graduated with honors from the Department of Mathematical Software at St. Petersburg Electrotechnical University “LETI” and has been developing software for state, educational and financial institutions, as well as automobile and telecommunication concerns for over 14 years. Works in the St. Petersburg branch of Yandex by Yandex.Market developer. Vladimir is a resident of the Russian community of Java developers JUG.ru and speaks at industry conferences such as JPoint, Joker, JBreak and PGDay.

Ivan Drokin - “No data? No problems! Deep Learning at CGI ”

Ivan Drokin - “No data? No problems! Deep Learning at CGI ”

So, the words“ dataset ”,“ convolutional networks ”,“ recurrent networks ”are not scary and do not even need to be decrypted? Then a report by Ivan Drokin about deep convolution networks found his audience.

"We need to go deeper"

Currently, deep convolution networks are state-of-the-art algorithms in many computer vision problems. However, most of these algorithms require huge training samples, and the quality of the model depends entirely on the quality of the data and their quantity. In some tasks, data collection is difficult or sometimes impossible. *

In the report, we will consider an example of training deep convolution networks for localizing key points of an object on a fully synthetic data set.

* In one such experiment, data were collected by questionnaires and digitized by the students of one of the universities as part of the thesis. Processing one array, even with modern means of working with data, took 2 weeks only to enter data into the system. And for a combat project this data is required many times more. Do you have any extra “student” forces to collect this data? Not? Then, I hope you put in the plan and report of Artyom Grigoriev.

The dive instructor is Ivan Drokin, co-founder and chief science officer of Brain Garden, a company specializing in the development and implementation of intelligent full-cycle solutions. His professional interests include the application of deep learning for the analysis and processing of natural languages, images, video stream, as well as reinforced learning and question-answer systems. He has deep expertise in the areas of financial markets, hedge funds, bioinformatics, and computational biology.

Artyom onexdrk Marinov - “We segment 600 million users in real time every day”

Artyom onexdrk Marinov - “We segment 600 million users in real time every day”

We will not draw an analogy with Big Brother, since the data in the project on which Artyom's report is based is collected anonymously. Why NOT lead a similar analogy?

Every day, users make millions of actions on the Internet. The FACETz DMP project needs to structure this data and perform segmentation to identify user preferences (TADAM!). In his report, Artyom will tell you how, using Kafka and HBase, you can:

Artyom Marinov has been engaged in advertising technologies since 2013, for the last two years he worked at Data-Centric Alliance as a leader in the development of DMP Facetz, and now works at Directual. Prior to this, he led the development of a number of advertising projects at Creara for several years. He specializes in BigData and heavy duty work. The main languages are Java / Scala, in the profession for about 8 years.

Alexey a_potap Potapov - "Deep Learning, Probabilistic Programming and Meta-Calculations : Intersection Point"

Alexey a_potap Potapov - "Deep Learning, Probabilistic Programming and Meta-Calculations : Intersection Point"

So, there are generative and discriminant models that can act as approaches to the determination of some parameters of linear classifiers, which in turn can act as a way to solve classifier problems, when a decision is made on the basis of a linear operator over input data, respectively, having the property of linear separability , and the linear classification operation itself for two classes can be represented as a mapping of objects in multidimensional space onto a hyperplane ... in a house, which Jack built. *

This paragraph is intended to prepare you for the fact that there will be a lot about generative and discriminatory models, their relationships with each other and practical application within the framework of the two most promising approaches to machine learning - deep learning and probabilistic programming.

* This sequence is not part of the report by Alexei Potapov, professor at the ITMO Department of Computer Photonics and Video Informatics.

To understand how much Alexei likes his work, it is enough to look at his activity for 2 years: two manuals and 27 scientific papers have been published, including 12 works in refereed journals and one monograph; received 5 certificates of registration of computer programs; and also participation in 5 international conferences, although perhaps this is not an indicator.

Ivan ibegtinBegtin - “Open Data: On the Availability of State Data and How to Look for It”

Ivan ibegtinBegtin - “Open Data: On the Availability of State Data and How to Look for It”

Ivan is a well-known person, but if someone is not in the know, a brief introduction.

Ivan Begtin - director and co-founder of ANO "Infoculture" , member of the expert council under the Government, member of the Committee on Civic Initiatives Alexei Kudrin , laureate in the field of social and political journalism "Power N4" (2011), laureate of the "pressZvanie" award in the nomination " Zone of special attention ”(2012), co-founder of the All-Russian contest Apps4Russia. Author of public projects "State Spending", "Public Revenues", "State People", "The State and its Information" and many others. Ambassador to Russia of the Open Knowledge Foundation.

Why this data? Ivan's biography is the best introduction to his report on open government data.

The state policy of open data in Russia and in the world allows providing an unlimited number of users with access to the data created by the authorities. This opens up new opportunities for a business that is ready to create new products on their basis and develop existing ones, but it requires knowledge and understanding of how data collection, analysis and publication are organized.

Ivan’s report will tell you exactly how and why the data is collected, how the government uses it. And, of course, how to access and use them in your project. In addition, the data is not always reliable, errors accumulate in them, so we will also understand how these errors can be taken into account.

Maybe if they are collected from us in such quantity, it is time to use them?

Mikhail Kamalov - “Recommender systems: from matrix decompositions to deep learning in on-line mode”

Mikhail Kamalov - “Recommender systems: from matrix decompositions to deep learning in on-line mode”

“And for this report we recommend you take a report by Artyom Marinov, which underlines the practical significance of this material.”

Doesn’t resemble anything?

At present, recommendation systems are actively used both in the field of entertainment (Youtube, Netflix), and in the field of Internet marketing (Amazon, Aliexpress). In this regard, the report will consider the practical aspects of the use of deep learning, collaborative and content filtering and filtering by time as approaches in recommendation systems. In addition, the construction of hybrid recommendation systems and modification of approaches for online training at Spark will be considered.

Immersion in practical systems for ordinary users, which we are, will be carried out under the leadership of Mikhail Kamalov, Analyst at EPAM Systems since 2016, an expert in the field of tasks related to NLP and information retrieval.

Andrei Boyarov - “Deep Learning: Recognizing Scenes and Attractions in Images”

Andrei Boyarov - “Deep Learning: Recognizing Scenes and Attractions in Images”

Everyone who has come to this place has our thanks for patience and interest. As already mentioned at the beginning of this article, in the field of big and smart data there are no studies and reports divorced from the general mission. So, for this report, in the outline of the description of the previous one, we can recommend the report of Ivan Drokin for a deeper immersion in convolutional neural networks.

The report by Andrey Boyarov will discuss the construction of a system for solving the scene recognition problem using the state-of-the-art approach based on deep convolutional neural networks. What is relevant for the Mail.Ru corporation, where Andrei works as a research programmer.

The task of recognizing attractions stems from the recognition of scenes. Here, we need to highlight among all the images of the scenes those in which there are various famous places: palaces, monuments, squares, temples, etc. However, when solving this problem, it is important to ensure a low level of false positives. The report will consider the solution to the problem of recognition of attractions based on a neural network for scene recognition.

Alexander AlexSerbul Serbul - “Applied Machine Learning in E-Commerce: Scenarios and Architecture of Pilots and Combat Projects”

Alexander AlexSerbul Serbul - “Applied Machine Learning in E-Commerce: Scenarios and Architecture of Pilots and Combat Projects”

Being almost at the finish line of creating our network of reports, you still need to check with practical experience. Alexander Serbul, who oversees the quality control of integration and implementation of 1C-Bitrix LLC, as well as AI, deep learning and big data, will help us in this. In particular, Alexander acts as an architect and developer in the company's projects related to high workload and fault tolerance (Bitrix24), advises partners and clients on the architecture of highly loaded solutions, the effective use of clustering technologies for 1C-Bitrix products in the context of modern cloud services (Amazon Web Services, etc.)

All of the above was enclosed in a report on pilots and combat projects implemented by the company using various popular and "rare" machine learning algorithms: from recommendation systems to deep neural networks. The projects focused on technical implementation on the platforms Java (deeplearning4j), PHP, Python (keras / tf) using the open libraries Apache Mahout (Taste), Apache Lucene, Jetty, Apache Spark (including Streaming), a range of tools at Amazon Web Services. Guidance is given on the importance of certain algorithms and libraries, the relevance of their use and relevance in the market.

And these are the very realized projects:

Keynote: Ivan Yamshchikov - “Neurona: why did we teach the neural network to write poems in the style of Kurt Cobain?”

Keynote: Ivan Yamshchikov - “Neurona: why did we teach the neural network to write poems in the style of Kurt Cobain?”

How are Kurt Cobain, Civil Defense, art (classical, musical and visual) and big data connected?

If the Machine Learning: State of the art hubrapost suddenly caught my eye on the main page of the hub ... It's okay, there is still time. In the meantime, the article is being read, there is an opportunity to build links with other reports: from the neural network and GPU, architectural monuments, recognition and Deep Learning, we finally come to the question of “artificial intelligence”.

There are many examples of the application of machine learning and artificial neural networks in business, but in this report, Ivan will talk about the creative capabilities of AI. Tells you how Neurona did ,Neural Defense and Pianola . In the end, modern tasks in the field of constructing creative AI will be summarized and presented, as well as answers to questions why this is important and interesting.

Ivan Yamshchikov is currently a researcher at the Max Planck Institute (Leipzig, Germany) and a Yandex consultant. He is exploring new principles of artificial intelligence that could help us understand how our brains work.

So, our network is completed and is waiting for a meeting. All reports are tested for compatibility and complementarity. * You can plan your path according to the finished program on the conference website . And it is recommended to take into account the presence of discussion zones where you can always talk with the speaker personally after his session, not limited to the block of questions at the end of the report.

* Recommendations are not mandatory and are the personal opinion of the author, which may not be shared by any participant or our Program Committee. The author himself is puzzled by the issues of AI and further interaction with IoT, so he looks at everything biased.

Tasty : we decided to bring together scientists, practical engineers, architects under one roof and focus on technology - it would seem to be a common thing, but no.

Difficult : digging deeper, you can see that everyone does not work on separate issues together, but separately.

Scientists build neural networks in theory, architects make distributed systems for corporations with the goal of processing huge data streams in real time, unify access to them without the ultimate goal, practical engineers write all this software for purely narrow tasks, which then can not be transferred to something something else. In general, everyone digs his bed and does not climb to the neighbor ... So? No!

In fact : Everyone is engaged in part of the general. Just as Smart Data itself (and “smart data” is a very narrow translation) is inherently the same, so those who work with it, in fact, make a distributed network of various developments that can sometimes create unexpected combinations. This forms the foundation of Smart Data in its beauty and practical significance.

So, what are these pieces of the puzzle and who creates them, you can see and even discuss with the creators at the SmartData 2017 Piter conference on October 21, 2017. Details under the cut. Then there will be many letters, we are for big and smart data, although historically the announcement implies a quick and capacious text, short and accurate, like a sniper shot on a clear summer night. Usually we divide all reports into three or four categories, but this will not work out, each report is an independent story.

In the beginning was the Name. And “Name is a feature”

The ambiguity and clichés of the name (even we are exploiting this turnover a second time in a month), it would seem, beyond good and evil. But this does not even closely concern the contents of the opening keynote of Vitaliy Khudobakhshov.

Keynote: Vitaliy khud Khudobakhshov - “A name is a feature”

Keynote: Vitaliy khud Khudobakhshov - “A name is a feature”No matter how strange it may seem to an educated person, the probability of being lonely / lonely “depends” on the name. We will talk about love and relationships, or rather, what exactly can the data of a social network tell about this. It’s about the same as saying: “The probability of being hit by a car if your name is Seryozha is higher than if you were called Kostya!” Sounds pretty wild, doesn't it? Well, at least unscientific.

Thus, we will talk about the most unexpected and counterintuitive observations that can be made using data analysis in social networks. Of course, we will not ignore the issues of statistical significance of such observations, the influence of bots and false correlations.

In general, Vitaly Khudobakhshov has one of the largest analytics databases in our country. Vitaliy has access to it since 2015 as a leading analyst at Odnoklassniki, where he deals with various aspects of data analysis.

Dmitry Bugaychenko - “From click to forecast and vice versa: Data Science pipeline in OK”

Dmitry Bugaychenko - “From click to forecast and vice versa: Data Science pipeline in OK”Vitaly’s clever data and Dmitry’s machine learning are connected, even parts of their life paths are somewhat similar. The analysis of big data in Odnoklassniki was a unique chance for Dmitry to combine theoretical training and the scientific foundation with the development of real, popular products. And he gladly took this chance, having come there five years ago.

During the report, we will talk about the technologies for processing and storing data of the Hadoop ecosystem, as well as about many other things. This report will be useful to those who are engaged in machine learning, not only for entertainment, but also for profit.

As an example, consider one difficult task - personalizing the OK news feed. Without going into details, we will discuss data collection (batch and real-time), ETL, as well as the processing necessary to obtain the model.

But just getting a model is not enough, so we’ll also talk about how to get model-based forecasts in a complex, highly loaded distributed environment and how to use them for decision making.

And if you still want to completely immerse yourself in machine learning algorithmization in practice and working with big data, then it is better to conduct a dialogue with a person who has gone through one of the toughest schools on algorithms in the country (School of Data Analysis - SHAD). Meet: Anna Veronika Dorogush.

Anna Veronika Dorogush - “CatBoost - the next generation of gradient boosting”

Anna Veronika Dorogush - “CatBoost - the next generation of gradient boosting”Double name, as a double direction of the report on the features of the new open gradient gradient boosting algorithm CatBoost from Yandex. The report will discuss how a technology was developed that can work with categorical characteristics, and why put it in the public domain. Also about CatBoost there will be answers to the eternal: what (to apply)? where (works)? to whom (should pay attention)?

The report will be useful for machine learning and data specialists: after listening to it, they will gain an understanding of how to use CatBoost most effectively and where it can be useful right now.

Artyom ortemij Grigoryev - “Crowdsourcing: how to tame the crowd?”

Artyom ortemij Grigoryev - “Crowdsourcing: how to tame the crowd?”A couple of specialists from OK, a couple from Yandex are already a crowd, and with it a fourth, “other” task in the report, this time even more applied. In the tasks of machine learning and data analysis, it is often required to collect a large amount of manual markup. With a small number of performers, work can take months. Artyom is going to show how this can be done quickly and cheaply! And this experience can be transferred to other tasks (and they definitely are), where many performers are required for a limited period. The report, based on the experience of creating and using Toloka, Yandex crowdsourcing platform, will address quality control issues, motivation of performers, as well as various models of aggregation of markup results.

Artyom in Yandex since 2010, the head of the group, is responsible for developing the infrastructure for collecting expert assessments, developing services for assessors and the crowdsourcing platform Yandex.Tolok.

Alexander alexkrash Krasheninnikov - “Hadoop high availability: Badoo experience”

Alexander alexkrash Krasheninnikov - “Hadoop high availability: Badoo experience”Big and smart data requires a certain infrastructure. We will be honest - very demanding. What to choose?

Hadoop infrastructure is a popular solution for tasks such as distributed storage and data processing. Good scalability and a developed ecosystem bribe and provide Hadoop with a solid place in the infrastructure of various information systems. But the more responsibility is assigned to this component, the more important it is to ensure its fault tolerance and high availability.

Alexander's report will be about ensuring the high availability of the components of the Hadoop cluster. In addition, let's talk:

- about the "zoo" with which we are dealing; *

- about why provide high availability: points of failure of the system and the consequences of failures;

- about the tools and solutions that exist for this;

- about practical experience of implementation: preparation, deployment, verification.

The report will be most useful to those who already use Hadoop (to deepen their knowledge). The report will be of interest to another part of the audience in terms of a review of the architectural solutions used in this software package.

* Alexander, like no one else, understands what a "zoo" is.

He himself is the head of DataTeam at Badoo. He is engaged in the development of tools for data processing within the framework of ETL and the processing of various kinds of statistics, the Hadoop infrastructure. Web-dev experience over 10 years. For best results, do not disdain to use explosive mixtures from programming languages (Java, PHP, Go), databases (MySQL, Exasol) and distributed computing technologies (Hadoop, Spark, Hive, Presto).

Alexander asibiryakovSibiryakov - “Automatic Search for Contact Information on the Internet”

Alexander asibiryakovSibiryakov - “Automatic Search for Contact Information on the Internet”The more data, the more “information garbage”. After working for five years at Yandex and two years at Avast! as an architect, Alexander built an automatic resolution of false positives. After that, the interest in large-scale data processing and information retrieval only intensified, as it usually happens with a case that you really like.

Alexander’s report will focus on a distributed robot for crawling the web, searching for and extracting contact information from corporate websites. In fact, these are two components: a web robot for receiving content and a separate application for analysis and extraction.

The main focus of the report will be on extraction itself, on the search for a working architecture, a sequence of algorithms and methods for collecting training data. The report will be useful to everyone who works with processing web data or provides solutions for processing large amounts of data.

Aleksey natekin Natekin - “Maps, Boosting, 2 Chairs”

Aleksey natekin Natekin - “Maps, Boosting, 2 Chairs”According to the common opinion of my colleagues, Aleksey is quite charismatic, which is well linked to the fact that he is a dictator and coordinator of Open Data Science, the largest online community of Data Scientists in Eastern Europe . In addition to the above, Alexei is a producer of serious projects on machine learning and data analysis.

And now about the report. Everyone loves gradient boosting. It shows excellent results in most practical applications, and the phrase “stack xgboosts” has become a meme. As a rule, we are talking about boosting decision trees, and for training they use CPUs and machines with a bunch of RAM. Recently, for various reasons, many have bought video cards and decided: why don’t we start boosting them, neural networks on the GPU are significantly accelerated. Unfortunately, not everything is so simple: there are boost implementations on the GPU, but there are many nuances in their usefulness and meaningfulness. Let's try to figure it out together on Alexey's report - do you need a video card in 2017-2018 to train gradient boosting.

PS By the way, information has its own expiration date, moreover, each data type has a different one. So, in the case of Alexei another photo was provided, it can be viewed on the conference website to still correctly identify it.

Sergey snikolenko Nikolenko -

Sergey snikolenko Nikolenko - And this is a clear illustration of another expiration date of information: while preparing this post, the whole concept of the report managed to change.

As a result, in Sergey’s report we will discuss how networks that recognize individual objects turn into networks that distinguish objects from the mass of others. We will talk about the famous YoLo, and about single-shot detectors, and about the line of models from R-CNN to the recently appeared Mask R-CNN. And in principle, convolutional neural networks have long become the main class of models for image processing, and we have to live with it.

By the way, this is not all the data that Sergey Nikolenko, a specialist in machine learning (deep learning, Bayesian methods, word processing and much more) and algorithm analysis (network algorithms, competitive analysis), can get. The author of more than 100 scientific publications, several books, and author courses "Machine Learning", "Deep Networking Training", etc.

Vladimir vlkrasilDyer - “Back to the future of the modern banking system”

Vladimir vlkrasilDyer - “Back to the future of the modern banking system” Historical note : “the first distributed online banking information exchange system in the Russian Federation began its life in 1993, and it was not Sberbank”

Modern banking Big Data systems are not only processing and storage of hundreds of millions of transactions per day and interaction with world trading platforms at space speeds, but also tight control and reporting by auditors and regulators. In the report, we will consider what Audit-Driven Development is and where it came from, we will show you how to organize a bitemporal fact store, so as not to mess up with the controlling authorities, and prove that a time machine must be built into any modern distributed system. Also during the presentation, the “universal formula of fact” will be revealed and the more often the tasks of the so-called “analytics” turn out to be.

A little about the speaker, or rather, a lot: Vladimir graduated with honors from the Department of Mathematical Software at St. Petersburg Electrotechnical University “LETI” and has been developing software for state, educational and financial institutions, as well as automobile and telecommunication concerns for over 14 years. Works in the St. Petersburg branch of Yandex by Yandex.Market developer. Vladimir is a resident of the Russian community of Java developers JUG.ru and speaks at industry conferences such as JPoint, Joker, JBreak and PGDay.

Ivan Drokin - “No data? No problems! Deep Learning at CGI ”

Ivan Drokin - “No data? No problems! Deep Learning at CGI ”So, the words“ dataset ”,“ convolutional networks ”,“ recurrent networks ”are not scary and do not even need to be decrypted? Then a report by Ivan Drokin about deep convolution networks found his audience.

"We need to go deeper"

Currently, deep convolution networks are state-of-the-art algorithms in many computer vision problems. However, most of these algorithms require huge training samples, and the quality of the model depends entirely on the quality of the data and their quantity. In some tasks, data collection is difficult or sometimes impossible. *

In the report, we will consider an example of training deep convolution networks for localizing key points of an object on a fully synthetic data set.

* In one such experiment, data were collected by questionnaires and digitized by the students of one of the universities as part of the thesis. Processing one array, even with modern means of working with data, took 2 weeks only to enter data into the system. And for a combat project this data is required many times more. Do you have any extra “student” forces to collect this data? Not? Then, I hope you put in the plan and report of Artyom Grigoriev.

The dive instructor is Ivan Drokin, co-founder and chief science officer of Brain Garden, a company specializing in the development and implementation of intelligent full-cycle solutions. His professional interests include the application of deep learning for the analysis and processing of natural languages, images, video stream, as well as reinforced learning and question-answer systems. He has deep expertise in the areas of financial markets, hedge funds, bioinformatics, and computational biology.

Artyom onexdrk Marinov - “We segment 600 million users in real time every day”

Artyom onexdrk Marinov - “We segment 600 million users in real time every day”We will not draw an analogy with Big Brother, since the data in the project on which Artyom's report is based is collected anonymously. Why NOT lead a similar analogy?

Every day, users make millions of actions on the Internet. The FACETz DMP project needs to structure this data and perform segmentation to identify user preferences (TADAM!). In his report, Artyom will tell you how, using Kafka and HBase, you can:

- segment 600 million users after switching from MapReduce to Realtime;

- process 5 billion events every day;

- Store statistics on the number of unique users in a segment during stream processing;

- Track the impact of changes in segmentation parameters.

Artyom Marinov has been engaged in advertising technologies since 2013, for the last two years he worked at Data-Centric Alliance as a leader in the development of DMP Facetz, and now works at Directual. Prior to this, he led the development of a number of advertising projects at Creara for several years. He specializes in BigData and heavy duty work. The main languages are Java / Scala, in the profession for about 8 years.

Alexey a_potap Potapov - "Deep Learning, Probabilistic Programming and Meta-Calculations : Intersection Point"

Alexey a_potap Potapov - "Deep Learning, Probabilistic Programming and Meta-Calculations : Intersection Point"So, there are generative and discriminant models that can act as approaches to the determination of some parameters of linear classifiers, which in turn can act as a way to solve classifier problems, when a decision is made on the basis of a linear operator over input data, respectively, having the property of linear separability , and the linear classification operation itself for two classes can be represented as a mapping of objects in multidimensional space onto a hyperplane ... in a house, which Jack built. *

This paragraph is intended to prepare you for the fact that there will be a lot about generative and discriminatory models, their relationships with each other and practical application within the framework of the two most promising approaches to machine learning - deep learning and probabilistic programming.

* This sequence is not part of the report by Alexei Potapov, professor at the ITMO Department of Computer Photonics and Video Informatics.

To understand how much Alexei likes his work, it is enough to look at his activity for 2 years: two manuals and 27 scientific papers have been published, including 12 works in refereed journals and one monograph; received 5 certificates of registration of computer programs; and also participation in 5 international conferences, although perhaps this is not an indicator.

Ivan ibegtinBegtin - “Open Data: On the Availability of State Data and How to Look for It”

Ivan ibegtinBegtin - “Open Data: On the Availability of State Data and How to Look for It”Ivan is a well-known person, but if someone is not in the know, a brief introduction.

Ivan Begtin - director and co-founder of ANO "Infoculture" , member of the expert council under the Government, member of the Committee on Civic Initiatives Alexei Kudrin , laureate in the field of social and political journalism "Power N4" (2011), laureate of the "pressZvanie" award in the nomination " Zone of special attention ”(2012), co-founder of the All-Russian contest Apps4Russia. Author of public projects "State Spending", "Public Revenues", "State People", "The State and its Information" and many others. Ambassador to Russia of the Open Knowledge Foundation.

Why this data? Ivan's biography is the best introduction to his report on open government data.

The state policy of open data in Russia and in the world allows providing an unlimited number of users with access to the data created by the authorities. This opens up new opportunities for a business that is ready to create new products on their basis and develop existing ones, but it requires knowledge and understanding of how data collection, analysis and publication are organized.

Ivan’s report will tell you exactly how and why the data is collected, how the government uses it. And, of course, how to access and use them in your project. In addition, the data is not always reliable, errors accumulate in them, so we will also understand how these errors can be taken into account.

Maybe if they are collected from us in such quantity, it is time to use them?

Mikhail Kamalov - “Recommender systems: from matrix decompositions to deep learning in on-line mode”

Mikhail Kamalov - “Recommender systems: from matrix decompositions to deep learning in on-line mode”“And for this report we recommend you take a report by Artyom Marinov, which underlines the practical significance of this material.”

Doesn’t resemble anything?

At present, recommendation systems are actively used both in the field of entertainment (Youtube, Netflix), and in the field of Internet marketing (Amazon, Aliexpress). In this regard, the report will consider the practical aspects of the use of deep learning, collaborative and content filtering and filtering by time as approaches in recommendation systems. In addition, the construction of hybrid recommendation systems and modification of approaches for online training at Spark will be considered.

Immersion in practical systems for ordinary users, which we are, will be carried out under the leadership of Mikhail Kamalov, Analyst at EPAM Systems since 2016, an expert in the field of tasks related to NLP and information retrieval.

Andrei Boyarov - “Deep Learning: Recognizing Scenes and Attractions in Images”

Andrei Boyarov - “Deep Learning: Recognizing Scenes and Attractions in Images”Everyone who has come to this place has our thanks for patience and interest. As already mentioned at the beginning of this article, in the field of big and smart data there are no studies and reports divorced from the general mission. So, for this report, in the outline of the description of the previous one, we can recommend the report of Ivan Drokin for a deeper immersion in convolutional neural networks.

The report by Andrey Boyarov will discuss the construction of a system for solving the scene recognition problem using the state-of-the-art approach based on deep convolutional neural networks. What is relevant for the Mail.Ru corporation, where Andrei works as a research programmer.

The task of recognizing attractions stems from the recognition of scenes. Here, we need to highlight among all the images of the scenes those in which there are various famous places: palaces, monuments, squares, temples, etc. However, when solving this problem, it is important to ensure a low level of false positives. The report will consider the solution to the problem of recognition of attractions based on a neural network for scene recognition.

Alexander AlexSerbul Serbul - “Applied Machine Learning in E-Commerce: Scenarios and Architecture of Pilots and Combat Projects”

Alexander AlexSerbul Serbul - “Applied Machine Learning in E-Commerce: Scenarios and Architecture of Pilots and Combat Projects”Being almost at the finish line of creating our network of reports, you still need to check with practical experience. Alexander Serbul, who oversees the quality control of integration and implementation of 1C-Bitrix LLC, as well as AI, deep learning and big data, will help us in this. In particular, Alexander acts as an architect and developer in the company's projects related to high workload and fault tolerance (Bitrix24), advises partners and clients on the architecture of highly loaded solutions, the effective use of clustering technologies for 1C-Bitrix products in the context of modern cloud services (Amazon Web Services, etc.)

All of the above was enclosed in a report on pilots and combat projects implemented by the company using various popular and "rare" machine learning algorithms: from recommendation systems to deep neural networks. The projects focused on technical implementation on the platforms Java (deeplearning4j), PHP, Python (keras / tf) using the open libraries Apache Mahout (Taste), Apache Lucene, Jetty, Apache Spark (including Streaming), a range of tools at Amazon Web Services. Guidance is given on the importance of certain algorithms and libraries, the relevance of their use and relevance in the market.

And these are the very realized projects:

- clustering Bitrix24 users with Apache Spark

- calculation of the probability of leaving (churn), possible profit (CLV) and other business metrics in the conditions of big data and high loads

- collaborative referral system for more than 20,000 online stores

- product cataloging by LSH

- content-based recommended service for more than 100 million runet users

- classifier of calls to Bitrix24 technical support based on a neural network (except for n-gramm models, we will also consider pilots with a one-dimensional convolution)

- A chatbot of answers to questions based on a neural network connecting semantic spaces of questions and answers.

Keynote: Ivan Yamshchikov - “Neurona: why did we teach the neural network to write poems in the style of Kurt Cobain?”

Keynote: Ivan Yamshchikov - “Neurona: why did we teach the neural network to write poems in the style of Kurt Cobain?” How are Kurt Cobain, Civil Defense, art (classical, musical and visual) and big data connected?

If the Machine Learning: State of the art hubrapost suddenly caught my eye on the main page of the hub ... It's okay, there is still time. In the meantime, the article is being read, there is an opportunity to build links with other reports: from the neural network and GPU, architectural monuments, recognition and Deep Learning, we finally come to the question of “artificial intelligence”.

There are many examples of the application of machine learning and artificial neural networks in business, but in this report, Ivan will talk about the creative capabilities of AI. Tells you how Neurona did ,Neural Defense and Pianola . In the end, modern tasks in the field of constructing creative AI will be summarized and presented, as well as answers to questions why this is important and interesting.

Ivan Yamshchikov is currently a researcher at the Max Planck Institute (Leipzig, Germany) and a Yandex consultant. He is exploring new principles of artificial intelligence that could help us understand how our brains work.

So, our network is completed and is waiting for a meeting. All reports are tested for compatibility and complementarity. * You can plan your path according to the finished program on the conference website . And it is recommended to take into account the presence of discussion zones where you can always talk with the speaker personally after his session, not limited to the block of questions at the end of the report.

* Recommendations are not mandatory and are the personal opinion of the author, which may not be shared by any participant or our Program Committee. The author himself is puzzled by the issues of AI and further interaction with IoT, so he looks at everything biased.