Restrictive short text generation - for advertising and other purposes

In practice, the task often arises not just to write some text, but to fulfill some conditions - for example, to put a maximum of keywords in a given length and / or use / not use certain words and phrases. This can be important for business (in the preparation of advertisements, including contextual advertising, SEO-optimization of sites), for educational purposes (automatic preparation of test questions) and in a number of other cases. Such optimization problems cause a lot of headache, because it is relatively easy for people to write texts, but it’s not so easy to write something that meets one or another of the criteria of “optimality”. On the other hand, computers do an excellent job of optimizing in other areas, but have a poor understanding of natural language, and therefore it is difficult for them to compose text. In this article,

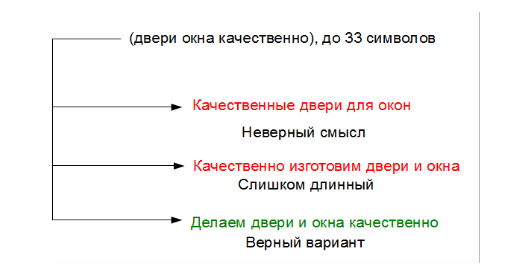

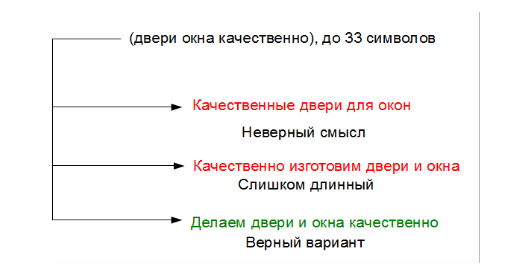

As an example, consider the task of writing a proposal of a given length on a specific topic, which should include a certain number of keywords. Let’s say the words “doors, windows, quality, make” are given - you need to make a sentence like “We make windows and doors with high quality!”.

One of the first known approaches to solving such a problem is the use of a statistical language model. A language model is a function of the distribution of the probability of finding words in a certain sequence. Those. a certain function P (S ) - which allows, knowing the sequence of words S , to obtain the probability Pmeet the words in this sequence. Most often, the language model is based on the so-called n-grams, i.e., counting the frequency of obtaining phrases from n words in large arrays of texts. The ratio of the number of occurrences of a given phrase to the number of analyzed phrases gives an approximation of the probability of a given phrase. Since the probabilities of long n-grams are difficult to evaluate (some word combinations can be very rare), their probabilities are approximated in one way or another using the frequencies of shorter sequences.

As applied to our example, if we have, say the word “Qualitatively”, then we can use the function P (S)evaluate the probabilities of all possible phrases of the original keywords “Qualitatively windows”, “Qualitatively doors”, “Qualitatively manufacture”. Most likely the latter will be most likely. Therefore, we add the word “make” to our line and repeat the operation, receiving in the next step “Quality manufacture of windows” and further “Quality manufacture of door windows”.

The disadvantages of this approach are immediately visible. Firstly, he uses words only in initial cases and numbers, and therefore we can’t say in any way that we prefer to use “make” instead of “make”. Secondly, there is no way to use any other words than the original ones, so the resulting phrase is “door window”. Of course, we can easily allow the use of any words included in the language model, for example, prepositions, which will work in this case. But if the keywords are only “qualitatively door windows”, then prepositions will not help and you will have to carefully select the words that the model can use “on its own”.

Other difficulties will arise. For example, in the described approach, the language model does not know anything about the keywords that it can use. Therefore, if the beginning “High-quality manufacture of doors for windows” is generated, then inevitably it will turn out “High-quality manufacture of doors for a window”, or if you use the coordination in the cases “High-quality manufacture of doors for a window”, which is all the same meaningless. This can be partially eliminated if you save the entire history of possibilities at every step. That is, when the phrase “doors for” was composed, there were other options, including “doors and”. In the absence of continuation, the “door for” got out. But if we saved the previous options and completed them, too, we get several alternatives for “doors and windows”, “doors for windows”, “doors for windows” and so on. Of these options, we already choose the most probable - “doors and windows”.

A more complex approach is described, for example, in [1]. Based on the same principle, it, however, takes into account not only the probabilities of n-grams, but additional linguistic information, determining the relationships between words. Based on a list of keywords, this method searches in a large body of texts for the use of these words and generates rules for their use based on an analysis of dependencies. With the help of these rules, candidate texts are created from which the most plausible options are selected. In addition to the complexity of implementation, the disadvantage of this method is a certain dependence on the language used, which complicates its portability, as well as the fact that a patent has been received for it, the validity of which has not yet expired.

The main efforts in the field of text generators were concentrated mainly on adding an increasing number of linguistic features and manually created rules, which led to the creation of fairly powerful systems, but focused mainly on English.

Recently, interest in learning systems of language generation has increased again, which is associated with the emergence of neural language models (Neural language models). There are neural network architectures that can describe the content of pictures and translate from one language to another. In previous articles, we considered a chat bot on neural networks and a neural generator of product descriptions. It is interesting to see how the neural network generator can solve this specific problem.

As a task, we selected the problem of generating contextual ad headlines from search queries, which is similar to the example we examined. Taking a set of 15,000 training examples in one subject area, we applied a neural network with the architecture described earlier [2], teaching it to generate new headers. The features of this architecture is that it can specify the required length of the generated text and keywords as input parameters. For verification, we generated 200 headings and estimated how many of them turned out to be qualitative:

The first results turned out to be rather modest:

In total, 64% of usable headings came out. Of the unsuitable 5% exceeded the desired length, 10% contained grammatical errors, and the rest changed their meaning using similar, but different words.

After that, we introduced a number of modifications into the system and changed the neural network architecture. The key factor for obtaining a good result is the ability to copy words from the source text to the target [3]. This is significant, because in a small sample of several tens of thousands of texts (instead of millions of sentences, as, for example, in machine translation), the system cannot learn a lot of words.

A few examples:

Thus, 89% became usable headers, while the headers that altered the meaning altogether disappeared. Considering that among the headings compiled by people, the number of “ideal” reaches 68-77% depending on a person’s qualifications, degree of fatigue, etc., it can be said that automatic generation capabilities make up about 80% of a person’s capabilities. This is pretty good, and paves the way for practical applications of such systems, especially since the opportunities for improvement are far from exhausted.

Literature

1. Uchimoto, Kiyotaka, Hitoshi Isahara, and Satoshi Sekine. “Text generation from keywords.” Proceedings of the 19th international conference on Computational linguistics-Volume 1. Association for Computational Linguistics, 2002.2

.Tarasov DS (2015) Natural Language Generation, Paraphrasing and Summarization of User Reviews with Recurrent Neural Networks // Computational Linguistics and Intellectual Technologies: Proceedings of Annual International Conference “Dialogue”, Issue 14 (21), V.1, pp. 571-579

3. Vinyals, Oriol, Meire Fortunato, and Navdeep Jaitly. “Pointer networks.” Advances in Neural Information Processing Systems. 2015.

As an example, consider the task of writing a proposal of a given length on a specific topic, which should include a certain number of keywords. Let’s say the words “doors, windows, quality, make” are given - you need to make a sentence like “We make windows and doors with high quality!”.

One of the first known approaches to solving such a problem is the use of a statistical language model. A language model is a function of the distribution of the probability of finding words in a certain sequence. Those. a certain function P (S ) - which allows, knowing the sequence of words S , to obtain the probability Pmeet the words in this sequence. Most often, the language model is based on the so-called n-grams, i.e., counting the frequency of obtaining phrases from n words in large arrays of texts. The ratio of the number of occurrences of a given phrase to the number of analyzed phrases gives an approximation of the probability of a given phrase. Since the probabilities of long n-grams are difficult to evaluate (some word combinations can be very rare), their probabilities are approximated in one way or another using the frequencies of shorter sequences.

As applied to our example, if we have, say the word “Qualitatively”, then we can use the function P (S)evaluate the probabilities of all possible phrases of the original keywords “Qualitatively windows”, “Qualitatively doors”, “Qualitatively manufacture”. Most likely the latter will be most likely. Therefore, we add the word “make” to our line and repeat the operation, receiving in the next step “Quality manufacture of windows” and further “Quality manufacture of door windows”.

The disadvantages of this approach are immediately visible. Firstly, he uses words only in initial cases and numbers, and therefore we can’t say in any way that we prefer to use “make” instead of “make”. Secondly, there is no way to use any other words than the original ones, so the resulting phrase is “door window”. Of course, we can easily allow the use of any words included in the language model, for example, prepositions, which will work in this case. But if the keywords are only “qualitatively door windows”, then prepositions will not help and you will have to carefully select the words that the model can use “on its own”.

Other difficulties will arise. For example, in the described approach, the language model does not know anything about the keywords that it can use. Therefore, if the beginning “High-quality manufacture of doors for windows” is generated, then inevitably it will turn out “High-quality manufacture of doors for a window”, or if you use the coordination in the cases “High-quality manufacture of doors for a window”, which is all the same meaningless. This can be partially eliminated if you save the entire history of possibilities at every step. That is, when the phrase “doors for” was composed, there were other options, including “doors and”. In the absence of continuation, the “door for” got out. But if we saved the previous options and completed them, too, we get several alternatives for “doors and windows”, “doors for windows”, “doors for windows” and so on. Of these options, we already choose the most probable - “doors and windows”.

A more complex approach is described, for example, in [1]. Based on the same principle, it, however, takes into account not only the probabilities of n-grams, but additional linguistic information, determining the relationships between words. Based on a list of keywords, this method searches in a large body of texts for the use of these words and generates rules for their use based on an analysis of dependencies. With the help of these rules, candidate texts are created from which the most plausible options are selected. In addition to the complexity of implementation, the disadvantage of this method is a certain dependence on the language used, which complicates its portability, as well as the fact that a patent has been received for it, the validity of which has not yet expired.

The main efforts in the field of text generators were concentrated mainly on adding an increasing number of linguistic features and manually created rules, which led to the creation of fairly powerful systems, but focused mainly on English.

Recently, interest in learning systems of language generation has increased again, which is associated with the emergence of neural language models (Neural language models). There are neural network architectures that can describe the content of pictures and translate from one language to another. In previous articles, we considered a chat bot on neural networks and a neural generator of product descriptions. It is interesting to see how the neural network generator can solve this specific problem.

Our results

As a task, we selected the problem of generating contextual ad headlines from search queries, which is similar to the example we examined. Taking a set of 15,000 training examples in one subject area, we applied a neural network with the architecture described earlier [2], teaching it to generate new headers. The features of this architecture is that it can specify the required length of the generated text and keywords as input parameters. For verification, we generated 200 headings and estimated how many of them turned out to be qualitative:

The first results turned out to be rather modest:

| Result | Percentage of Headings Received |

| Perfect headlines | 34% |

| Good headlines | 31% |

| Bad headers | 36% |

In total, 64% of usable headings came out. Of the unsuitable 5% exceeded the desired length, 10% contained grammatical errors, and the rest changed their meaning using similar, but different words.

After that, we introduced a number of modifications into the system and changed the neural network architecture. The key factor for obtaining a good result is the ability to copy words from the source text to the target [3]. This is significant, because in a small sample of several tens of thousands of texts (instead of millions of sentences, as, for example, in machine translation), the system cannot learn a lot of words.

| Result | Percentage of Headings Received |

| Perfect headlines | 60% |

| Good headlines | 29% |

| Bad headers | eleven% |

A few examples:

| Keywords | Text |

| wholesale supply of china toys | Wholesale toy from china. Discounts |

| talking furby toy reviews | talking toy, where to buy? |

| kids mats | educational rugs for children |

| Monster High dolls review house | all dollhouse video reviews |

Thus, 89% became usable headers, while the headers that altered the meaning altogether disappeared. Considering that among the headings compiled by people, the number of “ideal” reaches 68-77% depending on a person’s qualifications, degree of fatigue, etc., it can be said that automatic generation capabilities make up about 80% of a person’s capabilities. This is pretty good, and paves the way for practical applications of such systems, especially since the opportunities for improvement are far from exhausted.

Literature

1. Uchimoto, Kiyotaka, Hitoshi Isahara, and Satoshi Sekine. “Text generation from keywords.” Proceedings of the 19th international conference on Computational linguistics-Volume 1. Association for Computational Linguistics, 2002.2

.Tarasov DS (2015) Natural Language Generation, Paraphrasing and Summarization of User Reviews with Recurrent Neural Networks // Computational Linguistics and Intellectual Technologies: Proceedings of Annual International Conference “Dialogue”, Issue 14 (21), V.1, pp. 571-579

3. Vinyals, Oriol, Meire Fortunato, and Navdeep Jaitly. “Pointer networks.” Advances in Neural Information Processing Systems. 2015.