23,000 people wrote an online dictation on April 8, 2017. How did this happen?

This year, 200,000 people from 858 cities around the world took part in the educational campaign “Total Dictation”. For seven years the dictation has been written mainly at offline venues; since 2014 it has also been possible to take it online. Having felt the full pain of extreme load on the site, this year the organizers brought in a whole team of IT companies. Today we describe our part of the work.

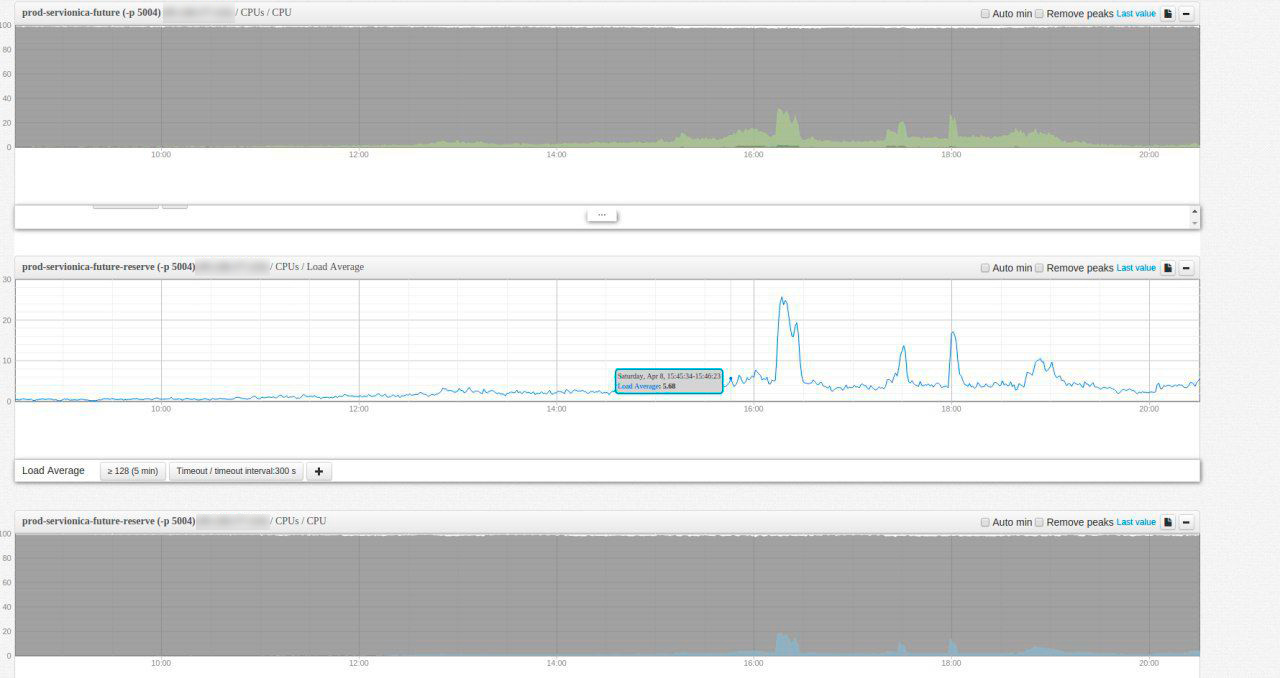

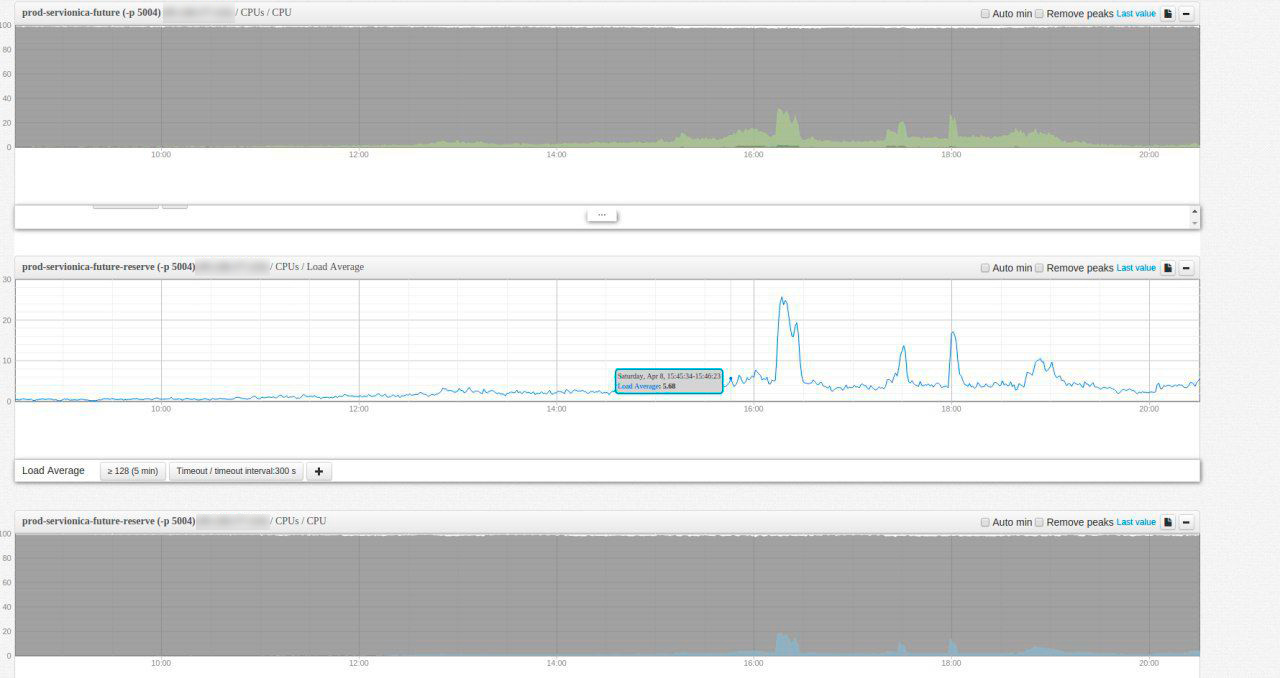

photo: Valery Melnikov, RIA Novosti

The Total Dictation Foundation began promoting the campaign in February, when online training sessions started and the first media coverage appeared. Each lesson was watched by 13,990 people on average, so an even heavier load on the site was expected on the day of the dictation. Last year a DDoS attack hit the server during the dictation, and the site was unavailable for some time. This year the following were responsible for keeping the site running:

- CMS “1C-Bitrix” (functionality and interface);

- Bitrixoid (technical support);

- "Servionics" (cloud hosting);

- Scientific and Technical Center Atlas (protection against bots and DDoS attacks);

- ITSumma (preparation of IT infrastructure and servers);

- Stepik (platform for online dictation).

Site preparation for load

Before we started, the project was hosted on a simple virtual server with 2 CPU cores, 4 GB of RAM, and an HDD.

Initially, the project team estimated that on the day of the campaign the server would receive 120 RPS and that 1,000 visitors would come to the site every minute. To find out how many RPS the current server could sustain and what configuration would be needed for peak load, we ran load tests with Yandex.Tank. The final configuration of the primary and backup servers was 48 CPU cores, 128 GB of RAM, and a 250 GB SSD.
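As a rough illustration of how the two planning figures relate, the sketch below converts them into an average number of requests per visitor and back. It assumes the visitors arriving in a given minute account for that minute's requests, which is a simplification of ours and not something the project team stated; the 2,000 visitors per minute in the usage line is a purely hypothetical "what if" value.

```python
# Planning estimates quoted in the article.
EXPECTED_RPS = 120            # requests per second reaching the server
VISITORS_PER_MINUTE = 1000    # visitors arriving at the site each minute


def requests_per_visitor(rps: float, visitors_per_minute: float) -> float:
    """Average requests generated per visitor.

    Simplifying assumption (ours): the visitors arriving within a minute
    account for all requests served during that minute.
    """
    requests_per_minute = rps * 60
    return requests_per_minute / visitors_per_minute


def rps_for(visitors_per_minute: float, per_visitor: float) -> float:
    """Inverse calculation: RPS implied by a visitor arrival rate."""
    return visitors_per_minute * per_visitor / 60


per_visitor = requests_per_visitor(EXPECTED_RPS, VISITORS_PER_MINUTE)
print(f"requests per visitor: {per_visitor:.1f}")

# Hypothetical scenario: twice as many visitors as planned.
print(f"RPS at 2000 visitors/min: {rps_for(2000, per_visitor):.0f}")
```

A calculation like this is only a starting point for choosing load-test targets; the measured behaviour under Yandex.Tank is what determined the final server configuration.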

While preparing the project for peak load, we upgraded the virtual server hosting the site so that we could carry out all the necessary optimizations, both in server settings and in code.

In parallel with the load testing, we were connecting the site to the anti-DDoS provider. The process looked like this:

- All of the site's A records were pointed at the anti-DDoS provider's IP.

- The mail server settings were changed so that the project's real IP does not appear anywhere in outgoing mail headers.

- On the anti-DDoS side, filtering of all requests coming to the site and their subsequent proxying to the project servers was configured.
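The mail header step is easy to get wrong, because a single `Received` or `X-Originating-IP` header is enough to reveal the origin server behind the proxy. Below is a minimal sketch of how such a leak could be checked on a sample outgoing message. The function name, the sample message, and the address `203.0.113.10` (from the documentation-only range) are illustrative assumptions, not details from the project.

```python
import re
from email import message_from_string


def headers_leaking_ip(raw_message: str, origin_ip: str) -> list[str]:
    """Return the names of headers whose value contains origin_ip.

    Digit boundaries are checked so that 203.0.113.1 does not
    match inside 203.0.113.10.
    """
    pattern = re.compile(r"(?<!\d)" + re.escape(origin_ip) + r"(?!\d)")
    msg = message_from_string(raw_message)
    return [name for name, value in msg.items() if pattern.search(str(value))]


# Hypothetical outgoing message that still exposes the origin server.
SAMPLE = (
    "Received: from web01 (unknown [203.0.113.10])\n"
    "\tby mail.example.org with ESMTP; Sat, 8 Apr 2017 15:00:00 +1000\n"
    "X-Originating-IP: 203.0.113.10\n"
    "From: noreply@example.org\n"
    "To: user@example.com\n"
    "Subject: Your result\n"
    "\n"
    "Body text\n"
)

print(headers_leaking_ip(SAMPLE, "203.0.113.10"))
```

Running a check like this against a real message sent by the site (for example, a registration email) shows whether the mail server settings actually hide the origin address.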

The original plan was to give the two new servers separate roles: one as the primary and the other as a backup in case the primary failed. During load testing, however, we decided to increase overall capacity by also using the backup server to process backend requests (php-fpm in our case). nginx on the primary server balanced backend requests between the primary and backup servers. MySQL was configured as the shared session storage; “1C-Bitrix” supports this without any changes to server settings.
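To make the balancing idea concrete, here is a small sketch of round-robin selection between two backends, with a failed backend skipped. The article does not say which balancing method was configured; round-robin is used here because it is nginx's default for an upstream group. The class, the backend addresses, and the manual `mark_down` call are illustrative assumptions, and this is a model of the behaviour, not nginx configuration.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hand out backends in turn, skipping any marked as down."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.down = set()
        self._ring = cycle(self.backends)

    def mark_down(self, backend):
        self.down.add(backend)

    def mark_up(self, backend):
        self.down.discard(backend)

    def pick(self):
        # Try each backend at most once per call.
        for _ in range(len(self.backends)):
            backend = next(self._ring)
            if backend not in self.down:
                return backend
        raise RuntimeError("no healthy backends")


# Hypothetical php-fpm addresses for the two servers.
lb = RoundRobinBalancer(["primary:9000", "backup:9000"])
print([lb.pick() for _ in range(4)])   # alternates between the two

lb.mark_down("primary:9000")
print([lb.pick() for _ in range(3)])   # only the backup is used
```

Because both servers handle PHP requests, a session created on one server must be readable on the other, which is why shared session storage is part of the same setup.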

A week and a half before the dictation, the project was switched to the new servers. To do this, we first created a full copy of the old server, including all software settings, site files, and databases. The switch itself looked like this:

- We set up database replication and synchronization of project files from the old server to the new one.

- At the moment of the switch, we enabled nginx proxying of all requests from the old server to the new one.

- We disabled database replication.

On the anti-DDoS provider's side, the target server addresses were changed so that all traffic went to the new servers.

After the site was moved, we ran the final load test, this time emulating 500 RPS, because the organizers now expected more visitors than they had originally estimated. The tests showed that storing sessions in MySQL put a fairly heavy load on the disks, which could cause problems at peak. We therefore reconfigured session storage to use memcache; the load test that followed showed that no bottlenecks should appear under the expected load on the current hardware.
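The sketch below shows, in simplified form, what an in-memory session store does: sessions are keyed records with a lifetime, kept in RAM so that reading or writing one involves no disk I/O. It is a toy stand-in written for illustration only. It does not use memcached's protocol or the “1C-Bitrix” session handler, and the class name, TTL value, and injectable clock are assumptions.

```python
import time


class InMemorySessionStore:
    """Toy key-value session store with per-entry expiry, held in RAM."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock          # injectable so expiry can be tested
        self._data = {}             # session_id -> (expires_at, payload)

    def set(self, session_id, payload):
        self._data[session_id] = (self.clock() + self.ttl, payload)

    def get(self, session_id):
        entry = self._data.get(session_id)
        if entry is None:
            return None
        expires_at, payload = entry
        if self.clock() >= expires_at:
            # Expired: drop the entry and report a miss.
            del self._data[session_id]
            return None
        return payload


store = InMemorySessionStore(ttl_seconds=1800)
store.set("abc123", {"user_id": 42})
print(store.get("abc123"))
```

The trade-off is that data held only in memory is lost on restart, which is usually acceptable for sessions but not for the site's main database.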

Load on the day of the action

A project that involves several independent parties is always a challenge and always a risk. So despite all the preparation, stress testing, and code audits, some tension and jitters remained before the start.

The dictation started on April 8, 2017 at 15:00 in Vladivostok (GMT +10). At the start the load was minimal: about 20 requests per second to dynamic pages. But of course it was too early to relax. Ahead of the largest and last broadcast, at 14:00 Moscow time, we allocated more memory for caching in Memcache and moved the sessions there as well, to reduce disk load. The broadcast went through with no recorded problems: the load stayed under control, and everything worked quickly and correctly.

This is what the load looked like that day (times on the charts are Irkutsk time, GMT +8).

The overall result

Everyone did a great job! We passed the load monitoring data on to our colleagues at Total Dictation so they can draw up a plan for next year.

On April 8, 90,000 viewers watched the event online and 23,000 people wrote the dictation online. From April 9 to April 18 the site was visited by 454,798 unique users, who viewed four million pages: participants checked their grades and watched webinars with an analysis of mistakes. The organizers have already begun preparing for the dictation on April 14, 2018. Get ready too (brush up on the rules of Russian in the online courses). Dictation for everyone!

P.S. On October 13 we are holding a free Uptime day conference in Moscow about IT infrastructure incidents: details are in yesterday's post, and registration is on the site.