Following the highloadcup trail: php vs node.js vs go, swoole vs workerman, splfixedarray vs array and more

The story of how I took part in highloadcup (a championship for backend developers) run by Mail.Ru, wrote a PHP server that handles 10,000 RPS, and still did not win the prize T-shirt.

So, let's start with a T-shirt. I had to write my decision before September 1, and I finished only the sixth.

On the first of September, the results were summed up, the seats were distributed, T-shirts were promised to everyone who fit within a thousand seconds. After that, the organizers allowed another week to improve their decisions, but without prizes. I used this time to rewrite my decision (in fact, I only had a couple of evenings). Actually, I’m not supposed to wear a T-shirt, but it's a pity :(

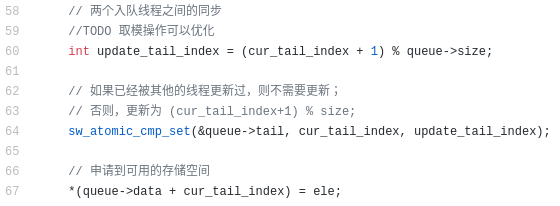

During my previous article, I compared libraries in php to create a web socket serverthen they recommended the swoole library to me - it is written in C ++ and installed from pecl. By the way, all these libraries can be used not only to create a web socket server, but are also suitable simply for an http server. I decided to use this.

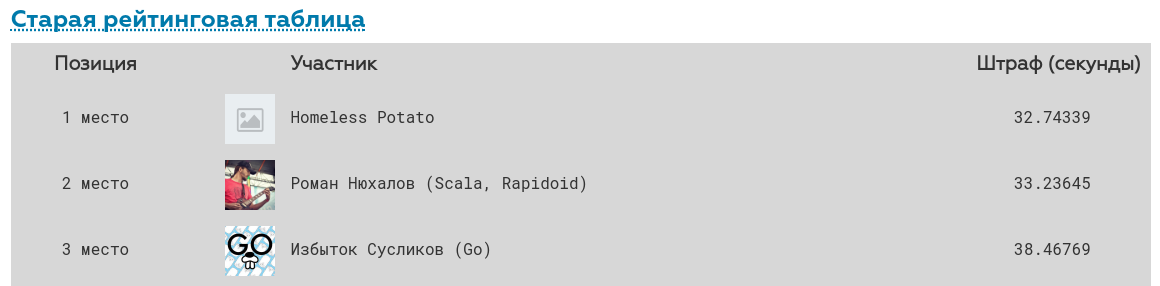

I took the swoole library, created the sqlite database in memory and immediately climbed to the top twenty with a result of 159 seconds, then I was shifted, I added the cache and reduced the time to 79 seconds, got back to the twenty, I was shifted, rewritten from sqlite to swoole_table and reduced the time to 47 seconds. Of course, I was far from the first places, but I managed to get around the table of my several friends with the solution on Go.

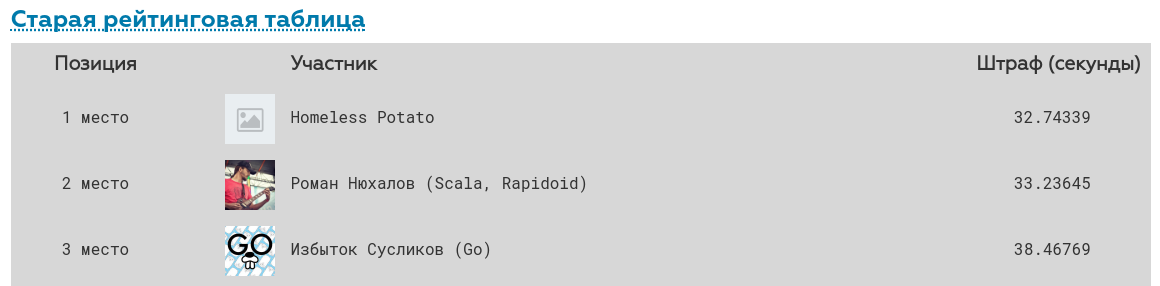

This is what the old rating table looks like now:

A little praise for Mail.Ru and you can go further.

Thanks to this wonderful championship, I became more familiar with the libraries of swoole, workerman, learned how to better optimize php for high loads, learned how to use yandex tank and much more. Continue to arrange such championships, competition encourages you to learn new information and upgrade skills.

To get started, I took swool, because it is written in C ++ and definitely should work faster than workerman, which is written in php.

I wrote hello world code:

Launched the Apache Benchmark Linux console utility, which makes 10k requests in 10 threads:

and got a response time of 0.17 ms.

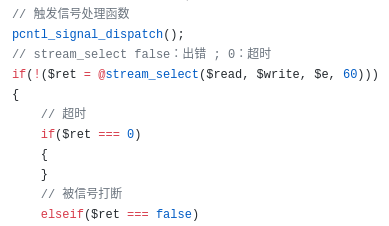

After that I wrote an example on workerman:

and got 0.43 ms , i.e. the result is 3 times worse.

But I did not give up, I installed the event library:

Measurements showed 0.11 ms , i.e. workerman written in php and using libevent is now faster than swoole written in C ++. I read tons of documentation in Chinese using google translate. But I didn’t find anything. By the way, both libraries are written by the Chinese and comments in Chinese in the library code for them - ...

Now I understand how the Chinese felt when they read my code.

I started a ticket on the swoole github asking how this could happen.

There I was recommended to use:

instead:

I took their advice and got 0.10 ms , i.e. slightly faster than workerman.

At this point, I already had a ready-made application in php, which I did not know how to optimize, it was responsible for 0.12 ms and decided to rewrite the application to something else.

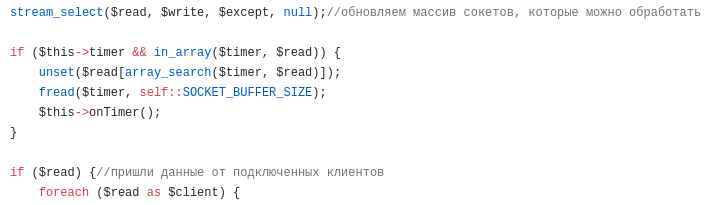

Tried node.js :

received 0.15 ms , i.e. 0.03 ms less than my finished php application

I took fasthttp on go and got 0.08 ms :

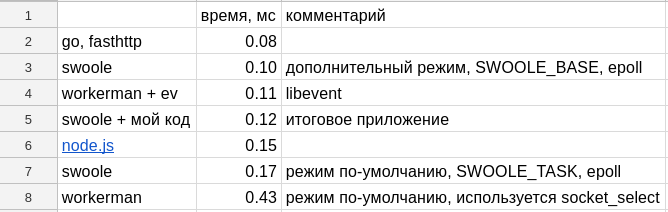

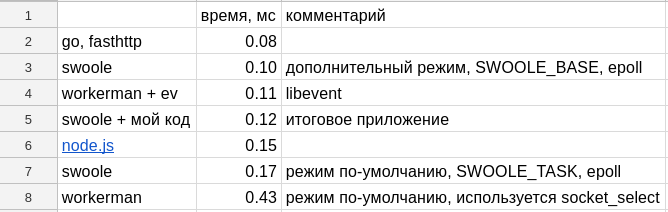

Summary table ( table and all tests on published on github ):

A week before the end of the contest, the conditions were a little complicated:

The data structure that needs to be stored is 3 tables: users (1kk), locations (1kk) and visits (11kk).

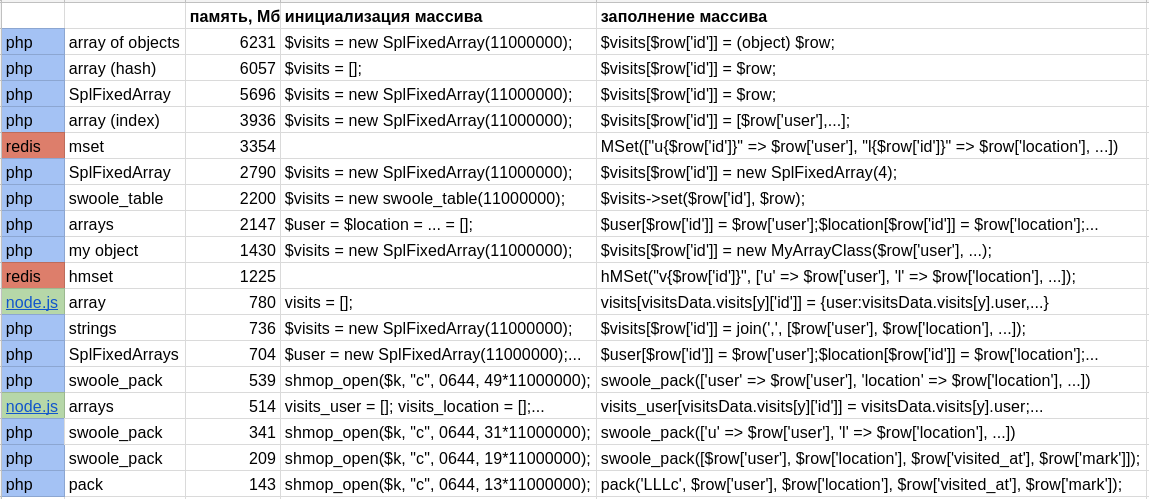

My decision has ceased to fit into the 4GB allocated for it. I had to look for options.

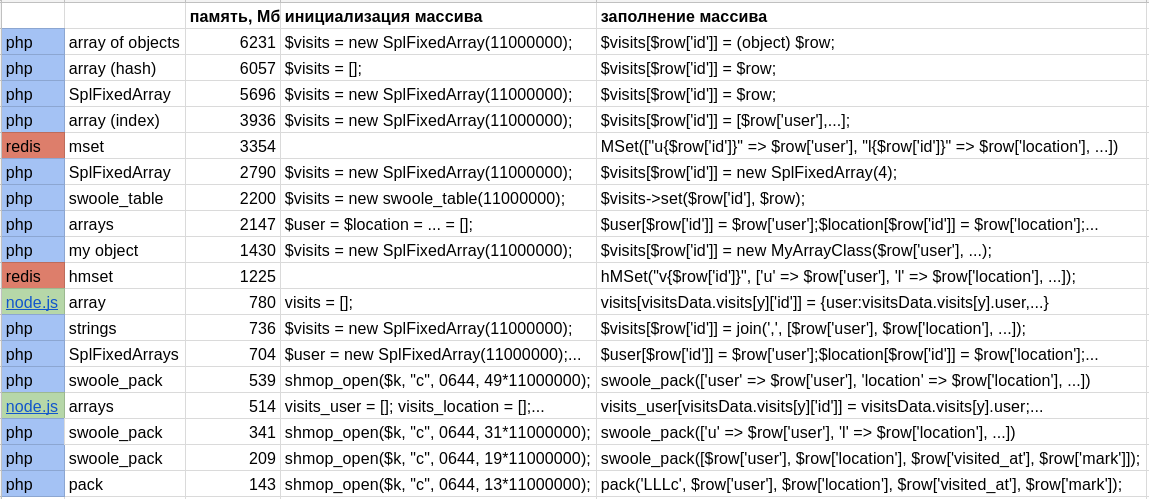

To begin with, I needed to fill in the memory from json files with 11 million records.

I tried swoole_table , measured the memory consumption - 2200 MB

I tried an associative array , the memory consumption is much larger - 6057 MB

I tried SplFixedArray, memory consumption is slightly less than that of a regular array - 5696 MB

I decided to save the individual properties of the visit to hotel arrays, because text keys can take up a lot of memory, i.e. changed this code:

on this:

memory consumption when dividing a three-dimensional array into two-dimensional was - 2147 MB , i.e. 3 times less. T.O. the key names in the three-dimensional array ate 2/3 of all the memory it occupied.

I decided to use splitting a three-dimensional array together with SplFixedArray and memory consumption fell another 3 times and amounted to 704 MB

For fun, I tried the same thing on node.js and got 780 MB

Summary table (the table and all tests are published on the github ): I wanted to try apc_cache and redis, but they still additionally spend memory for storing key names. In real life, you can use, but for this championship is not an option at all.

After all the optimizations, the total time was 404 seconds, which is almost 4 times slower than the first place.

Thanks again to the organizers who did not sleep at night, reloaded the hanging containers, fixed bugs, finished the site, answered questions in a telegram.

Actual summary tables and a similar code for all tests are published on the github:

comparing the speed of different web servers,

comparing the memory consumption of different structures.

My other article on Habr today: a free server in the cloud

Introduction

So, let's start with the T-shirt. I had to submit my solution before September 1, but I finished it only on the sixth.

On the first of September the results were summed up and the places were awarded; T-shirts were promised to everyone who fit within a thousand seconds. After that, the organizers allowed another week to improve the solutions, but without prizes. I used this time to rewrite my solution (in fact, I only had a couple of evenings). So strictly speaking I am not entitled to a T-shirt, but it's still a pity :(

In my previous article I compared PHP libraries for building a WebSocket server, and afterwards I was advised to try the swoole library: it is written in C++ and is installed from pecl. By the way, all these libraries can be used not only for a WebSocket server but also for a plain HTTP server. That is what I decided to use.

I took swoole, created an in-memory sqlite database, and immediately climbed into the top twenty with a result of 159 seconds. Then I was pushed out, so I added a cache and cut the time to 79 seconds, which put me back in the top twenty. I was pushed out again, so I rewrote the storage from sqlite to swoole_table and cut the time to 47 seconds. Of course, I was far from the top places, but I did manage to overtake several friends of mine whose solutions were written in Go.

This is what the old rating table looks like now:

A little praise for Mail.Ru, and then we can move on.

Thanks to this wonderful championship, I got to know the swoole and workerman libraries better, learned how to optimize PHP for high loads, learned how to use Yandex.Tank, and much more. Please keep running championships like this: competition encourages you to learn new things and level up your skills.

php vs node.js vs go

To get started, I took swoole, because it is written in C++ and so should definitely run faster than workerman, which is written in PHP.

I wrote a hello world server:

```php
$server = new Swoole\Http\Server('0.0.0.0', 1080);
$server->set(['worker_num' => 1]);
$server->on('Request', function ($req, $res) {
    $res->end('hello world');
});
$server->start();
```

I launched Apache Benchmark, a Linux console utility, to make 10k requests with a concurrency of 10:

```
ab -c 10 -n 10000 http://127.0.0.1:1080/
```

and got a response time of 0.17 ms.

After that I wrote the same example on workerman:

```php
require_once __DIR__ . '/vendor/autoload.php';

use Workerman\Worker;

$http_worker = new Worker("http://0.0.0.0:1080");
$http_worker->count = 1;
$http_worker->onMessage = function ($conn, $data) {
    $conn->send("hello world");
};

Worker::runAll();
```

and got 0.43 ms, i.e. the result is about 2.5 times worse.

But I did not give up and installed the event library:

```
pecl install event
```

and set the event loop class:

```php
Worker::$eventLoopClass = '\Workerman\Events\Ev';
```

Final code:

```php
require_once __DIR__ . '/vendor/autoload.php';

use Workerman\Worker;

Worker::$eventLoopClass = '\Workerman\Events\Ev';

$http_worker = new Worker("http://0.0.0.0:1080");
$http_worker->count = 1;
$http_worker->onMessage = function ($conn, $data) {
    $conn->send("hello world");
};

Worker::runAll();
```

Measurements showed 0.11 ms, i.e. workerman, written in PHP and using libevent, was now faster than swoole, written in C++. I read tons of documentation in Chinese through Google Translate but found nothing. By the way, both libraries are written by Chinese developers, and for them comments in Chinese in the library code are normal practice.

Now I understand how the Chinese felt when they read my code.
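Going back to the event-loop step: Workerman ships a separate event-loop class per PHP extension, so the class assigned to `Worker::$eventLoopClass` has to correspond to an extension that is actually loaded. Below is a minimal sketch of such a check. The helper `pickEventLoopClass()` is hypothetical, and the class names `\Workerman\Events\Event` and `\Workerman\Events\Select` are assumptions that do not appear in the article.

```php
<?php
// Hypothetical helper: choose a Workerman event-loop class according to
// which PHP extensions are loaded. The $isLoaded parameter exists only so
// that the choice can be exercised without installing any extension.
function pickEventLoopClass(?callable $isLoaded = null): string
{
    $isLoaded = $isLoaded ?? 'extension_loaded';

    // Extension name => event-loop class that wraps it (assumed names,
    // except Ev, which is the class used in the article).
    $candidates = [
        'event' => '\Workerman\Events\Event',
        'ev'    => '\Workerman\Events\Ev',
    ];

    foreach ($candidates as $extension => $class) {
        if ($isLoaded($extension)) {
            return $class;
        }
    }

    // Fallback that needs no extension at all.
    return '\Workerman\Events\Select';
}

echo pickEventLoopClass(), "\n";
```

The returned string could then be assigned to `Worker::$eventLoopClass` before `Worker::runAll()` is called.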

I opened an issue on the swoole GitHub asking how this could happen.

There I was advised to use:

```php
$serv = new Swoole\Http\Server('0.0.0.0', 1080, SWOOLE_BASE);
```

instead of:

```php
$serv = new Swoole\Http\Server('0.0.0.0', 1080);
```

Final code:

```php
$serv = new Swoole\Http\Server('0.0.0.0', 1080, SWOOLE_BASE);
$serv->set(['worker_num' => 1]);
$serv->on('Request', function ($req, $res) {
    $res->end('hello world');
});
$serv->start();
```

I took their advice and got 0.10 ms, i.e. slightly faster than workerman.

By this point I already had a finished PHP application that I no longer knew how to optimize. It responded in 0.12 ms, so I decided to try rewriting it in something else.

I tried node.js:

```js
const http = require('http');

const server = http.createServer(function (req, res) {
    res.writeHead(200);
    res.end('hello world');
});

server.listen(1080);
```

and got 0.15 ms, i.e. 0.03 ms slower than my finished PHP application.

I took fasthttp for Go and got 0.08 ms:

Hello world on fasthttp:

```go
package main

import (
	"flag"
	"fmt"
	"log"

	"github.com/valyala/fasthttp"
)

var (
	addr     = flag.String("addr", ":1080", "TCP address to listen to")
	compress = flag.Bool("compress", false, "Whether to enable transparent response compression")
)

func main() {
	flag.Parse()

	h := requestHandler
	if *compress {
		h = fasthttp.CompressHandler(h)
	}

	if err := fasthttp.ListenAndServe(*addr, h); err != nil {
		log.Fatalf("Error in ListenAndServe: %s", err)
	}
}

func requestHandler(ctx *fasthttp.RequestCtx) {
	fmt.Fprintf(ctx, "Hello, world!")
}
```

The summary table and all tests are published on GitHub.
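To relate these timings to the 10,000 RPS figure, the per-request times can be converted into requests per second. This is only a sketch: it assumes the quoted numbers are ab's mean time per request across all concurrent requests, in which case throughput is simply the reciprocal. The helper `requestsPerSecond()` is hypothetical.

```php
<?php
// Convert a mean time per request (in milliseconds) into requests per second.
// Assumption: the time is ab's mean "across all concurrent requests",
// so throughput is its reciprocal.
function requestsPerSecond(float $msPerRequest): int
{
    return (int) round(1000 / $msPerRequest);
}

// Timings quoted in the article, in milliseconds.
$timings = [
    'swoole'               => 0.17,
    'workerman'            => 0.43,
    'workerman + event'    => 0.11,
    'swoole (SWOOLE_BASE)' => 0.10,
    'node.js'              => 0.15,
    'fasthttp (Go)'        => 0.08,
];

foreach ($timings as $server => $ms) {
    printf("%-22s %.2f ms  ~%d RPS\n", $server, $ms, requestsPerSecond($ms));
}
```

Under that assumption, 0.10 ms per request corresponds to the 10,000 RPS mentioned at the top of the article.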

splfixedarray vs array

A week before the end of the contest, the task was made somewhat harder:

- the data volume increased 10 times

- the number of requests per second increased 10 times

The data that has to be stored consists of 3 tables: users (1 million rows), locations (1 million rows) and visits (11 million rows).

Description of fields

User:

- id - the unique external identifier of the user. It is set by the testing system and is used to check server responses. 32-bit unsigned integer.
- email - the email address of the user. Unicode string up to 100 characters long. Unique field.
- first_name and last_name - the first and last names, respectively. Unicode strings up to 50 characters long.
- gender - a unicode string: m means male, f means female.
- birth_date - the date of birth, recorded as the number of seconds since the start of the UNIX epoch in UTC (in other words, a timestamp).

Location:

- id - the unique external id of the attraction. It is set by the testing system. 32-bit unsigned integer.
- place - a description of the attraction. Text field of unlimited length.
- country - the name of the country where it is located. Unicode string up to 50 characters long.
- city - the name of the city where it is located. Unicode string up to 50 characters long.
- distance - the straight-line distance from the city, in kilometers. 32-bit unsigned integer.

Visit:

- id - the unique external id of the visit. 32-bit unsigned integer.
- location - the id of the attraction. 32-bit unsigned integer.
- user - the id of the traveler. 32-bit unsigned integer.
- visited_at - the date of the visit, a timestamp.
- mark - the rating of the visit, from 0 to 5 inclusive. Integer.
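The Visit constraints above can be written down as a small check. This is a sketch rather than part of the contest solution: the functions `isUint32()` and `isValidVisit()` are hypothetical, and the code assumes 64-bit PHP, where 4294967295 is still an integer.

```php
<?php
// Largest value of a 32-bit unsigned integer (an int on 64-bit PHP).
const UINT32_MAX = 4294967295;

// True if $value is an integer that fits into 32 unsigned bits.
function isUint32($value): bool
{
    return is_int($value) && $value >= 0 && $value <= UINT32_MAX;
}

// Hypothetical validator for one decoded visit record; the rules mirror
// the field descriptions (32-bit unsigned ids, mark from 0 to 5 inclusive).
function isValidVisit(array $row): bool
{
    foreach (['id', 'location', 'user'] as $field) {
        if (!isset($row[$field]) || !isUint32($row[$field])) {
            return false;
        }
    }

    // visited_at is a timestamp; only its type is checked here.
    if (!isset($row['visited_at']) || !is_int($row['visited_at'])) {
        return false;
    }

    return isset($row['mark']) && is_int($row['mark'])
        && $row['mark'] >= 0 && $row['mark'] <= 5;
}

$row = json_decode(
    '{"id":1,"user":153,"location":17,"mark":5,"visited_at":1503695452}',
    true
);
var_dump(isValidVisit($row));
```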

My solution no longer fit into the 4 GB of memory allotted to it, so I had to look for options.

To begin with, I needed to load 11 million records from JSON files into memory.

I tried swoole_table and measured the memory consumption: 2200 MB.

Data loading code:

```php
$visits = new swoole_table(11000000);
$visits->column('id', swoole_table::TYPE_INT);
$visits->column('user', swoole_table::TYPE_INT);
$visits->column('location', swoole_table::TYPE_INT);
$visits->column('mark', swoole_table::TYPE_INT);
$visits->column('visited_at', swoole_table::TYPE_INT);
$visits->create();

$i = 1;
while ($visitsData = @file_get_contents("data/visits_$i.json")) {
    $visitsData = json_decode($visitsData, true);
    foreach ($visitsData['visits'] as $k => $row) {
        $visits->set($row['id'], $row);
    }
    $i++;
}
unset($visitsData);
gc_collect_cycles();
echo 'memory: ' . intval(memory_get_usage() / 1000000) . "\n";
```

I tried an associative array; its memory consumption is much higher: 6057 MB.

Data loading code:

```php
$visits = [];

$i = 1;
while ($visitsData = @file_get_contents("data/visits_$i.json")) {
    $visitsData = json_decode($visitsData, true);
    foreach ($visitsData['visits'] as $k => $row) {
        $visits[$row['id']] = $row;
    }
    $i++;
    echo "$i\n";
}
unset($visitsData);
gc_collect_cycles();
echo 'memory: ' . intval(memory_get_usage() / 1000000) . "\n";
```

I tried SplFixedArray; its memory consumption is slightly lower than that of a regular array: 5696 MB.

Data loading code:

```php
$visits = new SplFixedArray(11000000);

$i = 1;
while ($visitsData = @file_get_contents("data/visits_$i.json")) {
    $visitsData = json_decode($visitsData, true);
    foreach ($visitsData['visits'] as $k => $row) {
        $visits[$row['id']] = $row;
    }
    $i++;
    echo "$i\n";
}
unset($visitsData);
gc_collect_cycles();
echo 'memory: ' . intval(memory_get_usage() / 1000000) . "\n";
```

I decided to store the individual properties of a visit in separate arrays, because text keys can take up a lot of memory, i.e. I changed this code:

```php
$visits[1] = ['user' => 153, 'location' => 17, 'mark' => 5, 'visited_at' => 1503695452];
```

to this:

```php
$visits_user[1] = 153;
$visits_location[1] = 17;
$visits_mark[1] = 5;
$visits_visited_at[1] = 1503695452;
```

After splitting the nested array into separate per-property arrays, memory consumption was 2147 MB, i.e. almost 3 times less. Thus the key names in the nested array ate about 2/3 of all the memory it occupied.
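The effect can be reproduced on a small scale. Below is a minimal sketch that compares the two layouts on generated data; the helper `measure()`, the sample size and the generated values are illustrative assumptions, and the absolute numbers depend on the PHP version.

```php
<?php
// Return how many bytes the value produced by $build occupies.
function measure(callable $build): int
{
    gc_collect_cycles();
    $before = memory_get_usage();
    $data = $build();            // keep the result alive while measuring
    $after = memory_get_usage();
    unset($data);
    return $after - $before;
}

$n = 100000; // sample size chosen for illustration only

// Layout 1: every row is its own associative array.
$nested = measure(function () use ($n) {
    $visits = [];
    for ($id = 1; $id <= $n; $id++) {
        $visits[$id] = [
            'user' => $id,
            'location' => $id,
            'mark' => $id % 6,
            'visited_at' => 1503695452 + $id,
        ];
    }
    return $visits;
});

// Layout 2: one flat array per property, all indexed by visit id.
$columns = measure(function () use ($n) {
    $user = $location = $mark = $visited_at = [];
    for ($id = 1; $id <= $n; $id++) {
        $user[$id] = $id;
        $location[$id] = $id;
        $mark[$id] = $id % 6;
        $visited_at[$id] = 1503695452 + $id;
    }
    return [$user, $location, $mark, $visited_at];
});

printf("nested: %d KB, columns: %d KB\n", intdiv($nested, 1024), intdiv($columns, 1024));
```

The per-property layout avoids creating a separate small hash table for every row, which is where most of the per-row overhead comes from.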

Data loading code:

```php
$user = $location = $mark = $visited_at = [];

$i = 1;
while ($visitsData = @file_get_contents("data/visits_$i.json")) {
    $visitsData = json_decode($visitsData, true);
    foreach ($visitsData['visits'] as $k => $row) {
        $user[$row['id']] = $row['user'];
        $location[$row['id']] = $row['location'];
        $mark[$row['id']] = $row['mark'];
        $visited_at[$row['id']] = $row['visited_at'];
    }
    $i++;
    echo "$i\n";
}
unset($visitsData);
gc_collect_cycles();
echo 'memory: ' . intval(memory_get_usage() / 1000000) . "\n";
```

I decided to combine the per-property split with SplFixedArray, and memory consumption fell another 3 times, to 704 MB.

Data loading code:

```php
$user = new SplFixedArray(11000000);
$location = new SplFixedArray(11000000);
$mark = new SplFixedArray(11000000);
$visited_at = new SplFixedArray(11000000);
$user_visits = [];
$location_visits = [];

$i = 1;
while ($visitsData = @file_get_contents("data/visits_$i.json")) {
    $visitsData = json_decode($visitsData, true);
    foreach ($visitsData['visits'] as $k => $row) {
        $user[$row['id']] = $row['user'];
        $location[$row['id']] = $row['location'];
        $mark[$row['id']] = $row['mark'];
        $visited_at[$row['id']] = $row['visited_at'];

        // Index: user id => ids of that user's visits.
        if (isset($user_visits[$row['user']])) {
            $user_visits[$row['user']][] = $row['id'];
        } else {
            $user_visits[$row['user']] = [$row['id']];
        }

        // Index: location id => ids of the visits to that location.
        if (isset($location_visits[$row['location']])) {
            $location_visits[$row['location']][] = $row['id'];
        } else {
            $location_visits[$row['location']] = [$row['id']];
        }
    }
    $i++;
    echo "$i\n";
}
unset($visitsData);
gc_collect_cycles();
echo 'memory: ' . intval(memory_get_usage() / 1000000) . "\n";
```

For fun, I tried the same thing on node.js and got 780 MB.

Data loading code:

```js
const fs = require('fs');

global.visits = [];
global.users_visits = [];
global.locations_visits = [];

let i = 1;
let visitsData;
while (fs.existsSync(`data/visits_${i}.json`) && (visitsData = JSON.parse(fs.readFileSync(`data/visits_${i}.json`, 'utf8')))) {
    for (let y = 0; y < visitsData.visits.length; y++) {
        //visits[visitsData.visits[y]['id']] = visitsData.visits[y];
        visits[visitsData.visits[y]['id']] = {
            user: visitsData.visits[y].user,
            location: visitsData.visits[y].location,
            mark: visitsData.visits[y].mark,
            visited_at: visitsData.visits[y].visited_at,
            //id:visitsData.visits[y].id,
        };
    }
    i++;
}

global.gc(); // available only when node is started with --expose-gc
console.log("memory usage: " + parseInt(process.memoryUsage().heapTotal / 1000000));
```

The summary table and all tests are published on GitHub.

I also wanted to try apc_cache and redis, but they spend additional memory on storing key names. In real life they are usable, but for this championship they were not an option at all.
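The layout behind the 704 MB result (one SplFixedArray per property plus an index of visit ids per user) also has to be read back when serving requests. Below is a minimal sketch of how that might look. The class `VisitStore`, its methods and the sample rows are hypothetical and are not taken from the contest solution.

```php
<?php
// Hypothetical column store for visits: one SplFixedArray per property,
// indexed by visit id, plus an index from user id to visit ids.
final class VisitStore
{
    private $user;
    private $location;
    private $mark;
    private $visitedAt;
    private $userVisits = [];

    // $capacity must be greater than the largest visit id.
    public function __construct(int $capacity)
    {
        $this->user = new SplFixedArray($capacity);
        $this->location = new SplFixedArray($capacity);
        $this->mark = new SplFixedArray($capacity);
        $this->visitedAt = new SplFixedArray($capacity);
    }

    public function add(array $row): void
    {
        $id = $row['id'];
        $this->user[$id] = $row['user'];
        $this->location[$id] = $row['location'];
        $this->mark[$id] = $row['mark'];
        $this->visitedAt[$id] = $row['visited_at'];
        $this->userVisits[$row['user']][] = $id;
    }

    // Rebuild a single visit row from the columns.
    public function get(int $id): array
    {
        return [
            'id' => $id,
            'user' => $this->user[$id],
            'location' => $this->location[$id],
            'mark' => $this->mark[$id],
            'visited_at' => $this->visitedAt[$id],
        ];
    }

    // All visits of one user, ordered by visit date.
    public function visitsOfUser(int $userId): array
    {
        $ids = $this->userVisits[$userId] ?? [];
        usort($ids, function (int $a, int $b): int {
            return $this->visitedAt[$a] <=> $this->visitedAt[$b];
        });
        return array_map([$this, 'get'], $ids);
    }
}

// Made-up sample rows, for illustration only.
$store = new VisitStore(8);
$store->add(['id' => 1, 'user' => 153, 'location' => 17, 'mark' => 5, 'visited_at' => 1503695452]);
$store->add(['id' => 2, 'user' => 153, 'location' => 4, 'mark' => 3, 'visited_at' => 1403695452]);
$store->add(['id' => 3, 'user' => 7, 'location' => 17, 'mark' => 4, 'visited_at' => 1453695452]);

$visits = $store->visitsOfUser(153);
echo json_encode($visits), "\n";
```

Rows are only materialized as associative arrays at read time, so the per-row overhead is paid for the handful of visits in a response rather than for all 11 million records.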

Afterword

After all the optimizations, the total time was 404 seconds, which is almost 4 times slower than first place.

Thanks again to the organizers, who stayed up at night restarting hung containers, fixing bugs, finishing the site, and answering questions in Telegram.

Up-to-date summary tables and the code for all tests are published on GitHub:

- a comparison of the speed of different web servers,
- a comparison of the memory consumption of different structures.

My other article on Habr today: a free server in the cloud
