Pitfalls of Inter-AS Option C on JunOS


This article was written, so to speak, at the request of the listeners of our radio station. When configuring Option C on JunOS, many people ask the same question: why does nothing work, even though everything seems to be configured correctly? On JunOS this is not as trivial as on Cisco, and there can be several problems. Let's get straight to the point. The symptoms are as follows: you have configured a BGP-LU session between ASBRs to build the Opt.C interconnect on Juniper equipment (and, naturally, a VPNv4 session between reflectors, but that one is not the culprit). Pings between the loopbacks of PE routers in different autonomous systems succeed, but L3VPN does not work. Let's understand why this happens and how to deal with it.

Opt.C, unlike Opt.B or Opt.A, is not just a session between the ASBRs of different autonomous systems but a composite solution. It includes a BGP-LU session between ASBRs, which carries labeled routes to loopbacks between autonomous systems, and a VPNv4 session, usually between the reflectors of the different autonomous systems, which carries VPNv4 prefixes. Inside your own autonomous system, you choose how labeled routes to remote loopbacks are distributed: a BGP-LU session directly from the ASBR or via an RR, or redistribution of the BGP-LU routes into the IGP on the ASBR. Each approach has its pros and cons; you can read about them in my previous article, which describes how Opt.C works. The choice is yours, but I personally prefer it when everything works exclusively through BGP.

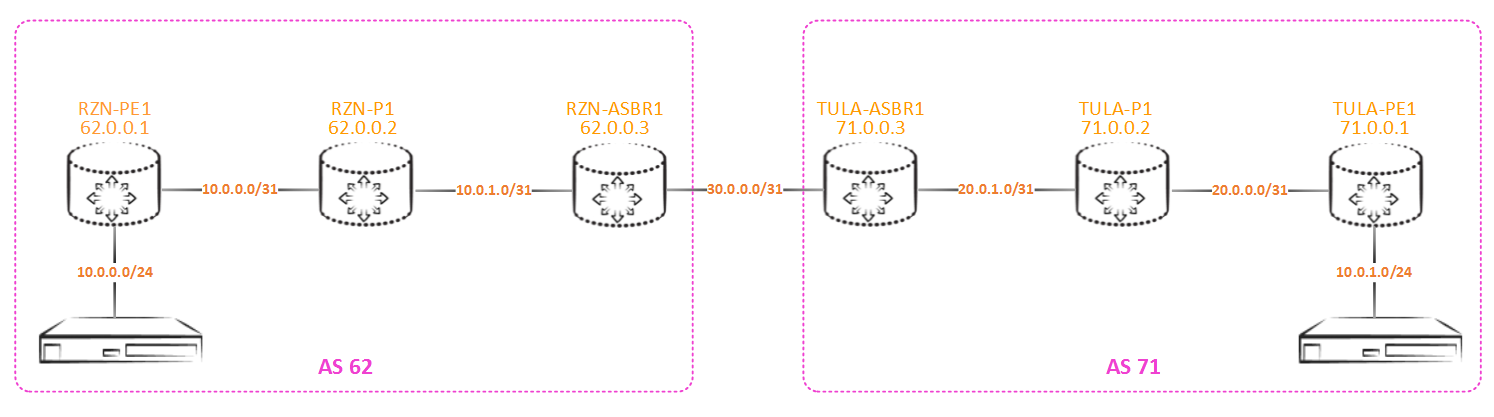

Let's reproduce the problem in an emulator and get to the bottom of it. We will use this topology:

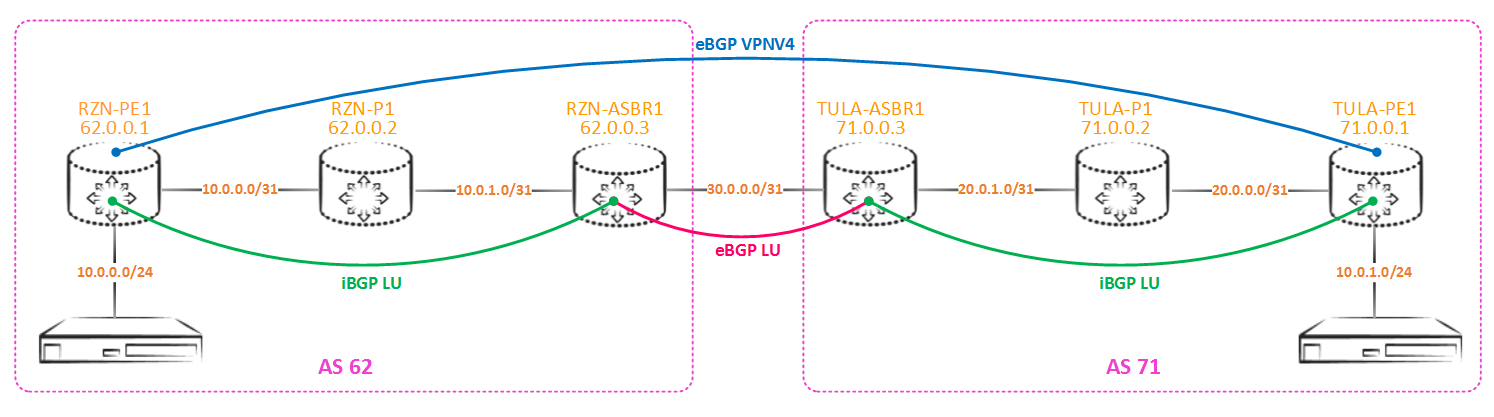

Labels received over eBGP-LU from the neighboring autonomous system will be distributed inside our local autonomous system using iBGP-LU (as I wrote earlier, you can use redistribution, but I don't like that approach; besides, it has its own pitfall, which I will cover later).

The interconnect configuration is the same on both ASBRs; for RZN-ASBR1 it looks like this:

bormoglotx@RZN-ASBR1# show protocols bgp

group PE {

type internal;

family inet {

labeled-unicast;

}

export NHS;

neighbor 62.0.0.1 {

local-address 62.0.0.3;

}

}

group ASBR {

type external;

family inet {

labeled-unicast;

}

export LO-export;

neighbor 30.0.0.1 {

local-address 30.0.0.0;

peer-as 71;

}

}

[edit]

bormoglotx@RZN-ASBR1# show policy-options policy-statement NHS

then {

next-hop self;

accept;

}

[edit]

bormoglotx@RZN-ASBR1# show policy-options policy-statement LO-export

term Lo {

from {

protocol ospf;

route-filter 62.0.0.0/24 prefix-length-range /32-/32;

}

then accept;

}

term Lo-local {

from {

protocol direct;

route-filter 62.0.0.0/24 prefix-length-range /32-/32;

}

then accept;

}

then reject;

For TULA-ASBR1 everything is the same, adjusted for addressing. The first policy is applied toward the local PE routers and simply sets the next-hop to self. The second policy is applied toward the remote ASBR and exports only the routes to loopbacks to the neighboring autonomous system.

Note: there are two terms in the policy. The first exports the loopbacks learned from the IGP; the second exports the ASBR's own loopback, since it is installed in the RIB as a direct route rather than an IGP route. This can be done with one term, but I find two more convenient.
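The single-term variant mentioned in the note could look roughly like the sketch below. It is only an illustration: the policy name LO-export-single is hypothetical, and the sketch relies on a `from` clause accepting several protocols at once.

```
# Hypothetical single-term equivalent of LO-export (configuration mode on RZN-ASBR1).
# Two "from protocol" statements in one term form a list: protocol [ ospf direct ].
set policy-options policy-statement LO-export-single term Lo from protocol ospf
set policy-options policy-statement LO-export-single term Lo from protocol direct
set policy-options policy-statement LO-export-single term Lo from route-filter 62.0.0.0/24 prefix-length-range /32-/32
set policy-options policy-statement LO-export-single term Lo then accept
set policy-options policy-statement LO-export-single then reject
```

Within one term the protocol list is matched as "any of", while the route-filter still has to match as well, so the result should be the same set of /32 loopbacks as with two terms.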

Check the state of the BGP-LU session between RZN-ASBR1 and TULA-ASBR1:

bormoglotx@RZN-ASBR1> show bgp neighbor 30.0.0.1

Peer: 30.0.0.1+179 AS 71 Local: 30.0.0.0+56580 AS 62

Type: External State: Established Flags:

Last State: OpenConfirm Last Event: RecvKeepAlive

Last Error: None

Export: [ LO-export ]

Options:

Address families configured: inet-labeled-unicast

Local Address: 30.0.0.0 Holdtime: 90 Preference: 170

Number of flaps: 0

Peer ID: 71.0.0.3 Local ID: 62.0.0.3 Active Holdtime: 90

Keepalive Interval: 30 Group index: 1 Peer index: 0

BFD: disabled, down

Local Interface: ge-0/0/0.0

NLRI for restart configured on peer: inet-labeled-unicast

NLRI advertised by peer: inet-labeled-unicast

NLRI for this session: inet-labeled-unicast

Peer supports Refresh capability (2)

Stale routes from peer are kept for: 300

Peer does not support Restarter functionality

NLRI that restart is negotiated for: inet-labeled-unicast

NLRI of received end-of-rib markers: inet-labeled-unicast

NLRI of all end-of-rib markers sent: inet-labeled-unicast

Peer supports 4 byte AS extension (peer-as 71)

Peer does not support Addpath

Table inet.0 Bit: 10000

RIB State: BGP restart is complete

Send state: in sync

Active prefixes: 3

Received prefixes: 3

Accepted prefixes: 3

Suppressed due to damping: 0

Advertised prefixes: 3

Last traffic (seconds): Received 3 Sent 27 Checked 26

Input messages: Total 1731 Updates 7 Refreshes 0 Octets 33145

Output messages: Total 1728 Updates 3 Refreshes 0 Octets 33030

Output Queue[0]: 0

Everything is fine: we advertise 3 routes and receive the same number. In my case a direct iBGP-LU session is configured between the ASBR and the PE, and it carries the loopbacks (the topology is small, so there is no reflector).

Let's move on and check what exactly RZN-ASBR1 advertises to the neighboring autonomous system:

bormoglotx@RZN-ASBR1> show route advertising-protocol bgp 30.0.0.1

inet.0: 14 destinations, 14 routes (14 active, 0 holddown, 0 hidden)

Prefix Nexthop MED Lclpref AS path

* 62.0.0.1/32 Self 2 I

* 62.0.0.2/32 Self 1 I

* 62.0.0.3/32 Self I

No surprises: we advertise only loopbacks. Now the VPNv4 session between the PE routers should come up. Let's check:

bormoglotx@RZN-PE1> show bgp summary group eBGP-VPNV4

Groups: 2 Peers: 2 Down peers: 0

Table Tot Paths Act Paths Suppressed History Damp State Pending

inet.0

10 3 0 0 0 0

bgp.l3vpn.0

1 0 0 0 0 0

Peer AS InPkt OutPkt OutQ Flaps Last Up/Dwn State|#Active/Received/Accepted/Damped...

71.0.0.1 71 6 6 0 0 1:02 Establ

bgp.l3vpn.0: 0/1/1/0

TEST1.inet.0: 0/1/1/0

The session is up and we can see that we receive one route. But the route will not make it into the routing table, because its next-hop is unusable:

bormoglotx@RZN-PE1> show route table bgp.l3vpn.0 hidden

bgp.l3vpn.0: 3 destinations, 3 routes (2 active, 0 holddown, 1 hidden)

+ = Active Route, - = Last Active, * = Both

1:1:10.0.1.0/24

[BGP/170] 00:02:14, localpref 100, from 71.0.0.1

AS path: 71 I, validation-state: unverified

Unusable

The route is in this state because there is no route to the remote loopback in the inet.3 table, which BGP uses to resolve the next-hop. To fix this, enable resolve-vpn on the BGP-LU session so that the routes are installed in both inet.0 and inet.3 (by default, BGP-LU routes go only to the inet.0 table):
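A minimal sketch of that change on RZN-PE1, assuming the iBGP-LU group toward the ASBR is called ASBR-LU (the group name is hypothetical, since the PE configuration is not shown in this article):

```
# Configuration mode on RZN-PE1; ASBR-LU is an assumed group name.
# resolve-vpn installs the labeled-unicast routes into inet.3 in addition to inet.0,
# so that VPN routes can resolve their protocol next-hop through them.
set protocols bgp group ASBR-LU family inet labeled-unicast resolve-vpn
commit
```

After the commit, the same check for hidden routes gives a different result: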

bormoglotx@RZN-PE1> show route table bgp.l3vpn.0 hidden

bgp.l3vpn.0: 3 destinations, 3 routes (3 active, 0 holddown, 0 hidden)

bormoglotx@RZN-PE1> show route table bgp.l3vpn.0 10.0.1.0/24

bgp.l3vpn.0: 3 destinations, 3 routes (3 active, 0 holddown, 0 hidden)

+ = Active Route, - = Last Active, * = Both

1:1:10.0.1.0/24

*[BGP/170] 00:05:10, localpref 100, from 71.0.0.1

AS path: 71 I, validation-state: unverified

> to 10.0.0.1 via ge-0/0/0.0, Push 16, Push 299968, Push 299792(top)

As you can see, the route is no longer hidden and has been installed in the routing table.

In theory everything is configured and the routes are there, so traffic should flow:

bormoglotx@RZN-PE1> show route table TEST1.inet.0 10.0.1.1

TEST1.inet.0: 3 destinations, 3 routes (3 active, 0 holddown, 0 hidden)

+ = Active Route, - = Last Active, * = Both

10.0.1.0/24 *[BGP/170] 00:02:54, localpref 100, from 71.0.0.1

AS path: 71 I, validation-state: unverified

> to 10.0.0.1 via ge-0/0/0.0, Push 16, Push 299968, Push 299792(top)

bormoglotx@RZN-PE1> ping routing-instance TEST1 source 10.0.0.1 10.0.1.1 rapid

PING 10.0.1.1 (10.0.1.1): 56 data bytes

.....

--- 10.0.1.1 ping statistics ---

5 packets transmitted, 0 packets received, 100% packet loss

But in fact we get an epic fail: nothing works. Meanwhile, connectivity between the loopbacks of the PE routers is fine:

bormoglotx@RZN-PE1> ping source 62.0.0.1 71.0.0.1 rapid

PING 71.0.0.1 (71.0.0.1): 56 data bytes

!!!!!

--- 71.0.0.1 ping statistics ---

5 packets transmitted, 5 packets received, 0% packet loss

round-trip min/avg/max/stddev = 9.564/13.021/16.509/2.846 ms

Let's figure it out. To do this, we need to check what the intermediate routers do with the labels.

So, RZN-PE1 pushes three labels: Push 16, Push 299968, Push 299792 (top). Label 299792 is needed to reach RZN-ASBR1, label 299968 is the label that RZN-ASBR1 generated for prefix 71.0.0.1 (TULA-PE1), and label 16 is the VPNv4 label.

The top label in the stack is 299792, and that is the only label RZN-P1 works with when it receives the transit MPLS packet; it does not look at the next label in the stack. Let's check what RZN-P1 does when it receives a packet with this label:

bormoglotx@RZN-P1> show route table mpls.0 label 299792

mpls.0: 8 destinations, 8 routes (8 active, 0 holddown, 0 hidden)

+ = Active Route, - = Last Active, * = Both

299792 *[LDP/9] 13:37:43, metric 1

> to 10.0.1.0 via ge-0/0/1.0, Pop

299792(S=0) *[LDP/9] 13:37:43, metric 1

> to 10.0.1.0 via ge-0/0/1.0, Pop

Everything is logical: since RZN-P1 is the penultimate router in the LSP to RZN-ASBR1, it removes the top label (PHP) and sends a packet with two labels to the ASBR. That is, the packet arrives at RZN-ASBR1 with 299968 as the top label in the stack:

bormoglotx@RZN-ASBR1> show route table mpls.0 label 299968

mpls.0: 14 destinations, 14 routes (14 active, 0 holddown, 0 hidden)

+ = Active Route, - = Last Active, * = Both

299968 *[VPN/170] 00:39:11

> to 30.0.0.1 via ge-0/0/0.0, Swap 300000

Nothing criminal here either: as expected, the label is swapped and the packet is forwarded to TULA-ASBR1.

Now we move to the neighboring autonomous system and see what TULA-ASBR1 does with the received packet:

bormoglotx@TULA-ASBR1> show route table mpls.0 label 300000

mpls.0: 14 destinations, 14 routes (14 active, 0 holddown, 0 hidden)

+ = Active Route, - = Last Active, * = Both

300000 *[VPN/170] 00:40:48

> to 20.0.1.1 via ge-0/0/1.0, Pop

300000(S=0) *[VPN/170] 00:40:48

> to 20.0.1.1 via ge-0/0/1.0, Pop

TULA-ASBR1 removes the top label and sends the packet toward TULA-P1 with only one label left: label 16, which is the VPNv4 label. This is our problem: TULA-P1 does not know this label and simply drops the packet (and if it did know label 16, it would be the label of some other service, and the traffic would still be dropped, only a little later):

bormoglotx@TULA-P1> show route table mpls.0 label 16

bormoglotx@TULA-P1>

Why is this happening? It comes down to which route is used as the basis for generating labels on the BGP-LU session. By default, a labeled-unicast session uses the inet.0 table: received routes are installed into it and advertised routes are taken from it. The routes to loopbacks are therefore generated from IGP routes (since those are the best routes in inet.0), in our case OSPF routes. IGP routes carry no MPLS labels, so when TULA-ASBR1 advertised the route over the BGP-LU session, it wrote into its table that the label should be removed and the packet sent out of a specific interface. In our case that leaves a packet with one label: the router does not know that there is another label under the top one, because it does not look that deep. In other words, the two LSPs are simply not stitched together.

But why does ping to the remote PE work then? It's simple: when the PE router sends an ICMP request, it does not push a VPNv4 label, so the packet carries a stack of two labels (or one, if you use redistribution). The traffic still arrives at the ASBR of the remote autonomous system (TULA-ASBR1 in our case), where, as we have already found out, the label is popped. Plain IP traffic then goes through the P router, which is in the same IGP domain as the destination host and knows the route to it (TULA-P1 does not know the return route, but that does not prevent it from forwarding the traffic). In other words, the ping succeeds only because the packet is already plain IP by the time it reaches TULA-P1.

There are several ways to solve this problem. Let's start with the MPLS traffic-engineering options. This is not the traffic engineering you are thinking of (I suspect the word RSVP came to mind). There are four traffic-engineering options:

bormoglotx@RZN-ASBR1# set protocols mpls traffic-engineering ?

Possible completions:

bgp BGP destinations only

bgp-igp BGP and IGP destinations

bgp-igp-both-ribs BGP and IGP destinations with routes in both routing tables

mpls-forwarding Use MPLS routes for forwarding, not routing

The bgp option is the default. It makes the router install LDP and RSVP routes in the inet.3 table, so only BGP has access to these routes (next-hop resolution for VPNv4 / L2-signaling / EVPN routes). Note, however, that BGP-LU routes go to inet.0, not to inet.3.

The bgp-igp option makes the router install RSVP and LDP routes into the inet.0 table, in which case inet.3 becomes empty. If your ASBR also acts as a PE terminating L3VPNs, be aware that they will stop working without workarounds. Another side effect is that routing breaks.

The bgp-igp-both-ribs option makes the router install LDP and RSVP routes into both the inet.0 table and the inet.3 table. As with the previous option, the side effect is that routing "breaks", but L3VPN will keep working.

The mpls-forwarding option makes the router copy routes from inet.3 to inet.0, but these routes are available only for forwarding. Nothing changes in inet.3. The side effect is that traffic that used to travel as plain IP (for example, BGP sessions to remote loopbacks) will now travel over MPLS (although this is probably more of a plus than a minus, depending on how you look at it).

These options should be used with caution, especially the second and third. Both of them break routing, because LDP and RSVP routes appear in the inet.0 table, where the IGP (IS-IS or OSPF) is usually the main protocol. The IGP routes now lose to the label distribution protocols, because their protocol preference value is higher (in this lab, OSPF has preference 10 and LDP has 9). Breaking routing means that if, before enabling the option, the best route to some loopback was learned via OSPF / IS-IS, its place is now taken by an LDP / RSVP route. If, for example, some export policy matches on from protocol ospf, that condition will no longer be met, with all the ensuing consequences. The second option, as noted above, is also dangerous because the inet.3 table becomes empty. Keep that in mind. The mpls-forwarding option, however, is exactly what we need. When you enable it, routes from inet.3 are copied to inet.0 for forwarding only, as described above.

It is important to understand that none of these options creates new LSPs; existing LSPs are simply copied (or moved) to inet.0. By using the second, third, or fourth option in the list, you allow the router to use its LSPs to forward not only L2/L3VPN traffic but also ordinary traffic that usually travels as plain IP (provided there is an LSP between the points exchanging this traffic, for example when those points are router loopbacks).
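For reference, enabling the fourth option is a one-line change. The sketch below assumes it is applied on the ASBR that advertises the labels (TULA-ASBR1 in this example, and RZN-ASBR1 for the opposite direction):

```
# Configuration mode on the ASBR; the statement comes from the completion list above.
set protocols mpls traffic-engineering mpls-forwarding
commit
```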

Let's turn this option on and check what happens. As you remember, TULA-ASBR1 earlier advertised label 300000 and performed a pop instead of a swap. After the option is enabled, the labels are regenerated:

bormoglotx@RZN-ASBR1> show route receive-protocol bgp 30.0.0.1 71.0.0.1/32 detail

inet.0: 14 destinations, 16 routes (14 active, 0 holddown, 0 hidden)

* 71.0.0.1/32 (1 entry, 1 announced)

Accepted

Route Label: 300080

Nexthop: 30.0.0.1

MED: 2

AS path: 71 I

Now TULA-ASBR1 advertises label 300080 to us. Let's see what the router does with a transit packet carrying this label:

bormoglotx@TULA-ASBR1> show route table mpls.0 label 300080

mpls.0: 18 destinations, 18 routes (18 active, 0 holddown, 0 hidden)

+ = Active Route, - = Last Active, * = Both

300080 *[VPN/170] 00:04:07

> to 20.0.1.1 via ge-0/0/1.0, Swap 299776

Now everything is as it should be: the label is swapped, and our LSPs are stitched together. And here is what happens to the inet.0 routing table:

bormoglotx@TULA-ASBR1> show route table inet.0 71.0.0.0/24

inet.0: 14 destinations, 16 routes (14 active, 0 holddown, 0 hidden)

@ = Routing Use Only, # = Forwarding Use Only

+ = Active Route, - = Last Active, * = Both

71.0.0.1/32 @[OSPF/10] 00:05:50, metric 2

> to 20.0.1.1 via ge-0/0/1.0

#[LDP/9] 00:05:50, metric 1

> to 20.0.1.1 via ge-0/0/1.0, Push 299776

71.0.0.2/32 @[OSPF/10] 00:05:50, metric 1

> to 20.0.1.1 via ge-0/0/1.0

#[LDP/9] 00:05:50, metric 1

> to 20.0.1.1 via ge-0/0/1.0

71.0.0.3/32 *[Direct/0] 14:16:29

> via lo0.0

New routes have appeared in the table. For forwarding we now use the LSP (the LDP / RSVP route, marked with #), and for routing we use the same IGP routes as before (now marked with @). Note that the routes advertised to the neighboring ASBR are still generated from OSPF routes, which have no labels. But since the LDP route is now used for forwarding, the router performs a swap instead of a pop.

Let's check that L3VPN has come up:

bormoglotx@RZN-PE1> ping routing-instance TEST1 source 10.0.0.1 10.0.1.1 rapid

PING 10.0.1.1 (10.0.1.1): 56 data bytes

!!!!!

--- 10.0.1.1 ping statistics ---

5 packets transmitted, 5 packets received, 0% packet loss

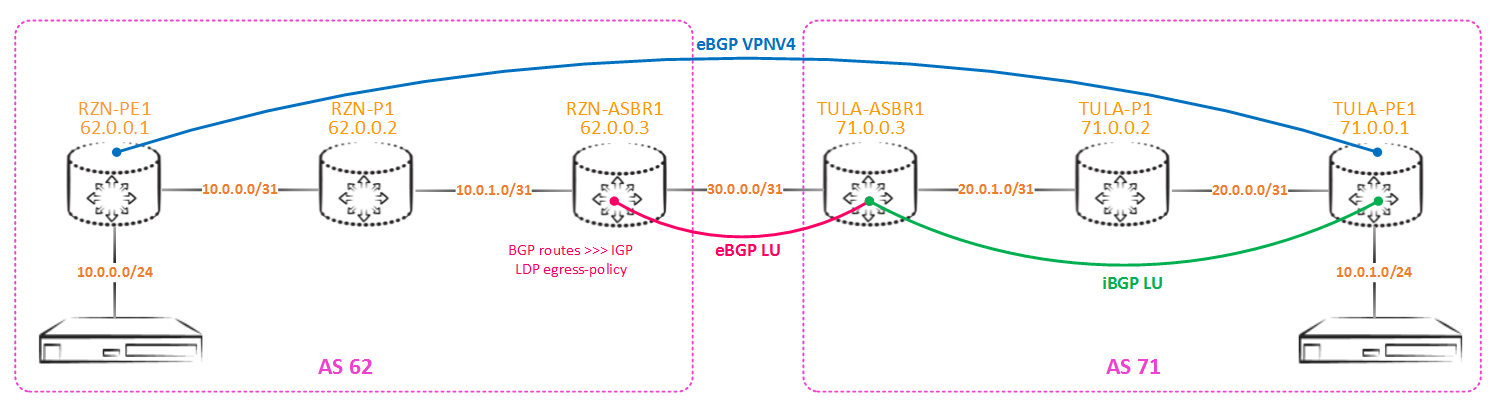

round-trip min/avg/max/stddev = 6.861/16.084/47.210/15.596 msIf you are going to redistribute in igp on ASBR:

then you will need to do an egress-policy on the ldp protocol so that ASBR will generate labels for the received prefixes and distribute them within the network. By default, JunOS generates a tag only for its loopback, as well as to routes with tags received via ldp, unlike the same Cisco, therefore, there is no such problem on Cisco equipment (but without it there are enough problems).

On RZN-ASBR1, I exported the routes received via BGP into OSPF (the BGP-LU session between the PE and the ASBR is now gone):

bormoglotx@RZN-ASBR1> show route receive-protocol bgp 30.0.0.1

inet.0: 14 destinations, 14 routes (14 active, 0 holddown, 0 hidden)

Prefix Nexthop MED Lclpref AS path

* 71.0.0.1/32 30.0.0.1 2 71 I

* 71.0.0.2/32 30.0.0.1 1 71 I

* 71.0.0.3/32 30.0.0.1 71 I

According to the export policy, these routes go to ospf:

bormoglotx@RZN-ASBR1> show configuration protocols ospf

export OSPF-EXPORT;

area 0.0.0.0 {

interface lo0.0 {

passive;

}

interface ge-0/0/1.0 {

interface-type p2p;

}

interface ge-0/0/0.0 {

passive;

}

}

bormoglotx@RZN-ASBR1> show configuration policy-options policy-statement OSPF-EXPORT

term Remote-Lo {

from {

route-filter 71.0.0.0/24 prefix-length-range /32-/32;

}

then accept;

}

then reject;

The routes received from the neighboring autonomous system enter the OSPF database as external routes and are then distributed inside the IGP domain:

bormoglotx@RZN-ASBR1> show ospf database

OSPF database, Area 0.0.0.0

Type ID Adv Rtr Seq Age Opt Cksum Len

Router 62.0.0.1 62.0.0.1 0x80000016 2576 0x22 0xf0fb 60

Router 62.0.0.2 62.0.0.2 0x80000017 2575 0x22 0xf57b 84

Router *62.0.0.3 62.0.0.3 0x8000001a 80 0x22 0xd2dd 72

OSPF AS SCOPE link state database

Type ID Adv Rtr Seq Age Opt Cksum Len

Extern *71.0.0.1 62.0.0.3 0x80000001 80 0x22 0x2afc 36

Extern *71.0.0.2 62.0.0.3 0x80000001 80 0x22 0x1611 36

Extern *71.0.0.3 62.0.0.3 0x80000001 80 0x22 0x225 36

Check that the route is present on RZN-PE1:

bormoglotx@RZN-PE1> show route 71.0.0.1/32

inet.0: 13 destinations, 13 routes (13 active, 0 holddown, 0 hidden)

+ = Active Route, - = Last Active, * = Both

71.0.0.1/32 *[OSPF/150] 00:02:10, metric 2, tag 0

> to 10.0.0.1 via ge-0/0/0.0

The route is only in the inet.0 table, but for BGP to install a route in the bgp.l3vpn.0 table, an LSP to the protocol next-hop must be present in the inet.3 table. The inet.3 table currently has no route to the 71.0.0.1/32 prefix, because LDP does not generate labels for these prefixes:

bormoglotx@RZN-ASBR1> show ldp database

Input label database, 62.0.0.3:0--62.0.0.2:0

Label Prefix

299776 62.0.0.1/32

3 62.0.0.2/32

299792 62.0.0.3/32

Output label database, 62.0.0.3:0--62.0.0.2:0

Label Prefix

300640 62.0.0.1/32

300656 62.0.0.2/32

3 62.0.0.3/32

For the LSP to appear, configure an egress-policy for LDP on RZN-ASBR1 stating that labels should be generated for prefixes from the 71.0.0.0/24 range:

bormoglotx@RZN-ASBR1> show configuration protocols ldp

egress-policy LDP-EXPORT;

interface ge-0/0/1.0;

bormoglotx@RZN-ASBR1> show configuration policy-options policy-statement LDP-EXPORT

term Local-Lo {

from {

route-filter 62.0.0.3/32 exact;

}

then accept;

}

term Remote-Lo {

from {

route-filter 71.0.0.0/24 prefix-length-range /32-/32;

}

then accept;

}

Be careful with the egress-policy for LDP: most often, an engineer configuring it for the first time ends up with an empty inet.3. The prefixes you specify in the policy are advertised via LDP, but the router then considers itself the originator of all these FECs and does not install them in inet.3 (local FECs are not installed in inet.3; by default the local FEC on JunOS is the router's own loopback, and LDP has no reason to build an LSP to itself). In the policy above, the first term advertises my own loopback and the second term advertises the loopbacks of the neighboring autonomous system learned via BGP. If you want the routes from the other autonomous system to be installed in inet.3 on the ASBR, add the resolve-vpn option to the session toward the neighboring autonomous system:

bormoglotx@RZN-ASBR1> show configuration protocols bgp group ASBR

type external;

family inet {

labeled-unicast {

resolve-vpn;

}

}

export LO-export;

neighbor 30.0.0.1 {

local-address 30.0.0.0;

peer-as 71;

}

Now labels will be generated for prefixes from the range 71.0.0.0/24:

bormoglotx@RZN-ASBR1> show ldp database

Input label database, 62.0.0.3:0--62.0.0.2:0

Label Prefix

299776 62.0.0.1/32

3 62.0.0.2/32

299792 62.0.0.3/32

299952 71.0.0.1/32

299968 71.0.0.2/32

299984 71.0.0.3/32

Output label database, 62.0.0.3:0--62.0.0.2:0

Label Prefix

3 62.0.0.1/32

3 62.0.0.2/32

3 62.0.0.3/32

300704 71.0.0.1/32

300720 71.0.0.2/32

300736 71.0.0.3/32

After these changes, a route to the loopbacks of the neighboring autonomous system should appear in inet.3 on RZN-PE1 and, as a result, connectivity inside the L3VPN should be restored:

bormoglotx@RZN-PE1> show route 71.0.0.1/32

inet.0: 13 destinations, 13 routes (13 active, 0 holddown, 0 hidden)

+ = Active Route, - = Last Active, * = Both

71.0.0.1/32 *[OSPF/150] 00:09:17, metric 2, tag 0

> to 10.0.0.1 via ge-0/0/0.0

inet.3: 5 destinations, 5 routes (5 active, 0 holddown, 0 hidden)

+ = Active Route, - = Last Active, * = Both

71.0.0.1/32 *[LDP/9] 00:02:14, metric 1

> to 10.0.0.1 via ge-0/0/0.0, Push 299952

bormoglotx@RZN-PE1> ping routing-instance TEST1 source 10.0.0.1 10.0.1.1 rapid

PING 10.0.1.1 (10.0.1.1): 56 data bytes

!!!!!

--- 10.0.1.1 ping statistics ---

5 packets transmitted, 5 packets received, 0% packet loss

round-trip min/avg/max/stddev = 7.054/11.118/21.571/5.493 ms

That was the first solution to the problem. Now let's move on to the second.

As I said earlier, by default labeled-unicast routes are installed into and advertised from the inet.0 table, which by default also contains no labeled routes. We can make the router send and receive these routes using the inet.3 table instead. It is done like this:

bormoglotx@RZN-ASBR1# show protocols bgp group ASBR

type external;

family inet {

labeled-unicast {

rib {

inet.3;

}

}

}

export LO-export;

neighbor 30.0.0.1 {

local-address 30.0.0.0;

peer-as 71;

}

Note: the export policy must not match on the IGP protocol, since OSPF / IS-IS routes are not in the inet.3 table.
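One way to adjust the LO-export policy for this, sketched under the assumption that LDP is the only label protocol inside the autonomous system (as in this lab):

```
# Configuration mode on RZN-ASBR1.
# inet.3 holds LDP routes to the loopbacks, not OSPF routes, so match on ldp instead.
delete policy-options policy-statement LO-export term Lo from protocol ospf
set policy-options policy-statement LO-export term Lo from protocol ldp
```

Simply deleting the protocol match and relying on the route-filter alone should also work in this sketch; the Lo-local term is left untouched.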

Now let's see what we give to the neighboring autonomous system:

bormoglotx@RZN-ASBR1> show route advertising-protocol bgp 30.0.0.1

inet.3: 4 destinations, 4 routes (4 active, 0 holddown, 0 hidden)

Prefix Nexthop MED Lclpref AS path

* 62.0.0.1/32 Self 1 I

* 62.0.0.2/32 Self 1 I

Only two routes, where before there were three. The route to the router itself (the RZN-ASBR1 loopback) is not advertised. The reason is easy to see if you look for your own loopback in the inet.3 table:

bormoglotx@RZN-ASBR1> show route table inet.3 62.0.0.3/32

bormoglotx@RZN-ASBR1>

Since the local FEC is not installed in inet.3, it is logical that there is no route. For our loopback to be advertised, we need to copy it from the inet.0 table to the inet.3 table. To do this, create a rib-group and attach it to interface-routes:

bormoglotx@RZN-ASBR1> show configuration | compare rollback 4

[edit routing-options]

+ interface-routes {

+ rib-group inet inet.0>>>inet.3-Local-Lo;

+ }

+ rib-groups {

+ inet.0>>>inet.3-Local-Lo {

+ import-rib [ inet.0 inet.3 ];

+ import-policy Local-Lo;

+ }

+ }

[edit policy-options]

+ policy-statement Local-Lo {

+ term Lo {

+ from {

+ protocol direct;

+ route-filter 62.0.0.0/24 prefix-length-range /32-/32;

+ }

+ then accept;

+ }

+ then reject;

+ }

So this line:

import-rib [ inet.0 inet.3 ]

tells us that routes from the inet.0 table should be copied to the inet.3 table. And this line:

import-policy Local-Lo

applies a policy to the import so that not all routes are copied. Finally, the rib-group has to be attached somewhere. Since we are copying a direct route, we attach it to interface-routes.

After these changes, the route to the loopback will be present in the inet.3 table (installed there as a Direct route, as in inet.0, rather than as an LDP / RSVP route):

[edit]

bormoglotx@RZN-ASBR1# run show route 62.0.0.3/32 table inet.3

inet.3: 5 destinations, 5 routes (5 active, 0 holddown, 0 hidden)

+ = Active Route, - = Last Active, * = Both

62.0.0.3/32 *[Direct/0] 00:00:07

> via lo0.0

Now we can check whether our loopback is advertised to the neighboring autonomous system:

bormoglotx@RZN-ASBR1> show route advertising-protocol bgp 30.0.0.1

inet.3: 5 destinations, 5 routes (5 active, 0 holddown, 0 hidden)

Prefix Nexthop MED Lclpref AS path

* 62.0.0.1/32 Self 1 I

* 62.0.0.2/32 Self 1 I

* 62.0.0.3/32 Self I

One problem is solved. But a second one arises:

bormoglotx@RZN-PE1> show route table TEST1.inet.0

TEST1.inet.0: 2 destinations, 2 routes (2 active, 0 holddown, 0 hidden)

+ = Active Route, - = Last Active, * = Both

10.0.0.0/24 *[Direct/0] 01:38:46

> via ge-0/0/5.0

10.0.0.1/32 *[Local/0] 01:38:46

Local via ge-0/0/5.0

Now there are no routes to the neighboring autonomous system in the L3VPN, because the BGP session between the PE routers is down:

bormoglotx@RZN-PE1> show bgp summary group eBGP-VPNV4

Groups: 2 Peers: 2 Down peers: 1

Table Tot Paths Act Paths Suppressed History Damp State Pending

inet.0

7 0 0 0 0 0

bgp.l3vpn.0

0 0 0 0 0 0

Peer AS InPkt OutPkt OutQ Flaps Last Up/Dwn State|#Active/Received/Accepted/Damped...

71.0.0.1 71 179 183 0 1 18:34 Active

The reason is that the routes on the ASBR are now in the inet.3 table, while the labeled-unicast session toward the PEs is built from inet.0, so naturally nothing is advertised to the PEs. To fix this, create another rib-group on the ASBR and copy the routes from inet.3 to inet.0:

[edit]

bormoglotx@RZN-ASBR1# show routing-options rib-groups inet.3>>>inet.0-Remote-Lo

import-rib [ inet.3 inet.0 ];

import-policy Remote-Lo;

[edit]

bormoglotx@RZN-ASBR1# show policy-options policy-statement Remote-Lo

term Lo {

from {

route-filter 71.0.0.0/24 prefix-length-range /32-/32;

}

then accept;

}

then reject;

And we attach it to the BGP session over which we receive these routes:

bormoglotx@RZN-ASBR1# show protocols bgp group ASBR

type external;

family inet {

labeled-unicast {

rib-group inet.3>>>inet.0-Remote-Lo;

rib {

inet.3;

}

}

}

export LO-export;

neighbor 30.0.0.1 {

local-address 30.0.0.0;

peer-as 71;

}

A similar configuration should be applied on the second ASBR, if you have redistribution into the IGP there too. After that, the routes to the remote loopbacks will be installed in the routing table on the PEs and the eBGP VPNv4 session will come up (nothing stops you from using redistribution in one autonomous system and exclusively BGP in the other; the choice is up to the network administrators).
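As a rough illustration, the mirrored rib-group part on TULA-ASBR1 might look like this. It assumes the same group, rib-group and policy names as on RZN-ASBR1 and uses 62.0.0.0/24 as the range of remote loopbacks; the rib-group for the ASBR's own loopback is omitted for brevity.

```
# Configuration mode on TULA-ASBR1 (names mirrored from RZN-ASBR1, which is an assumption).
set policy-options policy-statement Remote-Lo term Lo from route-filter 62.0.0.0/24 prefix-length-range /32-/32
set policy-options policy-statement Remote-Lo term Lo then accept
set policy-options policy-statement Remote-Lo then reject
set routing-options rib-groups inet.3>>>inet.0-Remote-Lo import-rib [ inet.3 inet.0 ]
set routing-options rib-groups inet.3>>>inet.0-Remote-Lo import-policy Remote-Lo
set protocols bgp group ASBR family inet labeled-unicast rib-group inet.3>>>inet.0-Remote-Lo
set protocols bgp group ASBR family inet labeled-unicast rib inet.3
```

With both ASBRs configured, the session state on RZN-PE1 changes: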

bormoglotx@RZN-PE1> show bgp summary group eBGP-VPNV4

Groups: 2 Peers: 2 Down peers: 0

Table Tot Paths Act Paths Suppressed History Damp State Pending

inet.0

10 3 0 0 0 0

bgp.l3vpn.0

1 1 0 0 0 0

Peer AS InPkt OutPkt OutQ Flaps Last Up/Dwn State|#Active/Received/Accepted/Damped...

71.0.0.1 71 184 189 0 1 1:11 Establ

bgp.l3vpn.0: 1/1/1/0

TEST1.inet.0: 1/1/1/0

Now you can check the connectivity inside L3VPN:

bormoglotx@RZN-PE1> ping routing-instance TEST1 source 10.0.0.1 10.0.1.1 rapid

PING 10.0.1.1 (10.0.1.1): 56 data bytes

!!!!!

--- 10.0.1.1 ping statistics ---

5 packets transmitted, 5 packets received, 0% packet loss

round-trip min/avg/max/stddev = 4.332/37.029/83.503/29.868 ms

I hope there will now be fewer questions about configuring Opt.C on JunOS. But if anyone still has questions, write in the comments or message me on Telegram.

Thanks for your attention.