Phone Pixel 3 is learning to determine the depth in photos

- Transfer

Portrait mode on Pixel smartphones allows you to take professional-looking photos that draw attention to the subject with a blurred background. Last year, we described how we calculate depth using a single camera and Phase-Detection Autofocus (PDAF), also known as dual-pixel autofocus . This process used the traditional stereo algorithm without learning. This year at Pixel 3, we adopted machine learning to improve the depth estimate and to produce even better results in portrait mode.

Left: original image captured in HDR +. On the right is a comparison of shooting results in portrait mode using depth from traditional stereo and machine learning. Learning outcomes result in fewer errors. In the traditional stereo result, the depth of many horizontal lines behind a man is incorrectly estimated to be equal to the depth of the man himself, as a result of which they remain sharp.

In the past year, we have described that portrait mode uses a neural network for the separation of pixels belonging to images of people and the background image, and complements the two-level mask depth information obtained from the PDAF-pixels. All this was done to get a blur, depending on the depth close to what a professional camera can give.

For PDAF, it takes two slightly different shots of the scene. Switching between shots, you can see that the person does not move, and the background shifts horizontally - this effect is called parallax . Since parallax is a function of the distance of a point from the camera and the distance between two points of view, we can determine the depth by comparing each point in one picture with its corresponding point in another.

The PDAF images on the left and in the middle look similar, but the parallax can be seen on the right in an enlarged fragment. It is easiest to notice it in a circular structure in the center of the increase.

However, the search for such correspondences in PDAF images (this method is called stereo depth) is an extremely difficult task, since the points between the photos are shifted very little. Moreover, all stereo technologies suffer from aperture problems. If you look at the scene through a small aperture, it will be impossible to find the correspondence of points for lines parallel to the stereo baseline, that is, the line connecting the two cameras. In other words, studying in the presented photo horizontal lines (or vertical lines in pictures with portrait orientation) all shifts in one image relative to another look approximately the same. In last year's portrait mode, all these factors could lead to errors in determining the depth and the appearance of unpleasant artifacts.

With the portrait mode in Pixel 3, we correct these errors, using the fact that the parallax of stereo photographs is just one of many clues present in the images. For example, points that are far from the focus plane seem less sharp, and this will be a hint from the defocused depth. In addition, even when viewing an image on a flat screen, we can easily estimate the distance to objects, since we know the approximate size of everyday objects (that is, we can use the number of pixels depicting a person’s face to estimate how far he is). This will be a semantic hint.

Manually developing an algorithm that combines these prompts is extremely difficult, but using the MO, we can do it while improving the performance of the prompts from the PDAF parallax. Specifically, we are training a convolutional neural network written in TensorFlow , which accepts pixels from a PDAF and learns to predict depth. This new, improved depth estimation method based on MO is used in Pixel 3 portrait mode.

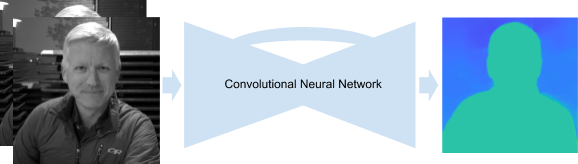

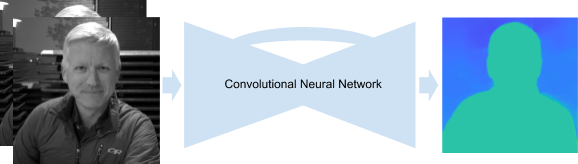

Our convolutional neural network accepts a PDAF image as input and produces a depth map. The network uses an encoder-decoder-style architecture with additional connections within the layer [ skip connections ] and residual blocks [ residual blocks ].

To train the network, we need a lot of PDAF images and the corresponding high-quality depth maps. And since we need the depth prediction to be useful in portrait mode, we need the training data to be similar to the photos that users take from smartphones.

To do this, we designed a special device “Frankenfon”, in which five Pixel 3 phones were combined and a WiFi connection was established between them, which allowed us to take photos from all phones at the same time (with a difference of no more than 2 ms). With this device, we calculated high-quality depth maps based on photos, using both motion and stereo from several points of view.

Left: device for collecting training data. In the middle: an example of switching between five photos. Synchronization of cameras ensures the ability to calculate the depth in dynamic scenes. Right: Total Depth. Low confidence points, where the juxtaposition of pixels in different photographs was uncertain due to the weakness of the textures, are colored black, and are not used in training.

The data obtained with this device turned out to be ideal for network training for the following reasons:

However, despite the ideality of the data obtained using this device, it is still extremely difficult to predict the absolute depth of scene objects — any given PDAF pair can correspond to various depth maps (it all depends on the characteristics of the lenses, focal length, etc.). To take all this into account, we estimate the relative depth of the objects in the scene, which is enough to obtain satisfactory results in portrait mode.

Estimation of depth using MO on Pixel 3 should work quickly so that users do not have to wait too long for the results of images in portrait mode. However, to get good depth estimates using small defocus and parallax, you have to feed neural network photos in full resolution. To ensure fast results, we use TensorFlow Lite , a cross-platform solution for running MO models on mobile and embedded devices, as well as a powerful Pixel 3 GPU, which allows you to quickly calculate the depth of unusually large input data. Then we combine the obtained depth estimates with masks from our neural network, highlighting people in order to get beautiful shooting results in portrait mode.

In the Google Camera App versions 6.1 and above, our depth maps are embedded in the images of portrait mode. This means that you can use the Google Photos Depth Editor to change the degree of blur and focus point after you have already taken the picture. You can also use third-party programs to extract depth maps from jpeg, and study them yourself. Also via the link you can take an album showing relative depth maps and corresponding images in portrait mode, for comparing the traditional stereo and MO-approach.

Left: original image captured in HDR +. On the right is a comparison of shooting results in portrait mode using depth from traditional stereo and machine learning. Learning outcomes result in fewer errors. In the traditional stereo result, the depth of many horizontal lines behind a man is incorrectly estimated to be equal to the depth of the man himself, as a result of which they remain sharp.

A brief excursion into the previous material

In the past year, we have described that portrait mode uses a neural network for the separation of pixels belonging to images of people and the background image, and complements the two-level mask depth information obtained from the PDAF-pixels. All this was done to get a blur, depending on the depth close to what a professional camera can give.

For PDAF, it takes two slightly different shots of the scene. Switching between shots, you can see that the person does not move, and the background shifts horizontally - this effect is called parallax . Since parallax is a function of the distance of a point from the camera and the distance between two points of view, we can determine the depth by comparing each point in one picture with its corresponding point in another.

The PDAF images on the left and in the middle look similar, but the parallax can be seen on the right in an enlarged fragment. It is easiest to notice it in a circular structure in the center of the increase.

However, the search for such correspondences in PDAF images (this method is called stereo depth) is an extremely difficult task, since the points between the photos are shifted very little. Moreover, all stereo technologies suffer from aperture problems. If you look at the scene through a small aperture, it will be impossible to find the correspondence of points for lines parallel to the stereo baseline, that is, the line connecting the two cameras. In other words, studying in the presented photo horizontal lines (or vertical lines in pictures with portrait orientation) all shifts in one image relative to another look approximately the same. In last year's portrait mode, all these factors could lead to errors in determining the depth and the appearance of unpleasant artifacts.

Improving depth assessment

With the portrait mode in Pixel 3, we correct these errors, using the fact that the parallax of stereo photographs is just one of many clues present in the images. For example, points that are far from the focus plane seem less sharp, and this will be a hint from the defocused depth. In addition, even when viewing an image on a flat screen, we can easily estimate the distance to objects, since we know the approximate size of everyday objects (that is, we can use the number of pixels depicting a person’s face to estimate how far he is). This will be a semantic hint.

Manually developing an algorithm that combines these prompts is extremely difficult, but using the MO, we can do it while improving the performance of the prompts from the PDAF parallax. Specifically, we are training a convolutional neural network written in TensorFlow , which accepts pixels from a PDAF and learns to predict depth. This new, improved depth estimation method based on MO is used in Pixel 3 portrait mode.

Our convolutional neural network accepts a PDAF image as input and produces a depth map. The network uses an encoder-decoder-style architecture with additional connections within the layer [ skip connections ] and residual blocks [ residual blocks ].

Neural Network Training

To train the network, we need a lot of PDAF images and the corresponding high-quality depth maps. And since we need the depth prediction to be useful in portrait mode, we need the training data to be similar to the photos that users take from smartphones.

To do this, we designed a special device “Frankenfon”, in which five Pixel 3 phones were combined and a WiFi connection was established between them, which allowed us to take photos from all phones at the same time (with a difference of no more than 2 ms). With this device, we calculated high-quality depth maps based on photos, using both motion and stereo from several points of view.

Left: device for collecting training data. In the middle: an example of switching between five photos. Synchronization of cameras ensures the ability to calculate the depth in dynamic scenes. Right: Total Depth. Low confidence points, where the juxtaposition of pixels in different photographs was uncertain due to the weakness of the textures, are colored black, and are not used in training.

The data obtained with this device turned out to be ideal for network training for the following reasons:

- Five points of view guarantee the presence of parallax in several directions, which saves us from the problem of aperture.

- The location of the cameras ensures that any point of the image is repeated on at least two photographs, which reduces the number of points that cannot be matched.

- The baseline, that is, the distance between the cameras, is larger than that of the PDAF, which guarantees a more accurate depth estimate.

- Synchronization of cameras ensures the ability to calculate the depth in dynamic scenes.

- The portability of the device ensures the possibility of taking photographs in nature, simulating photos that users take with the help of smartphones.

However, despite the ideality of the data obtained using this device, it is still extremely difficult to predict the absolute depth of scene objects — any given PDAF pair can correspond to various depth maps (it all depends on the characteristics of the lenses, focal length, etc.). To take all this into account, we estimate the relative depth of the objects in the scene, which is enough to obtain satisfactory results in portrait mode.

Combine it all

Estimation of depth using MO on Pixel 3 should work quickly so that users do not have to wait too long for the results of images in portrait mode. However, to get good depth estimates using small defocus and parallax, you have to feed neural network photos in full resolution. To ensure fast results, we use TensorFlow Lite , a cross-platform solution for running MO models on mobile and embedded devices, as well as a powerful Pixel 3 GPU, which allows you to quickly calculate the depth of unusually large input data. Then we combine the obtained depth estimates with masks from our neural network, highlighting people in order to get beautiful shooting results in portrait mode.

Try it yourself

In the Google Camera App versions 6.1 and above, our depth maps are embedded in the images of portrait mode. This means that you can use the Google Photos Depth Editor to change the degree of blur and focus point after you have already taken the picture. You can also use third-party programs to extract depth maps from jpeg, and study them yourself. Also via the link you can take an album showing relative depth maps and corresponding images in portrait mode, for comparing the traditional stereo and MO-approach.