How we didn't win the hackathon

From November 30 to December 2, the PicsArt AI hackathon, with a prize pool of $100,000, took place in Moscow. The main task was to build an AI solution for processing photos or videos that could be used in the PicsArt app. My colleague at the time, Arthur Kuzin, suggested we take part and got me interested in the idea of anonymizing users' personal photos while preserving details (facial expressions, etc.). Arthur also brought in Ilya Kibardin, an MIPT student (someone had to write the code). The name came to us very quickly: DeepAnon.

This is a story about our solution, its degradation (sorry, development), the hackathon itself, and why you shouldn't bend over backwards to please the jury.

Before the hackathon

There were three ways to get into the hackathon. The first was to reach the top 50 of the face segmentation leaderboard (“Data science genius”). The second was to form a team of up to 4 people right away and show a working MVP (“Vigorous team”). The third was simply to send a description of your brilliant idea. We decided not to waste time and built an MVP a week before the hackathon. On top of that, we thought it would be cool to compete on the leaderboard as well, so we stayed in the top 10 the whole time and, accordingly, in the money.

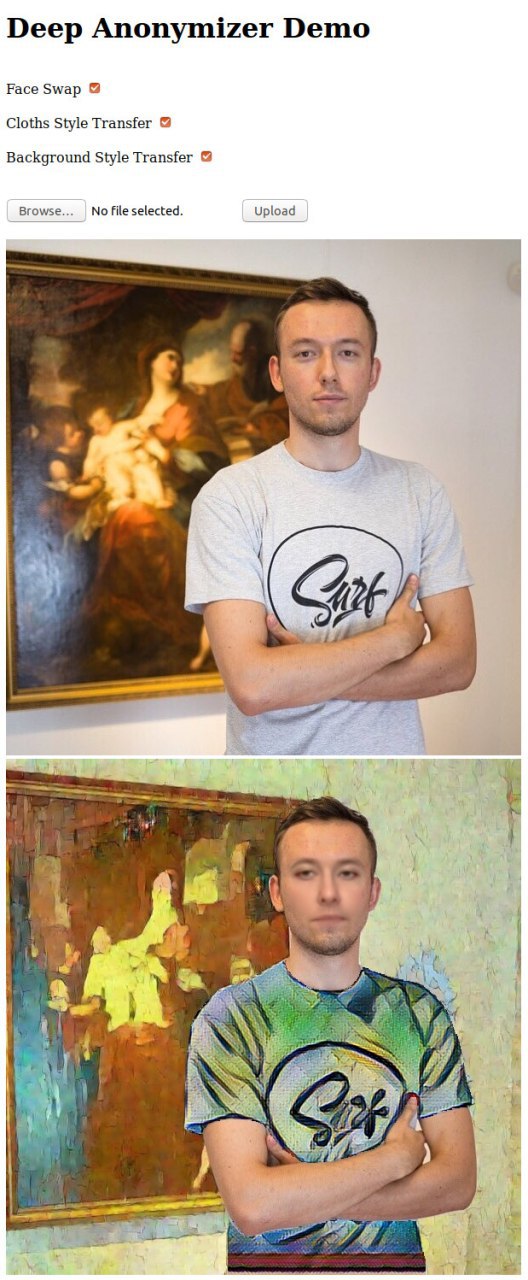

The main idea of our product is this: people thoughtlessly post photos of themselves (sometimes doing not entirely legal things) on social networks, which they may later regret or even be punished for in the future. To protect yourself, you can censor your face with “squares” and blur the background. But then not only the face is hidden, but also the emotions on it, and the background is ruined. To preserve the facial emotions and the objects in the background, our application replaces all the faces in the photo and turns the background cartoonish. And so that a person cannot be recognized by their clothes, the clothes are replaced too.

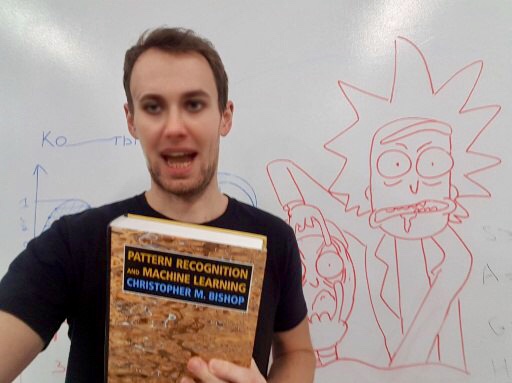

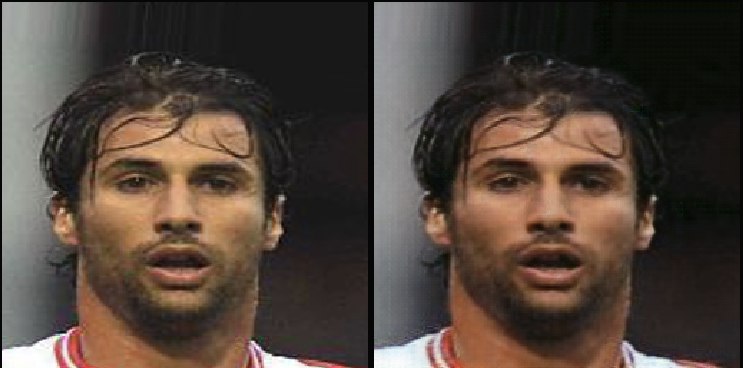

Over the week we managed to do almost everything we had planned. Each stage used a separate neural network (sometimes even several). First, all faces in the image were detected and replaced with the same celebrity face, using a DeepFake-like solution. Next, human segmentation separated the background, which was then style-transferred into a cartoon style (Rick and Morty). In the last stage, individual pieces of clothing were segmented and changed with a random color shift in HSV space, since we didn't manage to get style transfer working for clothes before showing the MVP to the jury.
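A rough sketch of that MVP clothes step, assuming the segmentation network has already produced a binary clothing mask. Pixels are plain 8-bit RGB tuples in nested lists so the example needs only the standard library; `shift_clothes_hue` and `random_clothes_recolor` are hypothetical names, not code from our repository. Only the hue is rotated, so saturation and brightness (folds and shadows) survive.

```python
import colorsys
import random
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]  # 8-bit RGB
Image = List[List[Pixel]]
Mask = List[List[bool]]       # True where segmentation says "clothes" (assumed input)


def shift_clothes_hue(image: Image, mask: Mask, shift: float) -> Image:
    """Rotate the hue of masked pixels by `shift` (a fraction of a full turn)."""
    out: Image = []
    for pixel_row, mask_row in zip(image, mask):
        new_row: List[Pixel] = []
        for (r, g, b), is_clothes in zip(pixel_row, mask_row):
            if not is_clothes:
                new_row.append((r, g, b))  # background and skin stay untouched
                continue
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            # Only hue changes; saturation and value keep the shading.
            r2, g2, b2 = colorsys.hsv_to_rgb((h + shift) % 1.0, s, v)
            new_row.append((round(r2 * 255), round(g2 * 255), round(b2 * 255)))
        out.append(new_row)
    return out


def random_clothes_recolor(image: Image, mask: Mask,
                           rng: Optional[random.Random] = None) -> Image:
    """Apply one random hue shift to the whole clothing region."""
    rng = rng or random.Random()
    return shift_clothes_hue(image, mask, rng.random())
```

With `shift=0.5`, a pure red clothing pixel `(255, 0, 0)` becomes cyan `(0, 255, 255)`, while gray pixels stay gray because they have no hue. A real pipeline would convert the whole array at once (for example with OpenCV's color conversion) instead of looping pixel by pixel.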

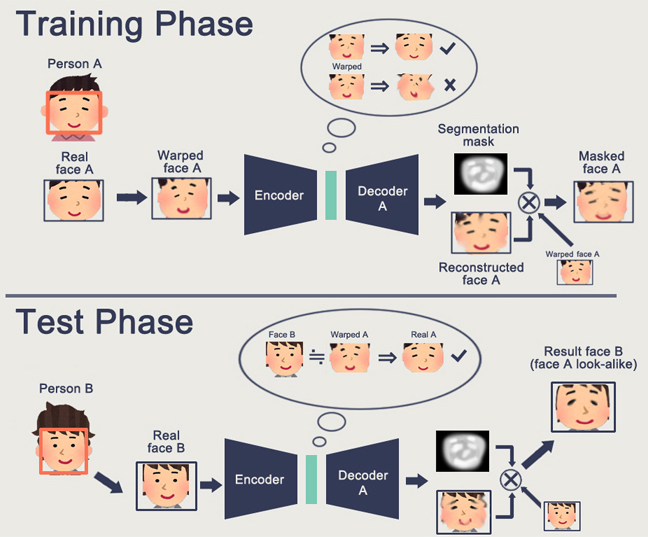

Each stage had its own technical difficulties. For example, all DeepFake implementations on GitHub could only turn one specific person X into one specific person Y. This formulation requires many photos of both people. The easiest way to collect such a dataset is to find videos of a person's speeches where mostly only they are on screen and cut out face crops with a face detector. The whole point of our idea, however, was to turn any person into the same person Y. Instead of a single person X, we tried using a multitude of different people from the CelebA dataset, and, to our delight, it worked. Below is the DeepFake scheme taken from the implementation we used.
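For readers who haven't seen it, the basic autoencoder idea behind DeepFake-style face swapping can be written compactly. This is a simplified sketch, not the exact objective of faceswap-GAN (which adds adversarial and other loss terms on top): one encoder $E$ is shared by two decoders, $D_X$ for the source faces and $D_Y$ for the target face, and each decoder learns to reconstruct its own domain, here with an L1 reconstruction loss.

$$
\min_{E,\,D_X,\,D_Y}\;
\mathbb{E}_{x \sim X}\big[\lVert D_X(E(x)) - x \rVert_1\big]
+ \mathbb{E}_{y \sim Y}\big[\lVert D_Y(E(y)) - y \rVert_1\big],
\qquad
\mathrm{swap}(x) = D_Y(E(x)).
$$

A swap encodes a source face and decodes it with the other person's decoder. In the original X-to-Y setup, $X$ is a single person; our change amounted to letting $X$ be all of CelebA while $Y$ stayed one celebrity, which presumably forces the shared encoder to describe pose and expression for arbitrary faces.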

We wrapped the result in a Flask web demo and deployed it for the jury. Here is the project description we submitted to the jury.

Introducing a service that anonymizes media content (photos and videos). In the basic mode, the service hides faces. In the extended mode, it replaces users' faces with those of other people (DeepFake) and changes clothes and background (segmentation + style transfer). As an alternative use case, the service can create fun and viral clips or photos with celebrities' faces swapped in.

A few days remained before the hackathon, and we managed to improve the clothes change. Previously it had been a color shift in HSV; now each type of clothing got its own style (from various artists). Another idea was that instead of turning every face into one celebrity, it would be better to first classify faces by sex and then swap men's and women's faces differently (for example, into Navalny and Sobchak). And at the last moment we added different levels of anonymization to the web demo: you could choose which anonymization elements to apply.
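The routing idea is simple enough to sketch: detect faces, classify each one, and send it to the swap model trained for that class. `swap_all_faces`, the classifier and the per-class swappers are hypothetical placeholders passed in as plain callables; a fallback swapper covers labels that have no dedicated model (for example, the original single-celebrity swap).

```python
from typing import Callable, Dict, List, TypeVar

Face = TypeVar("Face")  # whatever the face detector returns (a crop, an array, ...)


def swap_all_faces(
    faces: List[Face],
    classify: Callable[[Face], str],
    swappers: Dict[str, Callable[[Face], Face]],
    fallback: Callable[[Face], Face],
) -> List[Face]:
    """Swap every detected face using the model chosen by its predicted class."""
    swapped = []
    for face in faces:
        label = classify(face)                # e.g. "male" / "female" (hypothetical labels)
        swap = swappers.get(label, fallback)  # unknown label -> default swap model
        swapped.append(swap(face))
    return swapped
```

Keeping the classifier and the swappers as separate callables means a new class (or a new target celebrity) is just one more dictionary entry, with no change to the routing code.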

We went into the offline part feeling that this was a useful, unusual application, not just another set of viral face masks. One of the messages in our team chat:

Yes, if you think about it, everyone needs this. They just haven't realized it yet. Lots of people post content of themselves partying, drinking, breaking the law, and think the government doesn't care.

And in 5 years an AI will come along, look through the old posts, and hand out prison terms after the fact.

At the hackathon

The offline part began on Friday. Each team got its own table, and the buffet had an endless supply of fast carbs (sorry, cookies). After the official opening, we decided not to waste time and immediately find out from the jury what they wanted from the participants. After informal conversations with several organizers, we got the feeling that anonymization didn't grab them. But they liked the idea of segmenting individual parts of a photo and changing them. It also became clear that they wanted something that could be embedded in PicsArt. So in the evening our team submitted a project description that still mentioned anonymization, but with an emphasis on segmentation and editing of individual parts of the photo.

Project description for the jury, submitted on Friday night:

We offer a service for simple, automated processing of photos to anonymize them. By segmenting clothing, accessories, hair, and background elements, the service lets you process each object independently, without manual selection. The service can also swap faces while preserving facial expressions and emotions.

Under the hackathon format, only teams that performed well at the technical reviews would be allowed to present at the final defense. The reviews consisted of live conversations with the jury and the PicsArt technical team, plus a demo and a description of how it works.

At the first review on Saturday, we couldn't sell the jury on anonymization, but we saw that they liked the idea of editing individual objects in the photo. The jury members were also very enthusiastic about the idea of changing a hairstyle by tapping on the hair (it turned out they were already trying to do something similar).

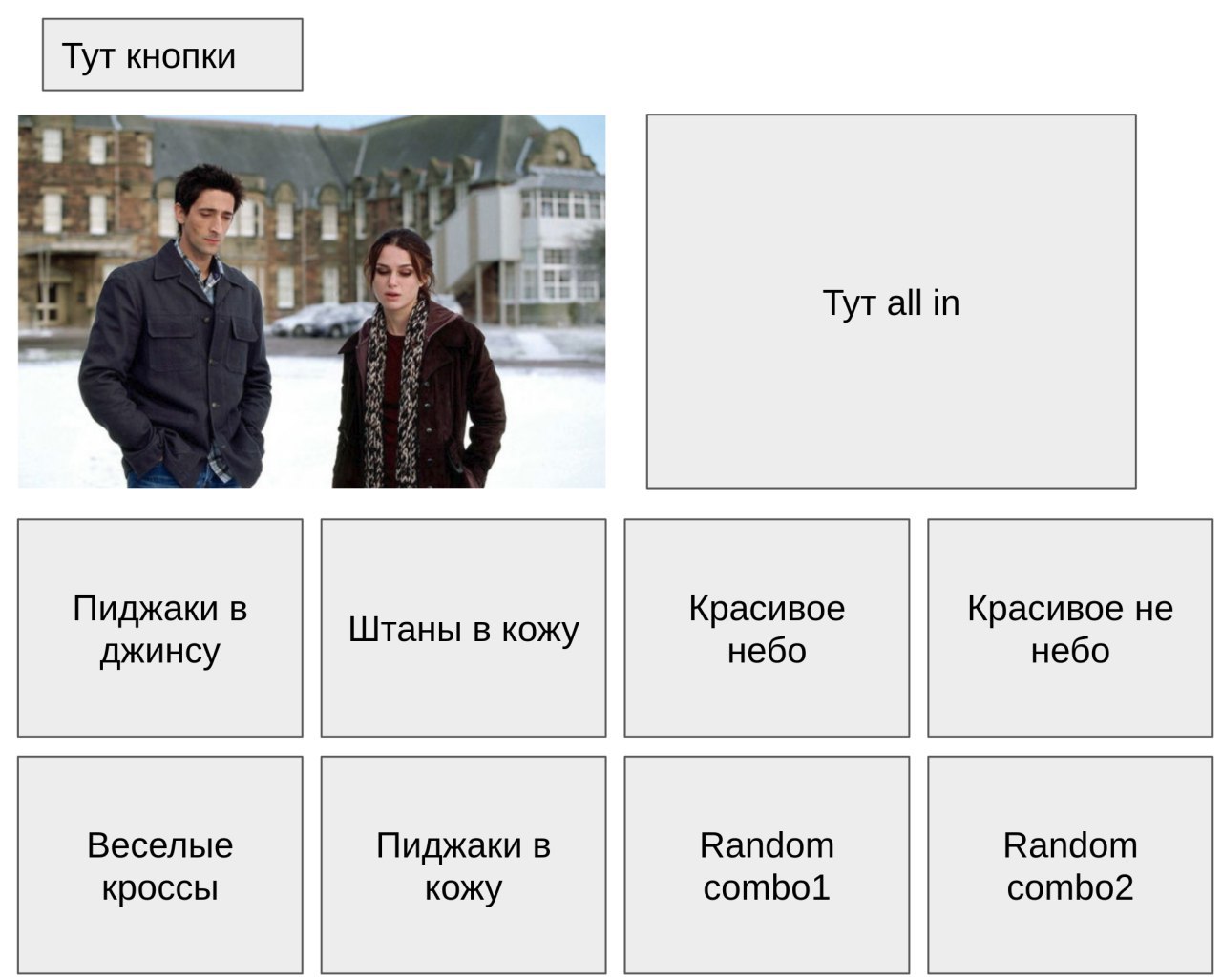

Our team couldn't withstand the pressure (unfortunately) and agreed to change the product vision. We decided to focus on high-quality clothes changing.

On the main screen of the demo, we planned to show several versions of the original photo (ideally, the clothes would change with a tap on the screen):

Outerwear:

- leave as is

- jeans

- leopard

Pants:

- leave as is

- jeans

- leopard

Footwear:

- leave as is

- fun shoes

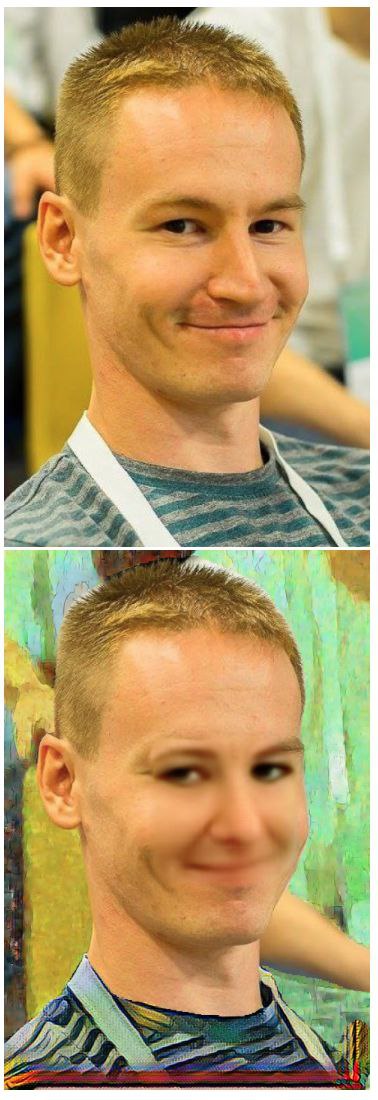

For the basic version of the hair feature, we decided to implement a “bald / not bald” transformation. To do this, we split the CelebA dataset into bald celebrities and everyone else. We trained CycleGAN on these two groups; it can transform images from domain A into domain B and vice versa (another example is turning a horse into a zebra).
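For reference, what lets CycleGAN learn from two unpaired piles of photos is the cycle-consistency loss from the CycleGAN paper. With a generator $G: A \to B$ (say, bald to not bald), a generator $F: B \to A$, and discriminators $D_A$, $D_B$:

$$
\mathcal{L}_{\mathrm{cyc}}(G, F) =
\mathbb{E}_{a \sim A}\big[\lVert F(G(a)) - a \rVert_1\big]
+ \mathbb{E}_{b \sim B}\big[\lVert G(F(b)) - b \rVert_1\big],
$$

$$
\mathcal{L}(G, F, D_A, D_B) =
\mathcal{L}_{\mathrm{GAN}}(G, D_B) + \mathcal{L}_{\mathrm{GAN}}(F, D_A)
+ \lambda\,\mathcal{L}_{\mathrm{cyc}}(G, F).
$$

The adversarial terms only require that $G(a)$ look as if it came from domain B, and the cycle term only requires that the change be reversible. Neither says which visual attribute has to change, so the network is free to latch onto whatever separates the two sets most easily.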

We got to know one of the PicsArt developers and learned a bit about how things work inside the company. He didn't really believe our hair experiment would succeed, but shared links pointing in the right direction. To our disappointment, the network didn't really learn to add or remove hair. But it did learn to change skin tone (guess why).

The product vision changed after every conversation with the review committee. The plans grew to include sky enhancement and restyling of individual objects beyond clothes: buildings, cars, and people's accessories. The focus drifted further and further away from anonymization. For the final presentation, we settled on the following 4-slide structure:

- Clothing segmentation. Photos: the original, the segmented clothing, and 4 clothing-processing options.

- Scene segmentation. A dim photo with an overexposed sky. The sky becomes artistic, the buildings become cartoonish.

- Face swap and hair change. Whatever we manage to get working.

- All together. A slide showing that all of this is done in “three clicks”.

But it turned out that no presentation was needed. By Saturday evening, everyone was told that the defense would be a 3-minute talk without slides, and that the demo had to be shown live from the stage. The organizers wanted to see working technology, not beautiful presentations, and that's awesome. Compared with other hackathons, where teams with non-working demos won, we liked this approach. The only small problem was that at that point our pile of models ran separately and very slowly. We had to optimize before we could show it from the stage.

During development, mentors from the technical committee walked around the room and checked on progress. After another conversation with one of the mentors, we got the feedback that we should focus on one thing, in his opinion clothes changing. PicsArt wanted the result to be realistic enough to show to users. In fact, different mentors and jury members had different views of what the ideal project looked like.

One minute they want a viral effect right here at the hackathon, the next a serious editor

Ugh, it's not a hackathon, it's nonstop “here's new info, we're redoing everything we have”

After CycleGAN failed at changing hair, we decided to try a different approach: first segment the hair, then apply inpainting to it. The task of inpainting is to restore a hidden part of an image from the surrounding context. Our plan was to hide the hair and let the neural network try to restore it. Since the network would not have seen this person's actual hair, it would restore a different hairstyle. The problem was that even models trained on human faces could not restore hair properly (if you mask only part of the hair, it does work).

The main difficulty is the huge variety of hairstyles. One idea was to train inpainting only on bald people; the model would then probably learn to replace any hairstyle with no hair at all. We took the neural network implementation from this repository.
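To make the inpainting setup concrete: the input is the image plus a mask marking the hidden pixels, and the output fills the hole from what surrounds it. The sketch below is not the generative_inpainting network but a classical, non-learned stand-in (harmonic diffusion: each hidden pixel repeatedly becomes the average of its neighbours), run on a grayscale image stored as nested lists. `diffuse_inpaint` is a hypothetical helper name.

```python
from typing import List

Gray = List[List[float]]
Mask = List[List[bool]]  # True = hidden pixel to restore (e.g. segmented hair)


def diffuse_inpaint(image: Gray, mask: Mask, iterations: int = 500) -> Gray:
    """Fill masked pixels by Jacobi iteration of the discrete Laplace equation.

    Assumes at least one visible (unmasked) pixel.
    """
    h, w = len(image), len(image[0])
    visible = [image[i][j] for i in range(h) for j in range(w) if not mask[i][j]]
    start = sum(visible) / len(visible)  # initial guess: mean visible intensity
    out = [[start if mask[i][j] else float(image[i][j]) for j in range(w)]
           for i in range(h)]
    for _ in range(iterations):
        nxt = [row[:] for row in out]
        for i in range(h):
            for j in range(w):
                if not mask[i][j]:
                    continue  # known pixels act as fixed boundary values
                neighbours = [out[a][b]
                              for a, b in ((i - 1, j), (i + 1, j), (i, j - 1), (i, j + 1))
                              if 0 <= a < h and 0 <= b < w]
                nxt[i][j] = sum(neighbours) / len(neighbours)
        out = nxt
    return out
```

A purely local fill like this can only smear the border of the hole inward, and it knows nothing about what was hidden. A learned model replaces the averaging with textures and shapes it saw during training, which is why training it only on bald heads might bias the fill toward no hair.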

Since the demo had to be shown live from the stage, we needed to speed up the pipeline. The biggest gain came from keeping all the neural networks loaded in memory for the entire lifetime of the application. It was not without difficulties: we started doing everything in Docker, gave up on it along the way, and then got burned several times by tensorflow version conflicts. In fact, it is hard to avoid this when you try to launch a dozen GitHub repositories in a day, each using a different tensorflow version: update it to the version one repository needs and you break another. Docker can be a good friend in such a situation, but under hackathon conditions you want to spend every minute testing new hypotheses, not building a new image. However, giving in to that temptation…
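A minimal sketch of the “keep every network in memory” fix, assuming each model is expensive to load and cheap to call. `load_model` is a hypothetical stand-in for reading weights and building the graph, and the stage names are made up; `functools.lru_cache` makes each model load once per process, and warming the cache at startup moves that cost out of the first request.

```python
from functools import lru_cache
from typing import Callable, Dict, Iterable

LOAD_CALLS: Dict[str, int] = {}  # counts real loads, to show the cache works


def load_model(name: str) -> Callable[[str], str]:
    """Hypothetical expensive loader (weights from disk, TF graph, GPU memory)."""
    LOAD_CALLS[name] = LOAD_CALLS.get(name, 0) + 1
    return lambda image: f"{name}({image})"  # toy model: just tags its input


@lru_cache(maxsize=None)
def get_model(name: str) -> Callable[[str], str]:
    """Load a model on first use, then return the same in-memory instance."""
    return load_model(name)


def warm_up(names: Iterable[str]) -> None:
    """Load everything once at application startup, before any request arrives."""
    for name in names:
        get_model(name)


def run_pipeline(image: str, stages: Iterable[str]) -> str:
    """Run the stages in order; no stage reloads its weights."""
    for stage in stages:
        image = get_model(stage)(image)
    return image


if __name__ == "__main__":
    STAGES = ("face_swap", "clothes_segmentation", "sky_style")
    warm_up(STAGES)
    print(run_pipeline("photo.jpg", STAGES))
    # prints: sky_style(clothes_segmentation(face_swap(photo.jpg)))
```

With TensorFlow 1.x behind a threaded Flask server, you may additionally need to keep each model's graph and session alongside it; this toy ignores that.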

Final day

On Sunday morning we settled on the final product vision (about time): clothes changing, with optional sky enhancement. I wanted to narrow the task down as much as possible, but clothes changing alone seemed too small. This is what the “design” of our web application looked like.

Initially we wanted a responsive design so that the demo would be convenient to view on a phone. But time was running out, and our design came down to `np.vstack(imgs_list)`.

Before the final performance, I wanted to bring the clothing feature to a finished state. We added alpha blending between the clothes and the background, and the abrupt transitions disappeared. We kept only the most realistic textures: jeans and crocodile skin. A few hours before the show, we got sky segmentation working and transferred styles onto the sky using this repository. There were options to make the sky apocalyptic, poisonous, or cartoonish. But the one best suited to the contest was the winter sky: applying it gave a “sky improver” effect.
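The alpha blend can be sketched as follows, assuming a binary clothing mask from segmentation and an already restyled copy of the photo. Softening the mask (here with a simple box blur, one of many possible choices) turns it into per-pixel weights alpha between 0 and 1, and each output pixel is alpha * restyled + (1 - alpha) * original, so the edge fades over a few pixels instead of jumping. Grayscale nested lists and the helper names `box_blur` and `alpha_blend` are assumptions made for a dependency-free example.

```python
from typing import List

Gray = List[List[float]]


def box_blur(mask: Gray, radius: int) -> Gray:
    """Average each pixel over a (2r+1) x (2r+1) window clipped at the borders."""
    h, w = len(mask), len(mask[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            window = [mask[a][b]
                      for a in range(max(0, i - radius), min(h, i + radius + 1))
                      for b in range(max(0, j - radius), min(w, j + radius + 1))]
            out[i][j] = sum(window) / len(window)
    return out


def alpha_blend(original: Gray, restyled: Gray, mask: Gray, radius: int = 2) -> Gray:
    """Composite restyled clothes over the original with a feathered mask."""
    alpha = box_blur(mask, radius)  # 0 = keep original, 1 = take restyled
    return [[a * s + (1.0 - a) * o for o, s, a in zip(o_row, s_row, a_row)]
            for o_row, s_row, a_row in zip(original, restyled, alpha)]
```

A larger `radius` gives a wider, softer transition; too large, and the new texture bleeds visibly onto the background.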

Very little time was left by the moment all the components came together into a single whole and started working. We had downloaded a lot of photos from social networks and planned to run the application on them to pick the most successful cases (read: cherry-pick). But it turned out our team was presenting first, so everything was as honest as possible: we showed the demo on random photos.

Arthur, speaking on stage, managed to get our idea across, while Ilya showed our MVP on the projector: the clothes changed in every photo, and the sky got better.

Not all participants managed to stick to the task of showing only their demo. The temptation to add a few beautiful slides was strong. Of the solutions at the defense, we liked most the conversion of video into comics and the merging of two photos into one.

Results

In the end, according to insider info, our team took 6th place, one step away from the money.

Afterwards, we all came to the same conclusion (well, apart from the view that 3 of the top 5 teams got their prizes unfairly): we should have stood firm and resolutely finished the original anonymization idea. Even now we are convinced that it is viable and would bring value to a number of users. And had we spent the whole weekend developing the anonymization idea, we would at least have had more fun.

If you have never taken part in a hackathon, be sure to try: it is an excellent test of yourself and your team, and a chance to build something you never had enough time for. And of course, be sure to work on what you like, because you only get the maximum buzz out of the process when you throw yourself into the hardcore stuff.

Current project status

Our team posted the code for the final demo on GitHub. There is also a separate repository that does exactly the anonymization. In the future we plan to develop the original anonymization version: rewrite everything in PyTorch, train on higher-resolution, less noisy photos (photos with only one face), and launch a Telegram bot.

For those who already want to try the version born at the very beginning of the hackathon, the Telegram bot (@DbrainDeepAnon -> /start -> /unlock dbraindeepanon) is running in demo mode. It runs on a Dbrain server (our thanks), where all the face-swap training took place, so try it before it gets turned off. The service uses Dbrain's internal wrapper, wrappa, which makes it easy to wrap a Docker container and launch a Telegram bot. wrappa will be open-sourced soon.

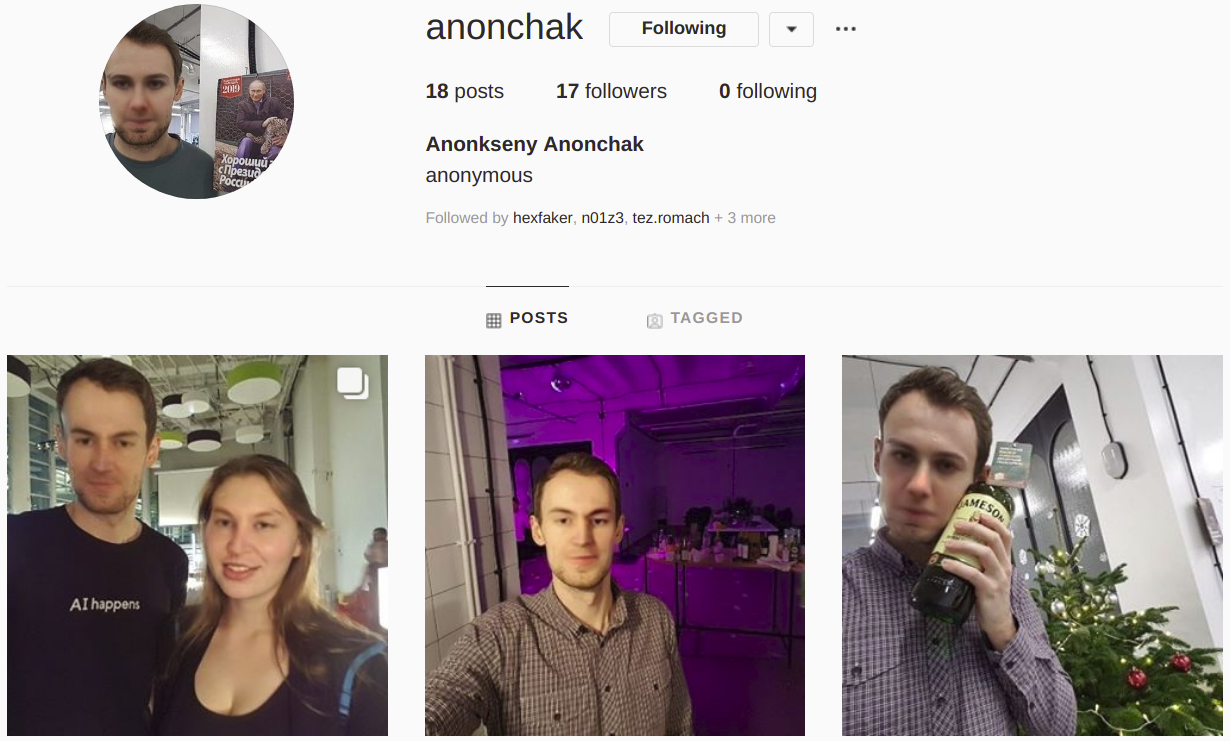

It should be noted that our work was not in vain. Thanks to DeepAnon, one “anonymous” user, deeply worried about the privacy of his data, was finally able to keep an Instagram about his life. He is no longer afraid that city cameras will recognize his face from social network photos, yet his friends can still recognize him. The faces of all the people on his Instagram are anonymized too.

Links to repositories that we used:

https://github.com/shaoanlu/faceswap-GAN

https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix

https://github.com/sacmehta/ESPNet

https://github.com/JiahuiYu/generative_inpainting

https://github.com/NVIDIA/FastPhotoStyle

PS For fun, I also tried retraining the face-swap network on one of the ODS members. Try to guess who. The network was named TestesteroNet.