The New V8 and Node.js Performance: Optimization Techniques Today and Tomorrow


Since its inception, Node.js has depended on the V8 JavaScript engine, which executes the language we all know and love. V8 is a JavaScript virtual machine written by Google for the Chrome browser. From the very beginning, V8 was designed to make JavaScript fast, or at least faster than competing engines. For a dynamic, weakly typed language, achieving high performance is no easy task. V8 and other engines keep evolving and solving this problem better and better. However, a new engine does not simply mean "JS runs faster". It also calls for new approaches to code optimization. Not everything that is fastest today will give us maximum performance in the future, and not everything considered slow today will stay slow.

How will the features of TurboFan in V8 affect code optimization? How will techniques considered optimal today perform in the near future? How do the V8 performance killers behave these days, and what can you expect from them? In this material, we try to answer these and many other questions. It is the result of joint work by David Mark Clements and Matteo Collina. The material was reviewed by Franziska Hinkelmann and Benedikt Meurer from the V8 development team.

The central part of the V8 engine, the part that lets it execute JavaScript at high speed, is the JIT (Just In Time) compiler: a dynamic compiler that can optimize code as it runs. When V8 was first created, its JIT compiler was called FullCodeGen; it was (as Yang Guo rightly noted) the first optimizing compiler for this platform. The V8 team then created the Crankshaft compiler, which included many performance optimizations that FullCodeGen did not implement.

As someone who has watched JavaScript since the 90s and used it all along, I have noticed that it is often completely unobvious which parts of JS code will run slowly and which will run fast, regardless of the engine. The reasons programs ran slower than expected were often hard to understand.

In recent years, Matteo Collina and I have focused on figuring out how to write high-performance code for Node.js. Naturally, this implies knowing which approaches are fast and which are slow when our code is executed by the V8 JS engine.

Now it is time to revisit all our performance assumptions, because the V8 team has written a new JIT compiler: TurboFan.

We are going to look at well-known code constructs that cause V8 to abandon optimizing compilation. We will also go through more complex research into the performance of different V8 versions. All of this is done with a series of microbenchmarks run on different versions of Node and V8.

Of course, before optimizing code for V8, we should first focus on API design, algorithms, and data structures. These microbenchmarks can be seen as indicators of how JavaScript execution in Node is changing. We can use them to adjust our overall coding style and the ways we improve performance once the usual optimizations have been applied.

We will consider the performance of microbenchmarks in versions V8 5.1, 5.8, 5.9, 6.0, and 6.1.

To relate V8 versions to Node versions: V8 5.1 is used in Node 6, with the Crankshaft JIT compiler; V8 5.8 is used in Node 8.0 through 8.2, with both Crankshaft and TurboFan.

Currently, Node 8.3, or possibly 8.4, is expected to ship V8 5.9 or 6.0. The latest V8 version at the time of writing is 6.1; it is integrated into Node in the experimental node-v8 repository. In other words, V8 6.1 will end up in some future version of Node.

Test code and other materials used in the preparation of this article can be found here.

Here is a document that contains, among other things, the raw test results.

Most microbenchmarks were run on a 2016 MacBook Pro with a 3.3 GHz Intel Core i7 and 16 GB of 2133 MHz LPDDR3 memory. Some of them (the number tests and the property deletion tests) were run on a 2014 MacBook Pro with a 3 GHz Intel Core i7 and 16 GB of 1600 MHz DDR3 memory. All measurements comparing Node.js versions were performed on the same machine, and we made sure that other programs did not affect the results.

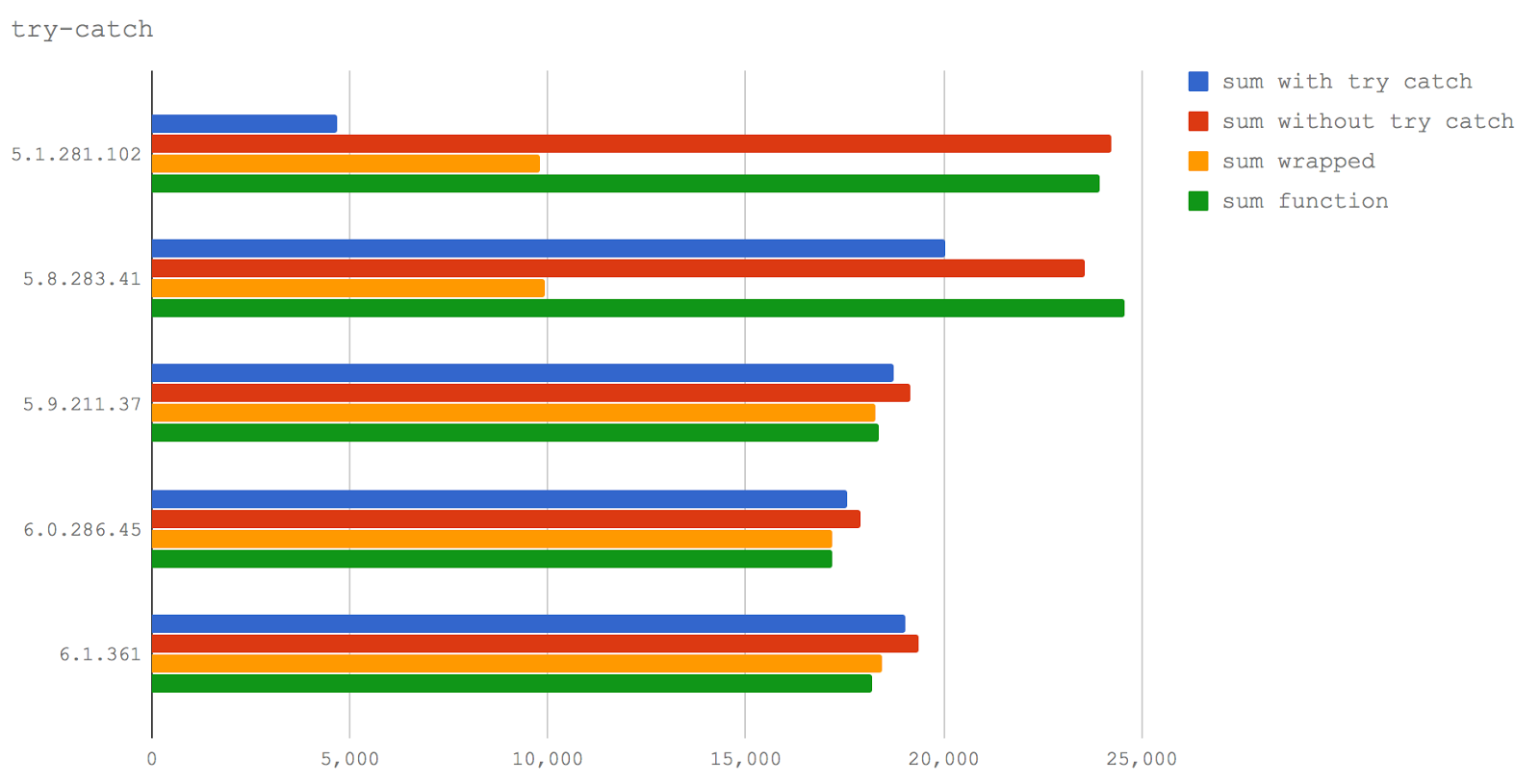

Let's look at our tests and discuss what the results mean for the future of Node. All tests were performed with benchmark.js. The data in each chart shows operations per second, so higher is better.

The try/catch problem

One of the well-known deoptimization patterns is the use of try/catch blocks.

Please note that, from here on, the short English names of the tests are given in parentheses in the lists of test descriptions. These names are used to label the results in the charts. They will also help you navigate the code that was used in the tests.

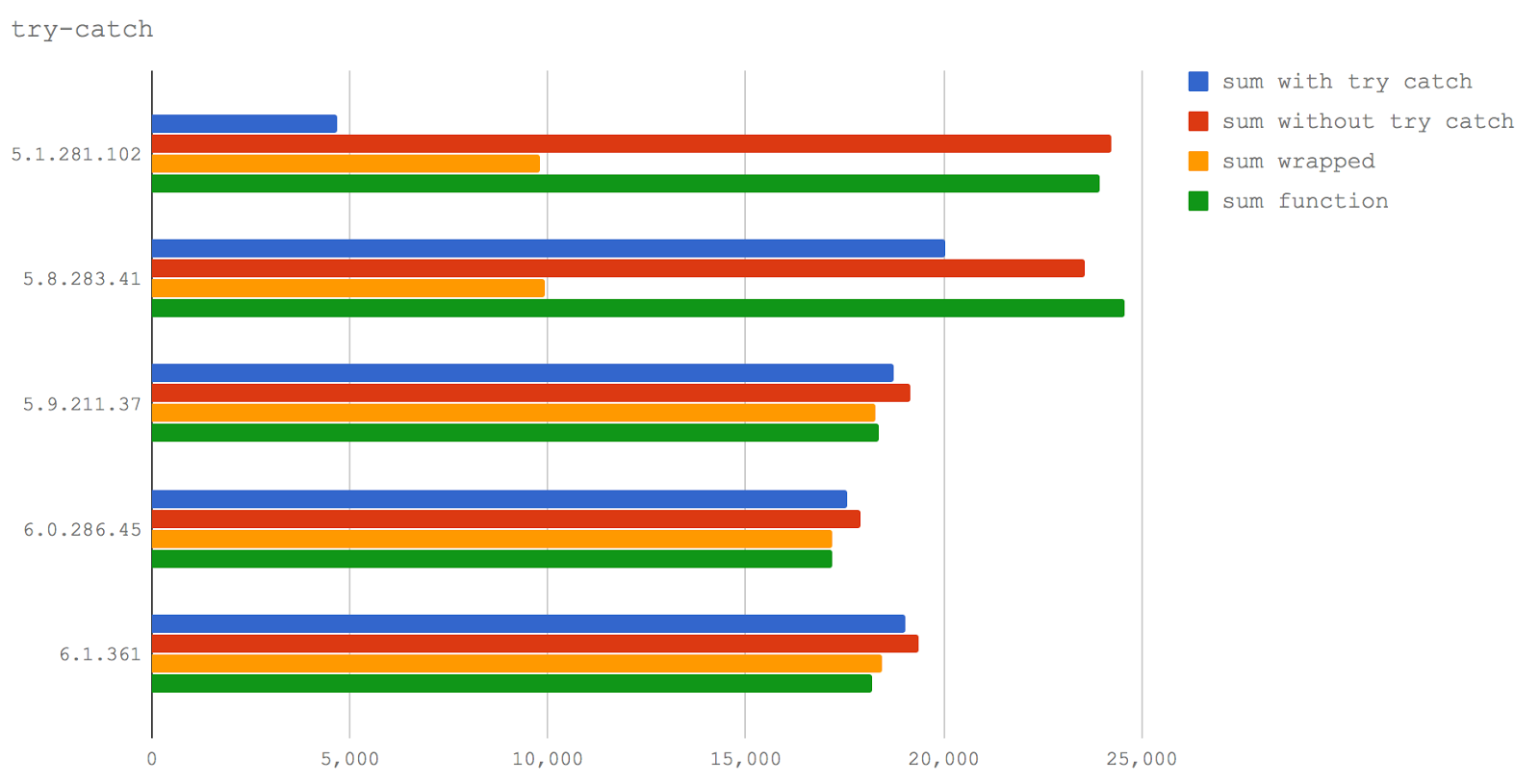

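In this test, we compare four test cases:

- A function that performs calculations in a try/catch block located inside it (sum with try catch).
- A function that performs calculations without try/catch blocks (sum without try catch).
- A call to a function that performs calculations, made inside a try block (sum wrapped).
- A call to a function that performs calculations, made without try/catch (sum function).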

→ Test code on GitHub
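The four test cases can be sketched roughly as follows. This is a minimal sketch that assumes a simple array-summing workload. The function names are hypothetical and are not taken from the benchmark repository, and the code shows only the shape of each case, not the benchmark.js harness.

```javascript
'use strict'

// Hypothetical workload: sum the elements of an array.

// (sum with try catch) the calculation happens inside a try/catch in the same function
function sumWithTryCatch (arr) {
  let total = 0
  try {
    for (let i = 0; i < arr.length; i++) total += arr[i]
  } catch (err) {
    // Nothing here is expected to throw; the block exists only so its cost can be measured
  }
  return total
}

// (sum without try catch) the same calculation with no try/catch at all
function sumWithoutTryCatch (arr) {
  let total = 0
  for (let i = 0; i < arr.length; i++) total += arr[i]
  return total
}

// (sum wrapped) a plain function called from inside a try block
function sumWrapped (arr) {
  try {
    return sumWithoutTryCatch(arr)
  } catch (err) {
    return 0
  }
}

// (sum function) the same plain function called with no try/catch around it
function sumFunction (arr) {
  return sumWithoutTryCatch(arr)
}

const data = [1, 2, 3, 4, 5]
console.log(sumWithTryCatch(data), sumWithoutTryCatch(data), sumWrapped(data), sumFunction(data))
// prints "15 15 15 15"
```

All four variants compute the same result. Only the placement of try/catch relative to the hot code differs, and that placement is what the benchmark compares.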

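We can see that what is already known about the negative impact of try/catch on performance is confirmed in Node 6 (V8 5.1), whereas in Node 8.0-8.2 (V8 5.8) try/catch has a much smaller impact. It should also be noted that calling a function from inside a try block is much slower than calling it outside try; this is true for Node 6 (V8 5.1) and Node 8.0-8.2 (V8 5.8). However, in Node 8.3+, calling a function from inside a try block has practically no effect on performance.

Nevertheless, don't relax yet. While working on materials for an optimization workshop, we found a bug where a rather specific set of circumstances can lead to an endless deoptimization/reoptimization cycle in TurboFan. This may well be considered the next performance-killer pattern.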

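Removing properties from objects

For many years, the delete operator was avoided by anyone who wanted to write high-performance JS code (at least where optimal code was needed for the most heavily loaded parts of a program). The problem with delete comes down to how V8 deals with the dynamic nature of JavaScript objects and with prototype chains (also potentially dynamic), which complicate property lookups at the low level of the engine's implementation.

V8's approach to creating high-performance objects with properties is to create a class at the C++ level based on the "shape" of the object, that is, on which keys and values the object has (including the keys and values of its prototype chain). These constructs are known as "hidden classes". However, this kind of optimization is performed at runtime. When V8 is unsure about an object's shape, it falls back to another property lookup mode: hash table lookup, which is much slower.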

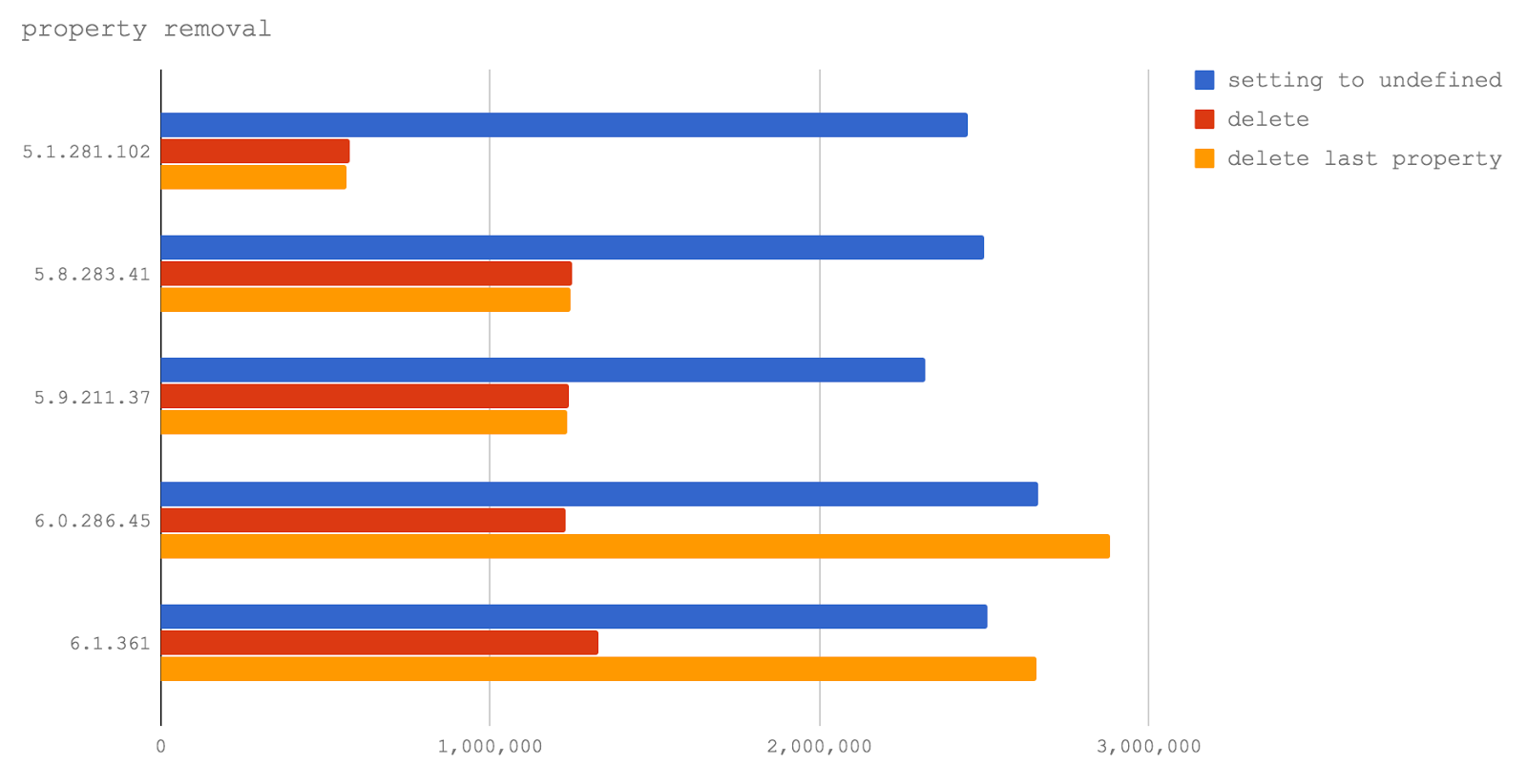

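Historically, when we delete a key from an object with the delete operator, subsequent property accesses are performed via hash table lookup. That is why programmers try not to use delete and instead set properties to undefined. In terms of destroying the value, this gives the same result, but it makes checking whether a property exists more complicated. Still, this approach is usually good enough, for example when preparing objects for serialization, since JSON.stringify does not include undefined values in its output (undefined is not a valid value according to the JSON specification).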
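The trade-off described above can be shown with a small, hypothetical object. Setting a property to undefined keeps the key, so existence checks still see it. Deleting the property removes the key. JSON.stringify produces the same output in both cases. The object and variable names are illustrative only.

```javascript
'use strict'

// Hypothetical objects used only for illustration
const viaUndefined = { a: 1, b: 2, c: 3 }
const viaDelete = { a: 1, b: 2, c: 3 }

viaUndefined.b = undefined // the key stays, only the value is cleared
delete viaDelete.b // the key itself is removed

// Existence checks differ: the undefined-valued key is still present
console.log('b' in viaUndefined, Object.keys(viaUndefined)) // true [ 'a', 'b', 'c' ]
console.log('b' in viaDelete, Object.keys(viaDelete)) // false [ 'a', 'c' ]

// Serialization is identical, because JSON.stringify skips undefined values
console.log(JSON.stringify(viaUndefined)) // {"a":1,"c":3}
console.log(JSON.stringify(viaDelete)) // {"a":1,"c":3}
```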

Now let's find out if the new TurboFan implementation solves the problem of removing properties from objects.

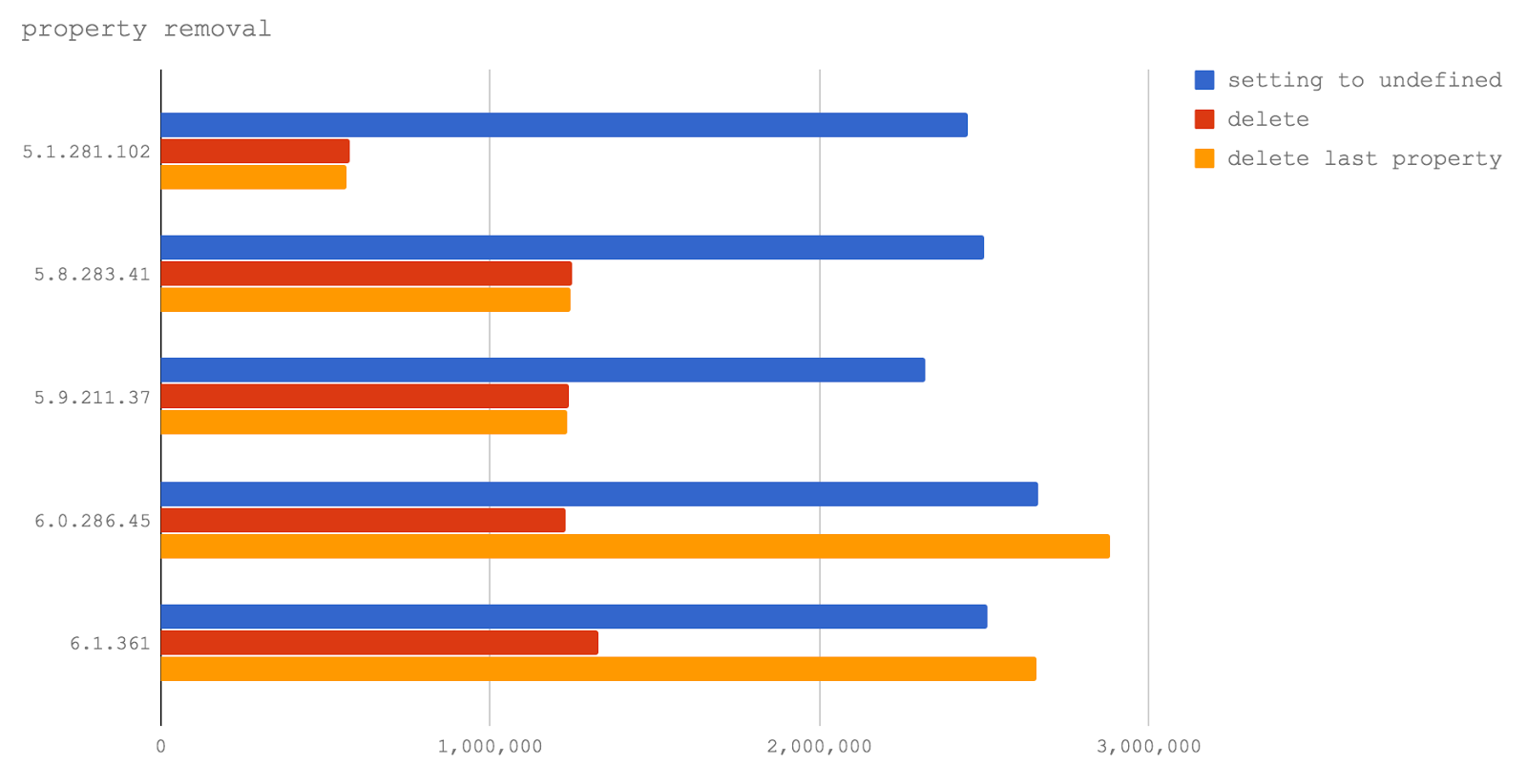

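Here we compare three test cases:

- Serializing an object after one of its properties has been set to undefined (setting to undefined).
- Serializing an object after the delete operator was used to remove one of its properties (delete).
- Serializing an object after delete was used to remove the property that was added most recently (delete last property).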

→ Test code on GitHub
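The three cases can be approximated like this. This is a hedged sketch: the object shape and function names are hypothetical. The only difference between the last two cases is which property is removed: one from the middle of the insertion order, or the one added most recently.

```javascript
'use strict'

// Hypothetical factory: every call builds an object with insertion order a, b, c
function makeObject () {
  return { a: 1, b: 2, c: 3 }
}

// (setting to undefined) clear a property's value, then serialize
function setToUndefined () {
  const o = makeObject()
  o.b = undefined
  return JSON.stringify(o)
}

// (delete) remove a property that was NOT the last one added, then serialize
function deleteProperty () {
  const o = makeObject()
  delete o.b
  return JSON.stringify(o)
}

// (delete last property) remove the most recently added property, then serialize
function deleteLastProperty () {
  const o = makeObject()
  delete o.c
  return JSON.stringify(o)
}

console.log(setToUndefined()) // {"a":1,"c":3}
console.log(deleteProperty()) // {"a":1,"c":3}
console.log(deleteLastProperty()) // {"a":1,"b":2}
```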

In V8 6.0 and 6.1 (not yet used in any Node release), deleting the last property added to an object hits an optimized TurboFan path and is therefore even faster than setting the property to undefined. This is very good news, as it shows that the V8 team is working to improve the performance of delete.

However, using this operator still causes a serious drop in property access performance if the removed property was not the last one added. Jakob Kummerow pointed this out to us, noting a peculiarity of our tests: they only covered removing the most recently added property. We thank him for this. As a result, however much we would like to say that delete can and should be used in code written for future Node releases, we are forced to recommend against it. The delete operator continues to hurt performance.

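Leaking arguments and converting them to an array

A typical problem with the implicitly created arguments object, which is available in ordinary functions (arrow functions, by contrast, do not have their own arguments object), is that it looks like an array but is not one. To use array methods or array behavior, the indexed properties of arguments must be copied into an array.

In the past, JS developers tended to equate shorter code with faster code. For client code, this approach can reduce the amount of data the browser has to download. In server code, however, it can cause problems, because program size matters much less there than execution speed. As a result, a seductively short way to convert the arguments object to an array became quite popular: Array.prototype.slice.call(arguments). This calls the slice method of Array and passes the arguments object as the this context for that method. slice sees an array-like object and does its job. The result is an array built from the contents of the array-like arguments object.

However, when the implicitly created arguments object is passed to anything outside the function's context, this usually causes a performance hit. Examples include returning it from the function or passing it to another function, as in Array.prototype.slice.call(arguments). Let's examine this claim.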

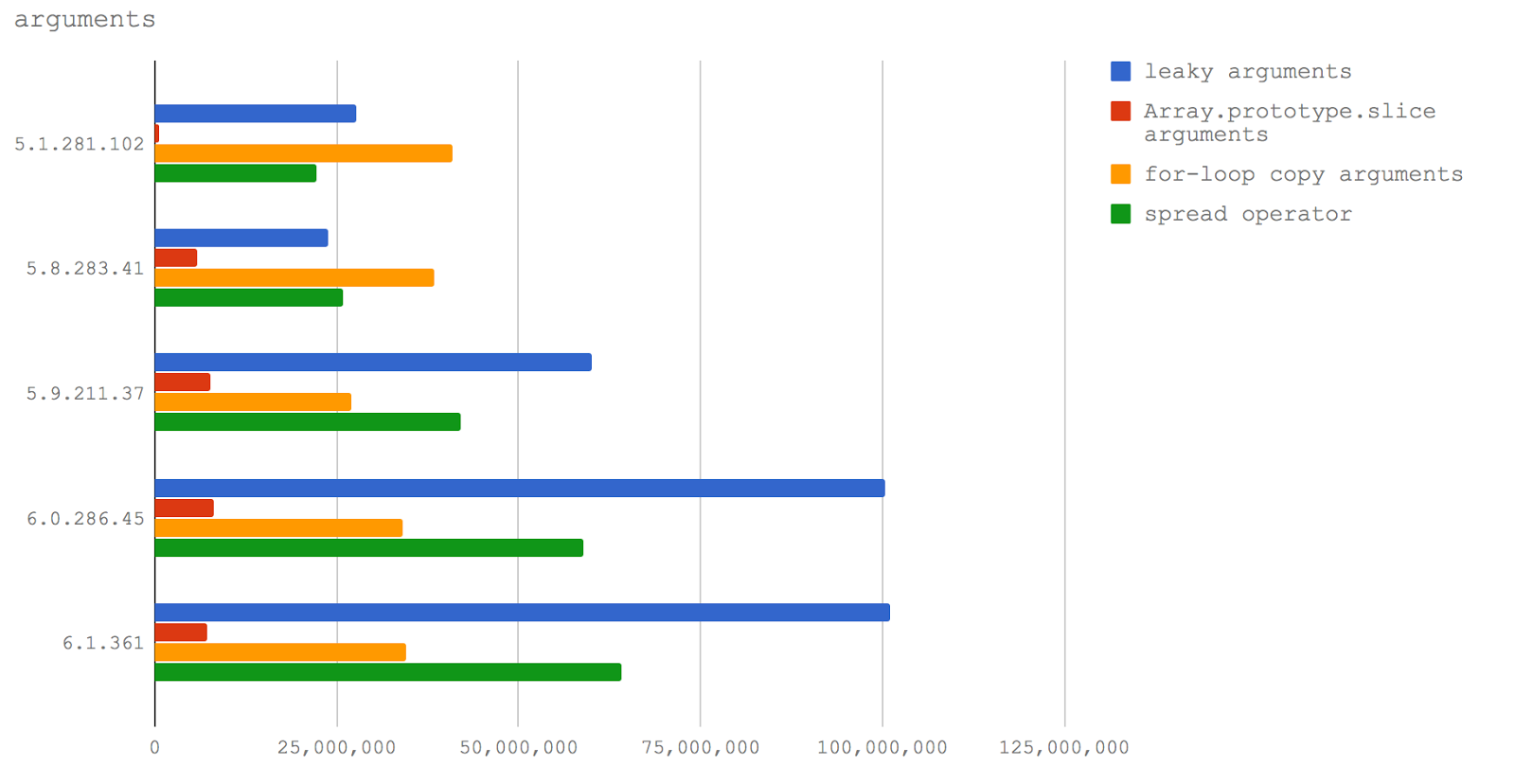

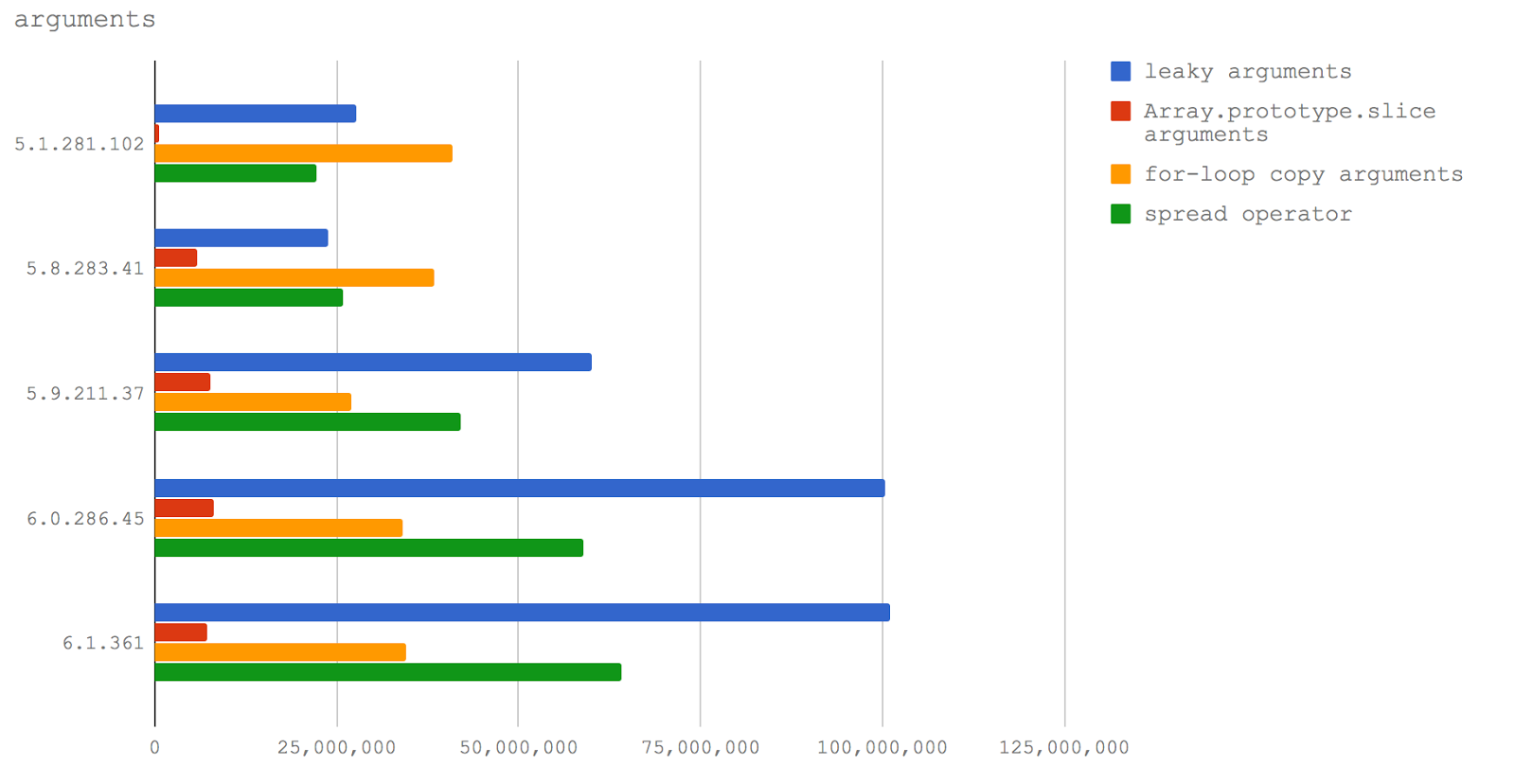

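The following microbenchmark explores two interrelated situations across the four versions of V8. The first is the cost of leaking arguments. The second is the cost of copying arguments into an array that is then passed outside the function instead of the arguments object.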

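Here are our test cases:

- Passing the arguments object to another function without converting it to an array (leaky arguments).
- Creating a copy of arguments using Array.prototype.slice (Array.prototype.slice arguments).
- Using a for loop and copying each property (for-loop copy arguments).
- Using the EcmaScript 2015 spread operator to assign the function's inputs to an array (spread operator).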

→ Test code on GitHub
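The four approaches might look roughly like the code below. This is a sketch under assumptions. The helper countArgs is hypothetical. For the spread operator case, we assume ES2015 rest-parameter syntax (...args), which collects the inputs into a real array. The actual benchmark code may differ.

```javascript
'use strict'

// Hypothetical consumer that only needs something array-like
function countArgs (args) {
  return args.length
}

// (leaky arguments) the arguments object itself escapes to another function
function leakyArguments () {
  return countArgs(arguments)
}

// (Array.prototype.slice arguments) the once-popular one-liner
function sliceArguments () {
  return Array.prototype.slice.call(arguments)
}

// (for-loop copy arguments) copy each index into a pre-allocated array
function forLoopCopyArguments () {
  const args = new Array(arguments.length)
  for (let i = 0; i < args.length; i++) {
    args[i] = arguments[i]
  }
  return args
}

// (spread operator) rest-parameter syntax yields a real array directly
function spreadArguments (...args) {
  return args
}

console.log(leakyArguments(1, 2, 3)) // 3
console.log(sliceArguments(1, 2, 3)) // [ 1, 2, 3 ]
console.log(forLoopCopyArguments(1, 2, 3)) // [ 1, 2, 3 ]
console.log(spreadArguments(1, 2, 3)) // [ 1, 2, 3 ]
```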

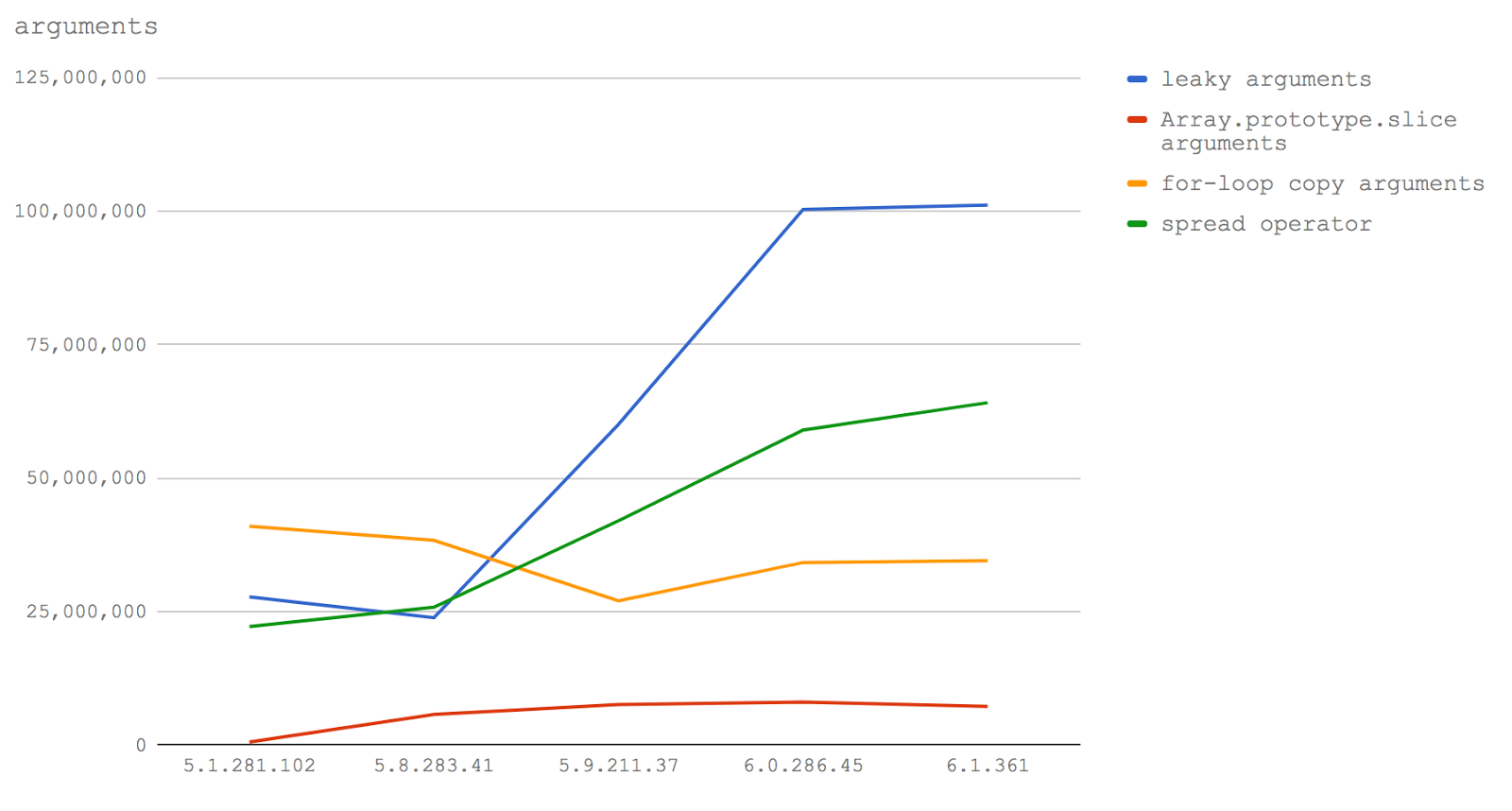

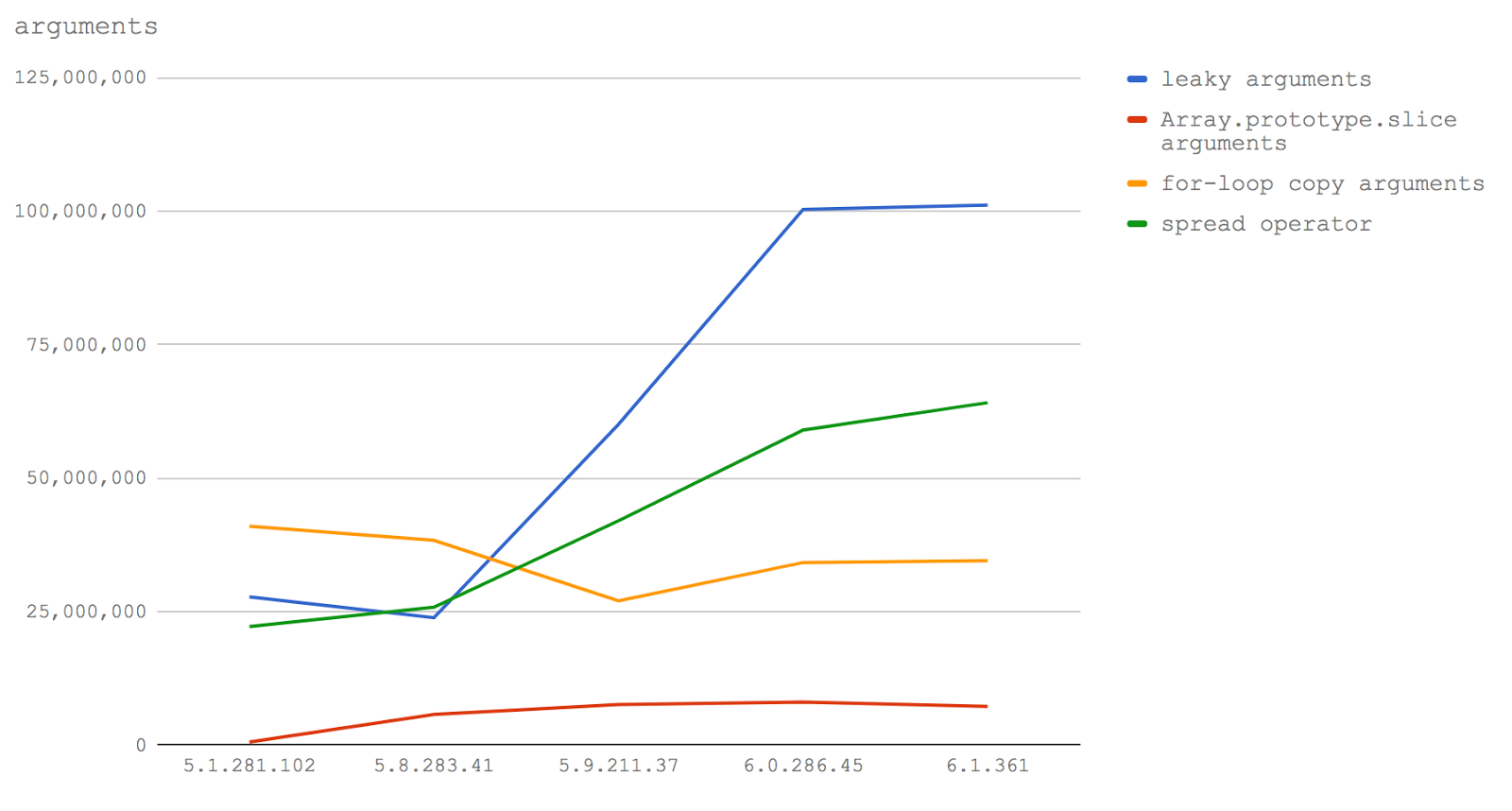

Now let's take a look at the same data presented in the form of a line graph in order to emphasize changes in performance characteristics.

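Here are the conclusions we can draw from all this. Suppose you need to write performant code that processes a function's inputs as an array, which in my experience is needed quite often. In Node 8.3 and higher, you should use the spread operator. In Node 8.2 and below, you should use a for loop to copy the keys from arguments into a new (pre-allocated) array (see the test code for details).

Further, in Node 8.3+ passing the arguments object to other functions no longer degrades performance. So there may be additional performance benefits if we do not need a full array and can work with an array-like structure instead.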

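Partial application (currying) and binding function context

Partial application (or currying) of functions lets you capture some state in nested closure scopes.

For instance:

    function add (a, b) {
      return a + b
    }

    const add10 = function (n) {
      return add(10, n)
    }

    console.log(add10(20))

In this example, the a parameter of the add function is partially applied as the number 10 in the add10 function.

A shorter form of partial application has been available since EcmaScript 5 thanks to the bind method:

    function add (a, b) {
      return a + b
    }

    const add10 = add.bind(null, 10)

    console.log(add10(20))

However, bind is usually avoided, since it is significantly slower than the closure approach above.

Our test measures the difference between bind and closures in different versions of V8. For comparison, a direct call to the original function is also included.

We compare four test cases: a direct call to the original function, partial application via a closure over a regular function, partial application via an arrow function, and bind.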

→ Test code on GitHub
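The four cases can be sketched with the add example from above. This is a minimal sketch. The variable names are illustrative and not necessarily those used in the benchmark repository.

```javascript
'use strict'

function add (a, b) {
  return a + b
}

// Direct call: no partial application at all
const direct = add(10, 20)

// Partial application via a closure over a regular function expression
const add10Closure = function (n) {
  return add(10, n)
}

// Partial application via an arrow function: the same closure, shorter syntax
const add10Arrow = (n) => add(10, n)

// Partial application via Function.prototype.bind (first argument fixed to 10)
const add10Bind = add.bind(null, 10)

console.log(direct, add10Closure(20), add10Arrow(20), add10Bind(20)) // 30 30 30 30
```

The closure and arrow variants both capture the fixed argument in a closure scope. bind instead returns a new bound function object that carries the fixed argument.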

The line chart of the test results clearly shows that there is almost no difference between these approaches in the latest versions of V8. Interestingly, partial application with arrow functions is much faster than with ordinary functions (at least in our tests); in fact, it almost matches a direct function call. In V8 5.1 (Node 6) and 5.8 (Node 8.0-8.2) it is

The fastest way to curry in all versions of Node is to use arrow functions. In recent versions, the difference between this method and application is

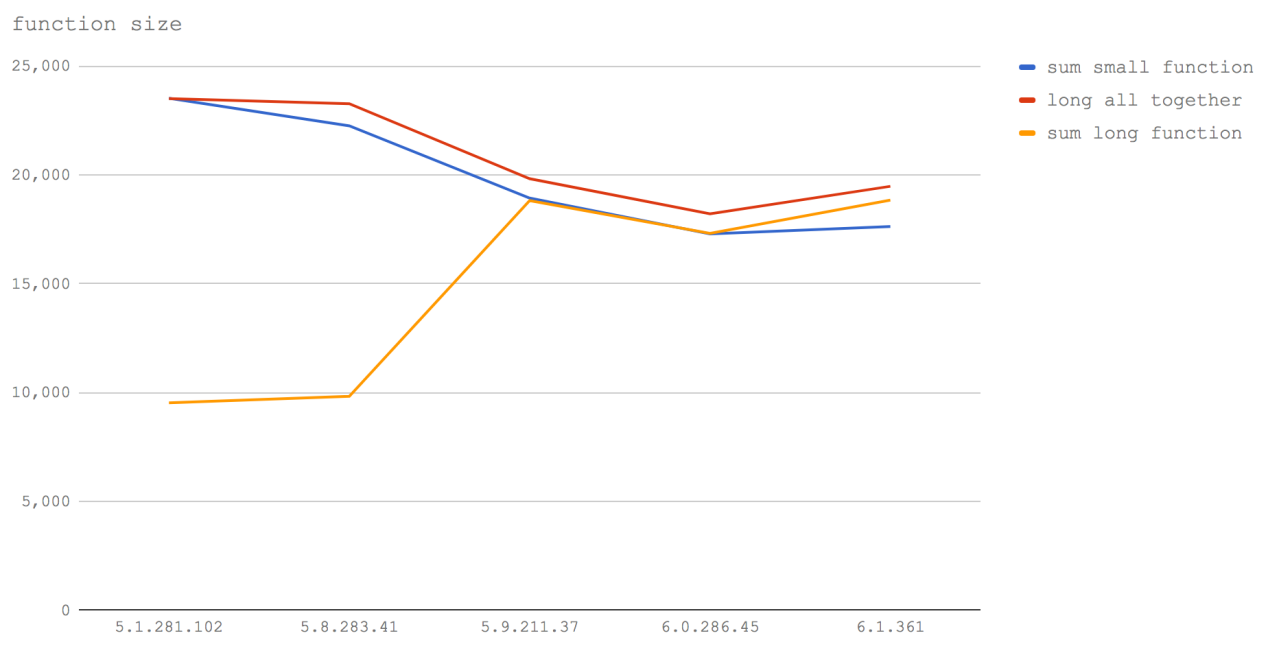

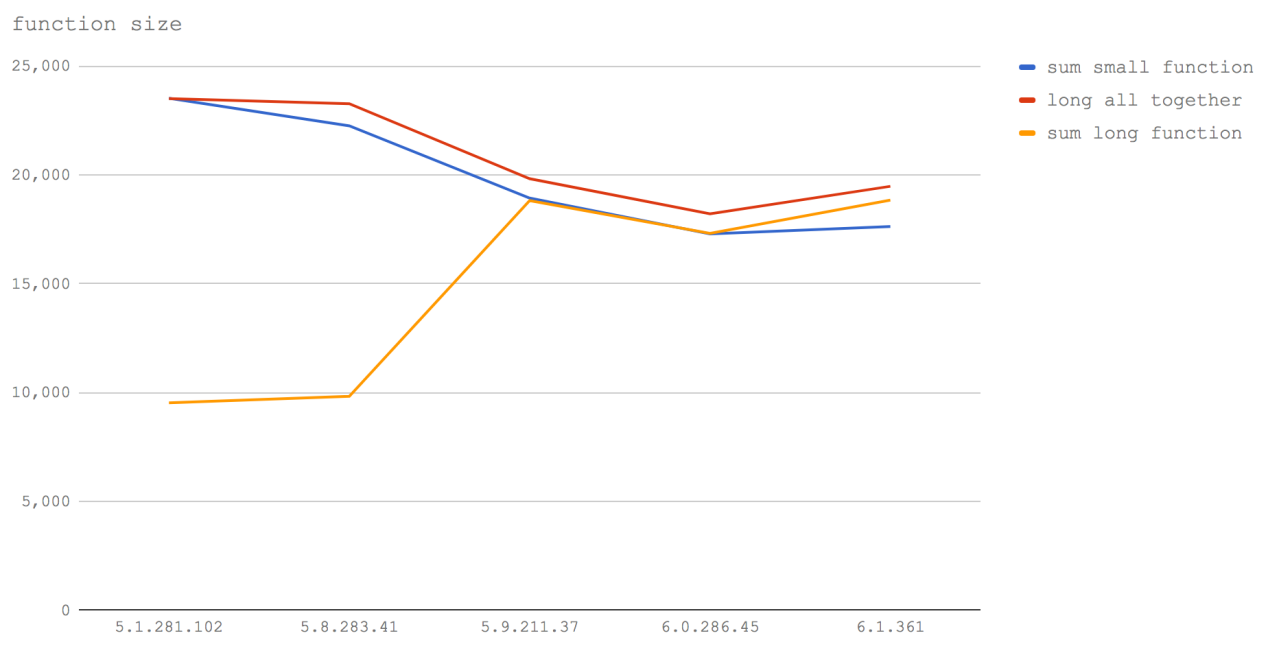

Function size

The size of a function, including its signature, whitespace, and even comments, can affect whether V8 can inline it. Yes, really: adding comments to a function can reduce performance by about 10%. Will this change in the future?

In this test, we explore three scenarios:

→ GitHub test code
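To make the inlining discussion concrete, here is a hedged sketch of the kind of code being compared. It shows a small helper called in a hot loop, the same helper padded with comments, and the same logic inlined by hand. The names are hypothetical. Whether V8 actually inlines the helper depends on the engine version and its heuristics, and this code cannot show that by itself.

```javascript
'use strict'

// A small helper: a good candidate for inlining by the JIT compiler
function sumSmall (a, b) {
  return a + b
}

// The same logic padded with comments. Under Crankshaft, source length
// (comments included) counted toward the inlining budget; the article reports
// that TurboFan in V8 5.9+ no longer counts comments or whitespace.
function sumWithComments (a, b) {
  // Filler comment: adds characters to the source but no executable instructions.
  // Filler comment: adds characters to the source but no executable instructions.
  // Filler comment: adds characters to the source but no executable instructions.
  return a + b
}

function loopCallingSmall (n) {
  let total = 0
  for (let i = 0; i < n; i++) total = sumSmall(total, i)
  return total
}

function loopCallingCommented (n) {
  let total = 0
  for (let i = 0; i < n; i++) total = sumWithComments(total, i)
  return total
}

// Manual inlining: the helper's body is written directly at the call site
function loopManuallyInlined (n) {
  let total = 0
  for (let i = 0; i < n; i++) total = total + i
  return total
}

console.log(loopCallingSmall(1000), loopCallingCommented(1000), loopManuallyInlined(1000)) // 499500 499500 499500
```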

In V8 5.1 (Node 6), the sum small function and long all together tests show the same result. This nicely illustrates how inlining works: when we call a small function, it is as if V8 writes the contents of that function into the place where it is called. So when we write out the function's body ourselves (even with added comments), we inline it manually at the call site, and performance turns out to be the same. Also in V8 5.1 (Node 6), you can see that calling a function padded with comments becomes significantly slower once the function exceeds a certain size.

In Node 8.0-8.2 (V8 5.8), the picture is broadly the same, except that the cost of calling a small function has increased markedly. This is probably due to the mix of Crankshaft and TurboFan: one function may be compiled by Crankshaft and another by TurboFan. That mix breaks inlining, because there has to be a jump between clusters of serially inlined functions.

In V8 5.9 and higher (Node 8.3+), extra characters such as whitespace or comments do not affect function performance. This is because TurboFan uses the abstract syntax tree (AST) to determine function size, instead of counting characters as Crankshaft does. Rather than counting the bytes of a function, TurboFan analyzes its actual instructions. So, starting with V8 5.9 (Node 8.3+), none of the following affect whether a function can be inlined: whitespace, the characters that make up variable names, function signatures, and comments. It should also be noted that overall function performance declines.

The main takeaway here is that functions are still worth keeping as small as possible. For now, you still need to avoid unnecessary comments (and even whitespace) inside functions. Also, if you are after maximum performance, manually inlining functions consistently remains the fastest approach (that is, moving a function's code to the call site, which removes the call altogether). Of course, balance is needed here. Once the actual executable code reaches a certain size, the function will not be inlined anyway, so thoughtlessly copying other functions' code into your own can cause performance problems. In other words, manual inlining is a way to shoot yourself in the foot. In most cases, it is better to leave inlining to the compiler.

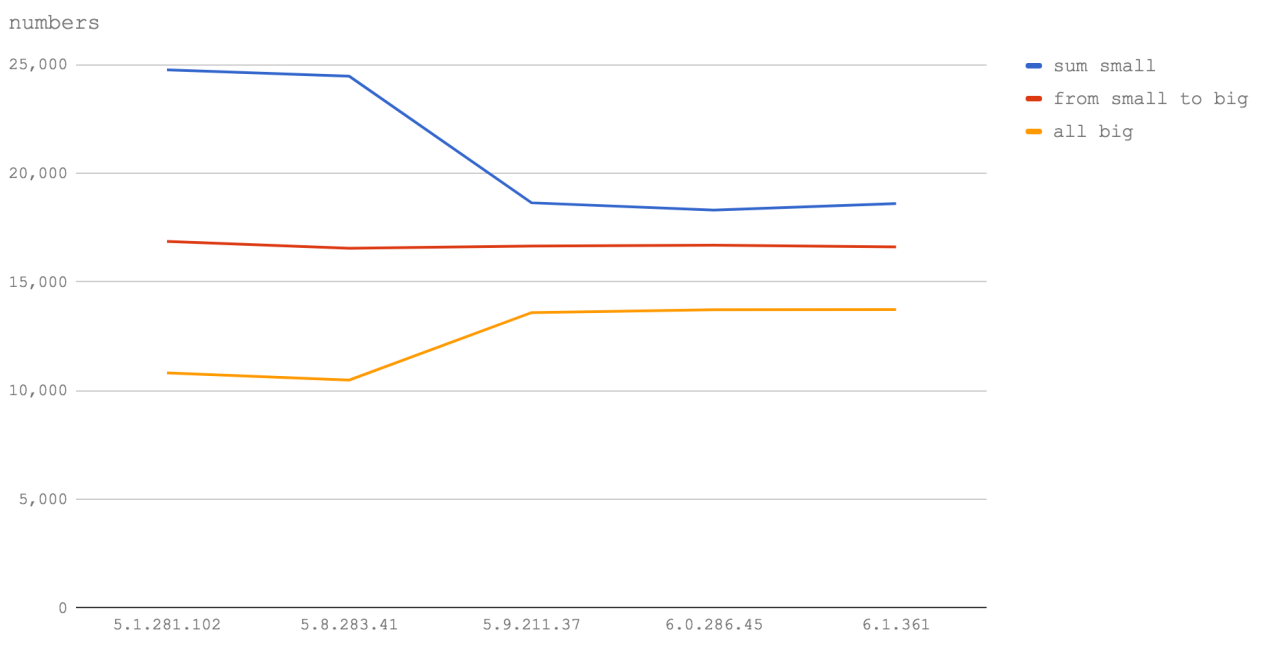

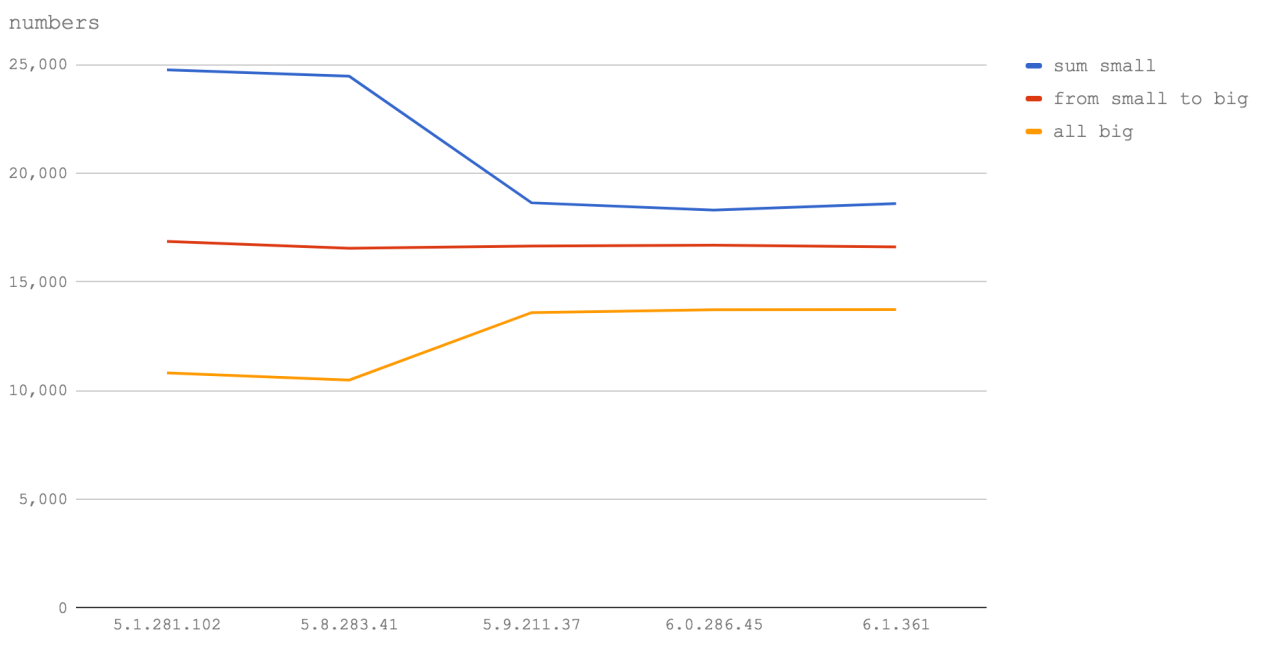

32-bit integers vs. double-precision numbers

It is well known that JavaScript has only one numeric type: Number.

However, V8 is implemented in C++, so the underlying representation of a JavaScript numeric value is an implementation choice.

For integers (that is, numbers written in JS without a decimal point), V8 treats all numbers as 32-bit until they no longer fit. This seems a fair choice, since in many cases numbers lie in the range -2147483648 to 2147483647. If a JS integer exceeds 2147483647, the JIT compiler has to dynamically switch the underlying type to double precision (floating point), which can potentially affect other optimizations.

In this test, we will consider three scenarios:

→ Test code on GitHub
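One quick way to see where the 32-bit boundary mentioned above lies is to check whether a value survives a round trip through a 32-bit integer conversion (n | 0). This is a hedged sketch. The helper name fitsInInt32 and the example ID are hypothetical. The check only tests the value range; it does not reveal which internal representation V8 actually chooses.

```javascript
'use strict'

// True if n is an integer within the signed 32-bit range [-2147483648, 2147483647].
// The bitwise OR converts n to a 32-bit integer; out-of-range values wrap around.
function fitsInInt32 (n) {
  return (n | 0) === n
}

console.log(fitsInInt32(2147483647)) // true
console.log(fitsInInt32(2147483647 + 1)) // false: 2147483648 wraps to -2147483648
console.log(fitsInInt32(-2147483648)) // true
console.log(fitsInInt32(1.5)) // false: not an integer

// Following the advice in the text, a long numeric ID is kept as a string
const userId = '98765432109876' // hypothetical ID, larger than 2147483647
console.log(typeof userId, fitsInInt32(Number(userId))) // string false
```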

The chart shows that this observation holds for Node 6 (V8 5.1), for Node 8 (V8 5.8), and even for future versions of Node. Calculations with integers greater than 2147483647 make functions run at roughly half to two-thirds of their maximum speed. Therefore, if you have long numeric IDs, store them as strings.

It is also very noticeable that operations on numbers within the 32-bit range are much faster in Node 6 (V8 5.1), and in Node 8.1 and 8.2 (V8 5.8), than in Node 8.3+ (V8 5.9+). However, operations on double-precision numbers are faster in Node 8.3+ (V8 5.9+). This is probably due to slower processing of 32-bit numbers rather than to the speed of function calls or loops.

Jakob Kummerow, Yang Guo, and the V8 team helped us make the results of this test more accurate and more precise. We are grateful to them.

Iterating over objects

Taking the values of all of an object's properties and doing something with them is a common task, and there are many ways to do it. Let's find out which method is fastest in the V8 and Node versions we studied.

Here are four tests that we ran on all the V8 versions studied:

In addition, we ran three more tests on V8 5.8, 5.9, 6.0, and 6.1:

We did not run these tests on V8 5.1 (Node 6), because that version does not support the EcmaScript 2017 built-in method Object.values.

→ Test code on GitHub
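The exact test cases are in the repository. As a hedged illustration only, here are a few common ways to collect an object's property values, including the ES2017 Object.values method that Node 6 lacks. The function names, and the hasOwnProperty filter in the for-in version, are assumptions rather than necessarily what the benchmark does.

```javascript
'use strict'

// Hypothetical input object
const obj = { a: 1, b: 2, c: 3 }

// for-in, skipping inherited enumerable properties
function valuesForIn (o) {
  const result = []
  for (const key in o) {
    if (Object.prototype.hasOwnProperty.call(o, key)) result.push(o[key])
  }
  return result
}

// Object.keys plus an index-based loop into a pre-allocated array
function valuesKeysLoop (o) {
  const keys = Object.keys(o)
  const result = new Array(keys.length)
  for (let i = 0; i < keys.length; i++) result[i] = o[keys[i]]
  return result
}

// Object.keys plus map
function valuesKeysMap (o) {
  return Object.keys(o).map((key) => o[key])
}

// ES2017 Object.values (not available in Node 6 / V8 5.1)
function valuesNative (o) {
  return Object.values(o)
}

console.log(valuesForIn(obj), valuesKeysLoop(obj), valuesKeysMap(obj), valuesNative(obj))
```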

In Node 6 (V8 5.1) and Node 8.0-8.2 (V8 5.8), using a loop

In V8 6.0 (Node 8.3)

In V8 6.1 (that is, in future versions of Node), the performance of the method using

TurboFan seems to be driven by a desire to optimize the constructs typical of an intuitive programming style. In other words, it optimizes the use cases that are most convenient for developers.

Using

In addition, for those who are used to using the cycle

Creating objects

Object creation happens all the time in JS, so this process is well worth investigating.

We are going to run three sets of tests: creating objects with object literals, with constructor functions, and with EcmaScript 2015 classes.

→ GitHub test code
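The three ways of creating objects compared here can be sketched as follows. This is a minimal sketch. The names createLiteral, PointCtor, and PointClass are hypothetical, and the benchmark's actual objects may have a different shape.

```javascript
'use strict'

// Object literal returned from a factory function
function createLiteral (x, y) {
  return { x: x, y: y }
}

// Constructor function invoked with new
function PointCtor (x, y) {
  this.x = x
  this.y = y
}

// EcmaScript 2015 class
class PointClass {
  constructor (x, y) {
    this.x = x
    this.y = y
  }
}

const a = createLiteral(1, 2)
const b = new PointCtor(1, 2)
const c = new PointClass(1, 2)
console.log(a.x + a.y, b.x + b.y, c.x + c.y) // 3 3 3
```

All three produce objects with the same own properties. The factory style matches the authors' general recommendation to return object literals from functions.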

In Node 6 (V8 5.1), all approaches show approximately the same results.

In Node 8.0-8.2 (V8 5.8), creating objects from EcmaScript 2015 classes runs at less than half the speed of object literals or constructor functions. So it is quite obvious what to use in these versions of Node.

In V8 5.9, different ways of creating objects again show the same performance.

Then, in V8 6.0 (hopefully Node 8.3 or 8.4) and 6.1 (not yet tied to any Node release), object creation speed is simply phenomenal: over 500 million operations per second!

But even here you can see that creating objects with a constructor is slightly slower. Therefore, we believe that in the future the most performant code will be the code that uses object literals. This suits us, since as a general rule we recommend returning object literals from functions (rather than using classes and constructors).

It should be noted that Jakob Kummerow pointed out that TurboFan is able to optimize away the object allocations in our microbenchmark. We plan to investigate this and update the test results.

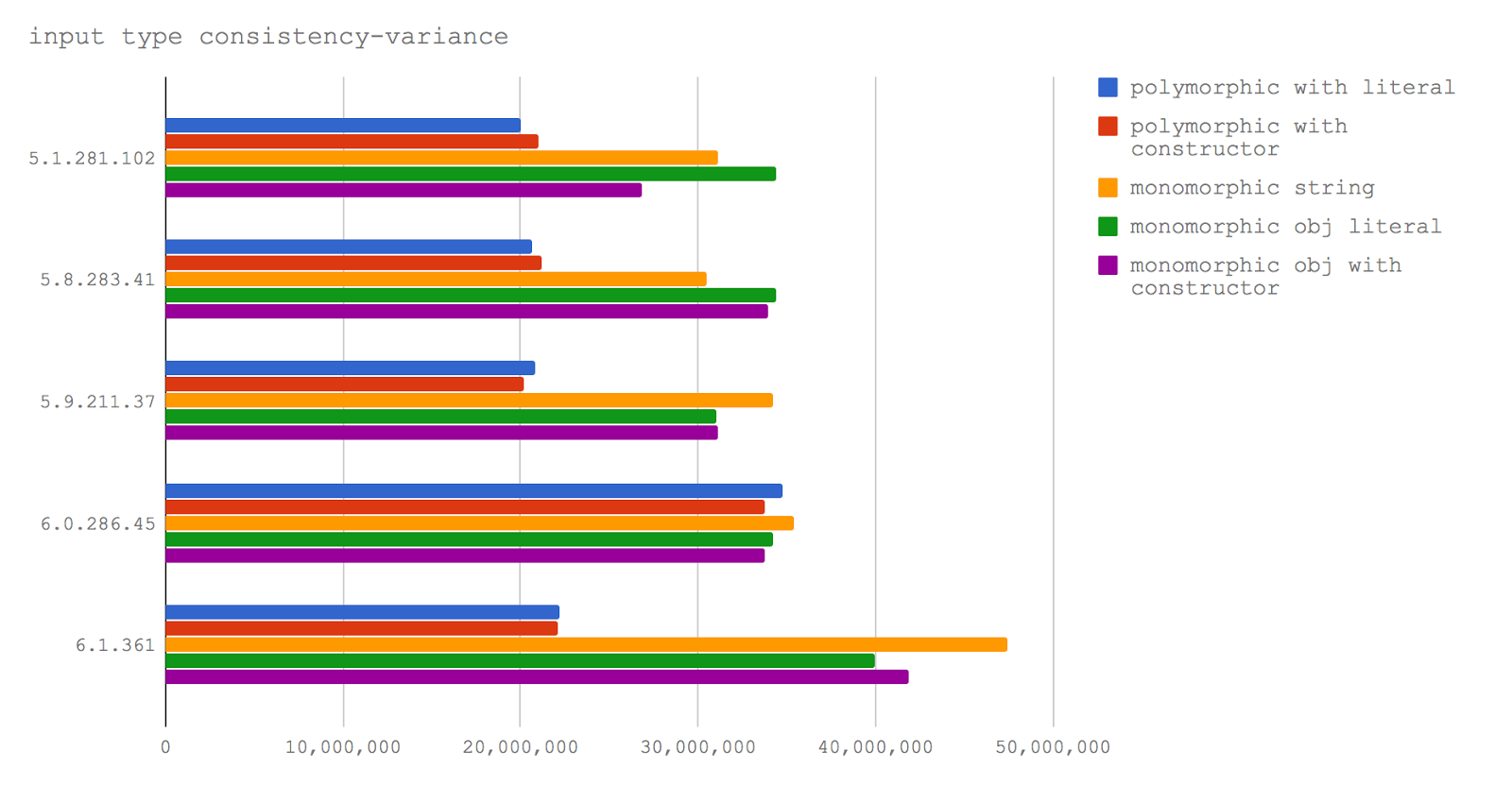

Polymorphic vs. monomorphic code

If we always pass an argument of the same type to a function (say, a string), we are using that function monomorphically. But some functions are designed to be polymorphic: the same parameter can be represented by different hidden classes. It might be handled as a string, as an array, or as some arbitrary object. In some situations this approach allows for nice APIs, but it hurts performance. Let's test polymorphic and monomorphic use of functions.
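As a hedged illustration, the difference can be reduced to a single call site. In the sketch below, describe is a hypothetical helper. In the monomorphic case its value.length access only ever sees strings. In the polymorphic case it sees strings, arrays, and plain objects, which have different hidden classes.

```javascript
'use strict'

// Hypothetical helper whose property access is the call site of interest
function describe (value) {
  return value.length
}

// Monomorphic use: describe() always receives the same type (strings)
function monomorphic () {
  let total = 0
  const inputs = ['a', 'bb', 'ccc']
  for (let i = 0; i < inputs.length; i++) total += describe(inputs[i])
  return total
}

// Polymorphic use: describe() receives a string, an array and a plain object
function polymorphic () {
  let total = 0
  const inputs = ['a', [1, 2], { length: 3 }]
  for (let i = 0; i < inputs.length; i++) total += describe(inputs[i])
  return total
}

console.log(monomorphic(), polymorphic()) // 6 6
```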

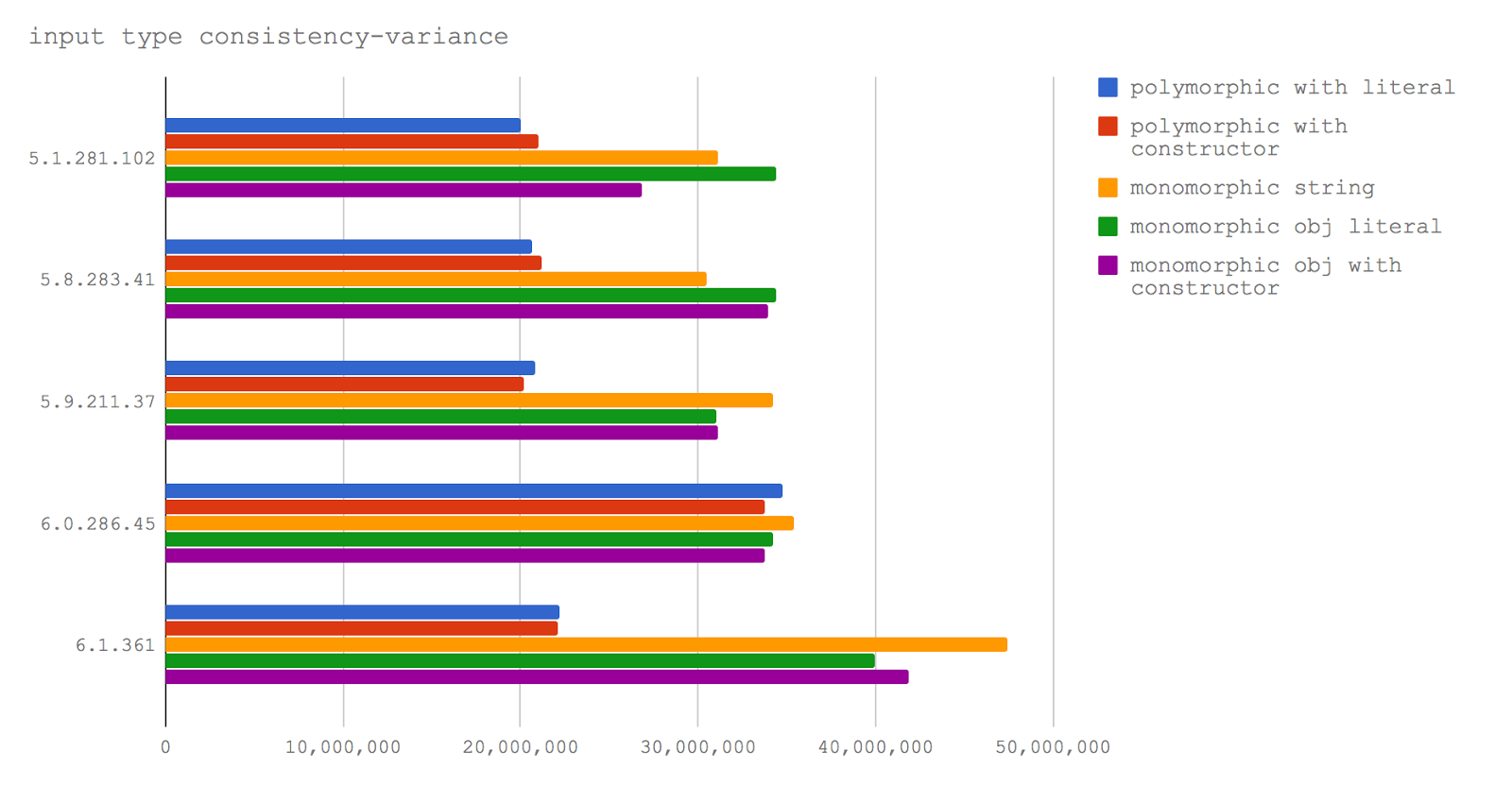

We are going to investigate five test cases:

→ Test code on GitHub

The data in the chart convincingly shows that monomorphic functions run faster than polymorphic ones in all studied versions of V8.

The performance gap between monomorphic and polymorphic functions in V8 6.1 (the engine that a future version of Node will get) is especially wide, which makes matters worse. However, note that this test used the experimental node-v8 branch, which runs something like a nightly build of V8. So this result may not reflect the actual characteristics of V8 6.1.

When writing code that must be fast, that is, a function that will be called constantly, avoid polymorphism. On the other hand, if a function is called only once or twice, for example to prepare the program for work, polymorphic APIs are quite acceptable.

Regarding this test, we want to note that the V8 team told us they could not reliably reproduce its results using their internal test execution system.

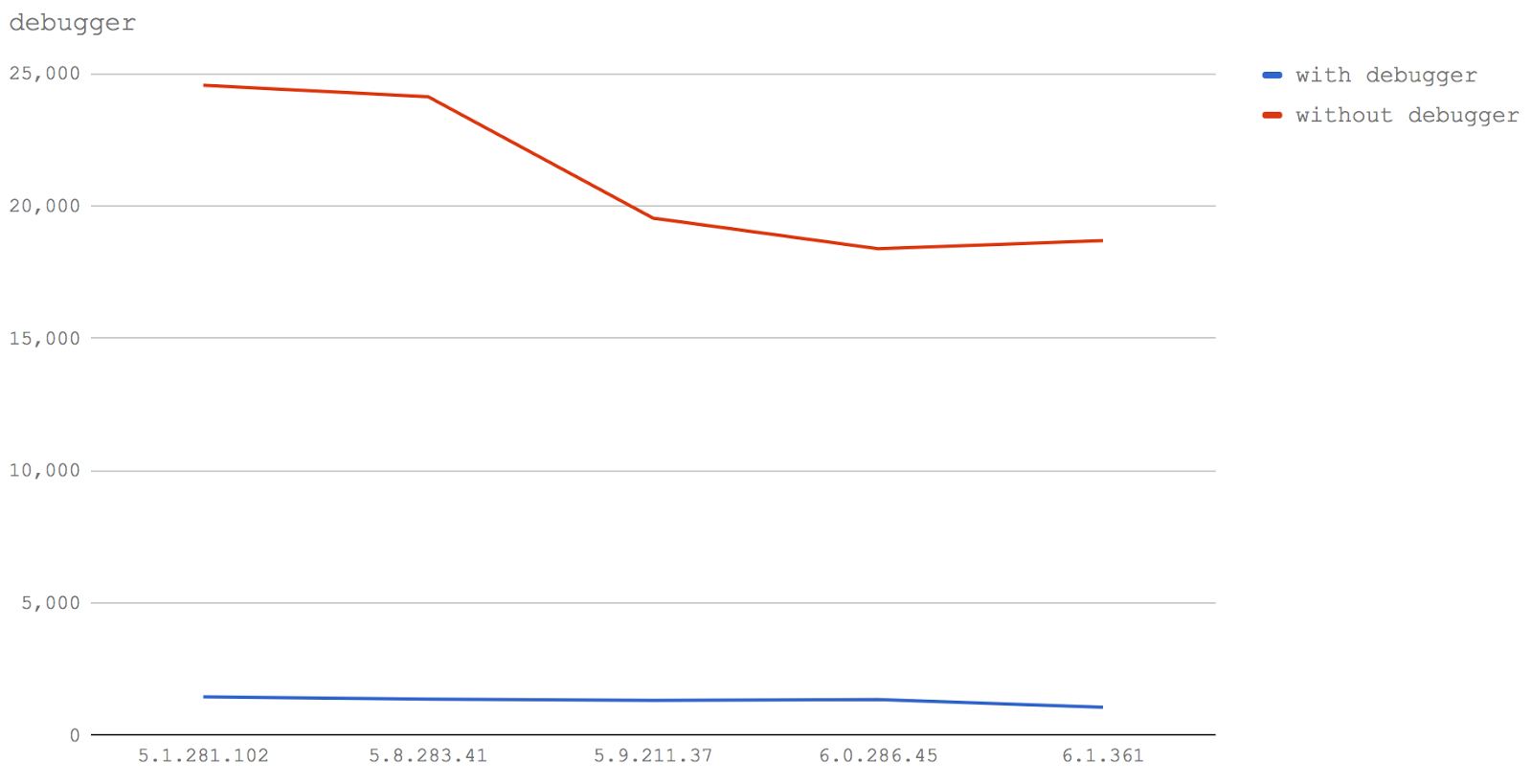

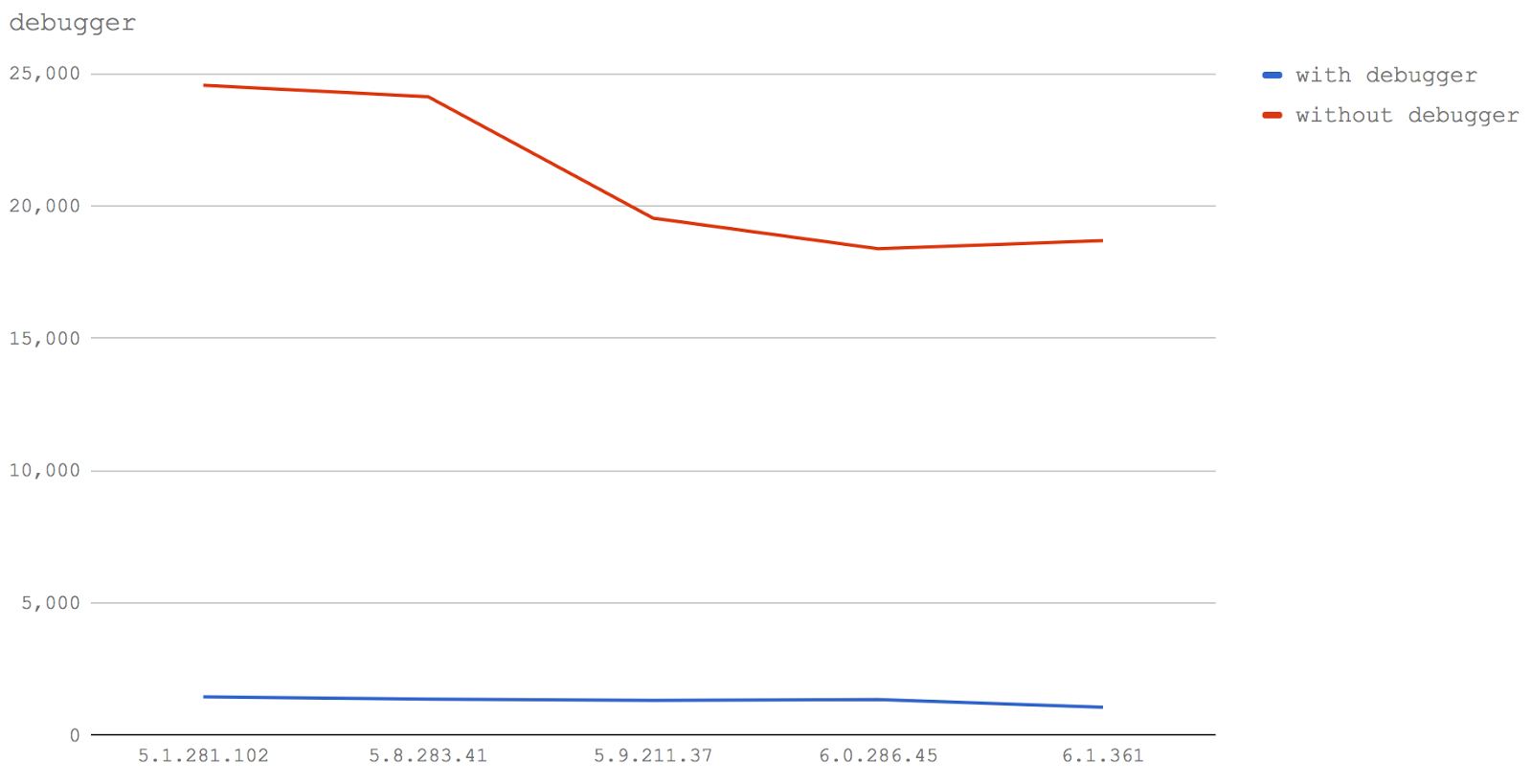

The debugger keyword

And finally, let's talk about the debugger keyword.

Be sure to remove this keyword from production code. Otherwise, you can forget about performance.

Here we investigated two test cases: a function containing the debugger keyword and the same function without it.

→ GitHub test code
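As an illustration only (the function names are hypothetical), the two cases differ by a single statement. According to the language specification, a debugger statement has no observable effect when no debugger is attached. Its mere presence in the function is what this benchmark measures.

```javascript
'use strict'

// The debugger statement is present in a hot function
function sumWithDebugger (a, b) {
  debugger // pauses only when a debugger is attached; otherwise it has no visible effect
  return a + b
}

// Identical logic with the statement removed, as production code should be
function sumWithoutDebugger (a, b) {
  return a + b
}

console.log(sumWithDebugger(1, 2), sumWithoutDebugger(1, 2)) // 3 3
```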

There is not much to say here. All versions of V8 show the most severe drop in performance when the debugger keyword is present.

Note also that the line for the test without debugger goes down noticeably in more recent versions of V8.

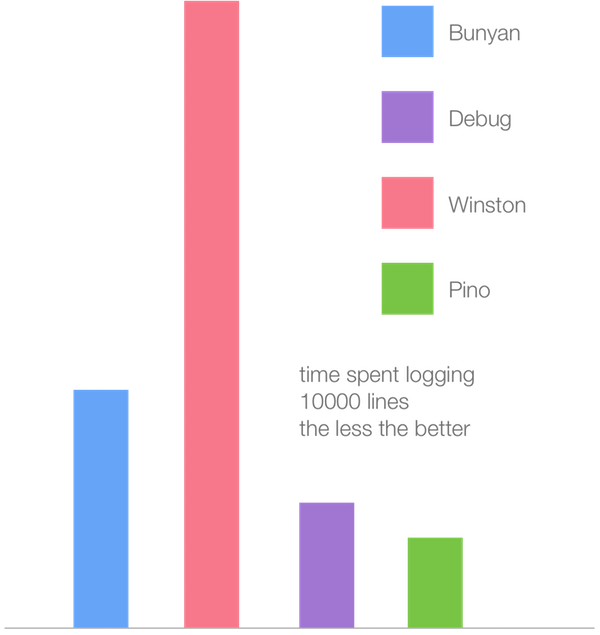

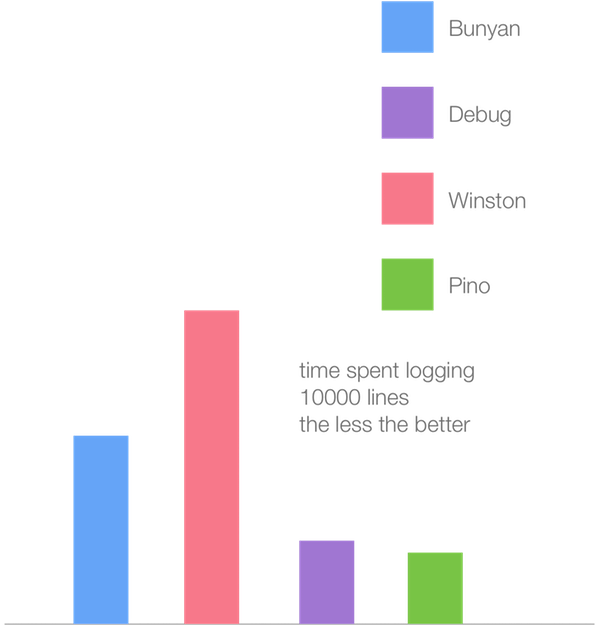

Real-world performance: benchmarking loggers

In addition to microbenchmarks, we can look at how different V8 versions handle practical tasks. To do this, we use several popular Node.js loggers, which Matteo and I studied while creating the Pino logger.

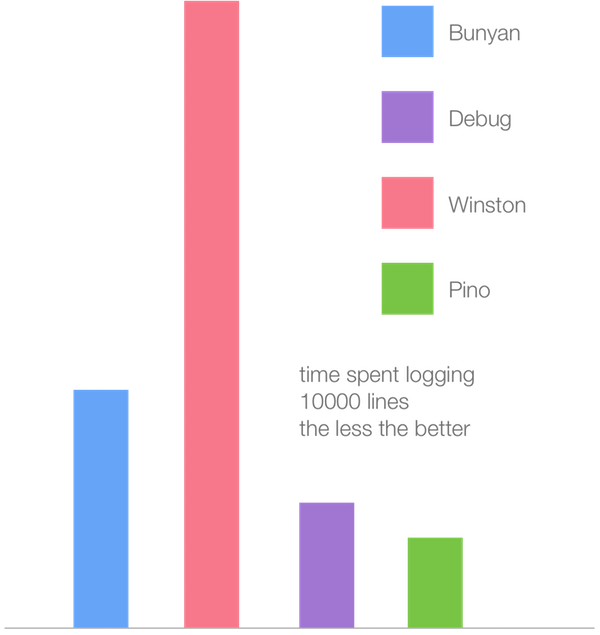

The histogram below shows the time it takes for the most popular loggers to output 10 thousand lines (the lower the column, the better) in Node.js 6.11 (Crankshaft).

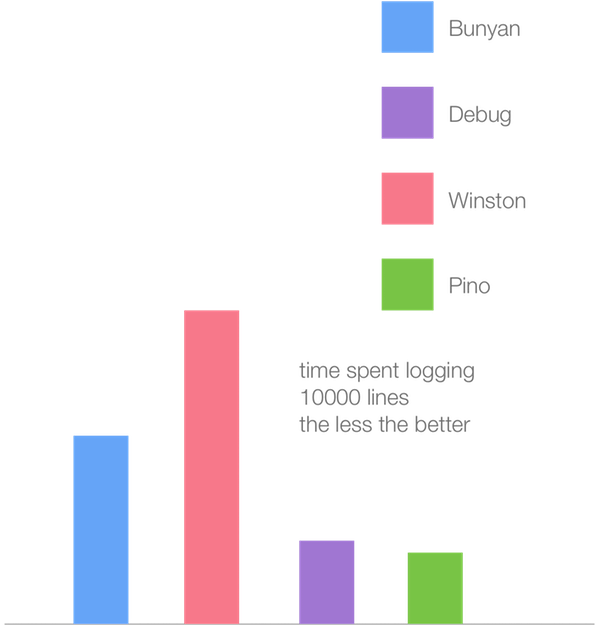

Here is the same comparison using V8 6.1 (TurboFan).

While all the loggers showed roughly a twofold performance increase, Winston benefited the most from the new TurboFan JIT compiler. Several factors that each showed up separately in our microbenchmarks seem to be at work here at once. The slowest approaches in Crankshaft turn out to be significantly faster in TurboFan, while the fastest approaches in Crankshaft turn out to be slightly slower in TurboFan. Winston, which was the slowest, probably uses techniques that are slowest in Crankshaft but much faster in TurboFan. Pino, meanwhile, is optimized for maximum performance in Crankshaft, so it shows a relatively small improvement.

Conclusion

Some of the tests show that what was slow in V8 5.1, 5.8, and 5.9 becomes faster thanks to the full use of TurboFan in V8 6.0 and 6.1. At the same time, what used to be fastest loses performance. It often ends up level with the slower options once those have sped up.

This is mainly due to the cost of function calls in TurboFan (V8 6.0 and above). The main idea behind TurboFan was to optimize what is used most often. It was also meant to ensure that the existing "V8 performance killers" no longer hurt execution speed too much. This has led to an overall performance improvement for both browser (Chrome) and server (Node) code. The trade-off, it seems, is a performance drop for approaches that used to be the fastest. We hope this is temporary. Comparing the loggers showed that the overall effect of TurboFan is a significant performance gain for applications with very different code bases (for example, Winston and Pino).

If you have been following JavaScript performance for a while and have adapted to engine quirks, the time has almost come to update your knowledge: learn some new things and forget some old ones. If you strive to write high-quality JS code, then thanks to the efforts of the V8 team, expect your applications to get faster.

Dear readers! What JavaScript optimization approaches do you use?


Try / catch problem

One of the well-known deoptimization patterns is to use blocks

try/catch. Please note that hereinafter in the lists of test descriptions, in parentheses, the short names of the tests in English will be given. These names are used to indicate the results in charts. In addition, they will help you navigate the code that was used during the tests.

In this test, we compare four test cases:

- A function that performs calculations in the block

try/catchlocated in it (sum with tr catch). - A function that performs calculations without blocks

try/catch(sum without try catch). - A function call to perform calculations inside a block

try(sum wrapped). - A function call to perform calculations without using

try/catch(sum function).

→ Test code on GitHub

We can see that what is already known about the negative impact

try/catchon performance is confirmed in Node 6 (V8 5.1), and in Node 8.0-8.2 (V8 5.8) try/catchit has a much smaller impact on performance. It should also be noted that calling a function from a block

tryis much slower than calling it outside try- this is true for Node 6 (V8 5.1) and Node 8.0-8.2 (V8 5.8). However, in Node 8.3+, calling a function from a block has

trypractically no effect on performance. Nevertheless, do not calm down. While working on some materials for the optimization seminar, we found an errorwhen a rather specific set of circumstances can lead to an endless cycle of deoptimization / reoptimization in TurboFan. This may well be considered the next performance killer pattern.

Removing properties from objects

For many years, the team was

deleteavoided by anyone who wanted to write high-performance code in JS (well, at least in cases where it was necessary to write the optimal code for the most loaded parts of programs). The problem with it

deletecomes down to how V8 deals with the dynamic nature of JavaScript objects, and with prototype chains (also potentially dynamic) that complicate the search for properties at a low level of engine implementation.The V8 engine's approach to creating high-performance objects with properties is to create a class at the C ++ level, based on the "shape" of the object, that is, on what keys and values the object has (including the keys and values of the prototype chain). These constructs are known as “hidden classes”. However, this type of optimization is performed at runtime. If you are not sure about the shape of the object, V8 has another property search mode: hash table search. Such a property search is much slower.

Historically, when we delete a

deletekey from an object with a command , subsequent operations to access properties will be performed by searching in a hash table. That is why programmers deletetry not to use the command , instead setting the properties toundefined, which, in terms of destroying the value, leads to the same result, but adds complexity when checking the existence of a property. However, usually this approach is good enough, for example, when preparing objects for serialization, since it JSON.stringifydoes not include values undefinedin its output ( undefined, in accordance with the JSON specification, it does not apply to valid values). Now let's find out if the new TurboFan implementation solves the problem of removing properties from objects.

Here we compare three test cases:

- Serializing an object after its property has been set to

undefined(setting to undefined). - Serialization of an object after the command

delete(delete) was used to delete its property . - Serializing the object after the command

deletewas used to delete the property that was added most recently (delete last property).

→ Test code on GitHub

In V8 6.0 and 6.1 (they are not used in any of Node releases yet), deleting the last property added to the object corresponds to the optimized TurboFan path of the program execution, and thus, is even faster than setting the property c

undefined. This is very good, as it indicates that the V8 development team is working to improve the team's performance delete. However, the use of this operator still leads to a serious performance drop when accessing properties if a property that is not the last of those added was removed from the object. This observation helped us make Jacob Kummerov, which pointed out the peculiarity of our tests, in which only the option with the removal of the last added property was investigated. We express our gratitude to him. As a result, no matter how much we would like to say that the command

deletecan and should be used in code written for future Node releases, we are forced to recommend not to do this. The team deletecontinues to negatively impact productivity.Leak and conversion to an array of arguments

A typical problem with an implicitly created object

argumentsavailable in ordinary functions (in contrast, arrow functions of an object argumentsdo not have it) is that it looks like an array, but it is not an array. In order to use the methods of arrays or the features of their behavior, indexed properties

argumentsmust be copied to the array. In the past, JS developers have tended to equate shorter and faster code. Although this approach, in the case of client code, can achieve a reduction in the amount of data that the browser should download, the same can cause problems with server code, where the size of the programs is much less important than the speed of their execution. As a result, a seductively short way to transform an objectargumentsthe array has become quite popular: Array.prototype.slice.call(arguments). Such a command calls an sliceobject's method Array, passing the object argumentsas the context thisfor this method. The method slicesees an object that looks like an array, after which it does its job. As a result, we get an array assembled from the contents of an object argumentssimilar to an array. However, when an implicitly created object is

argumentspassed to something that is outside the context of the function (for example, if it is returned from a function or transferred to another function, as during a call Array.prototype.slice.call(arguments)), this usually causes a performance hit. We examine this statement. The following microbenchmark is aimed at exploring two interrelated situations in the four versions of V8. Namely, this is the price of the leak

argumentsand the price of copying argumentsto an array, which is then passed outside the function instead of an object arguments. Here are our test cases:

- Passing the arguments object to another function without converting it to an array (leaky arguments).

- Creating a copy of the arguments object using Array.prototype.slice (Array.prototype.slice arguments).

- Using a for loop to copy each property (for-loop copy arguments).

- Using the EcmaScript 2015 spread syntax (strictly speaking, a rest parameter, ...args) to receive the function's inputs as an array (spread operator).

→ Test code on GitHub
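To make the four cases concrete, here is a minimal sketch of what each one looks like. All function names are hypothetical and are not taken from the authors' test code. Each variant just sums its inputs through a helper that needs only indexed access and a length.

```javascript
// Hypothetical sketch of the four approaches (names are illustrative only).

// Consumer that only needs an array-like value: indexed access plus length
function sumArrayLike (list) {
  let total = 0
  for (let i = 0; i < list.length; i++) total += list[i]
  return total
}

// leaky arguments: the arguments object itself escapes to another function
function leakyArguments () {
  return sumArrayLike(arguments)
}

// Array.prototype.slice arguments: borrow slice to copy into a real array
function sliceArguments () {
  const args = Array.prototype.slice.call(arguments)
  return sumArrayLike(args)
}

// for-loop copy arguments: copy each indexed property into a pre-allocated array
function forLoopCopy () {
  const args = new Array(arguments.length)
  for (let i = 0; i < arguments.length; i++) args[i] = arguments[i]
  return sumArrayLike(args)
}

// spread operator: an ES2015 rest parameter collects the inputs as a real array
function restParameter (...args) {
  return sumArrayLike(args)
}

console.log(leakyArguments(1, 2, 3), sliceArguments(1, 2, 3), forLoopCopy(1, 2, 3), restParameter(1, 2, 3))
// prints: 6 6 6 6
```

All four variants return the same result. Only the way arguments leaves the function differs, and that is what the benchmark measures.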

Now let's look at the same data as a line graph, to highlight how the performance characteristics change.

Here is what we can conclude from all this. Sometimes you need performant code that processes a function's inputs as an array, which in my experience is needed quite often. In Node 8.3 and higher, you should use the spread operator for this. In Node 8.2 and below, you should use a for loop to copy the keys from arguments into a new, pre-allocated array (see the test code for details). Furthermore, in Node 8.3+ passing the arguments object to other functions no longer degrades performance. So there may be further performance benefits if we do not need a full array and can work with an array-like structure instead.

Partial application (currying) and function context binding

Partial application (or currying) of functions lets you capture state in the scope of a nested closure.

For instance:

function add (a, b) {
  return a + b
}

const add10 = function (n) {
  return add(10, n)
}

console.log(add10(20))

In this example, the add10 function partially applies the a parameter of add with the value 10. Since EcmaScript 5, the bind method has offered a shorter form of partial application:

function add (a, b) {
  return a + b
}

const add10 = add.bind(null, 10)

console.log(add10(20))

However, bind is usually avoided because it is significantly slower than the closure approach above. Our test measures the difference between bind and closures in different versions of V8. For comparison, it also includes a direct call to the original function. Here are the four test cases:

- A function that calls another function with the first argument partially applied (curry).

- An arrow function that calls another function with the first argument partially applied (fat arrow curry).

- A function created with bind that partially applies the first argument of another function (bind).

- A direct function call without partial application (direct call).

→ Test code on GitHub
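The closure and bind forms appear above. For completeness, here is a sketch of all four variants side by side, including the arrow-function form that the earlier examples do not show. The names add10Curry, add10Arrow, add10Bind and direct are hypothetical and are not taken from the authors' benchmark.

```javascript
// Sketch of the four variants compared in this test (illustrative names).
function add (a, b) {
  return a + b
}

// curry: an ordinary function closing over the fixed first argument
const add10Curry = function (n) {
  return add(10, n)
}

// fat arrow curry: the same closure, written as an arrow function
const add10Arrow = (n) => add(10, n)

// bind: partial application via Function.prototype.bind (this is set to null)
const add10Bind = add.bind(null, 10)

// direct call: no partial application at all
const direct = add(10, 20)

console.log(add10Curry(20), add10Arrow(20), add10Bind(20), direct) // 30 30 30 30
```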

The line chart of the test results clearly shows that in the latest versions of V8 there is almost no difference between these ways of working with functions. Interestingly, partial application with arrow functions is much faster than with ordinary functions, at least in our tests. In fact, it is almost as fast as a direct function call. In V8 5.1 (Node 6) and 5.8 (Node 8.0-8.2), bind is very slow, so arrow functions seem the obvious choice for maximum speed. However, starting with V8 5.9 (Node 8.3+), bind performance improves significantly. In V8 6.1 (future versions of Node), bind becomes the fastest approach, though only by an almost indistinguishable margin. Taken across all versions of Node, arrow functions are the most consistently fast way to curry. In recent versions, the difference between arrow functions and bind is insignificant, and for now arrow functions remain faster than ordinary functions. However, we cannot claim these results hold in every situation. We would probably need to investigate more kinds of partial application, with data structures of various sizes, to get a more complete picture.

Function Code Size

The size of a function, including its signature, whitespace, and even comments, can affect whether V8 can inline it. Yes, really: adding comments to a function can reduce performance by about 10%. Will this change in the future?

In this test, we explore three scenarios:

- Calling a small function (sum small function).

- Performing the operations of a small function inline at the call site, padded with comments (long all together).

- Calling a large function with comments (sum long function).

→ GitHub test code
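The three scenarios can be sketched roughly as follows. This is an illustrative reconstruction, not the authors' benchmark code. The comment padding is only hinted at here. In the real test it made the function source long enough to matter for Crankshaft's character-based inlining decision.

```javascript
// Illustrative sketch of the three scenarios (not the authors' code).

// sum small function: a tiny callee that is an easy inlining candidate
function sumSmall (a, b) {
  return a + b
}

// sum long function: identical logic, padded with comments
function sumLong (a, b) {
  // ...many lines of comments would go here...
  // they add characters to the source but no executable code
  return a + b
}

function runSmall (n) {
  let total = 0
  for (let i = 0; i < n; i++) total = sumSmall(total, i)
  return total
}

function runLong (n) {
  let total = 0
  for (let i = 0; i < n; i++) total = sumLong(total, i)
  return total
}

// long all together: the callee's body is written out at the call site
// (manual inlining), comments included
function runAllTogether (n) {
  let total = 0
  for (let i = 0; i < n; i++) {
    // ...the same comments, now inside the caller...
    total = total + i
  }
  return total
}

console.log(runSmall(10), runLong(10), runAllTogether(10)) // 45 45 45
```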

In V8 5.1 (Node 6), the sum small function and long all together tests show the same result. This illustrates perfectly how inlining works. When we call a small function, it is as if V8 copied the body of that function to the call site. So when we write out the function's body ourselves, even padded with comments, we are inlining it manually at the call site, and performance is the same. Also in V8 5.1 (Node 6), calling a function padded with comments becomes significantly slower once the function exceeds a certain size.

In Node 8.0-8.2 (V8 5.8), the situation remains largely the same, except that the cost of calling a small function has risen markedly. This is probably caused by the mix of Crankshaft and TurboFan: one function can be compiled by Crankshaft and another by TurboFan, which breaks inlining. As a result, execution has to jump between clusters of serially inlined functions.

In V8 5.9 and higher (Node 8.3+), extraneous characters such as whitespace or comments do not affect function performance. This is because TurboFan uses the function's Abstract Syntax Tree (AST) to determine its size, instead of counting characters as Crankshaft does. Rather than counting the function's bytes, TurboFan analyzes the function's actual instructions. So starting with V8 5.9 (Node 8.3+), whitespace, the characters in variable names, function signatures and comments no longer affect whether a function can be inlined. It should also be noted that overall function performance has declined.

The main takeaway is that functions are still worth keeping as small as possible. For now, you should still avoid unnecessary comments, and even whitespace, inside functions. In addition, if you are after maximum performance, manual inlining remains consistently the fastest approach. Manual inlining means moving a function's code to the call site, which removes the call altogether. Of course, balance is needed here. Once the actual executable code reaches a certain size, a function will not be inlined anyway, so thoughtlessly copying other functions' code into your own can cause performance problems. In other words, manual inlining is a potential way to shoot yourself in the foot. In most cases, it is better to leave inlining to the compiler.

32-bit and 64-bit integers

It is well known that JavaScript has only one numeric type: Number. However, V8 is implemented in C++, so the underlying representation of a JavaScript numeric value is an implementation choice.

In the case of integers (that is, numbers written in JS without a decimal point), V8 treats all numbers as 32-bit until they no longer fit. This seems like a fair choice, since in many cases numbers fall within the range -2147483648 to 2147483647. If a JS integer exceeds 2147483647, the JIT compiler has to dynamically switch the number's underlying type to double precision (floating point), and this can potentially affect other optimizations.

In this test, we will consider three scenarios:

- A function that works only with numbers that fit into the 32-bit range (sum small).

- A function that works with a mix of 32-bit numbers and numbers that require a double-precision type to represent them (from small to big).

- A function that operates only with double precision numbers (all big).

→ Test code on GitHub
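The following sketch shows roughly what the three scenarios exercise. It is not the authors' benchmark, and all function names are hypothetical. Per the description above, once a value exceeds 2147483647 V8 has to fall back to a double-precision representation. The exact internal representation is up to the engine. The last line uses a bitwise operator, which works on 32-bit integers, to show where that boundary sits.

```javascript
// Illustrative sketch of the three scenarios (hypothetical names).
const INT32_MAX = 2147483647 // largest signed 32-bit integer (2 ** 31 - 1)

function addNumbers (a, b) {
  return a + b
}

// sum small: every operand and intermediate result stays within 32 bits
function sumSmallInts (n) {
  let total = 0
  for (let i = 0; i < n; i++) total = addNumbers(total, i)
  return total
}

// from small to big: starts below INT32_MAX and crosses it part-way through
function fromSmallToBig (n) {
  let total = INT32_MAX - n
  for (let i = 0; i < n; i++) total = addNumbers(total, 2)
  return total
}

// all big: the running total and every increment are above INT32_MAX
function allBig (n) {
  let total = INT32_MAX + 1
  for (let i = 0; i < n; i++) total = addNumbers(total, INT32_MAX + 1)
  return total
}

// Bitwise operators truncate to signed 32-bit integers, so one past
// INT32_MAX wraps around to the most negative 32-bit value
console.log((INT32_MAX + 1) | 0) // -2147483648
```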

The chart shows that this observation holds on Node 6 (V8 5.1), on Node 8 (V8 5.8), and even on future versions of Node. Calculations with integers greater than 2147483647 make functions run at roughly half to two-thirds of their maximum speed. So if you have long numeric IDs, store them in strings.

It is also very noticeable that operations on numbers within the 32-bit range are much faster in Node 6 (V8 5.1) and in Node 8.1 and 8.2 (V8 5.8) than in Node 8.3+ (V8 5.9+). However, operations on double-precision numbers are faster in Node 8.3+ (V8 5.9+). This is probably due to slower handling of 32-bit integers themselves, rather than to the speed of the function calls or for loops used in the test code. Jakob Kummerow, Yang Guo and the V8 team helped us make the results of this test more accurate and more precise. We are grateful to them for this.

Enumerating Object Properties

Taking the values of all of an object's properties and doing something with them is a common task, and there are many ways to do it. Let's find out which approach is the fastest in the versions of V8 and Node we studied.

Here are the four tests we ran on all the V8 versions studied:

- Using a for-in loop with hasOwnProperty to check whether a property belongs to the object itself (for-in).

- Using Object.keys and iterating over the keys with the Array reduce method, accessing the property values inside the iterator function passed to reduce (Object.keys functional).

- Using Object.keys and iterating over the keys with the Array reduce method, accessing the property values inside an arrow function passed to reduce as the iterator (Object.keys functional with arrow).

- Iterating over the array returned from Object.keys in a for loop, accessing the object's property values in the same loop (Object.keys with for loop).

We also ran three additional tests on V8 5.8, 5.9, 6.0 and 6.1:

- Using Object.values and iterating over the property values with the Array reduce method (Object.values functional).

- Using Object.values and iterating over the values with the Array reduce method, passing an arrow function to reduce as the iterator (Object.values functional with arrow).

- Iterating over the array returned from Object.values in a for loop (Object.values with for loop).

We did not run these tests on V8 5.1 (Node 6), because that version does not support Object.values, an EcmaScript 2017 built-in.

→ Test code on GitHub
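Here is a compact sketch of the seven enumeration styles listed above. It is illustrative only and not the authors' benchmark code. Each function sums an object's own property values, and the function names are hypothetical.

```javascript
// Illustrative sketch of the enumeration approaches (hypothetical names).
const sample = { a: 1, b: 2, c: 3 }

// for-in: hasOwnProperty filters out inherited enumerable properties
function forIn (o) {
  let total = 0
  for (const key in o) {
    if (o.hasOwnProperty(key)) total += o[key]
  }
  return total
}

// Object.keys functional
function keysFunctional (o) {
  return Object.keys(o).reduce(function (acc, key) {
    return acc + o[key]
  }, 0)
}

// Object.keys functional with arrow
function keysFunctionalArrow (o) {
  return Object.keys(o).reduce((acc, key) => acc + o[key], 0)
}

// Object.keys with for loop
function keysForLoop (o) {
  const keys = Object.keys(o)
  let total = 0
  for (let i = 0; i < keys.length; i++) total += o[keys[i]]
  return total
}

// Object.values functional (ES2017, so not available in Node 6)
function valuesFunctional (o) {
  return Object.values(o).reduce(function (acc, value) {
    return acc + value
  }, 0)
}

// Object.values functional with arrow
function valuesFunctionalArrow (o) {
  return Object.values(o).reduce((acc, value) => acc + value, 0)
}

// Object.values with for loop
function valuesForLoop (o) {
  const values = Object.values(o)
  let total = 0
  for (let i = 0; i < values.length; i++) total += values[i]
  return total
}

console.log(forIn(sample), keysFunctional(sample), keysFunctionalArrow(sample),
  keysForLoop(sample), valuesFunctional(sample), valuesFunctionalArrow(sample),
  valuesForLoop(sample)) // 6 6 6 6 6 6 6
```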

In Node 6 (V8 5.1) and Node 8.0-8.2 (V8 5.8), a for-in loop is without doubt the fastest way to iterate over an object's keys and access its property values. It achieves roughly 40 million operations per second. That is 5 times faster than the next-closest approach, Object.keys, at roughly 8 million operations per second. In V8 6.0 (Node 8.3), something happened to for-in, and its performance dropped to a quarter of what earlier versions achieved. Still, it remained the fastest approach.

In V8 6.1 (that is, in future versions of Node), the performance of Object.keys rises, and it becomes faster than for-in. Even so, it comes nowhere near the speed that for-in reached in V8 5.1 and 5.8 (Node 6, Node 8.0-8.2). TurboFan seems to be driven by the goal of optimizing intuitive coding patterns. In other words, optimization targets the use cases that are most convenient for developers.

Using Object.values to get property values directly is slower than using Object.keys and accessing the values by key. Moreover, procedural loops are faster than the functional approach. So iterating over objects may take a bit more work. And for those who relied on for-in for its speed, the current state of affairs is rather unpleasant: a significant part of its speed is lost, and no equally fast alternative is available.

Creating Objects

Object creation happens all the time in JS, so it is well worth investigating.

We are going to conduct three sets of tests:

- Creating objects using an object literal.

- Creating objects based on the EcmaScript 2015 class (class).

- Creating objects using the constructor function.

→ GitHub test code
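For reference, here is what the three creation styles look like in a minimal form. The names are hypothetical and the code is not taken from the authors' benchmark.

```javascript
// Sketch of the three object-creation styles (hypothetical names).

// object literal returned from a plain factory function
function createLiteral (x, y) {
  return { x: x, y: y }
}

// EcmaScript 2015 class
class Point {
  constructor (x, y) {
    this.x = x
    this.y = y
  }
}

// constructor function, called with new
function PointCtor (x, y) {
  this.x = x
  this.y = y
}

const literalPoint = createLiteral(1, 2)
const classPoint = new Point(1, 2)
const ctorPoint = new PointCtor(1, 2)

console.log(literalPoint.x + literalPoint.y, classPoint.x + classPoint.y, ctorPoint.x + ctorPoint.y) // 3 3 3
```

The factory-plus-literal style is the one the authors recommend below. It also avoids new and this entirely.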

In Node 6 (V8 5.1), all approaches show approximately the same results.

In Node 8.0-8.2 (V8 5.8), creating objects from EcmaScript 2015 classes runs at less than half the speed of object literals or constructor functions. So in these versions of Node, it is obvious which approach to avoid.

In V8 5.9, different ways of creating objects again show the same performance.

Then, in V8 6.0 (hopefully shipping in Node 8.3 or 8.4) and 6.1 (not yet tied to any Node release), object creation speed is just insane: over 500 million operations per second! That is simply awesome.

But even here, creating objects with a constructor is a bit slower. So we believe that, in the future, the most performant code will be code that uses object literals. This suits us, since as a general rule we recommend returning object literals from functions rather than using classes and constructors.

We should note that Jakob Kummerow pointed out that TurboFan may be optimizing away the object allocations in our microbenchmark. We plan to investigate this and update the test results.

Polymorphic and monomorphic functions

If we always pass an argument of the same type to a function (say, a string), we are using that function monomorphically. But some functions are designed to be polymorphic, meaning the same parameter can be represented by different hidden classes. It might be handled as a string, an array, or some arbitrary object. In some situations this makes for nice APIs, but it hurts performance. Let's test polymorphic and monomorphic use of functions.

We are going to investigate five test cases:

- Functions are passed both objects created using literals and strings (polymorphic with literal).

- Functions are passed both objects created using the constructor and strings (polymorphic with constructor).

- Functions are passed only strings (monomorphic string).

- Functions are passed only objects created with literals (monomorphic obj literal).

- Functions are passed only objects created with a constructor (monomorphic obj with constructor).

→ Test code on GitHub

The data in the chart convincingly shows that monomorphic functions run faster than polymorphic ones in all the V8 versions studied.
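The sketch below illustrates the inputs each scenario feeds to the function under test. It is not the authors' benchmark. The names Person, getName and scenarios are hypothetical. A function stays monomorphic when every call sees the same kind of value, such as only strings or only objects with one hidden class. It becomes polymorphic when strings and objects are mixed.

```javascript
// Illustrative sketch of the five input patterns (hypothetical names).
function Person (name) {
  this.name = name
}

// The function under test accepts either a string or an object with a name
function getName (value) {
  return typeof value === 'string' ? value : value.name
}

const scenarios = {
  'polymorphic with literal': ['Ann', { name: 'Ann' }],
  'polymorphic with constructor': ['Ann', new Person('Ann')],
  'monomorphic string': ['Ann', 'Ann'],
  'monomorphic obj literal': [{ name: 'Ann' }, { name: 'Ann' }],
  'monomorphic obj with constructor': [new Person('Ann'), new Person('Ann')]
}

for (const [label, inputs] of Object.entries(scenarios)) {
  // A real benchmark would need to isolate each scenario (for example, run it
  // separately), so that type feedback from one scenario does not affect the next
  console.log(label, inputs.map(getName).join(','))
}
```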

The performance gap between monomorphic and polymorphic functions is especially wide in V8 6.1, the engine a future version of Node will ship with, which makes matters worse. Note, however, that this test uses an experimental node-v8 branch built on something like a "nightly build" of V8. So this result may well not reflect the actual characteristics of V8 6.1.

When writing performance-critical code, such as a function that will be called constantly, avoid polymorphism. On the other hand, polymorphic APIs are perfectly acceptable if a function is called only once or twice, for example while preparing the program for work.

With regard to this test, we should note that the V8 team told us they could not reliably reproduce its results using their internal execution shell, d8. However, the results are reproducible on Node. Read them with the caveat that the situation may change with Node updates, depending on how Node integrates with V8. This question needs further analysis. We thank Jakob Kummerow for drawing our attention to this.

Debugger keyword

And finally, let's talk about the debugger keyword. Be sure to remove it from production code. Otherwise, good performance is out of the question.

Here we investigated two test cases:

- A function that contains the debugger keyword (with debugger).

- A function that does not contain the debugger keyword (without debugger).

→ GitHub test code
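The two test cases amount to something like the following minimal sketch. The names are hypothetical. With no debugger or inspector attached, the debugger statement has no observable effect, so both functions return the same values. According to the results below, its presence still costs performance.

```javascript
// Sketch of the two test cases (hypothetical names).
function withDebugger (a, b) {
  debugger // no-op unless a debugger is attached
  return a + b
}

function withoutDebugger (a, b) {
  return a + b
}

console.log(withDebugger(1, 2), withoutDebugger(1, 2)) // 3 3
```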

Not much to say here. All versions of V8 show a severe drop in performance when the debugger keyword is present. Note also that the line for the test without debugger drops noticeably in more recent versions of V8.

Real-world test: comparing loggers

In addition to microbenchmarks, we can look at how different versions of V8 handle practical workloads. For this, we used several popular Node.js loggers, which Matteo and I studied while creating the Pino logger.

The bar chart below shows how long the most popular loggers take to output 10,000 lines in Node.js 6.11 (Crankshaft). The lower the bar, the better.

Here is the same benchmark on V8 6.1 (TurboFan).

While the loggers generally became about twice as fast, Winston benefited the most from the new TurboFan JIT compiler. This seems to show the combined effect of several factors that we observed individually in our microbenchmarks. Approaches that were slowest in Crankshaft become significantly faster in TurboFan, while those that were fastest in Crankshaft become a bit slower in TurboFan. Winston, which was the slowest logger, probably uses techniques that are slowest in Crankshaft but much faster in TurboFan. Pino, meanwhile, was optimized for maximum performance under Crankshaft, so its improvement is relatively small.

Summary

Some of the tests show that what was slow in V8 5.1, 5.8, and 5.9 becomes faster once TurboFan is fully used in V8 6.0 and 6.1. At the same time, what used to be fastest slows down, often to the level that the formerly slow approaches have now reached.

This is mainly due to the cost of function calls in TurboFan (V8 6.0 and above). The main idea behind TurboFan was to optimize the most commonly used patterns and to make the existing "V8 performance killers" much less costly. This has improved the overall performance of both browser (Chrome) and server (Node) code. The trade-off, it seems, is slower performance for the approaches that used to be fastest. We hope this is temporary. The logger comparison showed that the overall effect of TurboFan is a significant performance increase, even for applications with very different code bases (for example, Winston and Pino).

If you have been following JavaScript performance for some time and have adapted your coding to the engines' quirks, it is almost time to update your knowledge: learn some new things and unlearn others. If you focus on writing high-quality JS code, you can expect your applications to get faster, thanks to the efforts of the V8 team.

Dear readers! What JavaScript optimization approaches do you use?