MIT course "Computer Systems Security". Lecture 21: "Tracking data", part 1

- Transfer

- Tutorial

Massachusetts Institute of Technology. Lecture course # 6.858. "Security of computer systems." Nikolai Zeldovich, James Mykens. year 2014

Computer Systems Security is a course on the development and implementation of secure computer systems. Lectures cover threat models, attacks that compromise security, and security methods based on the latest scientific work. Topics include operating system (OS) security, capabilities, information flow control, language security, network protocols, hardware protection and security in web applications.

Lecture 1: “Introduction: threat models” Part 1 / Part 2 / Part 3

Lecture 2: “Control of hacker attacks” Part 1 / Part 2 / Part 3

Lecture 3: “Buffer overflow: exploits and protection” Part 1 /Part 2 / Part 3

Lecture 4: “Privilege Separation” Part 1 / Part 2 / Part 3

Lecture 5: “Where Security System Errors Come From” Part 1 / Part 2

Lecture 6: “Capabilities” Part 1 / Part 2 / Part 3

Lecture 7: “Native Client Sandbox” Part 1 / Part 2 / Part 3

Lecture 8: “Network Security Model” Part 1 / Part 2 / Part 3

Lecture 9: “Web Application Security” Part 1 / Part 2/ Part 3

Lecture 10: “Symbolic execution” Part 1 / Part 2 / Part 3

Lecture 11: “Ur / Web programming language” Part 1 / Part 2 / Part 3

Lecture 12: “Network security” Part 1 / Part 2 / Part 3

Lecture 13: “Network Protocols” Part 1 / Part 2 / Part 3

Lecture 14: “SSL and HTTPS” Part 1 / Part 2 / Part 3

Lecture 15: “Medical Software” Part 1 / Part 2/ Part 3

Lecture 16: “Attacks through a side channel” Part 1 / Part 2 / Part 3

Lecture 17: “User authentication” Part 1 / Part 2 / Part 3

Lecture 18: “Private Internet viewing” Part 1 / Part 2 / Part 3

Lecture 19: “Anonymous Networks” Part 1 / Part 2 / Part 3

Lecture 20: “Mobile Phone Security” Part 1 / Part 2 / Part 3

Lecture 21: “Data Tracking” Part 1 /Part 2 / Part 3

James Mickens: great, let's get started. Thank you for coming to the lecture on this special day before Thanksgiving. I am glad you guys are so committed to computer security and I am sure that you will be in demand on the labor market. Feel free to refer to me as a source of recommendations. Today we will talk about tracking Taint-tracking infection, in particular, about a system called TaintDroid, which provides the execution of this type of analysis of information flows in the context of Android smartphones.

The main problem raised in the lecture article is the fact that applications can retrieve data. The idea is that your phone contains a lot of confidential information - a list of contacts, your phone number, email address and all that. If the operating system and the phone itself are not careful, then the malicious application may be able to extract some of this information and send it back to your home server, and the server will be able to use this information for all types of ill-fated things that we will talk about later.

In a global sense, the TaintDroid article offers a solution: keep track of sensitive data as it passes through the system, and, in fact, stop it before it is transmitted over the network. In other words, we must prevent the possibility of transferring data as an argument to network system calls. Apparently, if we can do this, then we can essentially stop the leak right at the moment when it starts to happen.

You might think, why is Android’s traditional permissions not enough to prevent this type of data from being extracted? The reason is that these permissions do not have the correct grammar to describe the type of attack that we are trying to prevent. Permissions for Android typically deal with application rights to write or read anything from a particular device. But now we are talking about what is on a different semantic level. Even if an application has been granted the right to read information or write data to a device such as a network, it can be dangerous to allow an application to read or write certain sensitive data to a device that it has permission to interact with.

In other words, using traditional Android security policies, it is difficult to talk about specific data types. It is much easier to talk about whether the application is accessing the device or not. Perhaps we can solve this problem using an alternative solution, I will mark it with an asterisk.

This alternative solution is to never install applications that can read sensitive data and / or access the network. At first glance, it seems that the problem has been fixed. Because if an application cannot do both of these things at the same time, it will either not be able to get to sensitive data, or it can read it, but it will not be able to send it over the network. What do you think is the catch?

Everyone is already thinking about holiday turkey, I can see it in your eyes. Well, the main reason why this is a bad idea is that this measure can break the work of many legitimate applications. After all, there are many programs, for example, email clients, which in fact should be able to read some confidential data and send it over the network.

If we just say that we are going to prevent this kind of activity, then we will actually prohibit the work of many applications on the phone, which users probably will not like.

There is another problem here - even if we implemented this solution, it will not prevent data leakage through a bunch of different third-party channel mechanisms. For example, in previous lectures we considered that the browser’s cache, for example, may contribute to the leakage of information about the user’s visit to a particular site. Therefore, even with the implementation of such a security policy, we will not be able to control all third-party channels. A little later we will talk about third-party channels.

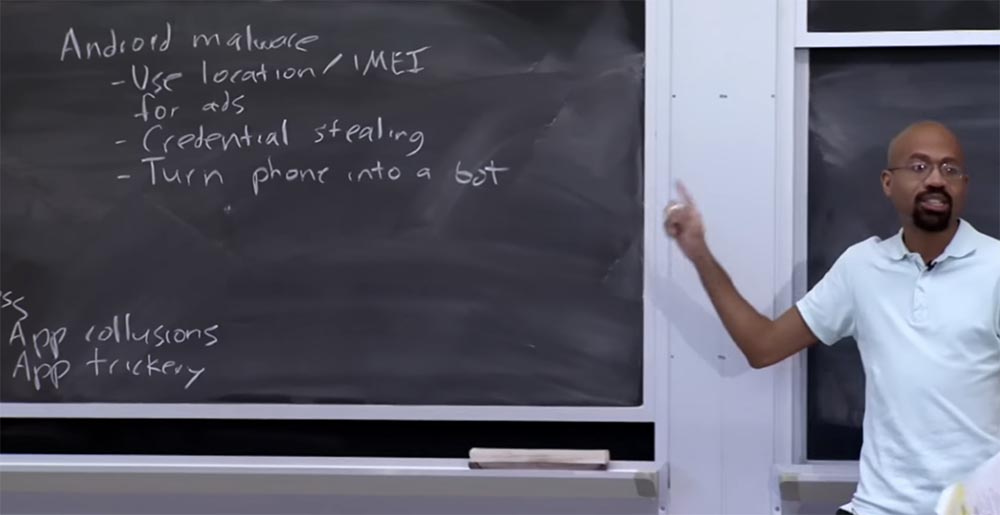

The proposed solution will not stop application collusion, when two applications can work together to break the security system. For example, what to do if one application does not have access to the network, but it can communicate with the second application that has it? After all, it is possible that the first application can use the IPC Android mechanisms to transfer confidential data to an application that has network permissions, and this second application can upload this information to the server. But even if the applications are not in collusion, there may be some kind of trick when one application can force other applications to accidentally give out sensitive data.

It is possible that there is some flaw in the e-mail program, because of which it receives too many random messages from other components of the system. Then we could create a special intent intent to spoof the mail program, and it would force the Gmail application to email something important outside the phone. So this alternative solution does not work well enough.

So, we are very concerned that confidential data is leaving the phone. Consider what in practice do malicious applications for Android. Are there any attacks in the real world that can be prevented by tracking Taint-tracking infections? The answer is yes. Malware is becoming an increasing problem for mobile phones. The first thing a malicious application can do is use your location or IMEI to advertise or impose services.

Malicious software can determine your physical location. For example, you are near the MIT campus, so you are a hungry student, so why don't you visit my snack bar on wheels, which is located very close?

IMEI is an integer representing the unique identifier of your phone. It can be used for your tracking in different places, especially in those where you would not want to "light up". Thus, in nature there are malicious programs that can do such things.

The second thing malware does is steal your personal data. They may try to steal your phone number or contact list and try to upload these things to a remote server. It may be necessary to impersonate you, for example, in a message that will later be used to send spam.

Perhaps the worst thing that malware can do, at least for me, is turn your phone into a bot.

This, of course, is a problem that our parents did not have to face. Modern phones are so powerful that they can be used to send spam. There are many malware targeting specific corporate environments that do just that. Once in your phone, they begin to use it as part of the spam network.

Student: is it malware that specifically targets the Android OS, or is it just a typical application? If this is a typical application, then perhaps we would be able to secure it with permissions?

Professor:This is a very good question. There are both types of malware. As it turned out, it's pretty easy to get users to click on different buttons. I will give you an example that concerns not so much malware as careless behavior of people.

There is a popular game Angry Birds, you go to the App Store and look for it in the application search bar. The first in the search results you will be given the original game Angry Birds, and in the second line may be the application Angry Birdss, with two s at the end. And many people will prefer to download this second application, because it may cost less than the original version. Further, during installation, this application will write that after installation you will allow it to do this and that, and you will say: “Of course, no problem!”, Because you received the desired Angry Birds for a mere penny. After this "boom" - and you are on the hook of a hacker!

But you are absolutely right when you assume that if the Android security model is correct, then installing malware will depend entirely on the stupidity or naivety of users who give it access to the network, for example, when your game Tic-Tac-Toe should not have access to network.

So you can turn your phone into a bot. This is terrible for many reasons, not only because your phone sends spam, but also because you may be paying for the data of all those emails that are sent from your phone. In addition, the battery is rapidly discharged, because your phone is constantly busy sending spam.

There are applications that will use your personal information to cause harm. Especially bad in this bot is that it can really look at your contact list and send spam on your behalf to people who know you. At the same time, the likelihood that they will click on something malicious in this letter increases many times over.

So, preventing the extraction of information is a good thing, but it does not prevent the possibility of hacking itself. There are mechanisms to which we must pay attention first of all, because they prevent an attacker from seizing your smartphone by educating users what they can click on and what they should not click on in any way.

Thus, taint-tracking by itself is not a sufficient solution to prevent a situation that threatens to seize your phone.

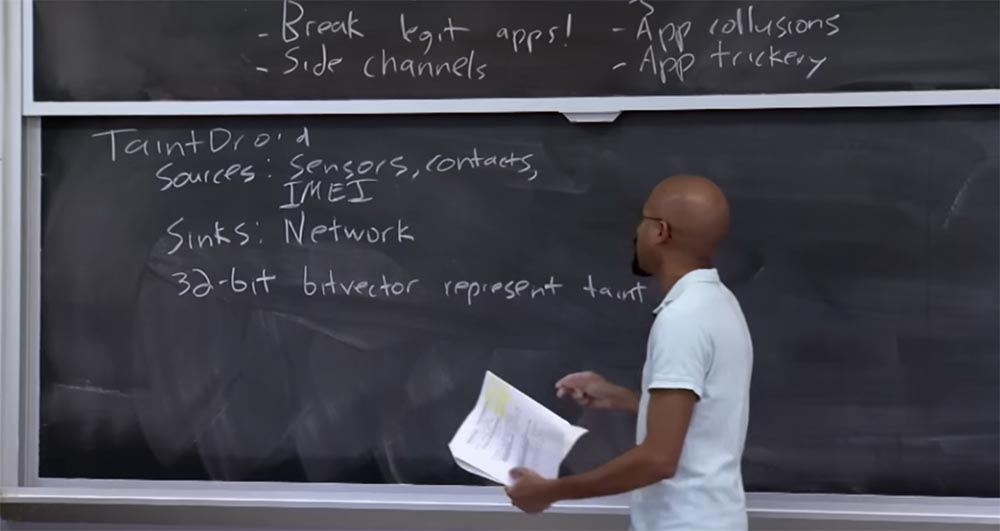

Let's take a look at how TaintDroid works. As I mentioned earlier, TaintDroid will keep track of all your sensitive information as it spreads through the system. So, TaintDroid distinguishes what is called the "information sources" Information sources and "information sinks" Information sinks. Sources of information generate confidential data. Usually these are sensors - GPS, accelerometer and the like. It can be your contact list, IMEI, everything that can connect you, a particular user, with your real phone. These are devices that generate infectious information, called sources of infected data - Taint source.

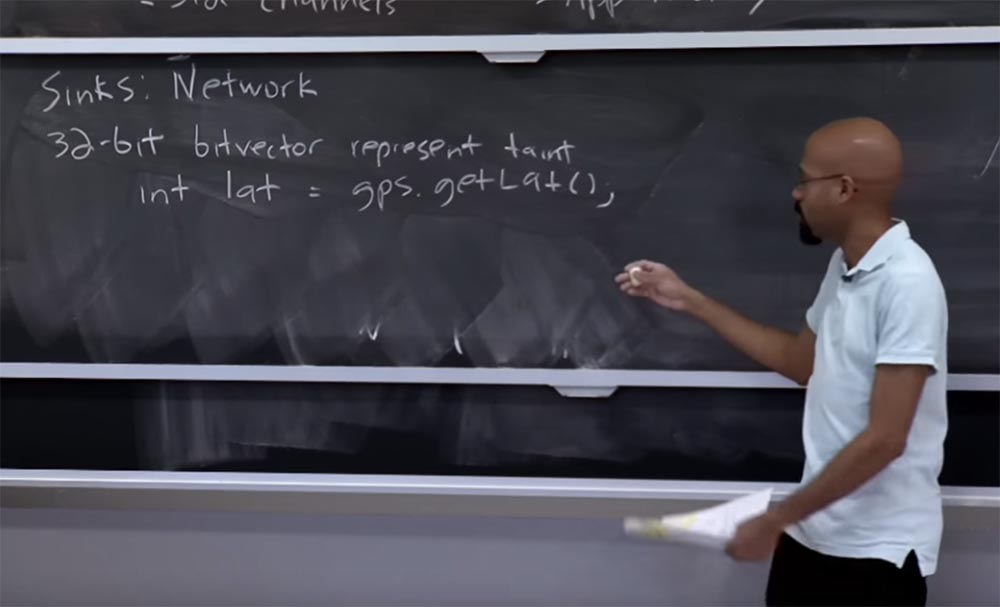

In this case, information sinks are places where infected data should not leak. In the case of TaintDroid, the main absorber is the network. Later we will talk about the fact that you can imagine more places where the information flows, but the network has a special place in TaintDroid. In a more general-purpose system than a phone, there may be other Information sinks, but TaintDroid is designed to prevent leaks to the network.

In TaintDroid, a 32-bit bitvector is used to represent Taint infection. This means that you can have no more than 32 separate sources of infection.

Therefore, each confidential information will have a unit located in a certain position if it has been infected by a particular source of infection. For example, it was obtained from GPS data, from something from your contact list, and so on and so forth.

Interestingly, the 32 sources of infection are actually not that many. The question is whether this number is large enough for this particular system and whether it is large enough for general systems suffering from information leaks. In the particular case of TaintDroid, 32 sources of infection are a reasonable value, because this problem concerns a limited flow of information.

Considering all the sensors that are present on your phone, confidential databases and the like, 32 seems to be the correct value in terms of storing these infected flags. As we will see from the implementation of this system, 32 is actually a very convenient number, because it corresponds to 32 bits, an integer with which you can effectively construct these flags.

However, as we will discuss later, if you want to give programmers the ability to control information leaks, that is, to specify your own sources of infection and your own types of leaks, then 32 bits may not be enough. In this case, you should think about including more complex runtime support to indicate more space.

Roughly speaking, when you look at how an infection flows through the system, in a general sense, it happens from right to left. I will give a simple example. If you have an operator, for example, you declare an integer variable that is equal to the latitude of your location: Int lat = gps.getLat (), then essentially the thing to the right of the equal sign generates a value that has some associated with her infection.

So a specific flag will be set that says: “hey, this value that I return comes from a confidential source”! So the infection will come from here, on the right side, and go here, to the left, to infect this part of lat. Here is how it looks in the eyes of a human developer who writes source code. However, the Dalvik virtual machine uses this register format at a lower level to create programs, and this is actually how the taint semantics are implemented in reality.

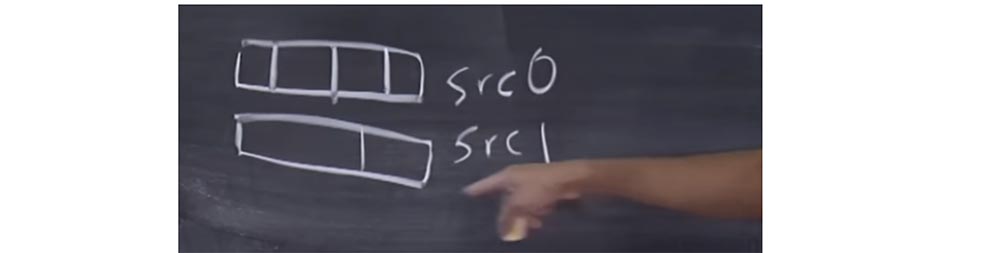

In the table to one of the lecture articles there is a large list of commands describing how infection affects these types of commands. For example, you can imagine that you have a move-op operation that points to the destination dst and the source srs. In the Dalvik virtual machine, on an abstract computing engine, this can be considered as registers. As I have already said, the infection goes from the right side to the left side, so in this case, when the Dalvik interpreter executes the instructions on the right side, it examines the taint label of the sourse parameter and assigns it to the dst parameter.

Suppose that we have another instruction in the form of a binary-op binary operation that performs something like an addition. We have one dst destination and two sources: srs0 and srs1. In this case, when the Dalvik interpreter processes this instruction, it takes taint from both sources, combines them, and then assigns this union to the destination dst.

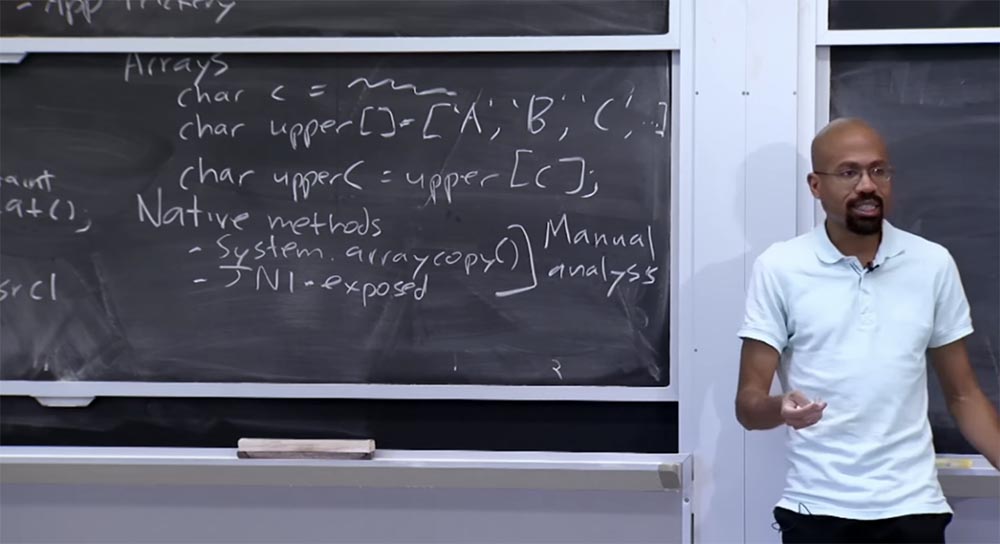

It is quite simple. The table lists the different types of instructions that you will see, but as a first approximation, these are the most common ways in which an infection spreads through the system. Let's look at the particularly interesting cases that are mentioned in the article. One such special case is associated with arrays.

Suppose you have a command char c, which assigns a certain value C. In this case, the program declares a certain array of char upper [] which will contain capital letters "A", "B", "C": char upper [] = ["A "," B "," C "]

A very common thing in code is to index into an array like this, using C directly, because, as we all know, Kernighan and Richie teach that mostly characters are whole numbers. So you can imagine that there is some char upperC code that says that the capital versions of these symbols “A”, “B”, “C” correspond to specific indices in this table: char upperC = upper [C]

This raises the question of which infection should get upperC in this case. It seems that in previous cases we had everything simple, but in this case we have a lot of things happening. We have an array ["A", "B", "C"], which may have a type of infection and we have this symbol C, which may also have its own type of infection. Here, the Dalvik virtual machine acts in the same way as it did in the binary-op case. It assigns a combination of infections [C] and an array to the upperC symbol.

Intuition tells us that in order to create upperC, we need to know something about the upper [] array. We need to know something about this index [C]. Therefore, I think that this thing, upperC should be as confidential as these two things combined.

Student:can you explain again what exactly does taint mean for move op and binary op operations?

Professor: let's look at the move op move operation. Imagine that this operation is srs ... actually, let me clarify. Each variable, I will now tell you what this variable is, is an integer number that has a bunch of bits set in accordance with the existing taint. Imagine that each of these values flying around has a bound integer flying around it, in which several bits are set.

Suppose that this srs source had two sets of bits related to the fact that it was infected with two things. The interpreter will look at this source srs as an associated integer and say: “I have to take this integer in which 2 sets of bits are set and make this number the infected tag for srs”. So this is a simple case.

A more difficult case is to understand what this taint union looks like. Imagine that we have two sources, srs0 and srs1, and each one contains bits of taint that look like this:

\

\ Then the dst destination will contain all these bits:

\

\As I said, one of the reasons why we want to represent all sources of infection in 32 bits is that in this case, we simply perform bitwise operations. Thus, it really reduces the overhead of implementing these infected bits. If you need to display a larger universe of taints, you may have great difficulty, because you can no longer use these effective bitwise operations.

So, how arrays work is a bit like processing an operation in the case of binary-op. At the same time, upperC will receive a union of bits from [C] and [“A”, “B”, “C”]. The design solution for TaintDroid is that developers associate a separate taint infecting table with each array. In other words, they try not to infect certain elements. This allows them to save storage space, because for each array they declare only one 32-bit entity that “floats” around this array and represents all the infections belonging to this array.

One of the questions is why it is safe to not have a more accurate system for working with taint. After all, an array is a data set, so why don't we have a bunch of labels for every thing that is in this array? The answer is that by associating only one taint tag with an array and making it the union of all the things contained inside, we get a re-evaluation of the infection. In other words, if you have an array containing two things inside you, and this array is infected by combining the infections of these two things, then this approach to the problem is redundant. Because if something gets access to one of these things, it does not care about infecting the second thing.

However, the redundant approach is preferable to the insufficient approach, because in this case we will always be right. In other words, if we underestimate the amount of infection that exists in some element, then we can accidentally reveal what we did not want to reveal. When overestimating the infection, the worst thing that can happen is that we will prevent the leakage beyond the limits of the phone that could be easily transferred over the network. So in this case we make a mistake in favor of security.

Another example that is mentioned in the article is a kind of a special case of the spread of infection, in which such things as Native methods, or machine-dependent methods are mentioned. Native methods can exist inside the virtual machine itself. For example, the Dalvik virtual machine provides functions such as system.arraycopy (), so we can transfer something through them, and inside the virtual machine for speed reasons, this is implemented as C or C ++ code. This is one of the examples of the Native method that you can have.

You can also have a machine-dependent method called JNI. JNI, or Java Native Interface - a mechanism for running C or C ++ code on a Java virtual machine. Essentially, it allows Java code to invoke non-Java code. This interface is implemented using x86, ARM or similar things and has a whole bunch of calls that allow two types of stacks to interact.

The problem with these machine-dependent methods from the point of view of tracking taint is that this machine code cannot be executed directly by the Dalvik interpreter. Often this is not even Java code, but C or C ++ code. This means that as soon as the executable stream falls into one of these Native methods, TaintDroid cannot spread the infection as it does in the case of Java code.

\

\This seems a bit problematic, because these things look like black boxes, and you want to make sure that as a result of applying these methods you still get new taint. The way in which the authors solve this problem is a manual analysis. Essentially they say that there are not too many of these methods. For example, the Dalvik virtual machine provides only a certain number of functions, such as system.arraycopy (), so the developer can look at a relatively small number of calls and find out where the taint relationship should be. That is, the developer can look at arraycopy () and say: “since I know the semantics of this operation, I know that we have to infect the values returned by this function by setting this function to have input values in a certain way”.

What is the scale of this? If there is a relatively small number of things that are processed in the virtual machine by machine code, everything will be fine. Because if you assume that the interface of the Dalvik virtual machine does not change very often, then in fact it is not too burdensome to consider all these things manually, study the documentation and figure out how the infection will spread.

Using the JNI machine-dependent method may or may not be more troublesome. The authors of the article provide some empirical evidence that suggests that many applications do not contain codes written in C or C ++, so this will not be a big problem.

They also argue that for some methods it is possible to automate the way in which infection calculations are performed. For example, if machine-dependent methods use only integers or strings of such numbers, then you can simply develop a standard thing that marks the output values of functions with tags, obtained by summing all infections of the input values. So in practice it will not create any problems.

26:25 min.

The course MIT "Security of computer systems." Lecture 21: "Tracking data", part 2

Full version of the course is available here .

Thank you for staying with us. Do you like our articles? Want to see more interesting materials? Support us by placing an order or recommending to friends, 30% discount for Habr's users on a unique analogue of the entry-level servers that we invented for you: The whole truth about VPS (KVM) E5-2650 v4 (6 Cores) 10GB DDR4 240GB SSD 1Gbps from $ 20 or how to share the server? (Options are available with RAID1 and RAID10, up to 24 cores and up to 40GB DDR4).

VPS (KVM) E5-2650 v4 (6 Cores) 10GB DDR4 240GB SSD 1Gbps until January for free if you pay for a period of six months, you can order here .

Dell R730xd 2 times cheaper? Only here2 x Intel Dodeca-Core Xeon E5-2650v4 128GB DDR4 6x480GB SSD 1Gbps 100 TV from $ 249 in the Netherlands and the USA! Read about How to build an infrastructure building. class c using servers Dell R730xd E5-2650 v4 worth 9000 euros for a penny?