Automatic compression of stored data in redis

The problem is that during peak hours the network interface cannot cope with the transmitted amount of data.

Of the available options, we chose to compress the stored data.

tl;dr: memory usage dropped by more than 50% and network traffic by more than 50%. This post is about a plugin for predis that automatically compresses data before sending it to redis.

As you know, redis uses a binary-safe text protocol and stores data in its original form. In our application, redis stores serialized PHP objects and even pieces of HTML, which suits compression well: the data is homogeneous and contains many repeating groups of characters.
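To get a feel for how well this kind of data compresses, here is a minimal sketch. The records below are hypothetical sample data, not the article's real workload, and the zlib extension is assumed to be available; the actual ratio depends entirely on your data.

```php
<?php
declare(strict_types=1);

// Hypothetical sample: a list of similar records with small HTML fragments,
// roughly the shape of data an application might cache.
$records = [];
for ($i = 0; $i < 200; $i++) {
    $records[] = [
        'id' => $i,
        'title' => 'Item ' . $i,
        'html' => '<div class="item"><span class="title">Item ' . $i . '</span></div>',
    ];
}

$serialized = serialize($records);
$compressed = gzencode($serialized);
if ($compressed === false) {
    throw new RuntimeException('gzencode failed');
}

$originalSize = strlen($serialized);
$compressedSize = strlen($compressed);

// Print both sizes and the compressed size as a share of the original.
printf(
    "original: %d bytes, gzip: %d bytes (%.1f%% of original)\n",
    $originalSize,
    $compressedSize,
    100 * $compressedSize / $originalSize
);
```

Running the same measurement on a dump of your own values is a quick way to decide whether compression is worth enabling at all.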

While looking for a solution, we found a discussion in the group: the developers do not plan to add compression to the protocol... So we will do it ourselves.

So, the concept: if the data being saved to redis is larger than N bytes, compress it with gzip before saving. When reading data from redis, check the first bytes for a gzip header and, if it is found, decompress the data before passing it to the application.
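The concept can be sketched without redis or predis at all. The function names `packForStorage` and `unpackFromStorage` are hypothetical and exist only for this illustration; the plugin itself is structured differently.

```php
<?php
declare(strict_types=1);

// gzip ID bytes (1f 8b) followed by the "deflate" method byte (08).
const GZIP_MAGIC = "\x1f\x8b\x08";

/** Compress $value with gzip only if it is longer than $threshold bytes. */
function packForStorage(string $value, int $threshold): string
{
    if (strlen($value) <= $threshold) {
        return $value;
    }
    $compressed = gzencode($value);
    if ($compressed === false) {
        throw new RuntimeException('Compression failed');
    }
    return $compressed;
}

/** Decompress only values that start with the gzip header. */
function unpackFromStorage(string $stored): string
{
    if (strncmp($stored, GZIP_MAGIC, 3) !== 0) {
        return $stored;
    }
    $decompressed = gzdecode($stored);
    if ($decompressed === false) {
        throw new RuntimeException('Decompression failed');
    }
    return $decompressed;
}

$short = 'hello';
$long = str_repeat('<li class="row">cached fragment</li>', 100);

$storedShort = packForStorage($short, 2048); // below the threshold, stored as-is
$storedLong = packForStorage($long, 2048);   // above the threshold, gzipped
```

One consequence of this design: an uncompressed value that happens to start with the same three bytes will be treated as gzip on read, so the approach assumes the application does not store such values below the threshold.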

Since we use predis to work with redis, the plugin was written for it.

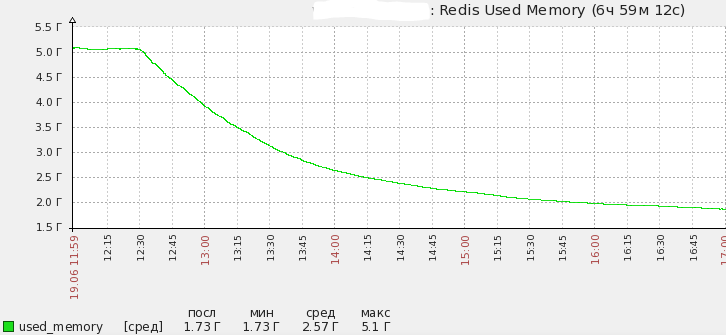

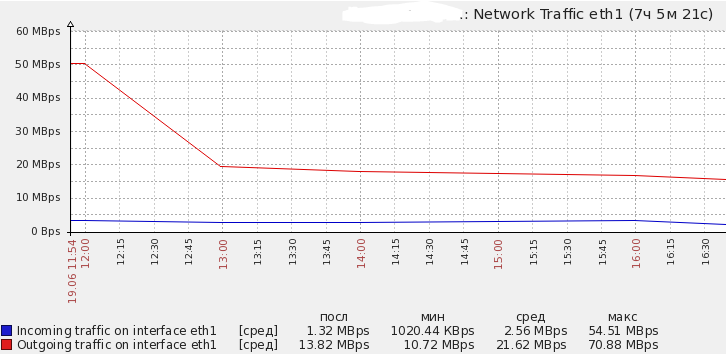

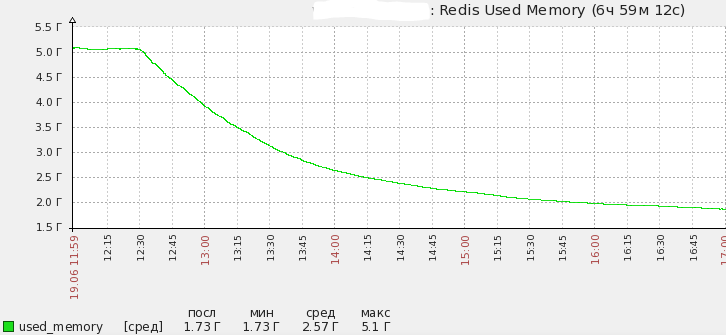

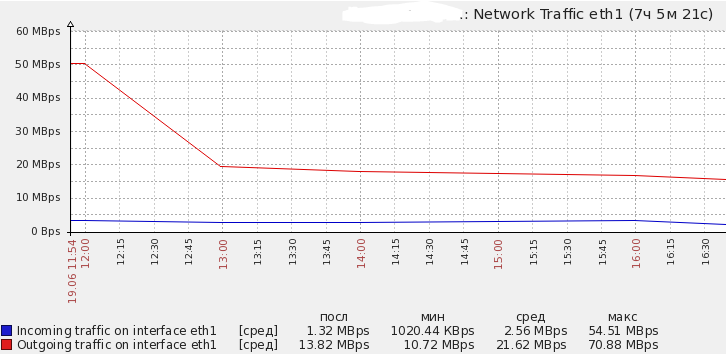

The graphs above show the results of enabling the plugin on one of the cluster instances.

Let's start small and write the mechanism for working with compression. `CompressorInterface` declares methods for deciding whether to compress, compressing, deciding whether to decompress, and decompressing. The class constructor takes a threshold in bytes, above which compression is enabled. This interface lets you plug in your favorite compression algorithm yourself, for example, good old WinRAR.

We move the input size check into the `AbstractCompressor` class so as not to duplicate it in each implementation.

AbstractCompressor:

```php
abstract class AbstractCompressor implements CompressorInterface
{
    const BYTE_CHARSET = 'US-ASCII';

    protected $threshold;

    public function __construct(int $threshold)
    {
        $this->threshold = $threshold;
    }

    public function shouldCompress($data): bool
    {
        if (!\is_string($data)) {
            return false;
        }

        // A single-byte charset makes mb_strlen() count bytes, not characters.
        return \mb_strlen($data, self::BYTE_CHARSET) > $this->threshold;
    }
}
```

We pass a single-byte encoding to `mb_strlen` to avoid possible problems with `mbstring.func_overload` and to prevent attempts to detect the encoding from the data automatically.

We make a `gzencode`-based implementation for compression; its output starts with the magic bytes `"\x1f\x8b\x08"` (by them we will understand that the string needs to be unpacked).

GzipCompressor:

```php
class GzipCompressor extends AbstractCompressor
{
    public function compress(string $data): string
    {
        $compressed = @\gzencode($data);
        if ($compressed === false) {
            throw new CompressorException('Compression failed');
        }

        return $compressed;
    }

    public function isCompressed($data): bool
    {
        if (!\is_string($data)) {
            return false;
        }

        // The data counts as compressed if it starts with the gzip magic bytes.
        return 0 === \mb_strpos($data, "\x1f" . "\x8b" . "\x08", 0, self::BYTE_CHARSET);
    }

    public function decompress(string $data): string
    {
        $decompressed = @\gzdecode($data);
        if ($decompressed === false) {
            throw new CompressorException('Decompression failed');
        }

        return $decompressed;
    }
}
```

A nice bonus: if you use RedisDesktopManager, it automatically unpacks gzip when viewing values. I tried to look at the plugin's output in it and, until I found out about this feature, thought the plugin was not working :)
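The two details the compressor relies on, byte counting through a single-byte charset and the gzip header bytes, can be checked in isolation. This is a small sketch that assumes the mbstring and zlib extensions are loaded; the variable names are illustrative only.

```php
<?php
declare(strict_types=1);

// "café" written with an escape so the result does not depend on file encoding:
// 4 characters, 5 bytes in UTF-8.
$text = "caf\u{e9}";

$chars = mb_strlen($text, 'UTF-8');    // counts characters
$bytes = mb_strlen($text, 'US-ASCII'); // single-byte charset: counts bytes

// First three bytes of any gzencode() output, shown as hex.
$header = bin2hex(substr((string) gzencode('any payload'), 0, 3));

var_dump($chars, $bytes, $header);
```

Comparing against the byte count matters because the threshold is meant to reflect what actually travels over the network, not the number of characters.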

predis has a Processor mechanism that lets you change command arguments before they are sent to the storage; we will use it. By the way, the key prefix feature in the standard predis package is built on this mechanism: it lets you dynamically add a string to all keys.

```php
class CompressProcessor implements ProcessorInterface
{
    private $compressor;

    public function __construct(CompressorInterface $compressor)
    {
        $this->compressor = $compressor;
    }

    public function process(CommandInterface $command)
    {
        if ($command instanceof CompressibleCommandInterface) {
            // Hand the compressor to the command so it can unpack responses later.
            $command->setCompressor($this->compressor);

            if ($command instanceof ArgumentsCompressibleCommandInterface) {
                $arguments = $command->compressArguments($command->getArguments());
                $command->setRawArguments($arguments);
            }
        }
    }
}
```

The processor looks for commands that implement one of the interfaces:

1. `CompressibleCommandInterface` - shows that the command supports compression and declares the method through which the implementation receives a `CompressorInterface`.
2. `ArgumentsCompressibleCommandInterface` - extends the first interface and shows that the command supports argument compression.

The logic turned out strange, don't you think? Why is argument compression explicit and invoked by the processor, while the logic for unpacking responses is not? Take a look at the code predis uses to create a command (`\Predis\Profile\RedisProfile::createCommand()`):

```php
public function createCommand($commandID, array $arguments = array())
{
    // checks and the lookup of the command implementation are omitted

    $command = new $commandClass();
    $command->setArguments($arguments);

    if (isset($this->processor)) {
        $this->processor->process($command);
    }

    return $command;
}
```

Because of this logic, we have several problems.

The first is that the processor can affect a command only after it has already received its arguments. This does not allow us to pass an external dependency into it (`GzipCompressor` in our case, but it could be any other mechanism that has to be initialized outside predis, for example an encryption system or a data-signing mechanism). Because of this, an interface with a method for compressing arguments appeared.

The second problem is that the processor cannot affect how the command handles the server response. Because of this, the unpacking logic has to live in `CommandInterface::parseResponse()`, which is not entirely correct.

Together, these two problems mean that the unpacking mechanism is stored inside the command and the unpacking logic itself is not explicit. I think the processor in predis should be split into two stages: a preprocessor (to transform the arguments before they are sent to the server) and a postprocessor (to transform the server response). I shared these thoughts with the predis developers.
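To make the proposed split concrete, here is a rough sketch of what a two-stage processor could look like. Everything in it is hypothetical: `PreProcessorInterface`, `PostProcessorInterface` and `GzipValueProcessor` do not exist in predis, and a plain array stands in for the server.

```php
<?php
declare(strict_types=1);

// Hypothetical interfaces, not part of predis.
interface PreProcessorInterface
{
    /** Transform command arguments before they are sent to the server. */
    public function preProcess(string $commandId, array $arguments): array;
}

interface PostProcessorInterface
{
    /** Transform the server response before it reaches the application. */
    public function postProcess(string $commandId, $response);
}

final class GzipValueProcessor implements PreProcessorInterface, PostProcessorInterface
{
    private int $threshold;

    public function __construct(int $threshold)
    {
        $this->threshold = $threshold;
    }

    public function preProcess(string $commandId, array $arguments): array
    {
        // For SET the value is the second argument (index 1).
        if ($commandId === 'SET' && isset($arguments[1]) && is_string($arguments[1])
            && strlen($arguments[1]) > $this->threshold) {
            $compressed = gzencode($arguments[1]);
            if ($compressed === false) {
                throw new RuntimeException('Compression failed');
            }
            $arguments[1] = $compressed;
        }
        return $arguments;
    }

    public function postProcess(string $commandId, $response)
    {
        // Only GET responses that start with the gzip header are unpacked.
        if ($commandId === 'GET' && is_string($response)
            && strncmp($response, "\x1f\x8b\x08", 3) === 0) {
            $decompressed = gzdecode($response);
            if ($decompressed === false) {
                throw new RuntimeException('Decompression failed');
            }
            return $decompressed;
        }
        return $response;
    }
}

// Simulated round trip: an in-memory array plays the role of redis.
$processor = new GzipValueProcessor(16);
$storage = [];
$value = str_repeat('abc', 50);

[$key, $stored] = $processor->preProcess('SET', ['page:1', $value]);
$storage[$key] = $stored;

$result = $processor->postProcess('GET', $storage['page:1']);
```

With such a split, both directions of the transformation live in one object that receives its dependencies through the constructor, and the commands themselves would not need to know about compression.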

Typical Set command code:

```php
// Fragment of the command class body.
use CompressibleCommandTrait;
use CompressArgumentsHelperTrait;

public function compressArguments(array $arguments): array
{
    // The value is the second argument of SET (index 1).
    $this->compressArgument($arguments, 1);

    return $arguments;
}
```

Typical Get command code:

```php
// Fragment of the command class body.
use CompressibleCommandTrait;

public function parseResponse($data)
{
    if (!$this->compressor->isCompressed($data)) {
        return $data;
    }

    return $this->compressor->decompress($data);
}
```

How to install and start using:

```
composer require b1rdex/predis-compressible
```

```php
use B1rdex\PredisCompressible\CompressProcessor;
use B1rdex\PredisCompressible\Compressor\GzipCompressor;
use B1rdex\PredisCompressible\Command\StringGet;
use B1rdex\PredisCompressible\Command\StringSet;
use B1rdex\PredisCompressible\Command\StringSetExpire;
use B1rdex\PredisCompressible\Command\StringSetPreserve;
use Predis\Client;
use Predis\Configuration\OptionsInterface;
use Predis\Profile\Factory;
use Predis\Profile\RedisProfile;

// strings with length > 2048 bytes will be compressed
$compressor = new GzipCompressor(2048);

$client = new Client([], [
    'profile' => function (OptionsInterface $options) use ($compressor) {
        $profile = Factory::getDefault();
        if ($profile instanceof RedisProfile) {
            $processor = new CompressProcessor($compressor);
            $profile->setProcessor($processor);

            $profile->defineCommand('SET', StringSet::class);
            $profile->defineCommand('SETEX', StringSetExpire::class);
            $profile->defineCommand('SETNX', StringSetPreserve::class);
            $profile->defineCommand('GET', StringGet::class);
        }

        return $profile;
    },
]);
```

Upd: link to the plugin on GitHub.