The story of how cognitive technologies help preserve your karma

Recently a friend and I argued about whether it is right to leave negative reviews and rate someone else's work poorly. Say you come to a bank and the consultant is rude to you. I am convinced it is worth doing, because without that feedback the person will keep being rude. My friend believes it is a big minus to your karma: you shouldn't offend people, and in time they will figure everything out themselves. Around the same time we held a hackfest for partners, where I saw a solution that could save everyone's karma. It would be a sin not to share it. As you may have guessed from the title, under the cut we will talk about development based on cognitive services.

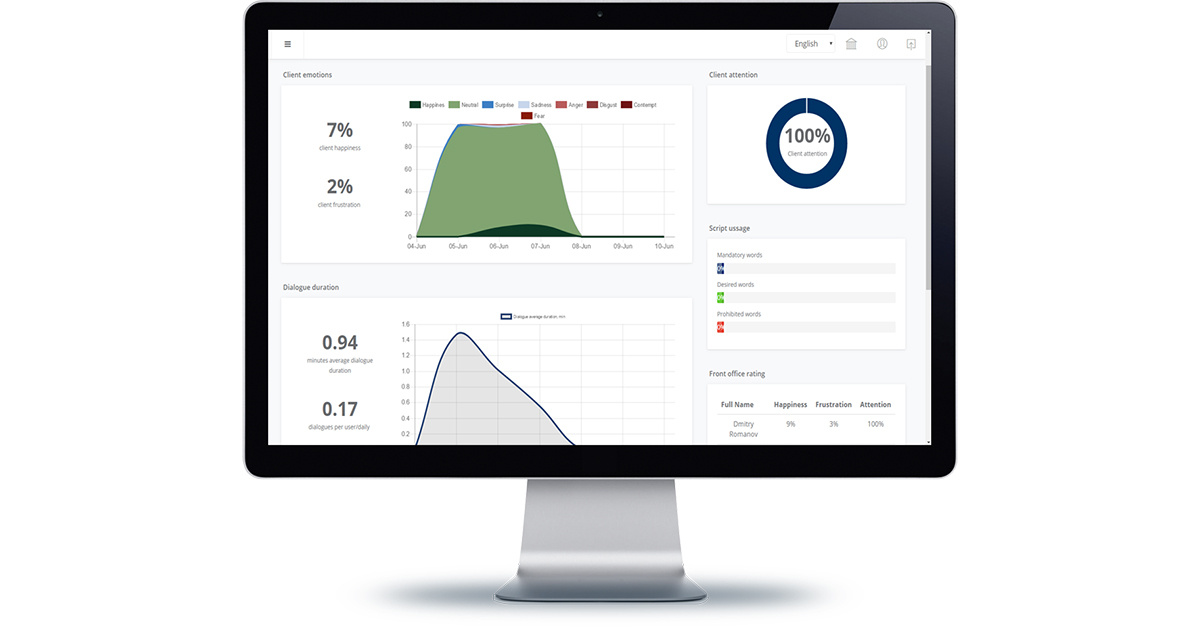

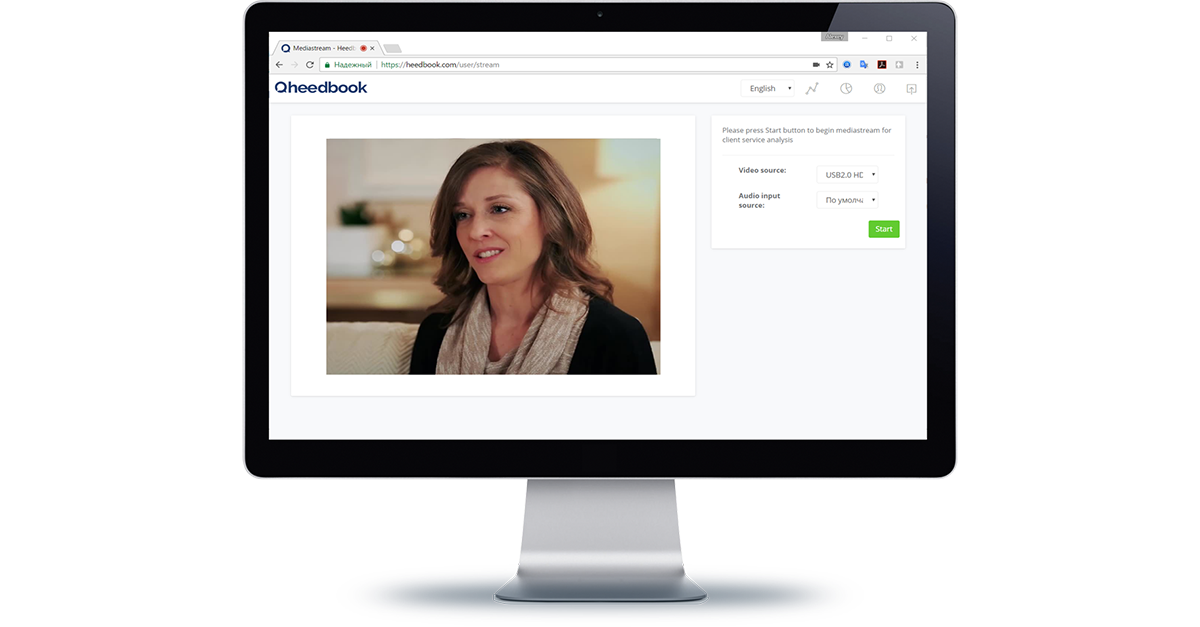

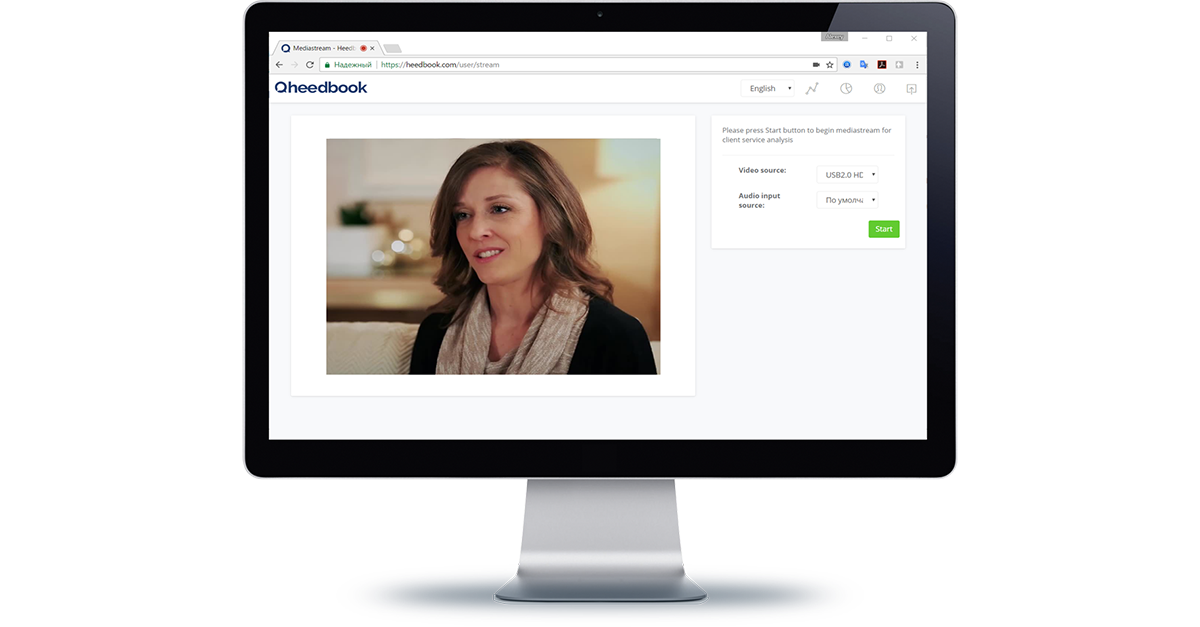

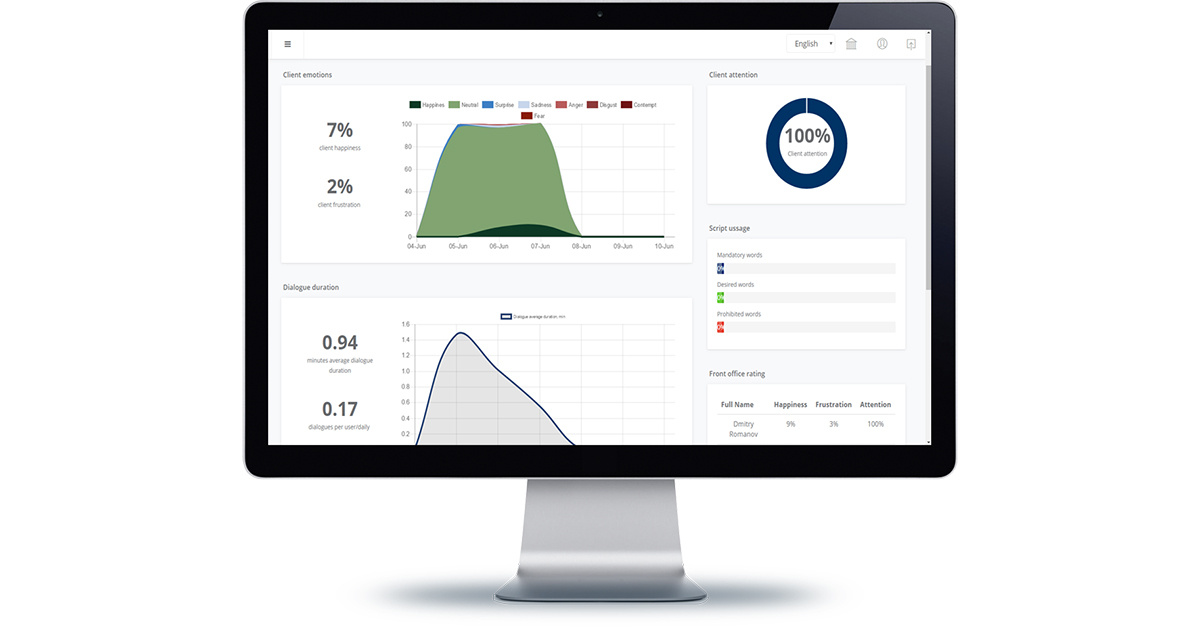

Introduction

This is where the usual text would go: "you know how important it is for any company to evaluate its quality of service; it is the basis of development." In my opinion these are rather banal truths, so let's skip them.

Save karma, cheap

The Heedbook service, which I will talk about today, has one very cool advantage over other ways of evaluating how employees work with clients: it assesses the client's emotions automatically and in real time. So, coming back to my friend, his tolerance will not save the consultant from an honest assessment of the work. The wolves are fed, the sheep are safe, and my friend's karma is intact too.

How it works:

1. A front-line employee of a bank (or a pharmacy, an MFC, a store, or a similar business) logs in to the system through a browser at the beginning of the working day.

2. A client comes up to the employee, for example, my friend.

3. The system receives the video stream from the webcam and analyzes it in real time in the background.

4. The data is processed by intelligent recognition systems for emotions, speech, and other client parameters.

5. Based on the results of the analysis, the system provides detailed analytics on the structure of the client's emotions and the share of positive and negative ones, the client's attention to the employee, the content of the dialogue, and the use of the service script or of forbidden phrases.

6. The head of the office and staff at the parent company receive detailed information about the quality of customer service by manager and by customer. (And here we see our consultant starting to get nervous.)

In addition, for each employee the system determines the average customer service time, the number of customers served, and the demographic structure of the client base.

Another interesting feature: the director can connect to the video stream from the workplaces of front-line employees, and can later view recordings of the dialogs together with detailed analytics on them. So a new scenario appears in our story: a consultant starts being rude to my friend, then suddenly his eyes widen and he becomes sweet and polite. )

And one last interesting detail: Heedbook has a rating system for employees.
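To make step 5 a bit more concrete, here is a minimal sketch of how the share of positive and negative emotions in one dialog could be computed from per-frame results. This is not Heedbook's actual code: the split of emotions into positive and negative, the `Share` helper, and the sample data are assumptions made purely for illustration. Only the emotion names themselves come from the Face API.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical grouping of Face API emotion names; the article does not say
// which emotions Heedbook counts as positive or negative.
var positive = new HashSet<string> { "Happiness", "Surprise" };
var negative = new HashSet<string> { "Anger", "Contempt", "Disgust", "Fear", "Sadness" };

// Share of frames (0..1) whose dominant emotion belongs to the given group.
double Share(IReadOnlyCollection<string> frames, ISet<string> emotionGroup) =>
    frames.Count == 0 ? 0.0 : (double)frames.Count(emotionGroup.Contains) / frames.Count;

// Dominant emotion of each analyzed frame in one dialog (made-up sample data).
var dialogFrames = new[] { "Happiness", "Neutral", "Anger", "Happiness" };

double positiveShare = Share(dialogFrames, positive);
double negativeShare = Share(dialogFrames, negative);
Console.WriteLine($"positive={positiveShare:F2}, negative={negativeShare:F2}");
```

Neutral frames fall into neither group here, so the two shares do not have to add up to one.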

How it works: through the eyes of the developer

Microservice architecture Azure Functions

Together with Dima Soshnikov, we helped the team with part of the solution design. The first thing they decided was to move away from a monolithic architecture and build the system on microservices (as you can see from my recent articles, I find this a very interesting topic). For this they used Azure Functions (AF). We also considered WebJobs, but they have performance limitations and their pricing is not based on the number of operations performed.

The main AF development environment is the online function editor on the Azure portal. Since the end of May 2017 you can also create AF in Visual Studio 2017 Update 3.

Since AF is a new Microsoft product, its documentation is not yet complete, so below we walk through one AF from the Heedbook project. This should save you time if you decide to build a microservice architecture on Azure.

An AF can be triggered by an HTTP request, the appearance of a blob in Azure Blob storage, an action in OneDrive, or simply a timer. The project uses almost all of these trigger types. We also implemented AF cascades, where the work of one AF launches another, so that together they form a single business process for data analysis.
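As an illustration of such a cascade, one function can write its result to a blob container that another function watches. The fragment below is a sketch of a function.json for the first function in such a chain, not the Heedbook configuration: the binding names `timer` and `report`, the `reports` container, and the five-minute schedule are assumptions. The connection name is simply reused from the example later in this article.

```json
{
  "bindings": [
    {
      "name": "timer",
      "type": "timerTrigger",
      "direction": "in",
      "schedule": "0 */5 * * * *"
    },
    {
      "name": "report",
      "type": "blob",
      "direction": "out",
      "path": "reports/{rand-guid}.json",
      "connection": "heedbookhackfest_STORAGE"
    }
  ],
  "disabled": false
}
```

A second function with a `blobTrigger` binding on `reports/{name}` would then start each time the first one writes a report, which is one simple way to chain functions without them calling each other directly.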

Our example AF is triggered by the appearance of a blob, in this case a picture. With this AF we determine the number of people in the picture and their emotions, using the Microsoft Face API cognitive service.

First you need to reference the Cognitive Services libraries. In the online AF editor you do this manually by creating a project.json file and listing all the necessary dependencies there:

    {
      "frameworks": {
        "net46": {
          "dependencies": {
            "Microsoft.ProjectOxford.Common": "1.0.324",
            "Microsoft.ProjectOxford.Face": "1.2.5"
          }
        }
      }
    }

When creating AF in Visual Studio 2017 Update 3, we simply add the necessary dependencies via NuGet.

Next we need to register the AF trigger and output parameters. In our case the trigger is the appearance of a blob in a specific container, and the output is a record of the recognition results in an Azure SQL table. This is done in the function.json file:

    {
      "bindings": [
        {
          "name": "InputFace",
          "type": "blobTrigger",
          "direction": "in",
          "path": "frames/{name}",
          "connection": "heedbookhackfest_STORAGE"
        },
        {
          "type": "apiHubTable",
          "name": "FaceData",
          "dataSetName": "default",
          "tableName": "FaceEmotionGuid",
          "connection": "sql_SQL",
          "direction": "out"
        }
      ],
      "disabled": false
    }

So, the Azure Functions code itself!

    #r "System.IO"

    using System.IO;
    using Microsoft.ProjectOxford.Face;
    using Microsoft.ProjectOxford.Common.Contract;

    public static async Task Run(Stream InputFace, string name, IAsyncCollector<FaceEmotion> FaceData, TraceWriter log)
    {
        log.Info($"Processing face {name}");

        // The blob name encodes "<DialogId>-<yyyyMMddHHmmss>"
        var namea = Path.GetFileNameWithoutExtension(name).Split('-');

        // The original listing omits the key; the client needs a Face API subscription key
        var cli = new FaceServiceClient("<Face API subscription key>");
        var res = await cli.DetectAsync(InputFace, false, false,
            new FaceAttributeType[] { FaceAttributeType.Age, FaceAttributeType.Emotion, FaceAttributeType.Gender });

        // Take the largest face in the frame (widest bounding box first)
        var fc = (from f in res
                  orderby f.FaceRectangle.Width descending
                  select f).FirstOrDefault();

        if (fc != null)
        {
            var R = new FaceEmotion();
            R.Time = DateTime.ParseExact(namea[1], "yyyyMMddHHmmss", System.Globalization.CultureInfo.InvariantCulture.DateTimeFormat);
            R.DialogId = int.Parse(namea[0]);
            var t = GetMainEmotion(fc.FaceAttributes.Emotion);
            R.EmotionType = t.Item1;
            R.FaceEmotionGuidId = Guid.NewGuid();
            R.EmotionValue = (int)(100 * t.Item2);
            R.Sex = fc.FaceAttributes.Gender.ToLower().StartsWith("m");
            R.Age = (int)fc.FaceAttributes.Age;
            await FaceData.AddAsync(R);
            log.Info($" - recorded face, age={fc.FaceAttributes.Age}, emotion={R.EmotionType}");
        }
        else log.Info(" - no faces found");
    }

    // Returns the name and score of the emotion with the highest score
    public static Tuple<string, float> GetMainEmotion(EmotionScores s)
    {
        float m = 0;
        string e = "";
        foreach (var p in s.GetType().GetProperties())
        {
            if ((float)p.GetValue(s) > m)
            {
                m = (float)p.GetValue(s);
                e = p.Name;
            }
        }
        return new Tuple<string, float>(e, m);
    }

    // Row written to the FaceEmotionGuid table
    public class FaceEmotion
    {
        public Guid FaceEmotionGuidId { get; set; }
        public DateTime Time { get; set; }
        public string EmotionType { get; set; }
        public float EmotionValue { get; set; }
        public int DialogId { get; set; }
        public bool Sex { get; set; }
        public int Age { get; set; }
    }
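The function above relies on a naming convention for frame blobs: the file name is the dialog id and a `yyyyMMddHHmmss` timestamp joined by a hyphen. As written, it throws on any blob whose name does not match. The sketch below shows the same parsing in a defensive form. The helper name `TryParseFrameName` and the sample blob name are hypothetical, and whether Heedbook validates names this way is not stated in the article.

```csharp
using System;
using System.Globalization;
using System.IO;

// Splits a frame blob name such as "42-20170601123045.jpg" into the dialog id
// and the capture time, following the "<DialogId>-<yyyyMMddHHmmss>" pattern.
// Returns false instead of throwing when the name does not match.
static bool TryParseFrameName(string blobName, out int dialogId, out DateTime time)
{
    dialogId = 0;
    time = default(DateTime);

    var parts = Path.GetFileNameWithoutExtension(blobName).Split('-');
    if (parts.Length != 2)
        return false;

    return int.TryParse(parts[0], NumberStyles.None, CultureInfo.InvariantCulture, out dialogId)
        && DateTime.TryParseExact(parts[1], "yyyyMMddHHmmss", CultureInfo.InvariantCulture,
                                  DateTimeStyles.None, out time);
}

// Usage with a made-up blob name.
int id;
DateTime capturedAt;
bool ok = TryParseFrameName("42-20170601123045.jpg", out id, out capturedAt);
Console.WriteLine(ok ? $"dialog {id} at {capturedAt:O}" : "unexpected blob name");
```

With a check like this, a stray file dropped into the container could be logged and skipped rather than failing the whole function invocation.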

In this case, it is an asynchronous procedure that works with the Cognitive Services (CS) Face API. The AF receives the blob as a Stream and passes it to CS:

    var res = await cli.DetectAsync(InputFace, false, false,
        new FaceAttributeType[] { FaceAttributeType.Age, FaceAttributeType.Emotion, FaceAttributeType.Gender });

Next, it selects the largest face in the frame (note the descending order, so that the widest face comes first):

    var fc = (from f in res
              orderby f.FaceRectangle.Width descending
              select f).FirstOrDefault();

And it writes the recognition results to the database:

    await FaceData.AddAsync(R);

It's simple, isn't it? The future is in microservices. )
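To see the selection logic in isolation, here is a small self-contained sketch that picks the largest face and its dominant emotion from plain in-memory data, without calling the Face API. The tuples only mimic the shape of a detection result and the numbers are made up. Unlike the function above, this sketch ranks faces by bounding-box area rather than by width alone, and looks up the top emotion in a dictionary instead of using reflection. Both are choices made for this illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Stand-in for detected faces: bounding-box size plus emotion scores (0..1).
var faces = new List<(int Width, int Height, Dictionary<string, float> Emotions)>
{
    (60, 80, new Dictionary<string, float> { ["Neutral"] = 0.9f, ["Happiness"] = 0.1f }),
    (200, 240, new Dictionary<string, float> { ["Happiness"] = 0.75f, ["Neutral"] = 0.2f, ["Anger"] = 0.05f }),
};

// Largest face first: sort by bounding-box area in descending order.
var largest = faces.OrderByDescending(f => f.Width * f.Height).First();

// Dominant emotion: the entry with the highest score.
var dominant = largest.Emotions.OrderByDescending(e => e.Value).First();

// Same scaling as in the function: the score is stored as a 0..100 value.
int emotionValue = (int)(100 * dominant.Value);
Console.WriteLine($"{dominant.Key}: {emotionValue}");
```

Sorting in descending order is the important detail: with an ascending sort, taking the first element would return the smallest face in the frame, typically someone in the background rather than the client at the desk.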

About problems

Okay, it is not quite that simple.

AF, unfortunately, currently has a number of limitations (name binding does not work, and there are library conflicts). Fortunately, the .NET world always has plenty of workarounds: if you can't solve a problem the basic way, you can usually find several ways around it.

Shooting video and audio in the background

As you know, modern operating systems try to save battery life as much as possible by suspending the activity of applications running in the background. This also applies to streaming in a web, mobile, or desktop application. After a long investigation of the subject, we opted for a web solution.

We get the video and audio stream from the webcam using getUserMedia(). Next, we have to record the received stream and extract data from it for transmission to the backend. This works as long as the browser tab stays active; as soon as the tab becomes inactive, data can no longer be written. Our task was to build a system that works in the background and does not keep the employee from doing their actual job. So the solution was to create our own stream variable, into which we record the video and audio data and from which we extract it.

The quality of recognition of the Russian language

In the future the service will move toward building its own audio recognition models, but for now it has to rely on external providers of Russian speech recognition. Choosing a service with high-quality Russian speech recognition turned out to be difficult. The current configuration combines several speech recognition systems; for Russian speech it uses the Google Speech API, which showed the best recognition quality in testing.

Back to reality

In fact, this solution is not just a tale about the future. In the near future Heedbook will go live in the Moscow Region MFC and in the country's largest bank.

The Heedbook team will be grateful for feedback on their solution, and will also be glad to work with professionals in ML, data analysis, SEO, and working with large clients. Share your thoughts in the comments or email info@heedbook.com.