“Break the vote at RIT++”: bring on 1,000,000 RPS

The second day of RIT++ is over, and while it is still fresh we want to tell you how the whole world tried to bring down our voting app. Inside: code, metrics, the names of the winners and the most active participants, and other dirty details.

Shortly before RIT++, we were wondering how to entertain people, and decided to run a vote for the coolest programming language, with the results shown live on a dashboard. Voting was simple: go to the ODN.PW website from any device, enter your name and e-mail, and vote for any language. We didn't agonize over the list of languages: we took the nine most popular ones on GitHub and added "Other" as the tenth option. The colors for the chart tiles were taken from GitHub as well.

But we knew cheating was inevitable, and with "heavy artillery" at that: our audience is advanced, this is not a vote on some moms' forum. So we decided to welcome vote stuffing by any means possible. What's more, we invited the community to try to bring the voting app down under heavy load. To make it even easier for participants, we published a link to the API for stuffing votes with bots. We also decided to award prizes to the three participants with the highest RPS. A separate category was reserved for the strongman who could bring the voting app down single-handedly.

The first day

The voting app went live almost as soon as RIT++ opened and ran until 6 p.m. Visitors and speakers at RIT++ enjoyed it. Engineers who build high-load services threw themselves into the RPS race. Guests at our booth had lively discussions about how to take the app down. Teams of fans of one language or another formed on the spot and started devising strategies to push their language up. Some people sat down right away and started writing microservices or bots to vote.

Several companies exhibiting at RIT++ that provide secure hosting services also joined the competition. By the very end of the day, through their combined efforts, participants did manage to bring the system down briefly. Strictly speaking, the service kept working; we just hit the ceiling on the number of votes we could record concurrently. So at 6 p.m. we suspended voting, since otherwise the results would have been unreliable.

On the first day we received 160 million votes, and the peak load reached 20,000 RPS. Interestingly, first and second places that day went to Nikolay Matsievsky (Airi), an active RIT++ participant, and Elena Grakhovats, a speaker from Openprovider.

Overnight we got ready to meet the next day fully armed: we optimized how the application talks to the database and put nginx in front of the Node.js application on each worker.

The second day

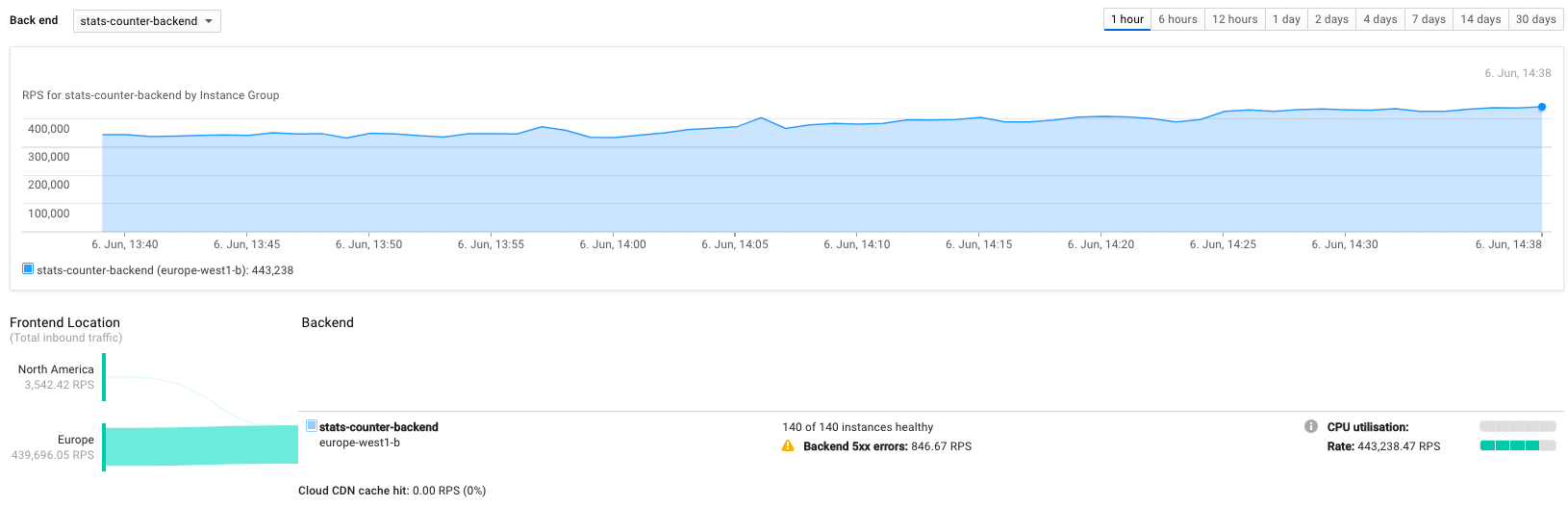

Our invitation to take the vote down drew a lot of interest, because an RPS race is a fascinating challenge. People were already "waiting" for us in the morning: as soon as we switched DNS, traffic shot up to 100,000 RPS, and half an hour later the load reached 300,000 RPS.

Funnily enough, when we first started building the voting app, we decided that "it would be nice to handle 100,000 RPS." Just in case, we designed for a maximum of 1 million RPS, but never seriously considered that we might get anywhere near it. By the middle of the second day, people were practically placing bets on whether we would break through the ceiling of a million requests per second. In the end, we reached about 500,000 RPS.

Implementation

We built the project together in a day and a half, right before RIT++. The voting app was hosted on Google Cloud Platform and used a three-tier architecture:

• Top tier: a load balancer acting as the frontend. It receives the incoming request stream and spreads the load across the servers.

• Middle tier: a Node.js 8.0 backend. The number of machines scales with the current load, tightly rather than with headroom, so we don't pay for idle capacity. By the way, the whole project cost 8,000 rubles.

• Bottom tier: a MongoDB cluster storing the votes, made up of three servers (one primary and two secondaries).

All components of the voting app are open source and available on GitHub:

• Backend: https://github.com/spukst3r/counter-store

• Frontend: https://github.com/weglov/treechart

While building the backend, we toyed with the idea of caching every vote-stuffing request and periodically flushing the totals to the database. But given the lack of time, the uncertainty about how many people would take part, and plain laziness, we shelved the idea and kept writing to the database on every request. It was also a chance to see how MongoDB performs in this mode.

As the first day showed, we should have bolted on the cache right away. Each Node.js worker handled no more than 3,000 RPS of POST requests to /poll, and the MongoDB primary was choking with a load average above 100. Even optimizing the aggregation queries for statistics by changing the read preference so that reads went to the secondaries did not help. Fine, then: time to implement a cache for the vote counters and for email validation (the latter was wrapped in a plain `_.memoize`, since we never delete users). We also moved to a new Google Compute Engine project with higher quotas.
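To illustrate the email check, here is a minimal sketch of a `_.memoize`-style wrapper, written by hand so it runs without lodash. The lookup function `checkEmailRegistered` and its in-memory user set are hypothetical stand-ins for the real database query. The wrapper caches the returned promise, so concurrent votes from the same address share a single lookup.

```javascript
// Hand-rolled equivalent of lodash's _.memoize: results are cached by the
// first argument and never evicted (acceptable only because users are never deleted).
function memoize(fn) {
  const cache = new Map();
  return function memoized(key) {
    if (!cache.has(key)) {
      cache.set(key, fn(key));
    }
    return cache.get(key);
  };
}

// Hypothetical user store standing in for the MongoDB users collection.
const registeredUsers = new Set(['ivan@example.com']);
let lookups = 0; // counts how often the "database" is actually queried

// Hypothetical lookup; returns a promise like a real driver call would.
function checkEmailRegistered(email) {
  lookups += 1;
  return Promise.resolve(registeredUsers.has(email));
}

// Every POST /poll can call this; only the first call per email hits the store.
const isValidEmail = memoize(checkEmailRegistered);
```

Like `_.memoize`, this also caches negative results forever, so an address checked before its owner registered would stay invalid; a real version might cache only successful lookups.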

With vote caching enabled, MongoDB felt great, staying at a load average below 1 even at peak load. Per-worker throughput rose by 50%, to 4,500 RPS. For the periodic flushes we used `bulkWrite` with the `ordered` option disabled, leaving the execution order of the operations to the database so it could optimize for speed.
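The write-behind vote cache described above can be sketched roughly as follows. This is not the code from counter-store: the per-`(email, language)` document layout with a `count` field, the in-memory key format and the flush interval are all assumptions. Votes only touch an in-memory map; a timer periodically turns the accumulated increments into `updateOne`/`$inc` operations and sends them in a single `bulkWrite` with `ordered: false`.

```javascript
// Sketch of a write-behind vote counter. The collection is injected, so any
// object with a MongoDB-style bulkWrite(ops, options) method works.
class VoteCounterCache {
  constructor(collection) {
    this.collection = collection;
    this.pending = new Map(); // assumed key format: "email|language" -> increment
  }

  // Called on every POST /poll: no database round trip here.
  record(email, language, n = 1) {
    const key = `${email}|${language}`;
    this.pending.set(key, (this.pending.get(key) || 0) + n);
  }

  // Swap in a fresh map before writing, so votes arriving mid-flush are kept.
  async flush() {
    if (this.pending.size === 0) return null;
    const batch = this.pending;
    this.pending = new Map();
    const ops = [];
    for (const [key, count] of batch) {
      const sep = key.lastIndexOf('|'); // language names contain no '|'
      ops.push({
        updateOne: {
          filter: { email: key.slice(0, sep), language: key.slice(sep + 1) },
          update: { $inc: { count } },
          upsert: true,
        },
      });
    }
    // ordered: false lets the server apply the independent $inc operations
    // in any order and continue past individual failures.
    return this.collection.bulkWrite(ops, { ordered: false });
  }

  // Hypothetical interval; unref() keeps the timer from holding the process open.
  start(intervalMs) {
    const timer = setInterval(() => {
      this.flush().catch((err) => console.error('flush failed', err));
    }, intervalMs);
    timer.unref();
    return timer;
  }
}
```

In this sketch a failed `bulkWrite` drops that batch; a sturdier version would merge the batch back into `pending` on error.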

On the first day, each worker ran a Node.js server that used the `cluster` module to fork four child processes, all listening on port 3000. For the second day, we dropped that server and handed HTTP processing over to the "professionals". Experiments showed that nginx talking to the application over a unix socket adds roughly 500 RPS. The configuration is fairly standard for a large number of connections: a raised `worker_rlimit_nofile`, enough `worker_connections`, and `tcp_nopush` and `tcp_nodelay` turned on. Incidentally, disabling Nagle's algorithm also raised RPS in Node.js. On every virtual machine we also had to raise the limit on open files and the maximum backlog size.
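For the second-day setup, the Node.js side can be as small as an HTTP server listening on a unix socket that nginx proxies to. The sketch below is an illustration under assumptions, not the project's code: the socket path, the backlog value and the `/poll` handler body are hypothetical. It also shows where Nagle's algorithm is disabled per connection; that matters only when the same server listens on a TCP port, as on the first day, because Nagle's algorithm does not apply to unix sockets.

```javascript
const http = require('http');
const fs = require('fs');

// Illustrative value: the kernel silently caps it at its own limit
// (net.core.somaxconn on Linux), which is why the OS limit was raised too.
const BACKLOG = 4096;

// `target` is a unix socket path (day two, behind nginx) or a TCP port (day one).
function startServer(target, onListening) {
  if (typeof target === 'string' && fs.existsSync(target)) {
    fs.unlinkSync(target); // a stale socket file would cause EADDRINUSE
  }

  const server = http.createServer((req, res) => {
    if (req.method === 'POST' && req.url === '/poll') {
      req.resume(); // drain the body; real vote handling is omitted
      req.on('end', () => {
        res.writeHead(200, { 'Content-Type': 'application/json' });
        res.end('{"ok":true}');
      });
      return;
    }
    res.writeHead(404);
    res.end();
  });

  // Disable Nagle's algorithm so small responses are sent immediately;
  // on unix sockets this call has no effect.
  server.on('connection', (socket) => socket.setNoDelay(true));

  server.listen(target, BACKLOG, onListening);
  return server;
}
```

On the nginx side, an `upstream` block whose `server` entry uses the `unix:` prefix followed by the socket path would route requests to this process.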

Summary

Over the two days, no single participant managed to bring our service down. But at the end of the first day, by joint effort, participants pushed the system to the point where it could not record all incoming requests in time. On the second day we set a load record of ~450,000 RPS. Why the RPS shown on the frontend (which computed and averaged RPS from the actual records in the database) differed from Google's monitoring figures remains a mystery to us.
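As a rough sanity check on the scale involved, the number of workers needed for a given load can be estimated from the per-worker throughput figures in the Implementation section. The post does not say how many VMs were actually running, so this is only a back-of-envelope estimate that assumes perfectly even balancing and ignores the extra ~500 RPS from nginx.

```javascript
// Back-of-envelope estimate: workers needed to sustain a target load,
// assuming the balancer spreads requests perfectly evenly.
function workersNeeded(targetRps, perWorkerRps) {
  return Math.ceil(targetRps / perWorkerRps);
}

// Per-worker throughput before and after adding the vote cache.
const PER_WORKER_RPS_NO_CACHE = 3000;
const PER_WORKER_RPS_CACHED = 4500;
```

At 4,500 RPS per worker, ~450,000 RPS works out to about 100 workers, and the 1 million RPS design ceiling to about 223.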

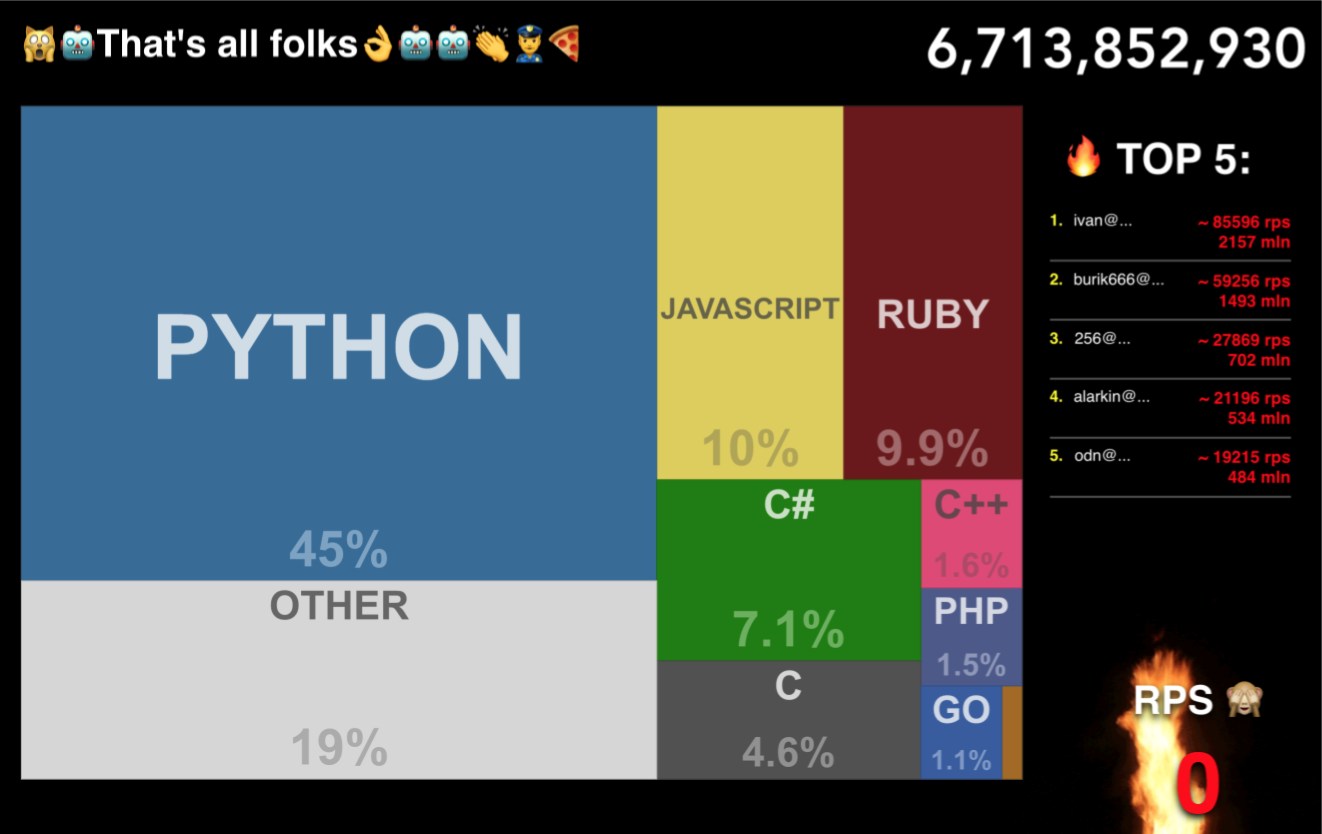

And we are pleased to announce the winners of our little competition:

1st place - {"_id": "ivan@buymov.ru", "count": 2107126721}

2nd place - {"_id": "burik666@gmail.com", "count": 1453014107}

3rd place - {"_id": "256@flant.com", "count": 626160912}
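The winner entries above have the `{_id, count}` shape typical of a MongoDB `$group` stage. A leaderboard such as the top-50 Hall of Fame could be computed along these lines, assuming (the post does not confirm this) that vote counts are stored per `(email, language)` document in a `count` field. The read preference is set to `secondaryPreferred`, mirroring the read-preference change mentioned in the Implementation section, so statistics reads go to a secondary when one is available.

```javascript
// Aggregation pipeline producing {_id: email, count: total} documents,
// highest totals first. The `email` and `count` field names are assumptions.
function leaderboardPipeline(limit) {
  return [
    { $group: { _id: '$email', count: { $sum: '$count' } } },
    { $sort: { count: -1 } },
    { $limit: limit },
  ];
}

// `collection` is expected to be a MongoDB driver Collection (or a compatible stub).
function topVoters(collection, limit = 50) {
  return collection
    .aggregate(leaderboardPipeline(limit), { readPreference: 'secondaryPreferred' })
    .toArray();
}
```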

To claim your prizes, write to kosheleva_ingram_micro!

UPD: Hall of Fame TOP50