Yes, Python is slow, but I don't care

- Translation

A discussion of sacrificing performance for the sake of productivity.

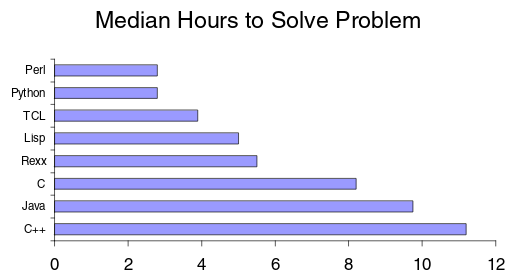

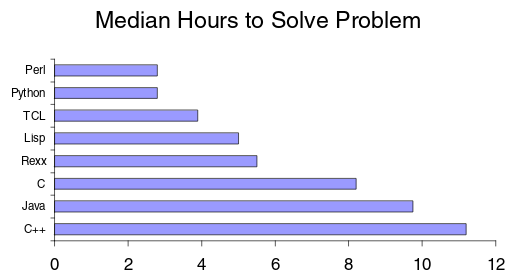

I am taking a break from my discussion of asyncio in Python to talk about Python's speed. Let me introduce myself: I am an ardent fan of Python and use it wherever I can. One of the reasons people object to the language is that it is slow. Some refuse even to try it just because "X is faster." Here are my thoughts on the subject.

Performance is no longer important

Programs used to take a very long time to run. CPU and memory were expensive, and runtime was an important metric. And think of how much was paid for electricity! Those days are long gone, however, because the main rule is:

Optimize the use of your most expensive resources.

Historically, processor time was the most expensive resource. That is what the study of computer science drives home, with its focus on the efficiency of algorithms. Alas, this is no longer the case: hardware is cheaper than ever, and execution time is getting cheaper with it. The most expensive resource is now the employee's time, that is, yours. It is much more important to solve the problem than to speed up the program. I repeat for those who are just skimming the article:

It is much more important to solve the problem than speed up the execution of the program.

You may object: "My company cares about speed. I am building a web application in which every response must arrive in under x milliseconds," or "Customers left us because they thought our application was too slow." I am not saying that speed is unimportant; I am saying that it is no longer the most important thing, because it is not your most expensive resource.

Speed is the only thing that matters.

When you use the word "speed" in the context of programming, you most likely mean CPU performance. But when your CEO uses it in relation to programming, it means business speed. The most important metric is time to market. Ultimately, it doesn't matter how fast your products run, what programming language they are written in, or how many resources they need. The only thing that will make your company survive or die is time to market. I am talking not so much about how quickly an idea starts to generate income as about how quickly you can release the first version of the product. The only way to survive in business is to grow faster than your competitors. It doesn't matter how many ideas you have if competitors implement them faster. You must be first to market, or at least keep up. Slow down a little, and you perish.

The only way to survive in business is to grow faster than competitors.

Let's talk about microservices

Large companies such as Amazon, Google, and Netflix understand the importance of rapid development. They deliberately structure their business so that they can innovate quickly, and microservices help them do so. This article is not about whether you should build your architecture on microservices, but at least accept that Amazon and Google believe they should use them. Microservices are inherently slow. Their very concept relies on network calls: instead of calling a function, you take a trip across the network. What could be worse in terms of performance? Network calls are extremely slow compared with processor cycles; I really don't know what could be slower. Yet large companies still prefer a microservice architecture. Its biggest drawback is performance, while its advantage is time to market. By building teams around small projects and codebases, a company can iterate and innovate much faster. So even very large companies, and not just startups, care about time to market.

The processor is not your bottleneck.

If you are writing a network application such as a web server, the processor is most likely not its bottleneck. While handling a request, the application makes several network calls, for example to a database or a cache. Those servers can be as fast as you like, but everything still depends on how fast data travels over the network. Here is a really cool article comparing the execution time of various operations. In it, the author scales one processor cycle up to one second. On that scale, a request from California to New York takes 4 years. That is how slow the network is. For a rough estimate, suppose that sending data over the network inside a data center takes about 3 ms, which is equivalent to 3 months on our scale. Now take a CPU-bound program. Suppose it needs 100,000 cycles to process one call, which is equivalent to 1 day. If we write it in a language that is 5 times slower, it takes 5 days. For someone already prepared to wait 3 months, a difference of 4 days is not that important.
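The arithmetic above can be reproduced with a minimal sketch. It assumes a 3 GHz clock, which the article does not state; the function names are illustrative only. With that assumption the 3 ms network call comes out at a little over 100 days, in line with the "about 3 months" estimate.

```python
# Assumption: a 3 GHz clock; the article does not state the clock rate.
CLOCK_HZ = 3_000_000_000
SECONDS_PER_DAY = 86_400


def scaled_days_from_seconds(real_seconds: float) -> float:
    """Days on the 'one cycle = one second' scale for a real-world duration."""
    cycles = real_seconds * CLOCK_HZ
    return cycles / SECONDS_PER_DAY


def scaled_days_from_cycles(cycles: float) -> float:
    """Days on the same scale for a given number of CPU cycles."""
    return cycles / SECONDS_PER_DAY


network_days = scaled_days_from_seconds(0.003)    # 3 ms call inside a data center
fast_days = scaled_days_from_cycles(100_000)      # CPU work in the fast language
slow_days = scaled_days_from_cycles(5 * 100_000)  # same work, 5 times slower

print(f"network call:  {network_days:6.1f} days")
print(f"fast language: {fast_days:6.1f} days")
print(f"slow language: {slow_days:6.1f} days")

# Share of the total wait that the slower language adds.
extra_share = (slow_days - fast_days) / (network_days + slow_days)
print(f"extra wait from the slower language: {extra_share:.1%}")
```

Changing `CLOCK_HZ` shifts the absolute numbers, but the network call stays roughly two orders of magnitude larger than the CPU work.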

Ultimately, the fact that Python is slow no longer matters. The speed of the language (or processor time) is almost never the problem. Google described this in its research, which, by the way, is about building a high-performance system. They conclude:

It may seem paradoxical to use an interpreted language in a high-throughput environment, but we have found that the CPU time is rarely the limiting factor; the expressibility of the language means that most programs are small and spend most of their time in I/O and native run-time code. Moreover, the flexibility of an interpreted implementation has been helpful, both in ease of experimentation at the linguistic level and in allowing us to explore ways to distribute the calculation across many machines.

In other words:

The CPU is rarely the limiting factor.

What if the CPU does become the bottleneck?

What about arguments such as "All this is fine, but what if the CPU becomes a bottleneck and starts to affect performance?" or "Language x is less demanding on hardware than y"? These are valid points, but you can scale an application horizontally almost without limit. Just add servers, and the service will run faster :) Without a doubt, Python is more demanding on hardware than, say, C, but a new server costs much less than your time. In other words, it is cheaper to buy resources than to rush into optimizing something every time.

So is Python fast?

Throughout the article, I have insisted that the most important thing is the developer's time. So the question remains: is Python faster than language X during development? Anecdotally, I, Google, and several others can attest to how productive Python is. It hides so many details from you, helping you focus on your main task without being distracted by all sorts of little things. Let's look at some real-life examples.

For the most part, the debate over Python's productivity comes down to a comparison of dynamic and static typing. I think everyone will agree that statically typed languages are less productive, but just in case, here's a good article explaining why. As for Python itself, there is a good research report that looks at how long it took to write string-processing code in different languages.

In the study above, Python is more than 2 times as productive as Java. Many other programming languages give similar results. Rosetta Code conducted a fairly extensive study of the differences between programming languages. In the article, they compare Python with other interpreted languages and state:

Python, in general, is the most concise, even compared to functional languages (an average of 1.2-1.6 times shorter).

It appears that a Python implementation will typically have fewer lines of code than one in any other language. This may seem like a terrible metric, but several studies, including those mentioned above, show that the time spent on each line of code is approximately the same in every language. Thus, the fewer the lines of code, the greater the productivity. Even the author of Coding Horror (a C# programmer) wrote an article stating that Python is more productive.

I think it is fair to say that Python is more productive than many other programming languages. This is mainly because it comes "with batteries included" and also has many third-party libraries for every occasion. Here is a short article comparing Python and X. If you don't believe me, you can see for yourself how simple and convenient this programming language is. Here is your first program:

    import __hello__

But what if speed is really important?

My reasoning above might give the impression that optimization and execution speed do not matter at all. In many cases that is not true. For example, you have a web application with an endpoint that is really slow. You may even have specific requirements for it. Our example rests on a few assumptions:

- there is some endpoint that runs slowly

- there are some metrics that determine how slow request processing is allowed to be

We will not deal with micro-optimization of the entire application. Everything should be "fast enough." Your users may notice if processing the request takes seconds, but they will never notice the difference between 35 ms and 25 ms. You only need to make the application "good enough."
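A "fast enough" check of this kind might be sketched as follows. The handler, its workload, and the 100 ms budget are all hypothetical stand-ins; a real service would measure its actual endpoint against its own requirement.

```python
import statistics
import time

# Hypothetical budget: the slowest acceptable response time, in milliseconds.
LATENCY_BUDGET_MS = 100.0


def handle_request(n: int) -> int:
    """Stand-in for a real endpoint handler (hypothetical workload)."""
    return sum(i * i for i in range(n))


def measure_latencies_ms(func, arg, runs: int = 50) -> list[float]:
    """Call func(arg) repeatedly and return each call's duration in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        func(arg)
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


samples = measure_latencies_ms(handle_request, 10_000)
p95 = statistics.quantiles(samples, n=20)[-1]  # last cut point = 95th percentile
verdict = "good enough" if p95 <= LATENCY_BUDGET_MS else "needs optimization"
print(f"median={statistics.median(samples):.2f} ms  p95={p95:.2f} ms  -> {verdict}")
```

Comparing a percentile rather than a single run against the budget keeps one unlucky measurement from triggering unnecessary optimization work.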

Disclaimer

I must say that some applications process real-time data and do need micro-optimization, because every millisecond matters. But that is the exception rather than the rule.

To understand how to optimize, we must first understand what exactly needs to be optimized. After all:

Any improvements made anywhere other than a bottleneck are an illusion. - Gene Kim.

If an optimization does not remove the application's bottleneck, you simply waste your time and do not solve the real problem. You should not continue development until you have fixed the slowdown. If you try to optimize something without knowing exactly what, the result is unlikely to satisfy you. This is called "premature optimization": improving performance without any metrics. Donald Knuth is often credited with the following quote, although he maintains that it is not his:

Premature optimization is the root of all evil.

To be precise, here is the fuller quote:

We should forget about small efficiencies, say about 97% of the time: premature optimization is the root of all evil. Yet we should not pass up our opportunities in that critical 3%.

In other words, most of the time you do not need to think about optimization. The code is already good :) And when it is not, you need to rewrite no more than 3% of it. No one will praise you for making request processing a few nanoseconds faster. Optimize what is measurable.
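One way to find what is measurable is to profile before touching any code. The sketch below uses the standard-library `cProfile` and `pstats` modules; `slow_part`, `fast_part`, and `handle_request` are hypothetical functions invented for the illustration.

```python
import cProfile
import io
import pstats


def slow_part(n: int) -> int:
    """Deliberately heavy helper (hypothetical bottleneck)."""
    return sum(i * i for i in range(n))


def fast_part(n: int) -> int:
    """Cheap helper that is not worth optimizing."""
    return n * 2


def handle_request() -> int:
    """Hypothetical request handler that mixes cheap and expensive work."""
    return slow_part(200_000) + fast_part(10)


profiler = cProfile.Profile()
profiler.enable()
handle_request()
profiler.disable()

# Sort by cumulative time and print the five most expensive entries.
report = io.StringIO()
pstats.Stats(profiler, stream=report).sort_stats("cumulative").print_stats(5)
print(report.getvalue())
```

In the report, `slow_part` should dominate the cumulative time, which is the signal to look there first and leave `fast_part` alone.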

Premature optimization usually consists of calling faster methods (translator's note: apparently, inline assembly is meant) or of choosing specific data structures because of their internal implementation. We were taught at university that two algorithms with the same Big-O asymptotics are equivalent, even if one of them is 2 times slower. Computers are now so fast that computational complexity only becomes worth measuring on large amounts of data. That is, if you have two O(log n) functions and one is twice as slow as the other, it does not really matter. As the size of the data grows, both show run times of the same order. That is why premature optimization is the root of all evil: it takes up our time and almost never improves overall performance.

In Big-O terms, all programming languages have O(n) complexity, where n is the number of lines of code or instructions. It doesn't matter how slow the language or its virtual machine is: they all share a common asymptote. (Translator's note: the author means that even if language X is now twice as slow as Y, after future optimizations they will be approximately equal in speed.) By this reasoning, a "fast" programming language is merely a prematurely optimized one, and optimized against no clear metric.

Optimizing Python

What I like about Python is that it lets you optimize one small piece of code at a time. Let's say you have a Python method that you consider your bottleneck. You have optimized it several times, perhaps following the advice from here and here, and have now concluded that Python itself is the bottleneck. But Python can call C code, which means you can rewrite this method in C to ease the performance problem. You can then use the method alongside the rest of your code without any trouble.

This lets you write well-optimized bottleneck methods in any language that compiles to machine code. In other words, you keep writing Python most of the time and drop down to low-level programming only when you really need it.
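As a minimal sketch of calling C from Python, the standard-library `ctypes` module can load a shared library and call its functions directly. The example assumes a POSIX system (Linux or macOS) and uses the C library's `strlen` purely as a stand-in for a bottleneck function you would compile yourself; `c_strlen` is a hypothetical wrapper name.

```python
import ctypes
import ctypes.util

# Assumption: a POSIX system (Linux or macOS) where the C standard library
# can be loaded dynamically; Windows would need a different library name.
libc = ctypes.CDLL(ctypes.util.find_library("c"))

# Declare the C signature: size_t strlen(const char *s);
libc.strlen.argtypes = [ctypes.c_char_p]
libc.strlen.restype = ctypes.c_size_t


def c_strlen(data: bytes) -> int:
    """Return the length of a byte string as computed by C's strlen."""
    return libc.strlen(data)


print(c_strlen(b"bottleneck"))
```

The rest of the program calls `c_strlen` like any other Python function, so only the hot spot moves to C. Declaring `argtypes` and `restype` up front lets `ctypes` convert arguments and return values correctly.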

There is also the Cython language, a superset of Python that blends it with typed C. Any Python code is also valid Cython, which is translated to C. You can mix C types with duck typing. With Cython, you gain performance only at the bottleneck, without rewriting the rest of the code. EVE Online, for example, does exactly that: this MMORPG uses only Python and Cython for its entire stack, optimizing only where needed. There are other options as well. For example, PyPy is a Python implementation with a JIT compiler that can give long-running applications (such as a web server) a significant speedup simply by replacing CPython (the default implementation) with PyPy.

So, the main points:

- optimize the use of your most expensive resource, which is you, not the computer;

- choose a language / framework / architecture that lets you develop products as quickly as possible, and do not choose a programming language just because programs written in it run fast;

- if you have performance problems, determine exactly where they are;

- most likely, the cause is neither the processor nor Python;

- if it is Python after all (and you have already optimized the algorithm), implement the problem spot in Cython / C;

- and get back to your main job quickly.

Thanks for your attention!