How Instagram disabled the Python garbage collector and started living better

Translation

By disabling the Python garbage collector (GC), which frees memory by tracking and reclaiming unused data, Instagram runs 10% more efficiently. Yes, you heard that right! Disabling the garbage collector reduces the memory footprint and improves the CPU cache hit rate. Want to know why? Then fasten your seat belts!


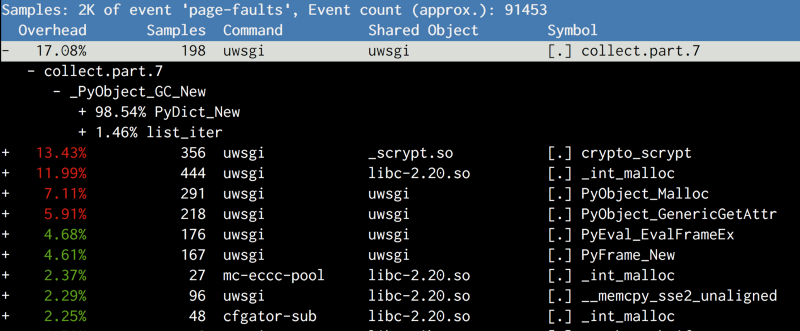

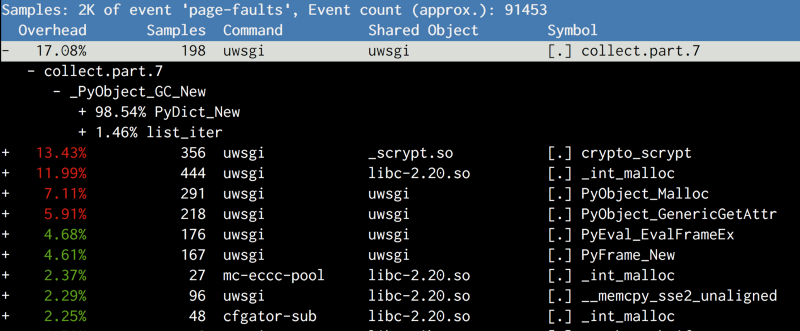


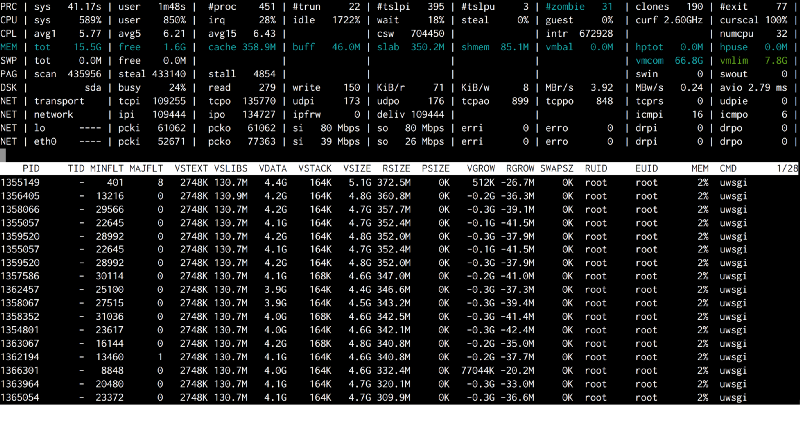

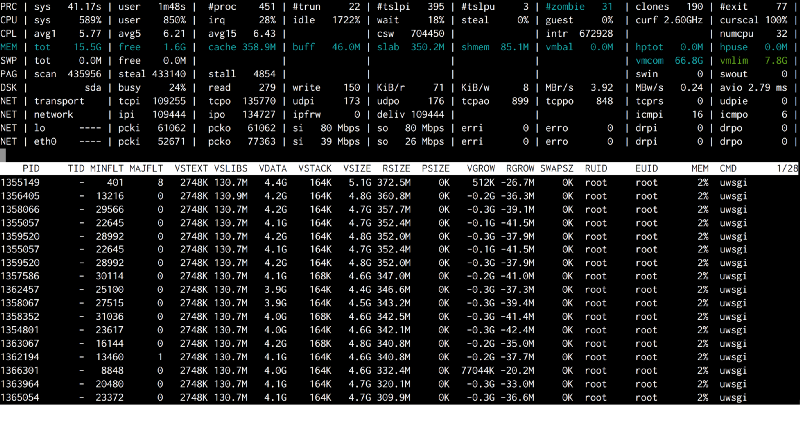


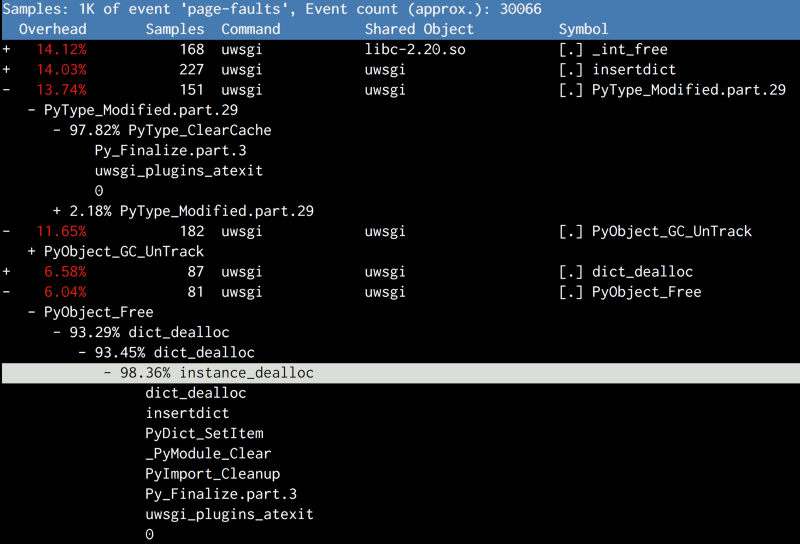

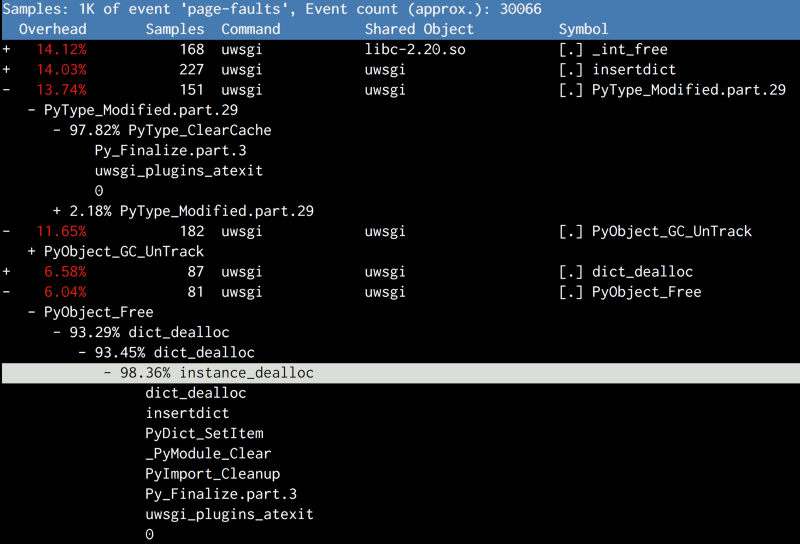


How we run our web server

Instagram's web server runs on Django in multi-process mode: a master process forks itself to create dozens of worker processes that handle incoming user requests. As the application server we use uWSGI in prefork mode, which lets the master and the worker processes share memory.
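As a rough illustration of the prefork model, here is a minimal Python sketch of a master that loads its state once and then forks workers that inherit it. It is not uWSGI's implementation: the function name prefork and the app_state dictionary are hypothetical, and it runs only on Unix-like systems where os.fork is available.

```python
import os


def prefork(num_workers: int, app_state: dict) -> list:
    """Minimal prefork master: state is loaded once, then workers are forked.

    After fork() each child sees the parent's memory through copy-on-write
    mappings, so app_state is usable in every worker without reloading it.
    Returns the exit code of every worker.
    """
    pids = []
    for _ in range(num_workers):
        pid = os.fork()
        if pid == 0:  # child (worker)
            code = 1
            try:
                # A real worker would run its request loop here.
                code = 0 if app_state.get("loaded") else 1
            finally:
                os._exit(code)  # never fall back into the master's code path
        pids.append(pid)

    exit_codes = []
    for pid in pids:
        _, status = os.waitpid(pid, 0)
        exit_codes.append(os.waitstatus_to_exitcode(status))
    return exit_codes
```

A real uWSGI master also supervises and replaces its workers; this sketch only forks them and collects their exit codes.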

To keep Django from running out of memory, the uWSGI master process can restart a worker whenever its resident set size (RSS) exceeds a predefined limit.
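A master that recycles workers needs a way to read their RSS. On Linux the kernel reports it as the VmRSS line of /proc/PID/status. The sketch below is a hypothetical helper for that check, not uWSGI's code; rss_kib, should_recycle and the MiB limit are illustrative names of our own.

```python
import os


def rss_kib(pid: int) -> int:
    """Resident set size of `pid` in KiB, read from /proc/PID/status (Linux only)."""
    path = f"/proc/{pid}/status"
    with open(path) as status:
        for line in status:
            if line.startswith("VmRSS:"):
                return int(line.split()[1])  # line looks like "VmRSS:   250000 kB"
    raise RuntimeError(f"no VmRSS line in {path}")


def should_recycle(pid: int, limit_mib: int) -> bool:
    """Hypothetical master-side policy: recycle a worker once its RSS exceeds the limit."""
    return rss_kib(pid) > limit_mib * 1024
```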

How memory works

First, we set out to understand why worker RSS grows so quickly right after the master spawns a worker. We noticed that although RSS starts at 250 MB, shared memory drops within a few seconds from 250 MB to about 140 MB (the shared memory size can be read from /proc/PID/smaps). The exact numbers are not very interesting, since they change all the time, but the scale of the drop is: about a third of the total memory. Next, we wanted to understand why this shared memory turns into private memory of each process so early in its life.

Our assumption: Copy-on-Read

The Linux kernel uses copy-on-write (CoW) to optimize forked child processes. A child process starts out sharing every memory page with its parent, and a page is copied into the child's own memory only when the child writes to it.

In the Python world, however, reference counting makes things interesting. Every time the interpreter reads (takes a new reference to) a Python object, it increments the object's reference count, which is essentially a write to the object's internal data structure. That write triggers CoW. So with Python we are effectively doing Copy-on-Read (CoR)!
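Reference counting is easy to observe from CPython itself. In this minimal sketch (refcount_delta is a hypothetical helper), the list is never modified, yet binding one more name to it changes the count stored in the object's header. That is exactly the kind of write that forces a shared page to be copied.

```python
import sys


def refcount_delta() -> int:
    """Show that merely taking a new reference writes to an object's header."""
    data = ["a", "read-only", "list"]
    before = sys.getrefcount(data)
    alias = data  # no mutation of the list, yet its ob_refcnt goes up
    after = sys.getrefcount(alias)
    return after - before
```

sys.getrefcount counts one extra temporary reference for its own argument, but since both calls include it, the difference isolates the effect of binding alias.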

    #define PyObject_HEAD                   \
        _PyObject_HEAD_EXTRA                \
        Py_ssize_t ob_refcnt;               \
        struct _typeobject *ob_type;
    ...
    typedef struct _object {
        PyObject_HEAD
    } PyObject;

So the question arose: are we also copying on write for immutable objects, such as code objects? Since PyCodeObject is effectively a "subclass" of PyObject, the answer is obviously yes. Our first idea was to disable reference counting for PyCodeObject.

Attempt number 1: disable reference counting for code objects

At Instagram, we start with the simple things. As an experiment, we added a small hack to the CPython interpreter that kept the reference count of code objects from changing, and then installed this CPython build on one of the production servers.

The result was disappointing: shared memory usage did not change at all. When we tried to find out why, we realized that we had no reliable metrics to prove that our hack worked, and we could not prove any connection between shared memory and copies of code objects. Obviously, we had missed something. Lesson learned: prove your theory before acting on it.

Page fault analysis

After a little googling about copy-on-write, we learned that CoW is tied to page faults: every CoW operation triggers a page fault in the process. The perf tools that come with Linux can record system events, including page faults, and can even show stack traces when possible!
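The link between CoW and page faults can be seen from Python on Linux. In this sketch of our own (not Instagram's tooling; cow_fault_counts and minor_faults are hypothetical names), a child process inherits a buffer from its parent, reads every page, then writes every page, and counts its minor page faults with resource.getrusage after each phase.

```python
import os
import resource

PAGE_SIZE = resource.getpagesize()


def minor_faults() -> int:
    """Minor page faults taken so far by the calling process."""
    return resource.getrusage(resource.RUSAGE_SELF).ru_minflt


def cow_fault_counts(num_pages: int = 2048) -> tuple:
    """Fork a child that reads, then writes, each page of an inherited buffer.

    Returns (read_faults, write_faults) as measured inside the child.
    """
    buf = bytearray(num_pages * PAGE_SIZE)
    for offset in range(0, len(buf), PAGE_SIZE):
        buf[offset] = 1  # touch every page so it is resident before fork()

    read_end, write_end = os.pipe()
    pid = os.fork()
    if pid == 0:  # child: buf's pages are shared with the parent
        try:
            os.close(read_end)
            start = minor_faults()
            seen = 0
            for offset in range(0, len(buf), PAGE_SIZE):
                seen |= buf[offset]  # read only: pages stay shared
            after_reads = minor_faults()
            for offset in range(0, len(buf), PAGE_SIZE):
                buf[offset] = 2  # first write to a page forces a private copy
            after_writes = minor_faults()
            report = f"{after_reads - start} {after_writes - after_reads}"
            os.write(write_end, report.encode())
        finally:
            os._exit(0)

    os.close(write_end)
    report = os.read(read_end, 64).decode()
    os.close(read_end)
    os.waitpid(pid, 0)
    read_faults, write_faults = (int(n) for n in report.split())
    return read_faults, write_faults
```

On a typical Linux machine we would expect the write phase to fault roughly once per page and the read phase only a handful of times, but the exact numbers depend on the kernel and its memory settings.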

So we went back to the production server, restarted it, waited for the master process to fork the workers, took the PID of one worker, and ran the following command:

    perf record -e page-faults -g -p <PID>

From the stack traces, we got an idea of when page faults occur in the process.

The results were not what we expected. The top suspect was not the copying of code objects but collect, a function in gcmodule.c that is called whenever a garbage collection is triggered. After reading up on how GC works in CPython, we came up with the following theory.

The garbage collector in CPython is triggered deterministically, based on a threshold. The default threshold is very low, so the collector kicks in very early. It maintains linked lists of object generations, and these lists are shuffled during a collection. Because the linked-list structure lives together with the object itself (just like ob_refcnt), shuffling objects in these lists causes CoW of the pages they live on, which is an unfortunate side effect.

    /* GC information is stored BEFORE the object structure. */
    typedef union _gc_head {
        struct {
            union _gc_head *gc_next;
            union _gc_head *gc_prev;
            Py_ssize_t gc_refs;
        } gc;
        long double dummy;  /* force worst-case alignment */
    } PyGC_Head;

Attempt number 2: try disabling the garbage collector

Well, since the garbage collector betrayed us, let's turn it off!

We added a gc.disable() call to our boot script and restarted the server, and again it failed! Looking at perf again, we saw that gc.collect was still being called and memory was still being copied. After some debugging in GDB, we found that one of the third-party libraries we use (msgpack) calls gc.enable() to bring the garbage collector back, so the gc.disable() call in the boot script was useless. Patching msgpack was not acceptable to us, because it would leave the door open for other libraries to do the same without our noticing. First, we needed to prove that disabling the garbage collector actually helps. The answer, again, lay in gcmodule.c: as an alternative to gc.disable, we called gc.set_threshold(0), and this time no library brought the collector back.

With that, we raised the shared memory of each worker process from 140 MB to 225 MB, and total memory usage on each host dropped by 8 GB. This saved 25% of RAM across all our Django servers. With that much headroom, we can run many more processes or raise the RSS threshold considerably. As a result, the throughput of the Django tier increased by more than 10%.
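The difference between the two switches can be reproduced in isolation. The sketch below is our own illustration, not Instagram's code: automatic_gc_runs is a hypothetical helper that counts automatic collections through gc.callbacks, and a plain gc.enable() call stands in for what msgpack did.

```python
import gc


def automatic_gc_runs(mode: str, allocations: int = 100_000) -> int:
    """Count automatic collections while allocating reference cycles.

    mode="disable":   gc.disable(), the approach that failed
    mode="threshold": gc.set_threshold(0), the approach that stuck
    Either way, a simulated third-party library then calls gc.enable().
    """
    runs = 0

    def on_gc(phase, info):
        nonlocal runs
        if phase == "start":
            runs += 1

    saved_threshold = gc.get_threshold()
    was_enabled = gc.isenabled()
    gc.callbacks.append(on_gc)
    try:
        if mode == "disable":
            gc.disable()
        elif mode == "threshold":
            gc.set_threshold(0)
        else:
            raise ValueError(f"unknown mode {mode!r}")
        gc.enable()  # what a library like msgpack may do behind our back
        for _ in range(allocations):
            node = []
            node.append(node)  # a cycle that only the collector could free
    finally:
        gc.callbacks.remove(on_gc)
        gc.set_threshold(*saved_threshold)
        if not was_enabled:
            gc.disable()
        gc.collect()  # reclaim the cycles created above
    return runs
```

With a zero threshold, gc.isenabled() still returns True, so a library's gc.enable() call has nothing to undo, yet no automatic collection is ever triggered.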

Attempt number 3: completely disable the garbage collector

After several rounds of tuning, we decided to test the theory in a wider context: on a cluster. The result came quickly: our continuous deployment broke, because with the garbage collector disabled the web server restarted much more slowly. A restart normally took under 10 seconds, but with the garbage collector off it sometimes took more than 60 seconds.

    2016-05-02_21:46:05.57499 WSGI app 0 (mountpoint='') ready in 115 seconds on interpreter 0x92f480 pid: 4024654 (default app)

This bug was hard to reproduce because it was not deterministic. After many experiments, we pinned down the exact reproduction steps. When it happened, free memory on the host dropped to almost zero and then jumped back, forcing out all of the cached memory. Then came the moment when all code and data had to be read from disk (DSK 100%), and everything became slow.

This suggested that Python runs a final garbage collection when the interpreter shuts down, which could cause a huge jump in memory usage within a very short time. Once again, I decided to prove it first and only then figure out how to fix it. So I commented out the call to Py_Finalize in uWSGI's Python plugin, and the problem disappeared.

Obviously, we couldn't just turn off Py_Finalize: many important cleanup routines depended on it. In the end, we added a runtime flag to CPython that turned off garbage collection completely.

Finally, we needed to roll the solution out on a larger scale. We tried it on all our servers, but continuous deployment broke again. This time, however, only machines with older CPU models (Sandy Bridge) were affected, and the problem was even harder to reproduce. Lesson learned: always test on old clients and hardware, because they are the easiest to break.

Because our continuous deployment process was too fast for us to see what was happening, I added a separate atop daemon to our rollout script. Now we could catch the moment when cache memory ran very low and all the uWSGI processes were generating lots of MINFLT (minor page faults).
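atop's MINFLT figure counts minor page faults per process. The kernel exposes per-process minor and major fault counters in /proc/PID/stat (fields 10 and 12 in the proc(5) numbering), which is a convenient source for this kind of monitoring. This is a minimal sketch; fault_counters and read_fault_counters are hypothetical names.

```python
def fault_counters(stat_line: str) -> tuple:
    """Return (minor_faults, major_faults) from one /proc/PID/stat line.

    Field 2 (the command name) is wrapped in parentheses and may contain
    spaces, so we only split what follows the last ')'.
    """
    fields = stat_line.rpartition(")")[2].split()
    # fields[0] is field 3 (state); minflt is field 10, majflt is field 12.
    return int(fields[7]), int(fields[9])


def read_fault_counters(pid: int) -> tuple:
    """Current fault counters of a live process (Linux only)."""
    with open(f"/proc/{pid}/stat") as f:
        return fault_counters(f.read())
```

Sampling read_fault_counters for every worker a few times per second during a rollout would show the same MINFLT spike that atop revealed.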

And once again, profiling pointed us at Py_Finalize. At shutdown, besides the final garbage collection, Python performs several other cleanup operations, such as destroying type objects and unloading modules. And these again hurt shared memory.

Attempt number 4: the last step in turning off the garbage collector (no cleanup)

Why clean up at all? The process is about to die, and we will get a replacement for it anyway. What we do care about are the atexit handlers that clean up after our applications; Python's own cleanup we can skip. Here is how we ended up changing our boot script:

    import atexit
    import gc
    import os

    # gc.disable() doesn't work, because some random 3rd-party library will
    # enable it back implicitly.
    gc.set_threshold(0)

    # Suicide immediately after the other atexit functions finish.
    # CPython will do a bunch of cleanups in Py_Finalize which
    # will again cause Copy-on-Write, including a final GC.
    atexit.register(os._exit, 0)

The solution relies on the fact that atexit functions run in the reverse order of their registration. As long as os._exit is registered early in the boot script, it runs after the handlers registered later: those atexit functions finish the remaining cleanup, and then os._exit(0) terminates the current process without running the rest of Py_Finalize.

Having changed only two lines, we finally rolled the solution out to all our servers. After carefully tuning the memory thresholds, we got an overall performance gain of 10%!
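The reverse-order trick can be checked in a child interpreter. The sketch below is our own test harness, not Instagram's boot script: BOOT_SCRIPT and run are hypothetical names, and the Teardown class with a __del__ method merely stands in for the interpreter teardown work that os._exit skips.

```python
import subprocess
import sys
import textwrap

BOOT_SCRIPT = textwrap.dedent("""\
    import atexit
    import gc
    import os

    gc.set_threshold(0)
    atexit.register(os._exit, 0)  # registered first, so it runs last

    class Teardown:
        def __del__(self):
            # Would run while Py_Finalize clears module globals.
            print("interpreter teardown ran", flush=True)

    handle = Teardown()

    # An application hook registered later, so it runs before os._exit.
    atexit.register(lambda: print("app cleanup ran", flush=True))
""")


def run(script: str) -> subprocess.CompletedProcess:
    """Run `script` in a fresh interpreter and capture its output."""
    return subprocess.run(
        [sys.executable, "-c", script],
        capture_output=True, text=True, timeout=30,
    )
```

Without the os._exit registration, CPython typically clears module globals during Py_Finalize, so the teardown message would usually appear. Note also that os._exit does not flush Python's stdio buffers, which is why the cleanup hook prints with flush=True.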

Looking back

Thinking about the performance gain, we had a couple of questions.

First, without garbage collection, shouldn't Python's memory blow up, since nothing is being freed anymore? (Remember that Python has no real stack memory: all objects live on the heap.)

Fortunately, it does not. The primary mechanism for freeing objects in Python is reference counting. Whenever a reference to an object is dropped (Py_DECREF is called), Python checks whether the object's reference count has reached zero, and if it has, calls the object's deallocator. The main job of the garbage collector is to break reference cycles, where reference counting does not work.
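The same behavior can be observed from Python: with the cyclic collector off, an ordinary object is freed the moment its last reference goes away, while an object in a reference cycle lingers until a collection runs. In this sketch, Node and freed_immediately are hypothetical names, and weakref.ref with a callback serves only as a probe that fires when the object is deallocated.

```python
import gc
import weakref


class Node:
    """A plain object that can take part in a reference cycle."""

    def __init__(self):
        self.other = None


def freed_immediately(make_cycle: bool) -> bool:
    """With the cyclic GC off, drop the only reference to a Node and
    report whether reference counting alone freed it."""
    freed = []
    was_enabled = gc.isenabled()
    gc.disable()
    try:
        node = Node()
        if make_cycle:
            node.other = node  # self-reference keeps ob_refcnt above zero
        probe = weakref.ref(node, lambda _ref: freed.append(True))
        del node  # drops the last external reference (a Py_DECREF)
        return bool(freed)
    finally:
        if was_enabled:
            gc.enable()
        gc.collect()  # let the real collector reclaim any leaked cycle
```

How much memory such uncollected cycles cost depends entirely on the application.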

    #define Py_DECREF(op)                                   \
        do {                                                \
            if (_Py_DEC_REFTOTAL  _Py_REF_DEBUG_COMMA       \
            --((PyObject*)(op))->ob_refcnt != 0)            \
                _Py_CHECK_REFCNT(op)                        \
            else                                            \
            _Py_Dealloc((PyObject *)(op));                  \
        } while (0)

Where the gains come from

Second question: where does the performance gain come from?

Turning off the garbage collector gives a double win:

- We freed almost 8 GB of RAM on each server and used it to run more worker processes on memory-bound server generations, or to lower the worker restart rate on CPU-bound ones;

- CPU throughput also improved, because the number of instructions executed per cycle (IPC) rose by almost 10%.

    # perf stat -a -e cache-misses,cache-references -- sleep 10

     Performance counter stats for 'system wide':

           268,195,790      cache-misses       #   12.240 % of all cache refs    [100.00%]
         2,191,115,722      cache-references

          10.019172636 seconds time elapsed

With the garbage collector disabled, the cache-miss rate drops by 2–3%, which is the main reason for the 10% improvement in IPC. Cache misses are expensive because they stall the CPU pipeline, so even a small improvement in the CPU cache hit rate can raise IPC significantly. The fewer copy-on-write operations are performed, the more CPU cache lines with different virtual addresses (in different worker processes) point to the same physical memory address, which raises the cache hit rate.
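The 12.240% that perf prints is simply cache-misses divided by cache-references. This small sketch recomputes it from the sample output; perf_count is a hypothetical helper that assumes perf's default human-readable layout (count first, event name second, optional thousands separators).

```python
PERF_STAT_OUTPUT = """\
 Performance counter stats for 'system wide':

       268,195,790      cache-misses       #   12.240 % of all cache refs    [100.00%]
     2,191,115,722      cache-references

      10.019172636 seconds time elapsed
"""


def perf_count(output: str, event: str) -> int:
    """Return the counter value printed for `event` by `perf stat`."""
    for line in output.splitlines():
        fields = line.split()
        if len(fields) >= 2 and fields[1] == event:
            return int(fields[0].replace(",", ""))  # strip thousands separators
    raise KeyError(f"event {event!r} not found")


misses = perf_count(PERF_STAT_OUTPUT, "cache-misses")
references = perf_count(PERF_STAT_OUTPUT, "cache-references")
miss_ratio = misses / references  # 268,195,790 / 2,191,115,722
print(f"cache-miss ratio: {miss_ratio:.3%}")  # prints "cache-miss ratio: 12.240%"
```

Comparing this ratio between runs with the collector enabled and disabled is one way to measure the drop in the cache-miss rate.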

As you can see, not every component works the way we think it does, and the results can be surprising. So keep digging, and be amazed at how things really work!
