How we doubled the speed of working with Float in Mono

- Transfer

My friend Aras recently wrote the same ray tracer in different languages, including C ++, C #, and the Unity Burst compiler. Of course, it is natural to expect that C # will be slower than C ++, but it seemed interesting to me that Mono is so slower than .NET Core.

His published indicators were poor:

- C # (.NET Core): Mac 17.5 Mray / s,

- C # (Unity, Mono): Mac 4.6 Mray / s,

- C # (Unity, IL2CPP): Mac 17.1 Mray / s

I decided to see what was happening and document places that could be improved.

As a result of this benchmark and studying this problem, we found three areas in which improvement is possible:

- First, you need to improve the default Mono settings, because users usually don’t configure their settings

- Secondly, we need to actively introduce the world to the backend of LLVM code optimization in Mono

- Thirdly, we improved the tuning of some Mono parameters.

The reference point of this test was the results of the ray tracer run on my machine, and since I have different hardware, we can not compare the numbers.

The results on my home iMac for Mono and .NET Core were as follows:

| Working environment | Results, MRay / sec |

|---|---|

.NET Core 2.1.4 debug build dotnet run | 3.6 |

.NET Core 2.1.4 release build dotnet run -c Release | 21.7 |

Vanilla Mono, mono Maths.exe | 6.6 |

| Vanilla Mono with LLVM and float32 | 15.5 |

In the process of studying this problem, we found a couple of problems, after the correction of which the following results were obtained:

| Working environment | Results, MRay / sec |

|---|---|

| Mono with LLVM and float32 | 15.5 |

| Advanced Mono with LLVM, float32 and fixed inline | 29.6 |

The big picture:

Just by applying LLVM and float32, you can increase the performance of floating point code by almost 2.3 times. And after tuning, which we added to Mono as a result of these experiments, you can increase productivity by 4.4 times compared to the standard Mono - these parameters in future versions of Mono will become the default parameters.

In this article I will explain our findings.

32-bit and 64-bit Float

Aras uses 32-bit floating-point numbers (type

floatin C # or System.Single.NET) for the bulk of the calculations . In Mono, we made a mistake a long time ago - all 32-bit floating point calculations were performed as 64-bit, and the data was still stored in 32-bit areas. Today, my memory is not as sharp as before, and I can’t remember exactly why we made such a decision.

I can only assume that it was influenced by trends and ideas of that time.

Then a positive aura hovered around float computing with increased accuracy. For example, Intel x87 processors used 80-bit precision for floating point calculations, even when the operands were double, which provided users with more accurate results.

At that time, the idea was also relevant that in one of my previous projects - Gnumeric spreadsheets - statistical functions were implemented more efficiently than in Excel. Therefore, many communities are well aware of the idea that more accurate results with increased accuracy can be used.

At the initial stages of Mono development, most of the mathematical operations performed on all platforms could receive only double at the input. 32-bit versions were added to C99, Posix and ISO, but in those days they were not widely available for the entire industry (for example,

sinfthis is a float version sin, fabsf- version fabs, and so on). In short, the early 2000s were a time of optimism.

Applications paid a heavy price for increasing computation time, but Mono was mainly used for desktop Linux applications serving HTTP pages and some server processes, so floating-point speed was not the problem we encountered daily. It became noticeable only in some scientific benchmarks, and in 2003 they were rarely developed on .NET.

Today, games, 3D applications, image processing, VR, AR, and machine learning have made floating point operations a more common type of data. The trouble does not come alone, and there are no exceptions. Float was no longer the friendly data type used in the code in just a couple of places. They turned into an avalanche, from which there is nowhere to hide. There are a lot of them and their spread cannot be stopped.

Workspace flag float32

Therefore, a couple of years ago we decided to add support for performing 32-bit float operations using 32-bit operations, as in all other cases. We called this feature of the workspace “float32”. In Mono, it is enabled by adding an option in the working environment

--O=float32, and in Xamarin applications this parameter is changed in the project settings. This new flag was well received by our mobile users, because basically mobile devices are still not too powerful, and they are preferable to process data faster than having increased accuracy. We recommended that mobile users turn on the LLVM optimizing compiler and the float32 flag at the same time.

Although this flag has been implemented for several years, we did not make it the default one to avoid unpleasant surprises for users. However, we began to encounter cases in which surprises arise due to standard 64-bit behavior, see this bug report submitted by the Unity user .

Now we will use Mono by default

float32, progress can be tracked here: https://github.com/mono/mono/issues/6985 . In the meantime, I returned to the project of my friend Aras. He used the new APIs that were added to .NET Core. Although .NET Core has always performed 32-bit float operations as 32-bit floats, the API

System.Mathstill performs conversions from floattodouble. For example, if you need to calculate the sine function for a float value, then the only option is to call Math.Sin (double), and you will have to convert from float to double. To fix this, a new type has been added to .NET Core

System.MathFthat contains single-precision floating-point math operations, and now we just ported this [System.MathF]to Mono . The transition from 64-bit to 32-bit float significantly improves performance, which can be seen from this table:

| Work environment and options | Mrays / second |

|---|---|

| Mono with System.Math | 6.6 |

Mono with System.Math and -O=float32 | 8.1 |

| Mono with System.MathF | 6.5 |

Mono with System.MathF and -O=float32 | 8.2 |

That is, the application

float32in this test really improves performance, and MathF has little effect.LLVM setup

In the course of this research, we found that although there is support in the Fast JIT Mono compiler

float32, we did not add this support to the LLVM backend. This meant that Mono with LLVM was still performing costly conversions from float to double. Therefore, Zoltan added support for the LLVM code generation engine

float32. Then he noticed that our inliner uses the same heuristics for Fast JIT as those used for LLVM. When working with Fast JIT, it is necessary to strike a balance between JIT speed and execution speed, therefore we limited the amount of embedded code to reduce the amount of work of the JIT engine.

But if you decide to use LLVM in Mono, then you strive for the code as fast as possible, so we changed the settings accordingly. Today, this parameter can be changed using the environment variable

MONO_INLINELIMIT, but in fact it needs to be written to the default values. Here are the results with the modified LLVM settings:

| Work environment and options | Mrays / seconds |

|---|---|

Mono with System.Math --llvm -O=float32 | 16.0 |

Mono with System.Math --llvm -O=float32, constant heuristics | 29.1 |

Mono with System.MathF --llvm -O=float32, constant heuristics | 29.6 |

Next steps

Little effort was needed to make all of these improvements. These changes were led by periodic discussions at Slack. I even managed to make a few hours one evening to transfer

System.MathFto Mono. The Aras ray tracer code has become an ideal subject to study because it was self-sufficient, it was a real application, and not a synthetic benchmark. We want to find other similar software that can be used to study the binary code that we generate, and make sure that we pass LLVM the best data for the optimal execution of its work.

We are also considering updating our LLVM, and using the new added optimizations.

Separate note

Extra precision has nice side effects. For example, reading the pool requests of the Godot engine, I saw that there is an active discussion on whether to make the accuracy of floating point operations customizable at compile time ( https://github.com/godotengine/godot/pull/17134 ).

I asked Juan why this might be necessary for someone, because I believed that 32-bit floating-point operations are quite enough for games.

Juan explained that in the general case, floats work great, but if you “move away” from the center, say, move 100 kilometers from the center of the game, a calculation error starts to accumulate, which can lead to interesting graphical glitches. You can use different strategies to reduce the impact of this problem, and one of them is work with increased accuracy, for which you have to pay for performance.

Shortly after our conversation on my Twitter feed, I saw a post demonstrating this problem: http://pharr.org/matt/blog/2018/03/02/rendering-in-camera-space.html

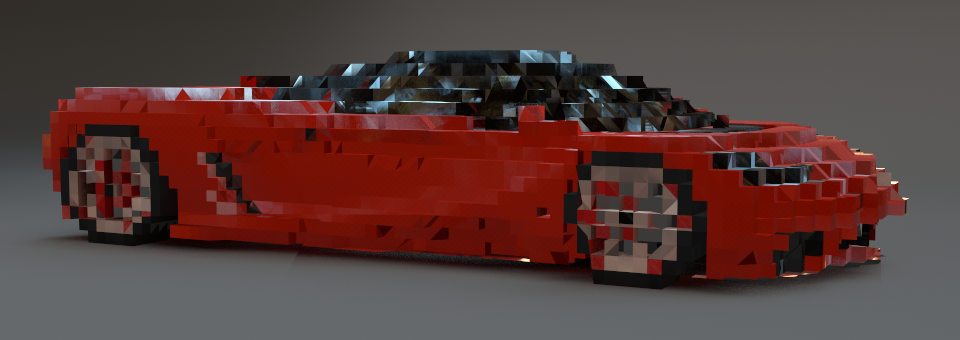

The problem is shown in the images below. Here we see a sports car model from the pbrt-v3-scenes package **. Both the camera and the scene are near the origin, and everything looks great.

** (Author of the car model Yasutoshi Mori .)

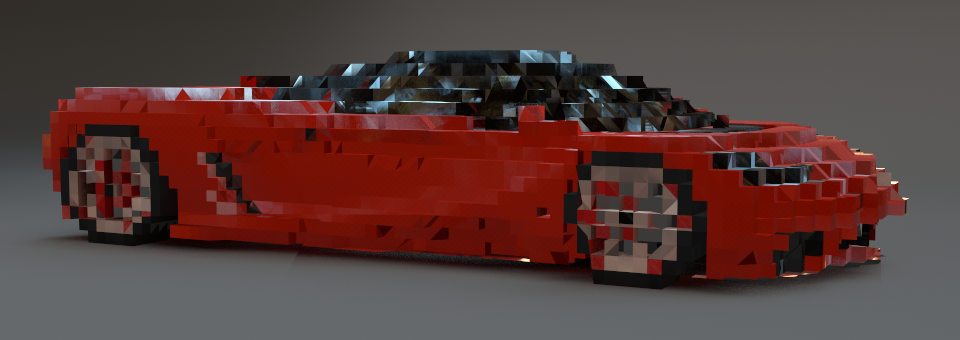

Then we move the camera and the scene 200,000 units xx, yy and zz from the origin. It can be seen that the model of the machine has become quite fragmented; this is solely due to a lack of precision in floating point numbers.

If we move even further 5 × 5 × 5 times, 1 million units from the origin, the model begins to disintegrate; the machine turns into an extremely crude voxel approximation of itself, both interesting and terrifying. (Keanu asked the question: Is Minecraft so cubic simply because everything is rendered very far from the origin?)

** (I apologize to Yasutoshi Mori for what we did with his beautiful model.)