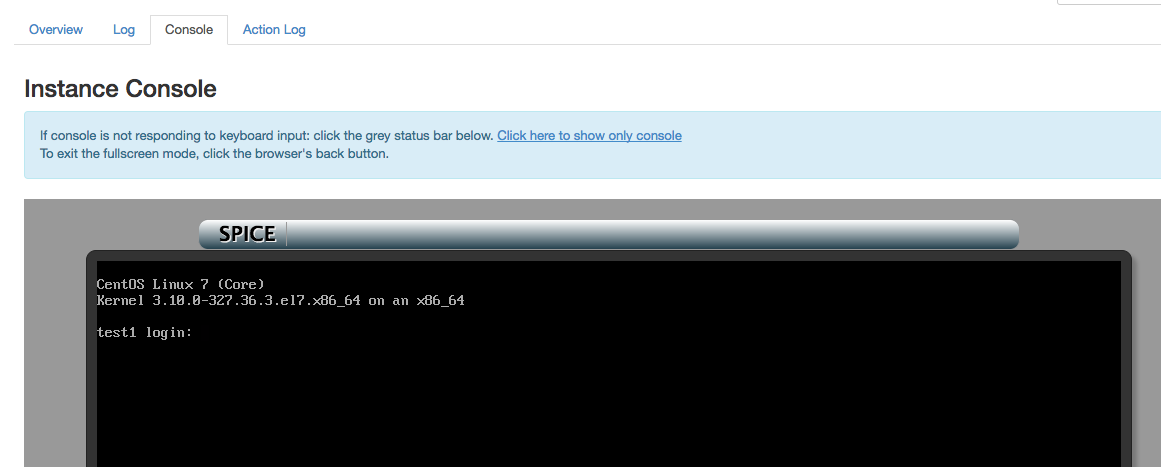

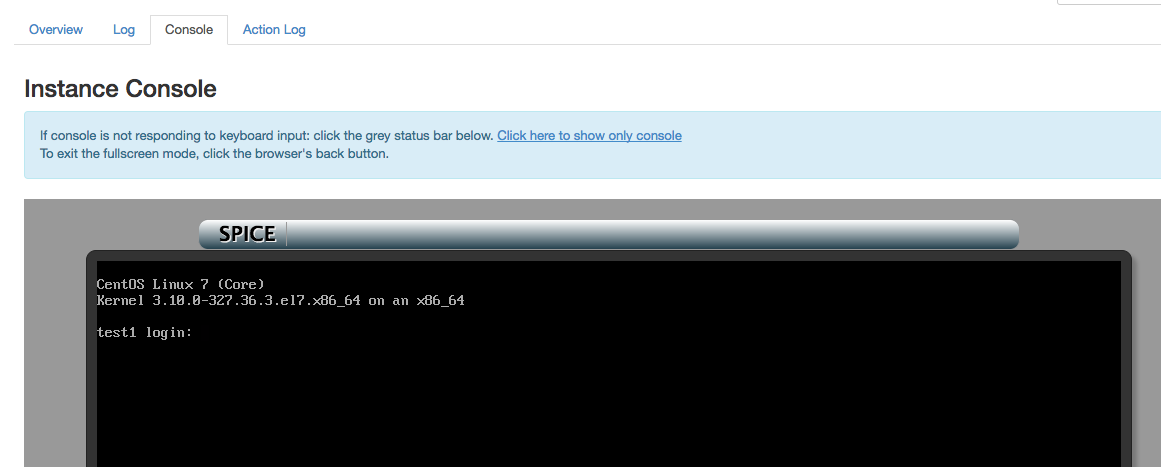

Configure SPICE Console for Virtual Machines in OpenStack

This article will be of interest to administrators of the OpenStack cloud platform. It covers displaying the virtual machine console in the dashboard. By default, OpenStack uses the noVNC console, which is acceptably fast within a local network but poorly suited to working with virtual machines running in a remote data center. In that case the responsiveness of the console is, to put it mildly, depressing.

This article discusses how to configure the much faster SPICE console in your OpenStack installation.

OpenStack supports two protocols for the graphical virtual machine console: VNC and SPICE. A web implementation of the VNC client, noVNC, comes out of the box.

Far fewer people know about SPICE. In general, SPICE is a remote access protocol that supports many useful features, such as video streaming, audio, copy-paste, USB forwarding and much more. The OpenStack dashboard, however, uses the SPICE-HTML5 web client, which does not support all of these features but displays the virtual console very efficiently and quickly, which is exactly what is needed here.

The official OpenStack documentation (link1, link2) has very little information on setting up the SPICE console. It also says that "VNC must be explicitly disabled to get access to the SPICE console." This is not entirely true: more precisely, when the VNC console is enabled you cannot use the SPICE console from the dashboard (but you can still reach it through the API, that is, "nova get-spice-console" using python-novaclient). In addition, the SPICE console will be available only for new virtual machines; existing ones will still use only VNC until a hard reboot, resize or migration.

For this article I used two versions of OpenStack from Mirantis: Kilo (Mirantis OpenStack 7.0) and Mitaka (Mirantis OpenStack 9.0). As in all enterprise distributions, the configuration has three controller nodes and HTTPS on the frontend. The hypervisor is qemu-kvm, the operating system everywhere is Ubuntu 14.04, and the cloud was deployed with Fuel.

The configuration affects both the controller nodes and the compute nodes. On the controller nodes, do the following.

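Install the spice-html5 package:

    apt-get install spice-html5

In the Nova config, set the following values:

/etc/nova/nova.conf

    [DEFAULT]
    ssl_only = True
    cert = '/path/to/SSL/cert'
    key = '/path/to/SSL/key'
    web=/usr/share/spice-html5

    [spice]
    spicehtml5proxy_host = ::
    html5proxy_base_url = https://FRONTEND_FQDN:6082/spice_auto.html
    enabled = True
    keymap = en-us

Here FRONTEND_FQDN is the FQDN of your Horizon dashboard. The certificate and key above must match FRONTEND_FQDN, otherwise a modern browser will not let the SPICE widget work. If your Horizon does not use HTTPS, the SSL settings can be omitted.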
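Before restarting anything, it can be useful to sanity-check these values. The following is a minimal sketch, not part of Nova: the function name `check_spice_settings` and the host name `dashboard.example.com` are made up for illustration, and the checks only cover the options listed above.

```python
import configparser

# Sample mirroring the controller settings above. The host name is an
# illustrative stand-in for FRONTEND_FQDN, not a value from the article.
SAMPLE_NOVA_CONF = """
[DEFAULT]
ssl_only = True
cert = '/path/to/SSL/cert'
key = '/path/to/SSL/key'
web=/usr/share/spice-html5

[spice]
spicehtml5proxy_host = ::
html5proxy_base_url = https://dashboard.example.com:6082/spice_auto.html
enabled = True
keymap = en-us
"""


def check_spice_settings(conf_text):
    """Return a list of problems found in the given nova.conf text (hypothetical helper)."""
    # Only "=" is treated as a delimiter so that values such as "::" survive.
    parser = configparser.ConfigParser(
        delimiters=("=",), interpolation=None, strict=False
    )
    parser.read_string(conf_text)

    if not parser.has_section("spice"):
        return ["missing [spice] section"]

    problems = []
    defaults = parser.defaults()
    spice = parser["spice"]

    if spice.get("enabled", "False").strip().lower() != "true":
        problems.append("[spice] enabled is not True")

    ssl_only = defaults.get("ssl_only", "False").strip().lower() == "true"
    base_url = spice.get("html5proxy_base_url", "")
    if ssl_only and not base_url.startswith("https://"):
        problems.append("ssl_only is set but html5proxy_base_url is not https://")
    if ssl_only and not (defaults.get("cert") and defaults.get("key")):
        problems.append("ssl_only is set but cert or key is missing")

    return problems


print(check_spice_settings(SAMPLE_NOVA_CONF))  # → []
```

To check a real node, the same function could be fed the contents of /etc/nova/nova.conf read from disk.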

For noVNC and SPICE to work simultaneously, use this trick: copy the noVNC files into the SPICE web directory, so that the noVNC proxy, which now shares the same web option, still finds its files:

    cp -r /usr/share/novnc/* /usr/share/spice-html5/

To work over HTTPS you need Secure WebSockets, so the /usr/share/spice-html5/spice_auto.html file has to be patched. In the following fragment, change "ws://" to "wss://":

/usr/share/spice-html5/spice_auto.html

    function connect()
    {
        var host, port, password, scheme = "wss://", uri;

Again, for noVNC and SPICE to work simultaneously, the upstart scripts /etc/init/nova-novncproxy.conf and /etc/init/nova-spicehtml5proxy.conf have to be patched. In both scripts, comment out one line:

/etc/init/nova-spicehtml5proxy.conf

    script
    [ -r /etc/default/nova-consoleproxy ] && . /etc/default/nova-consoleproxy || exit 0
    #[ "${NOVA_CONSOLE_PROXY_TYPE}" = "spicehtml5" ] || exit 0

/etc/init/nova-novncproxy.conf

    script
    [ -r /etc/default/nova-consoleproxy ] && . /etc/default/nova-consoleproxy || exit 0
    #[ "${NOVA_CONSOLE_PROXY_TYPE}" = "novnc" ] || exit 0

This removes the check of the console type set in /etc/default/nova-consoleproxy, so both proxies can start.
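With three controller nodes, the two manual file edits above (switching the scheme to wss:// and commenting out the console type check) have to be repeated on each of them, so they may be worth scripting. The sketch below only shows the text transformations; the function names are made up for illustration, and it assumes the files contain the lines exactly as quoted above.

```python
def use_secure_websockets(html):
    """Switch the hard-coded WebSocket scheme in spice_auto.html to wss://."""
    # Already patched text does not contain 'scheme = "ws://"', so
    # applying this twice leaves the result unchanged.
    return html.replace('scheme = "ws://"', 'scheme = "wss://"')


def disable_proxy_type_check(upstart_script):
    """Comment out the line that compares NOVA_CONSOLE_PROXY_TYPE."""
    patched = []
    for line in upstart_script.splitlines():
        stripped = line.lstrip()
        if stripped.startswith('[ "${NOVA_CONSOLE_PROXY_TYPE}"'):
            indent = line[: len(line) - len(stripped)]
            line = indent + "#" + stripped  # keep the original indentation
        patched.append(line)
    return "\n".join(patched)


html_before = 'var host, port, password, scheme = "ws://", uri;'
print(use_secure_websockets(html_before))

script_before = "\n".join([
    "script",
    "  [ -r /etc/default/nova-consoleproxy ] && . /etc/default/nova-consoleproxy || exit 0",
    '  [ "${NOVA_CONSOLE_PROXY_TYPE}" = "novnc" ] || exit 0',
])
print(disable_proxy_type_check(script_before))
```

Both functions are pure string transformations, so they can be tried on copies of the files first; writing the result back (ideally after making a backup) is left to the caller.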

Now fix the HAProxy configs:

/etc/haproxy/conf.d/170-nova-novncproxy.cfg

    listen nova-novncproxy
      bind PUBLIC_VIP:6080 ssl crt /var/lib/astute/haproxy/public_haproxy.pem no-sslv3 no-tls-tickets ciphers AES128+EECDH:AES128+EDH:AES256+EECDH:AES256+EDH
      balance source
      option httplog
      option http-buffer-request
      timeout http-request 10s
      server controller1 192.168.57.6:6080 ssl verify none check
      server controller2 192.168.57.3:6080 ssl verify none check
      server controller3 192.168.57.7:6080 ssl verify none check

/etc/haproxy/conf.d/171-nova-spiceproxy.cfg

    listen nova-spiceproxy
      bind PUBLIC_VIP:6082 ssl crt /var/lib/astute/haproxy/public_haproxy.pem no-sslv3 no-tls-tickets ciphers AES128+EECDH:AES128+EDH:AES256+EECDH:AES256+EDH
      balance source
      option httplog
      timeout tunnel 3600s
      server controller1 192.168.57.6:6082 ssl verify none check
      server controller2 192.168.57.3:6082 ssl verify none check
      server controller3 192.168.57.7:6082 ssl verify none check

Here PUBLIC_VIP is the IP address that FRONTEND_FQDN points to.

Finally, restart the services on the controller nodes:

    service nova-spicehtml5proxy restart
    service apache2 restart
    crm resource restart p_haproxy

Here p_haproxy is the Pacemaker resource for HAProxy, through which numerous OpenStack services work.

On each compute node, make the following changes to the Nova config:

/etc/nova/nova.conf

    [spice]
    spicehtml5proxy_host = ::
    html5proxy_base_url = https://FRONTEND_FQDN:6082/spice_auto.html
    enabled = True
    agent_enabled = True
    server_listen = ::
    server_proxyclient_address = COMPUTE_MGMT_IP
    keymap = en-us

Here COMPUTE_MGMT_IP is the address of the management interface of this compute node (Mirantis OpenStack separates the public and management networks).

After that, restart the nova-compute service:

    service nova-compute restart

Now one important point. As I wrote above, we do not turn off VNC, because then existing virtual machines would lose their console in the dashboard. However, if you are deploying a cloud from scratch, it makes sense to turn off VNC altogether. To do this, set the following in the Nova config on all nodes:

    [DEFAULT]
    vnc_enabled = False
    novnc_enabled = False

Either way, if VNC and SPICE are enabled together in a cloud where virtual machines are already running, then after all the steps above nothing will outwardly change, either for already running virtual machines or for new ones: the noVNC console will still open. In the Horizon settings, the console type is controlled by the following setting:

/etc/openstack-dashboard/local_settings.py

    # Set Console type:
    # valid options would be "AUTO", "VNC" or "SPICE"
    # CONSOLE_TYPE = "AUTO"

By default the value is AUTO, that is, the console type is selected automatically. But what does that mean? The answer is in the file where the console types are prioritized:

/usr/share/openstack-dashboard/openstack_dashboard/dashboards/project/instances/console.py

    ...
    CONSOLES = SortedDict([('VNC', api.nova.server_vnc_console),
                           ('SPICE', api.nova.server_spice_console),
                           ('RDP', api.nova.server_rdp_console),
                           ('SERIAL', api.nova.server_serial_console)])
    ...

As you can see, the VNC console takes precedence when it is available. Only if it is not available is the SPICE console tried. It makes sense to swap the first two entries: existing virtual machines will then keep working with the slow VNC console, and new ones will get the fast SPICE console. Just what you need!
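The effect of that ordering can be modelled in a few lines. This is a simplified sketch of the AUTO selection, not Horizon's actual code: the getters are hypothetical stubs standing in for the api.nova.server_*_console functions, and `ConsoleUnavailable` stands in for whatever error the real API raises.

```python
from collections import OrderedDict


class ConsoleUnavailable(Exception):
    """Raised by a stub getter when the instance has no console of that type."""


def make_getter(console_type, available_types):
    """Build a stand-in for api.nova.server_*_console (hypothetical stub)."""
    def get_console(instance_id):
        if console_type not in available_types:
            raise ConsoleUnavailable(console_type)
        return "{}-console-for-{}".format(console_type.lower(), instance_id)
    return get_console


def build_consoles(order, available_types):
    """Create the ordered type -> getter mapping for one instance."""
    return OrderedDict((t, make_getter(t, available_types)) for t in order)


def pick_console(consoles, instance_id, console_type="AUTO"):
    """Return (type, console) for the first console type that can be obtained."""
    if console_type == "AUTO":
        candidates = list(consoles.items())
    else:
        candidates = [(console_type, consoles[console_type])]
    for name, getter in candidates:
        try:
            return name, getter(instance_id)
        except ConsoleUnavailable:
            continue  # try the next type in priority order
    raise ConsoleUnavailable("no console available for " + instance_id)


STOCK_ORDER = ["VNC", "SPICE", "RDP", "SERIAL"]
SWAPPED_ORDER = ["SPICE", "VNC", "RDP", "SERIAL"]

new_vm = {"VNC", "SPICE"}  # booted after SPICE was enabled
old_vm = {"VNC"}           # booted earlier, not yet hard-rebooted

print(pick_console(build_consoles(STOCK_ORDER, new_vm), "new")[0])    # → VNC
print(pick_console(build_consoles(SWAPPED_ORDER, new_vm), "new")[0])  # → SPICE
print(pick_console(build_consoles(SWAPPED_ORDER, old_vm), "old")[0])  # → VNC
```

In this model, the stock order gives even a new virtual machine the VNC console, while the swapped order gives it SPICE and still falls back to VNC for an old one.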

Subjectively, the SPICE console is very fast. In text mode there is no lag at all, graphical mode is fast as well, and compared with the VNC protocol the difference is like night and day. I recommend it to everyone!

That completes the configuration. Finally, I will show how both consoles look in the libvirt XML config:

If you have network access to the compute node that hosts the virtual machine, you can use any other VNC or SPICE client instead of the web interface by simply connecting to the TCP port given in that configuration (in this case, 5900 for VNC and 5901 for SPICE).
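To find those ports for a particular virtual machine, the domain XML can be parsed rather than read by eye. The sketch below assumes the usual libvirt layout, with one `<graphics>` element per console under `<devices>`. The sample fragment is illustrative, written to match the port numbers mentioned above, and is not the article's original XML.

```python
import xml.etree.ElementTree as ET

# Illustrative domain fragment (an assumption, not taken from the article).
SAMPLE_DOMAIN_XML = """
<domain type='kvm'>
  <name>instance-00000001</name>
  <devices>
    <graphics type='vnc' port='5900' autoport='yes' listen='0.0.0.0'/>
    <graphics type='spice' port='5901' autoport='yes' listen='0.0.0.0'/>
  </devices>
</domain>
"""


def graphics_ports(domain_xml):
    """Map each graphics type in the domain XML to its TCP port."""
    root = ET.fromstring(domain_xml)
    return {
        # A missing port attribute is reported as -1.
        g.get("type"): int(g.get("port", "-1"))
        for g in root.iter("graphics")
    }


print(graphics_ports(SAMPLE_DOMAIN_XML))  # → {'vnc': 5900, 'spice': 5901}
```

On a compute node, the XML of a running domain can be obtained with `virsh dumpxml` and passed to the function as a string.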