What's New in Windows Server 2016 Failover Clustering

This article was written by Roman Levchenko (www.rlevchenko.com), MVP - Cloud and Datacenter Management.

Hello! Windows Server 2016 was recently announced as generally available, which means you can now start using the new version of the product in your infrastructure. The list of new features is quite extensive, and we have already described some of them (here and here). In this article we will look at the high-availability features, which, in my opinion, are the most interesting and widely used (especially in virtualization environments).

Cluster OS Rolling upgrade

In previous versions of Windows Server, cluster migration caused significant downtime: the original cluster became unavailable, a new cluster had to be created on nodes running the updated OS, and the roles then had to be migrated between the clusters. Such a process demands highly qualified staff, carries certain risks and leads to unpredictable labor costs. This is especially true for CSPs and other customers whose SLAs limit how long services may be unavailable. I need not explain what a significant SLA violation means for a service provider.

Windows Server 2016 fixes this by allowing Windows Server 2012 R2 and Windows Server 2016 nodes to coexist in the same cluster while it is being upgraded (Cluster OS Rolling Upgrade, hereinafter CRU).

As the name suggests, the cluster migration process mainly consists of a phased reinstallation of the OS on the servers; we will look at this in more detail a little later.

First, let's list the benefits that CRU provides:

- No downtime at all when upgrading WS2012 R2 Hyper-V / SOFS clusters. Other cluster roles (for example, SQL Server) may be briefly unavailable (less than 5 minutes), because a one-time failover has to be performed.

- No need for additional hardware. A cluster is usually designed to tolerate the loss of one or more nodes. With CRU, node unavailability is planned and phased. So if the cluster can comfortably survive the temporary absence of at least one node, no additional nodes are needed to achieve zero downtime. If you plan to upgrade several nodes at once (this is supported), plan the load distribution across the remaining nodes in advance.

- Creating a new cluster is not required: CRU keeps the existing CNO (cluster name object).

- The process is reversible (until the cluster functional level is raised).

- In-place upgrade is supported. Note, however, that the recommended way to upgrade cluster nodes is a clean installation of WS2016 without preserving data (clean OS install). With an in-place upgrade, you must verify full functionality after each node is upgraded (event logs, etc.).

- CRU is fully supported by VMM 2016 and can additionally be automated with PowerShell / WMI.
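Whether a planned, phased node outage really needs no extra hardware comes down to simple arithmetic: the memory used on the drained nodes must fit into the free memory of the nodes that stay online. Below is a minimal sketch, assuming memory is the only constraint (CPU, storage and network are ignored). The function name Test-DrainCapacity and the TotalMemoryGB / UsedMemoryGB properties are hypothetical; you supply the values yourself.

```powershell
# Hypothetical helper: can the nodes that stay online absorb the memory
# currently used on the nodes being drained for the upgrade?
function Test-DrainCapacity {
    param(
        # Objects with Name, TotalMemoryGB and UsedMemoryGB (values you supply)
        [Parameter(Mandatory)][object[]]$Nodes,
        [Parameter(Mandatory)][string[]]$DrainNodeNames
    )
    # Memory that must move while the drained nodes are reinstalled
    [double]$toMove = ($Nodes | Where-Object { $_.Name -in $DrainNodeNames } |
        Measure-Object -Property UsedMemoryGB -Sum).Sum
    # Free memory on the nodes that remain in service
    [double]$free = ($Nodes | Where-Object { $_.Name -notin $DrainNodeNames } |
        ForEach-Object { $_.TotalMemoryGB - $_.UsedMemoryGB } |
        Measure-Object -Sum).Sum
    # Aggregate check only: a single large VM may still not fit on any one node
    $toMove -le $free
}
```

The check is deliberately optimistic. It compares totals, so a positive result is a necessary condition for a zero-downtime drain rather than a guarantee.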

The CRU process, using a 2-node Hyper-V cluster as an example:

- Back up the cluster (database) and the running resources beforehand; this is recommended. The cluster must be healthy and all nodes accessible. If necessary, fix existing problems before the migration, and suspend backup jobs before starting the transition.

- Update the Windows Server 2012 R2 cluster nodes using Cluster-Aware Updating (CAU) or manually through WU / WSUS.

- If CAU is configured, temporarily disable it so that it cannot affect role placement and node state during the transition.

- The CPUs on the nodes must support SLAT to run virtual machines on WS2016. This requirement is mandatory.

- On one of the nodes, drain the roles (Suspend-ClusterNode -Drain) and evict the node from the cluster (Remove-ClusterNode).

- After the node is evicted, perform the recommended clean installation of WS2016 (clean OS install, Custom: Install Windows only (advanced)).

- After reinstalling, restore the network settings*, update the node, and install the required roles and features. In my case, these are the Hyper-V role and, of course, Failover Clustering.

New-NetLbfoTeam -Name HV -TeamMembers tNIC1,tNIC2 -TeamingMode SwitchIndependent -LoadBalancingAlgorithm Dynamic

Add-WindowsFeature Hyper-V, Failover-Clustering -IncludeManagementTools -Restart

# After the reboot:
New-VMSwitch -InterfaceAlias HV -Name VM -MinimumBandwidthMode Weight -AllowManagementOS $false

* Switch Embedded Teaming can be used only after the transition to WS2016 is complete.

- Join the node to the appropriate domain.

Add-Computer -ComputerName HV01 -DomainName domain.com -DomainCredential domain\rlevchenko

- Return the node to the cluster (Add-ClusterNode). The cluster will start running in mixed mode, which keeps WS2012 R2 functionality but does not support the new WS2016 features. It is recommended that you finish upgrading the remaining nodes within 4 weeks.

- Move the cluster roles back to HV01 to redistribute the load.

- Repeat the steps from draining and evicting the node through moving the roles back for the remaining node (HV02).

- After all nodes have been upgraded to WS2016, raise the cluster functional level (mixed mode: 8, full: 9) to complete the migration:

PS C:\Windows\system32> Update-ClusterFunctionalLevel

Updating the functional level for cluster hvcl.

Warning: You cannot undo this operation. Do you want to continue?

[Y] Yes [A] Yes to All [N] No [L] No to All [S] Suspend [?] Help (default is Y): a

Name
----
hvcl

- (Optional, and with caution) Update the VM configuration version to enable the new Hyper-V features. The VMs must be shut down, and a backup beforehand is advisable. The VM configuration version is 5.0 in 2012 R2 and 8.0 in 2016 RTM. The following command updates all VMs in the cluster:

Get-ClusterGroup|? {$_.GroupType -EQ "VirtualMachine"}|Get-VM|Update-VMVersion

The list of VM configuration versions supported by 2016 RTM can be displayed with Get-VMHostSupportedVersion.
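Update-ClusterFunctionalLevel cannot be undone, and Update-VMVersion needs the VM turned off, so it can help to check both preconditions explicitly before running them. Below is a minimal sketch, assuming the node objects expose MajorVersion the way Get-ClusterNode does (10 for WS2016, 6 for WS2012 R2) and the VM objects expose Name, State and Version the way Get-VM does. The function names Test-ClusterUpgradeComplete and Select-VMToUpgrade are hypothetical.

```powershell
# Hypothetical helpers for the end of a rolling upgrade.
function Test-ClusterUpgradeComplete {
    param(
        # Node objects with Name and MajorVersion, e.g. from Get-ClusterNode
        [Parameter(Mandatory)][object[]]$Nodes
    )
    # Assumption: WS2016 nodes report MajorVersion 10, WS2012 R2 nodes report 6
    $legacy = @($Nodes | Where-Object { $_.MajorVersion -lt 10 })
    foreach ($node in $legacy) {
        Write-Warning "$($node.Name) still runs a pre-2016 OS (MajorVersion $($node.MajorVersion))"
    }
    $legacy.Count -eq 0
}

function Select-VMToUpgrade {
    param(
        # VM objects with Name, State and Version, e.g. from Get-VM
        [Parameter(Mandatory)][object[]]$VMs,
        [version]$TargetVersion = '8.0'
    )
    # Update-VMVersion needs the VM turned off; skip VMs already at the target
    $VMs | Where-Object { $_.State -eq 'Off' -and [version]($_.Version) -lt $TargetVersion }
}
```

On a live cluster, the node check would be fed with Get-ClusterNode, and the VM selection with the Get-ClusterGroup | Get-VM pipeline shown above. You would then pipe the selected VMs into Update-VMVersion, and run Update-ClusterFunctionalLevel only once the node check passes.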

Cloud witness

In any cluster configuration, you need to think about where to place the witness, which provides an additional vote toward the overall quorum. In 2012 R2, a witness can be based on an external file share or on a shared disk accessible to every cluster node. Recall that starting with 2012 R2 (dynamic quorum), configuring a witness is recommended for any number of nodes.
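The reason a witness matters for small clusters is vote arithmetic: quorum needs more than half of the configured votes. This is a simplified sketch that deliberately ignores dynamic quorum and dynamic witness, which adjust votes at run time in 2012 R2 and later. The function name is hypothetical.

```powershell
# Simplified model: static votes only, no dynamic quorum / dynamic witness.
function Test-QuorumMajority {
    param(
        # Node votes plus the witness vote, if a witness is configured
        [Parameter(Mandatory)][int]$TotalVotes,
        # Votes currently reachable (online nodes plus an available witness)
        [Parameter(Mandatory)][int]$OnlineVotes
    )
    # Quorum requires a strict majority of the configured votes
    $OnlineVotes -gt [math]::Floor($TotalVotes / 2)
}
```

With two nodes plus a witness (3 votes), losing one node leaves 2 of 3 votes and the model reports quorum. With two nodes and no witness, losing one node leaves 1 of 2 votes and the static model reports no quorum. Dynamic quorum changes the real outcome, so treat this only as an illustration of the arithmetic.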

Windows Server 2016 adds a new quorum configuration model based on Cloud Witness, which enables DR designs built on Windows Server, among other scenarios.

Cloud Witness uses Microsoft Azure resources (Azure Blob Storage accessed over HTTPS, so outbound port 443 must be reachable from the nodes) to read and write service information, which changes whenever the state of the cluster nodes changes. The blob file is named after the cluster's unique identifier, so one Storage Account can serve several clusters at once (one blob file per cluster in the automatically created msft-cloud-witness container). The witness needs very little cloud storage and costs little to maintain. Hosting it in Azure also eliminates the need for a third site when configuring stretched clusters and disaster recovery solutions.

Cloud Witness can be used in the following scenarios:

- DR for a cluster spread across different sites (multi-site).

- Clusters without shared storage (Exchange DAG, SQL Server Always On, and others).

- Guest clusters running both in Azure and on-premises.

- Storage Clusters with or without shared storage (SOFS).

- Clusters within a workgroup or across domains (new WS2016 functionality).

The process of creating and adding Cloud Witness is quite simple:

- Create a new Azure Storage Account (locally redundant storage) and copy one of the access keys from the account properties.

- Run the quorum configuration wizard and choose Select the quorum witness > Configure a cloud witness.

- Enter the name of the storage account you created and paste the access key.

- When the wizard completes successfully, the witness appears under Cluster Core Resources.

- The blob file appears in the msft-cloud-witness container.

For simplicity, you can use PowerShell instead:

Set-ClusterQuorum -CloudWitness -AccountName <StorageAccountName> -AccessKey <AccessKey>
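Set-ClusterQuorum accepts -CloudWitness, -AccountName and -AccessKey. A small wrapper can catch an invalid storage account name before the cluster tries to reach Azure. This is a minimal sketch: the helper name New-CloudWitnessQuorumArgs is hypothetical, and it checks only the Azure naming rule (3-24 lowercase letters and digits), not whether the account or the key actually work.

```powershell
# Hypothetical helper: validate the storage account name and return
# splatting arguments for Set-ClusterQuorum.
function New-CloudWitnessQuorumArgs {
    param(
        [Parameter(Mandatory)][string]$AccountName,
        [Parameter(Mandatory)][string]$AccessKey
    )
    # Azure storage account names: 3-24 characters, lowercase letters and digits only
    if ($AccountName -cnotmatch '^[a-z0-9]{3,24}$') {
        throw "Invalid Azure storage account name: '$AccountName'"
    }
    # Only the name format is checked here; the key is passed through unchanged
    @{
        CloudWitness = $true
        AccountName  = $AccountName
        AccessKey    = $AccessKey
    }
}
```

Usage would look like $quorumArgs = New-CloudWitnessQuorumArgs -AccountName cwitness01 -AccessKey $key, followed by Set-ClusterQuorum @quorumArgs. The account name cwitness01 is only an example.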

Workgroup and Multi-Domain Clusters

In Windows Server 2012 R2 and earlier, a global requirement must be met before creating a cluster: the nodes must be members of the same domain. The Active Directory-detached cluster introduced in 2012 R2 has the same requirement and does not substantially relax it.

In Windows Server 2016, you can create a cluster without binding it to AD: within a workgroup or across nodes that are members of different domains. The process is similar to creating a detached cluster in 2012 R2, but it has some specifics:

- Supported only when all nodes run WS2016.

- Requires the Failover Clustering feature.

Install-WindowsFeature Failover-Clustering -IncludeManagementTools

- On each node, create a user that is a member of the Administrators group, or use the built-in Administrator account. The user name and password must be identical on all nodes.

net localgroup administrators cluadm /add

If the “Requested registry access is not allowed” error occurs, set the LocalAccountTokenFilterPolicy value to 1:

New-ItemProperty -Path HKLM:\SOFTWARE\Microsoft\Windows\CurrentVersion\Policies\System -Name LocalAccountTokenFilterPolicy -Value 1 -PropertyType DWord

- A primary DNS suffix must be defined on the hosts.

- Cluster creation is supported both through PowerShell and through the GUI.

New-Cluster -Name WGCL -Node rtm-1,rtm-2 -AdministrativeAccessPoint DNS -StaticAddress 10.0.0.100

- As a witness, you can use only a Disk Witness or the Cloud Witness described earlier. File Share Witness is unfortunately not supported.
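The workgroup prerequisites are easy to get subtly wrong on one node out of several. Below is a minimal sketch of a checker. It assumes you have already collected, for each node, the local admin account name, the primary DNS suffix and the LocalAccountTokenFilterPolicy value (for example, from the registry key mentioned above) into hashtables. The function name and the hashtable keys are hypothetical, and password equality cannot be checked this way.

```powershell
# Hypothetical checker for the workgroup cluster prerequisites.
function Get-WorkgroupClusterPrereqIssue {
    param(
        # One hashtable per node: Name, AdminAccount, PrimaryDnsSuffix,
        # LocalAccountTokenFilterPolicy (values collected by you beforehand)
        [Parameter(Mandatory)][hashtable[]]$Nodes
    )
    $issues = [System.Collections.Generic.List[string]]::new()

    # The same local administrator name must exist on every node
    # (password equality cannot be verified from collected data)
    $accounts = @($Nodes | ForEach-Object { $_.AdminAccount } | Sort-Object -Unique)
    if ($accounts.Count -ne 1) {
        $issues.Add("Admin account names differ: $($accounts -join ', ')")
    }

    foreach ($node in $Nodes) {
        if (-not $node.PrimaryDnsSuffix) {
            $issues.Add("$($node.Name): primary DNS suffix is not set")
        }
        if ($node.LocalAccountTokenFilterPolicy -ne 1) {
            $issues.Add("$($node.Name): LocalAccountTokenFilterPolicy is not 1")
        }
    }
    $issues.ToArray()
}
```

An empty result means that none of the modeled prerequisites is violated. Each returned string names the node and the setting that still needs attention.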

Supported use cases:

| Role | Support status | Comment |
|---|---|---|
| SQL Server | Supported | SQL Server Authentication is recommended |
| File server | Supported but not recommended | No Kerberos authentication, which is the primary authentication protocol for SMB |
| Hyper-V | Supported but not recommended | Only Quick Migration is available; Live Migration is not supported |
| Message Queuing (MSMQ) | Not supported | MSMQ requires AD DS |

Virtual Machine Load Balancing / Node Fairness

Dynamic Optimization, available in VMM, has partially moved into Windows Server 2016 and provides basic automatic load balancing across nodes. Resources are moved with Live Migration, and every 30 minutes the cluster decides whether to rebalance based on the following heuristics:

- Current memory usage (%) on the node.

- Average CPU load over a 5-minute interval.

The maximum allowed load values are determined by the AutoBalancerLevel value:

get-cluster | fl *autobalancer*

AutoBalancerMode  : 2
AutoBalancerLevel : 1

| AutoBalancerLevel | Balancing aggressiveness | Comment |
|---|---|---|
| 1 (default) | Low | Balance when a node's load exceeds 80% on either heuristic |
| 2 | Medium | When load exceeds 70% |
| 3 | High | When load exceeds 60% |

The balancer parameters can also be set in the GUI (cluadmin.msc). By default, the Low aggressiveness level and the always-balance mode (AutoBalancerMode 2: on node join and every 30 minutes) are used.
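The decision rule in the table can be written as a small function. This sketch models only the threshold comparison described above: current memory usage or the 5-minute CPU average above the level's threshold. It does not model which VMs the cluster then moves or where they go. The function names are hypothetical.

```powershell
# Hypothetical model of the AutoBalancerLevel thresholds.
function Get-AutoBalancerThreshold {
    param([ValidateRange(1, 3)][int]$Level = 1)
    # 1 = Low (80%), 2 = Medium (70%), 3 = High (60%)
    switch ($Level) {
        1 { 80 }
        2 { 70 }
        3 { 60 }
    }
}

function Test-NodeNeedsBalancing {
    param(
        [Parameter(Mandatory)][double]$MemoryPercent,         # current memory usage on the node
        [Parameter(Mandatory)][double]$CpuAverage5MinPercent, # CPU average over 5 minutes
        [ValidateRange(1, 3)][int]$Level = 1
    )
    $threshold = Get-AutoBalancerThreshold -Level $Level
    # Exceeding the threshold on either heuristic makes the node a candidate
    ($MemoryPercent -gt $threshold) -or ($CpuAverage5MinPercent -gt $threshold)
}
```

With the Medium level used in the test below, a node at 77% memory is a candidate. At the default Low level (80%), the same node is left alone.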

For testing, I use the following parameters:

AutoBalancerLevel: 2

(Get-Cluster).AutoBalancerLevel = 2

AutoBalancerMode: 2

(Get-Cluster).AutoBalancerMode = 2

First, we simulate a CPU load (about 88%) and then a RAM load (77%). Because the medium aggressiveness level was set and both load values exceed its threshold (70%), virtual machines on the loaded node should move to a free node. The script waits for the live migration and reports the elapsed time (from the moment the load on the node started until the VM was migrated).

Under the heavy CPU load, the balancer moved more than one VM; under the RAM load, it moved one VM. In both cases this happened within the designated 30-minute interval, during which the node load was checked and VMs were moved to other nodes to bring resource utilization down to 70% or less.

When VMM is used, the built-in node balancing is automatically disabled and replaced by the recommended Dynamic Optimization mechanism, which lets you further configure the optimization mode and interval.

Virtual machine start ordering

In 2012 R2, the VM start logic within a cluster is based on priorities (low, medium, high), whose purpose is to ensure that the more important VMs are started and available before the remaining "dependent" VMs start. This is usually required for multi-tier services built, for example, on Active Directory, SQL Server and IIS.

To make this more capable and efficient, Windows Server 2016 adds the ability to define dependencies between VMs or groups of VMs, so that they start in the correct order, using sets of cluster groups. Sets are mainly intended for VMs but can also be used for other cluster roles.

Consider the following scenario:

VM Clu-VM02 hosts an application that depends on Active Directory running on VM Clu-VM01. VM Clu-VM03, in turn, depends on the application on VM Clu-VM02.

Create the sets using PowerShell:

Active Directory VM:

PS C:\> New-ClusterGroupSet -Name AD -Group Clu-VM01

Name                : AD
GroupNames          : {Clu-VM01}
ProviderNames       : {}
StartupDelayTrigger : Delay
StartupCount        : 4294967295
IsGlobal            : False
StartupDelay        : 20

Application:

New-ClusterGroupSet -Name Application -Group Clu-VM02

Application-dependent service:

New-ClusterGroupSet -Name SubApp -Group Clu-VM03

Add dependencies between sets:

Add-ClusterGroupSetDependency -Name Application -Provider AD

Add-ClusterGroupSetDependency -Name SubApp -Provider Application

If necessary, you can change the set parameters with Set-ClusterGroupSet. For example:

Set-ClusterGroupSet -Name Application -StartupDelayTrigger Delay -StartupDelay 30

StartupDelayTrigger defines what must happen after a group starts before the sets that depend on it are started:

- Delay (default) - wait for the time specified in StartupDelay.

- Online - wait until the groups in the set are online.

StartupDelay - the delay in seconds; 20 seconds by default.

IsGlobal - determines whether the set must start before all other sets of cluster groups (for example, a set with Active Directory VMs should be global and therefore start before the other sets).

Let's try to start VM Clu-VM03:

First, Clu-VM01 with Active Directory is started, and the cluster waits for it (StartupDelayTrigger - Delay, StartupDelay - 20 seconds).

Once Active Directory is up, the dependent application on Clu-VM02 starts (its StartupDelay applies at this stage).

The last step is starting VM Clu-VM03 itself.
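The order in which the sets come up follows from their provider dependencies: a set can start once every set it depends on has started. This sketch computes that ordering as a topological sort (a level-by-level variant of Kahn's algorithm). It assumes the dependencies are given as a hashtable that maps each set name to its provider set names. It computes only the order and ignores StartupDelay, StartupDelayTrigger and IsGlobal. The function name is hypothetical.

```powershell
# Hypothetical model of set start ordering: a level-by-level variant of
# Kahn's topological sort over the provider dependencies.
function Get-GroupSetStartOrder {
    param(
        # Set name -> provider set names it waits for (every provider must also be a key)
        [Parameter(Mandatory)][hashtable]$Dependencies
    )
    $remaining = @{}
    foreach ($name in $Dependencies.Keys) {
        # Drop $null/empty entries so a set without providers has an empty list
        $remaining[$name] = @($Dependencies[$name] | Where-Object { $_ })
    }
    $order = [System.Collections.Generic.List[string]]::new()
    while ($remaining.Count -gt 0) {
        # Sets whose providers have all been started already
        $ready = @($remaining.Keys | Where-Object {
            $providers = $remaining[$_]
            @($providers | Where-Object { $order -notcontains $_ }).Count -eq 0
        } | Sort-Object)
        if ($ready.Count -eq 0) {
            throw 'Circular or missing dependency between group sets'
        }
        foreach ($name in $ready) {
            $order.Add($name)
            $remaining.Remove($name)
        }
    }
    $order.ToArray()
}
```

For the scenario above, the input @{ AD = @(); Application = @('AD'); SubApp = @('Application') } yields AD, Application, SubApp, which matches the start sequence that was observed.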

VM Compute / Storage Resiliency

Windows Server 2016 introduces new node and VM operating modes that make them more resilient when communication between cluster nodes is problematic. They also keep resources from becoming completely unavailable by reacting to "small" problems before bigger ones occur (proactive action).

Isolated mode

Suppose the cluster service on HV01 suddenly becomes unavailable, i.e. the node has intra-cluster communication problems. In this scenario, the node is placed in the Isolated state (governed by the ResiliencyLevel setting) and is temporarily removed from the cluster.

Virtual machines on the isolated node keep running* and become Unmonitored (that is, the cluster service no longer "looks after" these VMs).

* When the VMs are stored on SMB, they stay Online and keep running correctly (SMB does not require a "cluster identity" for access). With block storage, the VMs go to Paused Critical, because Cluster Shared Volumes are unavailable to an isolated node.

If the cluster service on the node does not recover within ResiliencyDefaultPeriod (240 seconds by default), the node is moved to the Down state.

Quarantined mode

Now suppose the cluster service on HV01 recovered and the node left Isolated mode, but within an hour the situation recurred 3 or more times (QuarantineThreshold). In this case, WSFC places the node in Quarantined mode for 2 hours by default (QuarantineDuration) and moves its VMs to a node known to be healthy.

If we are sure the source of the problems has been eliminated, we can bring the node back into the cluster before the quarantine expires:

Start-ClusterNode -Name HV01 -ClearQuarantine

Note that no more than 25% of the cluster nodes can be quarantined at the same time.
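The quarantine trigger amounts to counting isolation events in a sliding one-hour window. This sketch models that rule, with QuarantineThreshold defaulting to 3 as in the text. The function name is hypothetical, you supply the timestamps, and the real cluster service logic (including the 25% cap) is not reproduced.

```powershell
# Hypothetical model of the quarantine trigger: QuarantineThreshold isolation
# events within a sliding one-hour window.
function Test-QuarantineCandidate {
    param(
        # Times at which the node entered the Isolated state
        [Parameter(Mandatory)][datetime[]]$IsolationEvents,
        [datetime]$Now = (Get-Date),
        [int]$QuarantineThreshold = 3,
        [timespan]$Window = (New-TimeSpan -Hours 1)
    )
    $windowStart = $Now - $Window
    # Count only the events that fall inside the window
    $recent = @($IsolationEvents | Where-Object { $_ -gt $windowStart -and $_ -le $Now })
    $recent.Count -ge $QuarantineThreshold
}
```

Three isolations within the last 50 minutes make the node a candidate. If one of the three happened three hours ago, it does not count toward the threshold.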

To customize this behavior, use the parameters above with the Get-Cluster cmdlet. For example, to shorten the quarantine to 1800 seconds (30 minutes):

(Get-Cluster).QuarantineDuration = 1800

Storage Resiliency

In previous versions of Windows Server, the handling of lost read/write access to a virtual disk (loss of connection to the storage) is primitive: the VMs are turned off, and a cold boot is required at the next start. In Windows Server 2016, when such problems occur, the VM switches to the Paused-Critical state (AutomaticCriticalErrorAction), first "freezing" its running state (the VM remains unavailable, but there is no unexpected shutdown).

If the connection is restored within the timeout (AutomaticCriticalErrorActionTimeout, 30 minutes by default), the VM leaves Paused-Critical and continues from the "point" at which the problem was detected (like pause / play).

If the timeout expires before the storage comes back, the VM is turned off (turn off action).
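The storage resiliency timeline reduces to comparing the outage length with AutomaticCriticalErrorActionTimeout. This sketch assumes AutomaticCriticalErrorAction is set to Pause, which is the behavior described above. The function name and its return strings are hypothetical.

```powershell
# Hypothetical model, assuming AutomaticCriticalErrorAction = Pause.
function Get-StorageOutageOutcome {
    param(
        # How long the VM's storage stays unreachable
        [Parameter(Mandatory)][timespan]$OutageDuration,
        # AutomaticCriticalErrorActionTimeout, in minutes (30 by default)
        [ValidateRange(1, 2147483647)][int]$TimeoutMinutes = 30
    )
    # The VM sits in Paused-Critical until storage returns or the timeout expires
    if ($OutageDuration -lt [timespan]::FromMinutes($TimeoutMinutes)) {
        'Resumed'    # continues from the point where it was frozen
    } else {
        'TurnedOff'  # timeout reached: the VM is turned off
    }
}
```

A 10-minute outage with the default 30-minute timeout ends in 'Resumed', and a 45-minute outage ends in 'TurnedOff'. The per-VM timeout itself is exposed as AutomaticCriticalErrorActionTimeout (in minutes) on Set-VM.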

Site-Aware / Stretched Clusters and Storage Replica

This topic deserves a separate post, but let's take a brief look at it now.

Previously, building full-fledged stretched clusters with SAN-to-SAN replication meant relying on expensive third-party solutions. With Windows Server 2016, it becomes realistic to cut the budget several times over and to build such systems on a more unified stack.

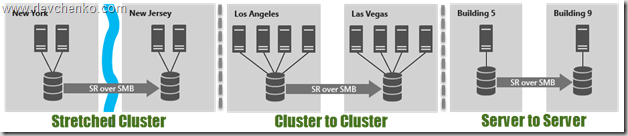

Storage Replica enables synchronous (!) and asynchronous replication between any storage systems (including Storage Spaces Direct) and supports any workload, which makes it the foundation for multi-site clusters or a full-fledged DR solution. SR is available only in the Datacenter edition and can be used in several scenarios: stretched clusters, cluster-to-cluster and server-to-server replication.

Using SR within a stretched cluster is especially attractive thanks to automatic failover and tight integration with site awareness, which was also introduced in Windows Server 2016. Site awareness lets you define groups of cluster nodes and bind them to a physical location (site fault domain / site). You can then build custom failover policies, Storage Spaces Direct data placement and VM distribution logic on top of these groups. You can also bind nodes not only at the site level but also at lower levels (node, rack, chassis).

New-ClusterFaultDomain -Name Voronezh -Type Site -Description "Primary" -Location "Voronezh DC"

New-ClusterFaultDomain -Name Voronezh2 -Type Site -Description "Secondary" -Location "Voronezh DC2"

New-ClusterFaultDomain -Name Rack1 -Type Rack

New-ClusterFaultDomain -Name Rack2 -Type Rack

New-ClusterFaultDomain -Name HPc7000 -Type Chassis

New-ClusterFaultDomain -Name HPc3000 -Type Chassis

Set-ClusterFaultDomain -Name HV01 -Parent Rack1

Set-ClusterFaultDomain -Name HV02 -Parent Rack2

Set-ClusterFaultDomain Rack1,HPc7000 -Parent Voronezh

Set-ClusterFaultDomain Rack2,HPc3000 -Parent Voronezh2
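With a multi-level hierarchy, it is handy to see which site a node ultimately belongs to. This sketch walks up the parent chain. It assumes the hierarchy is given as two hashtables (element to parent, element to type) that mirror the commands above. The function name is hypothetical. On a real cluster, you could build these maps from Get-ClusterFaultDomain output, assuming it exposes Name, Type and ParentName.

```powershell
# Hypothetical helper: walk up the fault domain hierarchy to the owning site.
function Resolve-FaultDomainSite {
    param(
        [Parameter(Mandatory)][string]$Name,
        # Element name -> parent element name (mirrors Set-ClusterFaultDomain -Parent)
        [Parameter(Mandatory)][hashtable]$ParentOf,
        # Element name -> type (Site, Rack, Chassis, Node)
        [Parameter(Mandatory)][hashtable]$TypeOf
    )
    $visited = @{}
    $current = $Name
    while ($current) {
        if ($TypeOf[$current] -eq 'Site') { return $current }
        # Guard against a misconfigured hierarchy that loops back on itself
        if ($visited.ContainsKey($current)) { throw "Loop in fault domain hierarchy at '$current'" }
        $visited[$current] = $true
        $current = $ParentOf[$current]
    }
    return $null  # no site above this element
}
```

With the hierarchy configured above, HV01 resolves to Voronezh (via Rack1) and HV02 resolves to Voronezh2 (via Rack2).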

Within a multi-site cluster, this approach has the following advantages:

- Failover is first attempted between nodes within the same fault domain. Only if all nodes in that fault domain are unavailable does it move to another one.

- Draining roles (role migration during maintenance, etc.) first tries to move roles to nodes within the local site and only then to another site.

- CSV balancing (redistribution of cluster disks between nodes) also tries to stay within the local fault domain / site.

- VMs try to stay in the same site as the CSVs they depend on. If the CSVs move to another site, the VMs start migrating to that site after 1 minute.

Additionally, site awareness lets you set the preferred ("parent") site for all newly created VMs / roles:

(Get-Cluster).PreferredSite = <site name>

Or configure it more granularly for each cluster group:

(Get-ClusterGroup -Name <VM name>).PreferredSite = <preferred site name>

Other innovations

- Support for Storage Spaces Direct and Storage QoS.

- Resizing of shared VHDX for guest clusters without downtime, plus support for Hyper-V Replica and host-level backup.

- Improved performance and scaling of the CSV cache, with support for tiered spaces, Storage Spaces Direct and deduplication (allocating dozens of GB of RAM to the cache is no problem).

- Changes in cluster logging (time zone information, etc.), plus active memory dump (a new alternative to a full memory dump) to simplify troubleshooting.

- The cluster can now use several network interfaces in the same subnet. You no longer need to configure different subnets on the adapters for the cluster to identify them; they are added automatically.

This concludes our overview of the new WSFC features in Windows Server 2016. I hope the material is useful. Thanks for reading, and I look forward to your comments.

Have a great day!