Covering a project with smoke tests before it burns down

Hello, Habr! Once, at an internal seminar, my manager, the head of the testing department, opened his talk with the words "testing is not necessary." The room went quiet, and some people nearly fell off their chairs. He went on: it is quite possible to build a complex and expensive project without testing. And, most likely, it will work. But imagine how much more confident you will feel knowing that the product works as it should.

Badoo releases quite often. For example, the server side, together with the desktop web, is released twice a day. So we know firsthand that complex and slow testing is a stumbling block for development. Fast testing is happiness. So today I will talk about how smoke testing is organized at Badoo.

What is smoke testing

This term was first used by stove-makers who, having assembled a stove, closed all the dampers, lit it, and checked that smoke came out only from the intended places. (Wikipedia)

In its original sense, smoke testing checks the simplest and most obvious cases, the ones without which any other kind of testing would be a waste of effort.

Let's look at a simple example. The pre-production environment of our application lives at bryak.com (any resemblance to real sites is coincidental). We have prepared a new release and uploaded it there for testing. What is worth checking first? I would start by checking that the application still opens. If the web server responds with "200", everything is fine and you can begin checking the functionality.

How can such a check be automated? In principle, you can write a functional test that launches a browser, opens the desired page, and makes sure that it is displayed as it should be. However, this solution has several disadvantages. First, it is slow: launching the browser takes longer than the check itself. Second, it requires extra infrastructure: for the sake of such a simple test, we would have to keep a server with browsers somewhere. Conclusion: the problem has to be solved differently.

Our first smoke test

At Badoo, the server side is written mostly in PHP. Unit tests, for obvious reasons, are written in it too, so we already have PHPUnit. To avoid multiplying technologies unnecessarily, we decided to write smoke tests in PHP as well. In addition to PHPUnit, we need a client library for working with URLs (libcurl) and the PHP extension that wraps it, cURL.

In essence, the tests simply send the requests we need to the server and check the responses. Everything is built around the getCurlResponse() method and several kinds of assertions.

The method itself looks something like this:

    public function getCurlResponse(
        $url,
        array $params = [
            'cookies' => [],
            'post_data' => [],
            'headers' => [],
            'user_agent' => '',
            'proxy' => '',
        ],
        $follow_location = true,
        $expected_response = '200 OK'
    )
    {
        $ch = curl_init();
        curl_setopt($ch, CURLOPT_URL, $url);
        curl_setopt($ch, CURLOPT_HEADER, 1);         // keep response headers in the output
        curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1); // return the response instead of printing it

        if (isset($params['cookies']) && $params['cookies']) {
            $cookie_line = $this->prepareCookiesDataByArray($params['cookies']);
            curl_setopt($ch, CURLOPT_COOKIE, $cookie_line);
        }

        if (isset($params['headers']) && $params['headers']) {
            curl_setopt($ch, CURLOPT_HTTPHEADER, $params['headers']);
        }

        if (isset($params['post_data']) && $params['post_data']) {
            $post_line = $this->preparePostDataByArray($params['post_data']);
            curl_setopt($ch, CURLOPT_POST, 1);
            curl_setopt($ch, CURLOPT_POSTFIELDS, $post_line);
        }

        if ($follow_location) {
            curl_setopt($ch, CURLOPT_FOLLOWLOCATION, 1);
        }

        if (isset($params['proxy']) && $params['proxy']) {
            curl_setopt($ch, CURLOPT_PROXY, $params['proxy']);
        }

        if (isset($params['user_agent']) && $params['user_agent']) {
            $user_agent = $params['user_agent'];
        } else {
            $user_agent = USER_AGENT_DEFAULT;
        }
        curl_setopt($ch, CURLOPT_USERAGENT, $user_agent);
        curl_setopt($ch, CURLOPT_AUTOREFERER, 1);

        $response = curl_exec($ch);

        // Log the request so the chain of events can be reproduced later.
        $this->logActionToDB($url, $user_agent, $params);

        // The response code is checked only when redirects are followed.
        if ($follow_location) {
            $this->assertTrue(
                (bool)$response,
                'Empty response was received. Curl error: ' . curl_error($ch) . ', errno: ' . curl_errno($ch)
            );
            $this->assertServerResponseCode($response, $expected_response);
        }

        curl_close($ch);

        return $response;
    }

The method returns the server's response for a given URL. As input it accepts cookies, headers, a user agent, and other data needed to build the request. When the response arrives, the method checks that the response code matches the expected one. If it does not, the test fails with an error saying so. This makes the cause of a failure easier to identify: if the test failed on some later assert telling us that an element is missing from the page, the error would be less informative than a message that the response code is, for example, "404" instead of the expected "200".
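The helpers that getCurlResponse() relies on are not shown in the article. Below is a minimal sketch of what they might do, written as standalone functions. The names buildCookieLine, buildPostLine, and lastStatusLine are hypothetical stand-ins for prepareCookiesDataByArray(), preparePostDataByArray(), and the parsing step inside assertServerResponseCode(); they are assumptions, not Badoo's actual code.

```php
<?php
// Hypothetical stand-in for prepareCookiesDataByArray():
// ['a' => '1', 'b' => '2'] becomes "a=1; b=2", the format CURLOPT_COOKIE expects.
function buildCookieLine(array $cookies): string
{
    $pairs = [];
    foreach ($cookies as $name => $value) {
        $pairs[] = $name . '=' . $value;
    }
    return implode('; ', $pairs);
}

// Hypothetical stand-in for preparePostDataByArray():
// URL-encodes the fields into one application/x-www-form-urlencoded string.
function buildPostLine(array $post_data): string
{
    return http_build_query($post_data, '', '&');
}

// With CURLOPT_HEADER and CURLOPT_FOLLOWLOCATION enabled, the response may contain
// one header block per redirect hop; the last status line belongs to the final page.
// Limitation of this sketch: a body line that itself starts with "HTTP/" would confuse it.
function lastStatusLine(string $response): ?string
{
    if (!preg_match_all('#^HTTP/\d(?:\.\d)?\s+(\d{3}[^\r\n]*)#m', $response, $matches)) {
        return null;
    }
    return trim(end($matches[1]));
}
```

Inside a test, the value returned by lastStatusLine() could then be compared with $expected_response using a regular PHPUnit assertion.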

Once the request has been sent and the response received, we log the request so that, if the test fails, the chain of events is easy to reproduce later. I will come back to this below.

The simplest test looks something like this:

    public function testStartPage()
    {
        $url = 'bryak.com';
        $response = $this->getCurlResponse($url);
        $this->assertHTMLPresent('<body>', $response, 'Error: test cannot find body element on the page.');
    }

Such a test passes in less than a second. In that time we have checked that the start page responds with "200" and that it contains a body element. We can check any number of elements on the page in the same way, and the duration of the test will not change significantly.
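The article does not show assertHTMLPresent() itself. A minimal sketch of the check it might perform is a substring search in the body of the response. The function name htmlPresent is hypothetical, and the sketch assumes the response was fetched with CURLOPT_HEADER, so headers come before the body.

```php
<?php
// Hypothetical helper: returns true if $needle occurs in the body of $response.
function htmlPresent(string $needle, string $response): bool
{
    // Headers end at the first blank line. If redirects were followed, later header
    // blocks stay in the remainder together with the final body, which is fine here.
    $parts = preg_split('/\r?\n\r?\n/', $response, 2);
    $body = count($parts) === 2 ? $parts[1] : $response;

    // An empty needle is treated as "not found" so that a forgotten argument fails loudly.
    return $needle !== '' && stripos($body, $needle) !== false;
}
```

In a PHPUnit test this could be wrapped as `$this->assertTrue(htmlPresent($needle, $response), $message)`.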

Advantages of such tests:

- they are fast, so they can be run as often as necessary, for example on every code change;

- they do not require special software or hardware;

- they are easy to write and maintain;

- they are stable.

A note on the last point: I mean that they are no less stable than the project itself.

Login

Imagine that three days have passed since we wrote our first smoke test. Of course, during this time we have covered with tests every page we could find that does not require authorization. We sat for a while and rejoiced, but then realized that all the most important things in our project are behind authorization. How do we get to test those too?

What is the difference between an authorized page and an unauthorized one? From the server's point of view, everything is simple: if the request contains information that identifies the user, the server returns the authorized page.

The simplest option is an authorization cookie. If you add it to the request, the server "recognizes" us. Such a cookie can be hardcoded in the test if its lifetime is long enough, or it can be obtained automatically by sending requests to the authorization page. Let's take a closer look at the second option.

On our site, the authorization page looks like this:

We are interested in the form where you need to enter the username and password of the user.

We open this page in any browser and open the inspector. Then we enter the user's credentials and submit the form.

A request appears in the inspector, and this is the request we need to simulate in the test. You can see what data, besides the obvious login and password, is sent to the server. It differs from project to project: it can be a remote token, data from cookies received earlier, a user agent, and so on. Each of these parameters has to be obtained in the test before the authorization request is built.

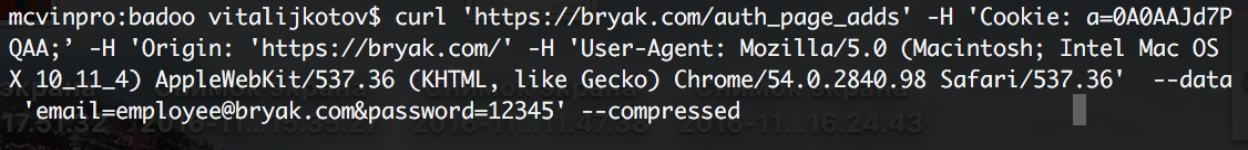

In the developer tools of any browser, you can copy the request by choosing "Copy as cURL". In this form, the command can be pasted into a terminal and examined there. You can also experiment with it by changing or adding parameters.

In response to such a request, the server will return cookies to us, which we will add to further requests in order to test authorized pages.

Since authorization is a rather slow process, I suggest getting the authorization cookie only once per user and saving it somewhere. We, for example, store such cookies in an array. The key is the user's login, and the value is that user's cookies. If there is no key for a given user yet, we authorize. If there is, we send the request we are interested in right away.

An example of a test code checking an authorized page looks something like this:

    public function testAuthPage()
    {
        $url = 'bryak.com';
        $cookies = $this->getAuthCookies('employee@bryak.com', '12345');
        $response = $this->getCurlResponse($url, ['cookies' => $cookies]);
        $this->assertHTMLPresent('<body>', $response, 'Error: test cannot find body element on the page.');
    }

As we can see, the test now calls a method that obtains an authorization cookie and simply adds it to the next request. The method itself is implemented quite simply:

    public function getAuthCookies($email, $password)
    {
        // check whether the cookie has already been obtained
        if (array_key_exists($email, self::$known_cookies)) {
            return self::$known_cookies[$email];
        }

        $url = self::DOMAIN_STAGING . '/auth_page_adds';
        $post_data = ['email' => $email, 'password' => $password];
        $response = $this->getCurlResponse($url, ['post_data' => $post_data]);
        $cookies = $this->parseCookiesFromResponse($response);

        // save the cookie for further use
        self::$known_cookies[$email] = $cookies;

        return $cookies;
    }

The method first checks whether an authorization cookie has already been obtained for the given e-mail (in your case it may be a login or something else). If so, it returns that cookie. If not, it sends a request to the authorization page (for example, bryak.com/auth_page_adds) with the necessary parameters: the user's e-mail and password. In response to this request, the server sends headers, among which are the cookies we are interested in. It looks something like this:

    HTTP/1.1 200 OK
    Server: nginx
    Content-Type: text/html; charset=utf-8
    Transfer-Encoding: chunked
    Connection: keep-alive
    Set-Cookie: name=value; expires=Wed, 30-Nov-2016 10:06:24 GMT; Max-Age=-86400; path=/; domain=bryak.com

Using a simple regular expression, we need to extract the cookie name and its value (in our example, name=value) from these headers. Our method that parses the response looks like this:

    $this->assertTrue(
        (bool)preg_match_all('/Set-Cookie: (([^=]+)=([^;]+);.*)\n/', $response, $mch1),
        'Cannot get "cookies" from server response. Response: ' . $response
    );

Once the cookies are received, we can safely add them to any request to make it authorized.
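The fragment above only asserts that at least one cookie was found. As an illustration, the remaining step, turning the matches into an array that can be passed as the 'cookies' parameter, might look like the sketch below. The function name parseCookies is hypothetical, and the regular expression is a slightly more tolerant variant of the one in the article (it also accepts a cookie with no attributes after the value), not the original.

```php
<?php
// Hypothetical completion of parseCookiesFromResponse(): collects every
// Set-Cookie header into a name => value array. Attributes such as
// expires, path, and domain are ignored in this sketch.
function parseCookies(string $response): array
{
    $cookies = [];
    $pattern = '/^Set-Cookie:\s*([^=\s]+)=([^;\r\n]*)/mi';
    if (preg_match_all($pattern, $response, $matches, PREG_SET_ORDER)) {
        foreach ($matches as $match) {
            // $match[1] is the cookie name, $match[2] is its value.
            $cookies[$match[1]] = $match[2];
        }
    }
    return $cookies;
}
```

If the same cookie name is set more than once, the last value wins, which matches how a browser would end up storing it.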

Analyzing failed tests

It follows from the above that such a test is a sequence of requests to the server. We send a request, process the response, send the next request, and so on. A thought creeps in: if such a test fails on the tenth request, it may not be easy to figure out why. How can we make life easier?

First of all, I would advise making tests as atomic as possible. You should not check 50 different cases in one test. The simpler the test, the easier it will be to deal with later.

It is also useful to collect artifacts. When one of our tests fails, it saves the last server response to an HTML file and puts it into the artifact storage, where the file can be opened in a browser by specifying the name of the test.
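A minimal sketch of such artifact saving, assuming a plain directory on disk plays the role of the artifact storage. The function name saveArtifact and the file naming scheme are hypothetical.

```php
<?php
// Hypothetical helper: writes the last server response to "<dir>/<test name>.html"
// and returns the path, so the file can later be opened in a browser.
function saveArtifact(string $dir, string $test_name, string $response): string
{
    if (!is_dir($dir)) {
        mkdir($dir, 0777, true);
    }
    // Keep only characters that are safe in a file name.
    $file_name = preg_replace('/[^A-Za-z0-9_.-]/', '_', $test_name) . '.html';
    $path = rtrim($dir, '/') . '/' . $file_name;
    file_put_contents($path, $response);
    return $path;
}
```

One option is to call it from PHPUnit's onNotSuccessfulTest() hook, passing the test name and the last response that the test stored in a property.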

For example, our test failed because it could not find a piece of HTML on the page.

We go to our collector and open the corresponding page. You can work with this page in the same way as with any other HTML page in the browser. You can use a CSS locator to try to find the missing element and, if it really is not there, conclude that it has either changed or disappeared. Maybe we have found a bug! If the element is in place, we may have made a mistake somewhere in the test, and that is where we should look carefully.

Logging also makes life easier. We try to log all the requests made by a failed test so that they can easily be repeated. First, this lets you quickly repeat the same actions by hand to reproduce the error. Second, it helps to identify tests that fail often, if there are any.
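One way to make logged requests easy to repeat by hand is to render each of them as a curl command. The sketch below is an assumption about how that could be done, not a description of Badoo's logger. The function name buildCurlCommand is hypothetical, and $params is assumed to use the same keys as getCurlResponse().

```php
<?php
// Hypothetical helper: turns a logged request into a curl command
// that can be pasted into a terminal.
function buildCurlCommand(string $url, string $user_agent, array $params = []): string
{
    // -i prints response headers, -A sets the user agent.
    $parts = ['curl', '-i', '-A', escapeshellarg($user_agent)];

    if (!empty($params['cookies'])) {
        $pairs = [];
        foreach ($params['cookies'] as $name => $value) {
            $pairs[] = $name . '=' . $value;
        }
        $parts[] = '-b ' . escapeshellarg(implode('; ', $pairs)); // cookies
    }
    foreach ($params['headers'] ?? [] as $header) {
        $parts[] = '-H ' . escapeshellarg($header);               // extra headers
    }
    if (!empty($params['post_data'])) {
        $body = http_build_query($params['post_data'], '', '&');
        $parts[] = '-d ' . escapeshellarg($body);                 // POST body
    }
    $parts[] = escapeshellarg($url);

    return implode(' ', $parts);
}
```

Storing this string next to the test name in the log means a failed request can be replayed without opening the test code.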

Besides helping with failure analysis, the logs described above help us build a list of the authorized and unauthorized pages that we have tested. Looking at it, it is easy to spot the gaps and fill them in.

Last but not least, my advice is that tests should be as convenient as possible. The easier they are to run, the more often they will be used. The clearer and more concise the failure report, the more carefully it will be studied. The simpler the architecture, the more tests will be written and the less time it will take to write a new one.

If it seems to you that the tests are inconvenient to use, you are most likely right. This must be dealt with as soon as possible. Otherwise, at some point you risk paying less attention to these tests, and that may let an error slip into production.

Put into words, the idea seems obvious, I agree. But in practice, we all have room for improvement. So simplify and optimize your creations and live without bugs. :)

Summary

At the moment we have (*opens TeamCity*) wow, already 605 tests. All of them, even when not run in parallel, pass in less than four minutes.

In that time, we make sure that:

- our project opens in all languages (of which we have more than 40 in production);

- for the main countries, the correct payment forms are displayed with the appropriate set of payment methods;

- the basic API requests work correctly;

- the landing pages for redirects work correctly (including on the mobile site with the appropriate user agent);

- all internal projects are displayed correctly.

Selenium WebDriver tests for all of this would require many times more time and resources.

Of course, this is not a replacement for Selenium. We still have to check correct client behavior and cross-browser cases. We can replace only those tests that check server behavior. But in addition, we get quick and easy preliminary testing. If the smoke testing stage produced errors and "the smoke is coming out of the wrong place", is it worth running a long set of heavy Selenium tests before the fixes are in? That is up to you! :)

Thank you for your attention.

Vitaliy Kotov, QA Automation Engineer.