Deep Learning: Combining a Deep Convolutional Neural Network with a Recurrent Neural Network

- Transfer

We present to you the final article from the Deep Learning cycle, which reflects the results of the work on training the GNSS for images from certain areas using the example of recognition and tagging of clothing items. You will find the previous parts under the cut.

1. Comparison of frameworks for symbolic deep learning .

2. Transfer learning and fine-tuning of deep convolutional neural networks .

3. The combination of a deep convolutional neural network with a recurrent neural network .

Note: further the narration will be conducted on behalf of the author.

If we talk about the role of clothing in society, the recognition of things can have many fields of application. For example, the work of Liu and other authors on the identification and search for unmarked garments in images will help to recognize the most similar elements in the e-commerce database. Young and Yu look at the recognition of clothing elements from a different perspective: they believe that this information can be used in the context of video surveillance to identify suspected crimes.

In this article, based on the materials of Vang and Vinyasa , we will create an optimized model for classifying clothing items, a brief description of images, and predicting tags for them.

This work was inspired by recent improvements in machine translation, the task of which is to translate individual words or sentences. Now you can translate much faster using recurrent neural networks (RNS), while achieving high speed. The RNC encoder reads the original tag or label and converts it into a fixed-length vector representation, which, in turn, is used as the initial latent state of the RNS decoder that forms the tag or label. We suggest going the same way using a deep convolutional neural network (GNSS) instead of the RNC encoder. In recent years, it has become clear that GNSS networks can create a sufficient representation of the input image by embedding it in a fixed-length vector that can be used for various tasks.

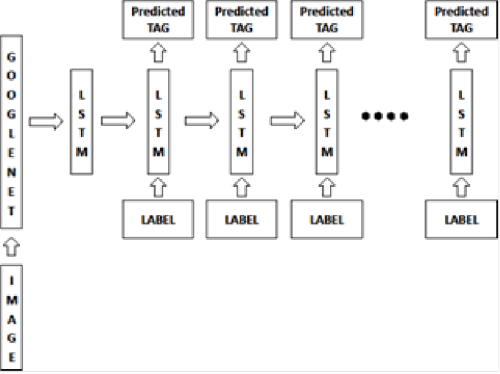

The RNS-SNA model for creating clothing image tags

In this work, the GoogLeNet model pre-trained on ImageNet will be used to extract the SNA components from the DR data set. Then, the SNA functions for training the RNS model with long-term short-term memory for predicting DR tags.

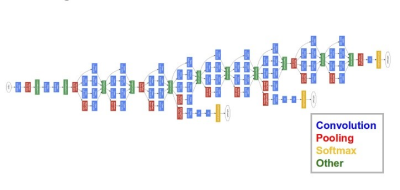

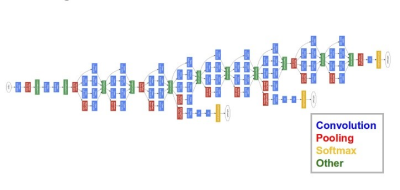

GoogLeNet Architecture

We use the DR data set : a full pipeline for recognizing and classifying people's clothes in natural conditions. This solution can be applied in various fields, for example, in e-commerce, during events, in online advertising and so on. Pipeline consists of several stages: recognition of body parts, various channels and visual attributes. DR defines 15 classes of clothing and introduces a reference set of assessment data for its classification, consisting of more than 80,000 images. We also use the DR dataset to predict clothing tags for images that have not been previously analyzed.

Images from training and test data sets have completely different indicators: resolution, aspect ratio, colors, and so on. For neural networks, we need input data of a fixed size, so we transferred all the images to the format: 3 × 224 × 224.

One of the drawbacks of irregular neural networks is their excessive flexibility: they are equally well trained in recognizing both details of clothing and interference, which increases the likelihood of overfitting. We apply Tikhonov regularization (or L2-regularization) to avoid this. However, even after that there was a significant performance gap between learning and checking DR images, which indicates an overfitting in the fine-tuning process. To eliminate this effect, we use data padding for the DR image dataset.

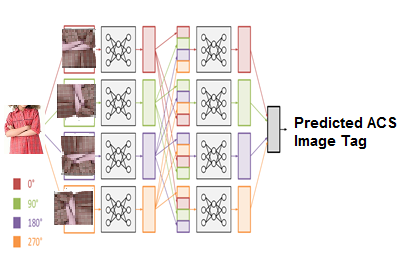

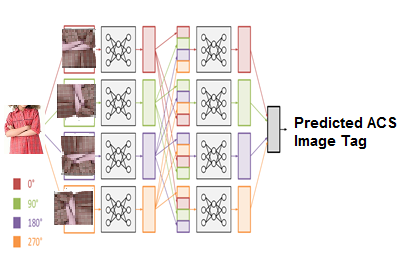

There are many ways to complement the data, for example, flipping horizontally, randomly cropping, changing colors. Since the color information of these images is very important, we only use the rotation of the images at different angles: 0, 90, 180 and 270 degrees.

Augmented Image DR

To deal with the problem of predicting DR tags, we follow the fine-tuning scenario from the previous part . The GoogLeNet model we used was originally trained on the ImageNet dataset. The ImageNet dataset contains about 1 million natural images and 1,000 tags / categories. Our tagged DR dataset contains about 80,000 “clothing” images and 15 tags / categories. The DR dataset is not enough to train such a complex network as GoogLeNet. Therefore, we use weighted data from a GoogLeNet model trained in the ImageNet dataset. We fine-tune all levels of the pre-trained GoogLeNet model through continuous back distribution.

Replacing the input layer of a pre-trained GoogLeNet network with DR images

In this work, we use the RNS model with long-term short-term memory, which has high performance when performing sequential tasks. At the heart of this model is a memory cell that encodes knowledge of the observed input data at each moment in time. The behavior of the cell is controlled by gates, in the role of which layers are used, which are applied multiplicatively. Three shutters are used here:

Recursive neural network with long-term short-term memory.

The memory block contains cell “c”, controlled by three gates. Recursion connections are shown in blue: the output “m” at time (t – 1) is fed back to memory during “t” through three gates; the cell value is fed back through the “forget” shutter; the predicted word at time (t – 1) is fed back in addition to the memory output “m” at time “t” in Softmax to predict the tag.

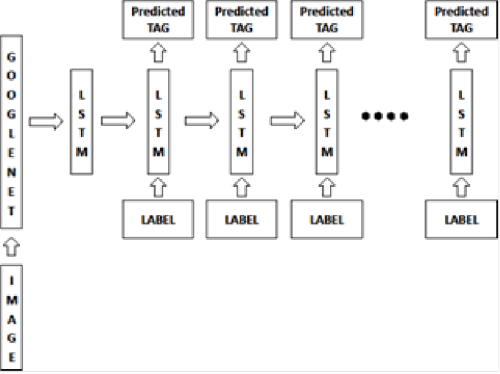

A model with long-term short-term memory is trained to predict the core tags for each image. The functions of the GNSS DR (obtained using GoogLeNet) are used as an input. Then training of the model with long-term short-term memory (LSTM) is performed on the basis of combinations of these GNSS functions and labels for pre-processed images.

A copy of the LSTM memory is created for each LSTM image and for each label so that all LSTMs use the same parameters. The output (m) × (t − 1) of the LSTM at time (t –1) is fed to the LSTM at time (t). All recurring connections are converted to proactive connections in the final release. We have seen that supplying an image at each stage of time as an additional input gives unsatisfactory results, since the network perceives interference in the images and produces an overfitting. Losses are reduced in relation to all LSTM parameters, the upper level of the convolutional neural network for embedding images and embedding tags.

GNSS-RNS architecture for predicting DR tags

We use the model described above to predict DR tags. The accuracy of tag predictions in this model increases rapidly in the first part of iterations and stabilizes after about 20,000 iterations.

Each image in the DR data set consists only of unique types of clothes; there are no images that combine different types of clothes. Despite this, when testing images with several types of clothing, our trained model accurately creates tags for these untreated test images (accuracy was about 80%).

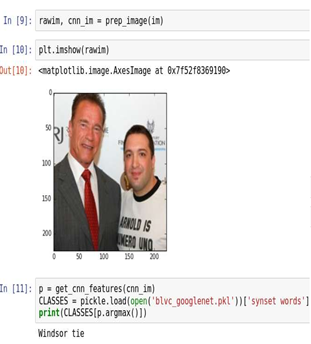

Below is an example of the output of our prediction: in the test image you see a man in a suit and a man in a T-shirt.

Test image not previously analyzed

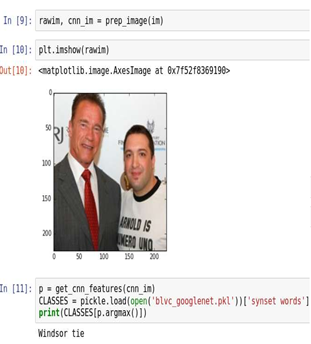

The figure below shows the prediction of GoogLeNet tags using the GoogLeNet model with ImageNet training.

Predicting GoogLeNet tags for a test image using the GoogLeNet model with ImageNet training

As you can see, the prediction for this model is not very accurate, because the test image receives the “Windsor tie” tag. The figure below shows the prediction of DR tags using the RNS-GNSS model described above with long-term short-term memory. As you can see, the prediction for this model is very accurate, because our test image receives the tags "shirt, T-shirt, upper torso, suit."

Predicting GoogLeNet tags for test images using the RNS-GNSS model with long-term short-term memory

Our goal is to develop a model that can predict clothing category tags for clothing images with high accuracy.

In this work, we compare the accuracy of tag prediction of the GoogLeNet model and the RNS-GNSS model with long-term short-term memory. The accuracy of tag prediction of the second model is much higher. A relatively small number of training iterations were used for its training, of the order of 10,000. The accuracy of the predictions of the second model rapidly increases with the number of training iterations and stabilizes after about 20,000 iterations. For this work, we used only one GP.

Thanks to the fine-tuning, you can apply advanced models of GNSS in new areas. In addition, the combined GSNS-RNS model helps to expand the trained model to solve completely different tasks, such as forming tags for clothing images.

Hopefully this series of publications will help you teach GNSS for images from specific areas and reuse existing models.

If you see an inaccuracy in the translation, please report this in private messages.

Series of articles "Deep Learning"

1. Comparison of frameworks for symbolic deep learning .

2. Transfer learning and fine-tuning of deep convolutional neural networks .

3. The combination of a deep convolutional neural network with a recurrent neural network .

Note: further the narration will be conducted on behalf of the author.

Introduction

If we talk about the role of clothing in society, the recognition of things can have many fields of application. For example, the work of Liu and other authors on the identification and search for unmarked garments in images will help to recognize the most similar elements in the e-commerce database. Young and Yu look at the recognition of clothing elements from a different perspective: they believe that this information can be used in the context of video surveillance to identify suspected crimes.

In this article, based on the materials of Vang and Vinyasa , we will create an optimized model for classifying clothing items, a brief description of images, and predicting tags for them.

This work was inspired by recent improvements in machine translation, the task of which is to translate individual words or sentences. Now you can translate much faster using recurrent neural networks (RNS), while achieving high speed. The RNC encoder reads the original tag or label and converts it into a fixed-length vector representation, which, in turn, is used as the initial latent state of the RNS decoder that forms the tag or label. We suggest going the same way using a deep convolutional neural network (GNSS) instead of the RNC encoder. In recent years, it has become clear that GNSS networks can create a sufficient representation of the input image by embedding it in a fixed-length vector that can be used for various tasks.

The RNS-SNA model for creating clothing image tags

In this work, the GoogLeNet model pre-trained on ImageNet will be used to extract the SNA components from the DR data set. Then, the SNA functions for training the RNS model with long-term short-term memory for predicting DR tags.

GoogLeNet Architecture

Data

We use the DR data set : a full pipeline for recognizing and classifying people's clothes in natural conditions. This solution can be applied in various fields, for example, in e-commerce, during events, in online advertising and so on. Pipeline consists of several stages: recognition of body parts, various channels and visual attributes. DR defines 15 classes of clothing and introduces a reference set of assessment data for its classification, consisting of more than 80,000 images. We also use the DR dataset to predict clothing tags for images that have not been previously analyzed.

Images from training and test data sets have completely different indicators: resolution, aspect ratio, colors, and so on. For neural networks, we need input data of a fixed size, so we transferred all the images to the format: 3 × 224 × 224.

One of the drawbacks of irregular neural networks is their excessive flexibility: they are equally well trained in recognizing both details of clothing and interference, which increases the likelihood of overfitting. We apply Tikhonov regularization (or L2-regularization) to avoid this. However, even after that there was a significant performance gap between learning and checking DR images, which indicates an overfitting in the fine-tuning process. To eliminate this effect, we use data padding for the DR image dataset.

There are many ways to complement the data, for example, flipping horizontally, randomly cropping, changing colors. Since the color information of these images is very important, we only use the rotation of the images at different angles: 0, 90, 180 and 270 degrees.

Augmented Image DR

Fine-tuning GoogleNet for DR

To deal with the problem of predicting DR tags, we follow the fine-tuning scenario from the previous part . The GoogLeNet model we used was originally trained on the ImageNet dataset. The ImageNet dataset contains about 1 million natural images and 1,000 tags / categories. Our tagged DR dataset contains about 80,000 “clothing” images and 15 tags / categories. The DR dataset is not enough to train such a complex network as GoogLeNet. Therefore, we use weighted data from a GoogLeNet model trained in the ImageNet dataset. We fine-tune all levels of the pre-trained GoogLeNet model through continuous back distribution.

Replacing the input layer of a pre-trained GoogLeNet network with DR images

RNS training with long-term short-term memory

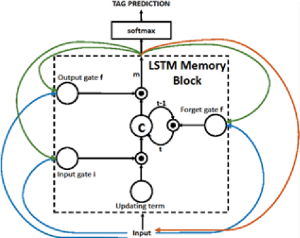

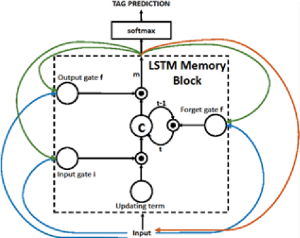

In this work, we use the RNS model with long-term short-term memory, which has high performance when performing sequential tasks. At the heart of this model is a memory cell that encodes knowledge of the observed input data at each moment in time. The behavior of the cell is controlled by gates, in the role of which layers are used, which are applied multiplicatively. Three shutters are used here:

- forget the current value of the cell (forget the shutter f),

- read the input value of the cell (enter gate i),

- display the new value of the cell (display the shutter o).

Recursive neural network with long-term short-term memory.

The memory block contains cell “c”, controlled by three gates. Recursion connections are shown in blue: the output “m” at time (t – 1) is fed back to memory during “t” through three gates; the cell value is fed back through the “forget” shutter; the predicted word at time (t – 1) is fed back in addition to the memory output “m” at time “t” in Softmax to predict the tag.

A model with long-term short-term memory is trained to predict the core tags for each image. The functions of the GNSS DR (obtained using GoogLeNet) are used as an input. Then training of the model with long-term short-term memory (LSTM) is performed on the basis of combinations of these GNSS functions and labels for pre-processed images.

A copy of the LSTM memory is created for each LSTM image and for each label so that all LSTMs use the same parameters. The output (m) × (t − 1) of the LSTM at time (t –1) is fed to the LSTM at time (t). All recurring connections are converted to proactive connections in the final release. We have seen that supplying an image at each stage of time as an additional input gives unsatisfactory results, since the network perceives interference in the images and produces an overfitting. Losses are reduced in relation to all LSTM parameters, the upper level of the convolutional neural network for embedding images and embedding tags.

GNSS-RNS architecture for predicting DR tags

results

We use the model described above to predict DR tags. The accuracy of tag predictions in this model increases rapidly in the first part of iterations and stabilizes after about 20,000 iterations.

Each image in the DR data set consists only of unique types of clothes; there are no images that combine different types of clothes. Despite this, when testing images with several types of clothing, our trained model accurately creates tags for these untreated test images (accuracy was about 80%).

Below is an example of the output of our prediction: in the test image you see a man in a suit and a man in a T-shirt.

Test image not previously analyzed

The figure below shows the prediction of GoogLeNet tags using the GoogLeNet model with ImageNet training.

Predicting GoogLeNet tags for a test image using the GoogLeNet model with ImageNet training

As you can see, the prediction for this model is not very accurate, because the test image receives the “Windsor tie” tag. The figure below shows the prediction of DR tags using the RNS-GNSS model described above with long-term short-term memory. As you can see, the prediction for this model is very accurate, because our test image receives the tags "shirt, T-shirt, upper torso, suit."

Predicting GoogLeNet tags for test images using the RNS-GNSS model with long-term short-term memory

Our goal is to develop a model that can predict clothing category tags for clothing images with high accuracy.

Conclusion

In this work, we compare the accuracy of tag prediction of the GoogLeNet model and the RNS-GNSS model with long-term short-term memory. The accuracy of tag prediction of the second model is much higher. A relatively small number of training iterations were used for its training, of the order of 10,000. The accuracy of the predictions of the second model rapidly increases with the number of training iterations and stabilizes after about 20,000 iterations. For this work, we used only one GP.

Thanks to the fine-tuning, you can apply advanced models of GNSS in new areas. In addition, the combined GSNS-RNS model helps to expand the trained model to solve completely different tasks, such as forming tags for clothing images.

Hopefully this series of publications will help you teach GNSS for images from specific areas and reuse existing models.

If you see an inaccuracy in the translation, please report this in private messages.