Atomic tests and performance tuning

Any software product that is more complicated than “Hello, world!” Needs to be tested is an axiom of development. And the wider its functionality and more complex architecture, the more attention should be paid to testing. Particular care must be taken in the granular measurement of performance. It often happens that in one part they accelerated, and in the other they slowed down, as a result, the result is zero. To prevent this from happening, we very actively use the so-called atomic tests in our work. What is it and what they eat with them, read under the cut.

Any software product that is more complicated than “Hello, world!” Needs to be tested is an axiom of development. And the wider its functionality and more complex architecture, the more attention should be paid to testing. Particular care must be taken in the granular measurement of performance. It often happens that in one part they accelerated, and in the other they slowed down, as a result, the result is zero. To prevent this from happening, we very actively use the so-called atomic tests in our work. What is it and what they eat with them, read under the cut.Background

As Parallels Desktop evolved, it became increasingly difficult for us to test individual functionalities, as well as to find, after the next updates and optimizations, the causes of degraded virtual machine performance. Due to the complexity of the system, it was almost impossible to completely cover it with unit tests. And standard performance measurement packages work on algorithms unknown to us. In addition, they are imprisoned for measurements not in virtual, but in real environments, that is, standard benchmarks are less "sensitive" to the changes made.

As Parallels Desktop evolved, it became increasingly difficult for us to test individual functionalities, as well as to find, after the next updates and optimizations, the causes of degraded virtual machine performance. Due to the complexity of the system, it was almost impossible to completely cover it with unit tests. And standard performance measurement packages work on algorithms unknown to us. In addition, they are imprisoned for measurements not in virtual, but in real environments, that is, standard benchmarks are less "sensitive" to the changes made. We needed a new tool that did not require huge labor costs, as is the case with unit tests, and had better sensitivity and accuracy compared to benchmarks.

Atomic tests

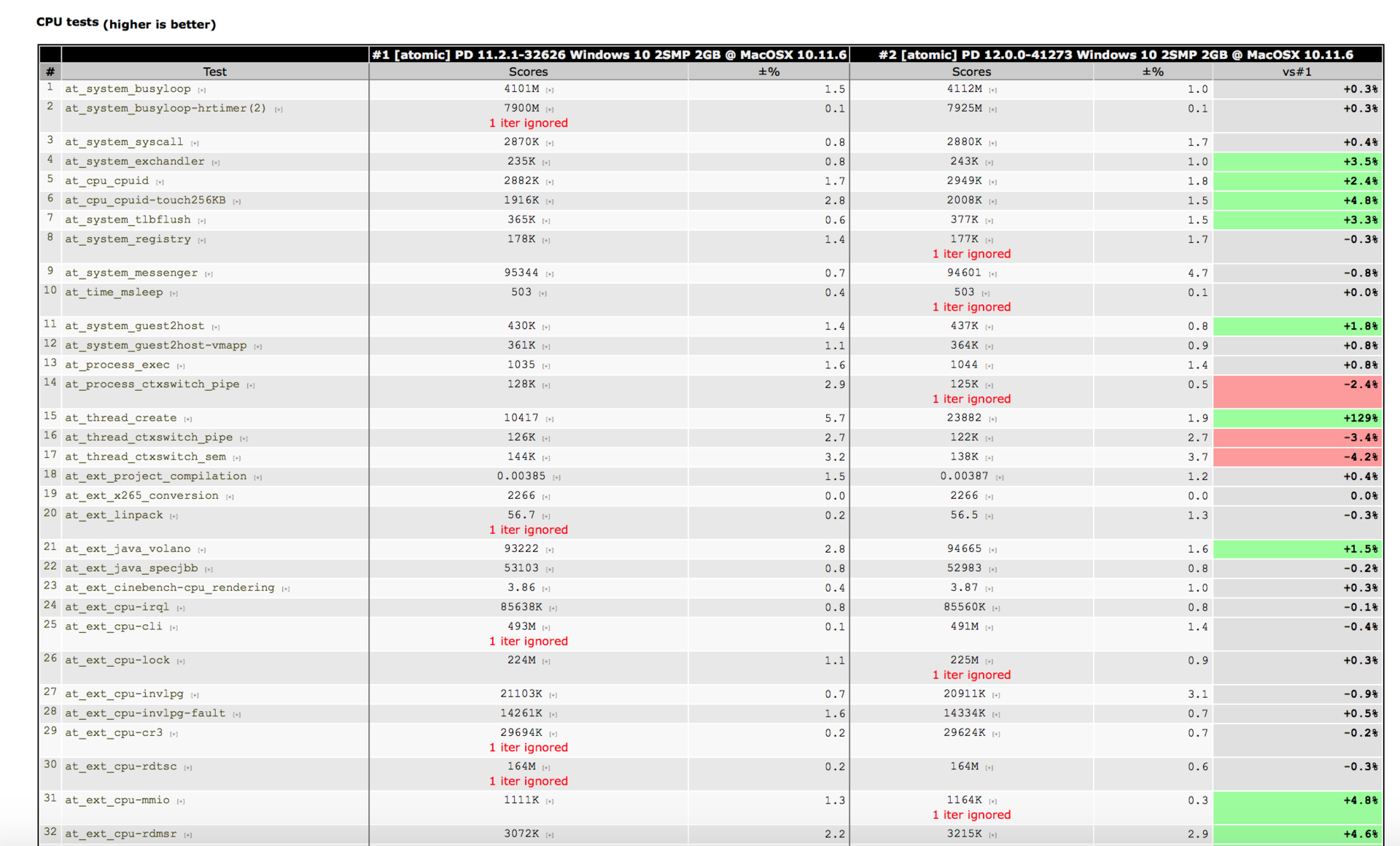

As a result, we developed a set of highly specialized - atomic, atomic - tests. Each of them is designed to measure the performance of some typical hypervisor mechanics (for example, processing VM exits) or the operating system (flushing TLB caches with TLB-shootdown, switching contexts, and the like). Also, using atomic tests, we evaluate the performance of simple operations that are independent of the implementation of the operating system and virtual machine: mathematical calculations, working with memory and disk in different initial conditions, and so on.

The result of each test is the value of a specific metric. Some metrics are interconnected, others not. We supplement the results of atomic tests with information obtained during the automatic run of standard benchmarks. After analyzing the data obtained during testing, you can get an idea of what happened to which subsystem.

Tasks to be Solved

Of course, testing functionality in itself - works or does not work, and if it works, is it correct? - an important and necessary thing. But this is not limited to the range of tasks that we solve using atomic tests.

First of all , we are looking for reasons for the decline in productivity. To do this, we regularly run atomic tests, and if any of them showed regression, then the standard bisect procedure is used: a fork is taken from two commits and binary search searches for the commit that made the regression.

It also happens that during the next testing, a regression was revealed, a bug was declared on it, but the developers did not immediately get their hands on the cause. And when the hands still reach, the conditions under which the bug is easily reproduced may already be lost. In some tasks, there is a very large backlog, and testing all changes in the reverse order is too long and time-consuming. Sometimes it’s not even clear exactly when the degradation of productivity occurred. In such cases, programmers work with what they have: repeatedly test the corresponding functionality, examine the behavior of the system in different conditions and analyze the debugging system logs to find the reason for the described regression of the test.

Second tasksolved using atomic tests - comparison with competitors. We take two systems and test on the same machine under different hypervisors. If our product is inferior in performance in some area, then the developers begin to think carefully why.

And the third task is to determine the effectiveness of optimizations. Even when everything works quickly, developers regularly have some ideas for improving the architecture and performance. And atomic tests help you quickly find out if an innovation has benefited products. It often turns out that optimizations do not improve performance, and sometimes even worsen.

Features of using atomic tests

Atomic tests can be run anywhere - on the host or guest OS. But, like any performance test, they depend on the configuration of the operating system and hardware. So, if you run tests on a host OS that does not match the guest OS, then the results will be useless.

Like any performance tests, they require certain conditions to produce reproducible results. The host OS is very complex (thanks to the virtualization system) and is not a real-time operating system. It may appear unpredictable delays associated with the equipment, to activate various services. The hypervisor is also a complex software product consisting of numerous components that execute in user space, kernel space, and its own context. The guest OS is subject to the same problems as the host. So the most difficult thing when using atomic tests is to get repeatability of test results.

How to do it?

The most important condition for obtaining stable results is the launch of tests by the robot, always under the same initial conditions:

• a clean boot of the system

• the same virtual machine is restored each time from a backup to a physical disk to the same place

• tests are launched directly according to the same algorithms.

If the measurement conditions have changed, then they will seek some reasonable explanation, and the results are always compared before and after the changes.

Life examples

When you need to perform some processing using components located in user space, the hypervisor needs to switch from its own context to the kernel context, then to the user space context and vice versa. And in order to transfer an interrupt to a guest OS, it is required:

1) first, to remove the virtual processor thread from the idle state using a signal from the host OS

2) switch from its context to the hypervisor’s own context

3) transfer the interrupt to the guest OS

The problem is that the processes Switching from the hypervisor context to the kernel context and vice versa is very slow. And when the virtual processor is idle, when you return control to it, very high costs are obtained.

Once in Parallels Desktop we ran into a bug in MacOS X Yosemite 10.10. The system generated hardware interrupts with such intensity that we only did what they processed, and, as a result, the guest OS hung. The situation was aggravated by the fact that hardware interrupts received in the context of the guest OS had to be immediately transferred to the host OS. And for this you have to switch context twice. And with a large number of such interruptions, the guest OS slowed down or hung. This is where our atomic tests came in handy.

Despite the fact that the problem was fixed in 10.10.2, in order to prevent this from happening anymore, and to speed up the guest OS as a whole, we gradually optimized the context switching procedure using a special atomic test, regularly measuring its current speed. For example, instead of executing entirely in our own context, we moved the execution to a context closer to the context of kernel space. As a result, the number of operations during switching decreased and the speed of processing requests to the components of the user space and the transfer of control to the guest operating system from a state of rest increased. In the end, everyone is happy!

We will be happy to answer questions in the comments to the article. Also ask if something seemed obscure.