Introduction to DPDK: Architecture and Operation


Over the past few years, the performance of the Linux network stack has become a particularly pressing topic. This is understandable: the volume of data transmitted over networks, and the load that comes with it, is growing by the hour, not by the day.

Even the widespread use of 10GE network cards does not solve the problem: the Linux kernel itself contains many bottlenecks that get in the way of fast packet processing.

Numerous attempts are being made to get around these bottlenecks. The techniques used are known as kernel bypass (a brief overview can be found, for example, here). They make it possible to exclude the Linux network stack from packet processing entirely and let an application running in user space talk to the network device directly. In this article we would like to discuss one such solution: DPDK (Data Plane Development Kit), developed by Intel.

There are many publications about DPDK, including in Russian (see, for example: 1, 2, and 3). Some of them are quite good, but they do not answer the most important question: how exactly are packets processed with DPDK? What steps make up a packet's path from the network device to the user?

These are the questions we will try to answer. Finding the answers took a great deal of work: the official documentation did not contain everything we needed, so we had to go through a mass of additional material and dig into the source code. But first things first. Before we talk about DPDK and the problems it helps to solve, we need to recall how packet processing works in Linux. This is where we will begin.

Packet Processing in Linux: Main Stages

When a packet arrives at the network card, it is copied from the card into main memory using the DMA (Direct Memory Access) mechanism.

UPD. Clarification: on newer hardware, the packet is first copied to the Last Level Cache of the socket from which the DMA was initiated, and from there to memory. Thanks to izard.

After that, the system has to be informed that a new packet has appeared, and the data has to be passed on to a specially allocated buffer (Linux allocates such a buffer for each packet). Linux uses the interrupt mechanism for this purpose: an interrupt is generated whenever a new packet arrives. The packet then still needs to be transferred to user space.

One bottleneck is already obvious: the more packets there are to process, the more interrupts are generated and the more resources are spent on handling them, which degrades the performance of the system as a whole.

UPD. Modern network cards support interrupt moderation (also known as interrupt coalescing), which reduces the number of interrupts and takes load off the processor. Thanks to T0R for the clarification.

As mentioned above, the packet data is stored in a specially allocated buffer, or, more precisely, in the sk_buff structure. This structure is allocated for each packet and freed when the packet reaches user space. This operation consumes a lot of bus cycles (that is, cycles spent transferring data from the CPU to main memory).

The sk_buff structure has another problem: the Linux network stack was designed from the start to be compatible with as many protocols as possible. The metadata for all these protocols is included in sk_buff, even though much of it may not be needed to process a particular packet. This excessive complexity slows processing down.

Another factor that hurts performance is context switching. When an application running in user space needs to receive or send a packet, it makes a system call: execution switches to kernel mode and then back to user mode. This carries a tangible cost in system resources.

To solve some of the problems described above, NAPI (New API), which combines interrupts with polling, was added to the Linux kernel in version 2.6. Let us briefly review how it works.

At first the network card operates in interrupt mode. As soon as a packet arrives at the network interface, the card registers itself in the poll list and disables interrupts. The system periodically checks this list for new devices and picks up their packets for further processing. Once the packets have been processed, the card is removed from the list and interrupts are enabled again.
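The interplay of interrupts and polling can be illustrated with a toy model. This is only a sketch in Python, not kernel code: the `Nic` class, the `poll_list` queue, and the `budget` parameter are hypothetical names chosen for the illustration.

```python
from collections import deque

class Nic:
    """Toy network card: a receive queue plus an interrupt-enable flag."""
    def __init__(self, name):
        self.name = name
        self.rx_queue = deque()
        self.irq_enabled = True
        self.irq_count = 0

poll_list = deque()  # devices that have asked to be polled

def packet_arrives(nic, packet):
    """Queue the packet; raise an interrupt only if interrupts are enabled."""
    nic.rx_queue.append(packet)
    if nic.irq_enabled:
        nic.irq_count += 1
        nic.irq_enabled = False  # the handler switches interrupts off ...
        poll_list.append(nic)    # ... and registers the card for polling

def poll_once(budget=4):
    """Process up to `budget` packets from every device in the poll list."""
    processed = []
    for _device in range(len(poll_list)):
        nic = poll_list.popleft()
        for _slot in range(budget):
            if not nic.rx_queue:
                break
            processed.append(nic.rx_queue.popleft())
        if nic.rx_queue:
            poll_list.append(nic)   # work left: stay in the poll list
        else:
            nic.irq_enabled = True  # queue drained: back to interrupt mode
    return processed

nic = Nic("eth0")
for i in range(6):
    packet_arrives(nic, f"pkt{i}")
first = poll_once()
second = poll_once()
```

In this model six packets cost a single interrupt: the remaining five are picked up by polling, and interrupts are switched back on only after the queue has been drained.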

This has been a very cursory description of packet processing. A more detailed one can be found, for example, in a series of articles on the Private Internet Access company blog. However, even a brief review is enough to see the problems that slow packet processing down. In the next section we describe how DPDK solves them.

DPDK: how it works

In outline

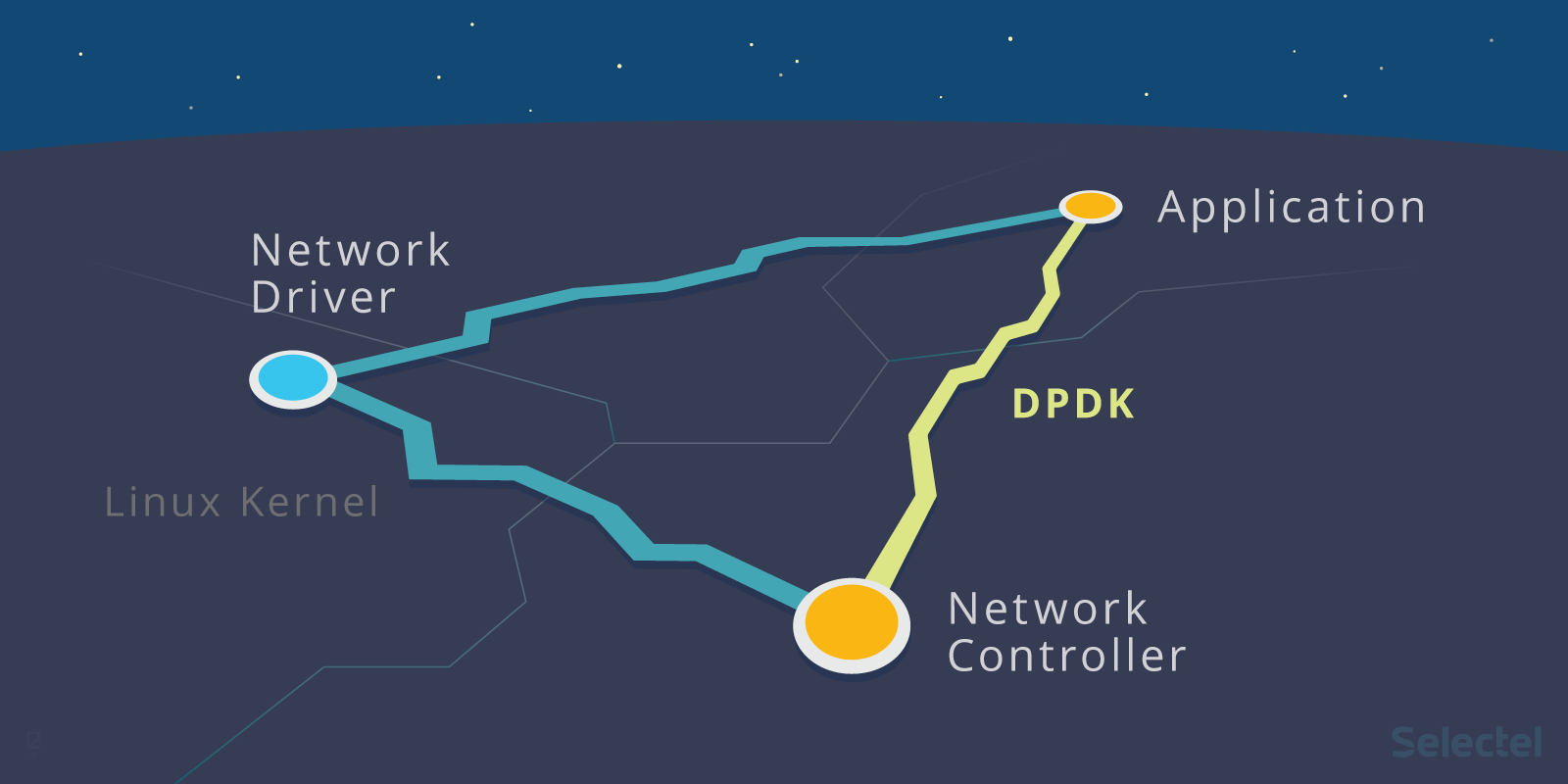

Consider the following illustration:

The left side shows packet processing in the “traditional” way, and the right side shows it with DPDK. As you can see, in the second case the kernel is not involved at all: interaction with the network card is carried out through specialized drivers and libraries.

If you have already read about DPDK or have at least some experience with it, then you know that the network card ports that will receive traffic must be taken out of Linux control completely. This is done with the dpdk_nic_bind (or dpdk-devbind) command, or, in earlier versions, ./dpdk_nic_bind.py.

How are ports handed over to DPDK? Every driver in Linux has so-called bind and unbind files. The network card driver has them too:

ls /sys/bus/pci/drivers/ixgbe

bind module new_id remove_id uevent unbind

To detach a device from its driver, you write the device's bus number (its PCI address) to the driver's unbind file. Likewise, to hand the device over to another driver, you write the bus number to that driver's bind file. You can read more about this in this article.
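The two writes can be sketched as follows. This is an illustration only: the `rebind` helper and the PCI address `0000:01:00.0` are hypothetical, and the example runs against a throwaway directory rather than the real /sys tree. The sketch also assumes the new driver already accepts the device; in practice the device ID often has to be registered with the driver first, which is skipped here.

```python
import os
import tempfile

def rebind(pci_addr, old_driver, new_driver,
           drivers_dir="/sys/bus/pci/drivers"):
    """Write the PCI address to old_driver/unbind, then to new_driver/bind."""
    for driver, filename in ((old_driver, "unbind"), (new_driver, "bind")):
        path = os.path.join(drivers_dir, driver, filename)
        with open(path, "w") as f:
            f.write(pci_addr)

# Dry run: build a fake driver tree so that nothing on the host is touched.
fake_sysfs = tempfile.mkdtemp()
for driver in ("ixgbe", "vfio-pci"):
    os.makedirs(os.path.join(fake_sysfs, driver))

rebind("0000:01:00.0", "ixgbe", "vfio-pci", drivers_dir=fake_sysfs)
```

On a real system the same writes require root privileges and take effect immediately, so the dry-run directory is a deliberate safety choice for the example.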

The DPDK installation instructions state that the ports must be handed over to the vfio_pci, igb_uio, or uio_pci_generic driver.

All of these drivers make it possible to interact with devices from user space (we will not analyze their features in detail in this article; interested readers can refer to the articles on kernel.org: 1 and 2). Of course, each of them also includes a kernel module, but its role boils down to initializing devices and providing a PCI interface.

All further work of organizing communication between the application and the network card is handled by the PMD (poll mode driver) included in DPDK. DPDK has PMDs for all supported network cards, as well as for virtual devices (thanks to T0R for the clarification).

To work with DPDK, you must also configure large memory pages (hugepages). This is necessary to reduce the load on the TLB.
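A quick calculation shows why page size matters for the TLB. The sketch below assumes a regular page size of 4 KiB (the usual default on x86) and compares it with 2 MiB hugepages; the `pages_needed` helper is a hypothetical name.

```python
def pages_needed(region_bytes, page_bytes):
    """Number of pages (and so address translations) that cover a region."""
    return -(-region_bytes // page_bytes)  # ceiling division

KIB, MIB, GIB = 1024, 1024 ** 2, 1024 ** 3

small_pages = pages_needed(1 * GIB, 4 * KIB)  # regular pages
huge_pages = pages_needed(1 * GIB, 2 * MIB)   # hugepages

print(small_pages, huge_pages)  # prints "262144 512"
```

Mapping one gigabyte of packet buffers takes 512 times fewer translations with 2 MiB pages, so far more of the working set fits into the limited number of TLB entries.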

We will discuss all the details below; for now, here are the main stages of packet processing with DPDK:

- Received packets land in a ring buffer (we will examine its structure below). The application periodically checks this buffer for new packets.

- If the buffer contains new packet descriptors, the application follows the pointers in those descriptors to the DPDK packet buffers, which reside in a specially allocated memory pool.

- If there are no packets in the ring buffer, the application polls the network devices controlled by DPDK and then checks the ring again.
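The stages listed above can be condensed into a toy polling loop. This is a sketch in plain Python, not the DPDK API: `packet_buffers` stands in for the memory pool, the descriptors are simply slot numbers, and `rx_burst` is a hypothetical helper that plays roughly the role of a receive call such as rte_eth_rx_burst.

```python
from collections import deque

packet_buffers = [None] * 8  # stand-in for buffers in the memory pool
rx_ring = deque()            # ring of descriptors (here: slot numbers)

def device_receive(slot, data):
    """Device side: store the data in a buffer and queue its descriptor."""
    packet_buffers[slot] = data
    rx_ring.append(slot)

def rx_burst(max_pkts):
    """Take up to max_pkts descriptors and resolve them to packet buffers."""
    burst = []
    while rx_ring and len(burst) < max_pkts:
        slot = rx_ring.popleft()
        burst.append(packet_buffers[slot])
    return burst

def poll(iterations, max_pkts=32):
    """Busy-poll the ring; an empty burst just means 'nothing yet'."""
    handled = []
    for _ in range(iterations):
        handled.extend(rx_burst(max_pkts))
    return handled

device_receive(0, b"first")
device_receive(1, b"second")
received = poll(iterations=3)
```

Note that the loop never sleeps and never waits for an interrupt: it keeps asking for packets, which is exactly what "poll mode" means.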

Let us look at the internals of DPDK in more detail.

EAL: abstraction of the environment

EAL (Environment Abstraction Layer) is the central concept of DPDK.

EAL is a set of software tools that allow DPDK to run in a specific hardware environment and under a specific operating system. In the official DPDK repository, the libraries and drivers that make up the EAL are stored in the rte_eal directory.

This directory stores drivers and libraries for Linux and BSD systems. There are also sets of header files for various processor architectures: ARM, x86, TILE64, PPC64.

The components of the EAL are what we refer to when building DPDK from source:

make config T=x86_64-native-linuxapp-gcc

As you might guess, this command specifies that DPDK should be built for the x86_64 architecture and Linux.

It is the EAL that “binds” DPDK to applications. All applications that use DPDK (see examples here) must include the header files that ship with the EAL.

The most commonly used of them are:

- rte_lcore.h - processor core and socket management functions;

- rte_memory.h - memory management functions;

- rte_pci.h - functions that provide an interface for access to the PCI address space;

- rte_debug.h - trace and debug functions (logging, dump_stack and others);

- rte_interrupts.h - interrupt handling functions.

You can read more about the structure and functions of the EAL in the documentation.

Queue management: rte_ring library

As we said above, a packet received by the network card lands in a receive queue, which is a ring buffer. In DPDK, newly arrived packets are likewise placed in a queue implemented with the rte_ring library. The description of this library below is based on the developer's guide and on the comments in the source code.

rte_ring was developed on the basis of the FreeBSD ring buffer implementation. If you look at the source, note this comment: Derived from FreeBSD's bufring.c.

The queue is a lock-free ring buffer organized on the FIFO (First In, First Out) principle. The ring buffer is a table of pointers to objects stored in memory. Positions in the table are tracked by four pointers: prod_tail, prod_head, cons_tail, and cons_head.

Prod and cons are short for producer and consumer. A producer is a process that is currently writing data to the buffer, and a consumer is a process that is currently taking data out of it.

The producer and the consumer each have their own head and tail. The head marks the position that has been reserved for the operation in progress, and the tail marks the position up to which the operation has been completed.

The essence of the enqueue and dequeue operations is as follows: when a new object is added to the queue, the operation must end with the ring->prod_tail pointer pointing to the same location as ring->prod_head.

Here we give only a brief description; the scenarios of ring buffer operation are covered in more detail in the developer's guide on the DPDK website.
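The single-producer, single-consumer case can be sketched in a few lines. This is a simplified model rather than the rte_ring implementation: the `Ring` class is hypothetical, the indices are plain Python integers that are never wrapped, and the reservation step that would be a CAS with several producers is an ordinary assignment here.

```python
class Ring:
    """Fixed-size FIFO of object references with rte_ring-style index names."""

    def __init__(self, size):
        assert size > 0 and size & (size - 1) == 0, "size must be a power of two"
        self.slots = [None] * size
        self.mask = size - 1
        self.prod_head = self.prod_tail = 0
        self.cons_head = self.cons_tail = 0

    def enqueue(self, obj):
        if self.prod_head - self.cons_tail == len(self.slots):
            return False                      # ring is full
        slot = self.prod_head                 # 1. reserve a slot
        self.prod_head = slot + 1             #    (a CAS with many producers)
        self.slots[slot & self.mask] = obj    # 2. store the object reference
        self.prod_tail = self.prod_head       # 3. tail catches up with head
        return True

    def dequeue(self):
        if self.cons_head == self.prod_tail:
            return None                       # ring is empty
        slot = self.cons_head                 # 1. reserve the slot to read
        self.cons_head = slot + 1
        obj = self.slots[slot & self.mask]    # 2. fetch the object reference
        self.cons_tail = self.cons_head       # 3. tail catches up with head
        return obj

ring = Ring(4)
accepted = [ring.enqueue(name) for name in ("a", "b", "c", "d", "e")]
drained = [ring.dequeue() for _ in range(5)]
```

The fifth enqueue is rejected because the ring cannot grow, and the objects come back out in the order in which they went in.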

This approach to queuing has several advantages. First, writing to the buffer is faster. Second, bulk enqueue and bulk dequeue operations cause far fewer cache misses, because the pointers are stored in a table.

The disadvantage of the DPDK ring buffer is its fixed size, which cannot be increased on the fly. In addition, a ring consumes much more memory than a linked list: it always occupies space for the maximum possible number of pointers.

Memory Management: rte_mempool library

We already said above that DPDK needs large memory pages (HugePages) to work. The installation instructions recommend creating HugePages of 2 megabytes in size.

These pages are grouped into segments, which are then divided into zones. Objects created by applications or other libraries (for example, queues and packet buffers) are placed in these zones.

These objects also include memory pools created by the rte_mempool library. These are fixed-size object pools that use rte_ring to store free objects and can be identified by a unique name.

Memory alignment techniques may be used to improve performance.

Even though access to free objects is organized through a lock-free ring buffer, the cost in system resources can be very high. Several processor cores may access the ring, and each time a core does so it has to perform a compare-and-set (CAS) operation.

To prevent the ring from becoming a bottleneck, each core gets an additional local cache in the memory pool. A core has full access to its own cache of free objects and does not have to contend with other cores for it. When the cache fills up or runs empty, the memory pool exchanges objects with the ring in bulk. This gives the core fast access to frequently used objects.
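The effect of the per-core cache can be shown with a small model. This is a sketch under simplifying assumptions: the `Mempool` class and its `ring_accesses` counter are hypothetical, objects are represented by integers, and an overfull cache is flushed completely, which is cruder than what rte_mempool does.

```python
from collections import deque

class Mempool:
    """Pool of free objects: a shared ring plus one private cache per core."""

    def __init__(self, n_objects, n_cores, cache_size):
        self.ring = deque(range(n_objects))         # shared free-object store
        self.caches = [[] for _ in range(n_cores)]  # private per-core caches
        self.cache_size = cache_size
        self.ring_accesses = 0                      # trips to the shared ring

    def get(self, core):
        cache = self.caches[core]
        if not cache:                               # cache empty: bulk refill
            self.ring_accesses += 1
            for _ in range(min(self.cache_size, len(self.ring))):
                cache.append(self.ring.popleft())
        return cache.pop() if cache else None

    def put(self, core, obj):
        cache = self.caches[core]
        cache.append(obj)
        if len(cache) > self.cache_size:            # cache overfull: bulk flush
            self.ring_accesses += 1
            self.ring.extend(cache)
            cache.clear()

pool = Mempool(n_objects=64, n_cores=2, cache_size=8)
objs = [pool.get(core=0) for _ in range(8)]
for obj in objs:
    pool.put(core=0, obj=obj)
```

Sixteen get and put calls on core 0 touch the shared ring only once, for the initial bulk refill; every other operation is served from the core's own cache.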

Buffer Management: rte_mbuf Library

In the Linux network stack, as noted above, the sk_buff structure is used to represent all network packets. DPDK uses the rte_mbuf structure, described in the rte_mbuf.h header file, for this purpose.

DPDK's approach to buffer management is much like the one used in FreeBSD: instead of one large sk_buff structure there are many small rte_mbuf buffers. The buffers are created when the application using DPDK starts up and are stored in memory pools (the rte_mempool library is used to allocate the memory).

In addition to the packet data itself, each buffer contains metadata (message type, length, address of the start of the data segment). A buffer also contains a pointer to the next buffer. This is needed for packets that carry a large amount of data: in that case several buffers can be chained together (as in FreeBSD; more about this can be found, for example, here).
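Buffer chaining can be sketched as a simple linked list. The `Mbuf` class below is a toy model that borrows the `next` and `data_len` names from rte_mbuf but none of its layout; the `build_chain` and `chain_stats` helpers and the 2048-byte segment size are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Mbuf:
    """One small buffer: a slice of packet data plus a link to the next one."""
    data: bytes
    next: Optional["Mbuf"] = None

    @property
    def data_len(self):
        return len(self.data)

def build_chain(payload, seg_size):
    """Split payload across buffers of at most seg_size bytes each."""
    head = tail = None
    for offset in range(0, len(payload), seg_size):
        seg = Mbuf(payload[offset:offset + seg_size])
        if head is None:
            head = tail = seg
        else:
            tail.next = seg
            tail = seg
    return head

def chain_stats(head):
    """Walk the chain and return (total packet length, number of segments)."""
    total, segments, seg = 0, 0, head
    while seg is not None:
        total += seg.data_len
        segments += 1
        seg = seg.next
    return total, segments

chain = build_chain(b"x" * 5000, seg_size=2048)
stats = chain_stats(chain)
```

A 5000-byte packet ends up in three chained buffers, two full ones and a shorter final one, and the total length is recovered by walking the `next` pointers.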

Other Libraries: An Overview

In the previous sections we described only the most basic DPDK libraries. There are many others, and it is hardly possible to cover them all in a single article, so we will confine ourselves to a brief overview.

The LPM library implements the Longest Prefix Match (LPM) algorithm, which is used to forward packets based on their IPv4 address. Its main functions are adding and removing IP addresses and looking up an address using the LPM algorithm.

For IPv6 addresses, similar functionality is implemented based on the LPM6 library .
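The idea of longest prefix match can be shown with a deliberately naive table. This sketch uses Python's standard ipaddress module and a linear scan over all routes; it illustrates the lookup rule only and says nothing about the optimized data structures the DPDK libraries actually use. The `LpmTable` class and the next-hop numbers are hypothetical.

```python
import ipaddress

class LpmTable:
    """Naive longest-prefix-match table: prefix -> next hop."""

    def __init__(self):
        self.routes = {}

    def add(self, prefix, next_hop):
        self.routes[ipaddress.ip_network(prefix)] = next_hop

    def delete(self, prefix):
        self.routes.pop(ipaddress.ip_network(prefix), None)

    def lookup(self, address):
        """Return the next hop of the most specific matching prefix."""
        addr = ipaddress.ip_address(address)
        best = None
        for net, hop in self.routes.items():
            if addr.version == net.version and addr in net:
                if best is None or net.prefixlen > best[0].prefixlen:
                    best = (net, hop)
        return best[1] if best else None

table = LpmTable()
table.add("10.0.0.0/8", 1)
table.add("10.1.0.0/16", 2)
table.add("2001:db8::/32", 3)

hop_specific = table.lookup("10.1.2.3")    # matches both IPv4 prefixes
hop_general = table.lookup("10.9.9.9")     # matches only 10.0.0.0/8
hop_missing = table.lookup("192.168.0.1")  # matches nothing
```

The address 10.1.2.3 falls inside both IPv4 prefixes, and the longer /16 prefix wins; the same rule applies to the IPv6 entry.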

Other libraries implement similar functionality using hash functions. With rte_hash you can search a large set of records by a unique key. This library can be used, for example, to classify and distribute packets.

The rte_timer library provides asynchronous execution of functions. The timer can be run once or periodically.
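The one-shot and periodic modes can be modelled with a small timer manager that the main loop calls on every iteration, in the spirit of rte_timer_manage. This is a sketch with hypothetical names (`TimerManager`, `add`, `manage`), and time is an integer tick counter passed in by the caller.

```python
class TimerManager:
    """Toy timer list driven by explicit calls from the main loop."""

    def __init__(self):
        self.timers = []  # entries: (expiry_tick, period_or_None, callback)

    def add(self, now, delay, callback, periodic=False):
        period = delay if periodic else None
        self.timers.append((now + delay, period, callback))

    def manage(self, now):
        """Run every expired callback and re-arm the periodic ones."""
        still_armed = []
        for expiry, period, callback in self.timers:
            if expiry > now:
                still_armed.append((expiry, period, callback))
                continue
            callback(now)
            if period is not None:
                still_armed.append((expiry + period, period, callback))
        self.timers = still_armed

log = []
manager = TimerManager()
manager.add(0, 3, lambda now: log.append(("once", now)))
manager.add(0, 2, lambda now: log.append(("tick", now)), periodic=True)

for tick in range(1, 7):  # stand-in for the application's main loop
    manager.manage(tick)
```

The one-shot timer fires a single time at tick 3, while the periodic one fires at ticks 2, 4, and 6 and stays armed.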

Conclusion

In this article we tried to describe the internal structure and operating principles of DPDK. We tried, but did not cover everything: the topic is so complex and extensive that one article is clearly not enough. So stay tuned: in the next article we will talk in more detail about the practical aspects of using DPDK.

In the comments we will be happy to answer all your questions. And if any of you have experience using DPDK, then we will be grateful for any comments and additions.

For anyone who wants to know more, here are some useful links on the topic:

- http://dpdk.org/doc/guides/prog_guide/ - a detailed (although confusing in some places) description of all DPDK libraries;

- https://www.net.in.tum.de/fileadmin/TUM/NET/NET-2014-08-1/NET-2014-08-1_15.pdf - a brief overview of DPDK features and a comparison with similar frameworks (netmap and PF_RING);

- http://www.slideshare.net/garyachy/dpdk-44585840 - an introductory presentation on DPDK for beginners;

- http://www.it-sobytie.ru/system/attachments/files/000/001/102/original/LinuxPiter-DPDK-2015.pdf - a presentation explaining the internal structure of DPDK.
