Etherblade.net: an open-source project to build an Ethernet traffic encapsulator on an FPGA (part one)

A note up front: this article is meant not only for passive reading but also as an invitation to join the development. System programmers, hardware developers, network engineers and DevOps engineers are all welcome.

Since the project sits at the junction of network technologies and hardware design, let's split the conversation into three parts, so that each can be tailored to a particular audience.

The first part is introductory. Here we will talk about the hardware Ethernet traffic encapsulator built on an FPGA: its main functions, its architectural features and its advantages over software solutions.

The second part, let's call it the "network" part, will be of more interest to hardware developers who want to get better acquainted with network technologies. It is devoted to the role that Etherblade.net can play in telecom operators' networks. We will also talk about the concept of SDN (software-defined networking) and how open network hardware can complement the solutions of large vendors such as Cisco and Juniper, and even compete with them.

The third part is the “hardware” part, which is more likely to interest network engineers who want to get into hardware design and start developing network devices on their own. In it we will look in detail at the FPGA workflow, “the union of software and hardware”, FPGA boards, development environments, and everything else you need to know to get involved in the Etherblade.net project.

So let's go!

Ethernet encapsulation

The goal of the Etherblade.net project is to design and build a device that can emulate, in hardware, an L2 Ethernet channel over an L3 network. A simple use case is connecting scattered servers and workstations to each other just as if there were an ordinary physical Ethernet cable between them.

On the Internet you can find different terms for this technology. The most common ones are pseudowire, EVPN, L2VPN, E-Line / E-Tree / E-LAN, and so on. On top of that, there is a huge number of derivative terms that differ according to the type of transport network the virtual Ethernet channel runs over.

For example:

- Ethernet emulation over an IP network is provided by EoIP, VxLAN and OTV;

- Ethernet emulation over an MPLS network is provided by VPLS and EoMPLS;

- Ethernet emulation over Ethernet is the job of MetroEthernet, PBB (802.1ah) and similar technologies.

Coming up with terms is the marketers' job, but if hardware designers invented a separate device for every term or abbreviation, they would go crazy. So the goal of hardware developers, and our goal, is to build a universal device: an encapsulator that can wrap Ethernet frames in any transport network protocol, be it IP/IPv6, MPLS, Ethernet, and so on.

Such an encapsulator has already been implemented and continues to be developed in a project called “Etherblade-Version1 - encapsulator core”.

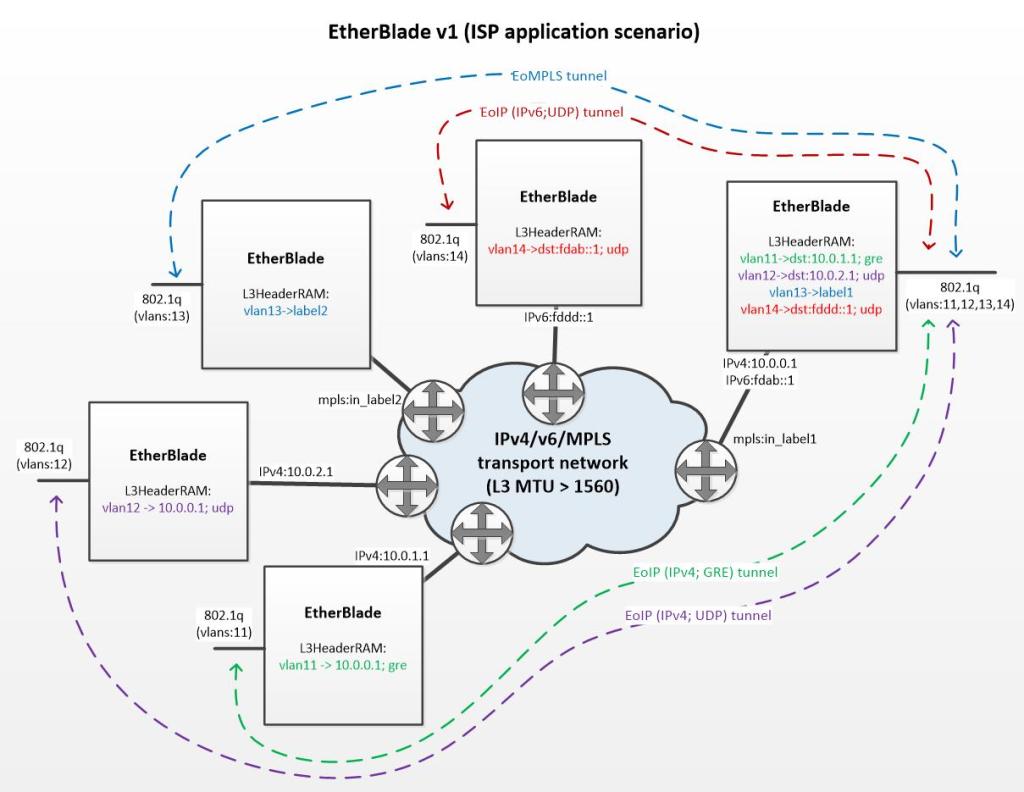

For a better understanding, I propose to consider a picture explaining this principle of encapsulation:

We see that the encapsulators are located along the perimeter of the carrier’s transport network. Each encapsulator has two interfaces (L2 is a trunk port “looking” towards clients, and L3 interface, which “looks” toward transport network) .

Let's take a closer look at the rightmost encapsulator. Clients are connected to it, where the traffic of each client “walks” in a separate vlan-e. A device must be able to create virtual channels for individual clients or, in scientific terms, be able to encapsulate ethernet traffic for different vlans with different L3 headers. The figure shows how one encapsulator emulates four virtual channels for four clients:

- vlan-11 (green) - Ethernet over IP (IPv4 + GRE);

- vlan-12 (purple) - Ethernet over IP (IPv4 + UDP);

- vlan-13 (blue) - Ethernet over MPLS;

- vlan-14 (red) - Ethernet over IP (IPv6 + UDP).
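The per-VLAN mapping in this list can be sketched in a few lines of Python. This is only a software illustration of the idea, not the project's hardware design: the `HEADER_TABLE` name, the `vlan_id` and `encapsulate` helpers and the all-zero placeholder headers are assumptions made for the example. The header lengths are the standard minimum sizes (IPv4 20 bytes, IPv6 40, UDP 8, basic GRE 4, one MPLS label 4).

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks an 802.1Q VLAN tag

# Hypothetical "header memory": one pre-built outer header per VLAN ID.
# The zero bytes are placeholders with realistic lengths, not valid headers.
HEADER_TABLE = {
    11: b"\x00" * (20 + 4),  # IPv4 + basic GRE
    12: b"\x00" * (20 + 8),  # IPv4 + UDP
    13: b"\x00" * 4,         # one MPLS label entry
    14: b"\x00" * (40 + 8),  # IPv6 + UDP
}


def vlan_id(frame: bytes) -> int:
    """Return the VLAN ID of an 802.1Q-tagged Ethernet frame."""
    # The tag follows the two 6-byte MAC addresses: TPID, then TCI.
    tpid, tci = struct.unpack_from("!HH", frame, 12)
    if tpid != TPID_8021Q:
        raise ValueError("frame is not 802.1Q tagged")
    return tci & 0x0FFF  # the low 12 bits of the TCI hold the VLAN ID


def encapsulate(frame: bytes) -> bytes:
    """Prepend the outer header selected by the frame's VLAN ID."""
    return HEADER_TABLE[vlan_id(frame)] + frame
```

A real encapsulator also has to fill in per-packet fields such as the IP length and checksum, which this sketch leaves out.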

So, we have covered the functionality; now let's talk about how the encapsulator can be implemented.

Why FPGA?

An FPGA is, in essence, a chip that replaces a soldering iron and a box of parts (logic elements, memory chips, etc.). In other words, with an FPGA we can create hardware for our own needs and tasks.

But besides "a set of parts and a soldering iron" you also need schematics. The Etherblade.net project is a repository of such schematics, from which you can “solder” the encapsulator inside the FPGA and get a working device. Another important advantage of an FPGA is that its elements can be “re-soldered” for new designs, while the designs themselves, thanks to the repository, do not need to be created and verified “from scratch” to implement new functionality.

And yet, why an FPGA and not a software solution?

Of course, if the question were about developing a system from scratch, it would be easier and faster to take an off-the-shelf computer and write a program for it than to develop a specialized hardware device.

The price of that simplicity and speed of development, however, is worse performance, and this is the main drawback of a software solution. Software is a program whose execution time varies because of branches and loops. Add to this the operating system's constant interrupts of the microprocessor and the traffic passing back and forth through the DMA subsystem.

In the hardware implementation, our encapsulator is essentially a straight-through “store-and-forward” buffer with additional memory that holds the headers. Thanks to its simplicity and linearity, the hardware solution processes traffic at the full line rate of the Ethernet channel, with minimal delay and stable jitter. As a bonus, add the lower power consumption and lower cost of FPGA solutions compared with microprocessor systems.
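To put a number on "minimal delay": a store-and-forward buffer has to receive a whole frame before it can send it on, so the delay it adds is at least the time the frame takes to arrive at line rate. The arithmetic below is a rough lower bound under that assumption, not a measurement of the project's hardware. The function name and the 1518-byte frame size (a maximum-size untagged Ethernet frame including its checksum) are chosen for illustration.

```python
def store_and_forward_delay_us(frame_bytes: int, line_rate_bps: float) -> float:
    """Time needed to receive a whole frame into the buffer, in microseconds."""
    return frame_bytes * 8 / line_rate_bps * 1e6


for rate_name, rate in (("1 Gbit/s", 1e9), ("10 Gbit/s", 1e10)):
    delay = store_and_forward_delay_us(1518, rate)
    print(f"{rate_name}: {delay:.2f} us")
# prints:
# 1 Gbit/s: 12.14 us
# 10 Gbit/s: 1.21 us
```

Because this figure depends only on frame length and line rate, it is the same for every frame of a given size, which is where the stable jitter comes from.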

Before moving on to the next topic, let me share a link to a video demonstrating the encapsulator in action. The video has English subtitles, and YouTube can auto-translate them into Russian if needed.

To conclude the article, I would like to mention a couple of blocks that are also being developed as part of the Etherblade.net project.

Development of the receiver "Etherblade-Version2 - decapsulator core"

You may have noticed that the previous network diagram (the one showing the encapsulators connected to the provider’s network) contains a small note saying that the MTU in the network must be greater than 1560. For large telecoms this is not a problem, since they run over "dark" fiber-optic channels with jumbo-frame support enabled on their equipment. In that case, encapsulated Ethernet packets, with a maximum size of 1500 bytes plus an additional L3 header, can freely “walk” across such networks.
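A rough way to see why the transport MTU has to exceed the usual 1500 bytes is to add up the headers. The sketch below uses standard minimum header sizes. Which inner fields actually travel through the tunnel is not stated here, so the `tagged` flag and the function and constant names are assumptions made for the example.

```python
INNER_ETHERNET_HEADER = 14  # destination MAC + source MAC + EtherType
VLAN_TAG = 4                # 802.1Q tag, if it is carried through the tunnel
MAX_PAYLOAD = 1500          # standard Ethernet payload limit

# Minimum sizes of the outer headers used in the four example channels.
OUTER_HEADERS = {
    "IPv4 + GRE": 20 + 4,
    "IPv4 + UDP": 20 + 8,
    "MPLS (one label)": 4,
    "IPv6 + UDP": 40 + 8,
}


def encapsulated_size(outer: str, tagged: bool = True) -> int:
    """Size in bytes of a full-size inner frame plus the chosen outer header."""
    inner = INNER_ETHERNET_HEADER + (VLAN_TAG if tagged else 0) + MAX_PAYLOAD
    return OUTER_HEADERS[outer] + inner


for name in OUTER_HEADERS:
    print(f"{name}: {encapsulated_size(name)} bytes")
```

Whatever the exact combination, a full-size client frame no longer fits into a 1500-byte transport MTU once an outer header is added.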

But what if we want to use the ordinary Internet as the transport network, with encapsulators connected to, say, home DSL or 4G modems? In that case the receiving side of the encapsulator must be able to reassemble fragmented Ethernet frames.

Neither Cisco nor Juniper currently offers such functionality in their devices, which is understandable, because their equipment is aimed at large telecom operators. The Etherblade.net project was aimed from the start at customers of every size and already has in its arsenal a “hardwired” fragment reassembly block, which makes it possible to emulate “ethernet-anywhere” channels with “telco-grade” quality of service. For detailed documentation and source code, visit https://etherblade.net .
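For readers new to the idea, here is what fragment reassembly means in software terms. This is a generic, IP-style sketch and not the project's hardware design: the `Reassembler` class and its fields are hypothetical, and offsets are counted in plain bytes for simplicity.

```python
from typing import Dict, Optional


class Reassembler:
    """Collects fragments per packet ID and returns the frame once complete."""

    def __init__(self) -> None:
        self._parts: Dict[int, Dict[int, bytes]] = {}  # id -> {offset: data}
        self._total: Dict[int, int] = {}               # id -> full length

    def add(self, packet_id: int, offset: int, more: bool,
            data: bytes) -> Optional[bytes]:
        """Store one fragment; return the whole frame when nothing is missing."""
        parts = self._parts.setdefault(packet_id, {})
        parts[offset] = data
        if not more:
            # The last fragment fixes the total length of the original frame.
            self._total[packet_id] = offset + len(data)
        total = self._total.get(packet_id)
        if total is None:
            return None  # last fragment not seen yet
        # Walk the fragments in offset order and check that they are contiguous.
        frame = bytearray()
        for off in sorted(parts):
            if off != len(frame):
                return None  # gap or overlap: not complete yet
            frame += parts[off]
        if len(frame) != total:
            return None
        del self._parts[packet_id], self._total[packet_id]
        return bytes(frame)


r = Reassembler()
assert r.add(1, 7, False, b"world") is None          # last fragment arrives first
assert r.add(1, 0, True, b"hello, ") == b"hello, world"
```

Fragments may arrive in any order, so the receiver has to buffer them and track what is still missing, which is why doing this at line rate in hardware is a separate piece of work.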

10 Gigabit

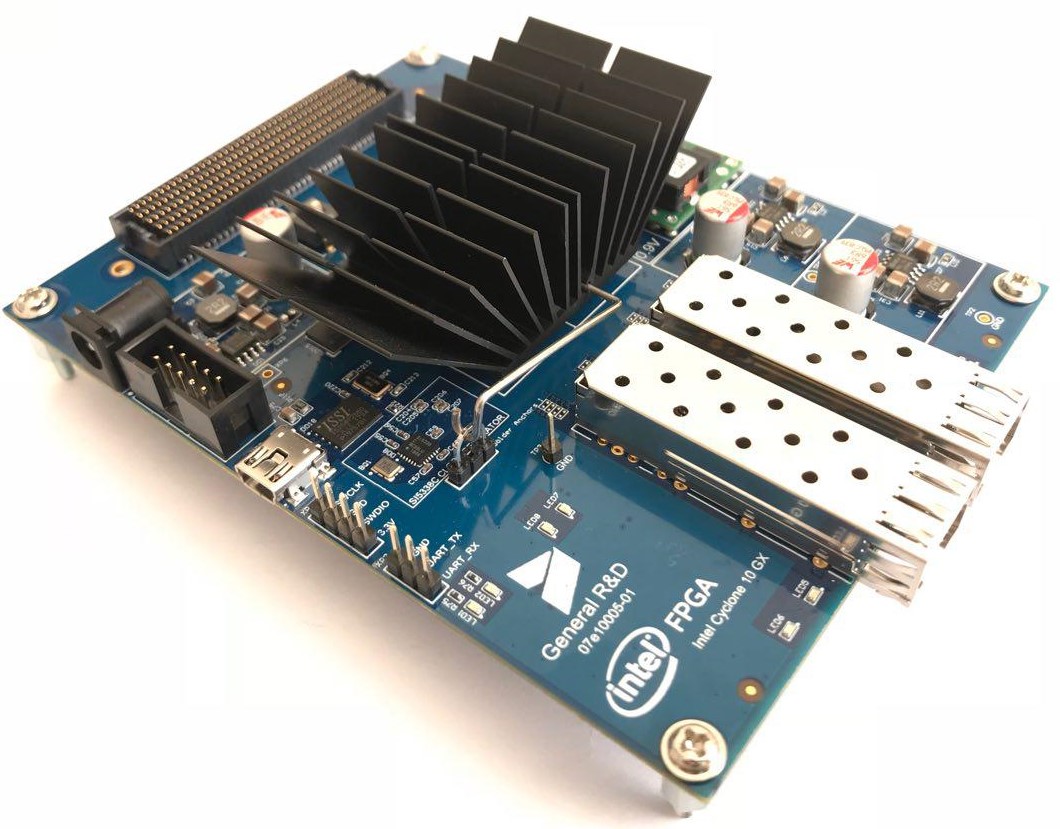

Work is also actively underway on a ten-gigabit version of the encapsulator and on porting it to hardware, in particular to the board shown by the Intel R&D team in St. Petersburg at the beginning of their Intel Cyclone 10GX article.

In addition to the functionality described in this article, ten-gigabit encapsulators can be used in carrier networks to redirect “interesting” traffic to DDoS scrubbing centers, "Yarovaya law" servers, and so on. More on this in the next article, which covers the concept of SDN (software-defined networking) and the use of Etherblade.net in large networks. Stay tuned.