Automate Docker Container Deployment on Custom Infrastructure

Containerizing applications is more than a passing trend today. Objectively, this approach lets us optimize server-side development in many ways by unifying the supported infrastructures (dev, test, staging, production), which ultimately leads to a significant reduction in costs throughout the life cycle of a server application.

While most of Docker's advertised benefits are real, those who work with containers in practice may be slightly disappointed. Docker is not a panacea, only one of the “ingredients” in the automated deployment recipe, so developers still have to master additional technologies, write additional code, and so on.

Over the past few months, in parallel with commercial projects, we have been developing our own recipe for automating the configuration and deployment of containers on various infrastructures. The result almost completely satisfies our current automated deployment needs.

Tool selection

When the need to deploy Docker applications automatically first arises, experience (or a search engine) usually suggests adapting Docker Compose for the task. Although it was originally conceived as a tool for quickly launching containers on a test infrastructure, Docker Compose can also be used in production. Another option we considered was Ansible, which includes modules for working with Docker containers and images.

But neither solution suited us as developers. The main reason lies in how configurations are described: with YAML files. To explain, let me ask a simple question: can any of you program in YAML? I would be surprised if anyone said yes. This is the main drawback of every tool that uses some kind of markup for configuration (from INI, XML, JSON and YAML to more exotic formats like HCL): the logic cannot be extended by standard means. Other shortcomings include:

- no autocompletion
- no way to jump to the source code of the function being used
- no hints about the type and number of arguments
- none of the other conveniences of working in an IDE

Next, we looked at Fabric and Capistrano. They are configured in ordinary programming languages (Python and Ruby, respectively). That means custom logic can be written directly in the configuration files, with access to external modules, which is exactly what we were after. We did not deliberate long between Fabric and Capistrano and settled on the former almost immediately. The main reason was that we had expertise in Python and almost none in Ruby. The rather complicated structure of a Capistrano project also put us off.
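To make the point about logic in configuration concrete, here is a minimal sketch in plain Python of something awkward to express in pure YAML. It derives the host lists for several infrastructures from one table, using a comprehension and a helper function. The host names, the `DEPLOY_USER` constant and the `build_roledefs` helper are hypothetical illustrations, not part of Fabric. Only the shape of the result (role name mapped to a list of `user@host` strings) mirrors the `roledefs` used in Fabric configs.

```python
# Hypothetical illustration: configuration expressed as ordinary Python code.
DEPLOY_USER = 'devops'  # assumed SSH user for all hosts

# Assumed layout: infrastructure name -> role -> list of host names
HOSTS = {
    'staging': {'nginx': ['staging.example.com']},
    'production': {'nginx': ['web1.example.com', 'web2.example.com']},
}


def build_roledefs(infrastructure, user=DEPLOY_USER):
    """Return a roledefs-style dict: role -> ['user@host', ...]."""
    roles = HOSTS[infrastructure]
    return {
        role: ['{0}@{1}'.format(user, host) for host in hosts]
        for role, hosts in roles.items()
    }
```

Because this is ordinary code, an IDE can autocomplete `build_roledefs`, show its signature and jump to its source, which is exactly what the markup-based tools lacked.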

So the choice fell on Fabric. Thanks to its simplicity, convenience, compactness and modularity, it became part of every one of our projects, letting us keep the application and its deployment logic in the same repository.

Our first Fabric-based deployment config could perform the basic actions needed to update the application on the production and test infrastructures. It saved a significant amount of developer time (we do not have a dedicated release manager). At the same time, the settings file turned out to be rather cumbersome and hard to carry over to other projects. So we started thinking about how to make adapting the configs to a new project easier and faster. Ideally, we wanted a universal, compact deployment configuration template for our standard set of infrastructures (test, staging, production). For example, this is roughly what our deployment configs look like now:

fabfile.py

from fabric import colors, api as fab
from fabricio import tasks, docker

##############################################################################
# infrastructures
##############################################################################

@tasks.infrastructure
def STAGING():
    # map the 'nginx' role to the hosts of this infrastructure
    fab.env.update(
        roledefs={
            'nginx': ['devops@staging.example.com'],
        },
    )


@tasks.infrastructure(color=colors.red)
def PRODUCTION():
    fab.env.update(
        roledefs={
            'nginx': ['devops@example.com'],
        },
    )

##############################################################################
# containers
##############################################################################

class NginxContainer(docker.Container):

    image = docker.Image('nginx')

    ports = '80:80'  # publish port 80 of the container on port 80 of the host

##############################################################################
# tasks
##############################################################################

# standard container tasks (deploy, pull, update, revert, ...) for the 'nginx' role
nginx = tasks.DockerTasks(
    container=NginxContainer('nginx'),
    roles=['nginx'],
)

This code describes several standard actions for managing a container that runs the well-known web server. Here is what Fabric shows when asked to list the available commands in the directory containing this file:

fab --list

Available commands:

    PRODUCTION
    STAGING
    nginx           deploy[:force=no,tag=None,migrate=yes,backup=yes] - backup -> pull -> migrate -> update
    nginx.deploy    deploy[:force=no,tag=None,migrate=yes,backup=yes] - backup -> pull -> migrate -> update
    nginx.pull      pull[:tag=None] - pull Docker image from registry
    nginx.revert    revert - revert Docker container to previous version
    nginx.rollback  rollback[:migrate_back=yes] - migrate_back -> revert
    nginx.update    update[:force=no,tag=None] - recreate Docker container
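The `update` and `revert` commands above imply that the previous container is kept so that it can be restored. The sketch below models one way to do that against an in-memory stand-in for a Docker host:

- on update, the running container is renamed to `<name>_backup` and a new one is started;
- on revert, the current container is removed and the backup is renamed back.

The `FakeDockerHost` class and the `_backup` naming convention are assumptions for illustration, not Fabricio's code. A real implementation would issue `docker rename`, `docker rm` and `docker run` on the remote host instead.

```python
class FakeDockerHost:
    """In-memory stand-in for a Docker host: maps container name -> image."""

    def __init__(self):
        self.containers = {}

    def run(self, name, image):
        # real equivalent: docker run --name <name> ... <image>
        if name in self.containers:
            raise RuntimeError('container {0!r} already exists'.format(name))
        self.containers[name] = image

    def rename(self, old, new):
        # real equivalent: docker rename <old> <new>
        self.containers[new] = self.containers.pop(old)

    def remove(self, name):
        # real equivalent: docker rm --force <name>
        del self.containers[name]


def update(host, name, image, force=False):
    """Recreate `name` from `image`, keeping the old container as a backup."""
    current = host.containers.get(name)
    # Simplification: a real check would compare image IDs, not names,
    # because a tag such as 'nginx:latest' can point to a new image after pull.
    if current == image and not force:
        return False
    backup = name + '_backup'
    if current is not None:
        if backup in host.containers:
            host.remove(backup)  # keep only one previous version
        # A real implementation would also stop the old container here,
        # otherwise the new one could not bind the same ports.
        host.rename(name, backup)
    host.run(name, image)
    return True


def revert(host, name):
    """Replace `name` with its backup, i.e. the previous version."""
    backup = name + '_backup'
    if backup not in host.containers:
        raise RuntimeError('no backup container to revert to')
    if name in host.containers:
        host.remove(name)
    host.rename(backup, name)
```

Keeping only one backup makes `revert` a single step back, which matches the "revert Docker container to previous version" description.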

A brief clarification: besides the typical deploy, pull, update and so on, the list also contains the PRODUCTION and STAGING tasks. They perform no actions of their own when run. Instead, they prepare the environment for working with the selected infrastructure, and without them most other tasks will fail. This is the “standard” workaround for the fact that Fabric does not support explicitly choosing the infrastructure to work with. So, to deploy or update the Nginx container on STAGING, for example, run:

fab STAGING nginx
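The role of the PRODUCTION/STAGING tasks can be illustrated without Fabric at all. In this minimal sketch, a hypothetical `infrastructure` decorator records the selected infrastructure in a shared `env` dict, which stands in for `fab.env`. An ordinary task then refuses to run until an infrastructure has been chosen. The names `env`, `infrastructure` and `require_infrastructure` are assumptions. Fabricio's real decorator also accepts options such as `color`, which are not modeled here.

```python
import functools

# Stand-in for Fabric's global fab.env settings dictionary
env = {'infrastructure': None, 'roledefs': {}}


def infrastructure(func):
    """Mark `func` as an infrastructure-selection task (hypothetical sketch)."""
    @functools.wraps(func)
    def select():
        env['infrastructure'] = func.__name__
        func()  # let the task fill in roledefs and other settings
    return select


def require_infrastructure(func):
    """Make a task fail fast when no infrastructure task has run before it."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        if env['infrastructure'] is None:
            raise RuntimeError(
                'select an infrastructure first, e.g. "fab STAGING nginx"')
        return func(*args, **kwargs)
    return wrapper


@infrastructure
def STAGING():
    env['roledefs'] = {'nginx': ['devops@staging.example.com']}


@require_infrastructure
def nginx():
    # A real task would connect to these hosts and deploy the container
    return env['roledefs']['nginx']
```

Calling `STAGING()` and then `nginx()` mirrors `fab STAGING nginx`. Fabric executes the tasks named on the command line in order, so the first one sets up the environment for the second.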

As you might have guessed, almost all the “magic” is hidden behind these lines:

nginx = tasks.DockerTasks(
    container=NginxContainer('nginx'),
    roles=['nginx'],
)
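What a class like DockerTasks does for you can be approximated in a few lines. The sketch below is a hypothetical stand-in, not Fabricio's implementation. A class groups the typical steps as methods, and `deploy` chains them in the order shown by `fab --list`: backup -> pull -> migrate -> update. Fabric passes command-line task arguments (for example `nginx.deploy:force=yes`) as strings, so a small helper converts yes/no values to booleans. The step bodies only record what would be run.

```python
def to_bool(value):
    """Convert Fabric-style string arguments ('yes', 'no', ...) to bool."""
    if isinstance(value, bool):
        return value
    return str(value).strip().lower() in ('yes', 'y', 'true', '1', 'on')


class DockerTasksSketch:
    """Hypothetical grouping of typical container tasks in one class."""

    def __init__(self, container_name):
        self.container_name = container_name
        self.log = []  # records the steps that would be executed

    def backup(self):
        self.log.append('backup')

    def pull(self, tag=None):
        self.log.append('pull:{0}'.format(tag or 'latest'))

    def migrate(self, tag=None):
        self.log.append('migrate:{0}'.format(tag or 'latest'))

    def update(self, force='no', tag=None):
        suffix = ':force' if to_bool(force) else ''
        self.log.append('update:{0}{1}'.format(tag or 'latest', suffix))

    def deploy(self, force='no', tag=None, migrate='yes', backup='yes'):
        """backup -> pull -> migrate -> update, mirroring the fab --list text."""
        if to_bool(backup):
            self.backup()
        self.pull(tag=tag)
        if to_bool(migrate):
            self.migrate(tag=tag)
        self.update(force=force, tag=tag)
        return self.log
```

Grouping the steps in one class means a new project only has to instantiate it with its own container, as in the `nginx = tasks.DockerTasks(...)` line above.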

Ciao, Fabricio!

So let me introduce Fabricio, a module that extends Fabric with functionality for working with Docker containers. Developing Fabricio let us stop worrying about the complexity of implementing automated deployment and focus entirely on solving business problems.

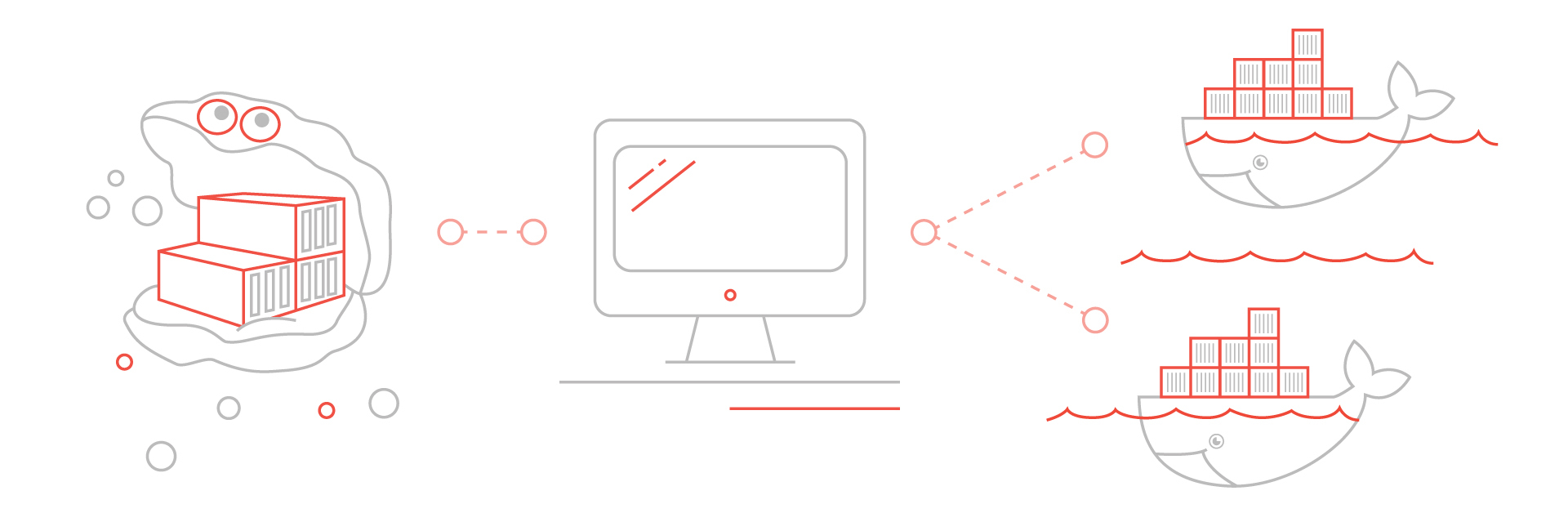

We often find that Internet access is restricted on the customer's production infrastructure. In that case, we handle deployment through a private Docker Registry running on the administrator's local network (or simply on their own workstation). To do this in the example above, you only need to change the task type, replacing DockerTasks with PullDockerTasks. The list of available commands then looks like this:

fab --list

Available commands:

    PRODUCTION
    STAGING
    nginx           deploy[:force=no,tag=None,migrate=yes,backup=yes] - prepare -> push -> backup -> pull -> migrate -> update
    nginx.deploy    deploy[:force=no,tag=None,migrate=yes,backup=yes] - prepare -> push -> backup -> pull -> migrate -> update
    nginx.prepare   prepare[:tag=None] - prepare Docker image
    nginx.pull      pull[:tag=None] - pull Docker image from registry
    nginx.push      push[:tag=None] - push Docker image to registry
    nginx.revert    revert - revert Docker container to previous version
    nginx.rollback  rollback[:migrate_back=yes] - migrate_back -> revert
    nginx.update    update[:force=no,tag=None] - recreate Docker container

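The new commands are prepare and push. Here, prepare downloads the image from the main Registry, and push uploads it to the private one. A private Registry can be started on the administrator's machine, for example, like this: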

docker run --name registry --publish 5000:5000 --detach --restart always registry:2
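With a private Registry, the same image is known under two names:

- its original one, for example `nginx`;
- one prefixed with the Registry address, for example `localhost:5000/nginx:latest`.

Here is a minimal sketch of that renaming. It assumes the Registry started above listens on `localhost:5000` and that the source image comes from Docker Hub. The helper names are hypothetical, and digest references (`image@sha256:...`) are deliberately not handled.

```python
def split_image(image):
    """Split 'name[:tag]' into (name, tag); a registry port is not a tag."""
    name, sep, tag = image.rpartition(':')
    if not sep or '/' in tag:
        # no colon at all, or the last colon belongs to 'host:port/...'
        return image, 'latest'
    return name, tag


def registry_image(image, registry='localhost:5000'):
    """Return the name under which a Docker Hub `image` is stored in `registry`."""
    name, tag = split_image(image)
    return '{0}/{1}:{2}'.format(registry, name, tag)
```

With the Docker CLI, the renamed image would then be produced and uploaded with `docker tag nginx:latest localhost:5000/nginx:latest` followed by `docker push localhost:5000/nginx:latest`.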

The variant that builds the image also differs from the previous two only in the task type: here it is BuildDockerTasks. Its list of commands is the same as in the previous example. The only difference is that the prepare command builds the image from local sources instead of downloading it from the main Registry.
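The difference between the pull-based and build-based variants comes down to how the prepare step obtains the image. The sketch below is hypothetical and is not how Fabricio works internally. It only assembles the Docker CLI commands each variant would run on the administrator's machine for prepare -> push. `docker pull`, `docker build --tag`, `docker tag` and `docker push` are standard Docker commands. The function name, its parameters and the `localhost:5000` default are assumptions.

```python
def prepare_and_push_commands(image, tag='latest', registry='localhost:5000',
                              build_path=None):
    """Return the docker commands for prepare -> push (hypothetical sketch).

    If `build_path` is None, the image is pulled from the main registry
    (pull-based variant); otherwise it is built from local sources
    (build-based variant).
    """
    local = '{0}:{1}'.format(image, tag)
    remote = '{0}/{1}'.format(registry, local)
    if build_path is None:
        prepare = ['docker', 'pull', local]
    else:
        prepare = ['docker', 'build', '--tag', local, build_path]
    return [
        prepare,
        ['docker', 'tag', local, remote],  # give the image its registry name
        ['docker', 'push', remote],  # upload it to the private registry
    ]
```

Each returned list could be passed as-is to `subprocess.check_call`. Only the first command depends on the variant, which is why switching between PullDockerTasks and BuildDockerTasks changes so little in the config.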

PullDockerTasks and BuildDockerTasks require the Docker client to be installed on the administrator's computer. Since the announcement of Docker's public beta for macOS and Windows, this is much less of a headache.

Fabricio is a fully open-source project, and any improvements are welcome. We ourselves continue to add new features, fix bugs and eliminate inaccuracies, constantly improving a tool we rely on. Currently, Fabricio's main features are:

- building Docker images
- launching containers from images with arbitrary tags
- compatibility with Fabric's parallel execution mode across multiple hosts
- rolling back to the previous state (rollback)
- working with public and private Docker Registries
- grouping typical tasks into separate classes
- automatically applying and rolling back Django application migrations
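Rolling back Django migrations needs one extra step. Before reverting to the previous container, the database has to be migrated back to the state the previous code expects. One way to compute that, sketched here under assumptions, is to compare the migrations known to the current and the previous version. For each app, the target is the last migration the previous version has, or `zero` if it has none. The input format and function name are hypothetical. The resulting targets follow Django's `manage.py migrate <app_label> <migration_name>` convention.

```python
from collections import OrderedDict


def migrate_back_targets(current, previous):
    """Compute `manage.py migrate` targets for rolling back to `previous`.

    Both arguments are assumed to be lists of (app_label, migration_name)
    pairs, ordered as the migrations are applied.
    Returns {app_label: target}, where target is the last migration that the
    previous version knows for that app, or 'zero' if it knows none.
    """
    previous_set = set(previous)
    last_previous = OrderedDict()
    for app, migration in previous:
        last_previous[app] = migration  # later entries overwrite earlier ones
    targets = OrderedDict()
    for app, migration in current:
        # only apps that have migrations unknown to the previous version
        if (app, migration) not in previous_set and app not in targets:
            targets[app] = last_previous.get(app, 'zero')
    return targets
```

Each entry would then become a command such as `python manage.py migrate blog 0001_initial`. That command has to run with the current version's code, because only it contains the migration files that need to be unapplied. After that, the container can be reverted.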

You can install and try Fabricio through the standard Python package manager:

pip install --upgrade fabricio

Support is currently limited to Python 2.5-2.7. This restriction follows directly from the Python versions supported by Fabric itself. We hope Fabric will be able to run on Python 3 in the near future. That said, this is not especially pressing: Python 2 is the default in most Linux distributions, as well as in macOS.

I will be glad to answer any questions in the comments, as well as listen to constructive criticism.