How a plane crash can improve the analysis of IT failures

On the evening of August 16, 1987, Northwest Airlines Flight 255 took off from Detroit airport. It crashed a minute later, and 156 people died. What looked like an obvious pilot error led to research involving NASA and to changes in aircraft design and flight procedures. This story also bears on quality management, project management, and the question of guilt and punishment, not only for the assemblers of the Soyuz MS-10 spacecraft that suffered an accident, but for anyone who makes mistakes at work.

Photos from the accident site from the Bureau of Aviation Incidents Archives

The evening of August 16, 1987 in Detroit did not please the weather. A thunderstorm was approaching, and the crew of Flight 255 of Northwest Airlines was in a hurry to continue the flight - Detroit was the third of five airports on the route, and the schedule was already half an hour behind. Under the law of meanness, the direction of the wind changed, and the controllers redirected the plane to another lane. It was the shortest in the entire airport, so the co-pilot had to recalculate the aircraft mass and its mileage during takeoff, to make sure that it was possible to take off from it. The trouble does not come alone - in the evening, in the rain, the crew was lost on the taxiways, and when the plane finally left the runway, the schedule was already 45 minutes behind.

The take-off took place at 20:44, and it immediately became clear that something had gone completely wrong, a warning about the danger of stalling sounded in the cockpit, the plane did not want to gain altitude, and tilted from side to side. A few seconds later, he crashed into a lighting pole in the parking lot, lost part of the wing and, already uncontrolled, fell onto the road, slid along it to the overpass, crashing into which it completely collapsed. In the crash killed both pilots, four flight attendants, 149 passengers and two people in a crushed car. Five more people on the ground were injured. The only survivor was four-year-old Cecilia Sheen, who lost her father, mother, and older brother in a disaster.

Flight pattern, source

The physical evidence and the data of the black boxes showed an amazing thing - an experienced and professional crew made an absolutely “childish” mistake - forgot to release the flaps!

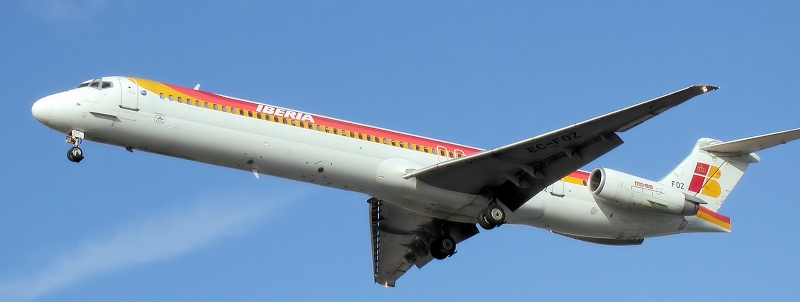

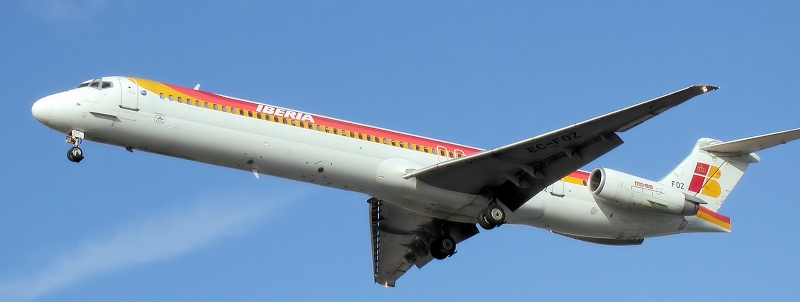

An airplane of the MD-80 family, similar to the one that crashed, photo taken Wikimedia Commons

Larger photo, wing mechanization is clearly visible

The investigation showed that not only flaps were forgotten, but a whole flight card (checklist) of several points that had to be performed on the taxi. During the take-off, the crew even had a hint that they missed something - the on-board computer was not in flight mode, and the traction machine did not turn on. But a person, especially under stress and haste, is able not to notice or forget anything - the crew ignored the hint and did not interrupt the take-off.

There are no fewer questions, on the contrary. Just in case the crew forgot to prepare the plane for take-off, a special system was created that was supposed to give a signal that the flaps and slats are not in the take-off position. But for some reason she was silent. Further, the signal about the danger of stalling had to go for reliability from two speakers, and came only from one. The official investigation established that, for some unknown reason, the P-40 switch, which is located behind the left-hand seat, was opened - this switch simultaneously powered both the warning about the unattended configuration and one of the loudspeaker warning system speakers. The panel with this switch was badly damaged in the crash, and it was impossible to establish whether the switch broke down or was turned off manually.

The switch panel on the left side of the MD-80 cab, the source of the

National Transportation Safety Board (NTSB) investigator John Clark asked the pilot of the same type with the crashed MD-80 to show various warnings as part of the investigation. The pilot also reproduced the correct stall warning from two speakers, and an indication of unreleased flaps. In order to demonstrate the monophonic signal heard in the fateful flight, one of the channels had to be turned off. And the pilot said, “I, of course, never did this, but, they say, some people specifically turn off the warning of unreleased flaps, because it often falsely works on the taxi and is very annoying”, then, without looking, I reached for the very same P-40 switch behind my back and turned off it with the usual movement. And on the switch panel around the P-40 there was more dirt, as if it was constantly turned on and off ...

So the seemingly obvious conclusion “pilot error” basically masked two big problems:

Specially I repeat once again - experienced professionals make mistakes too. Attempts to remedy the situation with repressive measures would have fought the investigation, not the cause — the crew of flight 255 was not irresponsible, and, of course, did not want to die and take innocent people to the grave. The commander of the ship worked in this airline for 31 years, the co-pilot had a flying time of several thousand hours, both of them, according to colleagues, were good pilots, but this did not help at all.

Alas, they did not manage to find solutions quickly - next year for the same reason (they forgot to release flaps) another model aircraft, the Boeing 727, crashed in Dallas. Of the 108 people aboard, 14 were killed. There, however, the crew’s fault was greater - the pilots clearly violated the “sterile” cockpit rule and talked with an incoming flight attendant before takeoff. But on the recording of the voice recorder, the co-pilot says that the flaps are released at the correct angle, despite the fact that this is not the case.

The problem of control cards dealt with in NASA. As a result, recommendations for improvements appeared, starting from the length of the checklist and ending with the font they were printed on. Moreover, in the late 80s computers were actively introduced into aviation, and checklists began to make electronic ones - the computer not only remembers where you stopped at, if you were distracted, but also checked if you did what you noted as done. But, alas, there is still something to improve - the An-148 crash in February of this year was due to the fact that the pilots put off one of the checklist points and forgot to execute it later (for fairness, it should be noted that the aircraft designers also failed was to perform in advance in a relaxed atmosphere).

False positives were removed not only on the MD-80, but also on other machines - they changed the requirements for the system as a whole, so that the flights became safer.

The history of flight 255, in my opinion, bears conclusions that we can apply in ourselves, in IT.

One of the methodologies for implementing these principles is called autopsy without blame (“autopsy without charges”). The variant used in the company where I work is called solution without blame, SWOB (“decision without charges”). Let me briefly quote his principles:

Objectives:

Important:

Promises:

Organizational highlights:

If the pilots could have conveyed to the designers that the false alarms of the warning system for unreleased flaps are very disturbing, then the crash of flight 255 might not have happened. According to the “MS-10 Alliance”, there has not yet been any news on how exactly the sensor has bent , but in the case of the introduction of SWOB analogues, the following scenarios are quite possible:

There is no silver bullet, but SWOB ideas can help one of you. Good luck in improving the processes!

Photos from the crash site, from the Bureau of Aircraft Accidents Archives

Catastrophe

The weather in Detroit on the evening of August 16, 1987 was unkind. A thunderstorm was approaching, and the crew of Northwest Airlines Flight 255 was in a hurry to continue: Detroit was the third of five airports on the route, and the flight was already half an hour behind schedule. As luck would have it, the wind changed direction, and the controllers redirected the plane to another runway. It was the shortest at the airport, so the first officer had to recalculate the aircraft's weight and takeoff roll to make sure it could take off from it. Troubles never come alone: in the dark and the rain, the crew got lost on the taxiways, and by the time the plane finally reached the runway, it was 45 minutes behind schedule.

The takeoff began at 20:44, and it was immediately clear that something had gone badly wrong: a stall warning sounded in the cockpit, and the plane would not climb and rolled from side to side. A few seconds later it struck a light pole in a parking lot, lost part of a wing and, now uncontrollable, fell onto a road and slid along it into an overpass, where it broke apart completely. The crash killed both pilots, four flight attendants, 148 of the 149 passengers, and two people in a crushed car. Five more people on the ground were injured. The only survivor was four-year-old Cecelia Cichan, who lost her father, mother, and older brother in the disaster.

Flight pattern, source

Investigation

The physical evidence and the flight recorder data revealed something astonishing: an experienced, professional crew had made an utterly “childish” mistake and forgot to extend the flaps!

An airplane of the MD-80 family, similar to the one that crashed, photo taken Wikimedia Commons

Larger photo, wing mechanization is clearly visible

A quick primer. Slats and flaps are movable surfaces at the leading and trailing edges of the wing that deflect and/or slide out to create additional lift at low speed. They are used for takeoff and landing.

The investigation showed that the crew had skipped not just the flaps but an entire checklist of several items that was supposed to be performed during taxi. During the takeoff roll the crew even got a hint that they had missed something: the on-board computer was not in flight mode, and the autothrottle would not engage. But people, especially under stress and in a hurry, can overlook or forget anything: the crew ignored the hint and did not abort the takeoff.

That did not reduce the number of questions; quite the opposite. Precisely for the case where a crew forgets to configure the plane for takeoff, there was a dedicated system meant to warn that the flaps and slats are not in the takeoff position. But for some reason it stayed silent. Furthermore, the stall warning was supposed to sound from two speakers for redundancy, but it came from only one. The official investigation established that, for an unknown reason, the P-40 circuit breaker, located behind the left seat, was open. This breaker powered both the takeoff configuration warning and one of the stall warning speakers. The panel holding it was badly damaged in the crash, and it was impossible to determine whether the breaker had failed or had been switched off by hand.

The circuit breaker panel on the left side of the MD-80 cockpit, source

As part of the investigation, National Transportation Safety Board (NTSB) investigator John Clark asked a pilot who flew the same type as the crashed MD-80 to demonstrate the various warnings. The pilot reproduced both the correct stall warning from two speakers and the warning for unextended flaps. To demonstrate the single-speaker signal heard on the fatal flight, one of the channels had to be switched off. The pilot said, “Of course, I have never done this myself, but they say some people deliberately switch off the flap warning, because it often goes off falsely during taxi and is very annoying.” Then, without looking, he reached behind his back for that very P-40 breaker and switched it off with a practiced motion. And on the panel there was more dirt around the P-40 than elsewhere, as if it were constantly being switched on and off...

So the seemingly obvious conclusion of “pilot error” in fact masked two big problems:

- Something is wrong with the checklists, since crews forget to run them.

- Unless the false alarms of the flap warning system are eliminated, pilots will be tempted to switch it off.

Let me stress this once more: experienced professionals make mistakes too. Trying to fix the situation with punitive measures would have fought the effect, not the cause. The crew of Flight 255 was not irresponsible and certainly did not want to die and take innocent people to the grave. The captain had worked for the airline for 31 years, the first officer had several thousand flight hours, and both were, according to colleagues, good pilots, yet none of that helped.

Alas, solutions did not arrive quickly: the following year a different type of aircraft, a Boeing 727, crashed in Dallas for the same reason (the flaps were not extended). Of the 108 people aboard, 14 were killed. There, however, the crew bore more of the blame: the pilots clearly violated the “sterile cockpit” rule and chatted with a flight attendant who had come in before takeoff. Yet on the cockpit voice recording the first officer says the flaps are set to the correct angle, even though they were not.

NASA took up the checklist problem. The result was a set of recommendations for improvement, covering everything from the length of a checklist to the font it is printed in. Moreover, in the late 1980s computers were being actively introduced in aviation, and checklists started to go electronic: the computer not only remembers where you stopped if you were distracted, but also checks whether you actually did what you marked as done. But, alas, there is still room for improvement: the An-148 crash in February of this year happened because the pilots postponed one of the checklist items and then forgot to perform it (in fairness, the aircraft designers also slipped up: that item could have been performed in advance, in a calm setting).
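For readers from IT, the electronic checklist idea described above can be illustrated with a minimal Python sketch. It is only an illustration under my own assumptions, not how any real avionics system works: the class names, the `verify` probes, and the `flaps_extended` / `trim_set` state keys are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class ChecklistItem:
    name: str
    # Hypothetical probe: True only if the actual system state matches the item.
    verify: Callable[[Dict[str, bool]], bool]
    marked_done: bool = False


class ElectronicChecklist:
    def __init__(self, items: List[ChecklistItem]) -> None:
        self.items = items

    def next_pending(self) -> Optional[str]:
        """Remember where the crew stopped: the first item not marked as done."""
        for item in self.items:
            if not item.marked_done:
                return item.name
        return None

    def mark_done(self, name: str) -> None:
        for item in self.items:
            if item.name == name:
                item.marked_done = True
                return
        raise KeyError(name)

    def discrepancies(self, state: Dict[str, bool]) -> List[str]:
        """List items that are unmarked, or marked as done but not confirmed."""
        problems = []
        for item in self.items:
            if not item.marked_done:
                problems.append(f"{item.name}: not marked as done")
            elif not item.verify(state):
                problems.append(f"{item.name}: marked as done, but the state disagrees")
        return problems


# Hypothetical taxi checklist and sensor state.
taxi = ElectronicChecklist([
    ChecklistItem("flaps", lambda s: s["flaps_extended"]),
    ChecklistItem("trim", lambda s: s["trim_set"]),
])
state = {"flaps_extended": False, "trim_set": True}

taxi.mark_done("flaps")            # someone says "flaps set", but they are not
print(taxi.next_pending())         # the item to resume from after a distraction
print(taxi.discrepancies(state))   # both the skipped item and the false claim
```

The point of the design is that "marked as done" and "actually done" are tracked separately, so a confident but wrong callout still shows up as a discrepancy.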

False alarms were eliminated not only on the MD-80 but on other aircraft as well: the requirements for the system as a whole were changed, and flying became safer.

What does this have to do with IT?

The story of Flight 255, in my view, offers conclusions that we can apply to ourselves in IT.

- The organization should be open to change: if someone sees that something is wrong, it is best if they can speak up about it and be heard rather than punished.

- When analyzing a failure, we should strive to find the true causes rather than hunt for the guilty. Fixing problems matters more than punishment.

One of the methodologies for implementing these principles is called autopsy without blame. The variant used at the company where I work is called solution without blame, SWOB. Let me briefly quote its principles:

Objectives:

- The incident is examined by the employees involved in it, with the sole purpose of finding out what can be improved and/or learning from the mistakes (only those who do nothing make no mistakes).

- Record the conclusions for other teams, so that they can learn something new and/or offer their own ideas.

Important:

- Process improvement is everyone’s business.

- Everyone will be heard.

- Management is open to new ideas.

Promises:

- Do not be afraid to dig deep and voice even unpleasant causes. No one will ever be punished for taking part in a SWOB or for anything uncovered at one.

- The only thing that is not tolerated is disrespect for one another.

Organizational highlights:

- Choose an independent facilitator who will take notes and moderate the discussion. Facilitators are chosen from among well-respected people, so being one is an honor. A manager, however, cannot be chosen as facilitator.

- It is best to hold a SWOB not immediately after the failure, so that people can calm down, but not too late either, so that they do not forget what happened. The ideal time is within a week of the problem.

- Documentation format: first a description of the event, then ideas for improving the processes.
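If your team keeps such write-ups in a repository, the organizational points above could be captured in a small structure like the following Python sketch. The field names, the `warnings` checks, and the sample data are my own assumptions for illustration; SWOB itself does not prescribe any tooling.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Set


@dataclass
class SwobRecord:
    incident_date: date
    meeting_date: date
    facilitator: str
    description: str                                            # first: what happened
    improvement_ideas: List[str] = field(default_factory=list)  # then: ideas

    def warnings(self, managers: Set[str]) -> List[str]:
        """Check the organizational rules and return human-readable warnings."""
        found = []
        if self.facilitator in managers:
            found.append("facilitator must not be a manager")
        delay = (self.meeting_date - self.incident_date).days
        if delay < 0:
            found.append("meeting is dated before the incident")
        elif delay > 7:
            found.append("meeting held more than a week after the incident")
        return found

    def render(self) -> str:
        """Event description first, then the ideas for improvement."""
        lines = ["Event:", self.description, "", "Ideas for improvement:"]
        lines += [f"- {idea}" for idea in self.improvement_ideas]
        return "\n".join(lines)


# Hypothetical example data.
record = SwobRecord(
    incident_date=date(2018, 10, 1),
    meeting_date=date(2018, 10, 4),
    facilitator="alice",
    description="Nightly deploy failed because a checklist step was skipped.",
    improvement_ideas=["Automate the skipped step", "Shorten the checklist"],
)
print(record.warnings(managers={"bob"}))
print(record.render())
```

Note that the record deliberately has no field for "who is to blame": the structure only leaves room for the event and the improvements.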

Conclusion

If the pilots had been able to get across to the designers that the false alarms of the flap warning system were a serious nuisance, the crash of Flight 255 might not have happened. As for Soyuz MS-10, there has been no news yet on exactly how the sensor got bent, but had something like SWOB been in place, the following scenarios would be quite possible:

- The assemblers accidentally bent the sensor but, knowing they would not be punished for admitting it, reported it; the sensor was replaced, and there was no accident.

- The assemblers did not understand some operation; knowing they would not be punished, they said so and were trained, and there was no accident.

- The assemblers found the work awkward to perform; knowing they would not be punished, they spoke up, the processes were changed, and there was no accident.

There is no silver bullet, but the ideas behind SWOB may help some of you. Good luck improving your processes!