Past, Present, and Future of Docker and Other Container Runtimes in Kubernetes

- Translation

Translator's note: We have published more than one article about container runtimes (see the links at the end of this article), usually in the context of Kubernetes. However, these materials often prompted questions from readers that revealed an incomplete understanding of where each new project came from, how it relates to the others, and what is going on in this container zoo in general.

A recent article by Phil Estes, technical director for Container Strategy and Linux Architecture at IBM Watson & Cloud Platform, offers an excellent retrospective that helps those who have lost (or never caught) the thread of events to find their bearings and gain a broader understanding. As one of the main maintainers of the Moby and containerd projects, a member of the Open Container Initiative (OCI) and Moby technical committees, and a Docker Captain, the author writes about the past, present, and future of the brave new world of container runtimes. And for the laziest readers, the material starts with a compact TL;DR on the subject...

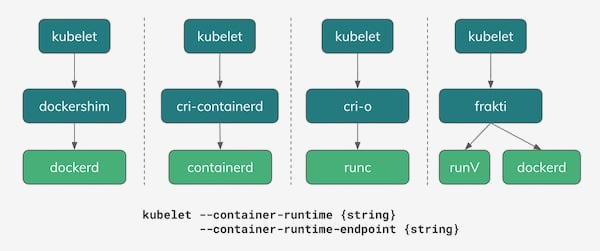

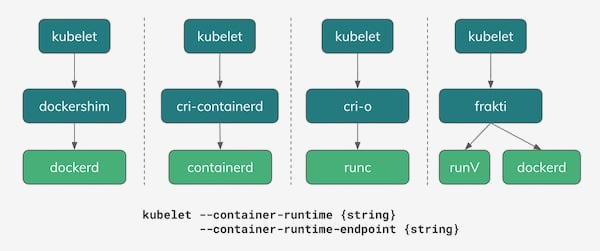

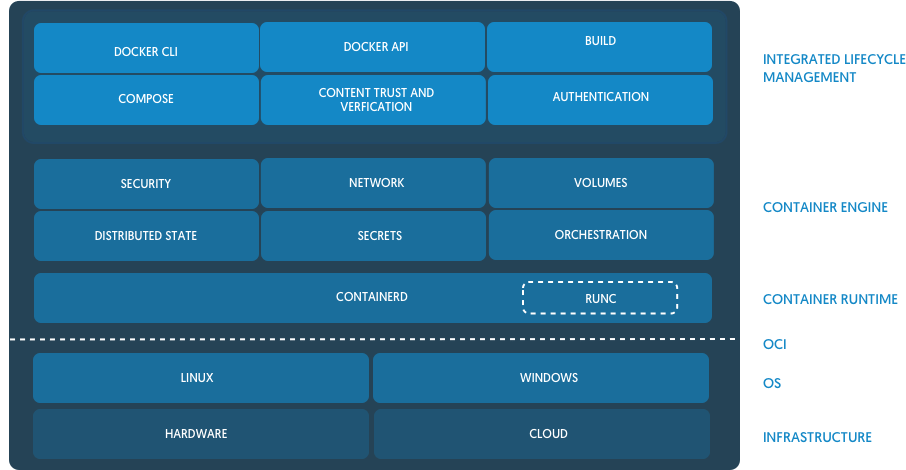

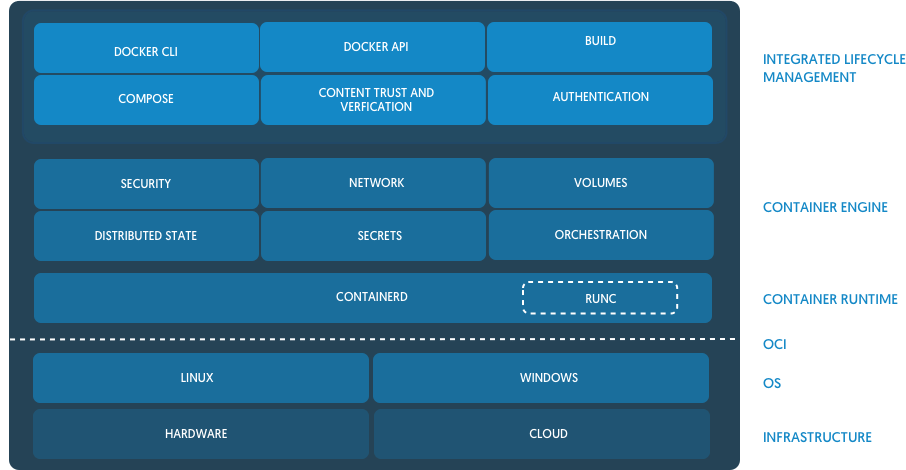


Main conclusions

- Over time, the choice of container runtimes has grown, offering more and more alternatives to the popular Docker engine.

- The Open Container Initiative (OCI) has successfully standardized the concepts of a container and a container image in order to ensure interoperability between runtimes.

- Kubernetes added the Container Runtime Interface (CRI), which allows container runtimes to be plugged into the underlying orchestration layer of K8s.

- Innovations in this area allow containers to take advantage of lightweight virtualization and other unique isolation techniques to meet growing security requirements.

- With OCI and CRI, interoperability and choice have become a reality in the ecosystem of container runtimes and orchestration platforms.

In the Linux world, containerization technology has been around for a long time: the first ideas about separate namespaces for file systems and processes appeared more than a decade ago. More recently, LXC appeared and became the standard way for Linux users to interact with the powerful isolation technology hidden in the Linux kernel.

However, despite LXC's attempts to hide the complexity of combining the various technological "innards" of what we now usually call a container, containers remained something of a dark art, established only among the especially knowledgeable and not widely adopted.

Everything changed in 2014 with Docker, a new, developer-friendly wrapper around the same Linux kernel technology that LXC was using (in fact, early versions of Docker used LXC behind the scenes). Containers became a truly mass phenomenon thanks to the simplicity and reusability of Docker container images and the simple commands for working with them.

Of course, Docker was not the only one that wanted a share of the container market once the hype showed no sign of subsiding after the initial explosive interest in 2014. Over the years, a variety of alternative ideas for container runtimes emerged: rkt from CoreOS, Intel Clear Containers, hyper.sh (lightweight container-based virtualization), as well as Singularity and shifter in the world of high-performance computing (HPC) research.

The market continued to grow and mature, and the Open Container Initiative (OCI) brought an effort to standardize the original ideas promoted by Docker. Today, many container runtimes are either already OCI-compatible or on the way there, giving vendors a level playing field on which to promote their features and capabilities aimed at particular use cases.

Kubernetes popularity

The next stage in the evolution of containers was to combine distributed computing in the style of microservices with containers, all in the new world of rapid development and deployment iterations (DevOps, one might say) that was actively gaining momentum along with Docker's popularity.

Although Apache Mesos and other software orchestration platforms existed before Kubernetes came to dominate, K8s rose rapidly from a small open source project from Google to the flagship project of the Cloud Native Computing Foundation (CNCF).

Translator's note: Did you know that CNCF appeared in 2015 on the occasion of the release of Kubernetes 1.0? At the same time, Google transferred the project to a new independent organization that became part of The Linux Foundation.

Event on the occasion of the release of K8s 1.0, sponsored by, among others, Mesosphere, CoreOS, Mirantis, OpenStack, and Bitnami

From the news about the release of Kubernetes 1.0 on ZDNet

Even after Docker released a rival orchestration platform, Swarm, built into Docker and offering Docker's simplicity and a focus on secure-by-default cluster configuration, this was no longer enough to stem the growing interest in Kubernetes.

However, many stakeholders outside the rapidly growing cloud native communities were confused. An ordinary observer could not figure out what was going on: was Kubernetes fighting Docker or cooperating with it? Since Kubernetes is only an orchestration platform, it needs a container runtime that directly launches the containers it orchestrates. From the very beginning, Kubernetes used the Docker engine and, despite the competitive tension between Swarm and Kubernetes, Docker remained the default runtime and was required for a Kubernetes cluster to function.

With only a small number of container runtimes other than Docker, it seemed clear that pairing a runtime with Kubernetes would require a specially written interface, a shim, for each runtime. The lack of a clear interface for container runtimes to implement made it very difficult to add support for new runtimes in Kubernetes.

Container Runtime Interface (CRI)

To address the growing complexity of integrating runtimes into Kubernetes, the community defined an interface, the specific functions that a container runtime must implement to work with Kubernetes, and called it the Container Runtime Interface (CRI) (it appeared in Kubernetes 1.5). This not only solved the problem of a growing number of places in the Kubernetes code base that were affected by the choice of container runtime, but also made clear exactly which functions a prospective runtime must support if it wants to comply with CRI.

As you might guess, CRI expects very simple things from a runtime. It must be able to start and stop pods, handle all container operations in the context of pods (start, stop, pause, kill, delete), and support managing container images via a registry. There are also auxiliary functions for collecting logs, metrics, and so on.

When a new Kubernetes feature depends on the container runtime layer, the corresponding changes are made to the versioned CRI API. This in turn creates a new functional dependency for Kubernetes and requires runtimes to release new versions that support the new features (a recent example is user namespaces).
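The division of labor described above can be illustrated with a minimal sketch. It is not the real CRI API (which is defined as a gRPC service); `ContainerRuntime`, `InMemoryRuntime`, `launch`, and the image name are hypothetical names chosen for illustration, and the method names are only loosely modeled on the kinds of operations the text lists (pod lifecycle, container lifecycle, image management).

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field


class ContainerRuntime(ABC):
    """Hypothetical, simplified stand-in for the operations CRI expects."""

    @abstractmethod
    def pull_image(self, image: str) -> None: ...

    @abstractmethod
    def run_pod_sandbox(self, pod_name: str) -> str: ...

    @abstractmethod
    def stop_pod_sandbox(self, pod_id: str) -> None: ...

    @abstractmethod
    def create_container(self, pod_id: str, image: str) -> str: ...

    @abstractmethod
    def start_container(self, container_id: str) -> None: ...

    @abstractmethod
    def stop_container(self, container_id: str) -> None: ...


@dataclass
class InMemoryRuntime(ContainerRuntime):
    """Toy runtime: it only records state in dictionaries and starts nothing."""

    images: set = field(default_factory=set)
    pods: dict = field(default_factory=dict)        # pod_id -> "ready" | "stopped"
    containers: dict = field(default_factory=dict)  # container_id -> state

    def pull_image(self, image):
        self.images.add(image)

    def run_pod_sandbox(self, pod_name):
        pod_id = f"pod-{pod_name}"
        self.pods[pod_id] = "ready"
        return pod_id

    def stop_pod_sandbox(self, pod_id):
        # Stopping a pod stops every container that belongs to it.
        for container_id in self.containers:
            if container_id.startswith(pod_id + "/"):
                self.containers[container_id] = "exited"
        self.pods[pod_id] = "stopped"

    def create_container(self, pod_id, image):
        if self.pods.get(pod_id) != "ready":
            raise RuntimeError(f"pod {pod_id!r} is not ready")
        if image not in self.images:
            raise RuntimeError(f"image {image!r} has not been pulled")
        container_id = f"{pod_id}/{len(self.containers)}"
        self.containers[container_id] = "created"
        return container_id

    def start_container(self, container_id):
        self.containers[container_id] = "running"

    def stop_container(self, container_id):
        self.containers[container_id] = "exited"


def launch(runtime: ContainerRuntime, pod_name: str, image: str) -> str:
    """Orchestrator-side logic: it depends only on the interface, not the runtime."""
    runtime.pull_image(image)
    pod_id = runtime.run_pod_sandbox(pod_name)
    container_id = runtime.create_container(pod_id, image)
    runtime.start_container(container_id)
    return container_id
```

Because `launch` talks only to the abstract interface, any class that implements the same methods could be swapped in without changing the orchestrator side, which is the property the text attributes to CRI.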

Current CRI landscape

As of 2018, there are several options for container runtimes in Kubernetes. As shown in the illustration below, one of the real options is still Docker with its dockershim, which implements the CRI API. In fact, in most of today's Kubernetes installations, Docker remains the default runtime.

One interesting consequence of the tension between Docker's Swarm orchestration strategy and the Kubernetes community was a joint project that took the core of the runtime out of Docker and turned it into a new, jointly developed open source project: containerd. Over time, containerd was donated to CNCF, the same organization that manages and owns the Kubernetes project. (Translator's note: We described the emergence of containerd in more detail in a separate article.)

From the announcement of containerd in the Docker blog

Containerd, being a simple, basic, and un-opinionated (independent of any particular company's views) runtime implementation for both Docker and Kubernetes (via CRI), began to gain popularity as a potential replacement for Docker in many Kubernetes installations. Today, containerd-based clusters in IBM Cloud and Google Cloud are in early access / beta. Microsoft Azure has also promised to switch to containerd in the future, and Amazon is still considering various runtime options for its container offerings (ECS and EKS) while continuing to use Docker.

Red Hat entered the container runtime space by creating a simple CRI implementation called cri-o, based on the OCI reference implementation, runc. Docker and containerd are also based on runc, but the creators of cri-o claim that their runtime is "just enough" for Kubernetes and nothing more is needed: they added only what is necessary to implement the Kubernetes CRI functions on top of the base runc binary. (Translator's note: We wrote more about the CRI-O project in this article, and about its further development in the form of podman here.)

The lightweight virtualization projects Intel Clear Containers and hyper.sh came together under the OpenStack Foundation as Kata containers and offer their vision of virtualized containers for additional isolation, using a CRI implementation called frakti. Both cri-o and containerd also work with Kata containers, so its OCI-compatible runtime can be selected as a pluggable option.

Predicting the future

Claiming to know the future is usually not very wise, but we can at least note some emerging trends as the container ecosystem moves from general excitement and hype to a more mature stage of its existence.

There were early concerns that the container ecosystem would become fragmented, with different participants arriving at differing and incompatible ideas about what a container is. Thanks to the work of OCI and the responsible actions of the main vendors and participants, we have instead seen a healthy industry environment in which software offerings favor OCI compatibility.

Even in newer environments where Docker's de facto standard has gained less traction due to existing constraints, for example in HPC, all attempts to create non-Docker container runtimes have also paid attention to OCI. There are ongoing discussions about whether OCI can be a viable solution for the specific needs of the scientific and research communities.

With CRI adding standardized pluggable container runtimes to Kubernetes, we can imagine a world in which developers and administrators choose the tools and software stacks that suit them while expecting and observing interoperability throughout the container ecosystem.

Consider a concrete example to better understand this world:

- A developer on a MacBook uses Docker for Mac to develop an application and even uses its built-in Kubernetes support (with Docker acting as the CRI runtime) to try deploying the new application on K8s.

- The application goes through CI/CD in vendor software that uses runc and custom (vendor-written) code to package an OCI image and push it to the corporate container registry for testing.

- An on-premises Kubernetes cluster, running containerd as the CRI runtime, runs the test suite for the application.

- For some reason, the company has chosen Kata containers for certain production workloads, so when the application is deployed, it runs in pods with containerd configured to use Kata containers as the runtime instead of runc.

The entire scenario described above works perfectly thanks to OCI compatibility for runtimes and images, and because CRI provides the flexibility to choose a runtime.
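The last step of the scenario, where the same image runs under a different low-level runtime for selected workloads, might be pictured roughly as follows. This is a sketch under stated assumptions and not containerd's actual configuration format: the handler table, the binary names, the `Workload` type, and `resolve_runtime` are all hypothetical and exist only to show the idea of a per-workload runtime choice.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical handler table: name requested by a workload -> OCI runtime binary.
# The binary names are illustrative assumptions, not a real configuration.
HANDLERS = {"runc": "runc", "kata": "kata-runtime"}
DEFAULT_HANDLER = "runc"


@dataclass(frozen=True)
class Workload:
    name: str
    image: str                      # the same OCI image works with any handler
    handler: Optional[str] = None   # None means "use the cluster default"


def resolve_runtime(workload: Workload,
                    handlers: dict = HANDLERS,
                    default: str = DEFAULT_HANDLER) -> str:
    """Pick the low-level OCI runtime for a workload, falling back to the default."""
    key = workload.handler or default
    try:
        return handlers[key]
    except KeyError:
        raise ValueError(f"unknown runtime handler: {key!r}") from None


# The test suite runs on the default runtime; a production workload asks for
# extra isolation. Both reference the same image.
tests = Workload("test-suite", "registry.example/app:1.0")
payments = Workload("payments", "registry.example/app:1.0", handler="kata")
```

Here `resolve_runtime(tests)` falls back to the default handler while `resolve_runtime(payments)` selects the Kata entry; the image reference is identical in both cases, which is the point the scenario makes about OCI compatibility.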

This flexibility and choice make the container ecosystem truly remarkable and are an important condition for the maturity of an industry that has grown so rapidly since 2014. On the threshold of 2019 and the years that follow, I see a bright future with continued innovation and flexibility for those who use and build container-based platforms.

Further information on this topic can be found in a recent talk by Phil Estes at QCon NY: "CRI Runtimes Deep Dive: Who's Running My Kubernetes Pod!?"

P.S. from the translator

Read also in our blog: