Algorithms of the Mind, or What Machine Learning Tells Us About Ourselves

- Translation

“Science often follows technology, because discoveries give us new methods of understanding the world and new phenomena that need to be explained.”

So says Aram Harrow, professor of physics at the Massachusetts Institute of Technology, in his article “Why now is the right time to study quantum computing”.

He argues that the scientific idea of entropy could not be fully understood until steam engine technology made thermodynamics a practical necessity. Similarly, quantum computing grew out of attempts to simulate quantum mechanics on ordinary computers.

So what does all this have to do with machine learning?

Like the steam engine, machine learning is a technology designed to solve specific classes of problems. Yet the results obtained in this area offer intriguing, perhaps fundamental, scientific hints about how the human brain functions, how it perceives the world around it, and how it learns. Machine learning gives us new ways to think about the science of human thought... and imagination.

Not machine recognition, but machine imagination

Five years ago, deep learning pioneer Geoff Hinton, who now divides his time between the University of Toronto and Google, published the following video.

Hinton trained a five-layer neural network to recognize handwritten digits from bitmap images. It was a form of machine object recognition that made handwriting machine-readable.

But unlike earlier work in the same field, where the only goal was to recognize the digits, Hinton's neural network could also run the process in reverse. That is, given the concept of a symbol, it could recreate an image corresponding to that concept.

We see the machine quite literally imagining an image of the concept "8".

The magic is encoded in the layers between the inputs and the outputs. These layers act as a kind of associative memory that maps in both directions (from image to concept and back) within a single neural network.
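A memory that maps in both directions can be pictured with a toy sketch. This is not Hinton's network, which has several hidden layers and is trained on real handwriting; it is a much simpler prototype lookup. The 3x3 bitmaps, the function names `recognize` and `imagine`, and the weight table are all assumptions made for illustration.

```python
# Toy associative memory: one weight table, read in both directions.
# Assumption: 3x3 bipolar bitmaps (+1 = ink, -1 = blank) stand in for digit images.
PROTOTYPES = {
    "0": [1, 1, 1,
          1, -1, 1,
          1, 1, 1],
    "1": [-1, 1, -1,
          -1, 1, -1,
          -1, 1, -1],
}

CONCEPTS = sorted(PROTOTYPES)  # fixed column order for the weight table

# WEIGHTS[pixel][concept]: one row per pixel, one column per concept.
WEIGHTS = [[PROTOTYPES[c][p] for c in CONCEPTS] for p in range(9)]


def recognize(image):
    """Image -> concept: score every concept column against the pixels."""
    scores = [sum(image[p] * WEIGHTS[p][j] for p in range(9))
              for j in range(len(CONCEPTS))]
    return CONCEPTS[scores.index(max(scores))]


def imagine(concept):
    """Concept -> image: read the same weights back out of that concept's column."""
    j = CONCEPTS.index(concept)
    return [WEIGHTS[p][j] for p in range(9)]


noisy_zero = [-1] + PROTOTYPES["0"][1:]  # top-left pixel flipped
print(recognize(noisy_zero))
print(imagine("1"))
```

The point of the sketch is only that `recognize` and `imagine` share one set of weights: nothing extra is stored for the reverse direction.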

Can human imagination work like that?

But behind this simplified technology, modeled on the human brain, lies a broader scientific question: does human imagination (the creation of visual images, visualization) work the same way? If so, this is a significant discovery.

After all, isn't this what our brain does quite naturally? When we see the digit 4, we think of the concept "4". And vice versa: when someone says “8”, we can picture the shape of the figure 8. Isn't imagination a kind of “reverse process” in which the mind goes from a concept to an image (or a sound, a smell, a feeling, etc.) through the information stored in the layers? Did we not just watch this network create new images, and might an improved version not create new internal connections as well?

Concept and contemplation

If visual recognition and imagination really are just a two-way mapping between images and concepts, what happens between these layers? Can deep neural networks achieve perception through contemplation or some similar process?

Let's look back: 234 years ago, Immanuel Kant published the Critique of Pure Reason, a philosophical work in which he contends that contemplation (Kant's Anschauung, usually rendered in English as “intuition”) is nothing but the representation of a phenomenon.

Kant opposed the idea that human knowledge could be explained by empirical and rational thinking alone. He argued that cognition through contemplation must also be taken into account. By “contemplation” he means representations obtained through sensory perception, whereas “concepts” are descriptions of empirical objects or sensations. Together they form human knowledge.

Two centuries later, Professor Alyosha Efros of the computer science department at the University of California, Berkeley, who specializes in visual understanding, noted: “The visual picture of the world around us has much more details than the words that we have to describe them”. According to Efros, using words to train models imposes a language restriction on our technologies. There are far more unnamed contemplated phenomena than there are words.

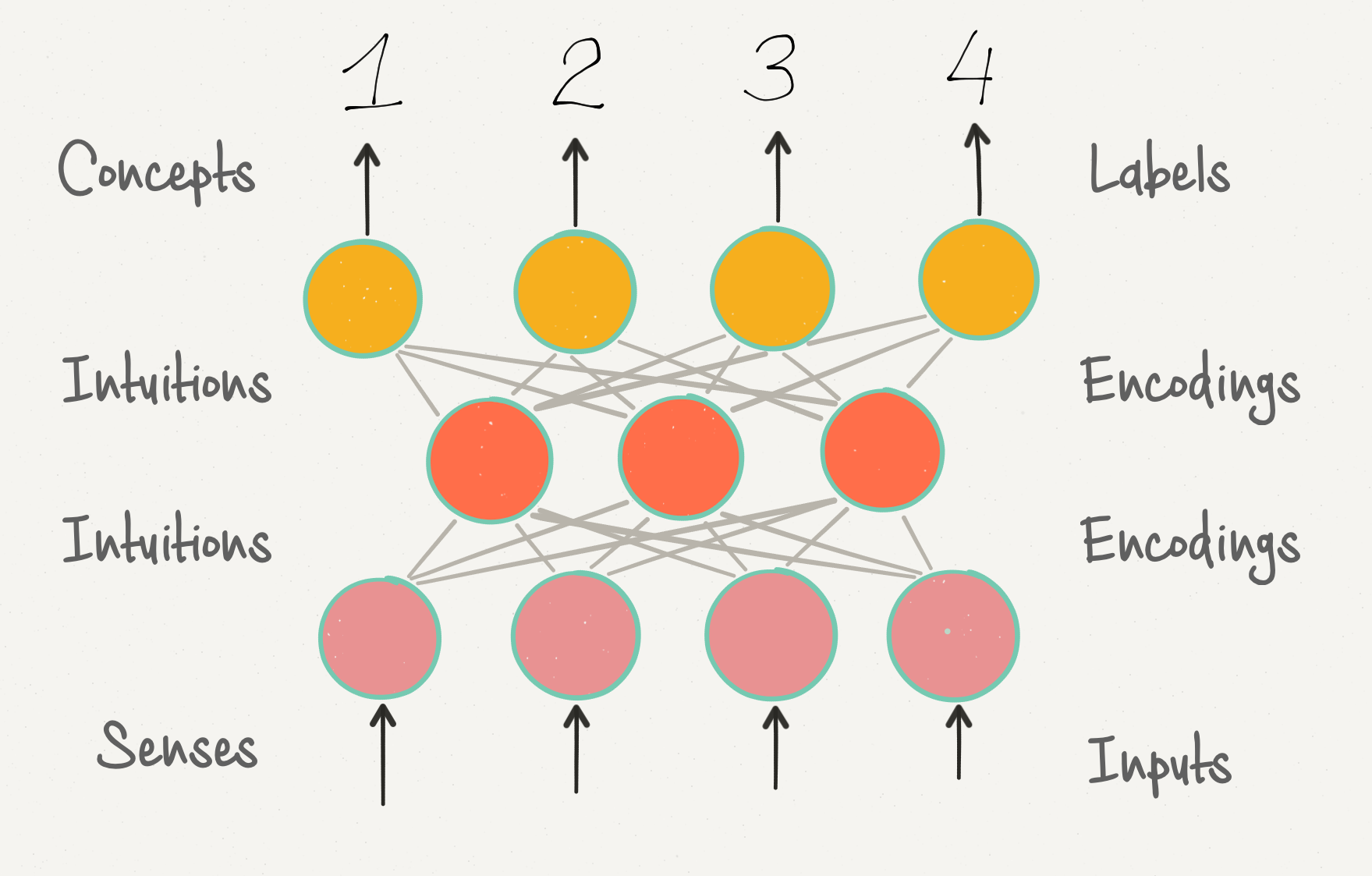

Here we observe an intriguing correspondence between a label in machine learning and a human concept, and between an encoding in machine learning and human contemplation.

When training deep networks, we find that activations in successive layers progress from lower to higher conceptual levels, as shown by the “cat recognition” study led by Quoc Le of Google and Stanford University. An image recognition network encodes raw pixels in the lowest layer, visible corners and edges in the next layer, simple shapes in the layer after that, and so on. These intermediate layers need not have any activations that correspond to high-level concepts such as “cat” or “dog”, yet they still encode a distributed representation of the sensory input. Only the final output layer maps onto the labels defined by a person, because it is constrained to match them.

Is this not contemplation?

Consequently, the encodings and labels discussed above seem to be what Kant called “contemplation” and “concepts”.

This is another example of how machine learning technology helps us understand the principles of human thinking. The network diagram presented above makes us wonder whether it is a greatly simplified architecture of contemplation.

Controversy surrounding the Sapir-Whorf hypothesis

As Efros noted, there are far more phenomena in the world than words to describe them. Are our thoughts then limited by our words? This question is at the center of the Sapir-Whorf hypothesis of linguistic relativity and of the debate over whether language completely defines the boundaries of our knowledge, or whether we are naturally able to comprehend any phenomenon regardless of the language we speak.

In its strong form, this hypothesis holds that the structure and vocabulary of a language constrain how a person perceives and understands the world.

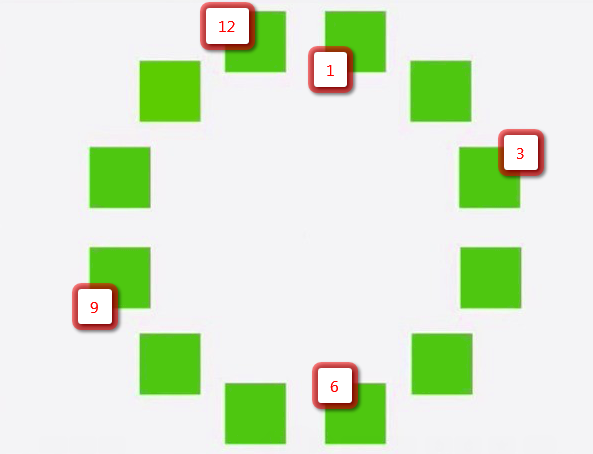

Some of the most striking results come from the color classification test shown here. When asked to find the square whose shade differs from all the rest, members of the Himba people of northern Namibia, whose language has distinct names for the two shades, find it almost immediately.

The rest of us, meanwhile, have a hard time with it.

The theory is that once we have words for different shades, our brain trains itself to tell them apart, so over time the differences become more "obvious". Because we see with our brain rather than with our eyes, language shapes what we perceive.

We see with our brain, not with our eyes.

In machine learning, we observe something similar. In supervised learning, we train our models to predict the labels or categories of images (or of other inputs such as text or sound) as accurately as possible. By definition, these models are trained to distinguish labeled categories more effectively than categories for which no labels were provided. Seen from the point of view of supervised learning, this effect is not surprising. So perhaps we should not be too surprised by the results of the color test above either. Language really does affect our perception of the world, in the same way that labels in supervised learning affect a model's ability to distinguish categories.
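The effect of labels on what a model can tell apart can be sketched with a nearest-centroid classifier. This is a minimal illustration, not a model of the Himba experiment: hue is reduced to a single number, and every hue value and label name below is invented.

```python
# Sketch: a nearest-centroid classifier can only separate what its labels separate.
# Assumption: hue is one number in degrees; all values and labels are hypothetical.

def train(samples):
    """samples: list of (hue, label) pairs. Returns {label: mean hue}."""
    sums, counts = {}, {}
    for hue, label in samples:
        sums[label] = sums.get(label, 0.0) + hue
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}


def predict(centroids, hue):
    """Return the label whose mean hue is closest to the given hue."""
    return min(centroids, key=lambda label: abs(centroids[label] - hue))


def coarse_label(hue):
    """Vocabulary A: one word covers both green shades."""
    return "green" if hue < 200 else "blue"


def fine_label(hue):
    """Vocabulary B: the two green shades have separate names."""
    if hue < 120:
        return "green-1"
    return "green-2" if hue < 200 else "blue"


hues = [100, 105, 110, 130, 135, 140, 220, 225, 230]
coarse = train([(h, coarse_label(h)) for h in hues])
fine = train([(h, fine_label(h)) for h in hues])

# Same two test shades, two vocabularies.
print(predict(coarse, 105), predict(coarse, 135))
print(predict(fine, 105), predict(fine, 135))
```

Trained on the coarse vocabulary, the model gives both test shades the same answer; trained on the fine vocabulary, it separates them, although the input data are identical.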

But at the same time, we know that labels are not a prerequisite for the ability to distinguish categories. In Google's “cat recognition” project, the neural network ends up discovering the concepts of “cat”, “dog”, and so on entirely on its own, without ever being given labels. After such unsupervised training, whenever the network receives an image from a certain category (for example, “cats”), the same corresponding set of neurons is activated. Having looked at a large number of training images, the network found the features characteristic of each category, as well as the differences between categories.
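Grouping without labels can be sketched with k-means clustering. This is a swapped-in, much simpler technique than the neural network used in the Google project, and the one-dimensional "features" below are invented; the sketch only shows that inputs from the same group end up assigned to the same unit even though no label is ever supplied.

```python
# Sketch: discovering two groups without labels, using 1-D k-means with k = 2.
# Assumption: each number is an invented one-dimensional "feature" of an image.

def nearest(centers, x):
    """Index of the center closest to x."""
    return min(range(len(centers)), key=lambda i: abs(centers[i] - x))


def kmeans_two(data, rounds=10):
    """Cluster data into two groups; no labels are used anywhere."""
    centers = [min(data), max(data)]  # label-free, deterministic starting point
    for _ in range(rounds):
        groups = [[], []]
        for x in data:
            groups[nearest(centers, x)].append(x)
        # Move each center to the mean of its group (keep it if the group is empty).
        centers = [sum(g) / len(g) if g else c for g, c in zip(groups, centers)]
    return centers


features = [1.0, 5.0, 1.2, 4.8, 0.8, 5.2]
centers = kmeans_two(features)

# New inputs: the first two fall in one discovered group, the third in the other.
print(nearest(centers, 1.1), nearest(centers, 0.9), nearest(centers, 5.1))
```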

In the same way, an infant who has repeatedly been shown a paper cup will soon recognize its visual image, even before learning the words “paper cup” and associating the image with the name. In this sense, the strong form of the Sapir-Whorf hypothesis does not fully hold, because we can form concepts without having words to describe them, and we do.

Supervised and unsupervised machine learning turn out to be two sides of the same coin in this controversy. And if we recognize them as such, the Sapir-Whorf hypothesis stops being a subject of controversy and becomes a reflection of human learning with and without supervision.

I find this analogy incredibly fascinating, and we have only just begun to understand it. Philosophers, psychologists, linguists and neurophysiologists have long been studying this topic. When processing huge amounts of text, images or audio, the latest deep learning architectures achieve results comparable to or better than those of humans in image classification, language translation and speech recognition.

Each new discovery in machine learning gives us an opportunity to learn something new about the processes taking place in the human brain. When we talk about our own minds, we have more and more reason to refer to machine learning.

P.S. Can you see which square differs from the rest? Write your answer in the comments, using the clean picture to make your guess and the picture below to look up the square's number:

P.P.S. There is a theory that different monitors can make a different square stand out, and I (the translator) agree with it. For the most inquiring minds, a reminder that there are purely technical means of checking one's guess.