How to collect reports from employees and not get into Excel Hell

Earlier, we wrote about the Excel Hell problem and possible solutions. Today we want to consider in more detail one of the cases - namely, the mess that occurs when collecting data from employees or branches in Excel files.

The task of collecting data from departments is relevant if we do not have a single accounting system from which information can always be obtained. For medium and large businesses, these can be periodic reports sent to the parent company, and for small businesses, for example, daily time sheets or project reports.

There is a manager who is interested in obtaining summary information on departments. He sets the task to his closest subordinate (let's call him an “analyst”) to receive this report.

The obvious solution for the analyst is to create an Excel template that he sends out to departments. In the units, in turn, there are employees who own this information. They fill out templates and send files back to the analyst by mail. Half of them are mistaken and send the corrected file the next day.

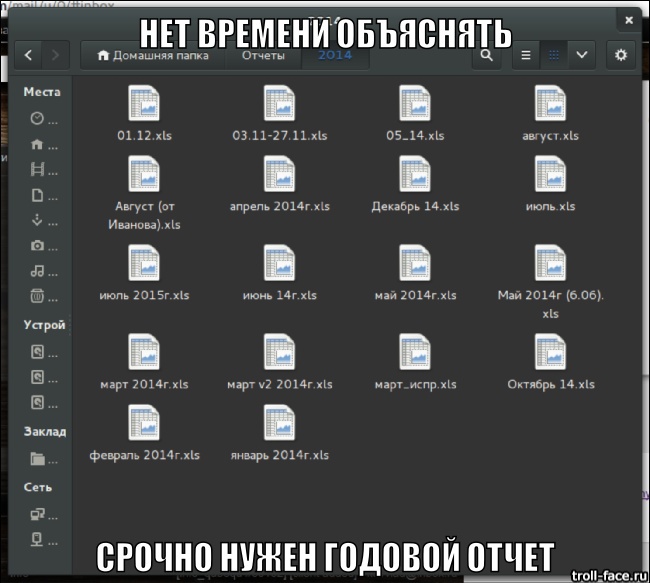

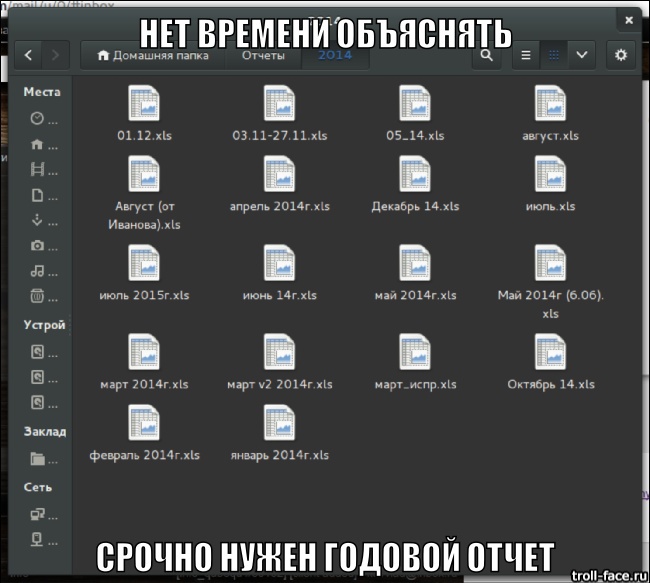

As a result, the analyst spends two days combining all these files into one and preparing a summary report. The manager after viewing the summary report understands that there is not enough data in it, and asks to add another column, which the analyst did not foresee. And the whole chain is repeated from the beginning, “v2.xls templates” are sent out, reporting employees fill them in and send them back. As a result, the analyst has two sets of data, and only he can understand which of them is relevant.

And now the manager begins to like this report and he wants to receive it monthly. After some time, he will want to watch it in dynamics and by unit, which means that our analyst will need to process all the accumulated files for previous periods, including sorting out their versions, merging them into a new summary report, etc. etc. In total, we received a large block of useful data that no one can use.

This is a typical situation of Excel Hell in a single organization.

Consider the main problems in this process.

Each of the problems voiced, of course, has a solution.

Errors when filling out? - You can limit the available values in the cells.

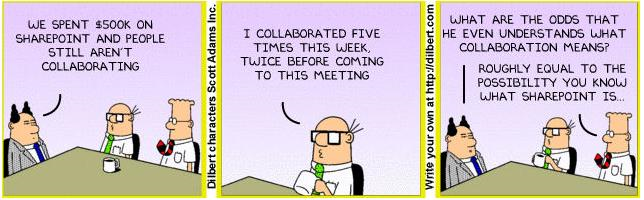

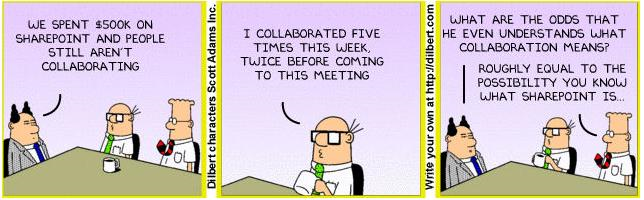

Different versions of the data? - create a spreadsheet in Google Spreadsheet or put Sharepoint.

Typical operations? - write a macro in Excel.

Historical data? - make an Excel pivot table or develop your own database.

Change of structure? - train SQL analytics, if at the last stage the database was created.

Efficiency? - Regulate the process and pay premiums for meeting deadlines.

However, all these solutions require a systematic approach, putting in order all processes and data sources, often also external consulting. You can still implement a year and a half integrated solution that automates all processes, thanks to which, theoretically, there will be no need to assemble such a report.

We think that there is a solution that does not require large expenses for the implementation and restructuring of your business processes, but facilitating access to your information and working with it.

There are a set of conditions that must be observed in this process.

Now we will try, subject to these conditions, to get rid of the problems described above:

We tried to implement all this in our system - QuBeQu (Kubeku). The basic unit of information is not a file, but an indicator with a set of analytical features. QuBeQu has a form and report designer, where the user can quickly create a form of a certain structure, consisting of a set of indicators with the specified characteristics. When filling out the form, the data will fall into a single repository, as the value of the indicator for the given values of the characteristics.

Accordingly, in the summary report, the values of this indicator will be automatically obtained (or calculated using the formula). For ease of filling out forms, we support import from Excel, copy-paste via the clipboard and keyboard navigation on the table. All data is fully versioned. Even if we delete the value in the cell, we can show what number was there, who entered it and who deleted it. In addition, to ensure the traceability of information, all calculated and aggregated values can be disclosed to the source data - you do not need to run through Excel cells and understand what this or that formula means. Formulas are not written in terms of the position of the cells in the table, but in terms of indicators and their characteristics, i.e. in QuBeQu, “Revenue” = ”Income” is “Expense”, not “A1 = B1-C1”.

You will probably say that supporting such a system is costly, because it doesn’t happen that “everything works by itself”. But try to compare how much time the analyst would spend on compiling information from files with the time that he would spend on the system. Even if these values are of the same order, we get a fundamentally different result.

All the data that we received from the reporting employees is structured, they can be quickly and easily used, they will not be lost. There is a single place for storing information, and if there are a lot of analysts, managers and reporting employees, a large amount of data will be accumulated there that can be investigated.

We have described one of the possible uses for QuBeQu. The capabilities of the system are wider than described in this article: connectors to external systems and databases, computing, versioning, dashboards and graphs.

The task of collecting data from departments is relevant if we do not have a single accounting system from which information can always be obtained. For medium and large businesses, these can be periodic reports sent to the parent company, and for small businesses, for example, daily time sheets or project reports.

As a rule, this process is organized as follows

There is a manager who is interested in obtaining summary information on departments. He sets the task to his closest subordinate (let's call him an “analyst”) to receive this report.

The obvious solution for the analyst is to create an Excel template that he sends out to departments. In the units, in turn, there are employees who own this information. They fill out templates and send files back to the analyst by mail. Half of them are mistaken and send the corrected file the next day.

As a result, the analyst spends two days combining all these files into one and preparing a summary report. The manager after viewing the summary report understands that there is not enough data in it, and asks to add another column, which the analyst did not foresee. And the whole chain is repeated from the beginning, “v2.xls templates” are sent out, reporting employees fill them in and send them back. As a result, the analyst has two sets of data, and only he can understand which of them is relevant.

And now the manager begins to like this report and he wants to receive it monthly. After some time, he will want to watch it in dynamics and by unit, which means that our analyst will need to process all the accumulated files for previous periods, including sorting out their versions, merging them into a new summary report, etc. etc. In total, we received a large block of useful data that no one can use.

This is a typical situation of Excel Hell in a single organization.

Consider the main problems in this process.

- Reporting persons enter data with errors.

By default, you can enter anything into the Excel cell. The analyst receives a set of files with different directories, time and number formats, etc. In extreme cases, reporting people manage to glue cells, add new columns or even send a completely different file.

- Different versions of the data.

Errors and changes in the initial requirements lead to the fact that the analyst and the reporting person accumulate several versions of the same reports and data and no one can understand where the latter is.

- The analyst constantly spends time on the same actions.

A typical operation to reduce data from files received from departments turns into the main work of the analyst, and at the output we have only a rigidly fixed report in which there are aggregated values. And the initial information, the verification of which the analyst spent a lot of time, is lost.

- No access to historical data.

The analyst spent a huge amount of time converging and processing data, made a summary report, probably even posted it in a common repository, but how to get the data out of there? How to analyze the dynamics of development? We need to again summarize and process the data only from the summary reports for different periods.

- Change report structure.

If the manager wants to look at the report in another section or add some data that did not exist before, or simply replace the chart view with another - what should I do with all the old reports? Rebuild or leave in "obsolete" form?

- Efficiency

It takes a certain amount of time to collect data and reduce it, for which this data already has time to become outdated.

Each of the problems voiced, of course, has a solution.

Errors when filling out? - You can limit the available values in the cells.

Different versions of the data? - create a spreadsheet in Google Spreadsheet or put Sharepoint.

Typical operations? - write a macro in Excel.

Historical data? - make an Excel pivot table or develop your own database.

Change of structure? - train SQL analytics, if at the last stage the database was created.

Efficiency? - Regulate the process and pay premiums for meeting deadlines.

However, all these solutions require a systematic approach, putting in order all processes and data sources, often also external consulting. You can still implement a year and a half integrated solution that automates all processes, thanks to which, theoretically, there will be no need to assemble such a report.

We think that there is a solution that does not require large expenses for the implementation and restructuring of your business processes, but facilitating access to your information and working with it.

As we see the proper organization of such a process

There are a set of conditions that must be observed in this process.

- Building a template for data collection should not take much time.

The analyst does not want to spend a lot of time thinking through the structure, setting restrictions on input, prohibiting file editing, etc., because at the beginning he still does not realize how important this information is and what he will need to process in the future.

- The manager should be able to change requirements.

Appetite comes with eating, and before receiving the first version of the report it is difficult to understand what data we actually need. This means that it should be possible to change both the structure of the data collected and the structure of the report, without having to re-collect the information already entered.

- Reporting employees should simply submit data.

It is so difficult to get the right report from them on time, and unnecessary problems in the organization of the process strongly affect discipline and efficiency.

- Not only the summary report has value, but also the source data.

Probably, in the future we will want to look at the data in a different context or to calculate new indicators based on them.

- Access to data should be available to all employees of the organization.

All employees should know where to get the data and how to do it.

Now we will try, subject to these conditions, to get rid of the problems described above:

- Reporting persons enter data with errors.

We need a tool that allows you to quickly configure a template for data input, which has basic restrictions on input - for numerical, text and reference data. At the same time, often reporting employees can not always use an external system, which means that you need to give them the opportunity to use the familiar Excel, and import data from there.

- Different versions of the data.

All entered data must be versioned and have a single working version. It should always be clear who introduced the meaning and when.

- Efficiency

The entered values should immediately go to the shared storage.

- Waste of time on typical actions.

Summary reports should be generated automatically, not require separate generation procedures.

- Problem with restructuring.

The generation of a summary report should not depend on the structure of the input forms; previously entered data should not be lost when adding or changing columns and rows in forms and reports.

- Access to historical data.

The entered data should not be stored in forms and files - it should immediately fit into a structure convenient for work and selection.

We tried to implement all this in our system - QuBeQu (Kubeku). The basic unit of information is not a file, but an indicator with a set of analytical features. QuBeQu has a form and report designer, where the user can quickly create a form of a certain structure, consisting of a set of indicators with the specified characteristics. When filling out the form, the data will fall into a single repository, as the value of the indicator for the given values of the characteristics.

Accordingly, in the summary report, the values of this indicator will be automatically obtained (or calculated using the formula). For ease of filling out forms, we support import from Excel, copy-paste via the clipboard and keyboard navigation on the table. All data is fully versioned. Even if we delete the value in the cell, we can show what number was there, who entered it and who deleted it. In addition, to ensure the traceability of information, all calculated and aggregated values can be disclosed to the source data - you do not need to run through Excel cells and understand what this or that formula means. Formulas are not written in terms of the position of the cells in the table, but in terms of indicators and their characteristics, i.e. in QuBeQu, “Revenue” = ”Income” is “Expense”, not “A1 = B1-C1”.

You will probably say that supporting such a system is costly, because it doesn’t happen that “everything works by itself”. But try to compare how much time the analyst would spend on compiling information from files with the time that he would spend on the system. Even if these values are of the same order, we get a fundamentally different result.

All the data that we received from the reporting employees is structured, they can be quickly and easily used, they will not be lost. There is a single place for storing information, and if there are a lot of analysts, managers and reporting employees, a large amount of data will be accumulated there that can be investigated.

Total

We have described one of the possible uses for QuBeQu. The capabilities of the system are wider than described in this article: connectors to external systems and databases, computing, versioning, dashboards and graphs.