Stewing in our own juice, or how we realized we were not building what users need

Have you ever considered that thousands of companies build their products and technologies in isolation from what users actually need, solving self-invented problems that barely correlate with real ones?

With one of our technologies, we, the developers of Macroscop, were exactly that kind of company: for six years we worked on a feature that, we believed, would make the lives of thousands of people easier and more convenient.

In 2008 we came up with the idea of making search through video surveillance archives as simple as possible. Imagine a medium-sized system of 100 cameras that records about 1,000 hours of video per day (video is usually recorded only when there is activity in the frame). You need to find something in these recordings, but you do not know where or when it happened. You watch the first hour of footage, then the second, then the third, and by the time you find what you need, you are cursing everything in the world.

We decided to build a tool that lets you search video the way Google searches text: you describe a person to the system by their features, for example a green T-shirt and black jeans, and you get back everyone who matches those parameters.

We built such a tool and called it the indexer (an object indexing technology). It works with the color combinations of the search sample: the object is clustered (regions of the same color are extracted), characteristics are computed for each cluster, and together they form an index. Indices are computed the same way for every object in the archive, and by comparing them the program offers the operator a set of results: all objects whose indices are close to the index of the sample.
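To make the cluster, index, compare pipeline more concrete, here is a minimal Python sketch. It is not Macroscop's implementation: it assumes each object is already cut out of the frame as a list of RGB pixels, and the coarse color quantization used as the clustering step, the choice of mean color and area share as per-cluster characteristics, the weighted nearest-cluster distance, the `threshold` value, and all function names are hypothetical, chosen only for illustration.

```python
from collections import defaultdict
from math import dist


def cluster_colors(pixels, levels=4):
    """Group RGB pixels into clusters of similar colour by coarse quantisation.

    Hypothetical stand-in for the real clustering step: pixels whose
    channels fall into the same bin are treated as one colour region.
    """
    step = 256 // levels
    clusters = defaultdict(list)
    for r, g, b in pixels:
        clusters[(r // step, g // step, b // step)].append((r, g, b))
    return list(clusters.values())


def build_index(pixels, levels=4, max_clusters=3):
    """Index = (mean colour, share of the object's area) for the largest clusters."""
    total = len(pixels)
    features = []
    for members in cluster_colors(pixels, levels):
        n = len(members)
        mean = tuple(sum(p[i] for p in members) / n for i in range(3))
        features.append((mean, n / total))
    features.sort(key=lambda f: f[1], reverse=True)
    return features[:max_clusters]


def index_distance(sample, candidate):
    """Match each sample cluster to the closest-coloured candidate cluster,
    weighting the colour distance by the sample cluster's area share."""
    return sum(
        share * min(dist(mean, other) for other, _ in candidate)
        for mean, share in sample
    )


def search(sample_pixels, archive, threshold=60.0):
    """Return archive object ids whose index is close to the sample's, best first."""
    sample = build_index(sample_pixels)
    scored = sorted(
        (index_distance(sample, build_index(pixels)), obj_id)
        for obj_id, pixels in archive.items()
    )
    return [obj_id for d, obj_id in scored if d <= threshold]


# Sample painted by the operator: green T-shirt over black jeans.
sample = [(30, 160, 40)] * 60 + [(10, 10, 10)] * 40
archive = {
    "cam03_obj17": [(35, 150, 45)] * 55 + [(15, 12, 10)] * 45,  # similar outfit
    "cam05_obj02": [(200, 30, 30)] * 50 + [(20, 20, 200)] * 50,  # red and blue
}
print(search(sample, archive))  # ['cam03_obj17']
```

In this sketch, weighting by area share means a mismatch in the dominant color (the T-shirt) costs more than a mismatch in a small detail, which is one plausible way to make "indices are close" precise.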

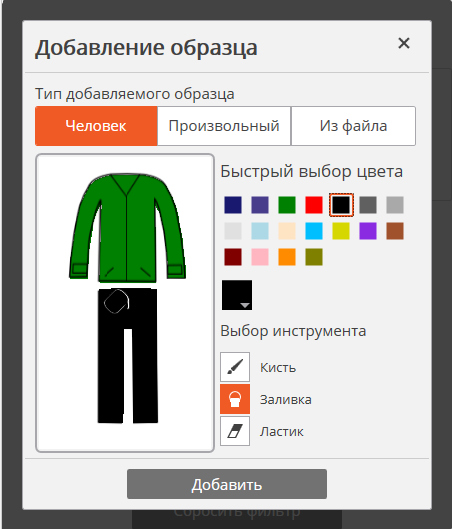

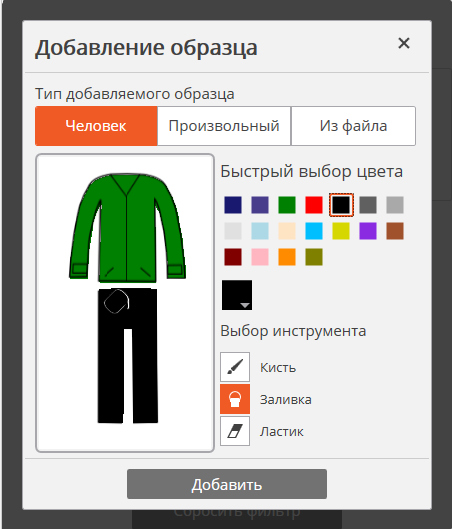

This is how a search sample is built in the indexer: the operator can manually paint the human figure in the appropriate colors.

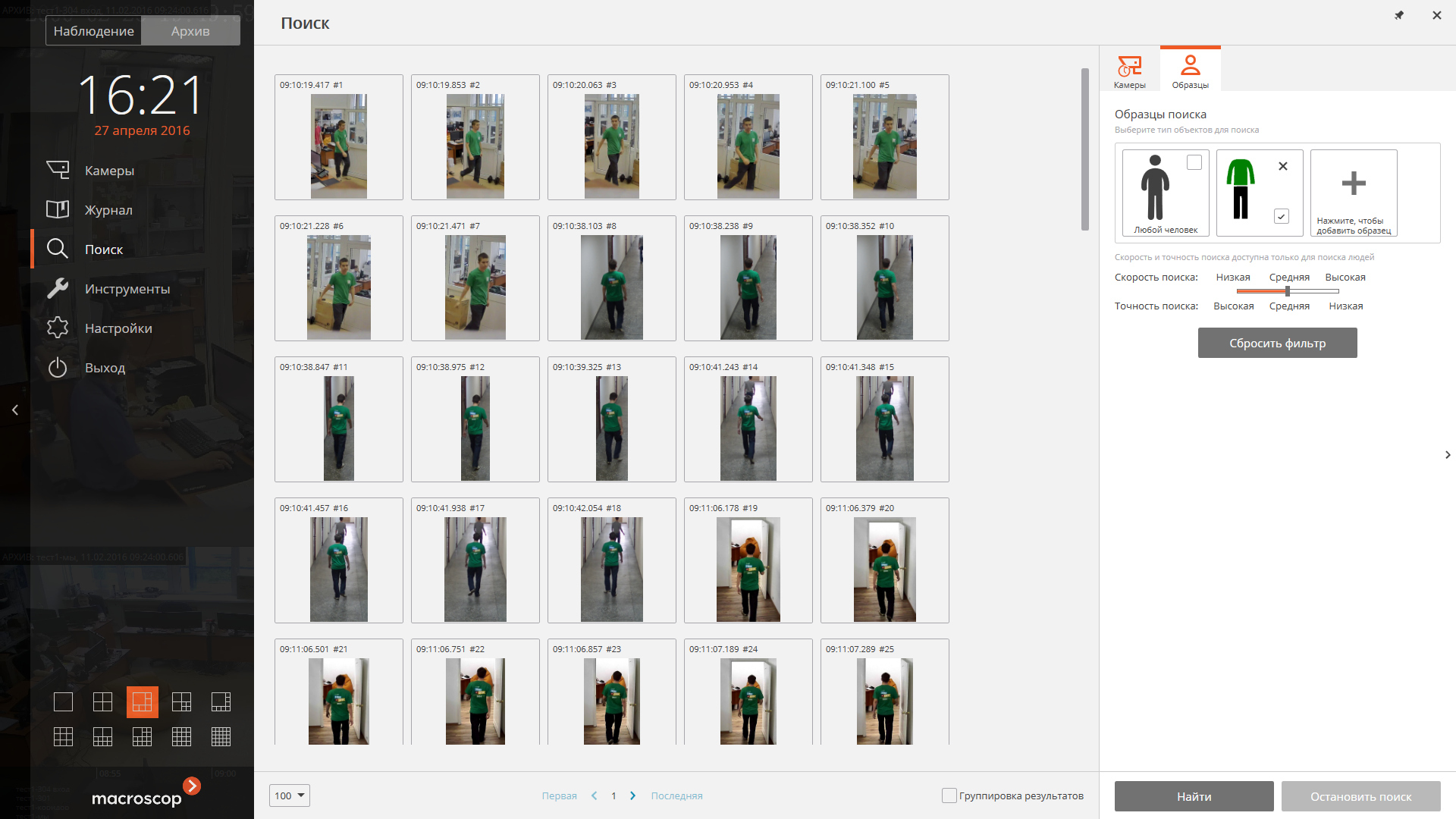

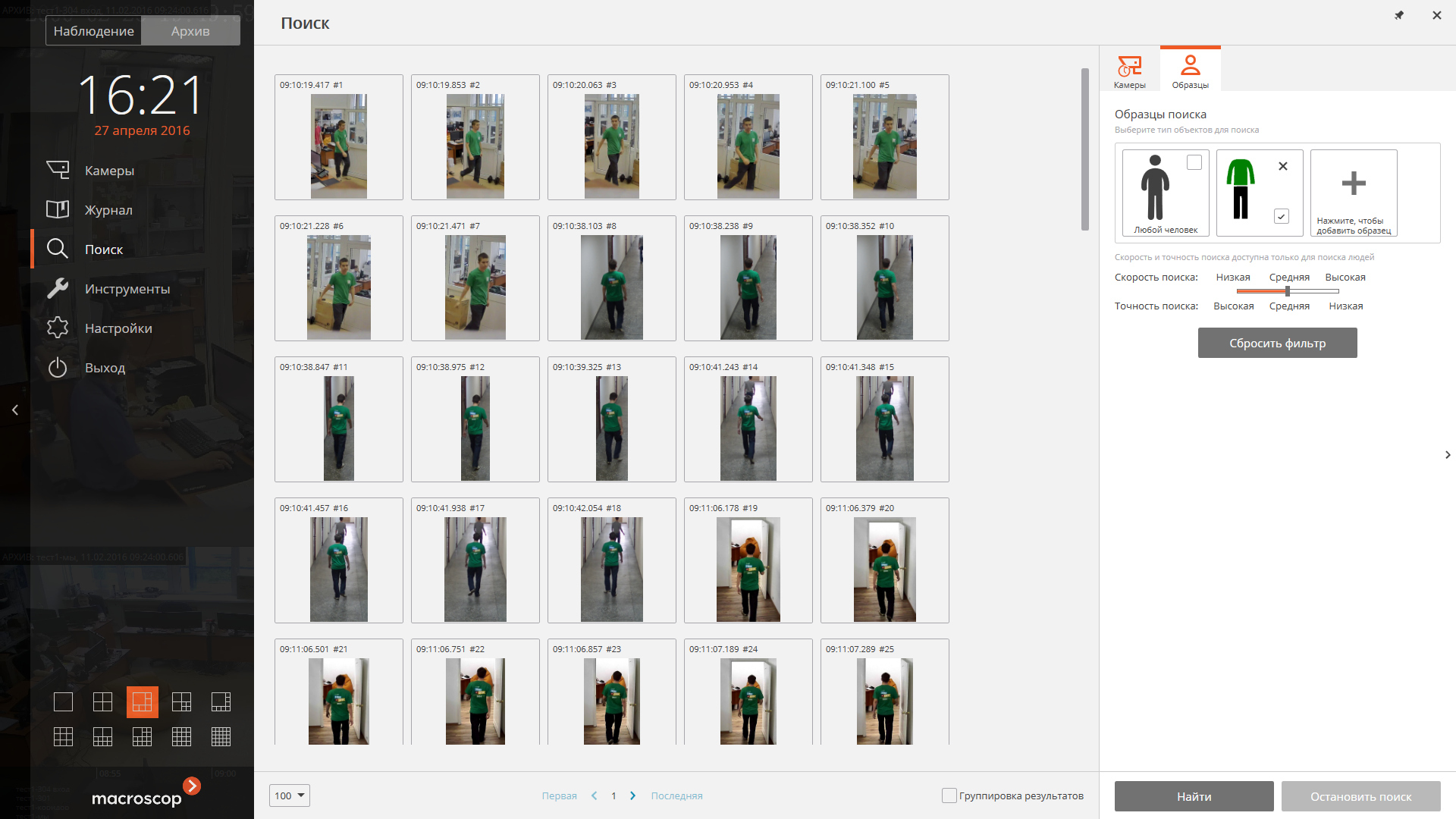

Indexer search results are displayed as a set of images. The operator selects the matching one and then watches the corresponding video segment from the archive.

The indexer became our brainchild, and to some extent we were obsessed with it. We were absorbed in the idea and spent a tremendous amount of effort, time, and money on its development. We even hired two teams of top-class developers, a team of "physicists" (graduates of the physics department) and a team of "mathematicians" (graduates of the faculty of mechanics and mathematics), who "competed" for a whole year, solving the indexing problem with different methods. By the way, the "physicists" won :)

We ran a lot of funny experiments to check how the indexer responded to different lighting and to clothes of different colors. That year, walking down the corridor of the business center where we worked, you could easily run into men wearing multicolored boxer shorts over their suits or jeans. These were our developers, testing how the indexer handled different patterns and textures.

Work on the indexer continued until 2014. We made significant progress and built a tool that genuinely worked, but recognizing color combinations is a very hard problem, so even after six years the quality of feature-based search was far from ideal. Meanwhile, the indexer and the interactive search module built on it were available to users: it was sold as a plug-in or shipped free of charge in the top edition of the software. From time to time we released updates in which something improved while something else broke, and often it was the indexer that broke. Yet almost no one ever contacted us to report that feature-based search was not working. At some point we realized that these requests and complaints were not coming in because nobody used the feature and nobody EVEN TRIED TO USE IT. We had been doing abstract development, implementing ideas completely divorced from reality.

In 2014 we admitted that our idea of feature-based search had failed and that we could not keep going the same way. We decided to make a U-turn.

The plan was to talk closely with 50 real users of video surveillance systems and learn from them what they search for and how they search for it, to understand whether they really need search at all or whether real-time intelligent functions matter more to them.

We started meeting users and talking to them. At one of these meetings we were told: "Your feature-based search is interesting in theory, but in practice we often need not just to find a person but to understand how he moved around the site: where he came from, where he was, and when and where he left." Soon another 5 to 7 users independently expressed the same need.

We thought about how to solve this problem within our software and give users data on people's movements across the cameras of the entire video surveillance system. We started developing inter-camera tracking.

Inter-camera tracking lets you follow the movement of objects (in the current implementation, people) across the fields of view of all cameras in the video system and obtain the trajectory of that movement. In other words, you can find out where a person of interest came from, where he went, and how he moved within the area covered by the video surveillance system. Inter-camera tracking is built on the same indexer and feature-based search. The user selects any person in the frame and sets him as the sample for searching on other cameras. Macroscop then searches for all visually similar objects on nearby cameras in adjacent time intervals. The user only has to confirm the right person in the results, step by step.
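The step-by-step loop described above can be sketched in Python as follows. This is an illustration under stated assumptions, not Macroscop's code: the `Detection` record, the hypothetical camera adjacency map `neighbours`, the `window` and `max_distance` values, and the plain Euclidean distance between appearance descriptors are all invented for the example, and the `confirm` callback merely simulates the operator.

```python
from dataclasses import dataclass
from math import dist


@dataclass(frozen=True)
class Detection:
    camera: str
    time: float        # seconds from the start of the archive
    descriptor: tuple  # appearance features, e.g. dominant colours (hypothetical)


def candidates(current, detections, neighbours, window=120.0, max_distance=50.0):
    """Visually similar detections on adjacent cameras shortly after `current`."""
    found = [
        d for d in detections
        if d.camera in neighbours.get(current.camera, ())
        and 0 < d.time - current.time <= window
        and dist(d.descriptor, current.descriptor) <= max_distance
    ]
    # Most similar first, so the operator sees the likeliest match at the top.
    return sorted(found, key=lambda d: dist(d.descriptor, current.descriptor))


def track(start, detections, neighbours, confirm):
    """Build a trajectory step by step; `confirm` stands in for the operator,
    returning one of the offered candidates or None to stop."""
    path = [start]
    while True:
        choice = confirm(candidates(path[-1], detections, neighbours))
        if choice is None:
            return path
        path.append(choice)


# Hypothetical site layout: which cameras a person can walk to next.
neighbours = {"entrance": ("hall",), "hall": ("entrance", "exit")}
start = Detection("entrance", 0.0, (30, 160, 40))
detections = [
    start,
    Detection("hall", 40.0, (33, 155, 42)),
    Detection("hall", 45.0, (200, 30, 30)),   # someone else, dressed in red
    Detection("exit", 95.0, (28, 162, 38)),
    Detection("exit", 400.0, (30, 160, 40)),  # outside the time window
]
# Simulated operator who always accepts the top suggestion.
path = track(start, detections, neighbours, lambda options: options[0] if options else None)
print(" -> ".join(d.camera for d in path))  # entrance -> hall -> exit
```

In this sketch the adjacency map restricts the search to nearby cameras and the time window restricts it to adjacent intervals, which keeps each candidate list short enough for a person to confirm quickly; the confirmed detections, in order, are the trajectory.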

The output of inter-camera tracking is the person's route on the site plan, a video assembled from fragments of his movement on different cameras, or a slide show of images. Together they let you reconstruct the full picture of his actions: when he arrived at the site, where he was, and when he left.

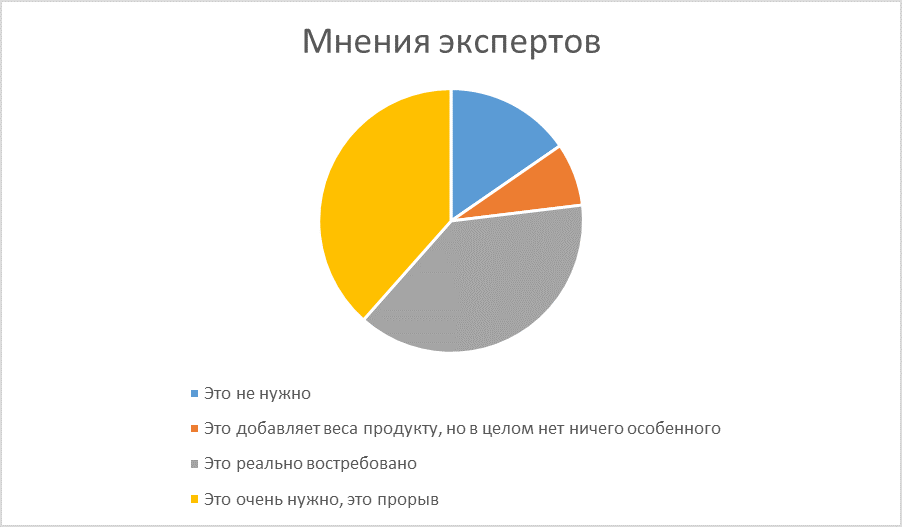

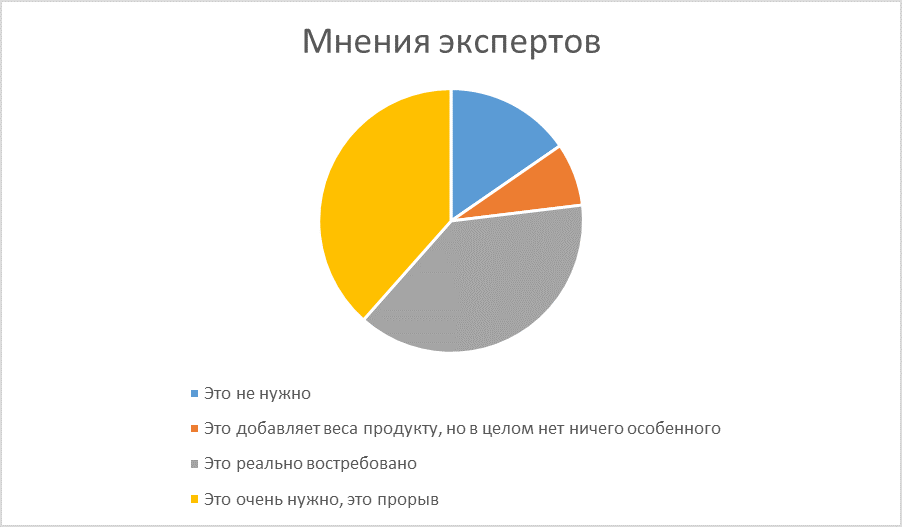

We keep validating this idea whenever we get the chance. For example, at the MIPS/Securika exhibition last month we presented inter-camera tracking and interviewed more than a dozen experts from leading companies in the industry about its usefulness. And here is what these in-depth interviews showed:

Our many years of work on the indexer confirmed the well-known truths you can find in books:

1. Generating ideas in isolation from reality and then selflessly implementing them is a very risky undertaking. If you have come up with something, interview 10, 50, 100 users. Better still, do not chase abstract inventions; find a real pain point.

2. Once you have found a need and started building a solution, start with prototypes. Keep testing your work again and again on those same real users. The closer the connection between developers and the real world, the better the chance that the effort, money, and time spent on the idea will not be wasted.

3. If people do not criticize your product, most likely they simply do not use it.

4. Finally, and most importantly, recognize in time that your idea has failed, and be able to admit it to yourself and your team. Do not be afraid to make U-turns, analyze past experience, and be prepared for a new idea to fail as well. Sooner or later, through trial and error, you will still succeed.

We do not know whether inter-camera tracking will become a useful and popular feature of our product in practice, so we are not as fanatical about its development as we were about the indexer. Nevertheless, the developers of the new feature have done a lot, and inter-camera tracking is already included in the Macroscop release and available in both the full version and the demo version: macroscop.com/download.html
