Visualizing concurrency in Go with WebGL

One of the strengths of the Go programming language is its built-in concurrency support, based on Tony Hoare 's Communicating Sequential Processes work . Go is designed for convenient work with multi-threaded programming and makes it very easy to build quite complex concurrent programs. But have you ever wondered what the various concurrency patterns look like visually?

Of course, they thought. One way or another, we all think in visual images. If I ask you about something that includes the numbers “from 1 to 100”, you will instantly “see” them in your head in one form or another, probably not even realizing it. For example, I see a series from 1 to 100 as a line with numbers leaving me, turning 90 degrees to the right by 20 and continuing to 1000+. And digging through the memory, I recall that in the very first kindergarten in the locker room along the wall, numbers were written, and the number 20 was just in the corner. You probably have some idea of your own. Or, another frequent example - imagine all year and 4 seasons of the year - someone sees them as a square, each face of which belongs to a season, someone as a circle, someone else somehow.

Anyway, let me show you my attempt to visualize the basic concurrency patterns with Go and WebGL. These interactive visualizations more or less reflect the way I see it in my head. It will be interesting to hear how different this is from the visualizations of readers.

So, let's start with the simplest example - “Hello, concurrent world”, to get acquainted with the concept of my approach.

Link to the interactive WebGL demo

Here the blue lines represent goroutines, time “runs” down the Y axis. The thin blue lines connecting 'main' and 'go # 19' are the marks of the beginning and end of the life of goroutines, showing ancestors and children. The red arrows show the event of sending a message to the channel, and the sent value is signed. Actually, “sending to the channel” and “reading from the channel” are two separate events, and the behavior will be very different between buffered and unbuffered channels, but I will animate these two events as one - “transmitting the value through the channel”. The string "# 19" in the name of anonymous goroutine is the real ID of the running goroutine. Although it’s impossible to officially recognize the goroutine ID (so that people don’t make other concurrency models, in which identifiers play an important role),"Goroutine IDs . "

In fact, our simplest Hello, world above can be used to create a simple timer. The Go standard library has such convenient functions as time.After or time.Tick, but let's implement our own - we will write a function that creates a channel, starts goroutin, which sleeps the necessary time and writes to the channel, and return this channel to the caller.

Link to an Interactive WebGL Demo

Great, right? But move on.

This interesting concurrency example was taken from Google’s famous Sameer Ajmani Advanced Concurrency Patterns report . Of course, this example is not too advanced, but for those who are only familiar with concurrency in Go, it should be interesting and demonstrative.

So, we have a table channel that acts as a table, there is a Ball Ball, which is an int variable and stores the number of strokes on it, and there are gorutin players who “take the ball from the table” (read from the channel), “ beat him ”(increase the variable) and“ throw him back on the table ”(write to the channel).

Link to an interactive WebGL demo

At this point, I want to once again draw your attention to the link with an interactive WebGL demo , available under each animation - by opening in a new tab, you can move, rotate, increase / decrease and view these 3D animations as you like , as well as slow down / speed up and restart them.

Now, let's run three goroutines, instead of two:

Link to an interactive WebGL demo

In this example, we see that each player takes the ball from the table in turn, and you may wonder - why is it so who guarantees this order?

The answer here is simple - the Go runtime contains a FIFO queue for goroutines who are ready to read from the channel, which is why we observe this order. In our case, each goroutine enters the queue immediately after sending the ball to the table. However, this behavior may change in the future and it is not worth counting on an order of magnitude. But while this is so, and let's run not three, but one hundred goroutines.

Link to an interactive WebGL demo

The FIFO order is now more obvious, isn't it? We can easily launch a million goroutines (they are cheap, and it’s ok to have hundreds of thousands of goroutines in large Go programs), but for our purposes this will be too much. Let's move on to other patterns.

One of the most famous patterns is the so-called fan-in pattern. It is the opposite of the fan-out pattern , which we will discuss later. In short, fan-in is a function that reads from several sources and multiplexes everything into one channel.

For example:

Link to the interactive WebGL demo

As we can see, the first producer generates numbers every 100ms, the second producer every 250ms, and the reader receives numbers from both producers right away. Multiplexing, in fact, occurs in the main function.

The opposite of fan-in is a fan-out or workers pattern. Many goroutines are read from one channel, taking some data for processing and effectively distributing work between the CPU cores. Hence the name " workers ". It is very simple to implement this pattern in Go - run a pack of goroutin, passing the channel through the parameter and write your data to this channel, and multiplexing and distribution will be automatic due to Go runtime.

Link to the interactive WebGL demo

One thing that I would like to draw attention to here is concurrency. It’s easy to notice that gorutins-workers run in parallel, taking “work” through the channels, one after another. From this animation, you can also see that the goroutines do this almost simultaneously. Unfortunately, so far it’s not visible in the animation where goroutine really works, and where it is blocked, and also here the time scale is already close to the error error threshold, but specifically this animation was recorded on a program running on 4 cores, i.e. with GOMAXPROCS = 4 . A little further we will consider this issue in more detail.

In the meantime, let's try something more complicated - workers who have their own, sub-workers.

Link to the interactive WebGL demo

Great. Of course, it was possible to do more and workers, and sub-workers, but I tried to make the animation as clear as possible.

There are much more complex patterns, workers with subworkers with their subworkers, and channels that are themselves transmitted through the channels, but the idea of a fan-out should be clear.

The next popular fan-out pattern is servers . It is distinguished by the fact that goroutines start dynamically, perform the necessary work and are completed. And quite often this pattern is used to implement servers - we listen to the port, accept the connection, start the goroutine, which then will deal with the incoming request, passing the connection to it, and at this time we listen further, waiting for the next connection. This is quite convenient and allows you to implement an effective server withstanding 10K connections, very simple. Take a look at the following example:

Link to an interactive WebGL demo

This example is not very interesting - in fact, nothing really happens here. Although, of course, under the hood there carefully hidden huge complexity and algorithms. "Simplicity is complex."

But let's add some activity to our server, and, say, add a logger to which each goroutine will write the client's address.

Link to an Interactive WebGL Demo

Demonstratively enough, right? This animation shows that our logger can quickly become a bottleneck if the number of connections grows and the logger is not too fast (say, it will serialize data and send it somewhere else). But we can solve this by using the fan-out pattern we already know. Let's write it.

An example server with a worker will be a slightly more advanced version of the just announced solution. It not only starts the logger in several goroutines, but also collects data from them with the results (say, the result of recording to a remote service).

Let's look at the code and animation:

Link to the interactive WebGL demo

We improved our server by effectively distributing the task for the logger between 4 goroutines, but we still see that the logger can become a bottleneck. Thousands of connections converge in one channel. before multiplexing between goroutines. But, of course, this will happen already at much greater loads than in the previous version.

But pretty fan-in / fan-out experiments. Let's look at a more interesting example. One of my favorites is the Eratosthenes Sieve on goroutines and channels, found in the report " Go Concurrency Patterns ". Sieve of Eratosthenes is an ancient algorithm for finding prime numbers up to a given limit. Its essence lies in the sequential striking out of all numbers divisible by each subsequent prime number found. The forehead algorithm is not very efficient, especially on multi-core machines.

An implementation option of this algorithm with goroutines and channels triggers one goroutine for each prime number found, and this goroutine filters the numbers that are divided by it. When the first prime number in goroutine is found, it is sent to the main goroutine (main) and displayed on the screen. This algorithm is also far from the most efficient, but I find it stunningly elegant. Here is the code itself:

Now take a look at the animation.

Link to an interactive WebGL demo

Do not forget to twist it interactively in 3D space at the link above. I really like how illustrative this example is and studying it in 3D can help to understand the algorithm itself better. We see that the first goroutine ( generate ) sends the first prime number (2) to main , then the first goroutine filter starts, sifting two, then three, five, seven ... and each time a new found prime is sent to main - this is especially good seen from the top view. Beautiful algorithm, including in 3D.

Now let's get back to our worker example. Remember, I wrote that this example was run with GOMAXPROCS = 4? This is because all these animations are not drawn, these are real traces of real programs.

To get started, let's refresh our memory and recall what GOMAXPROCS is :

I changed the code of the workers slightly so that they did the real work. loading the processor, not just sleeping. Then I ran the code without any changes on a Linux machine with two processors of 12 cores each - first with GOMAXPROCS = 1, then with GOMAXPROCS = 24.

So. the first animation shows the same program running on the 1st core, the second - on 24 cores.

WebGL animation 1 WebGL animation 24

The speed of time animation is different in these examples (I wanted all the animations to take a fixed time in height), but the difference should be obvious. With GOMAXPROCS = 1, the next worker takes the job (reads from the channel) only when the processor core is released and the previous goroutine worked out its portion. With 24 cores, goroutines almost immediately parse tasks and acceleration is enormous.

However, it is important to understand that an increase in GOMAXPROCS does not always lead to an increase in performance, and there may be times when it even drops from an increase in the number of cores.

What else can we demonstrate from the world of concurrency? One of the things that comes to my mind is goroutine leaks. They can happen by negligence, or, say, if you launched goroutine, but it went out of scope .

The first (and only) time that I encountered a goroutine leak, a terrifying picture appeared in my head, and literally the next weekend I wrote expvarmon for quick monitoring. And now I can visualize this terrifying picture with WebGL.

Take a look:

Link to an interactive WebGL demo

It pains me to even look at it :) Each line is a spent computer resources and a time bomb for your program.

The word concurrency is often translated as “parallelism,” but this is not entirely true. In truth, I don’t know a good translation, that's why I write everywhere without a translation. But the topic itself, explaining the differences between concurrency and concurrency, is disclosed many times , including by Rob Pike in a wonderful eponymous report . Look, if not already.

In short, then:

It is important to understand that these concepts are somewhat orthogonal - a concurrent program may or may not be parallel. We saw an example of this with different GOMAXPROCS a little higher - the same code ran both on the 1st core (sequentially) and on the 24th kernels (parallel).

I could repeat many of the postulates from the above links and reports, but this has already been done before me. I'd better try to show it visually.

So this is concurrency. Just a lot of things running in parallel.

Link to the interactive WebGL demo

And this is concurrency. Even more parallel pieces, which, however, does not change anything.

Link to an interactive WebGL demo

But here it is - concurrency:

And here it is:

And here it is also concurrency:

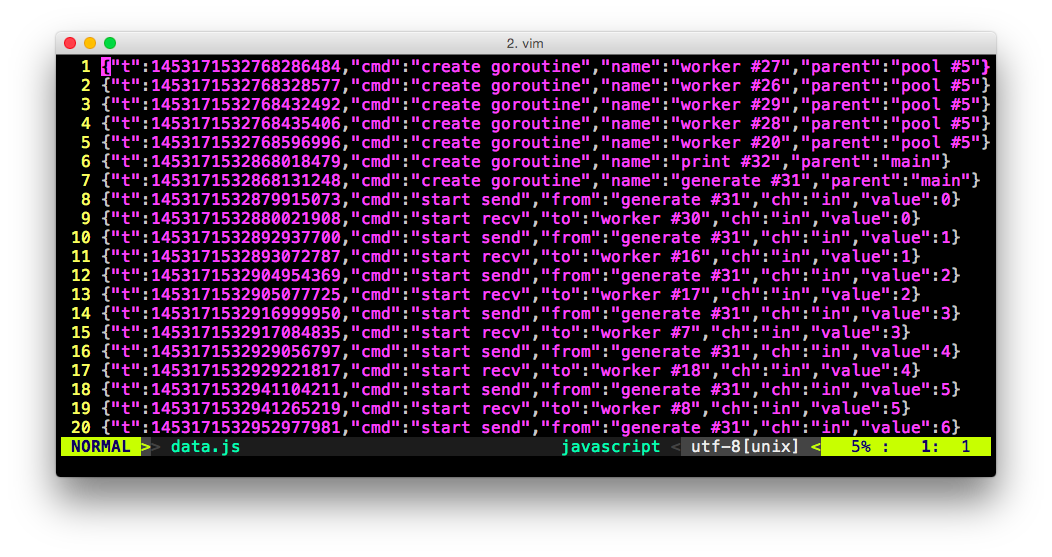

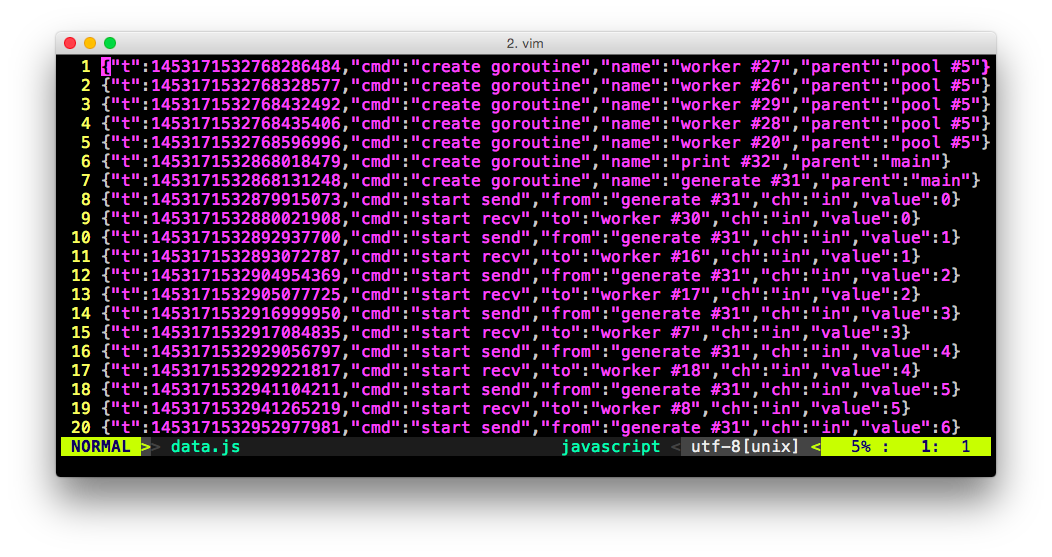

To create these animations, I wrote two programs - gotracer and gothree.js . The first one does the following things:

An example of the resulting JSON:

Next, gothree.js uses the power of the gorgeous Three.js library to draw and animate this data in 3D using WebGL. A small wrapper to squeeze it into one demo page and you're done.

However, this approach is very limited. I had to very carefully select examples, rename channels to get the correct trace. With this approach, there is no easy way to connect the channels between goroutines, if they are called differently inside the function. There are also problems with timing - output to the console sometimes takes more time than launching goroutines, writing to the channel and exiting, so in some examples I had to tighten up a bit by inserting time.Sleep (in the example with timers, the animation is slightly incorrect therefore).

Generally. that’s the main reason I don’t open the code yet. Now I play with execution tracer of Dmitry Vyukov - it seems to give the desired level of detail, although it does not contain information about what was sent to the channel. Perhaps there are some other ways to achieve the most detailed trail, I will explore further. Email me on Twitter or in the comments if you have any ideas. It would be cool for this tool to eventually grow into a 3D concurrency debugger applicable to absolutely any Go program, without exception.

This article was originally in the form of a report at the Go Meetup in Lviv (January 23, 2016) , then published in English on my blog . Also on HackerNewsand on reddit .

Of course, they thought. One way or another, we all think in visual images. If I ask you about something that includes the numbers “from 1 to 100”, you will instantly “see” them in your head in one form or another, probably not even realizing it. For example, I see a series from 1 to 100 as a line with numbers leaving me, turning 90 degrees to the right by 20 and continuing to 1000+. And digging through the memory, I recall that in the very first kindergarten in the locker room along the wall, numbers were written, and the number 20 was just in the corner. You probably have some idea of your own. Or, another frequent example - imagine all year and 4 seasons of the year - someone sees them as a square, each face of which belongs to a season, someone as a circle, someone else somehow.

Anyway, let me show you my attempt to visualize the basic concurrency patterns with Go and WebGL. These interactive visualizations more or less reflect the way I see it in my head. It will be interesting to hear how different this is from the visualizations of readers.

So, let's start with the simplest example - “Hello, concurrent world”, to get acquainted with the concept of my approach.

Hello concurrent world

package main

func main() {

// создаем новый канал типа int

ch := make(chan int)

// запускаем новую анонимную горутину

go func() {

// отправляем 42 в канал

ch <- 42

}()

// ждем, читаем из канала

<-ch

}

Link to the interactive WebGL demo

Here the blue lines represent goroutines, time “runs” down the Y axis. The thin blue lines connecting 'main' and 'go # 19' are the marks of the beginning and end of the life of goroutines, showing ancestors and children. The red arrows show the event of sending a message to the channel, and the sent value is signed. Actually, “sending to the channel” and “reading from the channel” are two separate events, and the behavior will be very different between buffered and unbuffered channels, but I will animate these two events as one - “transmitting the value through the channel”. The string "# 19" in the name of anonymous goroutine is the real ID of the running goroutine. Although it’s impossible to officially recognize the goroutine ID (so that people don’t make other concurrency models, in which identifiers play an important role),"Goroutine IDs . "

Timers

In fact, our simplest Hello, world above can be used to create a simple timer. The Go standard library has such convenient functions as time.After or time.Tick, but let's implement our own - we will write a function that creates a channel, starts goroutin, which sleeps the necessary time and writes to the channel, and return this channel to the caller.

package main

import "time"

func timer(d time.Duration) <-chan int {

c := make(chan int)

go func() {

time.Sleep(d)

c <- 1

}()

return c

}

func main() {

for i := 0; i < 24; i++ {

c := timer(1 * time.Second)

<-c

}

}

Link to an Interactive WebGL Demo

Great, right? But move on.

Ping pong

This interesting concurrency example was taken from Google’s famous Sameer Ajmani Advanced Concurrency Patterns report . Of course, this example is not too advanced, but for those who are only familiar with concurrency in Go, it should be interesting and demonstrative.

So, we have a table channel that acts as a table, there is a Ball Ball, which is an int variable and stores the number of strokes on it, and there are gorutin players who “take the ball from the table” (read from the channel), “ beat him ”(increase the variable) and“ throw him back on the table ”(write to the channel).

package main

import "time"

func main() {

var Ball int

table := make(chan int)

go player(table)

go player(table)

table <- Ball

time.Sleep(1 * time.Second)

<-table

}

func player(table chan int) {

for {

ball := <-table

ball++

time.Sleep(100 * time.Millisecond)

table <- ball

}

}

Link to an interactive WebGL demo

At this point, I want to once again draw your attention to the link with an interactive WebGL demo , available under each animation - by opening in a new tab, you can move, rotate, increase / decrease and view these 3D animations as you like , as well as slow down / speed up and restart them.

Now, let's run three goroutines, instead of two:

go player(table)

go player(table)

go player(table)

Link to an interactive WebGL demo

In this example, we see that each player takes the ball from the table in turn, and you may wonder - why is it so who guarantees this order?

The answer here is simple - the Go runtime contains a FIFO queue for goroutines who are ready to read from the channel, which is why we observe this order. In our case, each goroutine enters the queue immediately after sending the ball to the table. However, this behavior may change in the future and it is not worth counting on an order of magnitude. But while this is so, and let's run not three, but one hundred goroutines.

for i := 0; i < 100; i++ {

go player(table)

}

Link to an interactive WebGL demo

The FIFO order is now more obvious, isn't it? We can easily launch a million goroutines (they are cheap, and it’s ok to have hundreds of thousands of goroutines in large Go programs), but for our purposes this will be too much. Let's move on to other patterns.

Fan-in

One of the most famous patterns is the so-called fan-in pattern. It is the opposite of the fan-out pattern , which we will discuss later. In short, fan-in is a function that reads from several sources and multiplexes everything into one channel.

For example:

package main

import (

"fmt"

"time"

)

func producer(ch chan int, d time.Duration) {

var i int

for {

ch <- i

i++

time.Sleep(d)

}

}

func reader(out chan int) {

for x := range out {

fmt.Println(x)

}

}

func main() {

ch := make(chan int)

out := make(chan int)

go producer(ch, 100*time.Millisecond)

go producer(ch, 250*time.Millisecond)

go reader(out)

for i := range ch {

out <- i

}

}

Link to the interactive WebGL demo

As we can see, the first producer generates numbers every 100ms, the second producer every 250ms, and the reader receives numbers from both producers right away. Multiplexing, in fact, occurs in the main function.

Fan out

The opposite of fan-in is a fan-out or workers pattern. Many goroutines are read from one channel, taking some data for processing and effectively distributing work between the CPU cores. Hence the name " workers ". It is very simple to implement this pattern in Go - run a pack of goroutin, passing the channel through the parameter and write your data to this channel, and multiplexing and distribution will be automatic due to Go runtime.

package main

import (

"fmt"

"sync"

"time"

)

func worker(tasksCh <-chan int, wg *sync.WaitGroup) {

defer wg.Done()

for {

task, ok := <-tasksCh

if !ok {

return

}

d := time.Duration(task) * time.Millisecond

time.Sleep(d)

fmt.Println("processing task", task)

}

}

func pool(wg *sync.WaitGroup, workers, tasks int) {

tasksCh := make(chan int)

for i := 0; i < workers; i++ {

go worker(tasksCh, wg)

}

for i := 0; i < tasks; i++ {

tasksCh <- i

}

close(tasksCh)

}

func main() {

var wg sync.WaitGroup

wg.Add(36)

go pool(&wg, 36, 50)

wg.Wait()

}

Link to the interactive WebGL demo

One thing that I would like to draw attention to here is concurrency. It’s easy to notice that gorutins-workers run in parallel, taking “work” through the channels, one after another. From this animation, you can also see that the goroutines do this almost simultaneously. Unfortunately, so far it’s not visible in the animation where goroutine really works, and where it is blocked, and also here the time scale is already close to the error error threshold, but specifically this animation was recorded on a program running on 4 cores, i.e. with GOMAXPROCS = 4 . A little further we will consider this issue in more detail.

In the meantime, let's try something more complicated - workers who have their own, sub-workers.

package main

import (

"fmt"

"sync"

"time"

)

const (

WORKERS = 5

SUBWORKERS = 3

TASKS = 20

SUBTASKS = 10

)

func subworker(subtasks chan int) {

for {

task, ok := <-subtasks

if !ok {

return

}

time.Sleep(time.Duration(task) * time.Millisecond)

fmt.Println(task)

}

}

func worker(tasks <-chan int, wg *sync.WaitGroup) {

defer wg.Done()

for {

task, ok := <-tasks

if !ok {

return

}

subtasks := make(chan int)

for i := 0; i < SUBWORKERS; i++ {

go subworker(subtasks)

}

for i := 0; i < SUBTASKS; i++ {

task1 := task * i

subtasks <- task1

}

close(subtasks)

}

}

func main() {

var wg sync.WaitGroup

wg.Add(WORKERS)

tasks := make(chan int)

for i := 0; i < WORKERS; i++ {

go worker(tasks, &wg)

}

for i := 0; i < TASKS; i++ {

tasks <- i

}

close(tasks)

wg.Wait()

}

Link to the interactive WebGL demo

Great. Of course, it was possible to do more and workers, and sub-workers, but I tried to make the animation as clear as possible.

There are much more complex patterns, workers with subworkers with their subworkers, and channels that are themselves transmitted through the channels, but the idea of a fan-out should be clear.

Servers

The next popular fan-out pattern is servers . It is distinguished by the fact that goroutines start dynamically, perform the necessary work and are completed. And quite often this pattern is used to implement servers - we listen to the port, accept the connection, start the goroutine, which then will deal with the incoming request, passing the connection to it, and at this time we listen further, waiting for the next connection. This is quite convenient and allows you to implement an effective server withstanding 10K connections, very simple. Take a look at the following example:

package main

import "net"

func handler(c net.Conn) {

c.Write([]byte("ok"))

c.Close()

}

func main() {

l, err := net.Listen("tcp", ":5000")

if err != nil {

panic(err)

}

for {

c, err := l.Accept()

if err != nil {

continue

}

go handler(c)

}

}

Link to an interactive WebGL demo

This example is not very interesting - in fact, nothing really happens here. Although, of course, under the hood there carefully hidden huge complexity and algorithms. "Simplicity is complex."

But let's add some activity to our server, and, say, add a logger to which each goroutine will write the client's address.

package main

import (

"fmt"

"net"

"time"

)

func handler(c net.Conn, ch chan string) {

ch <- c.RemoteAddr().String()

c.Write([]byte("ok"))

c.Close()

}

func logger(ch chan string) {

for {

fmt.Println(<-ch)

}

}

func server(l net.Listener, ch chan string) {

for {

c, err := l.Accept()

if err != nil {

continue

}

go handler(c, ch)

}

}

func main() {

l, err := net.Listen("tcp", ":5000")

if err != nil {

panic(err)

}

ch := make(chan string)

go logger(ch)

go server(l, ch)

time.Sleep(10 * time.Second)

}

Link to an Interactive WebGL Demo

Demonstratively enough, right? This animation shows that our logger can quickly become a bottleneck if the number of connections grows and the logger is not too fast (say, it will serialize data and send it somewhere else). But we can solve this by using the fan-out pattern we already know. Let's write it.

Server + Worker

An example server with a worker will be a slightly more advanced version of the just announced solution. It not only starts the logger in several goroutines, but also collects data from them with the results (say, the result of recording to a remote service).

Let's look at the code and animation:

package main

import (

"net"

"time"

)

func handler(c net.Conn, ch chan string) {

addr := c.RemoteAddr().String()

ch <- addr

time.Sleep(100 * time.Millisecond)

c.Write([]byte("ok"))

c.Close()

}

func logger(wch chan int, results chan int) {

for {

data := <-wch

data++

results <- data

}

}

func parse(results chan int) {

for {

<-results

}

}

func pool(ch chan string, n int) {

wch := make(chan int)

results := make(chan int)

for i := 0; i < n; i++ {

go logger(wch, results)

}

go parse(results)

for {

addr := <-ch

l := len(addr)

wch <- l

}

}

func server(l net.Listener, ch chan string) {

for {

c, err := l.Accept()

if err != nil {

continue

}

go handler(c, ch)

}

}

func main() {

l, err := net.Listen("tcp", ":5000")

if err != nil {

panic(err)

}

ch := make(chan string)

go pool(ch, 4)

go server(l, ch)

time.Sleep(10 * time.Second)

}

Link to the interactive WebGL demo

We improved our server by effectively distributing the task for the logger between 4 goroutines, but we still see that the logger can become a bottleneck. Thousands of connections converge in one channel. before multiplexing between goroutines. But, of course, this will happen already at much greater loads than in the previous version.

Sieve of Eratosthenes

But pretty fan-in / fan-out experiments. Let's look at a more interesting example. One of my favorites is the Eratosthenes Sieve on goroutines and channels, found in the report " Go Concurrency Patterns ". Sieve of Eratosthenes is an ancient algorithm for finding prime numbers up to a given limit. Its essence lies in the sequential striking out of all numbers divisible by each subsequent prime number found. The forehead algorithm is not very efficient, especially on multi-core machines.

An implementation option of this algorithm with goroutines and channels triggers one goroutine for each prime number found, and this goroutine filters the numbers that are divided by it. When the first prime number in goroutine is found, it is sent to the main goroutine (main) and displayed on the screen. This algorithm is also far from the most efficient, but I find it stunningly elegant. Here is the code itself:

// A concurrent prime sieve

package main

import "fmt"

// Send the sequence 2, 3, 4, ... to channel 'ch'.

func Generate(ch chan<- int) {

for i := 2; ; i++ {

ch <- i // Send 'i' to channel 'ch'.

}

}

// Copy the values from channel 'in' to channel 'out',

// removing those divisible by 'prime'.

func Filter(in <-chan int, out chan<- int, prime int) {

for {

i := <-in // Receive value from 'in'.

if i%prime != 0 {

out <- i // Send 'i' to 'out'.

}

}

}

// The prime sieve: Daisy-chain Filter processes.

func main() {

ch := make(chan int) // Create a new channel.

go Generate(ch) // Launch Generate goroutine.

for i := 0; i < 10; i++ {

prime := <-ch

fmt.Println(prime)

ch1 := make(chan int)

go Filter(ch, ch1, prime)

ch = ch1

}

}Now take a look at the animation.

Link to an interactive WebGL demo

Do not forget to twist it interactively in 3D space at the link above. I really like how illustrative this example is and studying it in 3D can help to understand the algorithm itself better. We see that the first goroutine ( generate ) sends the first prime number (2) to main , then the first goroutine filter starts, sifting two, then three, five, seven ... and each time a new found prime is sent to main - this is especially good seen from the top view. Beautiful algorithm, including in 3D.

GOMAXPROCS

Now let's get back to our worker example. Remember, I wrote that this example was run with GOMAXPROCS = 4? This is because all these animations are not drawn, these are real traces of real programs.

To get started, let's refresh our memory and recall what GOMAXPROCS is :

GOMAXPROCS sets the maximum number of CPU cores that can execute code simultaneously

I changed the code of the workers slightly so that they did the real work. loading the processor, not just sleeping. Then I ran the code without any changes on a Linux machine with two processors of 12 cores each - first with GOMAXPROCS = 1, then with GOMAXPROCS = 24.

So. the first animation shows the same program running on the 1st core, the second - on 24 cores.

WebGL animation 1 WebGL animation 24

The speed of time animation is different in these examples (I wanted all the animations to take a fixed time in height), but the difference should be obvious. With GOMAXPROCS = 1, the next worker takes the job (reads from the channel) only when the processor core is released and the previous goroutine worked out its portion. With 24 cores, goroutines almost immediately parse tasks and acceleration is enormous.

However, it is important to understand that an increase in GOMAXPROCS does not always lead to an increase in performance, and there may be times when it even drops from an increase in the number of cores.

Goroutine leaks

What else can we demonstrate from the world of concurrency? One of the things that comes to my mind is goroutine leaks. They can happen by negligence, or, say, if you launched goroutine, but it went out of scope .

The first (and only) time that I encountered a goroutine leak, a terrifying picture appeared in my head, and literally the next weekend I wrote expvarmon for quick monitoring. And now I can visualize this terrifying picture with WebGL.

Take a look:

Link to an interactive WebGL demo

It pains me to even look at it :) Each line is a spent computer resources and a time bomb for your program.

Concurrency is not parallelism

The word concurrency is often translated as “parallelism,” but this is not entirely true. In truth, I don’t know a good translation, that's why I write everywhere without a translation. But the topic itself, explaining the differences between concurrency and concurrency, is disclosed many times , including by Rob Pike in a wonderful eponymous report . Look, if not already.

In short, then:

Concurrency is just a lot of things running in parallel.

Concurrency is a way to structure a program.

It is important to understand that these concepts are somewhat orthogonal - a concurrent program may or may not be parallel. We saw an example of this with different GOMAXPROCS a little higher - the same code ran both on the 1st core (sequentially) and on the 24th kernels (parallel).

I could repeat many of the postulates from the above links and reports, but this has already been done before me. I'd better try to show it visually.

So this is concurrency. Just a lot of things running in parallel.

Link to the interactive WebGL demo

And this is concurrency. Even more parallel pieces, which, however, does not change anything.

Link to an interactive WebGL demo

But here it is - concurrency:

And here it is:

And here it is also concurrency:

How was this done?

To create these animations, I wrote two programs - gotracer and gothree.js . The first one does the following things:

- parses the AST-tree of the source code of the examples on Go (another plus of Go’s simple grammar) and inserts special output on events related to concurrency - start / stop goroutines, write / read to the channel, create the channel

- launches a modified program

- parses output, and generates special JSON with events and timestamps

An example of the resulting JSON:

Next, gothree.js uses the power of the gorgeous Three.js library to draw and animate this data in 3D using WebGL. A small wrapper to squeeze it into one demo page and you're done.

However, this approach is very limited. I had to very carefully select examples, rename channels to get the correct trace. With this approach, there is no easy way to connect the channels between goroutines, if they are called differently inside the function. There are also problems with timing - output to the console sometimes takes more time than launching goroutines, writing to the channel and exiting, so in some examples I had to tighten up a bit by inserting time.Sleep (in the example with timers, the animation is slightly incorrect therefore).

Generally. that’s the main reason I don’t open the code yet. Now I play with execution tracer of Dmitry Vyukov - it seems to give the desired level of detail, although it does not contain information about what was sent to the channel. Perhaps there are some other ways to achieve the most detailed trail, I will explore further. Email me on Twitter or in the comments if you have any ideas. It would be cool for this tool to eventually grow into a 3D concurrency debugger applicable to absolutely any Go program, without exception.

This article was originally in the form of a report at the Go Meetup in Lviv (January 23, 2016) , then published in English on my blog . Also on HackerNewsand on reddit .