Algorithmic Trading: Finding an Effective Data Processing Method Using FPGA

A group of researchers from the University of Toronto published a paper on using FPGAs to make event processing in algorithmic trading more efficient. This article summarizes its main points.

Introduction

High-frequency trading currently dominates the financial markets: according to various sources, about 70% of transactions are now made by algorithms. As a result, it is increasingly important to streamline order execution and the processing of transactions and market events. Competition is so intense that microseconds are decisive.

Successful strategies can profit from even microscopic differences in the prices of related assets on different exchanges. For example, if a company's share trades at $40.05 on the New York Stock Exchange and at $40.04 in Toronto, the algorithm should buy the share in Canada and sell it in the United States. Every millisecond gained here can add up to millions in profit over the course of a year.
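The arithmetic behind this example can be sketched in a few lines of Python. This is only an illustration: the function name `arbitrage_profit`, the `cost_per_share` parameter and the order size of 10,000 shares are assumptions made here, and the sketch ignores fees, currency conversion and the risk that prices move before both orders are filled.

```python
from decimal import Decimal

def arbitrage_profit(buy_price, sell_price, shares, cost_per_share=Decimal("0")):
    """Profit from buying on one exchange and selling on another (0 if unprofitable)."""
    # Hypothetical per-share cost stands in for fees; the article does not quantify them.
    spread = sell_price - buy_price - cost_per_share
    if spread <= 0:
        return Decimal("0")
    return spread * shares

# Prices from the example: buy in Toronto at 40.04, sell in New York at 40.05.
profit = arbitrage_profit(Decimal("40.04"), Decimal("40.05"), shares=10_000)
print(profit)  # 100.00
```

A one-cent gap on 10,000 shares yields 100.00, which is why such strategies depend on repeating the trade many times and on reacting before the gap closes.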

Algorithmic trading can be modeled as an event processing platform. Financial news and market data are treated as events, such as [stock = ABX, TSXask = 40.04, NYSEask = 40.05], while the investment strategies of financial institutions or investors are expressed as subscriptions, such as [stock = ABX, TSXask ≠ NYSEask] or [stock = ABX, TSXask ≤ 40.04].
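One way to picture this model is to treat an event as a set of attribute-value pairs and a subscription as a list of predicates that must all hold. The sketch below is an assumption about representation, not the data structure used in the paper; the names `Predicate`, `OPS` and `satisfied` are hypothetical.

```python
import operator
from dataclasses import dataclass

# Hypothetical operator table covering the operators shown in the text (=, ≠, ≤).
OPS = {"=": operator.eq, "!=": operator.ne, "<=": operator.le}

@dataclass(frozen=True)
class Predicate:
    attribute: str              # event attribute on the left-hand side, e.g. "TSXask"
    op: str                     # key into OPS
    operand: object             # a constant, or another attribute name if is_attribute
    is_attribute: bool = False

    def holds(self, event: dict) -> bool:
        if self.attribute not in event:
            return False
        right = event.get(self.operand) if self.is_attribute else self.operand
        if right is None:
            return False
        return OPS[self.op](event[self.attribute], right)

def satisfied(subscription, event):
    """A subscription matches when every one of its predicates holds."""
    return all(p.holds(event) for p in subscription)

event = {"stock": "ABX", "TSXask": 40.04, "NYSEask": 40.05}
price_gap = [Predicate("stock", "=", "ABX"),
             Predicate("TSXask", "!=", "NYSEask", is_attribute=True)]
cheap_tsx = [Predicate("stock", "=", "ABX"),
             Predicate("TSXask", "<=", 40.04)]

print(satisfied(price_gap, event))  # True
print(satisfied(cheap_tsx, event))  # True
```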

A scalable event processing platform must therefore be able to efficiently find all investment strategies (subscriptions) that match each incoming event, and there may be millions of such events per second.

Why use FPGA

The most resource-intensive part of event processing is matching. The matching algorithm takes an incoming event (a quote or other market event) and a set of subscriptions (that is, investment strategies), and returns the subscriptions that the event satisfies.
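A deliberately naive software version of this interface might look as follows. It is a sketch only: the `Matcher` class, its methods and the choice to index subscriptions by stock symbol are assumptions made for illustration and are not the matching algorithm evaluated in the paper.

```python
from collections import defaultdict

class Matcher:
    """Naive matcher: index subscriptions by stock symbol, then test each candidate."""

    def __init__(self):
        self._by_stock = defaultdict(list)

    def subscribe(self, sub_id, stock, condition):
        # condition is any callable that takes the event dict and returns a bool.
        self._by_stock[stock].append((sub_id, condition))

    def match(self, event):
        # Only subscriptions for this event's stock need to be evaluated.
        candidates = self._by_stock.get(event.get("stock"), [])
        return [sub_id for sub_id, condition in candidates if condition(event)]

matcher = Matcher()
matcher.subscribe("s1", "ABX", lambda e: e["TSXask"] != e["NYSEask"])
matcher.subscribe("s2", "ABX", lambda e: e["TSXask"] <= 40.00)
matcher.subscribe("s3", "XYZ", lambda e: True)

matched = matcher.match({"stock": "ABX", "TSXask": 40.04, "NYSEask": 40.05})
print(matched)  # ['s1']
```

Even with the index, the cost per event grows with the number of subscriptions on the same stock, which hints at why matching becomes the bottleneck at high event rates.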

Meeting data processing requirements on networks whose throughput keeps increasing is a non-trivial task, because the volume of data to be processed grows along with the throughput. Building reasonably priced systems for these conditions is also difficult: existing processor technologies are approaching their limits, and performance is no longer growing as fast as it used to.

Commodity servers often cannot process market data at the required speed. As a result, traders and financial institutions need to increase the performance of their infrastructure. Algorithms can be sped up not only by purchasing additional servers, but also by using FPGAs.

Soft processing approach

Because their hardware is reconfigurable, FPGAs can host soft microprocessors, which have several important advantages. They are easier to program (C can be used instead of Verilog, which requires specialized knowledge), they can be ported to different FPGAs, they can be customized, and they can be used to interact with other parts of the system.

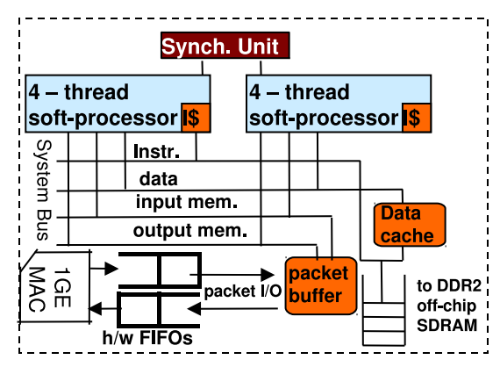

In the current example, the FPGA is located on the NetFPGA network card and communicates with the host machine through DMA on the PCI interface. FPGAs have programmable pins that provide a direct connection to memory and network interfaces - in a regular server, you can only interact with them through the network interface card.

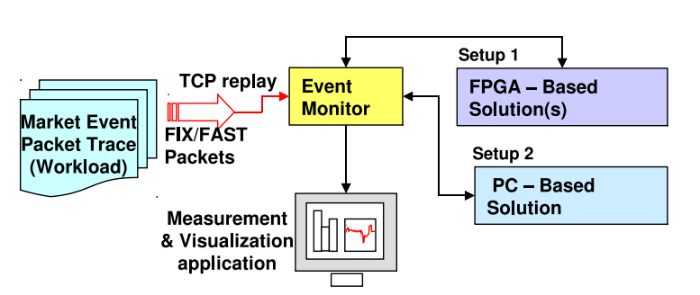

For the experiments, the researchers built a solution based on soft microprocessors running on the NetFPGA, as well as a baseline version running on a PC. Both implementations used the same matching strategy.

Implementing a Soft Microprocessor

To increase the throughput of the event processing application, the researchers chose NetThreads, which has two single-issue, five-stage, multithreaded processors.

Within a single core, instructions from four hardware threads are issued in a round-robin fashion, which lets the core keep computing while some threads are waiting on memory. Such a system is well suited to event processing. Soft processors do not suffer from the operating system overhead that ordinary computers are prone to, they can receive and process packets in parallel with minimal resources, and they have access to a timer with a much higher resolution than on a PC for handling timeouts and scheduling operations.
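The effect of round-robin issue on memory stalls can be illustrated with a toy simulation. This is not a model of the actual NetThreads pipeline: the function `run_core`, the rule that every fourth instruction is a load, and the three-cycle load latency are all assumptions chosen to keep the example small.

```python
def run_core(num_threads, instrs_per_thread, load_every, load_latency):
    """Simulate a single-issue core; return (total_cycles, idle_cycles)."""
    remaining = [instrs_per_thread] * num_threads
    issued = [0] * num_threads
    ready_at = [0] * num_threads    # first cycle at which each thread may issue again
    cycle = idle = 0
    next_thread = 0
    while any(remaining):
        # Try threads in round-robin order, starting after the last one that issued.
        for offset in range(num_threads):
            t = (next_thread + offset) % num_threads
            if remaining[t] and ready_at[t] <= cycle:
                remaining[t] -= 1
                issued[t] += 1
                if issued[t] % load_every == 0:
                    # Hypothetical rule: this instruction is a load that stalls its thread.
                    ready_at[t] = cycle + 1 + load_latency
                next_thread = (t + 1) % num_threads
                break
        else:
            idle += 1               # no thread was ready, so the cycle is wasted
        cycle += 1
    return cycle, idle

print(run_core(1, 8, load_every=4, load_latency=3))  # (11, 3)
print(run_core(4, 8, load_every=4, load_latency=3))  # (32, 0)
```

With one thread, the core idles while the load completes. With four threads, each thread's stall is covered by instructions from the other three, so under these assumptions no cycle is wasted.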

Because NetThreads has no operating system, packets appear as character buffers in memory and are available as soon as the processor has fully received them (the step of copying them to an application in user space is skipped).
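The idea of reading a packet straight out of a receive buffer can be sketched in Python with the standard `struct` module. The wire format below (a 4-byte symbol followed by two 32-bit prices in cents) and the names `QUOTE`, `parse_quote` and `rx_buffer` are hypothetical and are not taken from the paper; on the soft processor the equivalent code would be written in C.

```python
import struct

# Hypothetical wire format: 4-byte ASCII symbol (NUL padded), then two
# unsigned 32-bit prices in cents, all in network byte order.
QUOTE = struct.Struct("!4sII")

def parse_quote(buffer, offset=0):
    """Decode one quote directly from the receive buffer, without slicing or copying it."""
    symbol, tsx_cents, nyse_cents = QUOTE.unpack_from(buffer, offset)
    return {
        "stock": symbol.rstrip(b"\0").decode("ascii"),
        "TSXask": tsx_cents / 100,
        "NYSEask": nyse_cents / 100,
    }

rx_buffer = bytearray(64)                       # stands in for the packet buffer in memory
QUOTE.pack_into(rx_buffer, 0, b"ABX", 4004, 4005)

event = parse_quote(memoryview(rx_buffer))
print(event)  # {'stock': 'ABX', 'TSXask': 40.04, 'NYSEask': 40.05}
```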

Only hardware

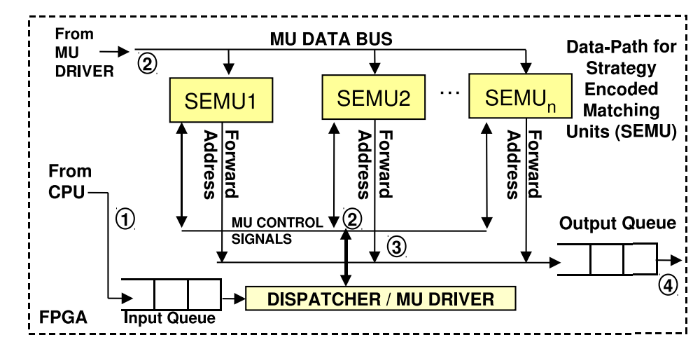

The second approach was a purely hardware solution: all the necessary steps, including parsing market events and matching them against strategies, are carried out by custom hardware components. This method delivers the highest performance, but it is also the most difficult to implement.

Hybrid approach

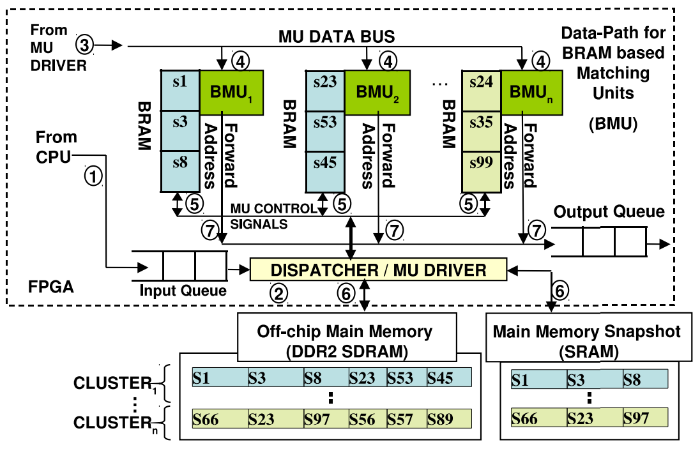

Supporting dynamic strategies and event formats in hardware alone is complex, and because FPGAs are usually programmed in low-level languages, it is also rather difficult to implement and maintain communication protocols in hardware. A hybrid approach can therefore be applied that combines the advantages of the two schemes described above.

This problem can be avoided by running a soft microprocessor that handles packet processing in software. After parsing the incoming market data packets, the soft microprocessor passes them to the hardware.

Conclusion

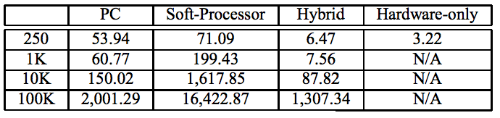

By applying the different approaches (a PC as the baseline, the soft processor approach, the hardware-only approach and the hybrid method), the researchers were able to collect data on how effectively each of them processes market events.

The load in the experiments ranged from 250 to 100,000 investment strategies to be analyzed. The hybrid approach outperformed the other methods by two or more orders of magnitude (the results are presented in the table above).