NetApp ONTAP: UNMAP in a SAN Environment

The UNMAP command is standardized as part of the T10 SCSI command set and is used to release space from thin LUNs back to the storage system in a SAN environment. As I wrote earlier, the SAN and NAS protocols gradually borrow the best from each other. One useful capability that appeared long ago is feedback between the host and the storage system that makes it possible to "return" deleted blocks to a thin LUN, something SAN previously lacked. UNMAP is still rarely used in SAN environments, although it is very useful in both virtualized and non-virtualized environments.

Without UNMAP support, any thin LUN created on the storage side could only ever grow. Its growth was a matter of time and always ended the same way: the thin LUN eventually occupied its full provisioned size, i.e. it became thick.

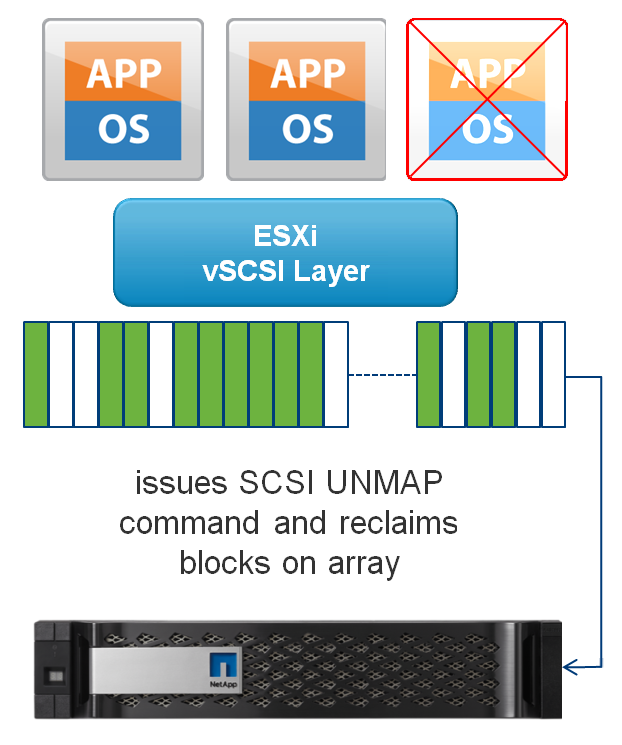

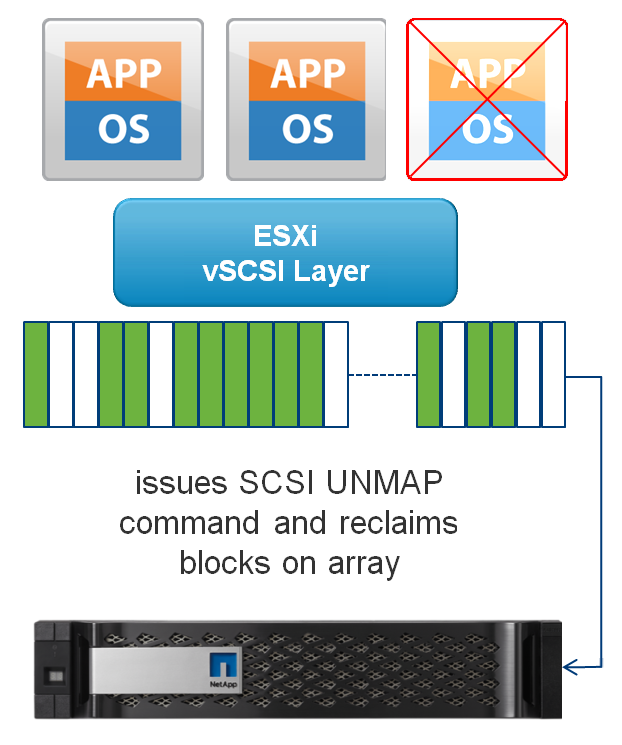

Imagine you have 3 virtual machines living on a datastore, each occupying 100 GB. The datastore sits on a thin LUN that occupies 300 GB. The occupied space is the same from the storage side and from ESXi: 300 GB. After one VM is removed, the LUN still occupies 300 GB from the storage side, while from the hypervisor side the occupied space on the datastore living on this LUN is 200 GB.

This happens because SAN had no feedback mechanism between the host and the storage system. When the host wrote a block to the LUN, the storage system marked that block as used. Later the host could delete the block, but the storage system never learned about it. The UNMAP command is that feedback: it releases blocks that are no longer needed from the LUN. Finally, our thin LUNs have learned not only to gain weight but also to lose it, starting with (Clustered Data) ONTAP 8.2.
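The three-VM example can be put into numbers. The sketch below only restates that arithmetic; the variable names are illustrative and nothing is queried from a real system.

```shell
# Illustrative numbers from the three-VM example (all in GB).
vm_count=3
vm_size_gb=100
deleted_vms=1

# Storage view: every block ever written still counts as used.
lun_used_gb=$((vm_count * vm_size_gb))
# Hypervisor view: only the remaining VMs count.
datastore_used_gb=$(((vm_count - deleted_vms) * vm_size_gb))
# Space the host no longer needs but the storage system cannot see as free.
stranded_gb=$((lun_used_gb - datastore_used_gb))

echo "LUN used (storage view):      ${lun_used_gb} GB"
echo "Datastore used (ESXi view):   ${datastore_used_gb} GB"
echo "Stranded until UNMAP is sent: ${stranded_gb} GB"
```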

VMware ESXi & UNMAP

UNMAP was first introduced in ESXi 5.0, where it was enabled by default and started automatically once a set threshold of deleted blocks was reached; in subsequent versions the mechanism was disabled by default and had to be started manually. Starting with VMFS-6 (vSphere 6.5), space is freed automatically within 12 hours, and a manual way to start space reclamation is still available if needed. It is important to note that the space reclamation discussed here takes place at the hypervisor level, i.e. deleted blocks are released only after an entire virtual machine or its virtual disks are removed, not after data is deleted inside the guest OS.

If you already have ESXi and ONTAP storage with UNMAP support, but the function is not enabled

You need to enable it both on the storage side and on the hypervisor side. First, take the LUN offline and turn on the space-allocation option on the storage system (if VMs are left running on it, they will shut down uncleanly and may be damaged, so either power them off or migrate them temporarily):

lun modify -vserver vsm01 -volume vol2 -lun lun1_myDatastore -state offline

lun modify -vserver vsm01 -volume vol2 -lun lun1_myDatastore -space-allocation enabled

lun modify -vserver vsm01 -volume vol2 -lun lun1_myDatastore -state online
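When several LUNs need the same treatment, the three commands above can be generated rather than typed. The sketch below only prints the command text for review and does not connect to ONTAP; the function name is made up for illustration, and the SVM, volume, and LUN names are the placeholders used above.

```shell
# Print (do not run) the three ONTAP commands for one LUN.
# Arguments: SVM name, volume name, LUN name.
print_space_alloc_cmds() {
    svm=$1; vol=$2; lun=$3
    for change in "-state offline" "-space-allocation enabled" "-state online"; do
        echo "lun modify -vserver $svm -volume $vol -lun $lun $change"
    done
}

# Example: the LUN used in the commands above.
print_space_alloc_cmds vsm01 vol2 lun1_myDatastore
```

The output can be reviewed and then pasted into the ONTAP CLI once the VMs on the LUN are powered off or migrated.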

After that, enable UNMAP on the ESXi side. To do this, unmount and remount the datastore so that ESXi detects UNMAP support (running VMs will shut down uncleanly and may be damaged, so power them off or migrate them temporarily):

esxcli storage filesystem unmount -l myDatastore

esxcli storage filesystem mount -l myDatastore

esxcli storage vmfs unmap -l myDatastore

After that, in order to free up space, you will need to periodically run the command:

esxcli storage vmfs unmap -l myDatastore

It is important to note that UNMAP only works for LUNs whose partition offset is a multiple of 1 MB. What does this mean? It means that if you once converted VMFS3 to VMFS5, UNMAP will not work. This is easy to verify: converted VMFS3 datastores have an MBR partition table, while VMFS5 datastores that were created from scratch have a GPT partition table.

# esxcli storage core device partition showguid

Example output:

Device Partition Layout GUID

-------------------------------------------------------------

naa.60a98000486e574f6c4a5778594e7456 0 MBR N/A

naa.60a98000486e574f6c4a5778594e7456 1 MBR N/A

naa.60a98000486e574f6c4a5052537a7375 0 GPT 00000000000000000000000000000000

naa.60a98000486e574f6c4a5052537a7375 1 GPT aa31e02a400f11db9590000c2911d1b8

Pay attention to the Layout column.
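With many devices, the Layout column can be filtered instead of read by eye. A minimal sketch, assuming the column order shown in the sample output (device, partition, layout, GUID); the function name is hypothetical, and the sample rows are fed in so that it runs without an ESXi host.

```shell
# Read `esxcli storage core device partition showguid` output on stdin
# and print each device whose partition layout is MBR, once per device.
mbr_devices() {
    awk '$3 == "MBR" && !seen[$1]++ { print $1 }'
}

# Sample rows copied from the output above.
mbr_devices <<'EOF'
Device                                Partition  Layout  GUID
naa.60a98000486e574f6c4a5778594e7456  0  MBR  N/A
naa.60a98000486e574f6c4a5778594e7456  1  MBR  N/A
naa.60a98000486e574f6c4a5052537a7375  0  GPT  00000000000000000000000000000000
naa.60a98000486e574f6c4a5052537a7375  1  GPT  aa31e02a400f11db9590000c2911d1b8
EOF
```

On a host, the same function would presumably be fed by piping the esxcli command into it.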

Checking the offset is not difficult either: look at the start sector of the partition. Recall that a sector is 512 bytes.

# esxcli storage core device partition list

Example output:

Device Partition Start Sector End Sector Type Size

-------------------------------------------------------------------------------------

naa.60a98000486e574f6c4a5778594e7456 0 0 3221237760 0 1649273733120

naa.60a98000486e574f6c4a5778594e7456 1 128 3221225280 fb 1649267277824

naa.60a98000486e574f6c4a5052537a7375 0 0 3221237760 0 1649273733120

naa.60a98000486e574f6c4a5052537a7375 1 2048 3221237727 fb 1649272667648

Pay attention to the "Start Sector" and "End Sector" columns. The last device, naa.60a98000486e574f6c4a5052537a7375, has an offset of 1 MB (2048 sectors * 512 bytes = 1048576 bytes = 1024 KB). The first device, naa.60a98000486e574f6c4a5778594e7456, has an offset that is not a multiple of 1 MB (128 sectors * 512 bytes = 65536 bytes = 64 KB), so UNMAP will not work on it.
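The offset check is plain arithmetic and can be scripted. The sketch below assumes 512-byte sectors, as stated above, and takes the start sector as an argument; the function and variable names are illustrative.

```shell
SECTOR_BYTES=512        # sector size stated above
ALIGN_BYTES=1048576     # 1 MB = 1024 * 1024 bytes

# Exit status 0 when the start sector falls on a 1 MB boundary.
is_1mb_aligned() {
    [ $(( $1 * SECTOR_BYTES % ALIGN_BYTES )) -eq 0 ]
}

# Start sectors of partition 1 on the two sample devices.
for start in 2048 128; do
    if is_1mb_aligned "$start"; then
        echo "start sector $start: offset $((start * SECTOR_BYTES)) bytes, aligned"
    else
        echo "start sector $start: offset $((start * SECTOR_BYTES)) bytes, NOT aligned"
    fi
done
```

Partition 0 in the listing describes the whole device and starts at sector 0, so only the VMFS partition (type fb) is worth checking.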

You can check whether UNMAP (Delete Status) is supported with:

# esxcli storage core device vaai status get -d naa

Example output:

naa.60a98000486e574f6c4a5052537a7375

VAAI Plugin Name: VMW_VAAIP_NETAPP

ATS Status: supported

Clone Status: supported

Zero Status: supported

Delete Status: supported
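To scan many devices at once, the Delete Status line can be pulled out of the same output. A sketch under the assumption that each device block starts with a line beginning with "naa." as in the sample; the function name is hypothetical.

```shell
# Read `esxcli storage core device vaai status get` output on stdin and
# print "<device> <delete status>" for every device block.
vaai_delete_status() {
    awk '
        /^naa\./         { dev = $1 }          # device header line
        /Delete Status:/ { print dev, $NF }    # last word is the status
    '
}

# Sample output copied from above.
vaai_delete_status <<'EOF'
naa.60a98000486e574f6c4a5052537a7375
   VAAI Plugin Name: VMW_VAAIP_NETAPP
   ATS Status: supported
   Clone Status: supported
   Zero Status: supported
   Delete Status: supported
EOF
```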

Auto-reclaim space in vSphere 6.5

Automatic release of space back into thin LUNs on the storage system is supported since vSphere 6.5. For each VMFS-6 datastore you can set the reclamation priority (high, medium, or low); the space is returned to the storage system within 12 hours. You can also start space reclamation and configure the priority for a VMFS-6 datastore manually from the graphical interface or from the command line.

esxcli storage vmfs reclaim config get -l DataStoreOnNetAppLUN

Reclaim Granularity: 248670 Bytes

Reclaim Priority: low

esxcli storage vmfs reclaim config set -l DataStoreOnNetAppLUN -p high

UNMAP from the guest OS

So far we have looked at removing virtual machines from a datastore. It would be logical to do the same when data blocks are deleted inside the guest OS, rather than only when an entire virtual machine or its disks are removed. ONTAP supports UNMAP on the storage side, and nothing additional is required there for UNMAP to work when data is deleted from a VMDK, i.e. from inside the guest OS; it is enough for UNMAP to be enabled. What is required is that the hypervisor can pass this information from the virtual machine to the storage system, which happens entirely at the SCSI level. Starting with ESXi 6.0, information about blocks deleted inside the guest OS can be passed on.

For UNMAP to work from within a virtual machine, the following conditions must be met:

- Virtual Hardware Version 11

- VMFS6

- vSphere 6.0 * / 6.5

- the LUN on the storage system must be thin

- the VM's virtual disks must be thin

- guest OS file system must support UNMAP

- for vSphere 6.0 CBT must be turned off

- enable UNMAP on the hypervisor, if necessary: esxcli storage vmfs unmap -l myDatastore

- enable UNMAP on storage
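Part of this checklist can be turned into a quick pre-flight check. The sketch below covers only three of the items (hardware version, virtual disk type, LUN type) and takes them as arguments supplied by the caller; nothing is read from vSphere or ONTAP, and the function name is made up for illustration.

```shell
# Report which of three listed prerequisites are not met.
#   $1 virtual hardware version (integer)
#   $2 virtual disk type (thin or thick)
#   $3 LUN type (thin or thick)
# Exit status 0 when all three are satisfied.
check_guest_unmap_prereqs() {
    problems=0
    if [ "$1" -lt 11 ]; then
        echo "virtual hardware version $1 is below 11"; problems=$((problems + 1))
    fi
    if [ "$2" != "thin" ]; then
        echo "virtual disk is $2, not thin"; problems=$((problems + 1))
    fi
    if [ "$3" != "thin" ]; then
        echo "LUN is $3, not thin"; problems=$((problems + 1))
    fi
    [ "$problems" -eq 0 ]
}

check_guest_unmap_prereqs 11 thin thin && echo "basic prerequisites met"
check_guest_unmap_prereqs 10 thick thin || echo "fix the items listed above first"
```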

Never thin on thin

For many years, all storage vendors said not to create thin virtual disks on thin LUNs. This has now changed: to release blocks from inside a virtual machine, you need both thin virtual disks and thin LUNs on the storage system. vSphere 6.0 implemented the return of deleted blocks from inside the guest OS, but with a number of UNMAP limitations; for example, Linux machines were not supported. In vSphere 6.0 and earlier versions with VMFS, UNMAP does not start automatically; you need to run the command manually.

Windows Guest OS support

For space release from inside a Windows guest OS to work, the NTFS file system must be formatted with an allocation unit size of 64 KB.

Linux Guest OS SPC-4 support

Linux virtual machines that support SPC-4 and run on vSphere 6.5 can now also return freed space from inside the virtual machine back to the storage system.

How to check if your Linux machine supports the release of space?

Checking for SPC-4 Support in a Linux Virtual Machine

To do this, run the command

sg_vpd -p lbpv

Logical block provisioning VPD page (SBC):

Unmap command supported (LBPU): 1

Write same (16) with unmap bit supported (LBWS): 0

Write same (10) with unmap bit supported (LBWS10): 0

The Unmap command supported (LBPU) parameter set to 1 means that a thin disk is used, which is what we need. A value of 0 means that the virtual disk is thick (eager or lazy zeroed); UNMAP will not work with thick disks.
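The LBPU check can be scripted when there are several disks to look at. A minimal sketch that reads the sg_vpd output on standard input; the function name is hypothetical, and the sample output is embedded so that the block runs without sg3_utils installed.

```shell
# Read `sg_vpd -p lbpv` output on stdin; exit status 0 only when LBPU is 1.
lbpu_enabled() {
    awk -F': *' '/\(LBPU\)/ { found = ($2 == 1) } END { exit !found }'
}

# Sample output copied from above.
if lbpu_enabled <<'EOF'
Logical block provisioning VPD page (SBC):
  Unmap command supported (LBPU): 1
  Write same (16) with unmap bit supported (LBWS): 0
  Write same (10) with unmap bit supported (LBWS10): 0
EOF
then
    echo "thin disk: the guest can issue UNMAP"
else
    echo "thick disk or LBPU not reported: UNMAP unavailable"
fi
```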

sg_inq /dev/sdc -d

standard INQUIRY:

PQual=0 Device_type=0 RMB=0 version=0x02 [SCSI-2]

[AERC=0] [TrmTsk=0] NormACA=0 HiSUP=0 Resp_data_format=2

SCCS=0 ACC=0 TPGS=0 3PC=0 Protect=0 [BQue=0]

EncServ=0 MultiP=0 [MChngr=0] [ACKREQQ=0] Addr16=0

[RelAdr=0] WBus16=1 Sync=1 Linked=0 [TranDis=0] CmdQue=1

length=36 (0x24) Peripheral device type: disk

Vendor identification: VMware

Product identification: Virtual disk

Product revision level: 1.0

A reported version=0x02 [SCSI-2] means that UNMAP will not work; we need SPC-4:

sg_inq -d /dev/sdb

standard INQUIRY:

PQual=0 Device_type=0 RMB=0 version=0x06 [SPC-4]

[AERC=0] [TrmTsk=0] NormACA=0 HiSUP=0 Resp_data_format=2

SCCS=0 ACC=0 TPGS=0 3PC=0 Protect=0 [BQue=0]

EncServ=0 MultiP=0 [MChngr=0] [ACKREQQ=0] Addr16=0

[RelAdr=0] WBus16=1 Sync=1 Linked=0 [TranDis=0] CmdQue=1

length=36 (0x24) Peripheral device type: disk

Vendor identification: VMware

Product identification: Virtual disk

Product revision level: 2.0

Let's check that UNMAP is up and running:

To do this, run the command

grep . /sys/block/sdb/queue/discard_max_bytes

1

A value of 1 indicates that the guest OS notifies the SCSI layer about blocks deleted from the file system.

Check if space is freed up using UNMAP:

sg_unmap --lba=0 --num=2048 /dev/sdb

# or

blkdiscard --offset 0 --length=2048 /dev/sdb

If you get the error "blkdiscard: /dev/sdb: BLKDISCARD ioctl failed: Operation not supported", then UNMAP does not work. If there is no error, all that remains is to mount the file system with the discard option:

mount /dev/sdb /mnt/netapp_unmap -o discard

It is important to remember that the VMware hypervisor runs UNMAP asynchronously, that is, with a delay. This means that in practice you will most likely never see a 1:1 match between the space occupied inside the guest OS, on the hypervisor datastore, and on the storage LUN.
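Returning to the Linux checks in this section, the SCSI version and discard-limit tests can be wrapped in two small helpers. This is a sketch, not a NetApp or VMware tool: the function names are made up, the version threshold 0x06 is taken from the SPC-4 sample output above, and treating any non-zero discard_max_bytes as "discard enabled" is an assumption based on the kernel reporting 0 when discard is unsupported.

```shell
# Read `sg_inq -d <device>` output on stdin; exit status 0 when the
# reported SCSI version code is 0x06 (SPC-4) or higher.
is_spc4_or_newer() {
    ver=$(sed -n 's/.*version=\(0x[0-9a-fA-F]*\).*/\1/p' | head -n 1)
    [ -n "$ver" ] && [ $(($ver)) -ge 6 ]
}

# Exit status 0 when the value read from
# /sys/block/<dev>/queue/discard_max_bytes is non-zero.
discard_enabled() {
    [ "$1" -gt 0 ]
}

# INQUIRY lines taken from the two sample outputs above.
for line in "PQual=0 Device_type=0 RMB=0 version=0x02 [SCSI-2]" \
            "PQual=0 Device_type=0 RMB=0 version=0x06 [SPC-4]"; do
    if echo "$line" | is_spc4_or_newer; then
        echo "UNMAP-capable version: $line"
    else
        echo "too old for UNMAP:     $line"
    fi
done

if discard_enabled 1; then
    echo "discard is enabled in the block layer"
fi
```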

VVOL

VVOL technology, starting with vSphere 6.0, VM hardware version 11, and a Windows 2012 guest OS with the NTFS file system, supports automatic release of space inside the thin LUN on the external storage system where the virtual machine resides.

A Linux guest OS supporting SPC-4, installed on VM hardware version 11 on vSphere 6.5, can also return space from inside the virtual machine to the thin LUNs on the storage system.

This significantly improves the utilization of usable space in a SAN infrastructure that uses Thin Provisioning and hardware-assisted snapshots.

Learn more about tuning ESXi 6.x for ONTAP.

Windows (Bare Metal)

Support for UNMAP commands in this OS family began with Windows Server 2012 with the NTFS file system. To enable automatic UNMAP, use Windows Host Utilities 6.0.2 or later, or ONTAP DSM 4.0 or later. To check whether UNMAP is enabled, run:

fsutil behavior query disabledeletenotify

A state of DisableDeleteNotify = 0 means that UNMAP is enabled and blocks are returned in-band. If the host has LUNs connected from several storage systems, some of which do not support UNMAP, it is recommended to turn it off.

Learn more about tuning Windows Server for ONTAP.

Linux (Bare Metal)

Linux distributions support UNMAP. NetApp officially supports RHEL 6.2 and higher using the -o discard option of the mount command and the fstrim utility. Read more in the RHEL6 Storage Admin Guide.

Learn more about tuning Linux Server for ONTAP.
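To see which file systems are already mounted with discard, the mount options can be filtered. A minimal sketch assuming the /proc/mounts field order (device, mount point, type, options); the function name is hypothetical, and sample lines are used so that it runs anywhere.

```shell
# Read /proc/mounts-style lines on stdin and print the mount points
# whose option list contains "discard".
mounts_with_discard() {
    awk '{
        n = split($4, opts, ",")
        for (i = 1; i <= n; i++)
            if (opts[i] == "discard") { print $2; break }
    }'
}

# Sample lines; on a live host the input would be /proc/mounts.
mounts_with_discard <<'EOF'
/dev/sda1 / ext4 rw,relatime 0 0
/dev/sdb /mnt/netapp_unmap ext4 rw,relatime,discard 0 0
EOF
```

File systems that do not appear in the output are candidates for a periodic fstrim run instead.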

Zombie

Prior to ONTAP 9, file deletion operations generated so-called Zombie messages.

ONTAP 8 & Zombie Messages

Releasing deleted blocks can save a significant amount of disk space. On the other hand, if the host asks the storage system to free a large number of blocks, for example terabytes of data, the storage system will do so and will not stop until it finishes, which means additional load on the storage system. Releasing a terabyte of data is especially noticeable on disk or hybrid (not All Flash) systems, so be careful about deleting large amounts of data (terabytes) in one go on such systems.

If you find yourself in this situation, consult technical support; it may be worth increasing the values of wafl_zombie_ack_limit and wafl_zombie_msg_cnt. If you need to delete all data on a LUN, it is better to delete the entire LUN on the storage system. All Flash systems, on the other hand, are much less sensitive to such requests and, as a rule, handle them quickly and effortlessly.

Deleting files in ONTAP 9 and prioritizing

Recall that ONTAP 9 was released in May 2016. In this version, Zombie messages are no longer generated to free up space; instead, the file is simply marked for deletion. As a result, the logic of freeing space has changed significantly:

- first, data is deleted in the background

- second, it is now possible to prioritize the release of space from the volumes that need it most, i.e. those that are running out of space.

Conclusions

UNMAP support implemented on the guest OS, host, and NetApp storage sides allows more rational utilization of space in a SAN environment that uses Thin Provisioning and, as a result, more rational use of storage space with hardware snapshots. UNMAP support at the hypervisor level, and even more so within the guest OS, greatly facilitates the use of these two popular technologies.

Please report errors in the text by private message. Comments, additions, and questions, including opposing views on the article, are welcome in the comments.