Fight for resources, part 4: Coming out remarkably well

Let's look at the storage subsystem controls and see what they can do for block I/O.

Particularly interesting here is that we enter the territory, where changes in settings that are made after the system has been launched are much less important than decisions that are made even before its deployment.

Take a look at the picture below.

It presents four main resources that a modern computer needs for full-fledged work. Performance tuning is the art of optimally distributing these resources between application processes. Moreover, all these resources are not unlimited and are not equivalent in terms of impact on performance.

The performance of the storage subsystem comes down to the performance of the storage technologies used in it: hard drives, SSD, SAN, NAS - they can vary greatly in access speed and throughput. And a powerful processor and a lot of memory will not save the situation if the storage devices do not meet the requirements of the tasks to be solved.

If you, as a Linux specialist, can influence hardware decisions, try to ensure that your organization has an adequate (or superior) storage platform. This will save you from many problems in the future.

And now let's see what can be done with input / output (I / O) controls.

Officially, the I / O controller is called blkio, but in a good mood it responds to Blocky. Like the CPU controller, Blocky has two modes of operation:

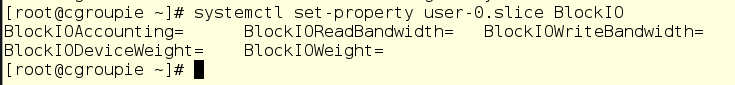

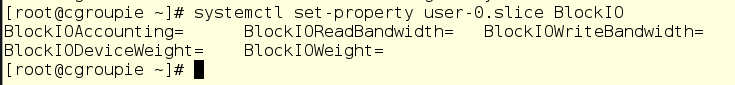

The screenshot below shows the parameters that can be adjusted using the systemctl command. Here we used the auto-help magic on the Tab key to display a list of parameters. This is called bash-completion, and if you are still not using this feature, it's time to install the appropriate PRM.

Relative I / O-balls are governed by the BlockIODeviceWeight and BlockIOWeight parameters. Before playing with these controls, you need to understand this: they only work if the CFQ I / O scheduler is enabled for the storage device.

What is an I / O scheduler? Let's start from afar and remember that the Linux kernel is responsible for ensuring that all hardware components of a computer communicate correctly with each other. And since all these components at the same time want different things, then there is no way without ordering. Well, as we, people, for example, organize our life, structuring it into work, rest, sleep, and so on.

If we talk about storage devices, then the I / O scheduler is responsible for organizing their work within the kernel. It’s just software code that sets the data flow control for block devices, ranging from USB flash drives and hard drives, to virtual disks that are actually files somewhere on the ISCI devices on the SAN network.

And on top of all these devices that can be used in Linux, there are various tasks that a computer must perform. In addition, in real life there is what we in Red Hat call “use cases”. That is why there are different planners focused on different scenarios. These schedulers are called noop, deadline and cfq. In a nutshell, each of them can be described as follows:

Additional information about schedulers can be found in the Red Hat Enterprise Linux 7 Performance Tuning Guide 7.

What was all this reasoning about planners for? Besides, on most computers, cfq is NOT USED by default unless they have SATA drives. Without knowing it, you can change BlockIOWeight until blue in the face without any effect whatsoever. Unfortunately, systemd will not tell you: “Sorry, you are in vain trying to change this parameter. It won't work, because the wrong scheduler is used on the device. ”

So how can you find out about this “interesting” feature? As usual, from the cgroups documentation that we wrote about in the last post. It is always useful to get acquainted with it before using these or other regulators.

Again, we move from general words to specifics: let me introduce you to Mr. Kryakin.

Again, we move from general words to specifics: let me introduce you to Mr. Kryakin.

He does catering, and he has two databases on the application server to track orders. Mr. Kryakin insists that the database of orders for duck dishes is much more important than the base for goose dishes, since geese are impostors on a waterfowl throne.

Both databases are configured as services, and their unit files look like this:

In fact, the scripts invoked in them (duck.sh and goose.sh) do not perform any real work in the database, but merely imitate reading and writing using the cycles of the dd command. Both scripts use the file system / database, which lies on its virtual disk.

Let's run duck and goose and see where they land in the cgroup hierarchy:

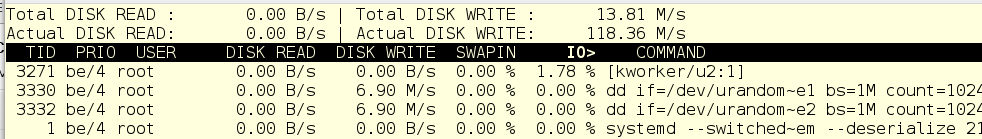

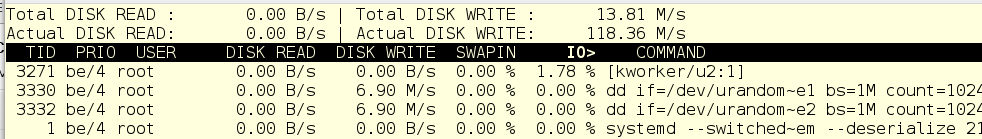

And now, since we know the PIDs of the dd processes, let's turn to the iotop command to see what happens in the storage subsystem:

Hmmm, 12-14 MB / s ... not fast. It seems that Mr. Kryakin did not really invest in the storage system. Although we already had questions about its adequacy, so there is nothing particularly surprising.

Now we look at our two tasks: PID 3301 (goose) and PID 3300 (duck). Each uses I / O at about 6 MB / s. The screen above is slightly different numbers, but in reality they are constantly jumping, and on average, these two tasks equally share the bandwidth of the storage device.

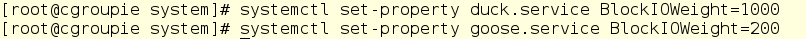

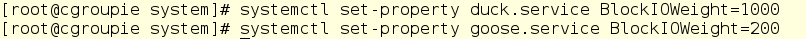

Mr. Kryakin wants the duck to have at least 5 times more I / O throughput than goose, so that orders for duck are always processed first. Let's try to use the BlockIOWeight parameter for this with the following commands:

We look iotop and we see that did not work:

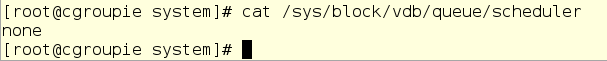

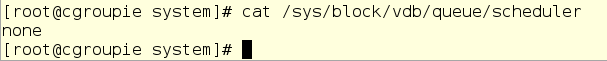

Let's check the I / O scheduler for the / dev / vdb device:

Interesting ... We are trying to change the scheduler to cfq and nothing comes out. Why?

The fact is that our system works on a KVM virtual machine, and it turns out that starting with version 7.1 in Red Hat Enterprise Linux, the scheduler can no longer be changed . And this is not a bug, but a feature associated with the improvement of mechanisms for working with virtualized I / O devices.

But let's not despair. We have two more parameters that can be changed: BlockIOReadBandwidth and BlockIOWriteBandwidth operate at the block device level and ignore the I / O scheduler. Since we know the bandwidth of the device / dev / vdb (about 14 MB / s for reception and return), by limiting the goose to 2 MB / s, we seem to be able to realize Mr. Kryakin’s wish. Let's try:

We look: PID 3426, it is goose, now uses I / O somewhere at 2 MB / s, and PID 3425, I mean, duck, almost all 14!

Hooray, we did what the customer wanted, which means we saved not only a certain number of geese, but also our reputation as a Linux guru.

What makes this topic particularly interesting is that we are entering territory where settings changed after the system is up and running matter far less than decisions made before it is even deployed.

Take a look at the picture below.

It shows the four main resources a modern computer needs to do its job. Performance tuning is the art of distributing these resources optimally among application processes. None of these resources is unlimited, and they do not affect performance equally.

The performance of the storage subsystem comes down to the storage technologies it uses: hard drives, SSDs, SAN, and NAS can differ enormously in access speed and throughput. A powerful processor and plenty of memory will not save the day if the storage devices cannot keep up with the workload.

If you, as a Linux specialist, have a say in hardware decisions, try to make sure your organization gets an adequate (or better) storage platform. It will save you a lot of trouble down the road.

Now let's see what the input/output (I/O) controls can do.

It's all about storage devices

Officially, the I/O controller is called blkio, but when it's in a good mood it answers to Blocky. Like the CPU controller, Blocky has two modes of operation:

- Relative I/O weights (shares), which let you control performance across all block storage devices or only selected ones by setting a value from 10 to 1000. The default is 1000, so any change can only reduce the I/O weight of the selected user or service. Why 1000 and not 1024, as with the CPU? Good question. Apparently this is one of those cases where the open nature of Linux does not work in its favor.

- An absolute bandwidth adjustment that limits the speed of reading and / or writing for a given user or service. By default, this mode is disabled.
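To make relative weights concrete, here is a minimal Python sketch of an idealized proportional split. It assumes every group is continuously competing for the device and that bandwidth is divided strictly in proportion to weight, which real schedulers only approximate. The function name and the 12 MB/s figure are illustrative only.

```python
def proportional_shares(total_mb_s, weights):
    """Split total bandwidth among busy groups in proportion to their weights.

    Idealized model: every group always has I/O pending and the scheduler
    divides the device strictly by weight.
    """
    for name, weight in weights.items():
        if not 10 <= weight <= 1000:
            raise ValueError(f"{name}: weight {weight} is outside 10..1000")
    total_weight = sum(weights.values())
    return {name: total_mb_s * w / total_weight for name, w in weights.items()}


if __name__ == "__main__":
    # Both services left at the default weight: an even split.
    print(proportional_shares(12.0, {"duck": 1000, "goose": 1000}))
    # {'duck': 6.0, 'goose': 6.0}
    # Lowering goose to 200 gives duck five times goose's share.
    print(proportional_shares(12.0, {"duck": 1000, "goose": 200}))
    # {'duck': 10.0, 'goose': 2.0}
```

Because the default is already the maximum, the only way to favor one service is to lower the weight of the others.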

The screenshot below shows the parameters that can be adjusted with the systemctl command. Here we used the magic of Tab auto-completion to list them. This feature is called bash completion, and if you are not using it yet, it's time to install the corresponding RPM (bash-completion).

Relative I/O weights are controlled by the BlockIODeviceWeight and BlockIOWeight parameters. Before you play with them, understand one thing: they only work if the CFQ I/O scheduler is active on the storage device.
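The two weight parameters differ in scope. BlockIOWeight sets one weight for all devices, while BlockIODeviceWeight takes a device path followed by a weight. The hypothetical helper below only formats those property strings and enforces the 10 to 1000 range described above. It does not talk to systemd.

```python
def weight_property(weight, device=None):
    """Format a relative block I/O weight as a systemd property string.

    With no device: BlockIOWeight=<weight> (applies to all devices).
    With a device path: BlockIODeviceWeight=<device> <weight>.
    """
    if isinstance(weight, bool) or not isinstance(weight, int):
        raise TypeError("weight must be an integer")
    if not 10 <= weight <= 1000:
        raise ValueError(f"weight {weight} is outside the 10..1000 range")
    if device is None:
        return f"BlockIOWeight={weight}"
    return f"BlockIODeviceWeight={device} {weight}"


if __name__ == "__main__":
    print(weight_property(500))              # BlockIOWeight=500
    print(weight_property(200, "/dev/vdb"))  # BlockIODeviceWeight=/dev/vdb 200
```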

What is an I/O scheduler? Let's start from a distance and recall that the Linux kernel is responsible for making sure all of a computer's hardware components communicate with each other correctly. Since these components all want different things at the same time, some kind of order is essential, much as we humans organize our lives by dividing our time into work, rest, sleep, and so on.

When it comes to storage devices, the I/O scheduler is what organizes their work inside the kernel. It is simply code that controls the flow of data for block devices, from USB flash drives and hard drives to virtual disks that are really files sitting somewhere on iSCSI devices in a SAN.

On top of all the devices Linux can use, there are the many different tasks a computer has to perform, plus what we at Red Hat call “use cases” in real life. That is why there are several schedulers, each aimed at different scenarios: noop, deadline, and cfq. In a nutshell:

- noop: well suited to block storage devices with no moving parts (flash, SSDs, and so on).

- deadline: a lightweight scheduler focused on minimizing latency. By default it prioritizes reads over writes, since most applications are held up by reads.

- cfq: aims at fair, system-wide allocation of I/O throughput. As mentioned above, it is the only one of the three that supports relative I/O weights for cgroups.

You can find more information about schedulers in the Red Hat Enterprise Linux 7 Performance Tuning Guide.

Why all this talk about schedulers? Because on most machines cfq is NOT the default scheduler unless they have SATA drives. If you don't know this, you can tweak BlockIOWeight until you're blue in the face without any effect whatsoever. Unfortunately, systemd will not tell you: “Sorry, you're wasting your time changing this parameter. It won't work, because the device uses the wrong scheduler.”
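The trap described above reduces to a simple rule, sketched here as a hypothetical Python check. If the device's active scheduler is not cfq, the weight settings are silently ignored, while the bandwidth limits (which work at the block-device level) still apply. The function and constant names are assumptions and are not part of systemd.

```python
# Per the text: relative weights depend on cfq; bandwidth limits do not.
CFQ_ONLY = frozenset({"BlockIOWeight", "BlockIODeviceWeight"})


def ineffective_settings(active_scheduler, settings):
    """Return the settings that will silently have no effect on this device."""
    if active_scheduler == "cfq":
        return []
    return [s for s in settings if s in CFQ_ONLY]


if __name__ == "__main__":
    wanted = ["BlockIOWeight", "BlockIOReadBandwidth"]
    for sched in ("cfq", "deadline"):
        print(sched, "->", ineffective_settings(sched, wanted) or "all settings apply")
```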

So how do you find out about this “interesting” quirk? As usual, from the cgroups documentation we discussed in the previous post. It always pays to read it before using any of these controls.

On to the use case

Again, let's move from generalities to specifics: let me introduce you to Mr. Kryakin. He runs a catering business and keeps two databases on his application server to track orders. Mr. Kryakin insists that the database of duck dish orders is far more important than the one for goose dishes, since geese are impostors on the waterfowl throne.

Both databases are configured as services, and their unit files look like this:

In reality, the scripts they invoke (duck.sh and goose.sh) do no real database work. They merely simulate reads and writes with dd loops. Both scripts use the /database file system, which sits on its own virtual disk.
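The actual duck.sh and goose.sh are not shown, so here is a hypothetical Python equivalent of such a dd loop. It writes a file in fixed-size blocks, forces it to disk, and reads it back. The file name, sizes, and round count are assumptions. The real scripts worked under /database, but this demo uses a temporary directory.

```python
import os
import tempfile


def imitate_db_io(path, size_mb=1, block_kb=64, rounds=1):
    """Write then read back a file of size_mb MiB in block_kb KiB blocks.

    Returns (bytes_written, bytes_read) summed over all rounds.
    """
    block = b"\0" * (block_kb * 1024)
    blocks = (size_mb * 1024) // block_kb
    written = read = 0
    for _ in range(rounds):
        with open(path, "wb") as f:
            for _ in range(blocks):
                written += f.write(block)
            f.flush()
            os.fsync(f.fileno())  # push the data to the device, roughly like dd conv=fsync
        # Reads may be served from the page cache; dd iflag=direct would bypass it.
        with open(path, "rb") as f:
            while chunk := f.read(len(block)):
                read += len(chunk)
    return written, read


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        w, r = imitate_db_io(os.path.join(tmp, "orders.db"), size_mb=4, rounds=3)
        print(f"wrote {w} bytes, read {r} bytes")
```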

Let's run duck and goose and see where they land in the cgroup hierarchy:

Now that we know the PIDs of the dd processes, let's use iotop to see what is happening in the storage subsystem:
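iotop shows per-process I/O rates. As a rough, hypothetical stand-in, the sketch below samples the read_bytes and write_bytes counters from /proc/<pid>/io twice and converts the difference to MB/s. Its numbers may not match iotop's exactly, and reading another user's /proc entry usually requires root.

```python
import time


def parse_proc_io(text):
    """Parse the 'key: value' lines of /proc/<pid>/io into a dict of ints."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = int(value)
    return fields


def io_rates(before, after, seconds):
    """MB/s read and written at the storage layer between two samples."""
    mb = 1024 * 1024
    read = (after["read_bytes"] - before["read_bytes"]) / mb / seconds
    write = (after["write_bytes"] - before["write_bytes"]) / mb / seconds
    return read, write


def sample(pid, seconds=1.0):
    """Sample a live process twice, `seconds` apart, and return (read, write) MB/s."""
    def snapshot():
        with open(f"/proc/{pid}/io") as f:
            return parse_proc_io(f.read())

    first = snapshot()
    time.sleep(seconds)
    return io_rates(first, snapshot(), seconds)
```

For example, `sample(3300)` would report duck's read and write rates over one second, assuming that PID still exists.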

Hmm, 12-14 MB/s... not exactly fast. It seems Mr. Kryakin did not invest much in his storage. Then again, we already had doubts about whether it was adequate, so this is hardly surprising.

Now we look at our two tasks: PID 3301 (goose) and PID 3300 (duck). Each uses I / O at about 6 MB / s. The screen above is slightly different numbers, but in reality they are constantly jumping, and on average, these two tasks equally share the bandwidth of the storage device.

Mr. Kryakin wants duck to get at least five times the I/O throughput of goose, so that duck orders are always processed first. Let's try to achieve this with the BlockIOWeight parameter, using the following commands:
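The default weight is 1000 and can only be lowered, so for a 5:1 ratio duck can stay at 1000 and goose can drop to 200. The sketch below computes those weights and builds the `systemctl set-property` argument lists without running them. The unit names duck.service and goose.service are assumptions.

```python
def weights_for_ratio(ratio, default=1000, minimum=10):
    """Return (favored, other) weights so that favored:other is about ratio.

    The favored unit keeps the default weight, since weights can only be
    lowered from it; the other unit is scaled down.
    """
    other = round(default / ratio)
    if other < minimum:
        raise ValueError(f"ratio {ratio} needs weight {other}, below {minimum}")
    return default, other


def set_property_argv(unit, *properties):
    """Build the argv for 'systemctl set-property UNIT PROPERTY...'."""
    return ["systemctl", "set-property", unit, *properties]


if __name__ == "__main__":
    duck_weight, goose_weight = weights_for_ratio(5)
    # Hypothetical unit names; nothing is executed here.
    print(set_property_argv("duck.service", f"BlockIOWeight={duck_weight}"))
    print(set_property_argv("goose.service", f"BlockIOWeight={goose_weight}"))
```

To apply them, you would pass each list to `subprocess.run(argv, check=True)` as root. Keep in mind the cfq caveat above: the weights only matter if the device actually uses that scheduler.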

We look iotop and we see that did not work:

Let's check the I/O scheduler for the /dev/vdb device:
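The schedulers for a device are listed in /sys/block/<device>/queue/scheduler, with the active one in square brackets (for example, `noop deadline [cfq]`). Here is a minimal Python sketch for reading that file. vdb is the device from this example, and the function names are hypothetical.

```python
from pathlib import Path


def parse_scheduler(line):
    """Parse e.g. 'noop deadline [cfq]' into (active, available)."""
    active, available = None, []
    for token in line.split():
        if token.startswith("[") and token.endswith("]"):
            token = token[1:-1]
            active = token
        available.append(token)
    return active, available


def device_scheduler(dev, sysfs="/sys"):
    """Read and parse the scheduler file for a block device such as 'vdb'."""
    path = Path(sysfs) / "block" / dev / "queue" / "scheduler"
    return parse_scheduler(path.read_text())


if __name__ == "__main__":
    active, available = device_scheduler("vdb")
    print(f"active: {active}, available: {available}")
```

If no entry is bracketed, `active` comes back as None, which is a hint that the usual scheduler choice does not apply to the device.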

Interesting... We try to switch the scheduler to cfq, and nothing happens. Why?

The reason is that our system runs in a KVM virtual machine, and it turns out that starting with Red Hat Enterprise Linux 7.1 the scheduler can no longer be changed in this setup. This is not a bug but a feature, resulting from improvements in how virtualized I/O devices are handled.

But let's not despair. We have two more parameters to work with: BlockIOReadBandwidth and BlockIOWriteBandwidth operate at the block-device level and ignore the I/O scheduler. Since we know the bandwidth of /dev/vdb (about 14 MB/s for reads and writes), limiting goose to 2 MB/s should let us grant Mr. Kryakin's wish. Let's try:
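Unlike the weights, the bandwidth limits take a device path plus a rate. The sketch below uses the hypothetical unit name goose.service. It formats both limits for `systemctl set-property` and does a back-of-the-envelope check: if /dev/vdb delivers about 14 MB/s and goose is capped at 2 MB/s, duck has roughly 12 MB/s left, a 6:1 ratio that clears the 5:1 target.

```python
import shlex


def bandwidth_property(kind, device, mb_per_s):
    """Return e.g. 'BlockIOReadBandwidth=/dev/vdb 2M' for systemctl set-property."""
    if kind not in ("Read", "Write"):
        raise ValueError("kind must be 'Read' or 'Write'")
    if not isinstance(mb_per_s, int) or mb_per_s <= 0:
        raise ValueError("use a positive whole number of megabytes per second")
    return f"BlockIO{kind}Bandwidth={device} {mb_per_s}M"


def remaining_bandwidth(device_mb_s, cap_mb_s):
    """Bandwidth left for the uncapped service if the device total stays fixed."""
    return device_mb_s - cap_mb_s


if __name__ == "__main__":
    # Hypothetical unit name; caps both reads and writes of goose on /dev/vdb.
    argv = ["systemctl", "set-property", "goose.service",
            bandwidth_property("Read", "/dev/vdb", 2),
            bandwidth_property("Write", "/dev/vdb", 2)]
    print(shlex.join(argv))  # quotes each property, since it contains a space
    left = remaining_bandwidth(14, 2)
    print(f"duck can use about {left} MB/s, ratio {left / 2:.0f}:1")
```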

Look: PID 3426 (goose) now uses about 2 MB/s of I/O, while PID 3425 (duck) gets almost all 14!

Hooray! We gave the customer what he wanted, which means we saved not only a certain number of geese but also our reputation as Linux gurus.