Game client download tracking system

Downloading a game is a fairly complicated process that takes place in several stages, and at each of them something can fail for one reason or another: a dropped connection, a hardware failure, a plain firewall, or restrictions imposed by the provider.

In such a diverse environment, diagnosing errors that occur while the game client loads is far from trivial, and even advanced solutions such as Google Analytics do not fully solve the problem. That is why we had to design and build our own game client download tracking system.

First, let's take a closer look at how the game client is downloaded. On a social network, our application is an iframe containing an HTML page (hereinafter simply the canvas) hosted on our servers. Once the canvas has loaded, the game client is initialized; at this stage we also check whether Flash Player is available and which version is installed. The game client is then embedded into the page as an object tag. After that come the three main stages that interest us most:

- Downloading the client file from the CDN (about 3 MB).

- Connecting to the social network API and receiving the necessary data.

- Authorizing on the game server and loading resources.

Unexpected failures can occur at any stage, so we monitor all of them. Through ExternalInterface, the game client reports the result of each step (successful or not) to the canvas. The canvas, in turn, contains a small JavaScript library that stores the reported information. Once all loading stages are complete, the data is sent to the server.

From this, we can briefly outline the main design goals and the following requirements for the system:

- handling a large number of simultaneous requests (notifications) with the fastest possible response;

- determining the user's country and region by IP address in real time;

- logging various data whose set may grow or change over time, and providing aggregated statistics, including in real time;

- serving statistics to many simultaneously connected clients in real time.

Clearly, the system must cache data in order to serve online monitoring quickly, and it must be able to scale dynamically. Since data arrives continuously and statistics by country and region may be needed at any moment, the geo-information has to be determined as notifications arrive.

There was also the question of how to update data on the client side and how to handle the server load caused by simultaneous requests. To view statistics, the client has to get data from the server somehow, and the usual technique for this is long polling.

With long polling, the client sends an Ajax request to the server and waits until new data appears there. Once data is available, the server sends it to the client, after which the client sends a new request and waits again. The question is: what happens if many clients make such requests at the same time and each request involves processing a significant amount of data? Obviously, the server load will increase dramatically. Knowing that in the vast majority of cases the same requests are sent to the server, we wanted the server to process each unique request once and push the data to users itself. In other words, we used a push notification mechanism.

Selection of tools and technologies

We decided to implement the server side on .NET, using classic ASHX handlers for incoming requests from game clients. Such handlers are the basic building block for processing web requests in .NET; they have been around for a long time and are fast because a request passes through the IIS pipeline quickly. We need only one handler, which processes requests sent by the client through a "pixel": a 1x1 image is inserted on the client, and its URL carries the necessary data to the server, so an ordinary GET request is made. On the server side, for each such request we need to determine the geo-information from the IP address and save the data to the cache and the database. These operations are "expensive", that is, they take a noticeable amount of time.
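To make the "pixel" approach more concrete, here is a minimal sketch of how the query string of such a GET request might be turned into a notification object on the server. The parameter names (`stage`, `status`, `uid`), the `Notification` type and the `PixelRequestParser` class are hypothetical; the article does not specify the actual URL format.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical notification sent by the game client through a 1x1 "pixel" image,
// e.g. GET /notify?stage=cdn&status=ok&uid=42 (parameter names are assumptions).
public sealed class Notification
{
    public string Stage { get; set; }
    public string Status { get; set; }
    public string UserId { get; set; }
    public string IpAddress { get; set; }
    public DateTime ReceivedUtc { get; set; }
}

public static class PixelRequestParser
{
    // Splits a raw query string ("?a=1&b=2") into decoded key/value pairs.
    public static Dictionary<string, string> ParseQuery(string query)
    {
        var result = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
        if (string.IsNullOrEmpty(query)) return result;

        foreach (var pair in query.TrimStart('?').Split('&'))
        {
            if (pair.Length == 0) continue;
            int eq = pair.IndexOf('=');
            string key = eq < 0 ? pair : pair.Substring(0, eq);
            string value = eq < 0 ? string.Empty : pair.Substring(eq + 1);
            // '+' means a space in query strings; Uri.UnescapeDataString does not decode it.
            result[Uri.UnescapeDataString(key.Replace('+', ' '))] =
                Uri.UnescapeDataString(value.Replace('+', ' '));
        }
        return result;
    }

    // Builds a notification from the query string and the client's IP address.
    public static Notification ToNotification(string query, string ipAddress)
    {
        var q = ParseQuery(query);
        string Get(string name) => q.TryGetValue(name, out var v) ? v : null;
        return new Notification
        {
            Stage = Get("stage"),
            Status = Get("status"),
            UserId = Get("uid"),
            IpAddress = ipAddress,
            ReceivedUtc = DateTime.UtcNow
        };
    }
}
```

In an ASHX handler, the two inputs would typically come from `context.Request.Url.Query` and `context.Request.UserHostAddress`; the geo lookup and storage would then run on the resulting object.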

For data storage, we chose the NoSQL database MongoDB, which lets you store entities following the Code First principle: the structure of the data is defined by the entity in code. Entities can also be changed dynamically without altering the database structure each time, which makes it possible to save arbitrary objects sent from the client as JSON and later run various queries on them. In addition, new collections can be created dynamically for new data types, which is great for horizontal scaling. In fact, each unique kind of tracking object is saved in its own collection.

After analyzing client requests, we found that in the vast majority of cases different clients send the same requests to the server, and they differ only in specific cases (for example, when the query criteria change). As an alternative to long polling, we decided to use WebSockets, which provide two-way client-server communication, so that requests are sent only to open or close the channel and to change state. This reduces the number of client-server requests. The approach also lets the server update the data once and push notifications to all subscribers. As a result, data is updated instantly when an event is triggered on the server side, and the server processes fewer requests.

In addition, this can work without a web server at all (for example, inside a service), and some common problems with cross-domain requests and security go away.
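The "compute once, push to everyone" idea can be illustrated without any networking. In this sketch, subscribers register a callback for a query key, and when new data arrives the server evaluates each distinct query once and pushes the same result to every subscriber of that query. The `StatsBroadcaster` class is hypothetical, and its callbacks are stand-ins for WebSocket connections; it is not the implementation described in the article.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-process model of push notifications: each subscriber is a callback
// (in the real system it would be a WebSocket connection) interested in one query key.
public sealed class StatsBroadcaster
{
    private readonly ConcurrentDictionary<string, ConcurrentDictionary<Guid, Action<string>>> _subscribers =
        new ConcurrentDictionary<string, ConcurrentDictionary<Guid, Action<string>>>();

    // How many times a query was actually evaluated (for demonstration only;
    // Publish is assumed to be called from a single background thread).
    public int Evaluations { get; private set; }

    // Registers a subscriber for a query key and returns an id used to unsubscribe.
    public Guid Subscribe(string queryKey, Action<string> push)
    {
        var id = Guid.NewGuid();
        _subscribers.GetOrAdd(queryKey, _ => new ConcurrentDictionary<Guid, Action<string>>())[id] = push;
        return id;
    }

    public void Unsubscribe(string queryKey, Guid id)
    {
        if (_subscribers.TryGetValue(queryKey, out var group))
            group.TryRemove(id, out _);
    }

    // Called when new data arrives: each distinct query with at least one subscriber
    // is evaluated once, and the same result is pushed to all of its subscribers.
    public void Publish(Func<string, string> evaluateQuery)
    {
        foreach (var entry in _subscribers)
        {
            var callbacks = entry.Value.Values.ToList();
            if (callbacks.Count == 0) continue;

            string result = evaluateQuery(entry.Key);
            Evaluations++;
            foreach (var push in callbacks)
                push(result);
        }
    }
}
```

With long polling, each waiting client would trigger its own evaluation; here the cost of a query is paid once per update regardless of how many clients watch it.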

Processing notifications

Asynchronous handlers are supported out of the box: to write your own, it is enough to create a class that implements IHttpAsyncHandler and implement its methods and properties. We implemented a wrapper as an abstract class (HttpTaskAsyncHandler) that implements IHttpAsyncHandler, with IAsyncResult implemented on top of Tasks. As a result, HttpTaskAsyncHandler has a single method that must be implemented; it takes an HttpContext and returns a Task:

```csharp
public abstract Task ProcessRequestAsync(HttpContext context);
```

To implement an asynchronous ASHX handler, inherit from HttpTaskAsyncHandler and implement ProcessRequestAsync:

```csharp
public class YourAsyncHandler : HttpTaskAsyncHandler
{
    public override Task ProcessRequestAsync(HttpContext context)
    {
        // Task.Factory.StartNew schedules the work immediately;
        // a task created with "new Task(...)" would never start on its own.
        return Task.Factory.StartNew(() =>
        {
            // Request processing
        });
    }
}
```

The Task itself is obtained by calling the overridden ProcessRequestAsync from BeginProcessRequest:

```csharp
public IAsyncResult BeginProcessRequest(HttpContext context, AsyncCallback callback, object extraData)
{
    Task task = ProcessRequestAsync(context);
    if (task == null)
        return null;

    var returnValue = new TaskWrapperAsyncResult(task, extraData);
    if (callback != null)
        task.ContinueWith(_ => callback(returnValue)); // runs asynchronously

    return returnValue;
}
```

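TaskWrapperAsyncResult is a wrapper class that stores the task and implements IAsyncResult by forwarding to it:

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public sealed class TaskWrapperAsyncResult : IAsyncResult
{
    public TaskWrapperAsyncResult(Task task, object asyncState)
    {
        Task = task;
        AsyncState = asyncState;
    }

    // The wrapped task; EndProcessRequest waits on it.
    public Task Task { get; private set; }

    public object AsyncState { get; private set; }

    public WaitHandle AsyncWaitHandle
    {
        get { return ((IAsyncResult)Task).AsyncWaitHandle; }
    }

    public bool CompletedSynchronously
    {
        get { return ((IAsyncResult)Task).CompletedSynchronously; }
    }

    public bool IsCompleted
    {
        get { return ((IAsyncResult)Task).IsCompleted; }
    }
}
```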

The EndProcessRequest method verifies that the task has completed:

```csharp
public void EndProcessRequest(IAsyncResult result)
{
    if (result == null)
    {
        throw new ArgumentNullException("result");
    }

    var castResult = (TaskWrapperAsyncResult)result;
    castResult.Task.Wait(); // rethrows any handler exception wrapped in an AggregateException
}
```

The asynchronous completion itself happens through the ContinueWith call on the wrapped task. Since ASHX handlers are a fairly old technology, the URLs used to call them look clumsy: ../handlerName.ashx. To get cleaner URLs, you can write a handler factory by implementing IHttpHandlerFactory, or set up routing by implementing IRouteHandler:

```csharp
using System.Web;
using System.Web.Compilation;
using System.Web.Routing;

public class HttpHandlerRoute : IRouteHandler
{
    private readonly string _virtualPath;

    public HttpHandlerRoute(string virtualPath)
    {
        _virtualPath = virtualPath;
    }

    public IHttpHandler GetHttpHandler(RequestContext requestContext)
    {
        // Compiles (if needed) and instantiates the .ashx handler at the given virtual path.
        var httpHandler = (IHttpHandler)BuildManager.CreateInstanceFromVirtualPath(_virtualPath, typeof(IHttpHandler));
        return httpHandler;
    }
}
```

After that, register routes for the handlers during application initialization (for example, in Application_Start):

```csharp
RouteTable.Routes.Add(new Route("notify", new HttpHandlerRoute("~/Notify.ashx")));
```

When a notification arrives, the geodata is determined from the IP address (this process is described later), and the information is stored in the cache and in the database.

Data Caching

The cache is implemented on top of MemoryCache. It is cyclic: old data is evicted automatically once an entry's configured lifetime expires or when the configured memory limit is exceeded. The cache is convenient to work with and can be configured, for example, in the .config file:

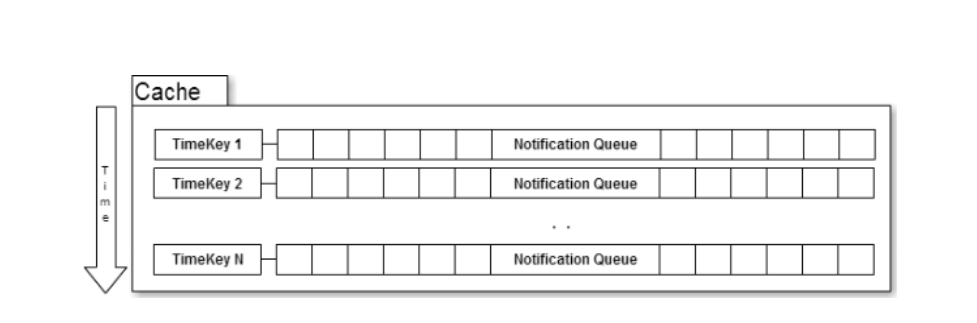

Data in the cache is split into time-based buckets, which makes it fast to retrieve data for a given period. The cache is organized as follows.

Essentially, the cache is a key-value collection, where the key is a time stamp (a String) and the value is a queue of events (a Queue).

Events in the cache are grouped into queues. Each queue has its own time key; in our case, one key covers one minute. The key therefore has the form [year_month_day_hour_minute], produced by the format string "ntnKey_{0:y_MM_dd_HH_mm}".

When a new event (notification) is added to the cache, its time key is computed, the matching queue is looked up, and the notification is added to it. If the queue does not exist yet, a new one is created for the current key. To obtain data for a given period, it is therefore enough to compute the time keys for that period and fetch the corresponding event queues; the data can then be transformed as needed.
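A minimal sketch of the minute-bucketed cache described above, using the same key pattern `ntnKey_{0:y_MM_dd_HH_mm}`. To keep it runnable without extra packages, it assumes a `ConcurrentDictionary` in place of `MemoryCache`, so unlike the real cache it never evicts old buckets. The class and method names are hypothetical.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Globalization;

// Sketch of a cache that groups events into one queue per minute.
// ConcurrentDictionary stands in for MemoryCache here (assumption: no expiration logic).
public sealed class MinuteBucketCache<TEvent>
{
    private const string KeyFormat = "ntnKey_{0:y_MM_dd_HH_mm}";

    private readonly ConcurrentDictionary<string, ConcurrentQueue<TEvent>> _buckets =
        new ConcurrentDictionary<string, ConcurrentQueue<TEvent>>();

    // Builds the time key for the minute that contains the given UTC timestamp.
    public static string KeyFor(DateTime utc) =>
        string.Format(CultureInfo.InvariantCulture, KeyFormat, utc);

    // Finds (or creates) the queue for the event's minute and enqueues the event.
    public void Add(DateTime utc, TEvent item) =>
        _buckets.GetOrAdd(KeyFor(utc), _ => new ConcurrentQueue<TEvent>()).Enqueue(item);

    // Returns all events whose minute bucket lies between the minute of fromUtc
    // and the minute of toUtc (inclusive) by enumerating the keys of that range.
    public List<TEvent> GetRange(DateTime fromUtc, DateTime toUtc)
    {
        var result = new List<TEvent>();
        var minute = new DateTime(fromUtc.Year, fromUtc.Month, fromUtc.Day,
                                  fromUtc.Hour, fromUtc.Minute, 0, DateTimeKind.Utc);
        for (; minute <= toUtc; minute = minute.AddMinutes(1))
        {
            if (_buckets.TryGetValue(KeyFor(minute), out var queue))
                result.AddRange(queue);
        }
        return result;
    }
}
```

A query for the last ten minutes thus touches at most ten dictionary lookups, independent of how many events were recorded in total.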

Data storage in the MongoDB database

In parallel with caching, each notification is saved to MongoDB. You can read about all the features and benefits of this database on the official website. There are many NoSQL databases today, so the choice should be based on the task at hand and personal preference. Speaking for myself, Mongo is easy and pleasant to work with, it is actively developed (version 3.0 was recently released with the new WiredTiger storage engine), and there are .NET drivers for it that are also actively optimized and updated. That said, NoSQL databases suit only certain kinds of problems, and they should replace relational databases only after careful analysis. Large applications increasingly combine both kinds of databases. Typical use cases include logging, working in conjunction with Node.js without a separate backend, storing dynamically changing data and collections, projects built on CQRS + Event Sourcing, and so on. If you need to query across several collections and/or perform complex manipulations on the results, a relational database is the better choice.

In our case, MongoDB worked well: it was used for fast retrieval of grouped statistics, for cyclic storage (the lifetime of records can be set with a special TTL index), and for the geo service (described later).

We used the MongoDB C#/.NET Driver to work with the database (version 1.1 at the time of writing; version 2.0 has just been released). In general, the driver works well, but writing queries with objects such as BsonDocument is cumbersome.

For example:

```csharp
var countryCodeExistsMatch = new BsonDocument
{
    {
        "$match",
        new BsonDocument
        {
            {
                "CountryCode", new BsonDocument
                {
                    {"$exists", true},
                    {"$ne", BsonNull.Value}
                }
            }
        }
    }
};
```

Version 1.1 already supports LINQ queries:

```csharp
// Events from the last 10 minutes, ordered by date
Collection.AsQueryable().Where(s => s.Date > DateTime.UtcNow.AddMinutes(-10)).OrderBy(d => d.Date);
```

However, profiling showed that in practice such queries are not translated into native (BsonDocument) queries. As a result, the entire collection is loaded into memory and iterated over, which hurts performance. So I had to write queries using BsonDocument and database operators. Hopefully this is fixed in the recently released 2.0 driver.

Mongo supports bulk operations (inserting, updating, or deleting many documents in a single operation), which is very useful for coping with peak loads. We simply put events into a queue, and a background worker periodically takes N notifications from it and saves them to the database with a bulk insert. The only thing to take care of is thread synchronization, or you can use the concurrent collections instead.
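The background saving described above can be sketched as a producer/consumer pair: request handlers only enqueue notifications into a `ConcurrentQueue`, and a single worker periodically drains up to N items and hands them to a batch-save delegate. The delegate stands in for a driver bulk insert (such as the 1.x driver's `InsertBatch`); the `BatchWriter` class, batch size and interval are hypothetical.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

// Buffers notifications in memory and saves them in batches from a background task.
// saveBatch stands in for a MongoDB bulk insert (assumption).
public sealed class BatchWriter<T> : IDisposable
{
    private readonly ConcurrentQueue<T> _queue = new ConcurrentQueue<T>();
    private readonly Action<IReadOnlyList<T>> _saveBatch;
    private readonly int _batchSize;
    private readonly TimeSpan _interval;
    private readonly CancellationTokenSource _cts = new CancellationTokenSource();
    private readonly Task _worker;

    public BatchWriter(Action<IReadOnlyList<T>> saveBatch, int batchSize, TimeSpan interval)
    {
        _saveBatch = saveBatch;
        _batchSize = batchSize; // assumed > 0
        _interval = interval;
        _worker = Task.Run(() => RunAsync(_cts.Token));
    }

    // Called from request handlers; never blocks on the database.
    public void Enqueue(T item) => _queue.Enqueue(item);

    // Takes up to batchSize items from the queue and saves them in one call.
    // Intended to be called by the worker (or after it has stopped); returns the count saved.
    public int Flush()
    {
        var batch = new List<T>(_batchSize);
        while (batch.Count < _batchSize && _queue.TryDequeue(out var item))
            batch.Add(item);
        if (batch.Count > 0)
            _saveBatch(batch);
        return batch.Count;
    }

    private async Task RunAsync(CancellationToken token)
    {
        while (!token.IsCancellationRequested)
        {
            // Drain full batches, then wait for the next interval.
            while (Flush() == _batchSize) { }
            try { await Task.Delay(_interval, token); }
            catch (OperationCanceledException) { break; }
        }
    }

    // Stops the worker, then saves whatever is still buffered.
    public void Dispose()
    {
        _cts.Cancel();
        _worker.Wait();
        while (Flush() > 0) { }
        _cts.Dispose();
    }
}
```

Because handlers only touch the in-memory queue, a traffic spike raises the number of buffered items rather than the number of database round trips.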

The database is fast enough for reads, but indexes are recommended to speed things up: with proper indexing, queries can become roughly 10 times faster. Indexes also increase the size of the database accordingly.

Another feature of Mongo is that it keeps as much data as possible in RAM: once data has been requested, it stays in memory and is evicted only when needed. You can also limit the amount of RAM available to the database.

There is also an interesting mechanism called the Aggregation Pipeline. It lets you apply several data operations within a single query: documents pass through a sequence of stages, and each stage transforms the output of the previous one.

A typical example is a task where you need to filter and group data and then present the results in a particular form. Such a task maps onto four stages: filtering, grouping, projection and sorting ($match, $group, $project and $sort).

The following example selects events grouped by country code:

```csharp
var countryCodeExistsMatch = new BsonDocument
{
    {
        "$match",
        new BsonDocument
        {
            {
                "CountryCode", new BsonDocument
                {
                    {"$exists", true},
                    {"$ne", BsonNull.Value}
                }
            }
        }
    }
};

var groupping = new BsonDocument
{
    {
        "$group",
        new BsonDocument
        {
            {
                "_id", new BsonDocument {{"CountryCode", "$CountryCode"}}
            },
            {
                "value", new BsonDocument {{"$sum", 1}}
            }
        }
    }
};

var project = new BsonDocument
{
    {
        "$project",
        new BsonDocument
        {
            {"_id", 0},
            {"hc-key", "$_id.CountryCode"},
            {"value", 1},
        }
    }
};

var sort = new BsonDocument { { "$sort", new BsonDocument { { "value", -1 } } } };

var pipelineMathces = requestData.GetPipelineMathchesFromRequest(); // additional filter conditions from the request

var pipeline = new List<BsonDocument>(pipelineMathces) { countryCodeExistsMatch, groupping, project, sort };

var aggrArgs = new AggregateArgs { Pipeline = pipeline, OutputMode = AggregateOutputMode.Inline };
```

The result is an IEnumerable<BsonDocument>, which can easily be converted to JSON if needed:

```csharp
var result = Notifications.Aggregate(aggrArgs).ToJson(JsonWriterSettings);
```
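To make the semantics of each stage concrete, here is an in-memory LINQ sketch of what the `$match`, `$group`, `$project` and `$sort` stages above compute (leaving out the request-specific extra conditions). It runs over a hypothetical `TrackedEvent` list rather than the database and only illustrates the data flow; it is not a replacement for server-side aggregation, which avoids loading the collection into application memory.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical event shape; only the field used by the pipeline is modeled.
public sealed class TrackedEvent
{
    public string CountryCode { get; set; }
}

public sealed class CountryCount
{
    public string HcKey { get; set; }  // corresponds to "hc-key" in $project
    public int Value { get; set; }     // corresponds to "value" in $project
}

public static class CountryStats
{
    public static List<CountryCount> ByCountry(IEnumerable<TrackedEvent> events) =>
        events
            // $match: CountryCode exists and is not null
            .Where(e => e.CountryCode != null)
            // $group: _id = CountryCode, value = { $sum: 1 }
            .GroupBy(e => e.CountryCode)
            // $project: drop _id, expose hc-key and value
            .Select(g => new CountryCount { HcKey = g.Key, Value = g.Count() })
            // $sort: value descending
            .OrderByDescending(c => c.Value)
            .ToList();
}
```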

Another interesting feature is the ability to save a JSON object directly as a BSON document. This makes it possible to save data from the client to the database without the .NET side even knowing what kind of object it is handling. Similarly, queries to the database can be built on the client side. More details on all these features can be found in the official documentation.

In the next part of the article, we will cover the GeoIP service that determines geodata from the request's IP address, WebSockets, the polling server implementation, AngularJS and Highcharts, and give a brief analysis of the system.