PostgreSQL and btrfs - elephant on an oil diet

Recently, looking at an article on the wiki about file systems, I became interested in btrfs, namely its rich capabilities, stable status and, most importantly, the mechanism of transparent data compression. Knowing how easily databases containing textual information are squeezed, I was curious to clarify how much this is applicable in a usage scenario, for example with postgres.

This testing, of course, cannot be called complete, because only reading is involved and that is linear. But the results already make us think about the possible transition to btrfs in certain cases.

But the main goal is to find out the community’s opinion on how reasonable it is and what pitfalls the transparent compression approach at the file system level may conceal.

For those who do not want to waste time, I will immediately tell you about the findings. The PostgreSQL database hosted on btrfs with the compress = lzo option reduces the size of the database in two (compared to any FS without compression) and, when using multi-threaded sequential read, significantly reduces the load on the disk subsystem.

Physical server - 1 pc.

So, we have a physical machine with 2 disks: the first one stores the main postgres database (which is after initdb), and the second disk is completely formatted without any markup on it into the tested filesystems (ext4, btrfs lzo / zlib).

The table space from the backup copy, which is involved in testing, made using pg_basebackup, is placed on the test disk. The main postgres database is also restored.

The essence of testing is the sequential reading of five tables - clones in five threads.

The script is extremely simple and is a simple explain explain.

Each table has a size of 13GB, the total volume is ~ 65GB.

We take the data for the charts from sar with the simplest parameters: “sar 1” - CPU ALL; "Sar -d 1" - I / O.

Before each start, reset pagecache using the command:

Check the completion of background processes:

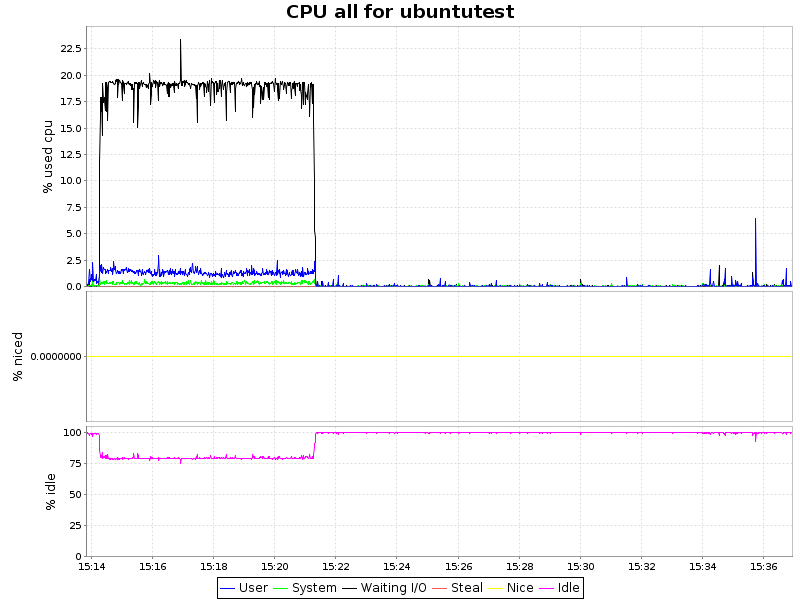

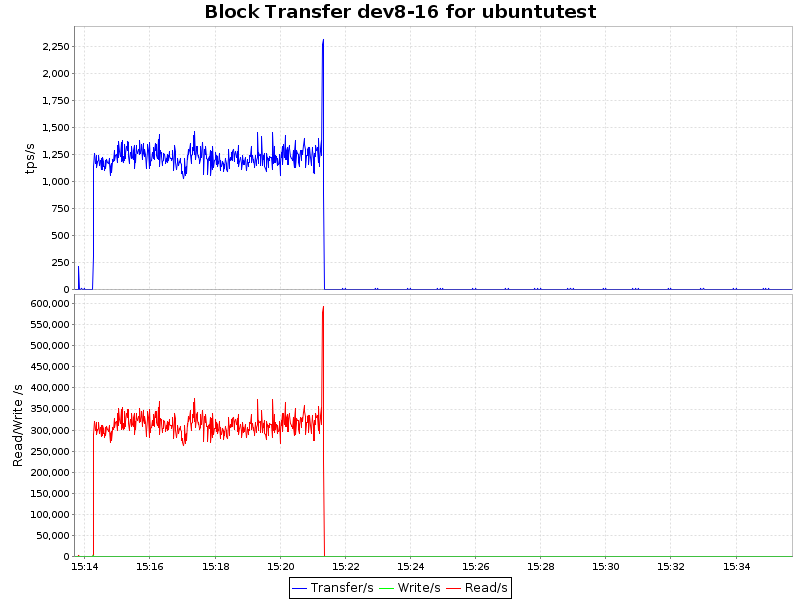

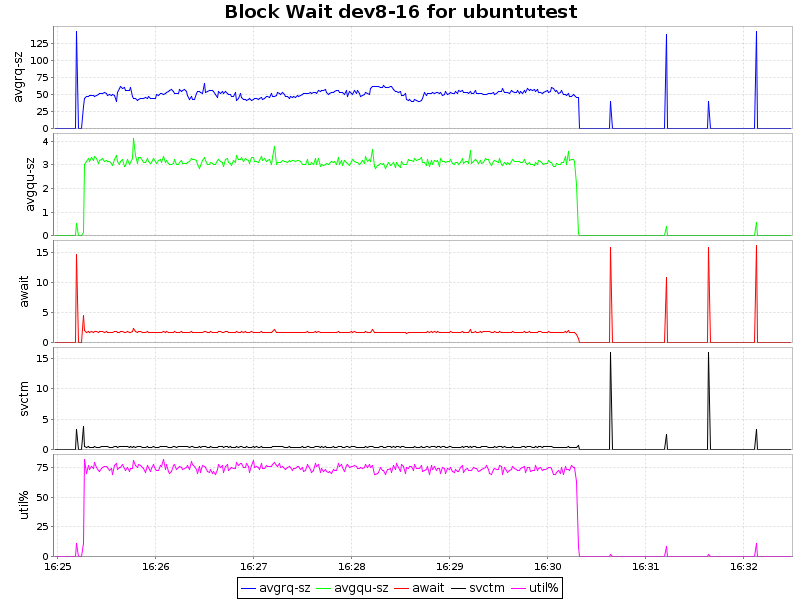

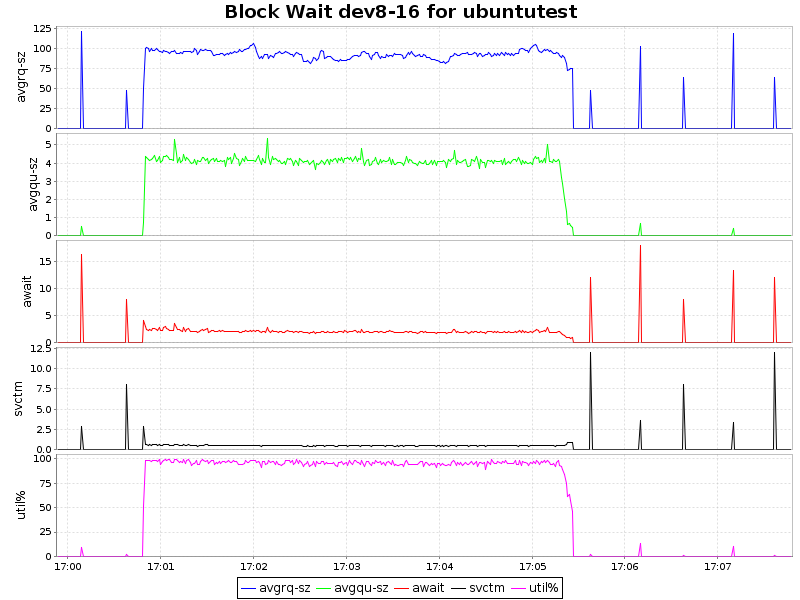

As can be seen from the graphs, compression with the lzo algorithm gives only a small load on the CPU, which, coupled with a 2-fold reduction in the occupied space and some acceleration, makes this approach extremely attractive. Zlib presses our database 4 times, but at the same time the CPU load is already growing significantly (~ 7.5% of CPU time), which is also quite acceptable for certain scenarios. However, btrfs only recently acquired the status of stable (from the kernel 3.10) and it is possible to introduce it into the production environment prematurely. On the other hand, having a synchronous replica solves this issue as well.

As far as I know, zlib and probably lzo use instructions from SSE 4.2, which reduces processor load and it is possible that in some virtualization environments, high processor load will not take advantage of compression.

If someone tells me how to influence this, then I will try to double-check the difference with and without hardware acceleration.

This testing, of course, cannot be called complete, because only reading is involved and that is linear. But the results already make us think about the possible transition to btrfs in certain cases.

But the main goal is to find out the community’s opinion on how reasonable it is and what pitfalls the transparent compression approach at the file system level may conceal.

For those who do not want to waste time, I will immediately tell you about the findings. The PostgreSQL database hosted on btrfs with the compress = lzo option reduces the size of the database in two (compared to any FS without compression) and, when using multi-threaded sequential read, significantly reduces the load on the disk subsystem.

So what's in stock

Physical server - 1 pc.

- CPU: 2 Sockets of 6 cores

- RAM: 48 GB

- Storage:

- 2x - SAS 10K 300GB in RAID 1 + 0 configuration - for OS and main postgres database

- 2x - SAS 10K 300GB in RAID 1 + 0 configuration - for tests

- OS: Ubuntu 14.04.2 - 3.16.0-41

- PG: 9.4.4 x86_64

Testing methodology

So, we have a physical machine with 2 disks: the first one stores the main postgres database (which is after initdb), and the second disk is completely formatted without any markup on it into the tested filesystems (ext4, btrfs lzo / zlib).

The table space from the backup copy, which is involved in testing, made using pg_basebackup, is placed on the test disk. The main postgres database is also restored.

The essence of testing is the sequential reading of five tables - clones in five threads.

The script is extremely simple and is a simple explain explain.

Each table has a size of 13GB, the total volume is ~ 65GB.

We take the data for the charts from sar with the simplest parameters: “sar 1” - CPU ALL; "Sar -d 1" - I / O.

Before each start, reset pagecache using the command:

free && sync && echo 3 > /proc/sys/vm/drop_caches && free

Check the completion of background processes:

SELECT sa.pid, sa.state, sa.query

FROM pg_stat_activity sa;

Figures

Dimensions

| FS | DB size | Disk size | Compression factor |

|---|---|---|---|

| btrfs-zlib | 156GB | 35GB | 4.4 |

| btrfs-lzo | 156GB | 67GB | 2.3 |

| ext4 | 156GB | 156GB | one |

Sequential reading (explain analyze)

| btrfs-zlib | 302000 ms |

| btrfs-lzo | 262000 ms |

| ext4 | 420000 ms |

Graphs

CPU load

| btrfs-zlib |  |

| btrfs-lzo |  |

| ext4 |  |

IO Block Transfer

| btrfs-zlib |  |

| btrfs-lzo |  |

| ext4 |  |

Io wait

| btrfs-zlib |  |

| btrfs-lzo |  |

| ext4 |  |

Conclusion

As can be seen from the graphs, compression with the lzo algorithm gives only a small load on the CPU, which, coupled with a 2-fold reduction in the occupied space and some acceleration, makes this approach extremely attractive. Zlib presses our database 4 times, but at the same time the CPU load is already growing significantly (~ 7.5% of CPU time), which is also quite acceptable for certain scenarios. However, btrfs only recently acquired the status of stable (from the kernel 3.10) and it is possible to introduce it into the production environment prematurely. On the other hand, having a synchronous replica solves this issue as well.

PS

As far as I know, zlib and probably lzo use instructions from SSE 4.2, which reduces processor load and it is possible that in some virtualization environments, high processor load will not take advantage of compression.

If someone tells me how to influence this, then I will try to double-check the difference with and without hardware acceleration.