The Twelve-Factor App

- Translation

Dear readers! I present to you a translation of The Twelve-Factor App, a methodology for developing web applications created by the developers of the Heroku platform. My comments are hidden under spoilers throughout the article.

A twelve-factor application stores configuration in environment variables (often shortened to env vars or env). Environment variables are easy to change between deployments without changing any code. Unlike configuration files, there is little chance of accidentally committing them to the code repository. And unlike custom configuration files or other configuration mechanisms such as Java System Properties, they are a language- and OS-independent standard.

Another aspect of configuration management is grouping. Applications sometimes batch their configuration into named groups (often called “environments”) named after specific deployments, such as

In a twelve-factor application, environment variables are fine-grained controls, each completely independent of the others. They are never grouped together as “environments”. Instead, they are managed independently for each deployment. This model scales up smoothly as the application naturally gains more deployments over its lifetime.
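Here is a rough illustration in Python. It is a minimal sketch of a configuration loader that reads each setting from its own environment variable. The variable names DATABASE_URL, CACHE_URL and CANONICAL_HOST, the "localhost" default and the Config class are assumptions made for illustration, not part of the methodology. Note that there is no single switch that selects a "production" group: each value is looked up independently.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class Config:
    database_url: str
    cache_url: Optional[str]
    canonical_host: str


def load_config(environ: Mapping[str, str] = os.environ) -> Config:
    """Read each setting from its own environment variable.

    There is no "environment" switch (such as a production group) that
    selects a bundle of settings; every value is independent.
    """
    if "DATABASE_URL" not in environ:
        # Fail fast at startup instead of running with a missing resource.
        raise RuntimeError("DATABASE_URL is not set")
    return Config(
        database_url=environ["DATABASE_URL"],
        cache_url=environ.get("CACHE_URL"),                        # optional resource
        canonical_host=environ.get("CANONICAL_HOST", "localhost"),  # assumed default
    )
```

A deployment is then configured entirely from outside the code. For example, you start the process with DATABASE_URL=postgres://user:pass@db-host/app set in its environment. The hostname and credentials here are hypothetical.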

A third-party service is any service the application consumes over the network as part of its normal operation. Examples include datastores (such as MySQL and CouchDB), message queuing systems (such as RabbitMQ and Beanstalkd), SMTP services for outgoing email (such as Postfix), and caching systems (such as Memcached).

Traditionally, third-party services such as databases are managed by the same system administrators who deploy the application. In addition to such locally managed services, an application may also use services provided and managed by a third party. Examples include SMTP services (such as Postmark), metrics-gathering services (such as New Relic and Loggly), binary asset services (such as Amazon S3), and services accessed through an API (such as Twitter, Google Maps, and Last.fm).

The code of a twelve-factor application makes no distinction between local and third-party services. To the application, each of them is a pluggable resource, accessed through a URL or another locator/credentials pair stored in the configuration. A deployment of a twelve-factor application should be able to swap a local MySQL database for one managed by a third party (such as Amazon RDS) without any changes to the application code. Likewise, a local SMTP server can be swapped for a third-party SMTP service (such as Postmark) without code changes. In both cases, only the resource identifier in the configuration needs to change.
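This hypothetical Python helper makes the idea of a resource identifier concrete. It splits a resource URL into its parts using only the standard library's urllib.parse.urlsplit. The URLs, hostnames and the Resource class are made up for illustration. Swapping a local MySQL server for a managed one changes only the string stored in the configuration. The code that consumes the parsed Resource stays the same.

```python
from dataclasses import dataclass
from typing import Optional
from urllib.parse import urlsplit


@dataclass(frozen=True)
class Resource:
    scheme: str
    host: str
    port: Optional[int]
    user: Optional[str]
    password: Optional[str]
    name: str  # e.g. the database name taken from the URL path


def parse_resource_url(url: str) -> Resource:
    """Split a resource URL such as mysql://user:pass@host:3306/app into parts."""
    parts = urlsplit(url)
    if not parts.scheme or not parts.hostname:
        raise ValueError(f"not a resource URL: {url!r}")
    return Resource(
        scheme=parts.scheme,
        host=parts.hostname,
        port=parts.port,  # None when the URL has no explicit port
        user=parts.username,
        password=parts.password,
        name=parts.path.lstrip("/"),
    )
```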

Each distinct third-party service is a resource. For example, a MySQL database is a resource. Two MySQL databases (used for sharding at the application level) qualify as two distinct resources. A twelve-factor application treats these databases as attached resources, which reflects their loose coupling to the deployment they are attached to.

Resources can be attached to and detached from a deployment at will. Suppose the application's database misbehaves because of a hardware problem. The administrator can start a new database server restored from a recent backup. The current production database can then be detached and the new one attached, all without any code changes.

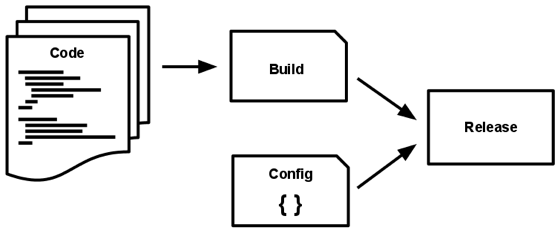

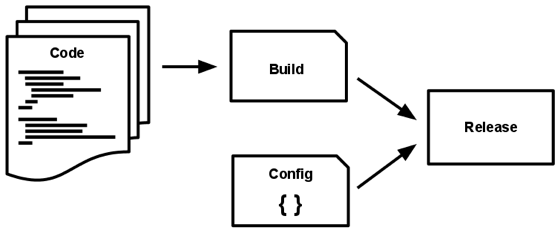

A codebase is transformed into a deployment (excluding development deployments) in three stages:

A twelve-factor application strictly separates the build, release, and run stages. For example, it is impossible to change the code at runtime, since there is no way to propagate those changes back to the build stage.

Deployment tools typically offer release management features, most importantly the ability to roll back to a previous release. For example, the Capistrano deployment tool stores releases in subdirectories of a directory named

Each release must have a unique identifier, such as a release time stamp (e.g.

Builds are initiated by the application's developers whenever new code is deployed. The run stage, by contrast, can start automatically, for example when a server is rebooted or when the process manager restarts a crashed process. The run stage should therefore be kept as technically simple as possible. Problems that prevent the application from starting can occur in the middle of the night, when no developers are available. The build stage can be more complex, since any errors are always visible to the developer who started the deployment.
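The build/release/run separation can be modeled as data. In this hypothetical Python sketch, a release is an immutable pairing of a build identifier with a read-only snapshot of the configuration. Each release gets a timestamp-based ID. Rolling back points to the previous release and modifies nothing. The class names, the ID format and the in-memory storage are assumptions of the sketch. Tools such as Capistrano keep releases on disk instead.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from types import MappingProxyType
from typing import List, Mapping


@dataclass(frozen=True)
class Release:
    release_id: str            # unique; never reused or edited
    build_id: str              # identifies the build artifact, e.g. a commit hash
    config: Mapping[str, str]  # read-only snapshot of the deployment's config


class ReleaseLog:
    """Append-only history of releases; rollback moves back, never mutates."""

    def __init__(self) -> None:
        self._releases: List[Release] = []
        self._current = -1

    def create(self, build_id: str, config: Mapping[str, str], now: datetime) -> Release:
        # Timestamp-based ID (the format is an assumption of this sketch).
        release_id = now.astimezone(timezone.utc).strftime("%Y%m%d%H%M%S")
        if any(r.release_id == release_id for r in self._releases):
            raise ValueError(f"release id {release_id} already exists")
        release = Release(release_id, build_id, MappingProxyType(dict(config)))
        self._releases.append(release)
        self._current = len(self._releases) - 1
        return release

    @property
    def current(self) -> Release:
        if self._current < 0:
            raise LookupError("no releases yet")
        return self._releases[self._current]

    def rollback(self) -> Release:
        if self._current <= 0:
            raise LookupError("no earlier release to roll back to")
        self._current -= 1
        return self._releases[self._current]
```

The config is copied when the release is created, so later changes to the deployment's settings produce a new release rather than silently altering an old one.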

The application is executed in the execution environment as one or more processes.

In the simplest case, the code is an independent script, the runtime is the developer's laptop with the language runtime installed, and the process is launched from the command line (for example, like

The processes of a twelve-factor application are stateless and share-nothing. Any data that needs to persist must be stored in a stateful third-party service, typically a database.

A process's memory and filesystem can be used as a brief, single-transaction cache. One example is downloading a large file, processing it, and saving the result to the database. A twelve-factor application never assumes that anything cached in memory or on disk will be available to a future request or job. With many processes of each type running, the next request is likely to be served by a different process. Even with a single process, a restart wipes out all local (memory and filesystem) state. A restart can be triggered by a code deploy, a configuration change, or the execution environment moving the process to another physical machine.

Asset packagers (such as Jammit or django-compressor) use the filesystem as a cache for compiled assets. A twelve-factor application prefers to do this compilation during the build stage, as the Rails asset pipeline does, rather than at runtime.

Some web systems rely on “sticky sessions”. They cache user session data in the memory of the application process and expect later requests from the same user to be routed to the same process. Sticky sessions are a violation of the twelve-factor methodology and should never be used or relied upon. Session data is a good candidate for a datastore that offers time-based expiration, such as Memcached or Redis.
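Below is a minimal Python sketch of a stateless request handler. It assumes a hypothetical SessionStore interface that a Memcached or Redis client would be wrapped to satisfy. All session data goes through the store, so it does not matter which process handles the next request. FakeStore is an in-memory stand-in for tests only. Using it in a real multi-process deployment would reintroduce exactly the local state this factor forbids.

```python
from typing import Dict, Optional, Protocol


class SessionStore(Protocol):
    """What the application needs from an external store with expiry (e.g. Memcached or Redis)."""

    def get(self, key: str) -> Optional[str]: ...

    def set(self, key: str, value: str, ttl_seconds: int) -> None: ...


class FakeStore:
    """In-memory stand-in for tests only; it ignores expiry."""

    def __init__(self) -> None:
        self.data: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self.data.get(key)

    def set(self, key: str, value: str, ttl_seconds: int) -> None:
        self.data[key] = value


def handle_request(store: SessionStore, session_id: str) -> int:
    """Count a visitor's requests without keeping anything in process memory."""
    key = f"session:{session_id}:visits"
    visits = int(store.get(key) or 0) + 1
    # A real store would expire the key one hour after the last request.
    store.set(key, str(visits), ttl_seconds=3600)
    return visits
```

The read-then-write here is not atomic. With concurrent requests for the same session, an atomic increment provided by the store would be safer.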

Sometimes web applications run inside a web server container. For example, a PHP application can run as a module inside Apache HTTPD, or a Java application can run inside Tomcat.

A twelve-factor application is completely self-contained. It does not rely on a web server being injected into the execution environment at runtime to create a web service. The web application exports HTTP as a service by binding to a port and listening for requests arriving on that port.

During local development, the developer navigates to a URL of the form

This is usually done by using dependency declaration to add a web server library to the application, such as Tornado for Python, Thin for Ruby, or Jetty for Java and other JVM-based languages. This happens entirely in user space, that is, within the application code. The contract with the execution environment is that the application binds to a port to serve requests.
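Here is a minimal self-contained web process in Python. It uses only the standard library's http.server in place of a framework server such as Tornado. The process binds to a port read from a PORT environment variable and serves requests itself, without being injected into an external web server. The PORT variable name, the 5000 default and the handler are assumptions for illustration.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        body = b"hello\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port: int) -> HTTPServer:
    # The process itself binds the port; no external web server is involved.
    return HTTPServer(("0.0.0.0", port), HelloHandler)


def main() -> None:
    # Port number comes from the environment (variable name and default are assumptions).
    port = int(os.environ.get("PORT", "5000"))
    make_server(port).serve_forever()
```

Calling main() with PORT=8080 in the environment serves the response at http://localhost:8080/. The http.server module is fine for a sketch, but its documentation does not recommend it for production use.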

HTTP is not the only service that can be exported through port binding. Almost any kind of server software can run as a process bound to a port, waiting for incoming requests. Examples include ejabberd (which speaks XMPP) and Redis (which speaks the Redis protocol).

Note also that the port-binding approach means one application can act as a third-party service for another. The consuming application simply lists the URL of the providing application as a resource identifier in its configuration.

Once running, any computer program is represented by one or more processes. Web applications have historically used a variety of process-execution models. For example, PHP processes run as child processes of Apache and are started on demand, as many as incoming requests require. Java processes take the opposite approach. The JVM is a single monolithic meta-process that reserves a large block of system resources (CPU and memory) at startup and manages concurrency internally through threads. In both cases, the running processes are only minimally visible to the application's developers.

In a twelve-factor application, processes are first-class entities. They take strong cues from the Unix process model for running service daemons. Using this model, developers can design the application to handle diverse workloads by assigning each type of work to its own process type. For example, HTTP requests can be handled by a web process, while long-running background tasks are handled by a worker process.

Individual processes may still handle their own internal multiplexing. They can do this through threads inside the runtime VM or through the asynchronous/evented model of tools such as EventMachine, Twisted, and Node.js. But an individual VM can only grow so large (vertical scaling), so the application must also be able to run as multiple processes on multiple physical machines.

The process model really shines when it is time to scale out. Twelve-factor processes share nothing and can be partitioned horizontally, so adding more concurrency is a simple and reliable operation. The set of process types, together with the number of processes of each type, is called the process formation.
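One way to picture process types and the process formation is this hypothetical Python sketch. A single codebase exposes several entry points (here web and worker). A process manager starts each process with the name of its type. The formation is just a mapping from process type to process count. The names, the dispatch function and the formation format are illustrative assumptions, not the syntax of any particular tool such as Foreman.

```python
from typing import Callable, Dict


def web() -> str:
    # A real web process would bind to a port and serve HTTP requests.
    return "web: serving HTTP"


def worker() -> str:
    # A real worker would pull long-running jobs from a queue.
    return "worker: processing background jobs"


# Every process type is an entry point into the same codebase.
PROCESS_TYPES: Dict[str, Callable[[], str]] = {"web": web, "worker": worker}


def run(process_type: str) -> str:
    """What a process manager would invoke to start one process of a given type."""
    entry = PROCESS_TYPES.get(process_type)
    if entry is None:
        raise ValueError(f"unknown process type: {process_type!r}")
    return entry()


def scale(formation: Dict[str, int], process_type: str, count: int) -> Dict[str, int]:
    """Return a new formation with `process_type` set to `count` processes."""
    if process_type not in PROCESS_TYPES or count < 0:
        raise ValueError(f"cannot scale {process_type!r} to {count}")
    return {**formation, process_type: count}
```

Scaling out means changing a number in the formation and starting more identical processes, not changing the code.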

Twelve-factor processes should never daemonize or write PID files. Instead, they rely on the operating system's process manager to manage output streams, respond to crashed processes, and handle user-initiated restarts and shutdowns. That process manager can be Upstart, a distributed process manager on a cloud platform, or a tool like Foreman in development.

Twelve-factor processes are disposable: they can be started or stopped at a moment's notice. This enables fast, elastic scaling, rapid deployment of code and configuration changes, and reliable production deployments.

Processes should strive to minimize startup time. Ideally, a process needs only a few seconds from the moment the launch command is executed until it is up and ready to accept requests or jobs. Short startup time gives more flexibility for releases and scaling up. It also improves reliability, because the process manager can more easily move processes to new physical machines when needed.

Processes shut down gracefully when they receive a SIGTERM signal from the process manager. For a web process, graceful shutdown means it stops listening on the service port, which refuses any new requests. It then lets current requests finish and exits. This model assumes that HTTP requests are short (no more than a few seconds). For long-lived requests, the client should seamlessly try to reconnect when the connection is lost.

For a worker process, graceful shutdown means returning the current job to the job queue. For example, in RabbitMQ a worker might send a command

Processes should also be robust against sudden death, for example in the event of hardware failure. Although this is a much less likely event than a graceful shutdown on the signal
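The following is a minimal Python sketch of graceful shutdown for a worker process. The SIGTERM handler only sets a flag. The work loop notices the flag, stops taking new jobs, and puts an unfinished job back on the queue. Here queue.Queue stands in for a real broker such as RabbitMQ. The convention that the job handler returns False when it gives up because of shutdown is an assumption of this sketch.

```python
import queue
import signal
import threading
from typing import Callable

# A job handler gets the job and the shutdown flag; it returns True when the job
# finished and False when it stopped early because shutdown was requested.
JobHandler = Callable[[str, threading.Event], bool]


class Worker:
    """Background worker that shuts down gracefully on SIGTERM."""

    def __init__(self, jobs: "queue.Queue[str]") -> None:
        self.jobs = jobs
        self.stopping = threading.Event()

    def install_signal_handler(self) -> None:
        # Python only allows signal.signal() to be called from the main thread.
        signal.signal(signal.SIGTERM, self.on_sigterm)

    def on_sigterm(self, signum, frame) -> None:
        # Keep the handler trivial: just record that shutdown was requested.
        self.stopping.set()

    def run(self, handle: JobHandler) -> None:
        while not self.stopping.is_set():
            try:
                job = self.jobs.get(timeout=0.1)  # wake up regularly to check the flag
            except queue.Empty:
                continue
            if not handle(job, self.stopping):
                self.jobs.put(job)  # return the unfinished job to the queue
```

Abrupt termination (for example SIGKILL or a hardware failure) bypasses this handler entirely. For that case, a real worker would typically rely on the queueing backend returning unacknowledged jobs when a client disconnects.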

Historically, there have been significant gaps between development (a developer making live changes to a local deployment of the application) and production (a running deployment of the application accessed by end users). These gaps appear in three areas:

A twelve-factor application is designed for continuous deployment by keeping the gap between development and production small. Looking at the three gaps described above:

The table below summarizes the above:

Third-party services, such as databases, message queuing systems, and caches, are one area where development/production parity is important. Many languages offer libraries that simplify access to third-party services, including adapters for different types of services. Some examples are in the table below.

Developers sometimes find it convenient to use lightweight third-party services in their local environment, while more serious and robust ones are used in production. For example, they use SQLite locally and PostgreSQL in production, or process memory for caching in development and Memcached in production.

A twelve-factor developer resists the temptation to use different third-party services in development and production, even when adapters theoretically abstract away the differences. Differences between third-party services mean that tiny incompatibilities appear. Code that worked and passed tests in development or staging then fails in production. Errors of this kind create friction that undermines continuous deployment. Added up over the whole lifetime of an application, this friction and the resulting slowdown of continuous deployment are extremely costly.

Lightweight local services are less compelling than they once were. Modern third-party services such as Memcached, PostgreSQL, and RabbitMQ are not difficult to install and run, thanks to package managers such as Homebrew and apt-get. Alternatively, declarative provisioning tools such as Chef and Puppet, combined with lightweight virtual environments such as Vagrant, let developers run local environments that closely match production. The cost of installing and using these systems is low compared with the benefits of development/production parity and continuous deployment.

Adapters for different third-party services are still useful, because they make porting the application to new third-party services relatively painless. But all deployments of the application (developer environments, staging, and production) should use the same type and the same version of each third-party service.

Logs provide visibility into the behavior of a running application. In server environments, logs are commonly written to a file on disk (a “logfile”), but this is only one output format.

A log is the stream of aggregated, time-ordered events collected from the output streams of all running processes and third-party services. In raw form, a log is usually text with one event per line (although exception backtraces may span several lines). A log has no fixed beginning or end: events flow continuously for as long as the application is running.

A twelve-factor application never concerns itself with routing or storing its output stream. It should not write to or manage log files. Instead, each running process writes its event stream, unbuffered, to standard output.

In staging and production deployments, the execution environment captures the output stream of each process. It merges that stream with all other output streams of the application and routes the result to one or more final destinations for viewing and long-term archiving. These destinations are neither visible to nor configurable by the application. Instead, they are completely managed by the execution environment. Open-source log routers (such as Logplex and Fluent) are available for this purpose.
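In Python, following this factor can be as simple as pointing the standard logging module at standard output, as in this minimal sketch. The format string is an arbitrary choice for illustration. StreamHandler flushes after every record, so each event leaves the process immediately. For plain print() output, setting the PYTHONUNBUFFERED environment variable has a similar effect.

```python
import logging
import sys
from typing import Optional, TextIO


def configure_logging(level: int = logging.INFO,
                      stream: Optional[TextIO] = None) -> logging.Logger:
    """Route every log record to stdout, one event per line; no files, no rotation."""
    handler = logging.StreamHandler(stream if stream is not None else sys.stdout)
    handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(name)s %(message)s"))
    root = logging.getLogger()
    root.handlers[:] = [handler]  # drop any file handlers configured elsewhere
    root.setLevel(level)
    return root
```

Where the stream ends up (a terminal, a log router, an archive) is decided outside the process, by the execution environment.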

An application's event stream can be routed to a file or watched in real time in a terminal. Most importantly, it can be sent to a log indexing and analysis system such as Splunk, or to a general-purpose data storage system such as Hadoop/Hive. These systems offer great power and flexibility for examining an application's behavior over time, including:

The process formation is the set of processes that handle the application's regular work (such as serving web requests) while it runs. Separately, developers often need to perform one-off administration and maintenance tasks for the application, such as:

One-off administration processes should run in an environment identical to the application's regular long-running processes. They run against a release, using the same codebase and configuration as any other process run against that release. Administration code must ship with the application code to avoid synchronization problems.

The same dependency isolation methods should be used for all types of processes. For example, if the Ruby web process uses a command

The twelve-factor methodology favors languages that provide a REPL shell out of the box and make it easy to run one-off scripts. In a local deployment, developers start a one-off administration process with a console command inside the application directory. In a production deployment, developers can use ssh or another remote command execution mechanism provided by the execution environment to start such a process.
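Here is a minimal, hypothetical Python sketch of a one-off admin entry point. The key point is that the admin task lives in the same codebase and reads its configuration the same way the long-running processes do. A migration run against a release therefore sees exactly that release's configuration. The task name migrate, the ADMIN_TASKS registry and the returned string are placeholders. A real task would connect to the database and apply schema changes.

```python
import os
from typing import Callable, Dict, List, Mapping


def database_url(environ: Mapping[str, str]) -> str:
    # The same lookup the web and worker processes use, so admin code
    # always sees the configuration of the release it runs against.
    return environ["DATABASE_URL"]


def migrate(environ: Mapping[str, str]) -> str:
    url = database_url(environ)
    # Placeholder: a real task would connect to `url` and apply pending migrations.
    return f"migrating {url}"


ADMIN_TASKS: Dict[str, Callable[[Mapping[str, str]], str]] = {"migrate": migrate}


def run_admin(argv: List[str], environ: Mapping[str, str] = os.environ) -> str:
    """Entry point for a one-off admin process, e.g. a hypothetical `admin.py migrate`."""
    if not argv or argv[0] not in ADMIN_TASKS:
        raise ValueError(f"unknown admin task: {argv!r}")
    return ADMIN_TASKS[argv[0]](environ)
```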

Corrections and suggestions for better translations can be sent to:

Thanks to amalinin and litchristina for helping with the translation.

Introduction

Nowadays, software is usually delivered as a service, called a web application or software-as-a-service (SaaS). The twelve-factor app is a methodology for building SaaS applications that:

- Use declarative formats to automate setup, minimizing the time and cost for new developers joining the project;

- Have a clean contract with the underlying operating system, offering maximum portability between execution environments;

- Are suitable for deployment on modern cloud platforms, removing the need for servers and system administration;

- Minimize divergence between development and production, enabling continuous deployment for maximum agility;

- And can scale up without significant changes to tooling, architecture, or development practices.

The twelve-factor methodology can be applied to applications written in any programming language that use any combination of third-party services (backing services), such as databases, message queues, and caches.

Background

The contributors to this document have been directly involved in the development and deployment of hundreds of applications. Through our work on the Heroku platform, we have also indirectly witnessed the development, operation, and scaling of hundreds of thousands of applications.

This document synthesizes all of our experience and observations of a wide variety of SaaS applications in the wild. It triangulates ideal practices for application development, paying particular attention to three things. The first is the dynamics of an application's organic growth over time. The second is the dynamics of collaboration between developers working on the application's codebase. The third is avoiding the cost of software erosion.

Our motivation is to raise awareness of some systemic problems we have seen in modern application development. We also want to provide a shared vocabulary for discussing these problems and to offer a set of broad conceptual solutions with accompanying terminology. The format is inspired by Martin Fowler's books Patterns of Enterprise Application Architecture and Refactoring.

Who should read this document?

Developers who build SaaS applications. Ops engineers who deploy and manage such applications.

Twelve factors

I. Codebase

One codebase tracked in a version control system, many deployments

II. Dependencies

Explicitly declare and isolate dependencies

III. Configuration

Store configuration in the environment

IV. Third-Party Services (Backing Services)

Treat third-party services (backing services) as pluggable resources

V. Build, release, run

Strictly separate the build and run stages

VI. Processes

Run the application as one or more stateless processes

VII. Port binding

Export services through port binding

VIII. Concurrency

Scale the application out using the process model

IX. Disposability

Maximize reliability with fast startup and graceful shutdown

X. Development/production parity

Keep development, staging, and production environments as similar as possible

XI. Logging

Treat logs as event streams

XII. Administration tasks

Run administration/management tasks as one-off processes

I. Codebase

One codebase tracked in a version control system, many deployments

A twelve-factor application is always tracked in a version control system such as Git, Mercurial, or Subversion. A copy of the revision tracking database is called a code repository, often shortened to code repo or simply repo.

A codebase is a single repository (in a centralized version control system like Subversion) or a set of repositories that share a root commit (in a decentralized version control system like Git).

There is always a one-to-one correspondence between the codebase and the application:

- If there are several codebases, it is not an application but a distributed system. Each component of a distributed system is an application, and each component can individually comply with the twelve factors.

- Several applications sharing the same code is a violation of the twelve factors. The solution is to extract the shared code into libraries that can be included through the dependency manager.

There is only one codebase per application, but there can be many deployments of the same application. A deployment (deploy) is a running instance of the application. Typically there is a production deployment and one or more staging deployments. In addition, every developer has a copy of the application running in their local development environment, and each of these also counts as a deployment.

The codebase is the same across all deployments, although each deployment may be running a different version of it. For example, a developer may have commits not yet deployed to staging, and staging may have commits not yet deployed to production. Yet all of these deployments share the same codebase, which makes them identifiable as different deployments of the same application.

II. Dependencies

Explicitly declare and isolate dependencies

Most programming languages offer a package manager for distributing libraries, such as CPAN for Perl or Rubygems for Ruby. Libraries installed through a package manager can be installed system-wide (so-called “site packages”) or scoped to the directory containing the application (so-called “vendoring” or “bundling”).

A twelve-factor application never relies on the implicit existence of system-wide packages. It declares all its dependencies, completely and exactly, in a dependency declaration manifest. It also uses a dependency isolation tool during execution to ensure that no implicit dependencies “leak in” from the surrounding system. The full and explicit dependency specification applies equally to development and production.

For example, Bundler for Ruby offers the `Gemfile` manifest format for dependency declaration and `bundle exec` for dependency isolation. Python has two separate tools for these tasks: Pip is used for declaration and Virtualenv for isolation. Even C has Autoconf for dependency declaration, and static linking can provide dependency isolation. Whatever the toolchain, dependency declaration and isolation must always be used together; only one or the other is not enough to satisfy twelve-factor.

One benefit of explicit dependency declaration is that it simplifies setup for developers new to the application. A new developer can check out the application's codebase onto their machine with only the language runtime and the package manager installed as prerequisites, and then set up everything needed to run the code with a deterministic build command. For example, the build command for Ruby/Bundler is `bundle install`, while for Clojure/Leiningen it is `lein deps`.

A twelve-factor app also does not rely on the implicit existence of any system tools, such as shelling out to ImageMagick or `curl`. While these tools may exist on many or even most systems, there is no guarantee that they will exist on every system where the application may run in the future, or that the version found there will be compatible with the application. If the application needs to shell out to a system tool, that tool should be vendored into the application.
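Declaration and isolation are separate concerns, so a small check can show what “declared” means in practice. The following is a minimal Python sketch, assuming a pip-style manifest restricted to exact `name==version` pins; the function name `check_manifest` is hypothetical, and real tools such as pip understand far richer version specifiers. It only reports whether the running environment matches the manifest; it does not provide isolation, which is Virtualenv's job in the Python toolchain.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import List


def check_manifest(lines: List[str]) -> List[str]:
    """Compare exact 'name==version' pins against the installed distributions.

    Returns human-readable problems; an empty list means every declared
    dependency is installed at exactly the pinned version.
    """
    problems = []
    for raw in lines:
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        name, sep, pinned = line.partition("==")
        if not sep:
            # This sketch deliberately ignores ranges such as '>=' or '~='.
            problems.append(f"{line}: only exact '==' pins are handled in this sketch")
            continue
        name, pinned = name.strip(), pinned.strip()
        try:
            installed = version(name)
        except PackageNotFoundError:
            problems.append(f"{name}: declared but not installed")
            continue
        if installed != pinned:
            problems.append(f"{name}: declared {pinned}, installed {installed}")
    return problems
```

Running such a check inside a freshly created virtualenv is one way to notice a dependency that only ever worked because it happened to be installed system-wide.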

III. Configuration

Store configuration in the environment

An application's configuration is everything that is likely to vary between deployments (development environments, staging, production, etc.). This includes:

- Resource handles for the database, cache, and other backing services

- Credentials for external services, such as Amazon S3 or Twitter

- Per-deployment values, such as the canonical hostname of the deployment

Applications sometimes store configuration as constants in the code. This violates the twelve-factor methodology, which requires strict separation of configuration from code. Configuration varies substantially between deployments; code does not.

A litmus test for whether an application has all of its configuration correctly factored out of the code is whether the codebase could be made open source at any moment without compromising any credentials.

Note that this definition of “configuration” does not include internal application configuration, such as `config/routes.rb` in Rails or how code modules are wired together in Spring. This type of configuration does not vary between deployments, so it is best kept in the code.

Another approach to configuration is to use configuration files that are not checked into version control, such as `config/database.yml` in Rails. This is a huge improvement over constants checked into the code repository, but it still has weaknesses: it is easy to check such a file into the repository by mistake; configuration files tend to be scattered in different places and in different formats, which makes it hard to see and manage all the settings in one place; and these formats are usually specific to a particular language or framework.

A twelve-factor app stores configuration in environment variables (often shortened to env vars or env). Env vars are easy to change between deployments without changing any code; unlike configuration files, there is little chance of checking them into the code repository by accident; and unlike custom configuration files or other mechanisms such as Java System Properties, they are a language- and OS-agnostic standard.
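As an illustration, here is a minimal Python sketch of an application reading its configuration from env vars at startup. The variable names `DATABASE_URL`, `S3_BUCKET`, and `CANONICAL_HOST`, the `Config` class, and the fail-fast policy for missing values are assumptions made for this example, not part of the methodology.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class Config:
    database_url: str
    s3_bucket: str
    canonical_host: str


def load_config(environ: Optional[Mapping[str, str]] = None) -> Config:
    """Build the application's configuration from environment variables only.

    Each variable is read on its own; nothing is grouped into named
    "development" or "production" environments.
    """
    env = os.environ if environ is None else environ
    missing = [key for key in ("DATABASE_URL", "S3_BUCKET") if not env.get(key)]
    if missing:
        # Fail fast at startup rather than run half-configured.
        raise RuntimeError(f"missing required config: {', '.join(missing)}")
    return Config(
        database_url=env["DATABASE_URL"],
        s3_bucket=env["S3_BUCKET"],
        canonical_host=env.get("CANONICAL_HOST", "localhost"),  # hypothetical default
    )
```

A developer might run this as `DATABASE_URL=postgres://localhost/app_dev S3_BUCKET=dev-assets python app.py`, while production sets the same variables to different values with no code change.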

Another aspect of configuration management is grouping. Sometimes applications batch configuration into named groups (often called “environments”) named after specific deployments, such as the `development`, `test`, and `production` environments in Rails. This method does not scale cleanly: as more deployments of the application are created, new environment names become necessary, such as `staging` or `qa`. As the project grows further, developers may add their own special environments, such as `joes-staging`, resulting in a combinatorial explosion of configuration that makes managing the application's deployments very brittle.

In a twelve-factor app, env vars are granular controls, each fully independent of the others. They are never grouped together as “environments”, but instead are managed independently for each deployment. This model scales up smoothly as the application naturally expands into more deployments over its lifetime.


IV. Backing services

Treat backing services as attached resources

A backing service is any service the application consumes over the network as part of its normal operation. Examples include datastores (such as MySQL or CouchDB), messaging/queueing systems (such as RabbitMQ or Beanstalkd), SMTP services for outbound email (such as Postfix), and caching systems (such as Memcached).

Backing services such as the database are traditionally managed by the same system administrators who deploy the application. In addition to these locally managed services, an application may also use services provided and managed by third parties. Examples include SMTP services (such as Postmark), metrics-gathering services (such as New Relic or Loggly), binary asset services (such as Amazon S3), and even API-accessible consumer services (such as Twitter, Google Maps, or Last.fm).

The code of a twelve-factor app makes no distinction between local and third-party services. To the application, both are attached resources, accessed via a URL or other locator/credentials stored in the configuration. A deployment of a twelve-factor app should be able to swap a local MySQL database for one managed by a third party (such as Amazon RDS) without any changes to the application's code. Likewise, a local SMTP server could be swapped for a third-party SMTP service (such as Postmark) without code changes. In both cases, only the resource handle in the configuration needs to change.
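To make “accessed via a URL stored in the configuration” concrete, here is a minimal Python sketch that turns a resource URL into connection parameters using only the standard library. The `ResourceHandle` type, the function name, and the hostnames in the usage are hypothetical; many database drivers can also accept such a URL directly.

```python
from dataclasses import dataclass
from typing import Optional
from urllib.parse import unquote, urlsplit


@dataclass(frozen=True)
class ResourceHandle:
    scheme: str
    host: str
    port: Optional[int]
    user: Optional[str]
    password: Optional[str]
    name: str  # e.g. the database name; empty if the URL has no path


def parse_resource_url(url: str) -> ResourceHandle:
    """Turn a resource URL taken from configuration into connection parameters.

    The code is identical whether the URL points at a local MySQL server or
    a managed one; only the configuration value changes.
    """
    parts = urlsplit(url)
    return ResourceHandle(
        scheme=parts.scheme,
        host=parts.hostname or "",
        port=parts.port,
        user=unquote(parts.username) if parts.username else None,
        password=unquote(parts.password) if parts.password else None,
        name=parts.path.lstrip("/"),
    )
```

Swapping `mysql://app:s3cret@localhost:3306/app_dev` for `mysql://app:pw@db.example.com:3306/app_prod` in the configuration changes where the application connects without touching this code.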

Each distinct backing service is a resource. For example, a MySQL database is a resource; two MySQL databases (used for sharding at the application level) qualify as two distinct resources. A twelve-factor app treats these databases as attached resources, which reflects their loose coupling to the deployment they are attached to.

Resources can be attached to and detached from deployments at will. For example, if the application's database misbehaves due to a hardware problem, the administrator might spin up a new database server restored from a recent backup. The current production database could be detached and the new database attached, all without any code changes.

V. Build, release, run

Strictly separate build and run stages

A codebase is transformed into a (non-development) deployment in three stages:

- The build stage is a transform that converts a code repository into an executable bundle known as a build. Using the version of the code at a commit specified by the deployment process, the build stage fetches dependencies and compiles binaries and assets.

- The release stage takes the build produced by the build stage and combines it with the deployment's current configuration. The resulting release contains both the build and the configuration and is ready for immediate execution in the execution environment.

- The run stage (also known as “runtime”) runs the application in the execution environment by launching some set of the application's processes against a selected release.
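The three stages can be modeled as a minimal Python sketch in which a release is an immutable pairing of a build with a snapshot of the configuration. The class and function names are hypothetical, and the build stage is reduced to naming an artifact; a real build would fetch dependencies and compile code.

```python
from dataclasses import dataclass
from types import MappingProxyType
from typing import Mapping


@dataclass(frozen=True)
class Build:
    commit: str    # version of the code chosen by the deployment process
    artifact: str  # hypothetical name of the compiled bundle


@dataclass(frozen=True)
class Release:
    release_id: str
    build: Build
    config: Mapping[str, str]


def build_stage(commit: str) -> Build:
    # A real build stage fetches dependencies and compiles; here we only name the artifact.
    return Build(commit=commit, artifact=f"build-{commit}.tar.gz")


def release_stage(build: Build, config: Mapping[str, str], release_id: str) -> Release:
    # Copy the config and wrap it read-only so the release cannot drift afterwards.
    return Release(release_id, build, MappingProxyType(dict(config)))


def run_stage(release: Release, process_type: str) -> str:
    # The run stage only launches processes from an existing release and
    # never edits code or config; this sketch just describes the launch.
    return f"{process_type} from {release.release_id} ({release.build.commit})"
```

Because both dataclasses are frozen and the config is a read-only copy, any change to code or configuration has to go through `release_stage` again and produce a new release.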

A twelve-factor app uses strict separation between the build, release, and run stages. For example, it is impossible to make changes to the code at run time, since there is no way to propagate those changes back to the build stage.

Deployment tools typically offer release management features, most notably the ability to roll back to a previous release. For example, the Capistrano deployment tool stores releases in subdirectories of a directory named `releases`, and the current release is a symbolic link to the directory of that release. Capistrano's `rollback` command makes it easy to quickly roll back to the previous release.

Every release should have a unique release ID, such as a timestamp of the release (e.g. `2015-04-06-15:42:17`) or an incrementing number (e.g. `v100`). Releases are append-only, and a release cannot be changed once it is created. Any change must create a new release.

Builds are initiated by the application's developers whenever new code is deployed. The run stage, by contrast, can start automatically in cases such as a server reboot or a crashed process being restarted by the process manager. The run stage should therefore be kept as technically simple as possible, since problems that prevent the application from starting can happen in the middle of the night when no developers are available. The build stage can be more complex, since its errors are always visible to the developer who is driving the deployment.
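The Capistrano-style layout described above can be sketched in Python: each release gets its own never-reused directory under `releases`, and `current` is a symlink that is atomically repointed on deploy or rollback. This is an illustrative sketch, not Capistrano's implementation. It assumes a POSIX filesystem with symlink support and release IDs formatted as zero-padded timestamps without colons (for example `20150406154217`), so that sorting by name matches creation order.

```python
import os
from pathlib import Path


def _point_current(base: Path, target: Path) -> None:
    """Atomically repoint base/current at target via a temporary symlink."""
    tmp = base / "current.tmp"
    if tmp.is_symlink() or tmp.exists():
        tmp.unlink()
    tmp.symlink_to(target, target_is_directory=True)
    os.replace(tmp, base / "current")  # rename(2) swaps the link in one step on POSIX


def deploy(base: Path, release_id: str) -> Path:
    """Create a new, never-reused release directory and make it current."""
    release_dir = base / "releases" / release_id
    release_dir.mkdir(parents=True, exist_ok=False)  # append-only: refuse to reuse an ID
    _point_current(base, release_dir)
    return release_dir


def rollback(base: Path) -> Path:
    """Point current at the release created just before the current one."""
    releases = sorted(p.name for p in (base / "releases").iterdir())
    current = (base / "current").resolve().name
    idx = releases.index(current)
    if idx == 0:
        raise RuntimeError("no earlier release to roll back to")
    previous = base / "releases" / releases[idx - 1]
    _point_current(base, previous)
    return previous
```

Rolling back never modifies or deletes a release directory; it only moves the `current` pointer, which is what keeps rollback fast and safe.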

VI. Processes

Execute the application as one or more stateless processes

The application is executed in the execution environment as one or more processes.

In the simplest case, the code is a stand-alone script, the execution environment is a developer's laptop with the language runtime installed, and the process is launched from the command line (for example, `python my_script.py`). At the other extreme, a production deployment of a sophisticated application may use many process types, each instantiated into zero or more running processes.

Twelve-factor processes are stateless and share-nothing. Any data that needs to persist must be stored in a stateful backing service, typically a database.

The memory space or filesystem of a process can be used as a brief, single-transaction cache. For example: downloading a large file, operating on it, and storing the results of the operation in the database. A twelve-factor app never assumes that anything cached in memory or on disk will be available to a future request or job: with many processes of each type running, chances are high that a future request will be served by a different process. Even when only one process is running, a restart (triggered by a code deployment, a configuration change, or the execution environment relocating the process to a different physical location) will usually wipe out all local state (memory and filesystem).

Asset packagers such as Jammit or django-compressor use the filesystem as a cache for compiled assets. A twelve-factor app prefers to do this compilation during the build stage, as the Rails asset pipeline does, rather than at run time.

Some web systems rely on “sticky sessions”, that is, caching user session data in the memory of the application's process and expecting future requests from the same visitor to be routed to the same process. Sticky sessions are a violation of twelve-factor and should never be used or relied upon. Session state data is a good candidate for a datastore that offers time-based expiration, such as Memcached or Redis.
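Here is a minimal Python sketch of the alternative to sticky sessions: the request handler keeps nothing in process memory and reads and writes session data through an expiring key-value store, so any process can serve any request. The `SessionStore` interface, the key format, and the 30-minute TTL are assumptions. `FakeExpiringStore` is an in-memory test double only; a real deployment would use a Memcached or Redis client, whose APIs differ from this simplified interface.

```python
import time
from typing import Callable, Dict, Optional, Protocol, Tuple


class SessionStore(Protocol):
    """What the handler needs from an expiring store such as Memcached or Redis."""

    def get(self, key: str) -> Optional[str]: ...

    def set(self, key: str, value: str, ttl_seconds: int) -> None: ...


class FakeExpiringStore:
    """Test double for this demo only; a deployment would talk to a shared
    backing service here, never keep sessions in the web process."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._data: Dict[str, Tuple[str, float]] = {}

    def get(self, key: str) -> Optional[str]:
        item = self._data.get(key)
        if item is None or item[1] <= self._clock():
            self._data.pop(key, None)  # expired or missing
            return None
        return item[0]

    def set(self, key: str, value: str, ttl_seconds: int) -> None:
        self._data[key] = (value, self._clock() + ttl_seconds)


def handle_request(store: SessionStore, session_id: str) -> int:
    """Stateless handler: all session data lives in the store.

    Returns the visit count for this session.
    """
    key = f"session:{session_id}:visits"
    visits = int(store.get(key) or 0) + 1
    store.set(key, str(visits), ttl_seconds=1800)
    return visits
```

Because `handle_request` holds no state between calls, two consecutive requests for the same session could be served by two different processes and still see the same count.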

VII. Port binding

Export services through port binding

Web applications are sometimes executed inside a web server container. For example, a PHP application might run as a module inside Apache HTTPD, or a Java application might run inside Tomcat.

A twelve-factor app is completely self-contained and does not rely on run-time injection of a web server into the execution environment to create a web-facing service. The web application exports HTTP as a service by binding to a port and listening to requests coming in on that port.

In a local development environment, the developer visits a service URL such as `http://localhost:5000/` to access the service exported by their application. In deployment, a routing layer routes requests from a public-facing hostname to the port-bound web processes.

This is typically implemented by using dependency declaration to add a web server library to the application, such as Tornado for Python, Thin for Ruby, or Jetty for Java and other JVM-based languages. This happens entirely in user space, that is, within the application's code. The contract with the execution environment is binding to a port to serve requests.
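A minimal Python sketch of a self-contained, port-bound web process, using the standard library's `http.server` in place of a library such as Tornado. Reading the port from a `PORT` environment variable with a default of 5000 is an assumption about the contract with the execution environment, and the handler name is hypothetical. `http.server` is fine for illustration but is not intended for production traffic.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Optional


class HelloHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        body = b"hello from a port-bound app\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port: Optional[int] = None) -> HTTPServer:
    """Bind the application itself to a port; no external web server container.

    The port comes from the PORT env var (assumed contract), defaulting to
    5000 for local development.
    """
    if port is None:
        port = int(os.environ.get("PORT", "5000"))
    return HTTPServer(("0.0.0.0", port), HelloHandler)
```

Locally, `make_server().serve_forever()` would serve the application at `http://localhost:5000/`; in deployment, the routing layer forwards public traffic to whatever port the environment assigned.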

HTTP is not the only service that can be exported by port binding. Nearly any kind of server software can be run as a process that binds to a port and awaits incoming requests. Examples include ejabberd (speaking XMPP) and Redis (speaking the Redis protocol).

Note also that the port-binding approach means that one application can become the backing service for another, by providing the URL of the backing application as a resource handle in the configuration of the consuming application.

VIII. Concurrency

Scale out via the process model

Any computer program, once run, is represented by one or more processes. Historically, web applications have taken a variety of process-execution forms. For example, PHP processes run as child processes of Apache, started on demand as needed to serve the volume of incoming requests. Java processes take the opposite approach: the JVM provides one massive meta-process that reserves a large block of system resources (CPU and memory) at startup and manages concurrency internally using threads. In both cases, the running processes are only minimally visible to the application's developers.

In a twelve-factor app, processes are first-class citizens. Processes in a twelve-factor app take strong cues from the Unix process model for running service daemons. Using this model, developers can architect their application to handle diverse workloads by assigning each type of work to a process type. For example, HTTP requests may be handled by a web process, and long-running background tasks by a worker process.

This does not prevent individual processes from handling their own internal multiplexing, via threads inside the runtime VM or the async/evented model found in tools such as EventMachine, Twisted, and Node.js. But an individual VM can only grow so large (vertical scaling), so the application must also be able to span multiple processes running on multiple physical machines.

The process model truly shines when it comes time to scale out. The share-nothing, horizontally partitionable nature of twelve-factor processes means that adding more concurrency is a simple and reliable operation. The array of process types and the number of processes of each type is known as the process formation.
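The process formation is just data: a mapping from process type to process count. The Python sketch below expands such a mapping into individual process instances named `type.N`, similar to how tools like Foreman label their output. The function names are hypothetical, and actually starting the processes is left to the process manager.

```python
from typing import Dict, List


def expand_formation(formation: Dict[str, int]) -> List[str]:
    """List the individual processes for a formation such as {"web": 2, "worker": 1}."""
    names: List[str] = []
    for process_type, count in sorted(formation.items()):
        if count < 0:
            raise ValueError(f"negative count for {process_type}")
        names.extend(f"{process_type}.{i}" for i in range(1, count + 1))
    return names


def scale(formation: Dict[str, int], process_type: str, count: int) -> Dict[str, int]:
    """Scaling out is just a new count for one process type; no code changes."""
    return {**formation, process_type: count}
```

For example, scaling `{"web": 2, "worker": 1}` to three workers yields the instances `web.1`, `web.2`, `worker.1`, `worker.2`, and `worker.3`, while the original formation is left untouched.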

Twelve-factor processes should never daemonize or write PID files. Instead, they rely on the operating system's process manager (such as Upstart, a distributed process manager on a cloud platform, or a tool like Foreman in development) to manage output streams, respond to crashed processes, and handle user-initiated restarts and shutdowns.

IX. Disposability

Maximize robustness with fast startup and graceful shutdown

The processes of a twelve-factor app are disposable, meaning they can be started or stopped at a moment's notice. This enables fast elastic scaling, rapid deployment of code and configuration changes, and robust production deployments.

Processes should strive to minimize startup time. Ideally, a process takes only a few seconds from the moment the launch command is executed until it is up and ready to receive requests or jobs. Short startup time gives more agility to the release process and to scaling up, and it improves robustness, because the process manager can more easily move processes to new physical machines when needed.

Processes shut down gracefully when they receive a SIGTERM signal from the process manager. For a web process, graceful shutdown is achieved by ceasing to listen on the service port (thereby refusing any new requests), allowing any current requests to finish, and then exiting. Implicit in this model is that HTTP requests are short (no more than a few seconds); in the case of long polling, the client should seamlessly attempt to reconnect when the connection is lost.
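A minimal Python sketch of graceful shutdown for a web process, assuming a POSIX system where the process manager sends `SIGTERM`. The server runs in a background thread; on `SIGTERM` the main thread stops the accept loop, lets the request already being handled finish (the plain `HTTPServer` handles one request at a time), and then closes the listening socket. The names `OkHandler` and `run_until_sigterm` are hypothetical.

```python
import signal
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class OkHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        body = b"ok\n"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def run_until_sigterm(server: HTTPServer) -> None:
    """Serve requests until SIGTERM arrives, then shut down cleanly.

    Must be called from the main thread, since only it may install signal handlers.
    """
    stop = threading.Event()
    signal.signal(signal.SIGTERM, lambda signum, frame: stop.set())
    serving = threading.Thread(target=server.serve_forever)
    serving.start()
    # Wake up periodically so the Python-level signal handler gets a chance to run.
    while not stop.wait(timeout=0.5):
        pass
    server.shutdown()      # exit serve_forever(); a request already in progress finishes first
    serving.join()
    server.server_close()  # close the listening socket
```

In deployment the process manager sends the signal; the test below simulates that by sending `SIGTERM` to its own process after one request has been served.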

For a worker process, graceful shutdown is achieved by returning the current job to the work queue. For example, on RabbitMQ the worker can send a `NACK`; on Beanstalkd, the job is returned to the queue automatically whenever a worker disconnects. Lock-based systems such as Delayed Job need to be sure to release their lock on the job. Implicit in this model is that all jobs are reentrant, which is typically achieved by wrapping the results in a transaction or by making the operation idempotent.

Processes should also be robust against sudden death, in the case of a failure in the underlying hardware. While this is much less common than a graceful shutdown with `SIGTERM`, it can still happen. A recommended approach is to use a robust queueing backend, such as Beanstalkd, that returns jobs to the queue when clients disconnect or time out. Either way, a twelve-factor app is architected to handle unexpected, non-graceful terminations. Crash-only design takes this concept to its logical conclusion.

X. Development/production parity

Keep your development, staging, and production environments as similar as possible.

Historically, there have been substantial gaps between development (a developer making live edits to a local deployment of the application) and production (a running deployment of the application accessed by end users). These gaps show up in three areas:

- The time gap: a developer may work on code that takes days, weeks, or even months to reach production.

- The personnel gap: developers write code, ops engineers deploy it.

- The tools gap: developers may use a stack such as Nginx, SQLite, and OS X, while the production deployment uses Apache, MySQL, and Linux.

A twelve-factor app is designed for continuous deployment by keeping the gap between development and production small. Looking at the three gaps described above:

- Make the time gap small: a developer may write code and have it deployed hours or even just minutes later.

- Make the personnel gap small: developers who wrote the code are closely involved in deploying it and in watching its behavior in production.

- Make the tools gap small: keep development and production as similar as possible.

Summarizing the above in a table:

| | Traditional app | Twelve-factor app |
|---|---|---|
| Time between deploys | Weeks | Hours |
| Code author / deployer | Different people | Same people |
| Development / production environments | Divergent | As similar as possible |

Backing services, such as the application's database, queueing system, or cache, are one area where development/production parity is important. Many languages offer libraries that simplify access to backing services, including adapters for different types of services. Some examples are in the table below.

| Type | Language | Library | Adapters |
|---|---|---|---|
| Database | Ruby/Rails | ActiveRecord | MySQL, PostgreSQL, SQLite |
| Message queue | Python/Django | Celery | RabbitMQ, Beanstalkd, Redis |
| Cache | Ruby/Rails | ActiveSupport::Cache | Memory, filesystem, Memcached |

Developers sometimes find it appealing to use a lightweight backing service in their local environment while a more serious and robust backing service is used in production. For example, they use SQLite locally and PostgreSQL in production, or local process memory for caching in development and Memcached in production.

A twelve-factor developer resists the urge to use different backing services in development and production, even when adapters theoretically abstract away any differences between them. Differences between backing services mean that tiny incompatibilities crop up, causing code that worked and passed tests in development or staging to fail in production. These errors create friction that discourages continuous deployment. The cost of this friction, and of the resulting slowdown in continuous deployment, is extremely high when added up over the lifetime of an application.
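One concrete example of such a tiny incompatibility: by default SQLite uses type affinity rather than strict column types, so it stores a non-numeric string in an `INTEGER` column, whereas PostgreSQL rejects the same insert with an error. The Python sketch below uses the standard `sqlite3` module to show this; the table and function names are hypothetical.

```python
import sqlite3


def store_quantity(conn: sqlite3.Connection, value) -> str:
    """Insert a value into an INTEGER column and report how SQLite stored it."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders (quantity INTEGER)")
    conn.execute("DELETE FROM orders")
    conn.execute("INSERT INTO orders (quantity) VALUES (?)", (value,))
    (stored_type,) = conn.execute("SELECT typeof(quantity) FROM orders").fetchone()
    return stored_type


conn = sqlite3.connect(":memory:")
print(store_quantity(conn, 3))        # integer
print(store_quantity(conn, "3"))      # integer: the text is converted by column affinity
print(store_quantity(conn, "three"))  # text: accepted here, rejected by PostgreSQL
```

A test suite running against SQLite would happily accept the last insert, and the bug would only surface once the same code met PostgreSQL in production.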

Lightweight local services are less compelling than they once were. Modern backing services such as Memcached, PostgreSQL, and RabbitMQ are not difficult to install and run thanks to modern package managers such as Homebrew and apt-get. In addition, declarative provisioning tools such as Chef and Puppet, combined with lightweight virtual environments such as Vagrant, allow developers to run local environments that closely approximate production. The cost of installing and using these systems is low compared to the benefit of development/production parity and continuous deployment.

Adapters for different backing services are still useful, because they make porting to new backing services relatively painless. But all deployments of the application (developer environments, staging, production) should use the same type and the same version of each backing service.

Translator's comments

Today, Docker and tools built on it, such as Docker Compose, can be used to keep environments as similar as possible.

XI. Logs

Treat logs as event streams

Logs provide visibility into the behavior of a running application. In server-based environments they are commonly written to a file on disk (a “logfile”), but this is only one possible output format.

Logs are the stream of aggregated, time-ordered events collected from the output streams of all running processes and backing services. In raw form, logs are typically text with one event per line (though exception backtraces may span multiple lines). Logs have no fixed beginning or end; they flow continuously as long as the application is running.

A twelve-factor app never concerns itself with routing or storing its output stream. It should not attempt to write to or manage logfiles. Instead, each running process writes its event stream, unbuffered, to `stdout`. During local development, the developer watches this stream in the foreground of their terminal to observe the application's behavior.

In staging and production deployments, each process's stream is captured by the execution environment, collated with all other streams from the application, and routed to one or more final destinations for viewing and long-term archiving. These destinations are not visible to or configurable by the application; they are managed entirely by the execution environment. Open-source log routers (such as Logplex and Fluentd) are available for this purpose.
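In Python, following this rule can be as simple as attaching a single `StreamHandler` that writes to `stdout`. The sketch below is one way to do it under stated assumptions: the log format is arbitrary, and replacing all root handlers assumes nothing else in the process needs its own handler. `StreamHandler` flushes after every record, so events are not held back in a buffer even when `stdout` is a pipe.

```python
import logging
import sys
from typing import Optional, TextIO


def configure_logging(stream: Optional[TextIO] = None, level: int = logging.INFO) -> logging.Logger:
    """Send every log event to one output stream (stdout by default), one event per line.

    The application never opens, rotates, or ships logfiles; the execution
    environment captures this stream and routes it.
    """
    handler = logging.StreamHandler(stream or sys.stdout)  # flushes after each record
    handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(name)s %(message)s"))
    root = logging.getLogger()
    root.handlers[:] = [handler]  # drop any file handlers a framework may have added
    root.setLevel(level)
    return root
```

For output written with plain `print`, running the interpreter with `python -u` or `PYTHONUNBUFFERED=1` serves the same purpose.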

The event stream of an application can be routed to a file or watched in real time in a terminal. Most significantly, it can be sent to a log indexing and analysis system such as Splunk, or to a general-purpose data warehousing system such as Hadoop/Hive. These systems offer great power and flexibility for introspecting an application's behavior over time, including:

- Search for specific events in the past.

- Large-scale trend charts (e.g., requests per minute).

- Active alerting according to user-defined heuristics (for example, an alert when the number of errors per minute exceeds a certain threshold).
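The alerting rule in the last item can be sketched as a count of error events per minute. This minimal Python example assumes a hypothetical line format of an ISO-8601 UTC timestamp followed by a level; in practice such rules are configured in the log analysis system rather than written into the application.

```python
from collections import Counter
from typing import Iterable, List


def minutes_over_threshold(lines: Iterable[str], threshold: int) -> List[str]:
    """Return the minutes whose ERROR count exceeds the threshold.

    Assumes each event line looks like "2015-04-06T15:42:17Z ERROR message".
    """
    errors_per_minute: Counter = Counter()
    for line in lines:
        parts = line.split(maxsplit=2)
        if len(parts) < 2 or parts[1] != "ERROR":
            continue  # skip blank lines and non-error events
        minute = parts[0][:16]  # "2015-04-06T15:42"
        errors_per_minute[minute] += 1
    return sorted(m for m, n in errors_per_minute.items() if n > threshold)
```

An alerting system would evaluate a rule like this continuously over the incoming stream and notify someone whenever the returned list is non-empty.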

Translator's comments

Third-party log storage and indexing services:


- Papertrail papertrailapp.com

- Loggly www.loggly.com

- Logentries logentries.com

There is also a powerful and highly configurable stack for self-hosting: Elasticsearch, Logstash, Kibana (ELK).

XII. Admin processes

Run administration/management tasks as one-off processes

The process formation is the set of processes used to do the application's regular business (such as handling web requests) as it runs. Separately, developers often need to perform one-off administrative or maintenance tasks for the application, such as:

- Running database migrations (for example, `manage.py migrate` in Django, `rake db:migrate` in Rails).

- Running a console (also known as a REPL shell) to run arbitrary code or inspect the application's models against the live database. Most languages provide a REPL by running the interpreter without any arguments (for example, `python` or `perl`), and in some cases have a separate command (for example, `irb` for Ruby, `rails console` for Rails).

- Running one-off scripts committed to the application's repository (for example, `php scripts/fix_bad_records.php`).

One-off admin processes should be run in an environment identical to the application's regular long-running processes. They run against a release, using the same codebase and configuration as any other process run against that release. Admin code must ship with the application code to avoid synchronization problems.
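A minimal Python sketch of an admin task that ships with the application code and reaches the database through the same code path and configuration as the long-running processes. It uses SQLite only to stay self-contained; the `DATABASE_PATH` variable, the `users` table, and both function names are hypothetical, loosely modeled on the `scripts/fix_bad_records.php` example.

```python
import os
import sqlite3
from typing import Mapping, Optional


def open_db(environ: Optional[Mapping[str, str]] = None) -> sqlite3.Connection:
    """Shared application code: web processes and admin scripts both connect
    through here, using the same configuration from the environment."""
    env = os.environ if environ is None else environ
    return sqlite3.connect(env["DATABASE_PATH"])


def fix_bad_records(conn: sqlite3.Connection, dry_run: bool = False) -> int:
    """One-off maintenance task: remove users whose email is NULL or empty.

    Returns the number of matching rows; with dry_run=True nothing is deleted.
    """
    (count,) = conn.execute(
        "SELECT COUNT(*) FROM users WHERE email IS NULL OR email = ''"
    ).fetchone()
    if not dry_run:
        with conn:  # commit on success, roll back on error
            conn.execute("DELETE FROM users WHERE email IS NULL OR email = ''")
    return count
```

Such a task would be started as a one-off process with the same interpreter and environment as the web process (for example, through the application's virtualenv), and `dry_run=True` makes it possible to check its effect first.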

The same dependency isolation techniques should be used for all process types. For example, if the Ruby web process uses the command `bundle exec thin start`, then a database migration should use `bundle exec rake db:migrate`. Likewise, a Python program using Virtualenv should use the vendored `bin/python` both to run the Tornado web server and to run any `manage.py` admin processes.

The twelve-factor methodology strongly favors languages that provide a REPL shell out of the box and make it easy to run one-off scripts. In a local deployment, developers invoke one-off admin processes with a direct shell command inside the application's checkout directory. In a production deployment, developers can use ssh or another remote command execution mechanism provided by that deployment's execution environment to run such a process.

Bug fixes and better translations can be sent:

- As a comment here

- As a private message

- As a pull request on GitHub

- As a pull request to the official repository (once the translation is merged into the main branch)

Thanks to amalinin and litchristina for helping with the translation.