A chatbot built on neural networks

I recently came across an article. As it turned out, a company with the telling name "Nanosemantics" had announced a competition for Russian-language chatbots, pompously calling it a "Turing Test". Personally, I take a dim view of such initiatives. A chatbot, a program that simulates conversation, is as a rule not a smart creation: it is built on prepared templates. Competitions like this do not advance science, but they do guarantee a show and public attention. They create fertile ground for speculation about intelligent computers and great breakthroughs in artificial intelligence, which is extremely far from the truth. That is especially so in this case, where the only bots accepted are those written for a template-matching engine, and Nanosemantics' own engine at that.

However, criticizing others is always easy; making something that works is harder. I was curious whether a chatbot could be built not by filling in response templates by hand, but by training a neural network on sample dialogues. A quick Internet search turned up nothing useful, so I decided to run a couple of quick experiments and see what happens.

An ordinary chatbot is limited to superficial answers defined by its predefined templates. A trainable chatbot (these exist too) looks up answers to similar questions in its database of dialogues, and sometimes even creates new templates. All the same, its "mind" is usually strictly bounded from above by the given algorithm. A bot built on a neural network that works on the principle of question in, answer out is, in theory, not limited in this way. There are papers showing that neural networks can learn rules of logical inference from natural-language input, as well as answer questions about various texts. We will not be that ambitious for now, and will simply try to get something resembling the answers of a typical chatbot.

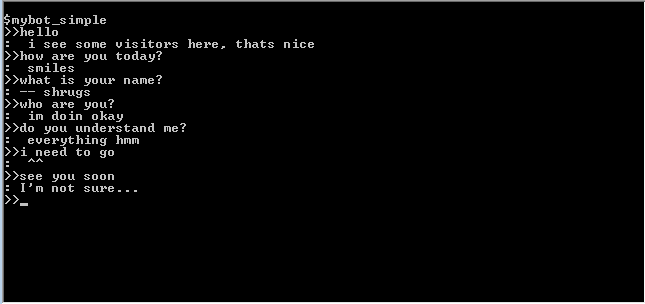

The only source data I could find quickly was a transcript of a dialogue in English, consisting of 3860 utterances. That is fine for an experiment, because with our approach the language makes no fundamental difference. To begin with, so as to have something to compare against, I hastily put together a simple chatbot that picks ready-made answers from the transcript based on word overlap with the interlocutor's phrase (measured by the cosine of the angle between the question and answer vectors, which are bags of words). The whole program fit in about 30 lines:

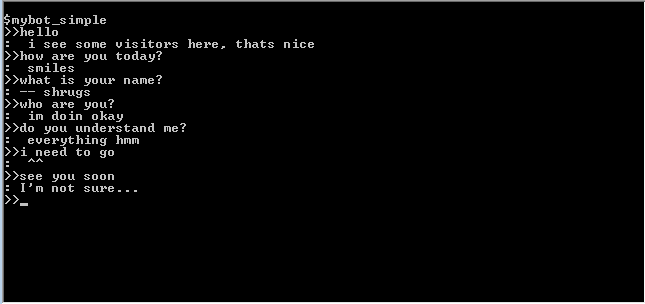

Here's what happened:

Approximate translation (H: human, P: program):

H: hello

P: I see visitors here, that's good

H: how are you?

P: smiles

H: what is your name?

P: shrugs

H: who are you?

P: I'm fine.

H: do you understand me?

P: Absolutely everything, hmm ...

H: I have to go

P: ^^

H: see you later

P: I'm not sure

On superficial questioning it answers pretty well for such a simple program. True, it does not know its own name or who it is ... but nonetheless a certain sense of presence develops. It is a good illustration of how prone we are to over-animating really simple algorithms (or maybe 90% of all communication is simply superficial?).

Let us now turn to more complex algorithms. I should say right away that more complex here does not immediately mean better: template chatbots are created with the aim of "deceiving" the user and use various tricks to create the illusion of communication, whereas our task is to do without cheating.
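For reference, the selection rule behind this baseline bot can be sketched in a few lines. This is not the original program, only a minimal illustration under assumptions: the toy transcript, the tokenizing regular expression and the function names are all hypothetical, and the sketch compares the user's phrase with each transcript line and returns the line that followed the best match.

```python
import math
import re
from collections import Counter

def bag_of_words(text):
    """Lowercase the text and count its word tokens."""
    return Counter(re.findall(r"[a-z']+", text.lower()))

def cosine(a, b):
    """Cosine of the angle between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def build_pairs(utterances):
    """Pair every utterance with the one that follows it in the transcript."""
    return [(bag_of_words(q), a) for q, a in zip(utterances, utterances[1:])]

def reply(pairs, phrase):
    """Return the transcript line that followed the most similar utterance."""
    query = bag_of_words(phrase)
    _, best_answer = max(pairs, key=lambda pair: cosine(query, pair[0]))
    return best_answer

# Hypothetical toy transcript; the article used 3860 real utterances.
transcript = [
    "hello there",
    "hi, nice to meet you",
    "how are you today",
    "i am fine",
    "see you later",
    "bye",
]
pairs = build_pairs(transcript)
print(reply(pairs, "hello"))
print(reply(pairs, "how are you"))
```

Nothing here models meaning: the bot only measures word overlap, which is why it can answer "who are you?" with "I'm fine".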

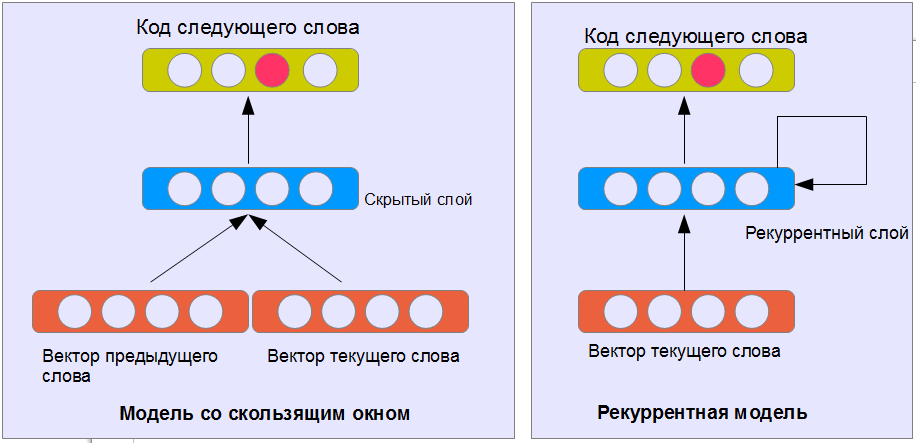

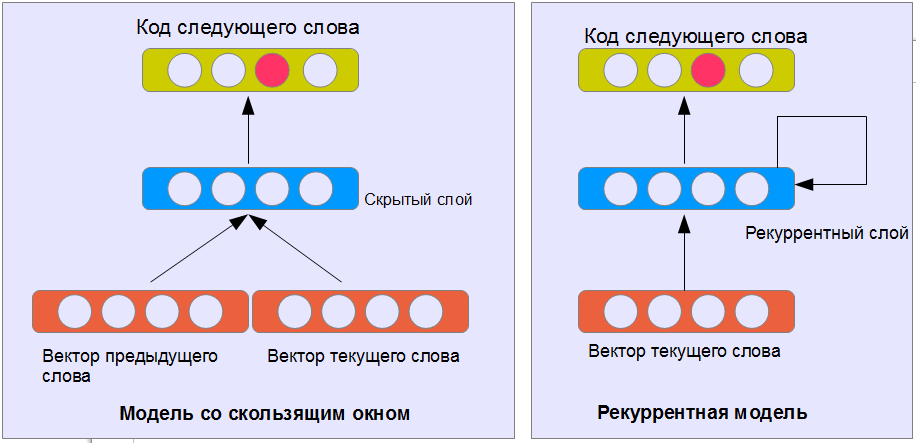

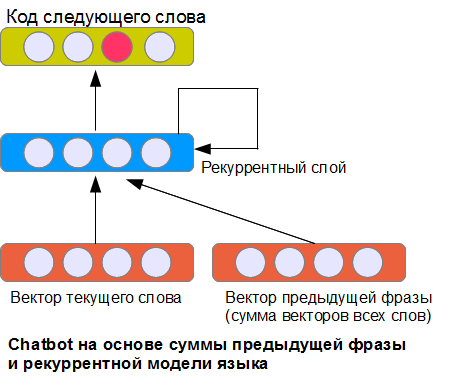

How can text be generated with a neural network? The classic approach these days is the neural language model. The idea is that the network is given the task of predicting the next word from the n previous ones. Output words are encoded on the principle of one output neuron per word (see fig.). Input words can be encoded the same way, or with a distributed representation: word vectors in a space where words close in meaning lie closer together than words with different meanings.

A trained network can be given the beginning of a text and asked to predict its continuation: the predicted word is appended to the end, and the network is applied again to the new, longer text. This way you can build a response model. One problem: the answers have nothing to do with the interlocutor's phrases.
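To make the encoding concrete, here is a minimal sketch of how training examples for such a model could be prepared: each example pairs the n previous words with the next word, all one-hot encoded. The helper names and the toy sentence are assumptions for illustration, not taken from the original experiment.

```python
def build_vocab(tokens):
    """Assign each distinct word an index in order of first appearance."""
    vocab = {}
    for token in tokens:
        vocab.setdefault(token, len(vocab))
    return vocab

def one_hot(index, size):
    """One position per word: 1.0 at the word's index, 0.0 elsewhere."""
    vector = [0.0] * size
    vector[index] = 1.0
    return vector

def training_examples(tokens, vocab, n):
    """Yield (n previous words, next word) pairs, both one-hot encoded."""
    size = len(vocab)
    for i in range(n, len(tokens)):
        context = [one_hot(vocab[w], size) for w in tokens[i - n:i]]
        target = one_hot(vocab[tokens[i]], size)
        yield context, target

# Hypothetical toy text.
tokens = "i am fine and i am here".split()
vocab = build_vocab(tokens)
examples = list(training_examples(tokens, vocab, n=2))
print(len(vocab), len(examples))
```

With a distributed representation, the context vectors would be replaced by dense word vectors, while the target would normally stay one-hot.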
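The generation loop itself is simple and can be sketched independently of the network. In the sketch below a table of counts stands in for the trained network (an assumption made only so the example runs without any training code), and the `END` marker and function names are hypothetical.

```python
from collections import Counter, defaultdict

END = "</s>"  # hypothetical end-of-utterance marker

def train_counts(sentences, n=2):
    """Stand-in for a trained network: count what follows each n-word context."""
    table = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split() + [END]
        for i in range(n, len(tokens)):
            table[tuple(tokens[i - n:i])][tokens[i]] += 1
    return table

def predict_next(table, context):
    """Return the most frequent continuation, or END for an unseen context."""
    followers = table.get(tuple(context))
    return followers.most_common(1)[0][0] if followers else END

def generate(table, start, n=2, max_words=20):
    """Extend the text one predicted word at a time."""
    text = list(start)
    while len(text) < max_words:
        word = predict_next(table, text[-n:])
        if word == END:
            break
        text.append(word)  # the prediction becomes part of the next context
    return " ".join(text)

table = train_counts(["i am not sure about that", "you are here"])
print(generate(table, ["i", "am"]))
```

Note that `generate` only sees the start of its own answer. Nothing about the interlocutor's phrase enters the prediction, which is exactly the problem described above.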

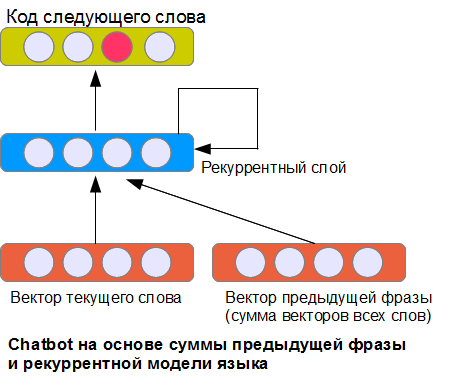

An obvious solution is to feed a representation of the previous phrase to the input. How? We examined two of the many possible ways in a previous article on sentence classification. The simplest option, NBoW, is to use the sum of the word vectors of the previous phrase.
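A minimal sketch of the NBoW representation, assuming a dictionary of pretrained word vectors is available. The three-dimensional vectors below are made up for illustration.

```python
def nbow(phrase, embeddings, dim):
    """Sum the vectors of all known words in the phrase (NBoW)."""
    total = [0.0] * dim
    for word in phrase.lower().split():
        vector = embeddings.get(word)
        if vector is None:
            continue  # unknown words contribute nothing
        total = [t + v for t, v in zip(total, vector)]
    return total

# Hypothetical 3-dimensional embeddings, for illustration only.
embeddings = {
    "how": [0.5, 0.0, 1.0],
    "are": [0.0, 1.0, 0.0],
    "you": [1.0, 0.5, 0.0],
}
print(nbow("How are you", embeddings, dim=3))
print(nbow("you are how", embeddings, dim=3))
```

Both calls give the same vector: a sum ignores word order, which is one reason this representation may not be expressive enough.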

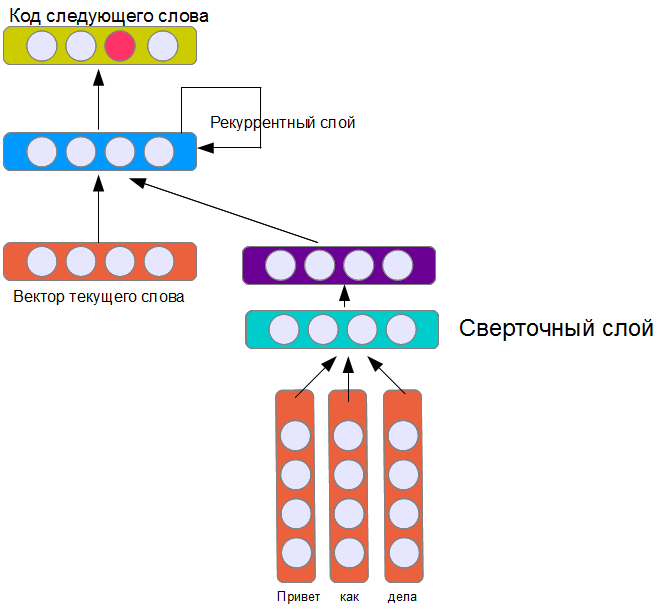

The architecture shown in the picture is far from the only possible one, but it is perhaps one of the simplest. The recurrent layer receives as input the current word, a vector representing the previous phrase, and its own state from the previous step (which is why it is called recurrent). Thanks to this, the network can (in theory) remember information about earlier words over a phrase of unlimited length, in contrast to an implementation that only takes into account words from a fixed-size window. In practice, of course, such a layer is difficult to train.
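One step of such a recurrent layer can be sketched as follows. This is a generic Elman-style update, h_t = tanh(W x_t + V c + U h_{t-1}), assumed here for illustration and not necessarily the exact layer in the picture. The weights are random and untrained, and the class and variable names are hypothetical.

```python
import math
import random

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

class RecurrentLayer:
    """Elman-style layer: h_t = tanh(W x_t + V c + U h_{t-1})."""

    def __init__(self, word_dim, context_dim, hidden_dim, seed=0):
        rng = random.Random(seed)
        self.W = random_matrix(hidden_dim, word_dim, rng)     # current word
        self.V = random_matrix(hidden_dim, context_dim, rng)  # previous phrase
        self.U = random_matrix(hidden_dim, hidden_dim, rng)   # previous state
        self.hidden_dim = hidden_dim

    def step(self, word_vec, context_vec, prev_state):
        parts = zip(matvec(self.W, word_vec),
                    matvec(self.V, context_vec),
                    matvec(self.U, prev_state))
        return [math.tanh(a + b + c) for a, b, c in parts]

    def run(self, word_vecs, context_vec):
        """Feed the words one by one, carrying the state between steps."""
        state = [0.0] * self.hidden_dim
        for word_vec in word_vecs:
            state = self.step(word_vec, context_vec, state)
        return state

layer = RecurrentLayer(word_dim=3, context_dim=3, hidden_dim=4)
hello = [1.0, 0.0, 0.0]
there = [0.0, 1.0, 0.0]
previous_phrase = [1.5, 1.5, 1.0]  # e.g. an NBoW sum of the last utterance
state = layer.run([hello, there], previous_phrase)
print(len(state))
```

Because the state is carried from step to step, the final state depends on the order of the words, unlike a plain sum of word vectors.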

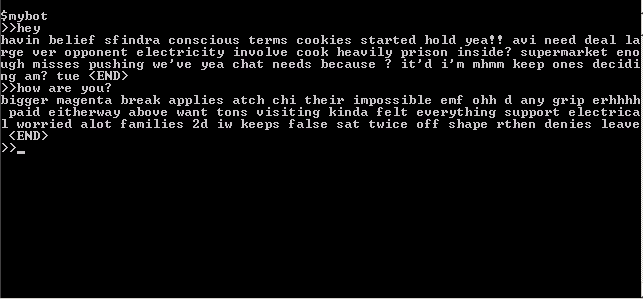

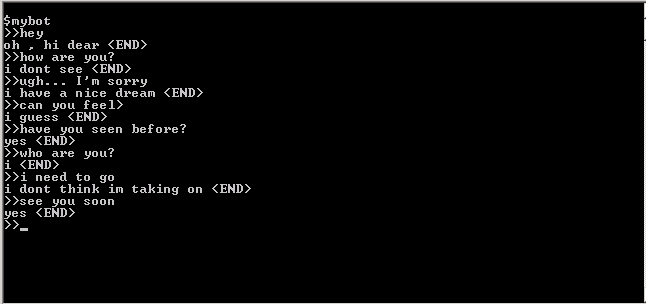

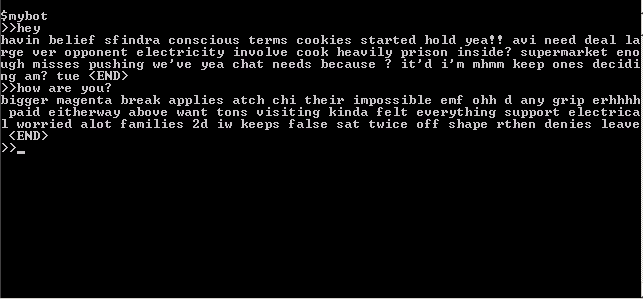

After training the network, the following happened:

Hmm ... a more or less meaningless set of words. There is some connection between words and some logic in sentence construction, but there is no overall sense, and no connection with the questions either (at least I don't see it). In addition, the bot is overly talkative: words pile up into long chains. There are many reasons for this: the small size of the training sample (about 15,000 words); the difficulty of training the recurrent network, which in reality sees a context of only two or three words and so easily loses the thread; and the insufficiently expressive representation of the previous phrase. This was all expected, and I included this variant to show that the problem cannot be solved head-on. With the right choice of training algorithm and network parameters more interesting results can in fact be achieved, but they still suffer from problems such as repeating the same phrases over and over, difficulty choosing where a sentence should end, copying long fragments from the training sample, and so on. In addition, such a network is hard to analyze: it is not clear what it has learned or how it works. So we will not spend time analyzing the capabilities of this architecture and will try a more interesting option.

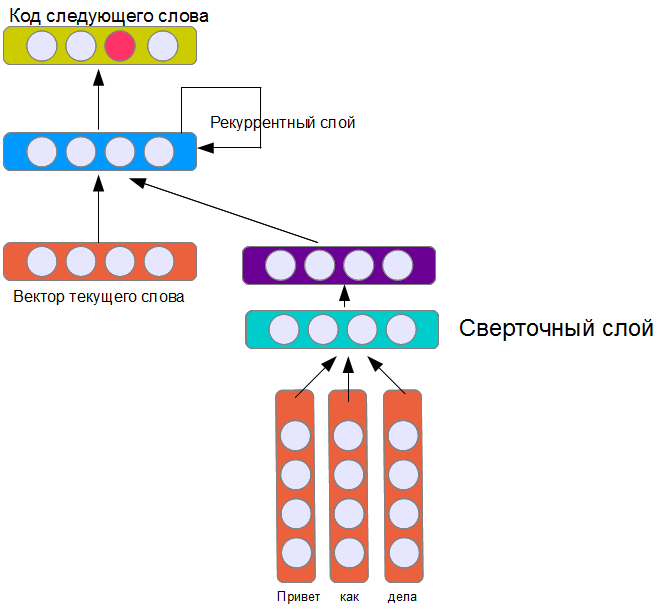

In the second version, I connected the convolutional network described in the previous article to the recurrent language model:

As in the network from the previous article, the convolutional layer contains 16 filters. On general grounds one would expect this four-layer model to be harder to train and the result to be worse. Besides, 16 filters seem clearly insufficient to model the interlocutor's utterances. Indeed, training takes much longer, but the result ... well, judge for yourself:

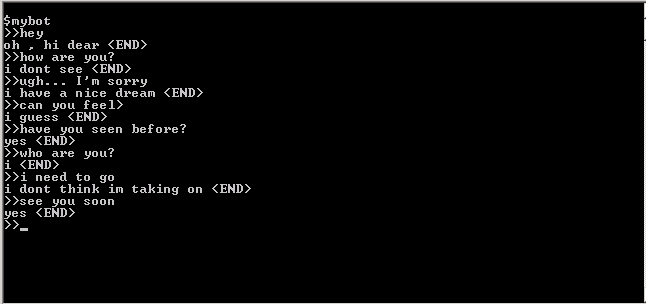

Approximate translation of the dialogue (H: human, P: program):

H: hello

P: oh, hello

H: how are you?

P: I can't see

H: oops ... well sorry

P: it seems to me that I have a good dream

H: can you feel

P: I suppose

H: Could you see before?

P: Yes

H: Who are you?

P: I

H: I have to go

P: I am not sure I can accept this

H: see you later

P: Yes

In my opinion, quite impressive. It recalls passages from science fiction novels where an artificial intelligence comes to life inside a computer, especially the program's claims that it cannot see but could see before. Time to announce the creation of AI and a great achievement ...

In fact, of course, this neural network has no intelligence whatsoever, which does not make it any less interesting. The point is that we can analyze what was actually learned by tracing how the activations of the convolutional neurons depend on the input phrases. This analysis revealed a neuron that responds to greeting words ("hello", "hi", "hey", etc.), as well as a neuron that recognizes interrogative phrases like "have you ...?", with the question mark optional (the network has learned to usually answer "yes" to these). In addition, if the word "you" appears in the question, the likelihood that the answer will begin with the word "I" increases.

Thus the neural network learned some typical conversation patterns and language tricks of the kind often used when programming chatbots "by hand", making good use of the 16 available filters. It is possible that by replacing the simple convolutional network with a multilayer one, adding filters, and enlarging the training sample, one could get chatbots that seem "smarter" than their counterparts built from hand-picked templates. But that question is beyond the scope of this article.
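This kind of analysis can be sketched as follows: compute one filter's strongest response over every position in a phrase, then list the phrases that excite it most. The embeddings and the filter weights below are hand-made so that the filter behaves like a "greeting" detector. They are assumptions for illustration, not weights from the trained network.

```python
def filter_activation(phrase, embeddings, filter_weights):
    """Max-over-time response of one convolutional filter to a phrase."""
    width = len(filter_weights)
    dim = len(filter_weights[0])
    vectors = [embeddings.get(w, [0.0] * dim) for w in phrase.lower().split()]
    # Pad short phrases with zero vectors so that at least one window fits.
    vectors += [[0.0] * dim] * max(0, width - len(vectors))
    best = float("-inf")
    for start in range(len(vectors) - width + 1):
        window = vectors[start:start + width]
        score = sum(w * x
                    for weights, vec in zip(filter_weights, window)
                    for w, x in zip(weights, vec))
        best = max(best, score)
    return best

def top_phrases(phrases, embeddings, filter_weights, k=2):
    """Return the k phrases with the highest activation for this filter."""
    scored = [(filter_activation(p, embeddings, filter_weights), p) for p in phrases]
    return sorted(scored, reverse=True)[:k]

# Hypothetical 2-dimensional embeddings and a hand-made width-1 filter.
embeddings = {"hello": [1.0, 0.0], "hi": [0.5, 0.0], "you": [0.0, 1.0]}
greeting_filter = [[1.0, -0.5]]
phrases = ["hello there", "hi you", "have you seen it", "see you later"]
for score, phrase in top_phrases(phrases, embeddings, greeting_filter):
    print(phrase, score)
```

Running the same ranking for each of the 16 filters over the whole transcript is one way to see which words or patterns a given filter has specialized in.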
