How I see the perfect browser

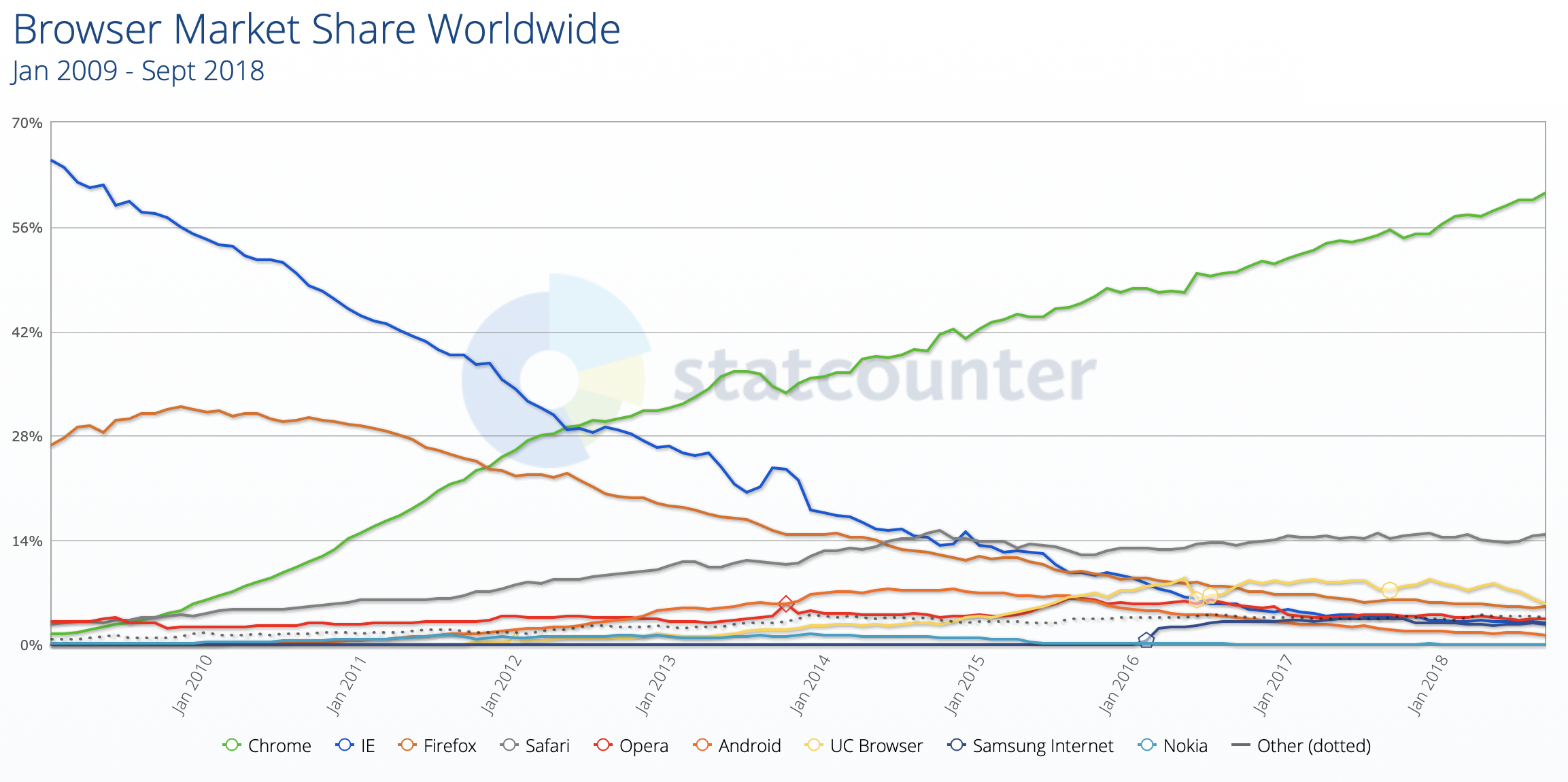

Recently there have been many articles about the shortcomings of modern software, yet nobody tries to offer solutions that would change the situation. This article is a response to some of them, and also a collection of dreams about an ideal browser: how the browser, its UI and the ways of interacting with sites could be reworked, and how protocols and the user experience in general could be improved. If you have any thoughts on this, even the boldest ones, I suggest we discuss them and perhaps lay the foundation for creating the perfect browser. Sooner or later it will have to be done, because the situation in the browser market is currently far from cheerful. And it is not a problem that other browsers are very complex and hard to catch up with: we can go our own way, implement only the necessary parts of the standards and, at the same time, introduce our own non-standard extensions. Let's not chase others; let others chase us. Let our browser be made for people, not for the commercial interests of “good” corporations and strange consortia that have long been of no use.

What should be in the perfect browser?

Search

If you make a new browser, the first thing it should have is a local search for everything: open tabs, the cache, downloaded files and the tons of metadata inside them. The search should work both by index and by regular expressions, and the user should be able to choose every possible option, including document encodings (Far Manager has a very good search of this kind, for example).

Once, on some forum, I came across an interesting concept for an algorithm. There was no discussion, so I quickly closed the tab, but the concept itself settled in my head. After mulling it over in the background, I decided to share my ideas... But where? I quickly looked through the forums where I used to hang out and found nothing like it. Search engines gave nothing either, which is not surprising: forums are not indexed instantly. I started digging through the browser history and found nothing, which is also not surprising, since the history is large and inconvenient to use, and I could have missed something. I reopened almost all the pages and found nothing like it. I began looking through my mail and messengers, even asking people, with no result. I had already begun to think that reptilians were controlling me and planting concepts in my head when I decided to try the last resort: searching the browser cache files. And I almost instantly found the page I was looking for. It turned out that since no one had replied to the forum topic, the author decided he had written nonsense, felt ashamed and simply deleted the thread. And I had been searching for this deleted topic for a long time, unable to find it and getting myself worked up.
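A search through cache files like this is easy to sketch. The Python below is a minimal illustration, not how any particular browser lays out its cache: it assumes the cache is simply a directory of files, scans the raw bytes for the query encoded in several encodings (the encoding list is an assumption the user would control), and also looks inside files that start with the gzip magic number.

```python
import gzip
import zlib
from pathlib import Path

# Encodings to try; in a real browser the user would choose these (assumption).
DEFAULT_ENCODINGS = ("utf-8", "utf-16-le", "cp1251")

def _views(data):
    """Yield the raw bytes and, for gzip-looking files, the decompressed bytes."""
    yield data
    if data[:2] == b"\x1f\x8b":  # gzip magic number
        try:
            yield gzip.decompress(data)
        except (OSError, EOFError, zlib.error):
            pass  # truncated or not really gzip: the raw bytes were already searched

def search_cache(cache_dir, needle, encodings=DEFAULT_ENCODINGS):
    """Return (path, encoding) pairs for cache files that contain `needle`."""
    patterns = []
    for enc in encodings:
        try:
            patterns.append((enc, needle.encode(enc)))
        except UnicodeEncodeError:
            pass  # the query cannot be written in this encoding
    hits = []
    for path in sorted(Path(cache_dir).rglob("*")):
        if not path.is_file():
            continue
        found = set()
        for blob in _views(path.read_bytes()):
            for enc, pattern in patterns:
                if enc not in found and pattern in blob:
                    found.add(enc)
                    hits.append((path, enc))
    return hits
```

Encoding the query once per encoding, instead of decoding every file, keeps binary and half-written cache entries from breaking the search.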

Another time I needed to re-download a video file. The file was called 1.mp4 (I suspect many people have plenty of files like that). It was of some value to me, but unfortunately it was corrupted. Where had I downloaded it from? I had to search for it all over again using keywords from the video itself.

Sessions

When you try to get into a new topic, dozens of tabs open by themselves. Link after link, and we have reading for several days. Each tab is something important that needs to be read. What should we do, especially when it all keeps piling up? You can simply close everything you have seen and rely on the history, assuming it will turn up later if needed. Or dump all the tabs into bookmarks. Or even save / print the pages: not very convenient, but the information you found will never be lost (more on saving information later).

Or maybe save the entire session as a project? Give sessions meaningful names like “I was looking for a boat model” or “I am learning to program” and switch them on / off as needed? Every browser now has some mechanism of profiles or sessions, but it is often hidden so well that it is hard to find and even harder to use. Perhaps the only browser where this mechanism is well implemented is Edge in its latest versions. For all the shortcomings of this browser, the mechanism is as convenient as it gets: instead of piling up tabs, you can sort them. Of course, there is no limit to perfection, but having at least such an option is a must. Better yet, be able to save such sessions together with the page cache / content. But not the way browsers do it now, keeping the cache in some binary form.
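A sketch of sessions as named projects, assuming a simple one-JSON-file-per-session layout. The `Session`, `Tab` and `SessionStore` names are hypothetical and no browser's real session format is implied; only the tab list is stored, not the cached page content.

```python
import json
from dataclasses import dataclass, field, asdict
from pathlib import Path

@dataclass
class Tab:
    url: str
    title: str = ""

@dataclass
class Session:
    name: str                                 # e.g. "I am learning to program"
    tabs: list = field(default_factory=list)  # list of Tab
    active: bool = True                       # switched on / off by the user

class SessionStore:
    """Keeps each session in its own human-readable JSON file."""

    def __init__(self, root):
        self.root = Path(root)
        self.root.mkdir(parents=True, exist_ok=True)

    def _path(self, name):
        # Hypothetical naming scheme: keep only filesystem-safe characters.
        safe = "".join(c if c.isalnum() or c in " -_" else "_" for c in name)
        return self.root / f"{safe}.json"

    def save(self, session):
        text = json.dumps(asdict(session), ensure_ascii=False, indent=2)
        self._path(session.name).write_text(text, encoding="utf-8")

    def load(self, name):
        data = json.loads(self._path(name).read_text(encoding="utf-8"))
        return Session(name=data["name"],
                       tabs=[Tab(**t) for t in data["tabs"]],
                       active=data["active"])

    def names(self):
        return sorted(json.loads(p.read_text(encoding="utf-8"))["name"]
                      for p in self.root.glob("*.json"))
```

Plain JSON rather than a binary blob means a session can be read, diffed or shared without the browser.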

Privacy

The user must decide what information to share with a site. I should not have to hunt for various User-Agent switcher add-ons; this functionality should be built into the browser itself. For example, the Google.com search engine works fine if you present yourself as Links, and there is no unpleasant instant search that eats the text you type. I want to be able to:

* set the screen width and height (any values, even 50000x50000 pixels)

* set the color depth, regardless of the current settings

* add a site to the trusted list, so that its cookies are not wiped when I click "clear all"

* replace fonts on the page, and provide the site with whatever list of fonts it wants

* provide an arbitrary User-Agent, perhaps even a random one taken from a large file of options, or one tied to a specific site

* choose the content language and see exactly what is sent to the server, instead of a “preferred language” that unfolds into who knows what

* set the number and order of headers, including emulation of known bugs of other browsers

In general, everything that can be used for fingerprinting should be changeable. I want to have this option.
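One way to model this wish list is a per-site profile object from which the browser builds its request headers. The class and field names below are hypothetical, and the User-Agent string in the usage check is illustrative only. The header order is kept explicit because the sequence of headers is itself a fingerprint:

```python
import random
from dataclasses import dataclass

@dataclass
class FingerprintProfile:
    """Everything a site may learn about us, chosen by the user."""
    user_agents: list                  # one entry = fixed UA, several = random per request
    accept_language: str = "en"        # sent as-is, no hidden expansion
    screen: tuple = (1920, 1080)       # what scripts would see, not the real screen
    color_depth: int = 24
    send_referer: bool = False
    header_order: tuple = ("Host", "User-Agent", "Accept", "Accept-Language", "Referer")

    def headers(self, host, referer=None, rng=random):
        values = {
            "Host": host,
            "User-Agent": rng.choice(self.user_agents),
            "Accept": "text/html,*/*;q=0.8",
            "Accept-Language": self.accept_language,
        }
        if self.send_referer and referer:
            values["Referer"] = referer
        # The configured order is what goes on the wire; headers missing
        # from `values` (e.g. a suppressed Referer) are skipped.
        return [(name, values[name]) for name in self.header_order if name in values]
```

Returning a list of pairs instead of a dict makes the order an explicit, user-editable part of the profile.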

The funny thing is that ancient browsers have lots of settings for this. For example, browsers such as Links, w3m and NetSurf not only let you disable the Referer / User-Agent, but also offer many interesting options for fine-tuning how the browser fills in these fields. Meanwhile, only future versions of Firefox will learn to do this, and only partially: without giving the user 100% protection, without any options, rigidly defining the behavior only under certain conditions (more on site settings and conditions later).

Floating bars

For a very long time MSIE did not support position: fixed, and it was scolded for that. As practice shows, it was a good thing it did not. True, that did not stop people: they emulated it with JS, producing jumping menus that survive on millions of sites to this day.

Today overlapping elements are used for all sorts of “useful” things: full-screen login windows that pop up while you browse and cannot be dismissed (Facebook), pop-up assistants pretending to be chat bots, full-screen messages about promotions and gifts, as if I had won something, sometimes simply ads (fair enough, ads are unavoidable, but without a close button?), transparent overlays that don't let me click on the page (Pornhub), and the apotheosis: messages telling me I have to disable AdBlock, which I don't even have.

Have you tried printing a page? I often “print” to PDF, and I want to beat the people who put pop-up banners like “we use cookies” or “breaking news here” somewhere at the top or bottom of the screen. Or just a fixed menu at the top and a simple footer at the bottom. On screen it looks fine: you can scroll the page and somehow read what they cover. But did you know this stuff is printed on every page? And printed over the text? And that paper does not scroll, however much you want it to, so the content hidden under this crap simply cannot be read? For now I have to disable styles or delete individual menus through the element inspector / uBlock, and only after that can I “print” the page. It is rather annoying. But if there were simple manageable elements,

But inside the browser engine it is possible to detect when an element overlaps textual content and... well, for example, tuck it away to the side. Or even disable its styles, declaring them dangerous. There are lots of options. You could treat the page as layers and give the user a couple of buttons to “cut off” the upper layers or bring them back, as is done in some games or 3D editors. I have wanted this feature for a year!
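A minimal sketch of the layer idea, assuming the engine can expose each laid-out box with its rectangle, a stacking level and a flag saying whether it holds text. Real CSS stacking contexts are considerably more complex than a single integer `z`, and all names here are hypothetical:

```python
from dataclasses import dataclass

@dataclass
class Box:
    name: str
    x: float
    y: float
    w: float
    h: float
    z: int = 0             # stacking level; higher is drawn on top
    has_text: bool = False

    def intersects(self, other):
        return (self.x < other.x + other.w and other.x < self.x + self.w and
                self.y < other.y + other.h and other.y < self.y + self.h)

def overlays_hiding_text(boxes):
    """Names of boxes drawn above some text box and covering part of it."""
    text = [b for b in boxes if b.has_text]
    return sorted({b.name for b in boxes for t in text
                   if b is not t and b.z > t.z and b.intersects(t)})

def cut_layers(boxes, keep_up_to_z):
    """The "cut off upper layers" button: hide everything above a level."""
    return [b for b in boxes if b.z <= keep_up_to_z]
```

For printing, the same check could run per page and move or drop the offending boxes before rasterizing.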

It's funny, but IE once refused to render the blink tag while still allowing scripts to move the browser window and open pop-ups that could not be closed. Today even displaying text in the status bar is difficult; it is easier to emulate it. Now I propose doing something about overlapping text blocks, perhaps disabling this feature altogether. More and more features will have to be disabled so that they cannot be used for harm. Yes, for the sake of this you can write your own browser: not standards-compliant, but convenient for reading text, and with less programming besides.

Page snapshots

It so happens that you open some simple page with some text, forget about it and leave it hanging in a tab “for later”, “so as not to forget”. As a rule, there is nothing special there: say, it explains how to grow strawberries at your dacha, and nothing at all foreshadows trouble. Then you come back to the computer a few hours later and notice that THE MOUSE CURSOR BARELY MOVES, EVERYTHING IS SWAPPING, IT IS IMPOSSIBLE TO WORK, YOU OPEN THE PROCESS LIST IN HORROR AND KILL THE CULPRITS (if you can wait for the process list to open and draw itself at all). And the tab you end up closing is exactly the one with this innocent site.

To prevent this, I once wrote a Firefox add-on that, 5 seconds after the page loaded (the onload event), replaced setInterval / setTimeout / requestAnimationFrame with empty stubs that did nothing, and cancelled the existing timers. On the whole, I was happy. True, various interactive elements such as expandable spoilers also stopped working, since there were no timers anymore and opening a spoiler started a timer for its animation. Is that a big price? I had to abandon my add-on because I could not restore the handlers for some events, but if we are writing our own browser, why not?
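Inside our own engine there is no need to stub out JavaScript functions: the engine owns the timer table, so freezing is just a policy applied to that table, and unlike the add-on it can be undone. A hypothetical Python model of such a table; the engine would call `freeze()` 5 seconds after `onload`, and `thaw()` when the user interacts with the page:

```python
import itertools

class TimerRegistry:
    """Engine-side timer table for one tab (hypothetical design)."""

    def __init__(self):
        self._ids = itertools.count(1)
        self._timers = {}      # id -> [due_ms, interval_ms or None, callback]
        self.frozen = False

    def set_timeout(self, callback, delay_ms, now_ms, interval=False):
        if self.frozen:
            return 0           # behave like the add-on's no-op stub
        timer_id = next(self._ids)
        self._timers[timer_id] = [now_ms + delay_ms, delay_ms if interval else None, callback]
        return timer_id

    def freeze(self):
        self.frozen = True
        self._timers.clear()   # cancel everything already scheduled

    def thaw(self):
        self.frozen = False    # e.g. the user clicked a spoiler

    def tick(self, now_ms):
        """Called by the event loop; runs every timer that is due."""
        for timer_id in sorted(self._timers):
            entry = self._timers.get(timer_id)
            if entry is None or entry[0] > now_ms:
                continue
            entry[2]()                         # run the callback
            if timer_id not in self._timers:   # the callback froze or cleared timers
                continue
            if entry[1] is None:
                del self._timers[timer_id]     # one-shot timer is done
            else:
                entry[0] = now_ms + entry[1]   # reschedule the interval
```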

Alternative implementation: 10 seconds after the onload event, stop all JS, unload the DOM and keep in memory only the structures needed to render rectangles with text, tables and pictures. That's it: let a background tab be something like a picture with text, nothing more. Another alternative: render the whole layout in a separate process and load only the coordinates of text and images after rendering, as Opera Mini did; this would also make our browser a bit safer.
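The “picture with text” idea amounts to keeping a display list. The sketch below assumes the layout engine has already produced positioned nodes as dicts with `kind`, `box` and `payload` keys (a hypothetical shape) and keeps only what is needed to draw the tab again:

```python
import json
from dataclasses import dataclass, asdict

@dataclass
class DrawItem:
    kind: str          # "text", "image" or "rect"
    x: int
    y: int
    w: int
    h: int
    payload: str = ""  # the text itself or the image URL

def snapshot(layout_nodes):
    """Flatten laid-out nodes into a static display list; scripts,
    event handlers and the DOM are simply not carried over."""
    items = []
    for node in layout_nodes:
        if node.get("kind") not in ("text", "image", "rect"):
            continue  # scripts, styles and other non-drawable nodes
        x, y, w, h = node["box"]
        if w <= 0 or h <= 0:
            continue  # nothing visible to draw
        items.append(DrawItem(node["kind"], x, y, w, h, node.get("payload", "")))
    return items

def serialize(items):
    # Compact JSON is enough to redraw the frozen tab later.
    return json.dumps([asdict(i) for i in items], separators=(",", ":"), ensure_ascii=False)

def restore(blob):
    return [DrawItem(**d) for d in json.loads(blob)]
```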

Most interesting of all, modern Opera already has something similar, but it turns on only when the laptop switches to battery power. I want this option to be always available for sites, especially for sites I visit for the first time. In general, the guts of all modern browsers hold many useful functions, but they are hard-coded and cannot be customized by the user, so browsers waste a huge potential.

Local content caching

Here is an idea worth a million bucks: load a page in 0 ms. Today, even if the site is entirely in our cache, it will not open until we send a request, wait for the round-trip time, parse the response, and then do the same for all the remaining scripts and styles. What prevents us from opening it IMMEDIATELY from the cache and validating the content in the background, sending requests for ALL resources at once and, using double buffering, updating the data if it has changed, simply by redrawing pictures and text blocks? You say this already existed in IE and was called “Work Offline”? Yes, IE still has plenty of good and interesting features, but first, this one did not always work, and second, in that mode we cannot refresh the page, whereas in my case the page is redrawn automatically as it is validated.

Unfortunately, in the modern web caching not only works poorly, it mostly does not work at all. But what prevents us from forcibly saving pages to disk? This would not only let you open a site after it dies and keep useful content, but also track, for example, how prices for goods change, or catch people who have edited their posts. Of course, you can save pages to disk manually... But as a rule, you remember to do it only when you already need an old version that you do not have, and there is little hope in the Web Archive. Sometimes you can dig the content out of search engines, but this does not always work, especially if you were too late. This would be especially useful where the content is easily divided into separate manageable elements, but more on that later.
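The “show from cache, validate in the background” behavior is close in spirit to the `stale-while-revalidate` Cache-Control extension defined in RFC 5861, except that here the browser would apply it regardless of what the server says. A synchronous sketch, where `fetch` and `redraw` are hypothetical callbacks and an explicit `revalidate()` call stands in for the background worker:

```python
from dataclasses import dataclass

@dataclass
class Entry:
    body: bytes
    etag: str

class InstantCache:
    """`fetch(url, etag)` returns (status, etag, body); 304 = not modified.
    `redraw(url, body)` is called only when the content really changed."""

    def __init__(self, fetch, redraw):
        self.fetch, self.redraw = fetch, redraw
        self.entries = {}
        self.pending = []

    def open(self, url):
        entry = self.entries.get(url)
        if entry is None:
            # Nothing cached yet: the only case where we have to wait.
            _status, etag, body = self.fetch(url, None)
            self.entries[url] = Entry(body, etag)
            return body
        self.pending.append(url)   # validate later
        return entry.body          # shown immediately, zero round trips

    def revalidate(self):
        while self.pending:
            url = self.pending.pop(0)
            status, etag, body = self.fetch(url, self.entries[url].etag)
            if status == 200:      # changed on the server: update and redraw
                self.entries[url] = Entry(body, etag)
                self.redraw(url, body)
```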

Of course, caching should be done as incremental diffs (otherwise there will not be enough space for everything), with smart stripping of non-displayed information (there is no need to store the ever-changing code of counters), with changes highlighted, and with the ability to pick old versions directly from the address bar. Already parsed pages could be stored as a set of rectangles with their screen coordinates, which would speed up rendering, and images could be downscaled and stored as H.265, which compresses much better than JPEG, saving space. And if we have spent so much effort on a forced cache and refining it, why not share it with others? The user interface is important here. The feature must not just exist, it must be convenient to use: open different versions of a page, delete or save versions, publish them as a public cache.
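Incremental storage can be sketched with the standard `difflib` module: the first snapshot is kept whole and each later one as a list of “copy these old lines / add these new lines” operations. The regular expression that strips counters is a crude, hypothetical stand-in for the smart filtering described above:

```python
import difflib
import re

# Hypothetical filter for non-displayed noise such as visit counters.
NOISE = re.compile(r"counter|analytics", re.IGNORECASE)

def normalize(html):
    return [line for line in html.splitlines() if not NOISE.search(line)]

def make_delta(old, new):
    """Describe `new` as slices copied from `old` plus freshly added lines."""
    ops = []
    matcher = difflib.SequenceMatcher(a=old, b=new, autojunk=False)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            ops.append(("copy", i1, i2))
        elif tag in ("replace", "insert"):
            ops.append(("add", new[j1:j2]))
        # "delete": the old lines are simply not copied
    return ops

def apply_delta(old, ops):
    out = []
    for op in ops:
        if op[0] == "copy":
            out.extend(old[op[1]:op[2]])
        else:
            out.extend(op[1])
    return out

class PageHistory:
    """First snapshot stored whole, every later one as a delta to the previous."""

    def __init__(self, html):
        self.base = normalize(html)
        self.deltas = []
        self._latest = self.base

    def add(self, html):
        new = normalize(html)
        if new != self._latest:   # only counters changed: store nothing
            self.deltas.append(make_delta(self._latest, new))
            self._latest = new

    def version(self, n):
        """Version 0 is the first snapshot; each delta adds one version."""
        lines = self.base
        for ops in self.deltas[:n]:
            lines = apply_delta(lines, ops)
        return lines
```

Tracking a price over time then reduces to comparing `version(n)` against `version(n + 1)`.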

To speed up page loading and protect yourself against unexpected code injection, scripts such as jQuery and the like hosted on various CDNs can be loaded straight from the local disk, as the Decentraleyes extension does. Font and icon packs would load instantly too. More about what already exists: addons.mozilla.org/en-US/firefox/addon/decentraleyes. Of course, it would also be nice to be able to inject your own code, similar to browser.js (and not only by the browser's authors) or Greasemonkey (only without trojans), so that you can change / fix the code of sites. Not crutches in the form of a plug-in, but native support that does not slow things down, as it once was in Opera. But alas, there are simply no convenient tools for patching site code. Richard Stallman calls this the “tivoization” of sites, but more on that in the section on code signatures.
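A Decentraleyes-style lookup boils down to matching a CDN URL and answering from a local store. The two URL patterns follow the public path layout of Google Hosted Libraries and cdnjs; the in-memory `LOCAL` store and its contents are placeholders, and this is not how Decentraleyes itself is implemented:

```python
import re

# Placeholder local store: (library, version, file) -> file contents.
# A real browser would ship or download these files ahead of time.
LOCAL = {
    ("jquery", "3.4.1", "jquery.min.js"): b"/* jQuery 3.4.1 placeholder */",
}

# Each pattern captures library name, version and file path.
CDN_PATTERNS = [
    re.compile(r"^https://ajax\.googleapis\.com/ajax/libs/(?P<lib>[\w.-]+)/(?P<ver>[\w.-]+)/(?P<file>[\w./-]+)$"),
    re.compile(r"^https://cdnjs\.cloudflare\.com/ajax/libs/(?P<lib>[\w.-]+)/(?P<ver>[\w.-]+)/(?P<file>[\w./-]+)$"),
]

def intercept(url):
    """Return the locally stored file for a known CDN URL, or None to use the network."""
    for pattern in CDN_PATTERNS:
        match = pattern.match(url)
        if match:
            return LOCAL.get((match["lib"], match["ver"], match["file"]))
    return None
```

Because the same library version is identical on every CDN, one local copy can answer for all of them.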

Add to this some hypothetical sitemap.xml describing how articles are related, which pages to cache proactively, and a link to some tracker for p2p content sharing... And we get a self-replicating site that can be saved and used locally, survives any load, and whose content never dies. But we will talk about this, as well as about distributed sites, later.

Code Signatures

Many of us do not think about it, but a browser may execute code written by different people under different licenses, including non-free ones. And it is not a given that the user agrees with these licenses. It's like having sex without prior consent: in most cases nothing bad will happen, but there may be nuances. Richard Stallman wrote an excellent article, “The JavaScript Trap”, on which the LibreJS extension is based: en.wikipedia.org/wiki/GNU_LibreJS. This is what should be the starting point for interpreting JavaScript in our browser!

If license information were part of the standard, life would be a little easier, but it is not. If authors signed their code with their public keys, I could at least trust particular authors, but there is not even that. All that remains is to hash the scripts, including the smallest ones inlined into the page, ask the user “allow it to run?” for each of them, and keep a database of allowed and forbidden scripts. Something at the antivirus level: a search for “viruses” by signatures, but instead of a heuristic analyzer, a license check and questions to the user. Such hashes would not only protect you from malicious code, but also let you build a versioning system. Create an infrastructure where only code you trust gets launched! Tired of fighting scripts that hide the text and ask you to disable AdBlock? I would disable it, but I have no AdBlock, nor any confidence that tomorrow they will not ask me to donate or subscribe to some kind of scam.
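The hash-and-ask scheme fits in a few lines. The license check is a simplified reading of the LibreJS convention of `@license <magnet link> <name>` comments; the set of acceptable license names is an assumption the user would configure, and `ScriptGate` is a hypothetical name:

```python
import hashlib
import re

# Simplified "@license <magnet-link> <name>" detection (LibreJS-style comment).
LICENSE_RE = re.compile(r"@license\s+\S+\s+(?P<name>\S+)")
# Illustrative set of license names the user accepts.
FREE_LICENSES = {"GPL-v3-or-Later", "Expat", "Apache-2.0"}

class ScriptGate:
    def __init__(self):
        self.decisions = {}   # sha256 hex digest -> True (allow) / False (deny)

    @staticmethod
    def digest(source):
        return hashlib.sha256(source.encode("utf-8")).hexdigest()

    def check(self, source):
        """Return "allow", "deny" or "ask" for an inline or external script."""
        h = self.digest(source)
        if h in self.decisions:
            return "allow" if self.decisions[h] else "deny"
        match = LICENSE_RE.search(source)
        if match and match["name"] in FREE_LICENSES:
            return "allow"
        return "ask"          # unknown code, unknown license: ask the user

    def remember(self, source, allowed):
        self.decisions[self.digest(source)] = allowed
```

Since any change to a script changes its hash, a remembered decision silently stops applying to a modified version, which is also the hook for the versioning idea.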

If you are not familiar with the remarkable work of Richard Stallman, I recommend reading: www.gnu.org/philosophy/javascript-trap.ru.html (in Russian).

Site Evaluation / Anti-Rating

Some browsers, such as Opera, for some reason tried to fix every site by hand, patching them by injecting custom code. And once they got tired of it... we all know the result. They were deservedly proud of their achievements, confirmed by various benchmarks that tested standards compliance.

But there was another way: instead of patching something, emailing someone, using personal connections and all that, the browser could show the text of the patch directly over the page, with the words “the author of this site does not follow the standard; the following code could fix this site.” Caught an IE-only call? No emulation; instead, a big red popup about the author's professional competence (not covering the content, of course). Many users will certainly ignore it, but someone may ask the site's authors: “Why is there so much red?” And the site owners will explain how they saved money on programmers. Or they will tell the client to install “a normal Google Chrome”, which will more likely make the client leave them. If the site displays something like “location.href = 'http://google.com/',

You can go further: an image is displayed at 100x100 but is actually 500x500? A red popup saying the author does not know how to resize pictures. A photo-realistic image compressed as PNG? A red popup saying the author does not understand file formats. No link to the home page? A red popup saying the site's author did not make proper navigation.
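Checks like these are straightforward once the engine knows each image's natural and displayed size. In the sketch below, the 4x-area threshold and the `photo_like` flag (which would come from some image classifier) are assumptions, and the message texts are illustrative:

```python
from dataclasses import dataclass

@dataclass
class Image:
    src: str
    natural: tuple     # (width, height) of the file itself
    displayed: tuple   # (width, height) on the page
    fmt: str           # "png", "jpeg", ...
    photo_like: bool   # output of a hypothetical photo/graphics classifier

def audit(images, links, home="/"):
    """Collect the red-popup complaints described above."""
    warnings = []
    for img in images:
        nw, nh = img.natural
        dw, dh = img.displayed
        if dw and dh and nw * nh >= 4 * dw * dh:
            warnings.append(f"{img.src}: shown at {dw}x{dh} but is {nw}x{nh}")
        if img.fmt == "png" and img.photo_like:
            warnings.append(f"{img.src}: photo stored as PNG")
    if home not in links:
        warnings.append("no link to the home page")
    return warnings
```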

Of course, a red popup should not always be shown. For example, if a PNG image could be optimized better with optipng, a small red warning is enough, like the ones ad blockers show. Something like this is already done by various CDN optimizers, which compress images, minify code and even try to filter SQL injections on input. But all this joy is available only if the author paid and connected the relevant services; what is an ordinary user to do? An ordinary user can simply refuse to use a poor-quality site, and the browser should help with that.

Even now, an ad blocker's report with its little numbers can be considered a kind of anti-rating for a site. The higher the anti-rating, the worse the site, and the author should do something about it. Above certain values, the browser can simply warn that visiting this site may be undesirable. And I think the browser should share its findings with the community. You could build a global rating for each site by attaching these cherished numbers to every link, so as not to accidentally end up somewhere the user will have a “bad experience.” Of course, not everything can be automated. Therefore, there can be several ratings, some of them maintained by living people who manually check the code, its licenses and quality, and the quality of the site as a whole. Of course, the mechanism should be decentralized and beyond the control of specific individuals.

Individual site settings

Each site or group of sites must have its own individual settings, just as in the old Opera (up to and including version 12). But this mechanism can be improved.

First, identify sites not only by domain or subdomain, but also by a regular expression on the domain, or by the IP address the domain resolves to. For example, I do not want to run scripts on sites / resources belonging to Yandex (for the reasons, see below), so I could find the lists of IP address blocks belonging to Yandex and gently block the execution of untrusted code. Simple and easy. But at the moment I have to limit myself to banning individual domains (and I don't know all of them!), putting all the address ranges into the firewall, which is extremely inconvenient, or running my own DNS server that overrides addresses matching the *yandex* mask, which is what I am doing now.

Second, to avoid multiplying entities, you can create base profiles such as “trusted website”, “regular website”, “bad website”, “for websites from Vasyan”, “for AliExpress” and assign one of them to each website. Depending on the profile, the browser sends a particular User-Agent and a particular order and content of headers, and allows or blocks loading of styles, fonts, scripts and everything else that can be customized. It could even decide whether the site may intercept the right mouse click, how precise its timers are, and whether it may play animations and sounds (for some reason, AliExpress requests MIDI access). You can also provide settings that change randomly, such as a random User-Agent from a large list or an arbitrary proxy for a specific site (more on this later).
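Matching a site to a profile by domain regex or by resolved IP range can be sketched with the standard `re` and `ipaddress` modules. The rules and profile contents are placeholders, and 203.0.113.0/24 is a documentation-only network used here instead of any real company's address ranges:

```python
import ipaddress
import re

# Rules are checked in order; the first match picks the profile.
RULES = [
    ("regex", re.compile(r"(^|\.)yandex\.[a-z]+$"), "bad website"),
    ("net", ipaddress.ip_network("203.0.113.0/24"), "bad website"),
    ("regex", re.compile(r"(^|\.)aliexpress\.com$"), "for AliExpress"),
]

# Illustrative per-profile switches.
PROFILES = {
    "trusted website": {"scripts": True, "fonts": True, "right_click": True},
    "regular website": {"scripts": True, "fonts": False, "right_click": False},
    "bad website": {"scripts": False, "fonts": False, "right_click": False},
    "for AliExpress": {"scripts": True, "fonts": False, "midi": False},
}

def profile_for(host, resolved_ip=None, default="regular website"):
    """Pick a profile by host name, or by the IP address the host resolved to."""
    ip = ipaddress.ip_address(resolved_ip) if resolved_ip else None
    for kind, matcher, profile in RULES:
        if kind == "regex" and matcher.search(host):
            return profile
        if kind == "net" and ip is not None and ip in matcher:
            return profile
    return default
```

The IP rule is what catches domains you did not know about: any host that resolves into a listed range gets the same profile.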

Copy and Paste

What could be more basic in programs that display text than selecting, copying and pasting it? Alas, problems start even with simple selection. Have you tried selecting a link? In the browser, in mail, in IM? How did it go? Somewhere the link starts to drag, somewhere you follow it even though you never released the button, and somewhere you need to aim at a millimeter-wide gap to be able to select it. Selecting pictures is a separate lottery: sometimes it cannot be done at all except with the secret hacker combination CTRL + A. One step left or right, and the whole page is selected instead of the paragraph we aimed at.

Or the text may not get selected at all, creating the illusion of a broken mouse button. Even if we aimed well and managed to select the text, there is no guarantee that the right mouse button will not bring up a cool warning like "The text of this page is protected." Or nothing will appear at all, since browsers have learned to suppress such scripts automatically. Or a window pops up asking you to report a typo found on the page. When I read, I often select text with the mouse to make it easier to follow, and this kind of filth is extremely annoying.

Pasting is even worse. Will formatting be preserved or not? Sometimes it depends on whether you use a hotkey or the mouse wheel: different behavior for what seems to be the same action. Will there be spaces in text pasted into other applications if there were no spaces between the blocks? And sometimes you cannot get rid of the formatting: you paste text copied within a page, for example within a letter you are typing, and the paragraph you are typing suddenly becomes bold and / or turns into a quote.

The latest fashion: replacing the contents of the clipboard. You copied some text about cats, pasted it into a chat without looking and... got banned, because along with the text you wanted, an advertisement for the resource you copied it from was pasted too. Of course, you need to be careful and watch what you send and where... But on the other hand, why do my text viewing tools allow themselves to behave like this?
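A sketch of both fixes, assuming the browser sees the user's selection as HTML and also sees whatever text the page's copy handler tried to substitute. Formatting is dropped, block boundaries become line breaks so words from adjacent blocks do not stick together, and the selection always wins over injected text. The set of block tags is a simplification:

```python
from html.parser import HTMLParser

BLOCK_TAGS = {"p", "div", "li", "br", "h1", "h2", "h3", "tr", "blockquote"}

class _PlainText(HTMLParser):
    def __init__(self):
        super().__init__()
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag in BLOCK_TAGS:
            self.parts.append("\n")   # keep a gap between blocks

    def handle_endtag(self, tag):
        if tag in BLOCK_TAGS:
            self.parts.append("\n")

    def handle_data(self, data):
        self.parts.append(data)

def plain_text(html):
    """Paste without formatting: drop tags, keep block boundaries as newlines."""
    parser = _PlainText()
    parser.feed(html)
    parser.close()
    lines = (" ".join(line.split()) for line in "".join(parser.parts).splitlines())
    return "\n".join(line for line in lines if line)

def copy_result(selected_html, page_override):
    """Return (text, tampered): the user's selection wins over text the
    page's copy handler tried to put on the clipboard."""
    text = plain_text(selected_html)
    tampered = page_override is not None and page_override != text
    return text, tampered
```

The `tampered` flag lets the browser tell the user that the page tried to rewrite the clipboard instead of silently obeying it.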

Distributed storage

Local content caching, which we discussed earlier, covers only part of a modern web user's needs. The second important part of the problem is caching content on the server and along the way to the client, on various CDNs and the like. Small sites may find that delivering what are essentially static files takes too much traffic, over and over again. And they have practically no way out except to pay CloudFlare for its distributed cache.

CloudFlare itself has an interesting technology called Railgun: www.cloudflare.com/website-optimization/railgun. It is a clever crutch for caching the uncacheable: they not only cache old versions of pages, but also diff against them and send only the difference from their servers. As a result, a page can be updated with a single 400-byte data packet (the number is taken from the description), and the origin server could be hosted even on a phone (not really, of course). But such a thing costs money, from $200 per month, which is a lot for small sites.

Oh, if only the content could be divided into small manageable elements... But yes, more on that later. For now there are crutches like diff and CloudFlare with its Railgun.

But the distributed file system IPFS already exists. And there is ZeroNet, which right now, out of the box, lets you host websites in a distributed way. You can download the client and take a look at this unusual network that does not need a server!

However, there is nothing new here. About 15 years ago, popular websites had a desktop client (sometimes more than one) bundled with something like torrent distribution. This still exists in one form or another today, for example the WikiTaxi application, which lets you keep Wikipedia in your pocket. I also remember a thing called AportExpress, which contained a full-fledged template engine and native Aport templates from the server, and assembled pages on the client.

Improved networking

Can you imagine that some people connect through GSM modems, where an already low speed is further reduced by poor reception or bad contract terms? And there are pages like imgur.com/a/XJmb7 with very beautiful things, where the page, including all its graphics, weighs tens of megabytes. That is exactly the problem: such pages cannot be viewed over such a connection.

Today the browser tries to load all the pictures at once, slowing down each of them (sites even create a bunch of subdomains to get around the limit on the number of connections). After a while a timeout hits and the server simply closes the connection, leaving us with broken pictures that at best open partially. If you press F5, for a moment you see the pictures (the download is cancelled and what has already arrived is displayed), and then the download starts from scratch, without resuming individual images. And have you noticed how often the browser “loads” a page or file first at 50 KB/s, then at 20 KB/s, and then at 3 KB/s? It means the actual download speed has for some reason dropped to 0 bytes/s, and dropping the connection to start again is very difficult.
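Resuming instead of restarting relies on HTTP range requests. In this sketch, `fetch_range(offset)` is a hypothetical transport function that would send `Range: bytes=<offset>-` and yield chunks, yielding `None` for each polling interval with no data; a few idle intervals in a row count as a stall, and the limits are arbitrary:

```python
def download(fetch_range, total_size, stall_limit=3, max_attempts=5):
    """Keep what has arrived, reconnect on stalls, continue from the offset."""
    data = bytearray()
    for _ in range(max_attempts):
        idle = 0
        for chunk in fetch_range(len(data)):   # resume where we stopped
            if chunk:
                data.extend(chunk)
                idle = 0
            else:
                idle += 1
                if idle >= stall_limit:
                    break   # effective speed is 0 B/s: drop this connection
        if len(data) >= total_size:
            return bytes(data[:total_size])
    raise ConnectionError(f"gave up at {len(data)} of {total_size} bytes")
```

Detecting the stall from the absence of data, rather than waiting for a server timeout, is what stops the “50, 20, 3 KB/s” slide.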

But the web server could generate torrent files for static content and distribute them automatically, which would both let clients download the files and take load off the network channel! At its core, a torrent file is just a list of checksums that lets you download a file starting from any position and verify that what you downloaded is correct. So even partially downloaded images can easily be completed, even on the 5th attempt, and the same checksums settle the questions of versioning and cache validation.
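The checksum idea maps directly onto BitTorrent v1 metadata, which stores one SHA-1 hash per fixed-size piece. A sketch (the 16 KiB piece size is an arbitrary choice here) showing how a client could find exactly which pieces of a half-downloaded image need fetching again:

```python
import hashlib

PIECE = 16 * 1024   # illustrative piece size

def piece_hashes(data, piece=PIECE):
    """What a server could publish for a static file: one SHA-1 per piece."""
    return [hashlib.sha1(data[i:i + piece]).digest()
            for i in range(0, len(data), piece)]

def missing_pieces(partial, hashes, piece=PIECE):
    """Indices of pieces that are absent or corrupt in a partial download.

    `partial` is pre-sized to the full length; regions not downloaded yet
    hold whatever filler was there (e.g. zeros) and simply fail the check.
    """
    return [i for i, h in enumerate(hashes)
            if hashlib.sha1(partial[i * piece:(i + 1) * piece]).digest() != h]
```

The list of hashes doubles as a version identifier: if it differs from the cached one, the file changed.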

And since we are giving the client metadata about the files, the whole page can be packaged as “one big distribution”: a single data package describing the page, its image files, styles, related pages and other references (including other "distributions"), a kind of small binary sitemap. This would make it possible to cache and optimize websites better, load all resources faster without waiting for the full page or its scripts, and even adapt websites for people with disabilities by offering them advanced navigation between pages. Or to skip downloading some items altogether, for example Apple-style icons taking up half the screen, or piles of video.
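Such a package could start as a simple manifest. The JSON shape below is invented for illustration (the hashes are placeholders); the point is that the client can decide what to fetch, and what to skip, before parsing any HTML or script:

```python
import json

# Invented manifest format: the page, its resources (type, size, content
# hash) and links to related packages.
MANIFEST = json.loads("""
{
  "page": "/strawberries/planting",
  "resources": [
    {"path": "/style.css",        "type": "style", "size": 4200,     "sha256": "placeholder-1"},
    {"path": "/img/bed.jpg",      "type": "image", "size": 180000,   "sha256": "placeholder-2"},
    {"path": "/img/app-icon.png", "type": "icon",  "size": 2500000,  "sha256": "placeholder-3"},
    {"path": "/video/tour.mp4",   "type": "video", "size": 48000000, "sha256": "placeholder-4"}
  ],
  "related": ["/strawberries/watering"]
}
""")

def plan_downloads(manifest, skip_types=(), max_bytes=None):
    """Choose resources to fetch up front, honoring the user's limits."""
    chosen = []
    for res in manifest["resources"]:
        if res["type"] in skip_types:
            continue                  # e.g. never autoload video
        if max_bytes is not None and res["size"] > max_bytes:
            continue                  # e.g. oversized icons on a GSM link
        chosen.append(res["path"])
    return chosen
```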

Unfortunately, modern developers try to deal with these problems their own way, providing no settings and implementing everything by hand on a “we'll see how it goes” basis. For example, they load pictures / video through a pile of JS, lots of domains and scroll handlers, which is why you cannot quickly scroll to the “tenth page”, and that really pisses me off. Fortunately, some large vendors such as Xiaomi have started fighting this by asking every time “Do you want to play the video? Additional charges may apply!”, but so far you cannot set up an automatic ban on such outrages, and developers still have many ways to get around it.

Since we have touched on sites with endless scrolling, I would like to note: nothing prevents showing the user an empty frame of the whole giant feed, so that they can navigate it freely while the content loads dynamically. But no one does it.
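The “empty frame” only needs the heights of the items, or estimates for those not loaded yet (an assumption of this sketch). With prefix sums the browser knows the full height immediately, so jumping to the tenth page is just setting the scroll offset, and a binary search finds which items to load:

```python
import bisect
from itertools import accumulate

def build_frame(item_heights):
    """offsets[i] is the top of item i; offsets[-1] is the full feed height."""
    return [0] + list(accumulate(item_heights))

def visible_items(offsets, scroll_top, viewport_height):
    """Indices of items intersecting the viewport; only these need content."""
    first = bisect.bisect_right(offsets, scroll_top) - 1
    last = bisect.bisect_left(offsets, scroll_top + viewport_height)
    return range(max(first, 0), min(last, len(offsets) - 1))
```

When a loaded item turns out taller than estimated, only the offsets after it change; the scrollbar stays meaningful the whole time.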

Downloading sites

Suppose I found a website with strawberry-growing manuals. I admired it, got fired up with the idea, went to the dacha and... having run into problems, realized that I should have converted every page to PDF first and only then gone to the dacha. Why PDF? Because modern pages refuse to save correctly even one at a time, and what will be displayed when you open the local HTML, and where it will stuff its cookies, we can only guess.

But in the old days I could take Teleport Pro, download the entire strawberry site, copy it to my phone and calmly go to the dacha! All the pictures would be downloaded, all the links rewritten, and almost everything would work. There were even sites offering already downloaded sites, an indispensable thing for learning in those years, as well as JS search engines working right in the browser!

But what happens if I try to do that today? I'll discover that modern sites are dynamic, that every page has a thousand URLs, and that I can easily download the same three pages 10,000 times, carefully linking them together, and still never reach the page I need even if it was downloaded (it sits behind a path of 50 links that I would have to click through exactly the way the downloader did).

And if you really want it anyway? Then we sit down and write a site parser, pull out the content (with regular expressions or XPath), link it together somehow with homemade scripts, and bolt on an index made of crap and sticks, maybe even the simplest search engine. All this takes anywhere from a day to however long it takes you to get bored. You can simply paste the text into Word, cursing because everything comes in as red Impact and the markup drifts so badly that it's impossible to look at. Or you can turn on screen recording and flip through the pages: a less laborious option, and although such a recording will weigh a lot, these days few people care.

This is where I would write that the ideal browser needs a function for downloading sites, so that I can later easily move the content to a phone or any other standalone device. But given all of the above, alas, that's impossible. If only our content were divided into small manageable elements... But alas. Therefore, on top of everything else, a modern browser should be able not only to parse these very elements, but also to store them in a local database, keep versions of them, and act as a kind of small CMS.
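A rough sketch of that "small CMS" side, using Python's built-in `sqlite3`: every saved element gets a new version number unless its content is unchanged, so older copies stay available offline. The table layout and function names are hypothetical, chosen only for illustration.

```python
import sqlite3


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a local element store. Schema is an assumption."""
    db = sqlite3.connect(path)
    db.execute("""CREATE TABLE IF NOT EXISTS elements (
        url TEXT, version INTEGER, body TEXT,
        PRIMARY KEY (url, version))""")
    return db


def save(db: sqlite3.Connection, url: str, body: str) -> int:
    """Store body as the next version of url, unless it is unchanged.
    Returns the version number that now holds this body."""
    row = db.execute(
        "SELECT version, body FROM elements WHERE url = ? "
        "ORDER BY version DESC LIMIT 1", (url,)).fetchone()
    if row and row[1] == body:
        return row[0]  # nothing new, keep the current version
    version = row[0] + 1 if row else 1
    db.execute("INSERT INTO elements VALUES (?, ?, ?)", (url, version, body))
    db.commit()
    return version


def latest(db: sqlite3.Connection, url: str):
    """Return the newest stored body for url, or None if never saved."""
    row = db.execute(
        "SELECT body FROM elements WHERE url = ? "
        "ORDER BY version DESC LIMIT 1", (url,)).fetchone()
    return row[0] if row else None


db = open_store()
save(db, "/manuals/watering", "Water twice a week.")
save(db, "/manuals/watering", "Water every other day.")
print(latest(db, "/manuals/watering"))  # Water every other day.
```

The same database file could simply be copied to a phone, which is exactly the "take the site to the dacha" scenario.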

And don't think that modern sites are impossible to download in principle. On the contrary, static sites are coming back into fashion, and there are even interesting and popular static site generators such as github.com/jekyll/jekyll. So why not distribute the "source" of the site?

Disclaimer: Teleport Pro is mentioned here only as the best-known site downloader; this is neither advertising nor nostalgia. I personally didn't like it because of its heaps of temporary files and its inability to parse JavaScript correctly. My choice was other, less widely known downloaders such as webzip, which, although they demanded a lot of resources and inserted ads into the pages, downloaded the content correctly and completely.

Media content

Just as small manageable elements get turned into an unmanageable monolith, site authors build primitive tools for viewing media content. Simply put, nearly every site tries to show me video through its own unique web player. The only unique things about it, of course, are the logo and the glitches. Admittedly, I too once boasted that I could write a cool web player in Flash, in just 20 lines! I'm cool, I can do anything! With age, I started asking myself questions:

1. How do I adjust brightness and contrast in this thing? And dynamic normalization?

2. How do I switch to fullscreen? And what if there is no button, because you forgot to draw it?

3. How do I speed up a boring three-hour lecture?

4. How do I adjust the equalizer? The lecturers are barely audible even with the speakers turned all the way up.

5. How do I cut out that clip and send it to a friend?

6. How do I quickly jump back a couple of seconds without aiming the mouse at a tiny progress bar?

7. How do I make it play at more than 15 fps?

Some vendors are already trying to solve this problem of primitive homemade players with only basic features. For example, in Opera you can "pop out" the player from the page and control it separately. There is youtube-dl, which lets you not only download videos from a heap of services, but also get a direct link that you can feed into a proper player, VLC at the very least. There are also Streamlink and mpv; try them, you will most likely like them more than the stock players.

But we can go further by applying all the above principles to multimedia. If something wants to play, we ask the user, then download it, cache it locally, decode it and display it, just like any other browser does. But since we understand that a browser is not a multimedia application and cannot satisfy every need, we can show a button next to the content that launches a proper player with it. Let's trust the professionals and enthusiasts who have spent many hours of their lives on this: people who live for music or video, not those who are forced to bolt a player onto a site for 20 bucks an hour.

To make sure the connection to the video source doesn't drop and the video isn't downloaded twice, we can open a local proxy server, as torrent clients do, repackaging the video stream on the fly, and use it to hand the video to the external application. When a request comes in, part of the data is served from the cache and part is fetched in real time, depending on the application's requests and what the site allows. In the same way, any video or audio can easily be saved as a file, even if it was originally a live broadcast or media generated on the fly by scripts, and files as such never existed. There is no need to dig through the guts of pages for direct links, fight with redirects, or bring out the heavy artillery of screen recording: the browser should serve the user, and nobody should stop the user from getting what he wants. The hardest part here is probably injecting into the Flash process. But Flash is nearing the end of its life, so that code should not need frequent updates.
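External players such as mpv or VLC seek by sending HTTP `Range` requests, so the local proxy mainly has to answer those from its cache. Below is a minimal sketch of only that cache-serving part, assuming the whole stream is already cached as bytes and only single byte ranges are requested; fetching missing pieces from the site in real time is left out. The function names are hypothetical.

```python
import re


def parse_range(header: str, total_size: int):
    """Parse a single HTTP byte range ('bytes=0-499', 'bytes=500-' or the
    suffix form 'bytes=-200') into an inclusive (start, end) pair.
    Returns None if the header is malformed or unsatisfiable."""
    m = re.fullmatch(r"bytes=(\d*)-(\d*)", header.strip())
    if not m or (m.group(1) == "" and m.group(2) == ""):
        return None
    start_s, end_s = m.groups()
    if start_s == "":  # suffix range: the last N bytes
        length = int(end_s)
        if length == 0 or total_size == 0:
            return None
        return (max(0, total_size - length), total_size - 1)
    start = int(start_s)
    end = int(end_s) if end_s else total_size - 1
    if start >= total_size or end < start:
        return None
    return (start, min(end, total_size - 1))


def serve_from_cache(cache: bytes, header):
    """Return (status, body) for a player request against cached bytes:
    200 with everything if there is no Range header, 206 with the slice
    if the range is valid, 416 if it cannot be satisfied."""
    if not header:
        return 200, cache
    rng = parse_range(header, len(cache))
    if rng is None:
        return 416, b""
    start, end = rng
    return 206, cache[start:end + 1]
```

A real proxy would also send `Content-Range` and `Accept-Ranges` headers; the sketch only shows how a seek maps onto cached bytes.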

Filtering inappropriate content

When people hear about filtering inappropriate content, they usually think only of porn or intrusive ads, yet on almost any site you have run into content you would rather not see. Remember crawling through different sites, scrolling through kilometers of search results that all look the same. Or not the same, but have you ever tried to find something of your own in that pile of data? For example, on job or freelance sites you often see that someone needs an essay written, but you don't write essays, just as you don't write JS or PHP, and yet it is often simply impossible to throw all such projects out of the search results, while relying on categories doesn't work because nobody fills them in properly. Or you are reading the news feed, and after the next plane crash there is no escaping the stories about the plane, especially if you had relatives on board and it hurts. Some people got so sick of fashionable catchphrases, or even just trends like fidget spinners or Pokemon, that special browser plug-ins appeared just so they would never have to see them. And who wouldn't like to blacklist some "friends" so as never to see their posts again? And to stop seeing news about the rhino with its brazen advertising, disguised as testing open source programs and free help to the community...

The funny thing is that almost any email client has rich filters for sorting and auto-deleting spam, yet almost no website offers this. If our content were sliced into small manageable elements, we could filter and highlight it so as not to waste time on what we are clearly not interested in. And I wouldn't have to write parsers for certain sites that throw away 90% of the content and hand me the remaining 10% in the format I need. And not 10 items per page, but at least 1000. RSS readers could help, but RSS and Atom are far from everywhere, and they are especially lacking for search results.
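Here is a minimal sketch of such mail-client-style rules applied to items from a site. The rule format (`field`, `contains`, `action`) and the item fields are hypothetical, loosely modeled on how mail filters are usually described; real filters would also need regular expressions, "from" fields and so on.

```python
# Hypothetical rules: hide what I never want to see, highlight what I like.
RULES = [
    {"field": "title", "contains": "essay", "action": "hide"},
    {"field": "tags", "contains": "php", "action": "hide"},
    {"field": "title", "contains": "rust", "action": "highlight"},
]


def matches(item: dict, rule: dict) -> bool:
    """Case-insensitive substring match on one field (lists are joined)."""
    value = item.get(rule["field"], "")
    if isinstance(value, list):
        value = " ".join(value)
    return rule["contains"].lower() in str(value).lower()


def apply_rules(items: list, rules: list) -> list:
    """Drop items hit by any 'hide' rule; mark items hit by 'highlight'."""
    result = []
    for item in items:
        hits = [r["action"] for r in rules if matches(item, r)]
        if "hide" in hits:
            continue
        result.append({**item, "highlight": "highlight" in hits})
    return result


projects = [
    {"title": "Write an essay on history", "tags": []},
    {"title": "Fix a PHP form", "tags": ["php"]},
    {"title": "Port a parser to Rust", "tags": ["rust"]},
    {"title": "C plugin for a browser", "tags": ["c"]},
]
shown = apply_rules(projects, RULES)
```

The site sends all 1000 items; the browser, not the site, decides which of them I get to see.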

Small managed items

So what are these small manageable elements of a site that I mentioned earlier? To make it easier to understand, imagine a static JSON file with some information. Or XML, or an SQLite database, or an XLS file, or a CSV table, or something that hasn't even been invented yet, but is necessarily binary, compressed and nanotechnological... And inside there are small pieces of information. Small because they are indivisible logical units. This can be a link in the navigation bar, a product description snippet, the product itself with all its properties, a user comment, or even a whole article. It can be any separate site widget: a search field, a shopping cart, a login form. Manageable because, unlike a solid monolith, such data can be managed: we can pick out what we need, sort it, display it in direct or reverse order, filter it or decorate it with our own data, creating mashups, which made a lot of noise in their time. Nearly every site has a database managed with SQL. Behind SQL stand relational theory, relational algebra and many, many methods of information management. Below I will show how that information could be managed, and how little, if anything, site authors give us.

For example, I'm looking for cool new work from the demoscene. I go to pouet.net, click Prods, and then... On the one hand, I want only cool works, so I sort them by the number of likes. In first place I see my favorite fr-041: debris, along with other works I have seen more than once. But I want something fresh! So I sort by release date, and now there is only fresh stuff. But which of it is the best? How do I combine two sorts? Or at least select a time range like "the last six months" and only then sort? Alas, I wasn't given the tools for that. But each work could be an element of the array of works in our JSON file, and based on the data schema our browser could draw controls that don't depend on the site's authors, letting us make whatever selection we please.

Another example: we all know that there is simply no search better than Google's. But sometimes it thinks it's so clever that it drops whole phrases from the query, translates them into other languages and shows what it considers more useful. I don't need that. Where is the "stop being clever, I'm in charge" checkbox? It used to be a matter of placing quotes and plus signs correctly; now it lives at bing.com, where a more primitive search kicks in right away, but it searches for exactly what I need, doesn't try to be clever, and doesn't ignore my keywords or the terms of the query. That is, if it finds anything at all; and if it doesn't, it honestly says so instead of making something up. If we were given small manageable elements, we could easily merge the results of both search engines into a single results feed; all we would need is to join two arrays of the same type.
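Joining two arrays of the same type could look like this minimal sketch. It assumes each result is a dict with a `url` field (a hypothetical shape) and interleaves the two ranked lists, taking the first result of each engine, then the second of each, and so on, while dropping URLs that were already seen. Interleaving is just one possible merge policy.

```python
from itertools import chain, zip_longest


def merge_results(*feeds: list) -> list:
    """Interleave ranked result lists and drop duplicate URLs,
    keeping the first (highest-ranked) occurrence of each."""
    seen, merged = set(), []
    for item in chain.from_iterable(zip_longest(*feeds)):
        if item is None or item["url"] in seen:  # None pads shorter feeds
            continue
        seen.add(item["url"])
        merged.append(item)
    return merged


google = [{"url": "a"}, {"url": "b"}, {"url": "c"}]
bing = [{"url": "b"}, {"url": "d"}]
print([r["url"] for r in merge_results(google, bing)])  # ['a', 'b', 'd', 'c']
```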

Very often the search results on individual sites are cluttered with spam, near-duplicate ads, or just badly written descriptions. For example, one blouse can have 20 color variants, and I have to scroll through every one of them with my own eyes. At best, I can exclude some product categories or hide a particular seller's ads, but that is extremely inconvenient, and often this feature simply isn't provided. With small manageable elements, I could simply filter out the careless seller, or even select clothes of the desired color right away.

Back to sorting again. As with the search for beautiful demos, when shopping online there are often several interesting parameters, but you can sort the results by only one of them, if at all. Even the largest marketplaces suffer from this. If they returned raw data, it would be very easy to work with. In practice, you have to open 50 pages, compare product descriptions by hand, toss a coin and hope the purchase goes well. And that's not even counting fraudulent schemes, where a $1 comb is added to the listing as an accessory while the real minimum price is $10. There are more interesting approaches. When I bought my first tablet, I downloaded the descriptions of 15,000 products and parsed them with regular expressions, looking for the keywords I needed; it was very slow,

But let's go back to our strawberries. Or rather, to the site with manuals about growing strawberries.

Let's imagine that the strawberry-growing manual is a resource (still a JSON file, for simplicity) that can be requested separately and contains semantic markup (telling us which pages it relates to and the type of each link). No navigation, no top tips, no comments from other users: only pure content. Well, the tops, comments and tips can of course be somewhere nearby too, but the main thing is that it is not a monolith, so you can precisely identify the data types you need. Of course, ads and scripts will certainly be added here too, but more on that later. For now, let's assume we have pure content, straight from the database (or even from the content editor). This is easy to download, store and index, not to mention how easy it is to cache and deliver. Such elements can be used for pre-caching both on CDNs and in the browser, for building bulk content packages for efficient compression and loading (so as not to fetch every 50-byte button separately), and for versioning and archiving. Such data can be worked with in the browser for a long time without any load on the server, playing with sorts and different selections. The funny thing is that this is exactly how it is all stored in the databases inside CMSs. But on the way out it all goes through a "monolithizer" that stamps the data into monolithic HTML, which is then very hard to work with.

With such data at hand, you can build a lot of interesting features. For example, you can parse forum posts, cache them, and later view deleted posts.

Where are these small items? What already exists?

It's hard to believe, but attempts to separate content from its presentation have been around for quite a while. The first sign was RSS, which does a good job of delivering snippets. Yandex.Market requires stores to upload their catalogs in a special XML format containing prices, pictures, manufacturer data and even delivery information. Other sites have their own upload formats; for example, Google Merchant uses a slightly modified RSS 2.0, but in general these formats can be read and rendered today. If you let yourself dream, there are all sorts of Open Graph tags and microformats, and HTML5 added a lot too, but alas, it is hard to rely on them today. On the other hand, many sites already contain semantic markup, so it would be silly not to read it.

We could exchange pure XML or JSON with a bunch of named, semi-standardized fields. We could even share the databases themselves in SQLite format or generate small extracts in it. The main thing is that it should be clean data, without any code (more on that later).

Where do we get this happiness?

At first, until developers see the advantages of the new way of interacting, we will have to get our happiness ourselves. Simply put, I suggest parsing sites and ripping out the entities we need from them. This can be done with XPath, trendy CSS selectors or good old regular expressions. Yes, every site on the Internet will need its own parser. At first glance this is titanic work with an unachievable result, but is it? Today there are several projects that specialize in parsing sites. Some, such as Octoparse, require almost no knowledge: the necessary blocks are selected with the mouse, and the plan for "crawling" the site is also put together with the mouse. This means the entry threshold for such "programming" will be extremely low; even a housewife, if she wants to, will be able to make her own parser. If a parser fails or stops working, the browser will simply display the page as it is until someone writes a new parser.
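Here is a minimal sketch of what such a per-site parser description might look like: one XPath selecting each item and a sub-path for each field. It uses the limited XPath subset of Python's built-in `xml.etree.ElementTree`, which only works on well-formed markup, so it assumes a clean XHTML page; real-world HTML would need a tolerant parser such as lxml. The `SITE_RULES` format is hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical parser description for one site.
SITE_RULES = {
    "item": ".//div[@class='tip']",
    "fields": {"title": "h2", "text": "p"},
}


def extract(xhtml: str, rules: dict) -> list:
    """Turn a page into a list of small items according to the rules.
    Missing fields become None instead of failing the whole page."""
    root = ET.fromstring(xhtml)
    items = []
    for node in root.iterfind(rules["item"]):
        item = {}
        for name, path in rules["fields"].items():
            found = node.find(path)
            item[name] = ("".join(found.itertext()).strip()
                          if found is not None else None)
        items.append(item)
    return items


page = """<html><body>
  <div class="ad">Buy now!</div>
  <div class="tip"><h2>Mulching</h2><p>Use straw under the berries.</p></div>
  <div class="tip"><h2>Watering</h2><p>Water at the roots.</p></div>
</body></html>"""

print(extract(page, SITE_RULES))
```

The ad block never makes it into the result, because no rule asks for it.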

There are also closer projects that already work today, for example the Instant View feature in Telegram. People have already written many parsers that handle well-known sites and extract only the pure content. When someone posts a link to such a site in Telegram, the cherished Instant View button appears. If you tap it, only the pure content arrives, without ads and other garbage. Downloading it costs only a few kilobytes of traffic and memory, not megabytes of traffic and gigabytes of memory as it would in a browser. Such a small amount of data loads instantly, hence the name of the feature: Instant View. If a parser breaks, there is a bug tracker and a community ready to write a new one, with a handy editor to help. So if someone cannot believe that such an idea is possible,

True, our task will be a bit harder, because besides the text of articles we also need to display feeds of articles and navigate the sections of a site (articles, a forum and a store can't all be crammed into a single feed). We need not just to pull what we need out of the page, but also to decide which tables to split it into. For example, I really like reading comments, and if only the main news item or article is extracted, the resource is worth less to me. I used to watch YouTube through SkyTube and found many new and interesting comments there, but when I switched to NewPipe, I was left without them. Parser collections like youtube-dl suffer from the same problem. How to sort the extracted content onto the right shelves is a big question; not every housewife can design a database structure. An even bigger question is how to navigate this content. What is the main part, and what is secondary? A few years ago I wrote a universal parser with heuristics, and it cut out the main content, leaving only the comments, because it decided the comments were the main thing.

It is even harder to understand what to do with the extracted data and how to display it. Only existing approaches come to mind here: HTML templates, PHP and SQL. And if browsers are already being built on NodeJS, why not add PHP to the browser as one of its features? I'm not a fan of this language, but its entry threshold is minimal, and where simple templates aren't enough, people will be able to program in it (or in any other language, see below). This kind of page generation reminds me of the ancient Aport Express, a small program from the Aport search engine that rendered search results from templates directly on the client, reducing traffic over the dial-up connections of the time. If you want to dive into the history, you can read about it at web.archive.org/web/20010124043000/http://www2.aport.ru:80/aexpress/ and download it at web.archive.org/web/20040627182348/http://www.romangranovsky.narod.ru:80/aexpress.exe
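In the spirit of Aport Express, the simplest version of "the site sends data, the browser renders it" is a template filled on the client. A minimal sketch using Python's standard `string.Template` (standing in here for whatever templating the browser would actually offer); the templates and field names are hypothetical. Every value is HTML-escaped, since the data comes from a third-party site.

```python
from html import escape
from string import Template

# Hypothetical user-side templates: the user, not the site, owns the look.
ITEM = Template('<li><a href="$url">$title</a> <small>$date</small></li>')
PAGE = Template("<ul>\n$items\n</ul>")


def render(results: list) -> str:
    """Render a list of result dicts into an HTML list, escaping values."""
    rows = "\n".join(
        ITEM.substitute({k: escape(str(v)) for k, v in r.items()})
        for r in results
    )
    return PAGE.substitute(items=rows)


results = [
    {"url": "/tips/1", "title": "Mulching & straw", "date": "2019-05-01"},
]
print(render(results))
```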

Banners and trackers

No, advertising itself doesn't annoy me: over my years online I have developed banner blindness, which means I simply don't see blocks in "prominent places", or blocks written in some non-standard font or in capital letters. Sometimes it gets ridiculous: I spend a long time looking for the "register", "download" or "new topic" buttons, because they are made large and visible, and I just don't notice them. Sometimes not until I receive a screenshot with the button circled. And it's not about traffic or speed. Today it is a security issue, because, first of all, banner ads are executable code. That means not only leaking personal data for so-called "targeting" and tracking of everything, but in effect a security hole through which someone can slip in malware or just a miner. Earlier you could say "don't visit porn sites and everything will be fine"; now a "porn site" is built into almost every website, every page. But trackers cause me particular pain, especially active ones that run on the page all the time. Yandex.Metrica is an example of such a nasty thing: it slowed everything down unbearably. I blocked all Yandex domains and my life filled with happiness, as websites suddenly stopped lagging and I even stopped thinking about upgrading my hardware. Blocking Yandex domains is the first thing I do when I set up a system for someone. People lose absolutely nothing, but browsing gets an order of magnitude faster.

The solution is very simple: the ability to specify "friendly domains" for a site and block requests to everything else. That way you can cut out ads with RequestPolicy or an analogue, which, unlike AdBlock-style cutters, works on almost every site, needs no subscriptions, and helps even if the site has been hacked and a pile of malicious code has been planted on it.
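The core check behind such a "friendly domains" policy fits in a few lines. A minimal sketch, assuming a hypothetical per-site table keyed by the exact host name of the page (a simplification: a real policy would also handle `www.` variants and ports). A request is allowed only to the page's own host, its subdomains, or the domains the user approved for that site.

```python
from urllib.parse import urlsplit


def host_matches(host: str, domain: str) -> bool:
    """True if host is the domain itself or one of its subdomains."""
    return host == domain or host.endswith("." + domain)


def allow_request(page_url: str, request_url: str, friendly: dict) -> bool:
    """Allow requests to the page's own domain and the site's
    user-approved friendly domains; block everything else."""
    page_host = urlsplit(page_url).hostname or ""
    req_host = urlsplit(request_url).hostname or ""
    allowed = friendly.get(page_host, set()) | {page_host}
    return any(host_matches(req_host, d) for d in allowed)


# Hypothetical user settings: example.com may also load from its CDN.
FRIENDLY = {"example.com": {"examplecdn.net"}}
```

Note the leading dot in the suffix check: without it, `notexamplecdn.net` would slip through as if it were `examplecdn.net`.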

Do I hear cries that cutting ads reduces authors' income? Don't forget that nobody has paid for mere banner impressions for a long time. Showing ads to me is pointless: it only wastes my traffic and the server's, and won't bring the authors a penny. It can only make me angry. At one time, I even wrote a set of utilities for especially "gifted" sites.

If you have resigned yourself to the collection and leakage of personal data, but are angry enough, I would recommend installing something like the wonderful AdNauseam extension, probably the only ad-blocking extension ever banned by Google. The idea behind it is very simple: it clicks every blocked element in hidden mode, i.e. without showing anything to the user. Advertisers get their long-awaited clicks, everything they wanted. And since every banner gets clicked, the leaked personal data is mixed with a pile of garbage that doesn't match the user's real preferences. Targeting and surveillance become useless. A very good extension, and a brilliant idea.

Advertising (Target Profile)

Condemn? Propose! Yes, I condemn the practice of collecting targeting data, especially through surveillance and similar techniques that are bad (for me). Why not specify targeting data directly in the browser? I'll tell you everything about myself, no surveillance or viruses needed:

Gender: male

Age: 55 years old

Education: secondary vocational

Interests: fisting, bdsm, shemale, chastity devices, gasmask breath control

Residence: Klyuchi (a settlement) in Ust-Kamchatsky District of Kamchatka Krai

Last store receipt: 28 rubles (a loaf of bread)

Financial status: no money, I live on benefits and my own garden

Attitude to freemium products: I write negative reviews about them and give them the lowest ratings

Social network profiles: none, and there never will be

Credit card: none, and there never will be

Looking forward to offers that I can actually use, given my profile.

I understand perfectly well that publishers need to earn money somehow and survive in these difficult times while buying their own plane or villa, but they also need to understand users who are annoyed by ads for things that are out of their reach anyway. I also understand very well that not all advertising can be cut, so I am in favor of targeted advertising, for which a profile is easy to provide. And no banners.

I would also like a user rating mechanism for ads that slip through. For example, I open a video on YouTube, on the channel of my beloved Kreosan, and at some point Kreosan himself starts talking about some casino. I would gladly select the segment with the ad and submit it as "advertising", so that other users could easily skip it later. Sometimes ads are found in the text of articles themselves, and some articles are entirely disguised advertising. Reading such articles is very unpleasant for me, so I would gladly mark them as "advertising".
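On the player side, skipping such user-marked segments is simple once the segments exist. A minimal sketch, assuming the community's marks have already been collected and agreed on (the voting part is not shown) and arrive as hypothetical `(start, end)` pairs in seconds. Segments may overlap, so the jump is repeated until playback lands outside all of them.

```python
def next_position(t: float, segments: list) -> float:
    """If playback time t falls inside a marked ad segment [start, end),
    return where playback should continue; otherwise return t.
    Repeats because the end of one segment may lie inside another."""
    moved = True
    while moved:
        moved = False
        for start, end in segments:
            if start <= t < end:
                t = end  # t strictly increases, so the loop terminates
                moved = True
    return t


ads = [(60, 95), (90, 120), (300, 330)]
print(next_position(70, ads))  # 120: jumps past two overlapping segments
```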

Native proxy / VPN support

Unfortunately, some stupid people decide for me whether I can use this or that service, and they do it based on which country I was born or live in. And not only websites (a sad look toward Google Play). For example, I can use Spotify only if I live in the USA, but I can use the Advcash service only if I don't live in the USA. Of course, if you were unlucky at birth, you don't have to vegetate in a backward country; in theory you can move to the right one, but I don't know how to live in two countries at the same time. Solution: a built-in VPN mechanism that can be configured separately for each site. For some sites I will be German, for others American, and I will make purchases from whichever country gets lower prices.

Why not just buy a regular VPN and use that, why drag all of this into the browser? Because only the browser can tell one site from another and keep each tab separate. If we route all traffic through a system VPN, we will have to switch it constantly, and sometimes run into disconnects and bans when we forget to.
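Per-site routing is where the browser has the advantage, since it knows which site every request belongs to. A minimal sketch, using a per-site HTTP proxy as a stand-in for a per-site VPN. The routing table, domains and proxy addresses are hypothetical placeholders; the opener comes from Python's standard `urllib.request`.

```python
import urllib.request
from urllib.parse import urlsplit

# Hypothetical routing table: which exit each site should see.
ROUTES = {
    "music.example": "http://us-proxy.local:8080",
    "shop.example": "http://de-proxy.local:8080",
}


def proxy_for(url: str, routes: dict):
    """Return the proxy for this URL's site, preferring the most specific
    matching domain, or None for a direct connection."""
    host = urlsplit(url).hostname or ""
    for domain in sorted(routes, key=len, reverse=True):
        if host == domain or host.endswith("." + domain):
            return routes[domain]
    return None


def opener_for(url: str, routes: dict) -> urllib.request.OpenerDirector:
    """Build an opener that sends this site's traffic through its proxy.
    An empty ProxyHandler mapping means: no proxy, connect directly."""
    proxy = proxy_for(url, routes)
    handler = urllib.request.ProxyHandler(
        {"http": proxy, "https": proxy} if proxy else {})
    return urllib.request.build_opener(handler)
```

Each tab would get its own opener, so switching countries never requires touching the system-wide connection.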

Plugins

Sometimes content is sliced into tiles, for example satellite maps or photos. In principle, you can pull the tiles out of the browser today, but then what? Viewing them as separate tiles is inconvenient. Glue them together? With what, and how? Of course, I can write a brute-force tool that compares tile edges and looks for seamless matches, but it can make mistakes; whereas if the browser additionally stores in its cache where each tile was located relative to the others, gluing will be quick and error-free! And on top of that, convenient export of tiles directly from the cache or the current page could be added!

Also, I don't want to invent and type logins and passwords on every site; instead I would like to specify some random seed from which both the login and the password for a specific site would be generated. For example, I specify the string "soMeRanDOooo0MStr11nng", and when I visit example.com these two strings are concatenated into a UID from which anything can be generated, including logins and passwords (and better still, the rest of the personal information, so that I can register in one click without wondering what names besides Sergey exist and without using fakenamegenerator). Plus the ability to share these passwords on bugmenot. By the way, Safari already has such a generator!
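Here is a minimal sketch of such a seed-based generator. Instead of plain concatenation, it derives a per-site key with HMAC-SHA256 from Python's standard `hmac` and `hashlib` modules, which is a common way to combine a secret with public data; the `derive` function and the "purpose" labels are hypothetical. It is an illustration, not a vetted password manager: a real one would stretch the seed with a slow KDF (for example `hashlib.scrypt`), and the simple modulo mapping below is slightly biased toward some characters.

```python
import hashlib
import hmac
import string

ALPHABET = string.ascii_letters + string.digits


def site_key(seed: str, domain: str) -> bytes:
    """Derive a per-site key from the master seed and the domain."""
    return hmac.new(seed.encode(), domain.lower().encode(),
                    hashlib.sha256).digest()


def derive(seed: str, domain: str, purpose: str, length: int) -> str:
    """Deterministically map (seed, domain, purpose) to a string of
    `length` characters from ALPHABET."""
    key = site_key(seed, domain)
    stream = hmac.new(key, purpose.encode(), hashlib.sha256).digest()
    counter = 1
    while len(stream) < length:  # extend if one digest is not enough
        stream += hmac.new(key, purpose.encode() + bytes([counter]),
                           hashlib.sha256).digest()
        counter += 1
    return "".join(ALPHABET[b % len(ALPHABET)] for b in stream[:length])


SEED = "soMeRanDOooo0MStr11nng"
login = derive(SEED, "example.com", "login", 10)
password = derive(SEED, "example.com", "password", 20)
```

Nothing needs to be stored: the same seed and domain always give the same login and password, and a different domain gives unrelated ones.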

In other words, the browser should provide a flexible plugin mechanism. Moreover, plugins should live inside the browser, so that they can reach almost every part of it, rather than being JS injected after a page loads or buttons on the toolbar. Of course, I want to write plugins in C; compromises on page-processing speed are simply unacceptable.

Browser parts as services

Almost every browser has a utility for downloading files. This thing with a clunky interface downloads files into some obscure directory, can't resume, and then announces that viruses were found inside the file. But it exists, and most importantly, it is part of the browser, which means it uses cookies and other session attributes. So after logging in to a site, we no longer need to dig out cookies to pass them to wget or curl. The browser itself can act as such a utility, fully supporting the current session. This means we can develop both the network subsystem and such a homemade curl from a single code base, loosely coupled to the main browser code, but more on that later.

Almost every browser also has a built-in primitive file lister that can display the contents of local directories. It does this crookedly, but that is often much better than nothing at all. And the old Opera could share files between users and even had an application with a Fridge you could draw on. Yes, those guys really were building the future. And were a little ahead of it.

The browser could include an email client that would be nice to use from the command line, keeping a detailed history. This would make it possible to automate many tasks, from debugging spam to sending reminders. Reminders could also come from the integrated RSS service.

Partial browser

Writing a whole browser is quite a challenge. Moreover, many things, such as a file downloader, an RSS reader or an email client, don't even come to mind when people hear the word "browser". At a minimum, these applications can be written separately, perhaps as full-fledged applications, perhaps as wrappers around existing ones, and perhaps even as temporary solutions of a couple of hundred lines in a scripting language.

Networking can also be moved into a separate daemon. Next to it you can put a DNS resolver with a built-in domain blacklist that updates automatically, a content caching subsystem, and a whole lot more. Even rendering can be moved into a separate process, as was done in Opera Mini (and since that code doesn't go directly into the project but is a third-party plug-in, it could even be built from leaked sources while keeping the project's license clean). github.com/browsh-org/browsh renders pages with a real engine somewhere in the background and sends you the result as text and text pseudographics; it looks very cool, and you can even watch video.

At first, all of this can be implemented as independent microservices: one developer can write in Java, another in Python, a third in Ruby, and they won't have to quarrel over the technology stack. After all, everyone knows the situation: someone can't imagine a browser in Java because it's slow, someone is afraid of C because of vulnerabilities, and someone wants to try trendy Go and campaign for it. Here everyone can choose a small part for themselves and be strictly responsible for it; all that's needed is to agree on a communication protocol. And if some parts work poorly, they will eventually simply be replaced. Or existing solutions can be adapted for closer integration, as was done in the Arachne browser.

Even the renderer can run in a separate process and transmit only the information needed for display. At first you can simply take existing code from w3m, links or NetSurf, and later those who wish can add switchable modes based on Gecko, Servo or Blink.

Of course, a huge number of plug-ins will have to be written: bookmarks, including ones synchronized via the cloud or recommendation services, multi-level tabs with previews, form autofill based on neural networks, whatever your heart desires. Maybe someone has the source code of a multi-threaded file downloader at hand (or has seen one on GitHub) and can start porting it to the new platform right now?

And of course, we can follow the old principle here: let each program do one thing and do it well. A browser is a very complex set of programs that work with the network, hence the complexity of the whole system. So maybe we should simply split our browser into as many parts as possible, ensuring the quality and reliability of each of them?

Plugins as guaranteed features

Some of the plug-ins can be included in the default installation: for example, plug-ins for tabs, file downloads, an address bar with autofill and all the bells and whistles, and similar things that any browser has. But I suggest going a little further and including a bit more in the default distribution. Of course, this is a slippery slope that can lead us to bloatware, but in my opinion we should not be afraid to experiment (though not the way Mozilla does, bundling leaky extensions from various affiliate programs with no way to disable them).

For example, do you remember the obscure Discuss button in IE6? It appeared after installing MS Office and almost never worked, because it needed a SharePoint server. But the idea was great: clicking it opened a toolbar through which you could add comments to the page, there was also some kind of tree-structured discussion (I don't remember it all that well, and I couldn't find it by googling), and it worked with any page. Just imagine: comments on any site, without moderation by the site's authors, where you can boldly say everything you think about any site straight to its face. I believe such a plug-in simply has to be bundled with our browser.
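A Discuss-style plug-in needs at least two things: a rule for deciding which comments belong to which page, and storage for threaded replies. Below is a minimal in-memory sketch under those assumptions. The normalization rules (lowercase scheme and host, drop default ports, fragments and utm_* tracking parameters, sort the query) are illustrative choices, and a real service would keep comments on a server rather than in a Python dict.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

DEFAULT_PORTS = {"http": 80, "https": 443}


def thread_key(url: str) -> str:
    """Normalize a page URL so trivially different links share one comment thread."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    host = (parts.hostname or "").lower()
    if parts.port is not None and parts.port != DEFAULT_PORTS.get(scheme):
        host = f"{host}:{parts.port}"  # keep only non-default ports
    # Drop utm_* tracking parameters and sort the rest for a stable key
    query = sorted((k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
                   if not k.startswith("utm_"))
    # The fragment ("#...") is deliberately discarded
    return urlunsplit((scheme, host, parts.path or "/", urlencode(query), ""))


@dataclass
class Comment:
    id: int
    author: str
    text: str
    parent_id: Optional[int] = None  # None for top-level comments


class CommentStore:
    """Hypothetical in-memory store of threaded comments keyed by page."""

    def __init__(self) -> None:
        self._threads = defaultdict(list)
        self._next_id = 1

    def add(self, url: str, author: str, text: str,
            parent_id: Optional[int] = None) -> Comment:
        comment = Comment(self._next_id, author, text, parent_id)
        self._next_id += 1
        self._threads[thread_key(url)].append(comment)
        return comment

    def thread(self, url: str) -> list:
        """Return (depth, comment) pairs in depth-first order for display as a tree."""
        children = defaultdict(list)
        for c in self._threads.get(thread_key(url), []):
            children[c.parent_id].append(c)
        result = []

        def walk(parent: Optional[int], depth: int) -> None:
            for c in children[parent]:
                result.append((depth, c))
                walk(c.id, depth + 1)

        walk(None, 0)
        return result
```

Because the key ignores tracking parameters and fragments, a comment left via a link from a newsletter and one left from a bookmark end up in the same thread.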

Another example: many sites embed a Google Maps or Yandex Maps widget on their “location map” page, and this is considered good practice. No one even raises privacy questions, let alone asks users whether they want a third-party organization to know which places in the city they are interested in. Such elements can be cut out and replaced with OpenStreetMap maps, or even with maps from a local store. Nothing stops you from downloading an OSM dump of your region and rendering the maps locally; a gigabyte or two on disk means almost nothing today.
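If maps are served from a local store, a common layout is the OpenStreetMap-style tree of z/x/y.png tiles, and a coordinate is mapped to a tile with the standard "slippy map" formula. A sketch, where the tile root directory is a hypothetical path and detecting the embedded third-party widgets is left out:

```python
import math


def deg2tile(lat_deg: float, lon_deg: float, zoom: int) -> tuple[int, int]:
    """Convert WGS84 latitude/longitude to slippy-map tile indices at a zoom level."""
    n = 2 ** zoom  # number of tiles along each axis at this zoom
    lat_rad = math.radians(lat_deg)
    x = int((lon_deg + 180.0) / 360.0 * n)
    y = int((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    # Clamp to valid indices (lon = 180 or |lat| beyond ~85.05 degrees fall outside)
    x = min(max(x, 0), n - 1)
    y = min(max(y, 0), n - 1)
    return x, y


def local_tile_path(root: str, zoom: int, x: int, y: int) -> str:
    """Path of a pre-rendered tile in a local z/x/y.png tile store."""
    return f"{root}/{zoom}/{x}/{y}.png"


if __name__ == "__main__":
    # Prints /var/lib/tiles/1/1/1.png
    print(local_tile_path("/var/lib/tiles", 1, *deg2tile(0.0, 0.0, 1)))
```

A map plug-in would take the coordinates from the page's embedded widget, compute the tiles covering the visible area, and read them from disk instead of contacting the original map provider.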

Results

Here is my vision of the perfect browser. Of course, not everything has been covered: replication, multi-level forms and protection of user data are not touched upon, and there is nothing about a business model or ways to attract sponsors for the project. And sponsors are needed, because few people will do this amount of work for free and still deliver something usable. Nor have I described how to protect the project from the sponsor's interests, or we could end up with yet another Firefox, with telemetry plug-ins that report that telemetry has been disabled.

But at this stage, the most important thing in creating the perfect browser is people. Write your ideas and thoughts, and if you can help the project with code or layouts, feel free to offer your help. Criticism of the ideas above is especially welcome. Perhaps, if not me personally, then someone who has read this text will be able to write a good browser. I started writing this article a year ago as a response to some other posts and was going to organize crowdfunding, but everyday life got in the way, so I am publishing it as is, for other people to think over.

This text is released into the public domain, and you can freely distribute it anywhere. Perhaps in this way we (people in general) will get a browser that is at least a little more convenient to use.