Genetic Algorithm - A Visual Implementation

About four years ago, at the university I heard about such an optimization method as the genetic algorithm. About him everywhere exactly two facts were reported: he is cool and he does not work. Rather, it works, but slowly, unreliably, and nowhere to use it. But he can beautifully demonstrate the mechanisms of evolution. In this article I will show you a beautiful way to look at the processes of evolution live on the example of this simple method. You need only a little math, programming and all this seasoned with imagination.

So what is a genetic algorithm? This is, first of all, a method of multidimensional optimization, i.e. method for finding the minimum of a multidimensional function. Potentially, this method can be used for global optimization, but there are difficulties with this, I will describe them later.

The very essence of the method is that we modulate the evolutionary process: we have some kind of population (a set of vectors) that multiplies, is affected by mutations, and natural selection is made on the basis of minimizing the objective function. Let us consider these processes in more detail.

So, first of all, our population must multiply . The basic principle of reproduction is that the offspring is similar to its parents. Those. we must ask some kind of inheritance mechanism. And it would be better if it included an element of randomness. But the rate of development of such systems is very low - the genetic diversity is falling, the population is degenerating. Those. the value of the function ceases to be minimized.

To solve this problem, a mutation mechanism was introduced , which consists in the random change of some individuals. This mechanism allows you to bring something new to genetic diversity.

The next important mechanism is selection.. As it was said, selection is the selection of individuals (it is possible from only those born, but it is possible from all - practice shows that this does not play a decisive role) that better minimize function. Usually, as many individuals are selected as there were before breeding, so that from era to era we have a constant number of individuals in the population. It is also customary to select the “lucky ones” - a certain number of individuals who, perhaps, poorly minimize the function, but will bring diversity to subsequent generations.

These three mechanisms are often not enough to minimize function. So the population degenerates - sooner or later the local minimum clogs the entire population with its value. When this happens, carry out a process called shake(in the nature of analogy, global cataclysms), when almost the entire population is destroyed, and new (random) individuals are added.

Here is a description of the classical genetic algorithm, it is easy to implement and there is room for imagination and research.

So, when I already decided that I want to try to implement this legendary (albeit unlucky) algorithm, it came to the conclusion that what would I minimize? Usually they take some terrible multidimensional function with sines, cosines, etc. But this is not very interesting and not at all clear. One simple idea came - an image where the value is responsible for brightness is excellent for displaying a multidimensional vector. Thus, we can introduce a simple function - the distance to our target image, measured in the difference in pixel brightness. For simplicity and speed, I took images with a brightness of 0, or 255.

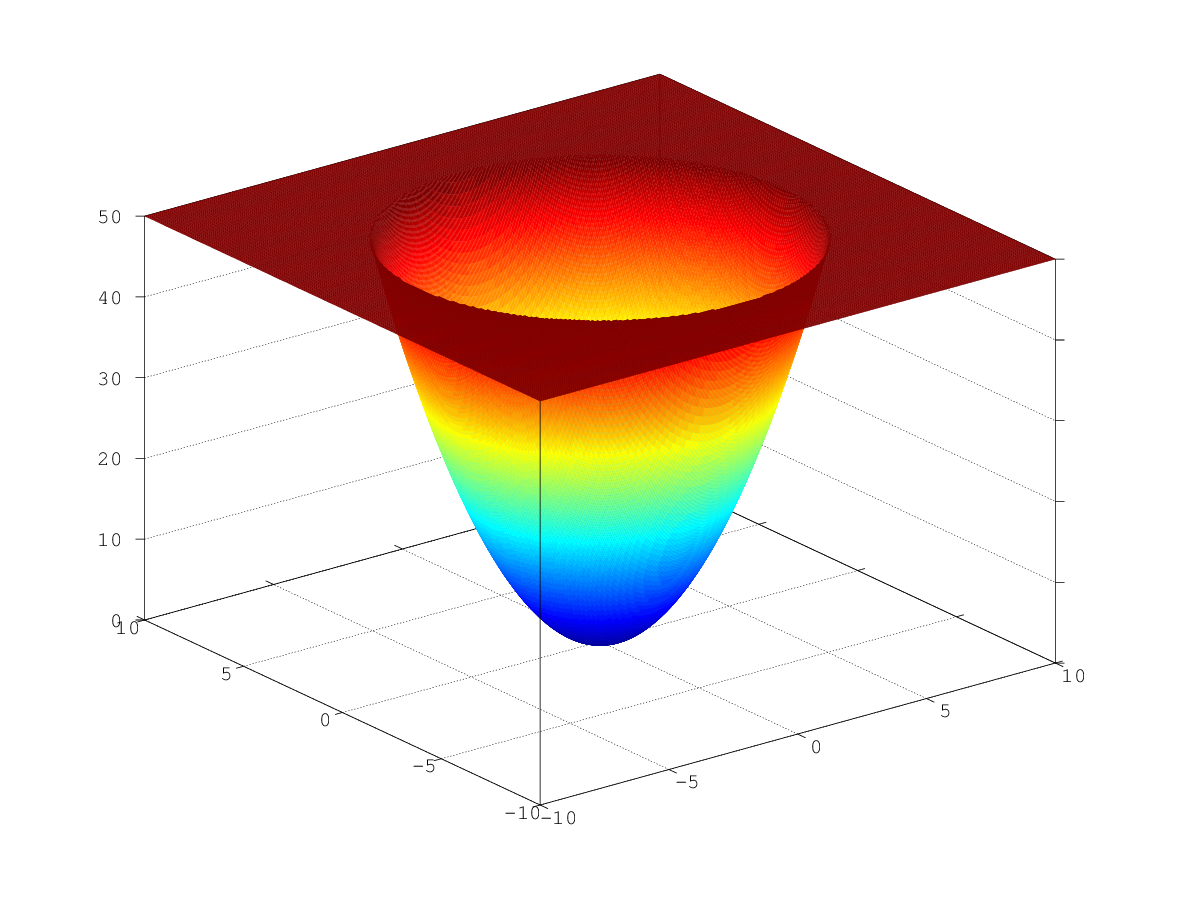

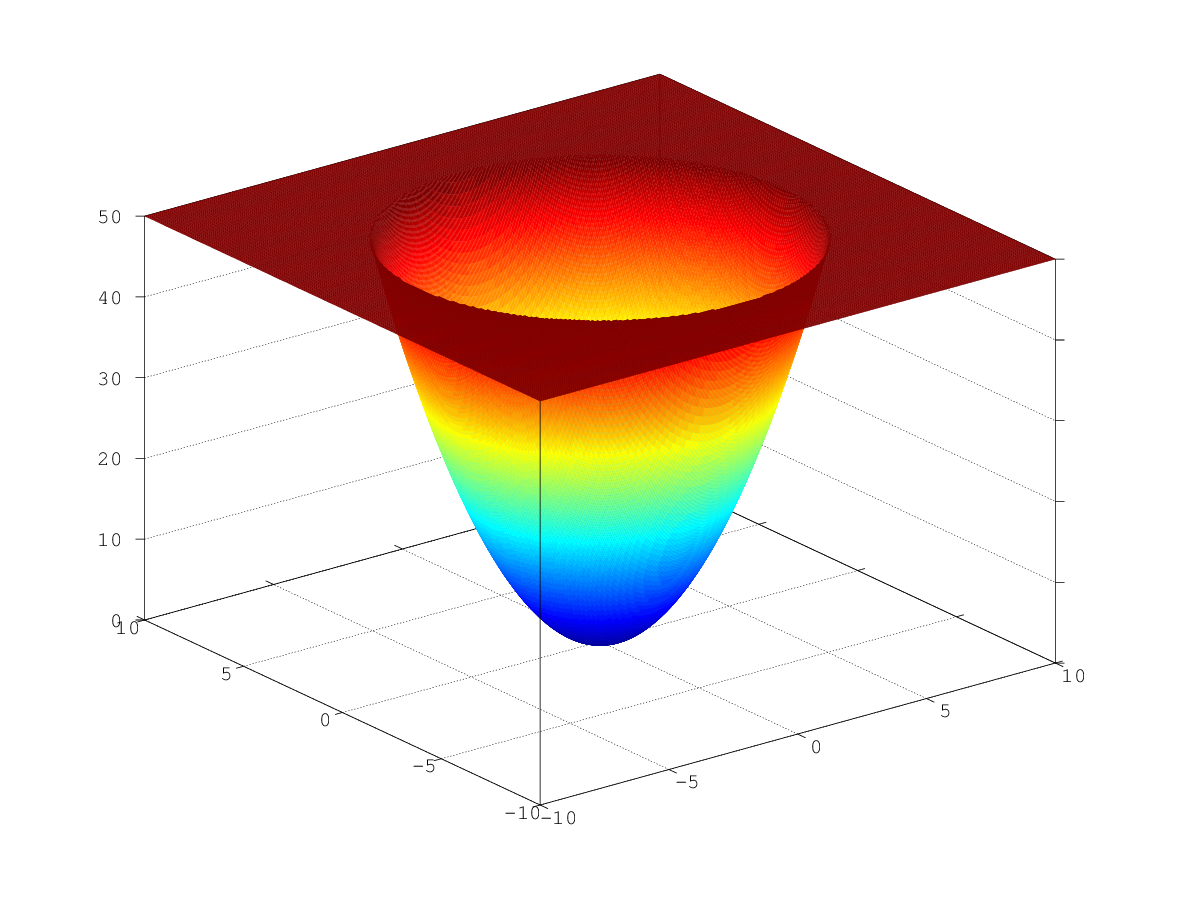

From the point of view of mathematics, such optimization is a mere trifle. The graph of such a function is a huge multidimensional “pit” (like the three-dimensional parabaloid in the figure), which you will inevitably slide into if you follow the gradient. The only local minimum is global. .

.

The only problem is that the number of paths along which you can go down is already greatly reduced, and in total we have as many directions as measurements (i.e. the number of pixels). Obviously, solving this problem using the genetic algorithm is not worth it, but we can look at the interesting processes taking place in our population.

All the mechanisms described in the first paragraph were implemented. Reproduction was carried out by simply crossing random pixels from the “mother” and “father”. Mutations were made by changing the value of a random pixel in a random individual to the opposite. A shake was made if the minimum does not change over five steps. Then an “extreme mutation” is made - replacement occurs more intensively than usual.

I took nonograms ("Japanese scanwords") as the source pictures, but, in truth, you can take just black squares - there is absolutely no difference. Below are the results for multiple images. Here, for all but the “house”, the number of mutations was 100 on average per individual, there were 100 individuals in the population, and when propagated, the population increased 4 times. Lucky were 30% in each era. For the house, smaller values were chosen (30 individuals in the population, mutations of 50 per individual).

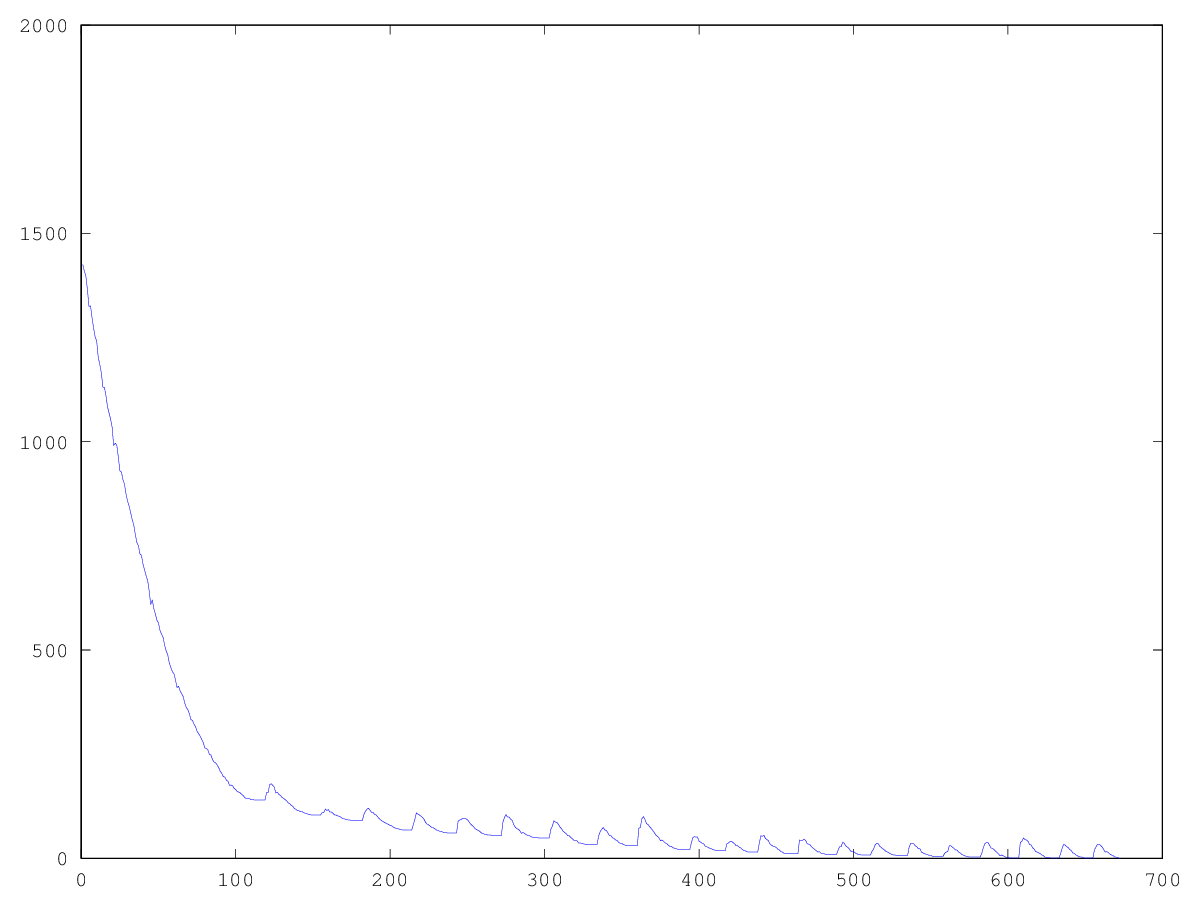

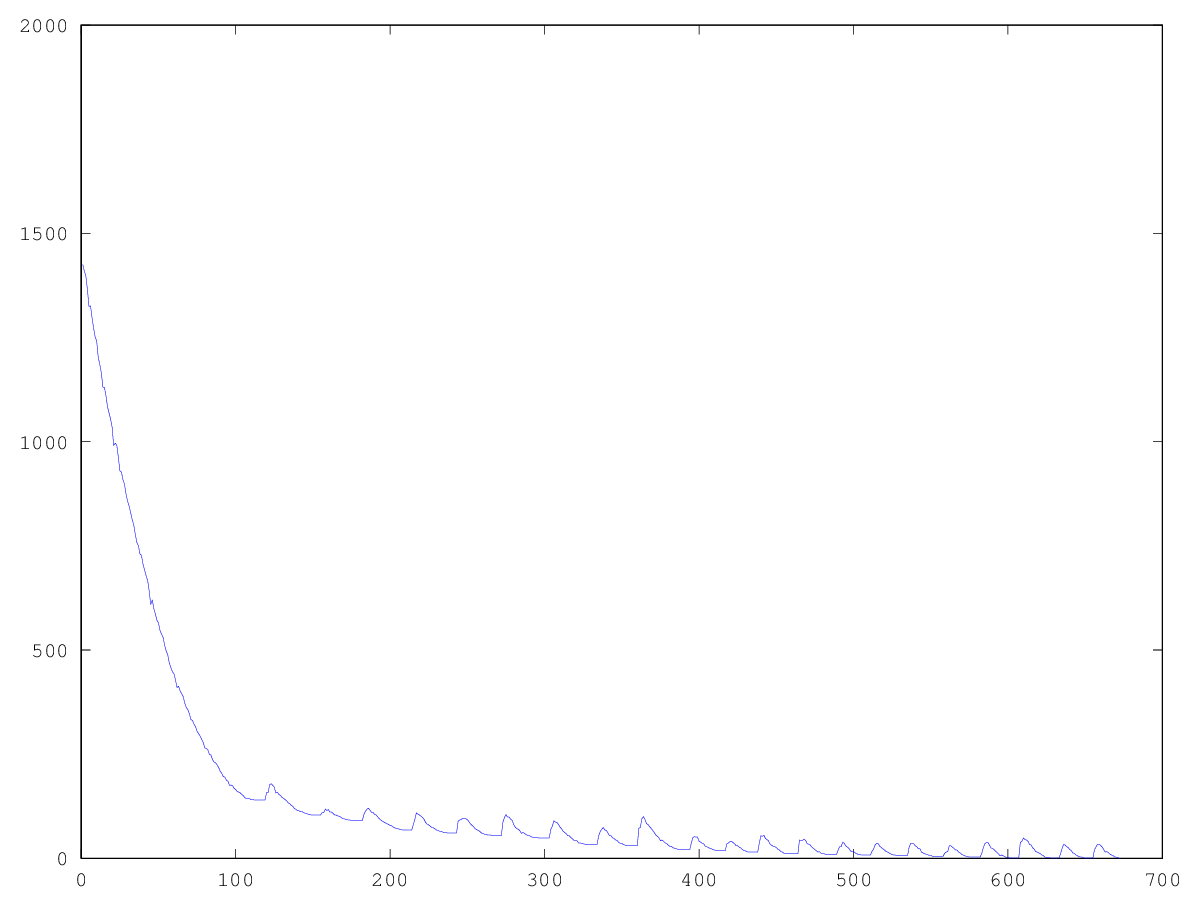

I experimentally found that the use of “lucky ones” in breeding reduces the population’s desire to minimize, but it helps to get out of stagnation - without “lucky ones” stagnation will be constant. What can be seen from the graphs: the left graph shows the development of the “pharaoh” population with the lucky ones, the right one - without the lucky ones.

Thus, we see that this algorithm allows us to solve the problem, albeit in a very long time. Too many shakes, in the case of large images, can solve a larger number of individuals in a population. I leave the optimal selection of parameters for different dimensions beyond the scope of this post.

As was said, local optimization is a rather trivial task, even for multidimensional cases. It is much more interesting to see how the algorithm will cope with global optimization. But for this you need to first build a function with many local minima. And this is not so difficult in our case. It is enough to take a minimum from distances to several images (house, dinosaur, fish, boat). Then the initial algorithm will “slip” into some random hole. And you can just run it several times.

But there is a more interesting solution to this problem: you can understand that we have slipped into a local minimum, make a strong shake (or even initiate individuals anew), and add fines in the future when approaching a known minimum. As you can see, the pictures alternate. I note that we do not have the right to touch the original function. But we can remember local minimums and add penalties on our own.

This picture shows the result when, when a local minimum is reached (strong stagnation), the population simply dies.

Here the population dies out and a small fine is added (in the amount of the usual distance to a known minimum). This greatly reduces the likelihood of repetitions.

It is more interesting when the population does not die out, but simply begins to adjust to new conditions (next figure). This is achieved by a fine of 0.000001 * sum ^ 4. In this case, the new images become a bit noisy:

This noise is eliminated by limiting the fine to max (0.000001 * sum ^ 4, 20). But we see that the fourth local minimum (dinosaur) cannot be reached - most likely because it is too close to some other one.

What conclusions can we draw from, I'm not afraid of this word, simulation? First of all, we see sexual reproduction - the most important engine of development and adaptability. But only it is not enough. The role of random, small changes is extremely important. They provide the emergence of new species of animals in the process of evolution, and we provide a diversity of the population.

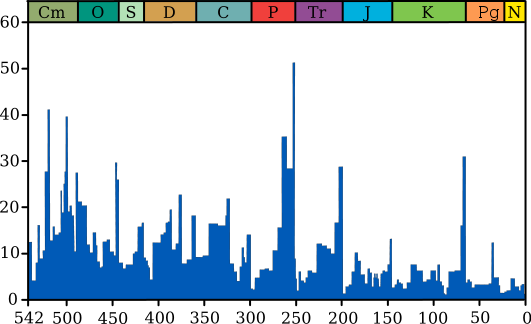

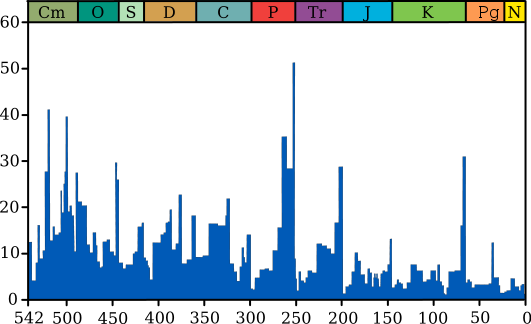

The most important role in the evolution of the Earth was played by natural disasters and mass extinctions (extinctions of dinosaurs, insects, etc. - there were about ten large ones - see the diagram below). This was confirmed by our modeling. And the selection of the “lucky ones” showed that the weakest organisms today are capable of becoming the basis for future generations in the future.

As they say, everything is as in life. This do-it-yourself evolution method clearly shows interesting mechanisms and their role in development. Of course, there are many more worthwhile evolutionary models (based, of course, on difura) that take into account more factors that are closer to life. Of course, there are more effective optimization methods.

I wrote the program in Matlab (or rather, even on Octave), because here everything is a golem matrix, and there are tools for working with pictures. Source code is attached.

Briefly about the algorithm

So what is a genetic algorithm? This is, first of all, a method of multidimensional optimization, i.e. method for finding the minimum of a multidimensional function. Potentially, this method can be used for global optimization, but there are difficulties with this, I will describe them later.

The very essence of the method is that we modulate the evolutionary process: we have some kind of population (a set of vectors) that multiplies, is affected by mutations, and natural selection is made on the basis of minimizing the objective function. Let us consider these processes in more detail.

So, first of all, our population must multiply . The basic principle of reproduction is that the offspring is similar to its parents. Those. we must ask some kind of inheritance mechanism. And it would be better if it included an element of randomness. But the rate of development of such systems is very low - the genetic diversity is falling, the population is degenerating. Those. the value of the function ceases to be minimized.

To solve this problem, a mutation mechanism was introduced , which consists in the random change of some individuals. This mechanism allows you to bring something new to genetic diversity.

The next important mechanism is selection.. As it was said, selection is the selection of individuals (it is possible from only those born, but it is possible from all - practice shows that this does not play a decisive role) that better minimize function. Usually, as many individuals are selected as there were before breeding, so that from era to era we have a constant number of individuals in the population. It is also customary to select the “lucky ones” - a certain number of individuals who, perhaps, poorly minimize the function, but will bring diversity to subsequent generations.

These three mechanisms are often not enough to minimize function. So the population degenerates - sooner or later the local minimum clogs the entire population with its value. When this happens, carry out a process called shake(in the nature of analogy, global cataclysms), when almost the entire population is destroyed, and new (random) individuals are added.

Here is a description of the classical genetic algorithm, it is easy to implement and there is room for imagination and research.

Formulation of the problem

So, when I already decided that I want to try to implement this legendary (albeit unlucky) algorithm, it came to the conclusion that what would I minimize? Usually they take some terrible multidimensional function with sines, cosines, etc. But this is not very interesting and not at all clear. One simple idea came - an image where the value is responsible for brightness is excellent for displaying a multidimensional vector. Thus, we can introduce a simple function - the distance to our target image, measured in the difference in pixel brightness. For simplicity and speed, I took images with a brightness of 0, or 255.

From the point of view of mathematics, such optimization is a mere trifle. The graph of such a function is a huge multidimensional “pit” (like the three-dimensional parabaloid in the figure), which you will inevitably slide into if you follow the gradient. The only local minimum is global.

.

. The only problem is that the number of paths along which you can go down is already greatly reduced, and in total we have as many directions as measurements (i.e. the number of pixels). Obviously, solving this problem using the genetic algorithm is not worth it, but we can look at the interesting processes taking place in our population.

Implementation

All the mechanisms described in the first paragraph were implemented. Reproduction was carried out by simply crossing random pixels from the “mother” and “father”. Mutations were made by changing the value of a random pixel in a random individual to the opposite. A shake was made if the minimum does not change over five steps. Then an “extreme mutation” is made - replacement occurs more intensively than usual.

I took nonograms ("Japanese scanwords") as the source pictures, but, in truth, you can take just black squares - there is absolutely no difference. Below are the results for multiple images. Here, for all but the “house”, the number of mutations was 100 on average per individual, there were 100 individuals in the population, and when propagated, the population increased 4 times. Lucky were 30% in each era. For the house, smaller values were chosen (30 individuals in the population, mutations of 50 per individual).

I experimentally found that the use of “lucky ones” in breeding reduces the population’s desire to minimize, but it helps to get out of stagnation - without “lucky ones” stagnation will be constant. What can be seen from the graphs: the left graph shows the development of the “pharaoh” population with the lucky ones, the right one - without the lucky ones.

Thus, we see that this algorithm allows us to solve the problem, albeit in a very long time. Too many shakes, in the case of large images, can solve a larger number of individuals in a population. I leave the optimal selection of parameters for different dimensions beyond the scope of this post.

Global optimization

As was said, local optimization is a rather trivial task, even for multidimensional cases. It is much more interesting to see how the algorithm will cope with global optimization. But for this you need to first build a function with many local minima. And this is not so difficult in our case. It is enough to take a minimum from distances to several images (house, dinosaur, fish, boat). Then the initial algorithm will “slip” into some random hole. And you can just run it several times.

But there is a more interesting solution to this problem: you can understand that we have slipped into a local minimum, make a strong shake (or even initiate individuals anew), and add fines in the future when approaching a known minimum. As you can see, the pictures alternate. I note that we do not have the right to touch the original function. But we can remember local minimums and add penalties on our own.

This picture shows the result when, when a local minimum is reached (strong stagnation), the population simply dies.

Here the population dies out and a small fine is added (in the amount of the usual distance to a known minimum). This greatly reduces the likelihood of repetitions.

It is more interesting when the population does not die out, but simply begins to adjust to new conditions (next figure). This is achieved by a fine of 0.000001 * sum ^ 4. In this case, the new images become a bit noisy:

This noise is eliminated by limiting the fine to max (0.000001 * sum ^ 4, 20). But we see that the fourth local minimum (dinosaur) cannot be reached - most likely because it is too close to some other one.

Biological interpretation

What conclusions can we draw from, I'm not afraid of this word, simulation? First of all, we see sexual reproduction - the most important engine of development and adaptability. But only it is not enough. The role of random, small changes is extremely important. They provide the emergence of new species of animals in the process of evolution, and we provide a diversity of the population.

The most important role in the evolution of the Earth was played by natural disasters and mass extinctions (extinctions of dinosaurs, insects, etc. - there were about ten large ones - see the diagram below). This was confirmed by our modeling. And the selection of the “lucky ones” showed that the weakest organisms today are capable of becoming the basis for future generations in the future.

As they say, everything is as in life. This do-it-yourself evolution method clearly shows interesting mechanisms and their role in development. Of course, there are many more worthwhile evolutionary models (based, of course, on difura) that take into account more factors that are closer to life. Of course, there are more effective optimization methods.

PS

I wrote the program in Matlab (or rather, even on Octave), because here everything is a golem matrix, and there are tools for working with pictures. Source code is attached.

Source

functionres = genetic(file)%generatingglobal A B;

im2line(file);

dim = length(A(1,:)); count = 100; reprod = 4; mut = 100; select = 0.7; stagn = 0.8;

pop = round(rand(count,dim));

res = []; B = []; localmin = []; localcount = [];

for k = 1:300%reproduction forj = 1:count * reprod

pop = [pop; crossingover(pop(floor(rand() * count) + 1,:),pop(floor(rand() * count) + 1,:))];

end%mutation

idx = 10 * (length(res) > 5 && std(res(1:5)) == 0) + 1;

forj = 1:count * mut

a = floor(rand() * count) + 1;

b = floor(rand() * dim) + 1;

pop(a,b) = ~pop(a,b);

end%selection

val = func(pop);

val(1:count) = val(1:count) * 10;

npop = zeros(count,dim);

[s,i] = sort(val);

res = [s(1) res];

opt = pop(i(1),:);

fn = sprintf('result/%05d-%d.png',k,s(1));

line2im(opt*255,fn);

if (s(1) == 0 || localcount > 10)

localmin = []; localcount = [];

B = [B; opt];

% pop = round(rand(count,dim));continue;

% break;endforj = 1:floor(count * select)

npop(j,:) = pop(i(j),:);

end%adding luckersforj = (floor(count*select)+1) : count

npop(j,:) = pop(floor(rand() * count) + 1,:);

end%fixing stagnationif (length(res) > 5 && std(res(1:5)) == 0)

if (localmin == res(1))

localcount = localcount+1;

else

localcount = 1;

end

localmin = res(1);

forj = 1:count*stagn

a = floor(rand() * count) + 1;

npop(a,:) = crossingover(npop(a,:),rand(1,dim));

endend

pop = npop;

end

res = res(length(res):-1:1);

endfunctionres = crossingover(a, b)

x = round(rand(size(a)));

res = a .* x + b .* (~x);

endfunctionres = func(v)global A B;

res = inf;

fori = 1:size(A,1)

res = min(res,sum(v ~= A(i,:),2));

endfori = 1:size(B,1)

res = res + max(0.000001 * sum(v == B(i,:),2) .^ 4,20);

endendfunction[] = im2line(files)global A sz;

A = [];

files = cellstr(files);

fori = 1:size(files,1)

imorig = imread(char(files(i,:)));

sz = size(imorig);

A = [A; reshape(imorig,[1size(imorig,1)*size(imorig,2)])];

end

A = A / 255;

endfunction[] = line2im(im,file)global sz;

imwrite(reshape(im*255,sz),file);

end