Engineering infrastructure of a Tier III data center sitting on four backbone lines

We have already described how our Tier III data center was designed and budgeted. Now it's time to show what came of it.

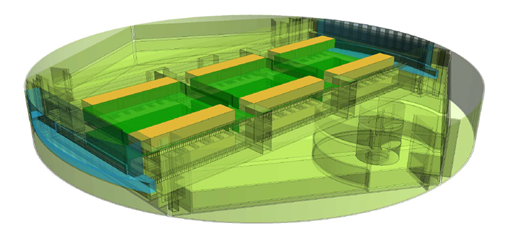

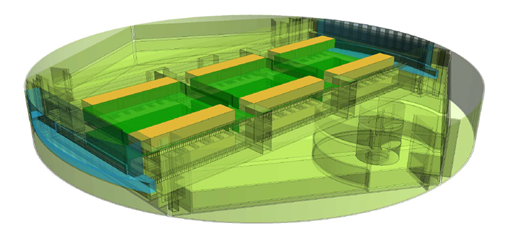

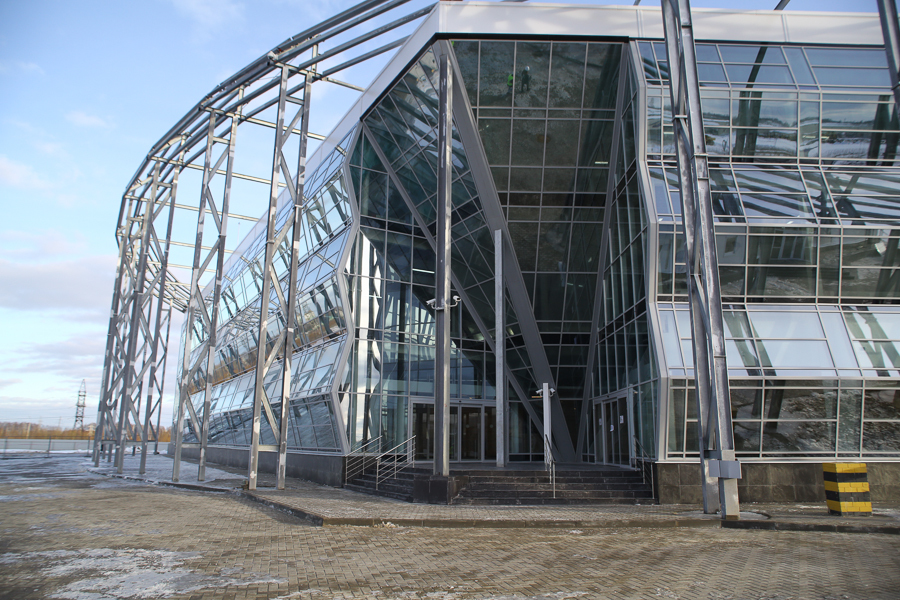

At first the site was an empty field. Then a hefty 100-meter pit was dug, after which the data center was a concrete pad. Later still, a hexagonal steel-frame building went up. It houses six data center modules (highlighted in green on the diagram), a “command center” that monitors the backbone network across the whole country, and an office. You can read more about the construction in the post "Data Center of our dreams in Yaroslavl: photos of construction and launch".

Warning: heavy traffic and hi-tech porn from the Yaroslavl data center. This post has 91 photos of the newly launched first module. Finishing work is still under way in the main building, but there will be no more major construction.

Basic data center parameters

Territory

• 7 ha;

• 30,000 sq. m of building floor area.

Modular approach

• 6 independent data center modules (the first one is now installed).

Fault tolerance

• Tier III certified by the Uptime Institute.

Uninterruptible power supply

• 2 independent 10 MW feeds from the city grid;

• Diesel-dynamic uninterruptible power supply: 2500 kVA;

• 2N redundancy;

• 30,000 liters of fuel storage, enough for 12 hours of operation of all 6 modules at full load.

Cooling

• N+2 redundancy;

• Water cooling with chillers;

• Cooling with outside air (Natural Free Cooling);

• Adiabatic cooling system;

• Uninterruptible power supply for the cooling equipment;

• Average annual PUE no higher than 1.3.

Firefighting

• Novec 1230 gas extinguishing, safe for people at the design concentration;

• Very early smoke detection system (VESDA).

Security

• Security 24x7x365;

• Access control and management system, video surveillance.

Environmentally friendly

• Uninterruptible power system without chemical batteries;

• Outside-air cooling for up to 90% of the year;

• Eco-friendly decoration materials for office interiors.
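Two of the figures above can be sanity-checked with a few lines of arithmetic. This is a rough sketch. It assumes that the full load of all six modules is roughly the 10 MW the city inputs supply. It also assumes a specific fuel consumption of about 0.25 L/kWh, which is a typical ballpark for large diesel sets, not a figure from our equipment. Under those assumptions, the 12-hour fuel autonomy is consistent. The PUE function simply restates the standard definition.

```python
# Back-of-the-envelope checks for two figures in the parameter list.
# ASSUMPTION: 0.25 L/kWh is a typical ballpark specific fuel consumption
# for a large diesel generator set, not a figure from our equipment.


def fuel_autonomy_hours(storage_l: float, load_kw: float,
                        sfc_l_per_kwh: float = 0.25) -> float:
    """Hours of operation a fuel store supports at a constant electrical load."""
    return storage_l / (load_kw * sfc_l_per_kwh)


def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy divided by IT energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return total_facility_kwh / it_equipment_kwh


if __name__ == "__main__":
    # 30,000 L at 10 MW (ASSUMPTION: full load equals the city input capacity)
    print(f"{fuel_autonomy_hours(30_000, 10_000):.1f} h")  # 12.0 h
    # PUE 1.3 means at most 0.3 kWh of overhead per 1 kWh delivered to IT
    print(pue(1_300, 1_000))  # 1.3
```

Both functions ignore part-load effects. Real generator fuel consumption per kWh rises at low load, and PUE is defined over a period (here, a year), not at a single instant.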

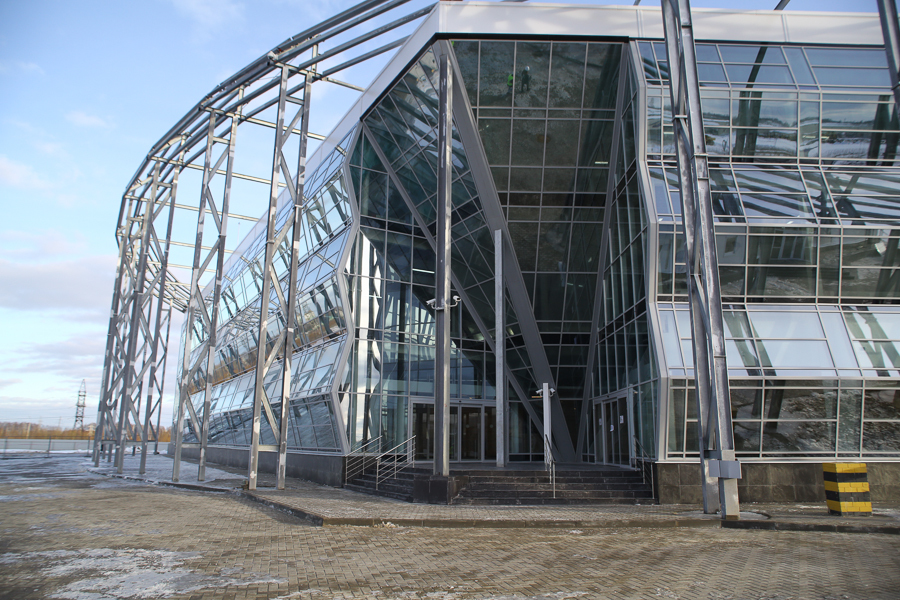

In reality, the building is almost as transparent as in the diagram, because there is a lot of polarized glass:

The main building, in which the data center is located

For comparison, here is a small seven-rack container data center that we set up in three months: we laid a couple of concrete slabs and went ahead. This mobile data center and the neighboring blue container with a diesel power station (DES) ran here during commissioning. They kept a number of basic services running until we brought up the first machine room.

Container data center

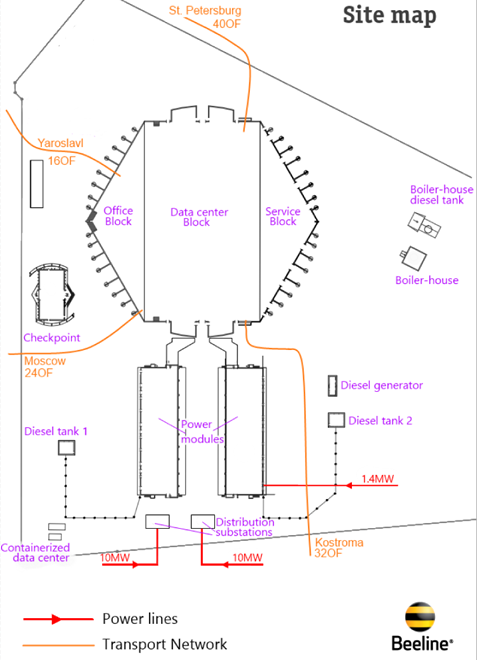

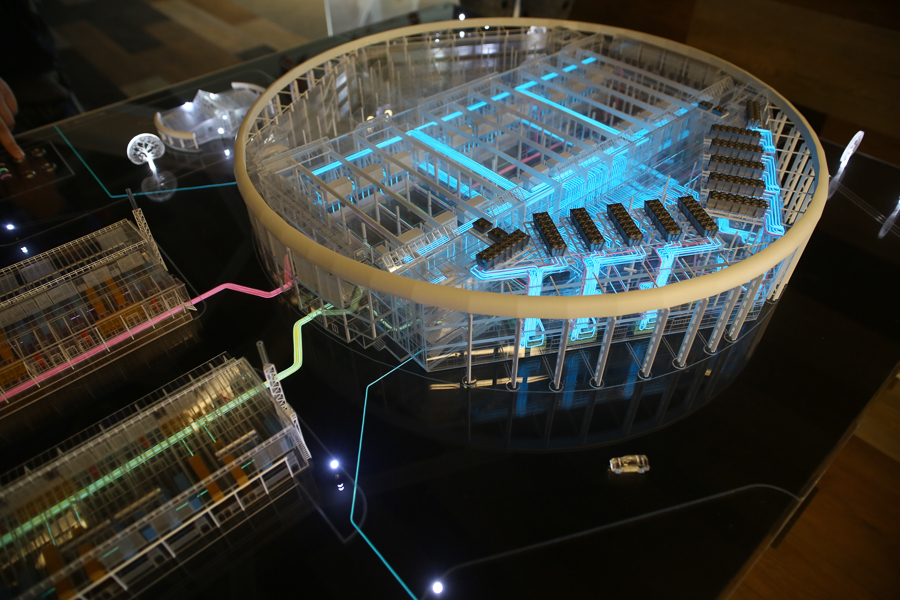

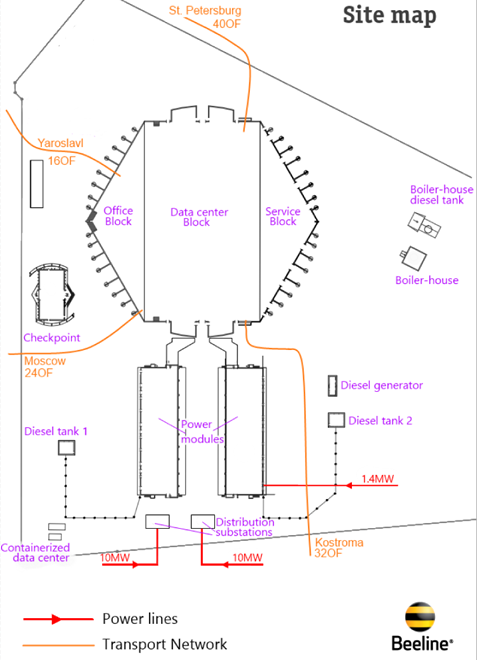

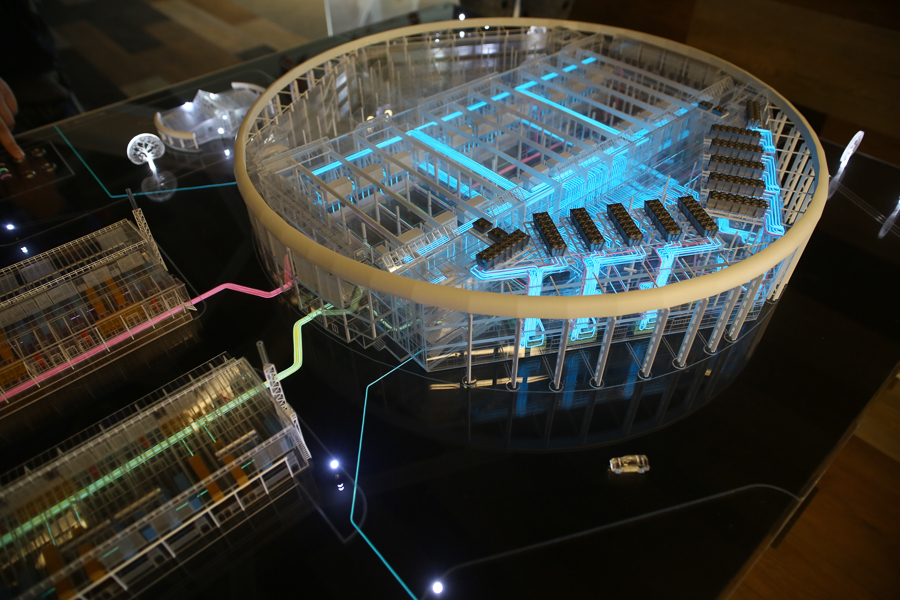

Here is the scheme of the “space cruiser”:

The scheme of the object

The hexagon in the middle is the main building, which houses the halls, the office and the service areas of the Enterprise. The container data center is at the bottom; it is small and will be removed soon. The two blocks of the cruiser's “engines” are independent power units (Power modules). Let's start with them.

Here is the power plant building from the outside:

The energy module, which houses the DDIBP (diesel-dynamic UPS)

Diesel fuel is stored nearby in a blue container. In winter we fill it with winter-grade diesel, and in summer with summer-grade diesel. There is no need to pump the old fuel out because we burn it off during the monthly routine tests.

30,000-liter fuel tank

But let's go inside the energy module. We are greeted by this marvel of engineering.

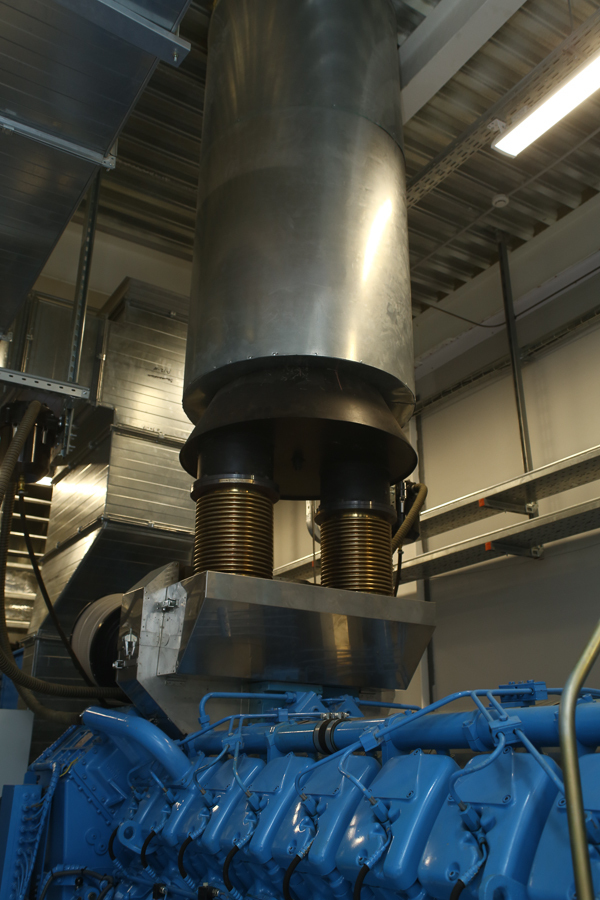

Motor-generator system of a diesel-dynamic UPS

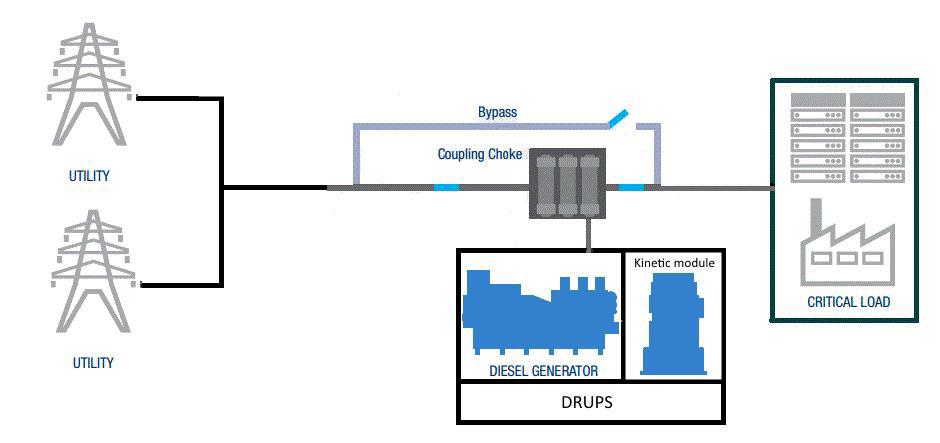

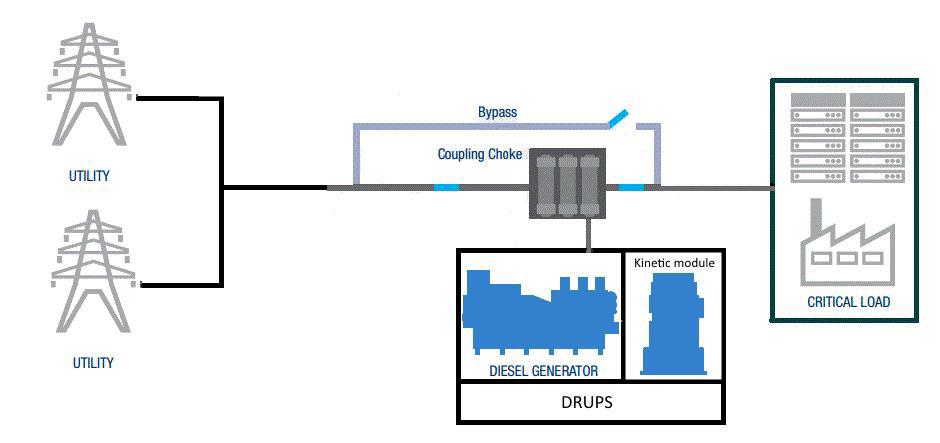

This is a whole complex of equipment. We explained how it all works back at the design stage, and the details are in that post (along with the manufacturer's animation). In short, we are fed by two independent city substations, backed up by a system of diesel generators. A kinetic energy storage unit sits in the middle of the chain, between the sources and the load.

The power supply scheme

The data center complex will consume up to 10 MW. For obvious reasons, it is much cheaper to take power from the city grid. But if something goes wrong, you need one of three things. The first option is a huge bank of UPS batteries to cover the time the diesels take to start. The second is a smaller battery bank plus a pool of ice water for cooling. The third is your own constantly running power plant (on-site generation). One of the best solutions is a DDIBP: a kinetic energy store plus a quick-start diesel engine on standby. In practice everything is much more complicated, but that is the general principle.

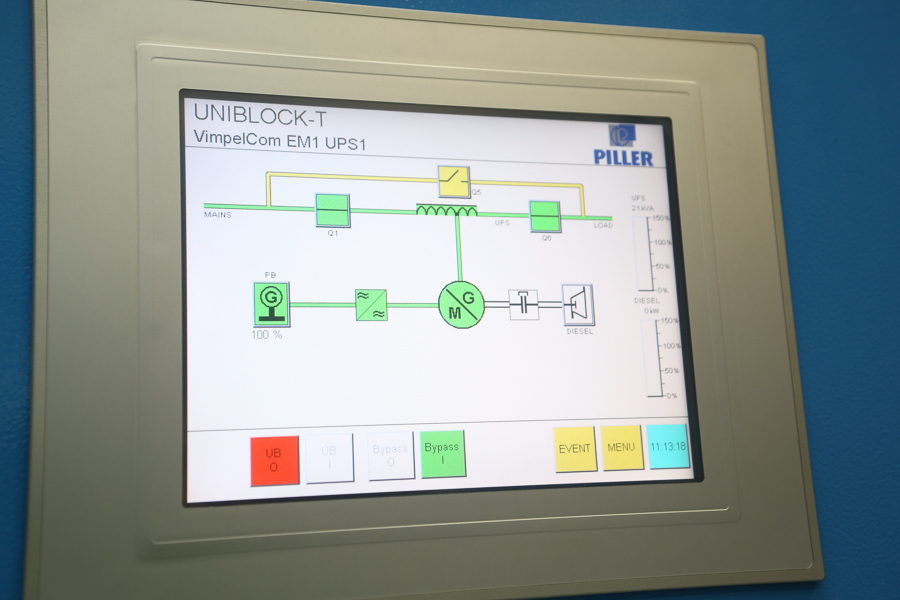

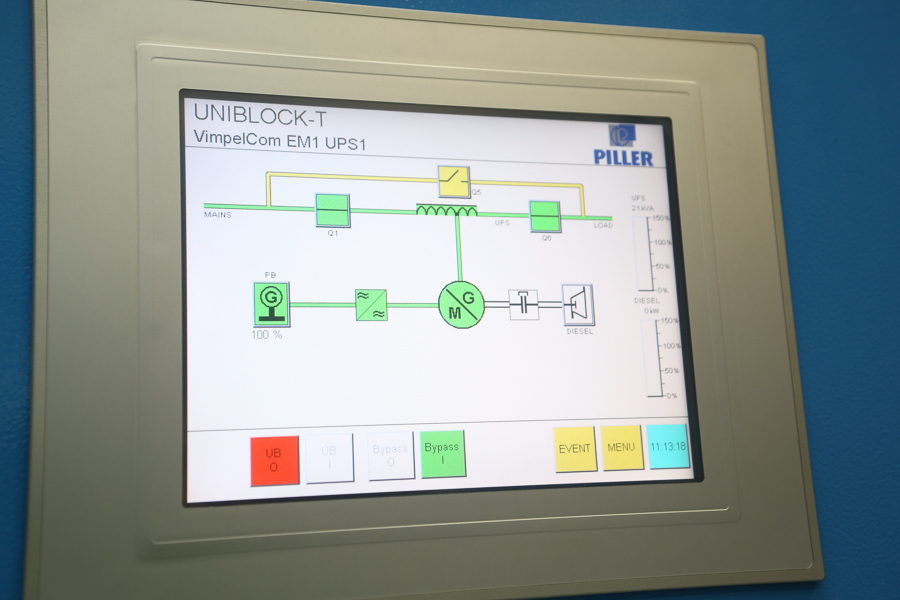

DDIBP control panel

It should be noted that the power we get from the city is not of the highest quality. When it degrades or disappears, the load is carried by the energy of the kinetic accumulator, a seven-ton flywheel spun up to a high speed. The flywheel's residual rotational energy is enough to start the diesel engine with margin to spare, even if the first start attempt fails.

The same system also copes easily with most power disturbances. Roughly speaking, sitting in the middle of the circuit, the DDIBP smooths out about 80% of surges and dips on average without starting the motor-generator. Another advantage of the DDIBP is that there is no switchover delay to the backup power system, because the kinetic accumulator is permanently connected to the circuit.
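The decision the DDIBP effectively makes for each grid event can be sketched as a small classifier. This is a simplified illustration, not the manufacturer's control logic, and both thresholds below are hypothetical. Small deviations are smoothed while the load stays on the grid. Short, deep dips or outages are bridged by the flywheel alone, and only longer outages start the diesel. The helper counts what share of events never needed the motor-generator, which is the kind of statistic behind the "about 80%" figure above.

```python
from dataclasses import dataclass
from enum import Enum


class Source(Enum):
    GRID = "grid (conditioned by the DDIBP)"
    KINETIC = "flywheel ride-through"
    DIESEL = "diesel engine started"


@dataclass
class PowerEvent:
    voltage_pu: float  # supply voltage relative to nominal (1.0 = nominal)
    duration_s: float  # how long the deviation lasts, in seconds


# HYPOTHETICAL thresholds, chosen for illustration only.
VOLTAGE_TOLERANCE_PU = 0.10  # deviations within +/-10% are simply smoothed out
KINETIC_ONLY_LIMIT_S = 2.0   # shorter events are bridged by the flywheel alone


def handling(event: PowerEvent) -> Source:
    """Decide which energy source ends up carrying the load for one event."""
    if abs(event.voltage_pu - 1.0) <= VOLTAGE_TOLERANCE_PU:
        return Source.GRID
    if event.duration_s <= KINETIC_ONLY_LIMIT_S:
        return Source.KINETIC
    return Source.DIESEL


def share_without_diesel(events: list[PowerEvent]) -> float:
    """Fraction of events absorbed without starting the motor-generator."""
    if not events:
        return 1.0
    absorbed = sum(handling(e) is not Source.DIESEL for e in events)
    return absorbed / len(events)
```

For example, a list of five events in which only a ten-minute blackout exceeds the flywheel window gives `share_without_diesel(...) == 0.8`.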

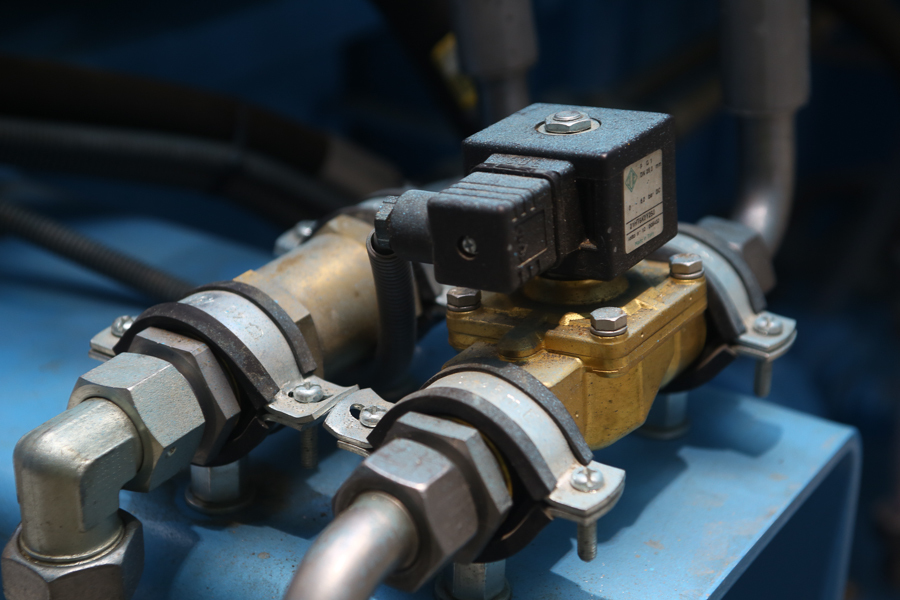

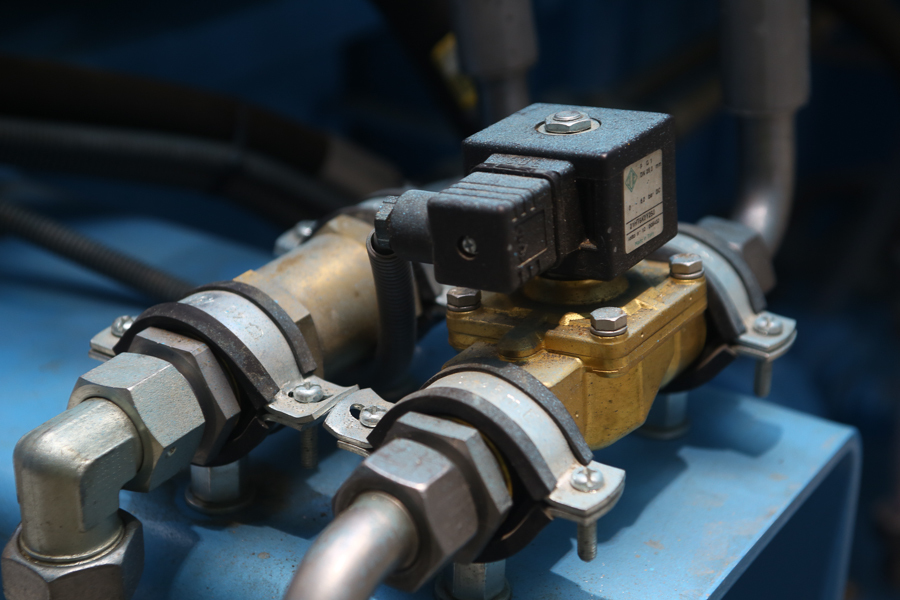

The fuel purification system, in addition to conventional filtration, also includes an impurity separation system that purifies the fuel immediately before being fed to the fuel pump.

Filter separator for the fuel supply system

Cylinder covers

Solenoid valve for the fuel supply system

One of our important tasks now is to guarantee energy efficiency with low consumption.

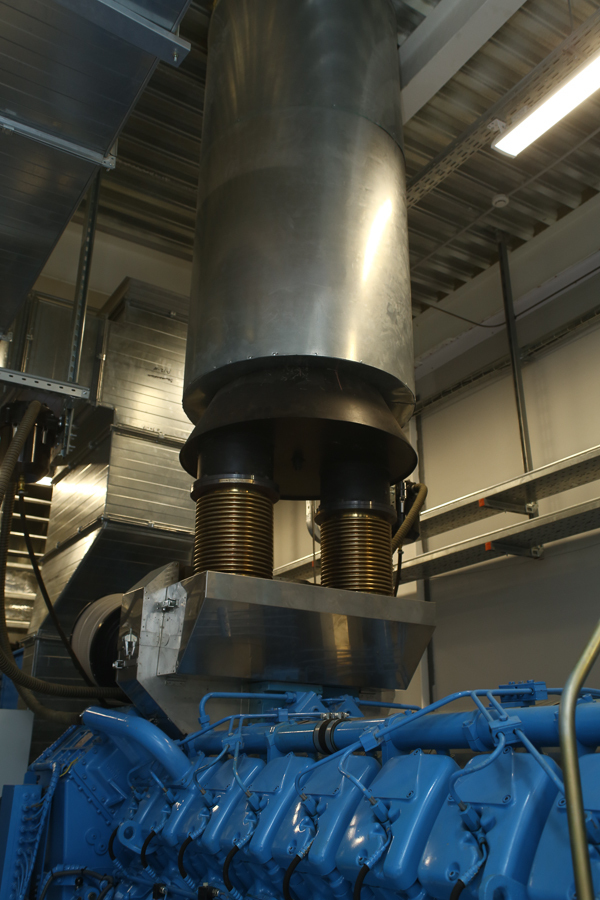

The pipe leads to the second floor, where the exhaust silencing and cleaning systems are located, including 4.5-meter, two-ton silencers.

Last time we promised to show how big the silencers are. A promise is a promise:

At the DDIBP manufacturer's plant in Germany

This is a fuel tank

Diesel fuel for the generator sets is stored in bulk in the blue building outside. This tank holds the working reserve for an hour or two of operation, depending on the load. The tanks are connected, so fuel can be delivered indefinitely: it is first poured into the main storage, then into this tank, and from here into the diesel generator itself. Naturally, the second energy module is identical and has its own independent fuel storage.
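The "main storage, then this tank, then the engine" chain is usually kept topped up by a transfer pump with two-point (hysteresis) control. The sketch below shows that control idea. The class name and both set points are hypothetical, because the post does not describe the actual controller. The second function turns a tank volume and a consumption rate into hours of reserve, which is what "an hour or two depending on the load" refers to.

```python
from dataclasses import dataclass


@dataclass
class DayTankPump:
    """Two-point (hysteresis) control of a transfer pump that refills a
    generator day tank from the main fuel store.

    HYPOTHETICAL set points: the real ones are not given in the post.
    """
    start_level: float = 0.40  # start pumping when the tank falls to 40 %
    stop_level: float = 0.90   # stop pumping once it is back at 90 %
    running: bool = False

    def update(self, level: float) -> bool:
        """Feed in the current fill level (0.0-1.0); returns the pump state."""
        if level <= self.start_level:
            self.running = True
        elif level >= self.stop_level:
            self.running = False
        # Between the two set points the pump keeps its previous state,
        # which avoids rapid on/off cycling around a single threshold.
        return self.running


def reserve_hours(tank_l: float, consumption_l_per_h: float) -> float:
    """How long the day tank alone can feed the engine at a given burn rate."""
    return tank_l / consumption_l_per_h
```

The gap between the two set points is the design choice here: a single threshold would make the pump chatter on and off as the level hovers around it.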

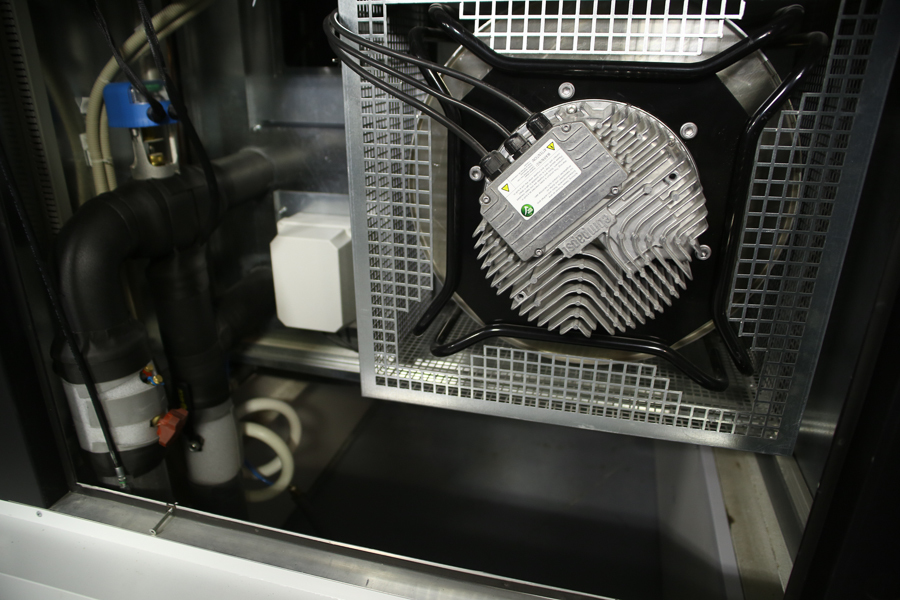

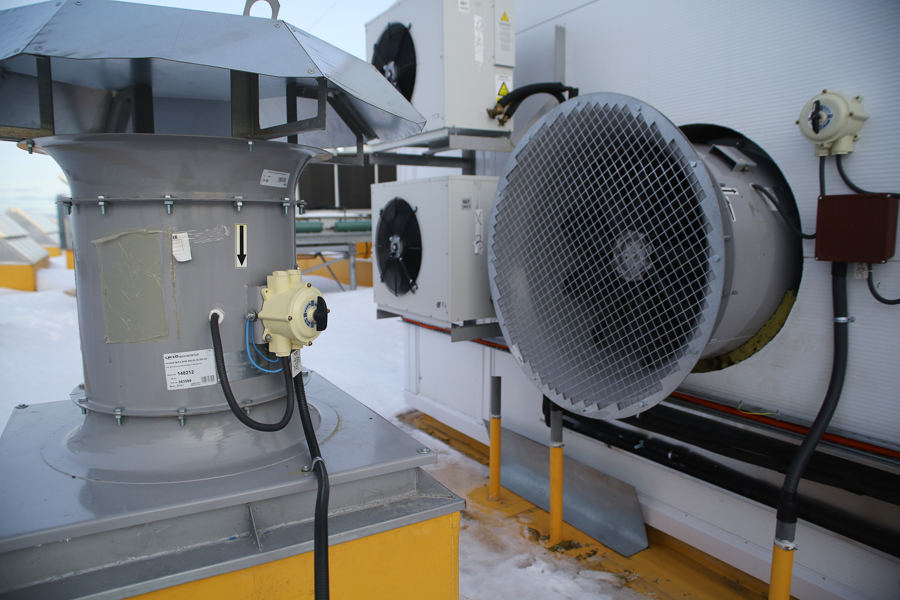

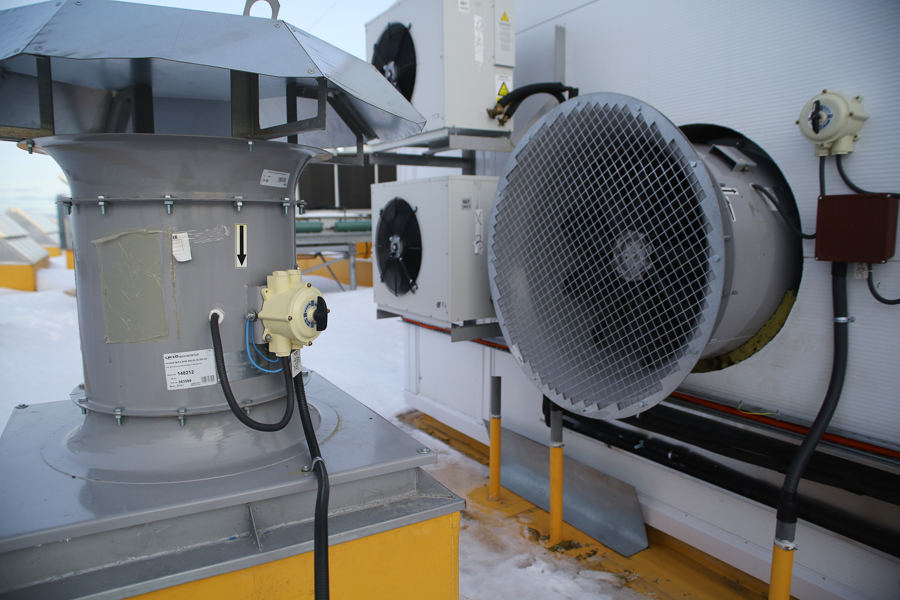

Ventilation exhaust fan

Pipelines for the diesel engine cooling system. Coolant circulates in the pipes and passes through radiators installed on the second floor.

To reduce losses, the flywheel rotates in helium gas. In this environment, very little energy is needed to keep it spinning, even at a wild speed. You only need to invest energy to spin it up, and after that it consumes very little. Efficiency is above 99%. When needed, the flywheel returns the stored energy to the system.
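How much energy a spinning flywheel holds follows from $E = \tfrac{1}{2} I \omega^2$. Only the part released between the maximum and minimum allowed speeds is usable. The sketch below takes the seven-ton mass from the text but treats the rotor as a uniform solid cylinder. The radius and speeds in the example are hypothetical, not the real unit's specifications.

```python
import math

FLYWHEEL_MASS_KG = 7_000  # "a seven-ton flywheel", from the text


def solid_cylinder_inertia(mass_kg: float, radius_m: float) -> float:
    """Moment of inertia of a uniform solid cylinder about its axis: I = m r^2 / 2."""
    return 0.5 * mass_kg * radius_m ** 2


def rpm_to_rad_s(rpm: float) -> float:
    """Convert revolutions per minute to angular speed in rad/s."""
    return rpm * 2 * math.pi / 60


def usable_energy_j(inertia: float, omega_max: float, omega_min: float) -> float:
    """Energy released while slowing from omega_max to omega_min: I(w1^2 - w2^2)/2."""
    return 0.5 * inertia * (omega_max ** 2 - omega_min ** 2)


def ride_through_s(energy_j: float, load_w: float) -> float:
    """Seconds the released energy can carry a constant load (ignoring losses)."""
    return energy_j / load_w


if __name__ == "__main__":
    # HYPOTHETICAL geometry and speeds, for illustration only.
    inertia = solid_cylinder_inertia(FLYWHEEL_MASS_KG, radius_m=0.6)
    e = usable_energy_j(inertia, rpm_to_rad_s(3000), rpm_to_rad_s(2000))
    print(f"{e / 1e6:.1f} MJ, {ride_through_s(e, 2_000_000):.1f} s at 2 MW")
```

Because energy grows with the square of speed, spinning faster pays off much more than adding mass. This is also why keeping drag low (the helium atmosphere) matters: standby losses rise steeply with speed.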


Reducer-distributor of helium supply to the kinetic drive

Emergency stop valve for the diesel engine, triggered by a “fire” signal or by the red emergency shutdown button. It is used only as a last resort and works by cutting off the engine's air supply.

Fires are extinguished with Novec 1230 gas, which is not dangerous to humans at the concentration used. Our fire extinguishing system is smart: if a diesel engine catches fire, it gives people a chance to deal with the fire themselves first. This increases the survivability of the data center.

Starting batteries

Power cables from the generator to the load (the data center)

Fire alarm siren. As soon as it sounds, you need to leave the room immediately.

Ventilation control cabinet

Circuit breaker

Here is the fool-proofing. A lock secures the critical panels. To press anything, you first have to remove the plastic cover by turning it 180 degrees, and then press the button quite hard. These safeguards exist so that nothing is done by accident or without full awareness of what is happening.

The smoke exhaust system button is located in front of the room entrance.

For routine testing of the DDIBP, a test load is connected to this unit from the outside:

The load is connected by a cable that is very flexible for its diameter.

And here is the load itself: a load bank on a trailer. The trailer is essentially a huge air heater, which heats the air in order to draw a lot of power from the energy module. Not that winters in Yaroslavl have gotten any warmer, but we did what we could.

Load bank truck

And here are two interesting objects: a lightning trap and a lightning conductor. More precisely, the tall mast on the power module is a passive lightning rod, a metal spike that carries the discharge deep into the ground. In the center, on the roof of the data center, you can see an active installation that serves the same purpose. It achieves a similar effect at a much smaller size.

Passive and active lightning rods

The second power module is symmetrical to the first. The only thing that sets its surroundings apart is this extra object: a separate DES for the office. The office's requirements for uninterrupted power are much lower, so there is no need to spend DDIBP capacity on it.

DES for the office

Now we go down into the metro line to the Kremlin.

Cable collector

Actually, of course, this is a cable collector. But if you switch the lights off just right, you can initiate newcomers into the secrets of the USSR.

Or you can light it like this:

Here are cables as thick as a healthy engineer's arm, or a pretty girl's leg. Some installers at the construction site went on a real hunt for offcuts of this cable, apparently believing it was made of gold. They didn't get much, but they caused us quite a lot of trouble.

By the way, the cable is mostly domestic, made by the Kolchuginsky plant in the Vladimir region. We had no problems with it at our facility. Still, cable should always be inspected on acceptance, because it may arrive with a break, damaged insulation, or other defects.

"Glamorous" fire foam. During installation it expands and tightly seals every gap it can reach. Here it closes the cable passages so that fire and smoke cannot spread through them.

So, power is sorted. What the data center still needs is cooling and Internet access. And beer, but the brewery is right outside the window. The transport network is in good shape, with two main communication rooms that back each other up. Four backbone fiber inputs were brought into the data center, and we provided for the installation of 100 Gbit/s transponders.

One day we will tell you more about how our communication center is built.

Now let's go look at the cooling.

You can read more about the cooling design here.

Again, here are the main points in brief:

Natural Free Cooling system layout

It works like this. The wind blows into the "face" of our data center (the mixing chamber). Cold air enters the main duct, takes heat from the internal circuit, then travels on through the data center and is exhausted outside. Through the heat exchanger, the hot air of the machine room gives off its heat to the outer space of the hangar that houses the data center modules. Hot updrafts rise through openings in the roof or are forced out through the mixing chamber. Simple so far, right?
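Because hall air and outside air meet only through a heat exchanger, how cold the hall air gets depends on the exchanger's effectiveness ε. For balanced airflows the supply temperature is $T_{supply} = T_{return} - \varepsilon (T_{return} - T_{outside})$. The sketch below uses that standard relation. The value ε = 0.7 is a placeholder, not our exchanger's rating. The second function inverts the relation to find the warmest outside air that still reaches a target supply temperature.

```python
def supply_air_temp_c(return_air_c: float, outside_air_c: float,
                      effectiveness: float = 0.7) -> float:
    """Temperature of hall air after it passes an air-to-air heat exchanger.

    Uses the effectiveness definition for balanced flows:
        T_supply = T_return - eps * (T_return - T_outside)
    ASSUMPTION: eps = 0.7 is a placeholder, not our exchanger's rating.
    """
    if not 0.0 <= effectiveness <= 1.0:
        raise ValueError("effectiveness must be between 0 and 1")
    return return_air_c - effectiveness * (return_air_c - outside_air_c)


def max_outside_temp_c(return_air_c: float, target_supply_c: float,
                       effectiveness: float = 0.7) -> float:
    """Warmest outside air that still cools return air to the target supply."""
    if not 0.0 < effectiveness <= 1.0:
        raise ValueError("effectiveness must be in (0, 1]")
    return return_air_c - (return_air_c - target_supply_c) / effectiveness
```

With 35 °C return air and 10 °C outside air, `supply_air_temp_c(35, 10)` gives about 17.5 °C. The indirect layout costs some temperature margin compared with blowing outside air straight into the hall, but it keeps street dust and humidity out of the machine rooms.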

The layout of the main duct

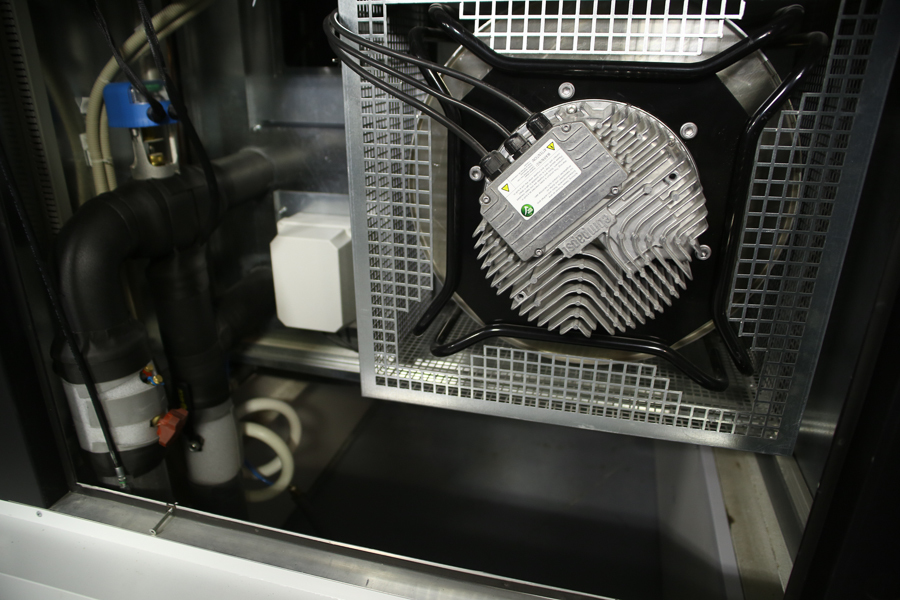

The first to greet us is the "spider". This is a chiller, and we call it a spider because of its characteristic shape.

Chiller

Why does a data center with free cooling need a chiller? Because if the outside temperature rises above 24 °C, the halls cannot be cooled with the desired efficiency. Let me remind you that one reason we chose Yaroslavl is that its summers are cooler than Moscow's (especially the nights), so we can save on cooling.

Nevertheless, on average the temperature exceeds that limit for up to about a month a year. For that period we use a second cooling system: chillers that chill water circulating through a huge pipe network. Simplifying shamelessly, these are big refrigerators that cool water. They consume much more power, but there is no way around it. The chillers are N+2 redundant, which means three units are installed for the first machine room right away.
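N+2 means installing the N units the load requires plus two spares, so the system survives two simultaneous chiller failures. For the first machine room N = 1, which gives the three units mentioned above. The unit capacity and load in the example are hypothetical.

```python
def installed_units(required: int, spares: int = 2) -> int:
    """N+k redundancy: the N units needed for the load plus k spares."""
    return required + spares


def survives(load_kw: float, unit_kw: float, installed: int, failed: int) -> bool:
    """Can the units still running carry the full cooling load?"""
    return (installed - failed) * unit_kw >= load_kw


# First machine room: N = 1, so N+2 gives three chillers, as in the text.
n = installed_units(1)
# HYPOTHETICAL unit capacity and load, for illustration only.
print(n, survives(load_kw=900, unit_kw=1_000, installed=n, failed=2))  # 3 True
```

As more modules come online, N grows with the heat load while the two spares stay fixed. The relative cost of redundancy therefore drops as the data center fills up.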

Do you recognize these heroes?

Expansion tanks

A total of 180 tons of water in the circuit.

Main collector

Drain cock

Another spider, but on the other side (control panel on the side)

Balancing valve

If the water ever has to be drained in an emergency, it will flow into special pits.

The pumps circulate water through the circuit. The second pump is a standby.

Freon pipelines lead to the roof. The bends are oil-lift loops that let the oil return to the compressor.

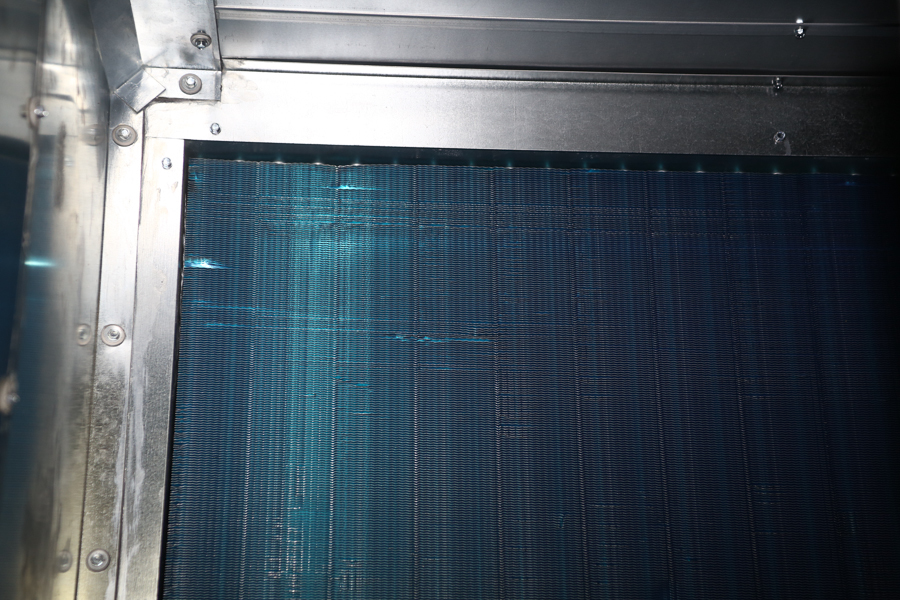

We'll be done with the hardware soon and move on to the machine hall, which is right behind this wall. But first, one important point. Outside air alone can cool the halls with the required margin for only 8 months a year, and we run the chillers for two to four weeks. So where did the remaining three and a half months or so go? Take a look at this device.
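The yearly split between pure free cooling, adiabatic assist and chillers can be sketched as a classifier over hourly outdoor temperatures. This is a simplification based on dry-bulb temperature only; real switching also depends on humidity. The 24 °C chiller threshold comes from the text. The 20 °C free-cooling limit is inferred from "3-4 degrees warmer, up to 24" and should be treated as an assumption.

```python
from collections import Counter

# HYPOTHETICAL thresholds. 24 °C is the chiller switch-over point from the text;
# the 20 °C free-cooling limit is inferred from "3-4 degrees warmer, up to 24".
FREE_COOLING_MAX_C = 20.0
ADIABATIC_MAX_C = 24.0
MODES = ("free", "adiabatic", "chiller")


def cooling_mode(outside_c: float) -> str:
    """Pick a cooling mode from the outdoor dry-bulb temperature alone."""
    if outside_c <= FREE_COOLING_MAX_C:
        return "free"
    if outside_c <= ADIABATIC_MAX_C:
        return "adiabatic"
    return "chiller"


def mode_shares(hourly_outside_c: list[float]) -> dict[str, float]:
    """Fraction of hours spent in each mode over a temperature series."""
    if not hourly_outside_c:
        raise ValueError("need at least one temperature reading")
    counts = Counter(cooling_mode(t) for t in hourly_outside_c)
    total = len(hourly_outside_c)
    return {mode: counts[mode] / total for mode in MODES}
```

Fed with a year of hourly weather data (8,760 readings), `mode_shares` would give the kind of split described above: roughly 8 months of free cooling, a few weeks of chillers, and the rest covered by the adiabatic stage.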

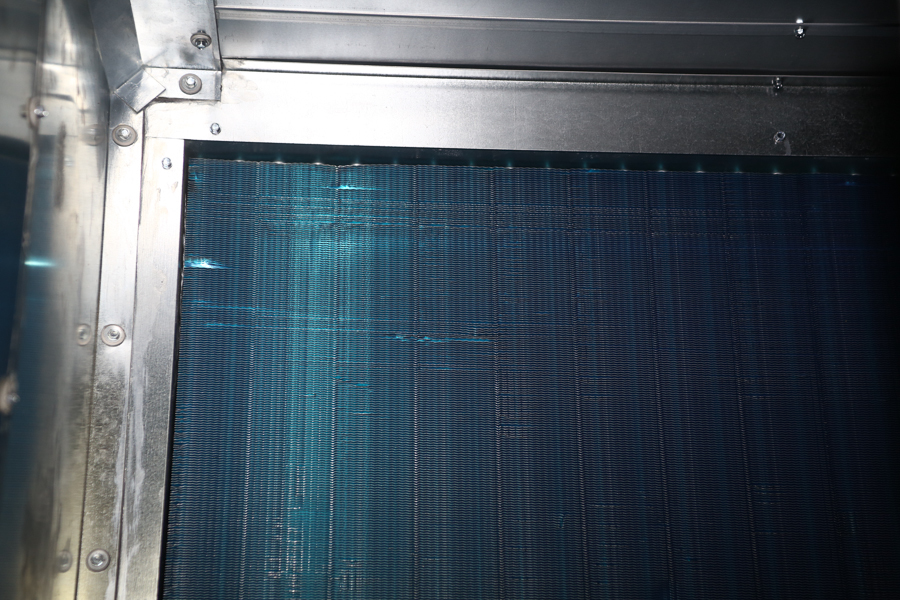

MNFC - heat exchanger

This is the heat exchanger chamber: the hall's cold aisle begins here, and the hot aisle ends here. In other words, this device turns hot air into cold air. It is the key element of the cooling system. The heat exchanger works both in outside-air cooling mode and in water cooling mode.

In the main mode, a large volume of outside air is passed through the heat exchanger.

If the outside air is too hot, we close the air intake and cool the exchanger chamber with chilled water from the chillers, spending electricity on cooling.

The heat exchanger is behind this grate.

But when the air is only 3-4 degrees too warm (up to 24 °C), as it is in those "missing" months, we use a trick from school physics. We simply saturate the air with moisture by driving it through a wall of water mist, and the adiabatic process gives us the temperature drop we need. This is much cheaper than running the chillers and is widely used together with free cooling.
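The limit of this trick is the wet-bulb temperature, the lowest temperature evaporation can bring the air to. The sketch below estimates it with Stull's (2011) empirical formula. That formula assumes sea-level pressure and is valid roughly for RH 5-99 % and -20..50 °C. It then applies a saturation efficiency, the share of the dry-bulb to wet-bulb gap the mist wall actually closes. The default efficiency of 0.8 is a placeholder, not a measured value for our installation.

```python
import math


def wet_bulb_stull_c(temp_c: float, rh_percent: float) -> float:
    """Wet-bulb temperature from Stull's (2011) empirical fit.

    Valid roughly for RH 5-99 % and -20..50 °C at sea-level pressure.
    """
    t, rh = temp_c, rh_percent
    return (t * math.atan(0.151977 * math.sqrt(rh + 8.313659))
            + math.atan(t + rh)
            - math.atan(rh - 1.676331)
            + 0.00391838 * rh ** 1.5 * math.atan(0.023101 * rh)
            - 4.686035)


def evaporative_outlet_c(temp_c: float, rh_percent: float,
                         saturation_efficiency: float = 0.8) -> float:
    """Air temperature after a direct evaporative (adiabatic) stage.

    The air can at best approach its wet-bulb temperature; the efficiency
    says how much of that gap is closed. ASSUMPTION: 0.8 is a placeholder.
    """
    t_wb = wet_bulb_stull_c(temp_c, rh_percent)
    return temp_c - saturation_efficiency * (temp_c - t_wb)
```

At 20 °C and 50 % RH the formula gives a wet bulb of about 13.7 °C, so there is plenty of room to knock off the 3-4 degrees. On humid days the gap shrinks, which is when the chillers have to take over.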

An exhaust duct runs straight through the building. It is essentially a very wide hot aisle.

Roof skylights. Their flaps open when needed to release hot air.

That's it, let's look at the machine room. Rooms two through six currently look like this, because their modules have not been installed yet.

But the first module is up and running and already filling up. We arrived just as some of the servers had started working, while the hall was still half empty.

Data Center Module

Our racks are standard and the hardware is mostly homogeneous: this is our own data center, and we know exactly what will go in here. That let us install all the I/O at once.

Power sockets for connecting racks

Another plus of standard racks is that the dimensions of the cold and hot aisles are known in advance. Here, cold air blows up from under the raised floor. These panels are currently covered with plastic film because the aisle is not in use yet, and we don't want to waste cold air for nothing. By the way, these cold aisles are perhaps the last reminder of why admins always wear sweaters: it is very cold and drafty.

Cold aisle

And this is our teleportation device. A prototype.

Seriously, these are the doors that close off the cold aisle so that cooling works as efficiently as possible. This is what it looks like in the part of the hall where racks are already installed.

Racks

And here are the racks themselves. The following equipment has been installed so far:

- Oracle Hi-End and Mid-Range servers;

- Storage built on HDS, HP, IBM and Brocade equipment;

- The core of the network is built on Avaya equipment;

- HP servers.

Power distribution system for racks: busbars with power take-offs

Our structured cabling system (SCS) is Panduit, in a top-of-rack layout

Top-of-rack

Cross-connect cassettes: the patching rates a four-minus on a five-point scale for now, and will soon be a five

Neat cabling is our little obsession. Even in the Moscow test zone, where everything changes every day and the equipment is a total mess, the cabling is more or less neat. We know from experience that unlabeled, sloppily routed cables can cause service interruptions.

Patching of the aggregation switches

Active Network Equipment

10% of the hall is designed for high-density racks drawing up to 20 kW each. The most powerful racks are placed in the center of the hall, where the way the high-velocity airflows move leaves a cooling margin.
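Why high-density racks need a spot with spare cooling becomes clear from the sensible heat balance of air, $P = \rho \dot V c_p \Delta T$. Every kilowatt has to be carried away by a proportional volume of air. The sketch below uses room-condition values for air density and heat capacity, and a hypothetical 12 K temperature rise from cold aisle to hot aisle. It estimates the airflow a 20 kW rack needs.

```python
# Sensible heat balance for air: P = rho * V_dot * c_p * dT
# ASSUMPTIONS: air density 1.2 kg/m^3 and c_p 1005 J/(kg*K) (room conditions);
# the 12 K cold-to-hot-aisle temperature rise is a hypothetical design value.
AIR_DENSITY = 1.2  # kg/m^3
AIR_CP = 1005.0    # J/(kg*K)


def required_airflow_m3_s(heat_w: float, delta_t_k: float) -> float:
    """Volumetric airflow needed to carry away heat_w with a rise of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_w / (AIR_DENSITY * AIR_CP * delta_t_k)


hd_rack = required_airflow_m3_s(20_000, 12)  # a 20 kW high-density rack
print(f"{hd_rack:.2f} m3/s = {hd_rack * 3600:.0f} m3/h")
```

Under these assumptions, a single 20 kW rack needs on the order of 5,000 m³ of air per hour, several times more than a typical low-density rack. That is why such racks are grouped where the airflow has the most headroom.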

And here are the storage systems. They are not connected yet. Somewhere in here, data about your account balance will be stored.

Tape library

These are fan heaters. We used them to test how well the room removes heat. Yes, really: we simply heated it up and watched what happened.

Branch to the busway.

Novec again. There is enough agent in reserve for two discharges of the fire extinguishing system.
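How much agent "two discharges" means can be estimated with the total-flooding formula that NFPA 2001 gives for halocarbon agents: $W = \frac{V}{S} \cdot \frac{C}{100 - C}$, where $S = k_1 + k_2 T$ is the agent's specific vapor volume. The constants below are the values commonly published for FK-5-1-12 (Novec 1230) and should be checked against the standard before use. The room volume and design concentration in the test are hypothetical, not our hall's figures.

```python
# Total-flooding agent quantity for halocarbon agents (NFPA 2001 form):
#   W = (V / S) * C / (100 - C),   S = k1 + k2 * T
# k1, k2 are the values commonly published for FK-5-1-12 (Novec 1230);
# verify against the standard / manufacturer data before relying on them.
K1 = 0.0664     # m^3/kg
K2 = 0.0002741  # m^3/(kg*°C)


def agent_mass_kg(volume_m3: float, design_conc_pct: float, temp_c: float) -> float:
    """Agent mass for one discharge into a protected volume."""
    specific_volume = K1 + K2 * temp_c
    return volume_m3 / specific_volume * design_conc_pct / (100.0 - design_conc_pct)


def stored_mass_kg(volume_m3: float, design_conc_pct: float, temp_c: float,
                   discharges: int = 2) -> float:
    """Agent to keep on hand for the given number of full discharges."""
    return discharges * agent_mass_kg(volume_m3, design_conc_pct, temp_c)
```

For a hypothetical 1,000 m³ room at 20 °C and a 5.3 % design concentration, one discharge comes to roughly 780 kg, so a two-discharge reserve doubles that.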

This is how the power cables we saw in the basement enter the hall.

Now let's look at the office, built for the admins and everyone else.

The main entrance to the data center building

Walking around the data center, we see temporary modular blocks: the dining room and the "office". This is where the shift worked during construction, before the office premises were ready. The real office is much nicer than such a "container".

Temporary office and dining room

At the entrance there is a scale model of the data center:

The cooling circuit is highlighted:

On the roof you can see the condenser units of the chillers:

You can also highlight the power supply and the backbone inputs:

Before we go inside, here are the hard hats in the locker room. One of the builders turned out to be an artist and painted his helmet, and then it caught on.

Open space

The wall is a blackboard.

And here is a rest room for the shift administrators.

Coffee Point

Cabinet

Bathroom

Back outside! We have walked all around, but we haven't been on the roof yet. The chillers are up there, so let's take a close look:

Here is the roof itself. It is very big and striped. From a satellite, our data center looks like a round logo.

Here we are approaching the outdoor units of the chillers. They are like the outdoor units of home air conditioners, only a bit bigger.

These "windows", or skylights, are where the hot air finally leaves the building naturally.

Skylights

Each skylight has its own weather station, which sends data to the automation system.

And here is the end point of the freon pipelines we saw earlier. There are so many pipes because each unit has two circuits, and each chiller has two units, so the chiller itself has four circuits.

Elements of general ventilation systems of the building.

Here is the lightning protection mast:

This is our data center in Yaroslavl. The first module of the flagship data center, designed for 236 racks, has been launched, and we are gradually moving some of our production systems there. By the way, the Federal Monitoring Center has already moved in with us, and the girls from the integrated service center will join us soon. So don't lose heart.

At first there was a clean field on the site of the data center, then a hefty 100-meter pit was dug. Then the data center looked like a concrete site, and even later, a hexagonal building was built from metal structures, inside which there are six data center modules (highlighted in green on the diagram), a “command center” for monitoring the backbone network throughout the country and an office (more about construction can be read in the publication "Data Center of our dreams in Yaroslavl: photos of construction and launch" ).

Caution traffic and hikporn from the Yaroslavl data center: 91 photographs of the just launched first module, final work is still going on in the main building, but there will be no more capital construction.

Basic data center parameters

Territory

• 7 ha;

• 30 thousand sq. M. Area of buildings.

Modular approach

• 6 independent data center modules (the first one is now installed).

Fault tolerance

• Tier III certified by the Uptime Institute.

Uninterruptible power supply

• 2 independent city inputs 10 MW;

• Diesel-dynamic uninterruptible power supply: 2500 kVA;

• 2N redundancy;

• Fuel storage 30,000 liters, designed for 12 hours of operation of 6 modules at full load.

Cooling

• N + 2;

• Water cooling in chiller units;

• Cooling with outside air (Natural Free Cooling);

• Adiabatic cooling system;

• Uninterrupted power supply of cooling system equipment;

• The average annual PUE is not more than 1.3.

Firefighting

• Novec gas is harmless to humans;

• Fire Early Warning System (VESDA).

Security

• Security 24х7х365;

• Access control and management system, video surveillance.

Environmentally friendly

• Uninterruptible power system without chemical batteries;

• Cooling with outside air up to 90% of the time in a year ;.

• Eco-friendly decoration materials for office interiors.

In reality, the building is almost as transparent as in the diagram, because there is a lot of polarized glass:

The main building, in which the data center is located

For comparison - here is a small container data center with seven racks, which we installed in three months: we threw a couple of plates, and forward. A mobile data center and a neighboring blue container with a DES worked at this point during commissioning and generally maintained a number of basic services until we raised the first machine room.

Container data center

Here is the scheme of the “space cruiser”:

The scheme of the object The

hexagon in the middle is the main building, which houses the halls, the office and service areas of the Enterprise. The container data center is below, it is small and will be taken away soon. And the two blocks of cruiser “engines” are independent power units (Power modules). Let's get there first.

Here is a building with power plants outside: The energy

module, in which the DDIBP is located

Nearby in a blue container is stored diesel fuel. In winter we pour winter diesel fuel, in summer - summer. There is no need to pump it out - we produce diesel fuel at monthly routine inspections.

30,000 liter

fuel tank But let's go inside the energy module. We are greeted by such a miracle device.

Motor-generator system of a diesel-dynamic UPS

This is a whole range of devices. How it all works, we already told at the design stage, here there are details (and the animation of the manufacturer). In short, we are supplied by two independent substations from the city, which is reserved by a system of diesel generators. In the middle of the chain between the sources and the payload is a kinetic drive.

The power supply scheme of the

data center complex will consume up to 10 MW. For obvious reasons, it’s much more economical to take food from the city. But if something happens, you need to either have a huge supply of UPS batteries for the duration of the diesel warm-up, or a smaller battery supply and a pool of ice water for cooling, or your own constantly working energy center (generation). One of the best solutions is DDIBP: a kinetic drive, plus a quick-start diesel engine in standby mode. In practice, everything is much more complicated, but the general principle of work is just that.

DDIBP control panel

It should be noted that the food from the city comes to us is not the highest quality. When it worsens or disappears, due to the energy of the kinetic accumulator (a seven-ton flywheel untwisted to great speed). The energy of the residual rotation of the flywheel is enough with a margin to start the diesel engine, even from the second attempt. Of the pluses - the same system makes it very easy to cope with most power surges - roughly speaking, in the middle of the circuit, the DDIBP smooths an average of about 80% of peaks and dips without turning on the motor-generator. Another advantage of using DDIBP is that there is no problem with the delay in switching to the backup power system, since the kinetic drive is constantly connected to the circuit.

The fuel purification system, in addition to conventional filtration, also includes an impurity separation system that purifies the fuel immediately before being fed to the fuel pump.

Filter separator for the fuel supply system

Cylinder covers

Solenoid valve for the fuel supply system

One of our important tasks now is to guarantee energy efficiency with low consumption.

The pipe leads to the second floor, where exhaust silencing and purification systems are located. In particular, in the 4.5-meter two-ton silencers

Last time we promised to show the size of the silencers. They promised - we are fulfilling:

At the DDBI plant in Germany

This is a fuel tank

Solarium for diesel generator sets is stored in large quantities in a blue house outside, and in this tank there is the necessary reserve for an hour or two of work (depending on the load). The tanks are connected, the fuel can be delivered endlessly, and it will first be poured into the main storage, then - here, hereinafter - into the diesel generator itself. Of course, the second energy module is the same, and there is its own independent fuel storage.

Ventilation exhaust fan

Pipelines for diesel engine cooling system. Refrigerant circulates in the pipes, which passes through radiators installed on the second floor.

To reduce losses, the flywheel rotates in gaseous helium. While he is in this environment, the cost of maintaining rotation even at wild speed is minimal, that is, it is enough to invest energy in it for promotion, and then it consumes very little. Efficiency> 99%. Then, when necessary, the flywheel transfers the accumulated energy to the system.

>

> Reducer-distributor of helium supply to the kinetic drive

Emergency stop valve for the diesel engine with a “fire” signal or emergency shutdown with a red button. It is used only as a last resort, and actually shuts off oxygen to the motor

The fire is extinguished by gas Novec 1230 - in the applied concentration it is not dangerous to humans. Our fire extinguishing system is smart: in the event of a fire on a diesel engine, it gives a chance to cope with the fire yourself. This increases the survivability of the data center.

Starting batteries

Power cables from the generator to the load (DPC)

Fire alarm siren. As soon as it turns on - you need to run out of the room.

Ventilation control cabinet

Circuit breaker circuit breaker

Here is protection from the fool. The lock closes critical panels. To press something, you need to remove the plastic curtain by rotating it 180 degrees. Then press the button hard enough. Such protection options are made so that nothing is done by chance without a full awareness of what is happening.

The smoke exhaust system button is located in front of the room entrance.

Here, the test load is connected to this unit from the outside for routine inspection of the DDIBP:

The load is connected by a cable

that is very flexible for its diameter. And here is the load itself. Rheostat tractor. The trailer is something like a huge air heater that effectively heats the air in order to take a lot of power from the energy module. Not that the winter in Yaroslavl became warmer, but they could do what they could.

Rheostat tractor

And these are two interesting objects. Lightning trap and lightning conductor. More precisely, on the power module, a high mast structure is a passive lightning rod - a metal pin that discharges a discharge deep underground. And then, in the center, on the roof of the data center, you can already see an active installation of a similar purpose, it allows you to achieve a similar effect only with much smaller sizes.

Passive and active lightning rods The

second module is symmetrical to the first. The only thing that distinguishes its surroundings is this additional object. This is a separate DES for the office, the requirements for uninterrupted power supply are much lower, which means that you do not need to expend the power of the DDIBP.

DES for the office

Now we go down to the metro line to the Kremlin.

Cable collector

In fact, of course, this is a cable collector, but if you turn off the lights correctly, you can devote newcomers to the secrets of the USSR.

You can still do it like this:

Here are cables the thickness of the arm of a healthy engineer. Well, or with the leg of a pretty girl. For the scraps of such a cable was a real hunt for some installers at a construction site. According to their ideas, he is probably golden. They got a little, but they spoiled us pretty badly.

The cable, by the way, is for the most part domestic, produced by the Kolchuginsky plant from the Vladimir region. There were no problems at our facility, but, of course, the cable should always be checked at the time of reception - it can be brought with a break, or with broken insulation, and much more.

"Glamorous" fire foam. During installation, it expands and very tightly covers everything that can, in order to prevent the spread of fire and smoke through the holes where the cable passes, in this case.

So, there is food. The data center lacks more cooling and the Internet. And beer, but the brewery is right outside the window. With the transport network, everything is fine with us, there are two main communication rooms that reserve each other. There were 4 inputs of the main optics brought to the data center, we provided for the possibility of installing 100Gbit / s transponders.

One day we will tell you more about the device of our communication center.

Now let's go look at the cooling.

You can read more about cooling design here .

Again, briefly the main things:

The Natural Free Cooling Free Organization system organization

diagram works like this. In the "face" (mixing chamber), our data center is blown by the wind. Cold air enters the main duct, gives off cold to the internal circuit, then flies further through the data center and goes into the steppe. The hot environment of the machine room transfers heat through the heat exchanger to the external space of the hangar, in which the data center modules are located. Hot updrafts go up through openings in the roof or forcibly through the mixing chamber. Simple yet, right ?

The layout of the main duct

The first one who meets us is the "spider." This is a chiller. We call him a spider because of its characteristic shape.

Chiller

Why a chiller in a data center with free cooling? That's because if the external temperature rises above 24 degrees Celsius, the halls will not cool with the desired efficiency. Let me remind you that we decided to build a data center in Yaroslavl also because it is cooler in the summer (remember the night) than in Moscow, and you can save on cooling.

Nevertheless, up to about a month in a year, on average, the temperature rises above the specified limit. In this case, we use a second cooling system on chillers that cool water in a huge pipeline network. Again, simplifying to indecency - these are large refrigerators that cool the water. They require much more food, but nowhere to go. Reservation of N + 2 chillers, which means three units are installed at once on the first machine.

Do you recognize these heroes?

Expansion tanks

The circuit holds 180 tons of water in total.

Main collector

Drain cock

Another spider, seen from the other side (the control panel is on the side)

Balancing valve

If the water ever has to be drained in an emergency, it flows into special pits.

The pumps circulate water through the circuit; the second pump is a standby.

Freon pipelines lead to the roof. The bends are oil-lift loops that let the oil return to the compressor.

Soon we will be done with the hardware and head into the hall. It is right behind this wall. But first, an important point. Outside air alone can cool the halls with the required margin only 8 months a year, and we run the chillers for two to four weeks. So where did the other three to three and a half months go? Take a look at this device.
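The "missing months" question is simple arithmetic. A quick check in Python, using only the numbers from this paragraph (the 52/12 weeks-per-month conversion is our assumption):

```python
# Yearly cooling budget from the figures quoted in the text:
# about 8 months of pure outside-air cooling and 2-4 weeks of chillers.
WEEKS_PER_MONTH = 52 / 12  # average weeks per month, about 4.33


def remaining_months(free_cooling_months: float, chiller_weeks: float) -> float:
    """Months not covered by either free cooling or chiller operation."""
    return 12 - free_cooling_months - chiller_weeks / WEEKS_PER_MONTH


for weeks in (2, 4):
    print(f"chillers {weeks} weeks -> {remaining_months(8, weeks):.1f} months unaccounted for")
```

With two weeks of chillers about 3.5 months remain, with four weeks about 3.1.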

MNFC - heat exchanger

This is the heat exchanger chamber: the hall's cold corridor begins here, and its hot corridor ends here. In other words, this device turns hot air into cold air. It is the key element of the cooling system, and it works both in outside-air cooling mode and in water cooling mode.

In the main mode, a large volume of outside air is passed through the heat exchanger.

If the outside air is too hot, we close the air intake and cool the heat exchange chamber with chilled water from the chillers, spending electricity on cooling.

Behind this grate is the heat exchanger.

But when the air is only 3-4 degrees too warm (up to 24 degrees), which is what happens in those "missing" months, we use a trick from school physics. We simply saturate the air with moisture (a device like this drives it through a wall of water mist), and evaporation produces the required temperature drop in an adiabatic process. This is far more economical than running the chillers and is widely used together with free cooling.
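The three modes can be summarized as a decision rule. The Python sketch below is an illustration, not our automation logic: the 20 °C free-cooling limit and the 0.8 mist-section effectiveness are assumptions, only the 24 °C limit comes from the text, and the wet-bulb temperature is estimated with Stull's (2011) empirical formula (valid roughly for 5-99 % relative humidity and -20 to 50 °C). An adiabatic stage can only cool air toward its wet-bulb temperature, which is why humid air may still need the chillers even below 24 °C.

```python
import math

# Assumed values for illustration; only the 24 °C limit is quoted in the text.
FREE_COOLING_MAX_C = 20.0   # assumed: outside air this cool needs no help
ADIABATIC_MAX_C = 24.0      # from the text: above this, chillers are needed
MIST_EFFECTIVENESS = 0.8    # assumed fraction of the wet-bulb depression removed


def wet_bulb_c(t_c: float, rh_pct: float) -> float:
    """Wet-bulb temperature from dry-bulb (°C) and relative humidity (%), Stull (2011)."""
    return (t_c * math.atan(0.151977 * math.sqrt(rh_pct + 8.313659))
            + math.atan(t_c + rh_pct)
            - math.atan(rh_pct - 1.676331)
            + 0.00391838 * rh_pct ** 1.5 * math.atan(0.023101 * rh_pct)
            - 4.686035)


def after_mist_c(t_c: float, rh_pct: float) -> float:
    """Direct evaporative cooling: air moves part of the way toward its wet-bulb temperature."""
    return t_c - MIST_EFFECTIVENESS * (t_c - wet_bulb_c(t_c, rh_pct))


def cooling_mode(t_c: float, rh_pct: float) -> str:
    """Pick the cheapest mode that still delivers cool enough air (illustrative rule)."""
    if t_c <= FREE_COOLING_MAX_C:
        return "free cooling"
    if t_c <= ADIABATIC_MAX_C and after_mist_c(t_c, rh_pct) <= FREE_COOLING_MAX_C:
        return "adiabatic"
    return "chillers"


for t, rh in [(15, 60), (22, 50), (24, 90), (27, 40)]:
    print(f"{t} °C, {rh} % RH -> {cooling_mode(t, rh)}")
```

On a dry 22 °C day the mist brings the air down to roughly 17 °C, so the adiabatic mode suffices; on a humid 24 °C day the wet-bulb temperature is too close to the dry-bulb one, and the rule falls back to the chillers.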

Here an exhaust duct runs right through the building. It is essentially a very wide hot corridor.

Roof skylights. Their flaps open when needed and let the hot air currents out.

That's it, now for the machine rooms. Halls two through six currently look like this: their modules have not been installed yet.

But the first module is up and running and is already filling up. We arrived just as some of the servers were already working while the hall was still half empty.

Data Center Module

Since our racks are standard and the hardware is largely homogeneous (this is our own data center, and we know exactly what will be installed here), we were able to install all the I/O points at once.

Power sockets for connecting racks

Another advantage of standard racks is that the dimensions of the cold and hot corridors are known in advance. Here cold air blows up from the raised floor. These panels are currently covered with polyethylene: the corridor is not in use yet, and we don't want to waste cold air. By the way, these cold corridors are perhaps the last reminder of why admins always wear sweaters: it is very cold and drafty in there.

Cold air corridor

And this is our teleportation device, a prototype.

Seriously, these are the doors that close off the cold corridor so that cooling works as efficiently as possible. This is how it looks in the part of the hall where the racks are already installed.

Racks

And here are the racks themselves. The following equipment is currently installed:

- Oracle Hi-End and Mid-Range servers;

- Storage built on HDS, HP, IBM and Brocade equipment;

- The network core is built on Avaya equipment;

- HP servers.

Power distribution system for racks: busbars with power take-offs

Our structured cabling system (SCS) is a Panduit top-of-rack design

Top-of-rack

Cross-connect cassettes: the patching rates a four-minus on a five-point scale, and will soon be a five

Neat cabling is our little obsession. Even in the Moscow test zone, where everything changes daily and the equipment is a complete jumble, the cabling is more or less tidy. We know from experience that unlabeled and sloppily installed cables can cause service outages.

Patching of the aggregation switches

Active Network Equipment

10% of the hall is designed for high-density racks of up to 20 kW each. The most powerful racks are placed in the center of the hall, where, because of how fast-moving air flows behave under the raised floor, there is a reserve of cooling.
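Why air flow is the limiting factor for a 20 kW rack follows from the sensible-heat balance P = ρ · c_p · Q · ΔT. The Python sketch below solves it for the volume flow Q. The air properties are textbook values near room temperature; the 10 K inlet-to-outlet temperature rise and the 5 kW comparison rack are assumptions for illustration, not design figures of our hall.

```python
AIR_DENSITY = 1.2   # kg/m^3, air near 20 °C at sea level
AIR_CP = 1005.0     # J/(kg*K), specific heat of air at constant pressure


def required_airflow_m3s(heat_w: float, delta_t_k: float) -> float:
    """Air volume flow needed to carry heat_w away with a temperature rise of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_w / (AIR_DENSITY * AIR_CP * delta_t_k)


for rack_kw in (5, 20):
    q = required_airflow_m3s(rack_kw * 1000, delta_t_k=10)  # assumed 10 K rise
    print(f"{rack_kw:>2} kW rack: {q:.2f} m^3/s ({q * 3600:.0f} m^3/h)")
```

At the same temperature rise, four times the power needs four times the air, roughly 1.66 m³/s per 20 kW rack under these assumptions, which is why such racks go where the under-floor supply has the most headroom.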

And here are the storage systems. They have not been connected yet. Somewhere in here, data about your account balance will be stored.

Tape library

These are fan heaters. We used them to test the hall's ability to remove heat. Yes, really: we simply heated it up and watched what happened.

Tap-off from the busbar trunking.

Novec again: there is enough in reserve for two discharges of the fire suppression system.

This is how the power cables we saw in the basement enter the hall.

Now let's look at the offices of the admins and others.

The main entrance to the data center building

Walking around the data center, we see temporary modular buildings: the dining room and the "office," where the shift worked during construction, while the office premises were not yet ready. The real office is much better than such a "container."

Temporary office and dining room

At the entrance there is a scale model of the data center:

The cooling circuit is highlighted:

On the roof you can see the chillers' condenser units:

The power supply and trunk inputs can also be highlighted:

Before we go inside, I'll show you the helmets in the locker room. One of the builders turned out to be an artist and painted his helmet, and the idea caught on.

Open space

The wall is a blackboard.

And here is a rest room for the administrators on shift.

Coffee Point

Private office

Bathroom

Back outside! We have walked all around, but we haven't been on the roof yet. The chillers are up there, so let's take a close look:

Here is the roof itself. It is very large and striped. From a satellite, our data center looks like a round logo.

Here we are approaching the chillers' outdoor units. They are like those of household air conditioners, only a bit bigger.

These "windows," or skylights, are where the hot air finally leaves the building naturally.

Skylights

Each skylight has its own weather station, which sends data to the automation system.
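A per-skylight weather station makes it possible to decide separately for each roof skylight (the "anti-aircraft lights") whether its flaps should open. The Python sketch below is purely illustrative: the article only says that the flaps open when needed and that hot air can otherwise be driven out through the mixing chamber; the wind limit, the rain rule, and the names `WeatherReading` and `exhaust_route` are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical limit: the real control rules are not described in the article.
MAX_WIND_MS = 15.0  # assumed: above this wind speed the flaps stay shut


@dataclass
class WeatherReading:
    """One report from the weather station mounted on a skylight (hypothetical format)."""
    skylight_id: str
    wind_speed_ms: float
    raining: bool


def exhaust_route(reading: WeatherReading) -> str:
    """Decide how hot air leaves at one skylight (illustrative rule only)."""
    if reading.raining or reading.wind_speed_ms > MAX_WIND_MS:
        # Bad weather here: keep these flaps closed, exhaust through the mixing chamber.
        return "mixing chamber"
    # Otherwise open the flaps and let the hot air rise out naturally.
    return "open flaps"


readings = [
    WeatherReading("north", wind_speed_ms=4.0, raining=False),
    WeatherReading("south", wind_speed_ms=18.0, raining=False),
    WeatherReading("east", wind_speed_ms=3.0, raining=True),
]
for r in readings:
    print(r.skylight_id, "->", exhaust_route(r))
```

Deciding per skylight rather than for the whole roof means a gust or shower on one side of the building does not force every flap shut.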

And here is the end point of the freon pipelines we saw earlier. There are so many pipes because each unit has two circuits, and there are two units per chiller (that is, the chiller itself has four circuits).

Elements of general ventilation systems of the building.

Here is the lightning protection mast:

This is our data center in Yaroslavl. So far only the first module of the flagship data center, designed for 236 racks, has been launched, and we are gradually moving some of our production systems there. By the way, the Federal Monitoring Center has already moved in, and the girls from the integrated service center will be arriving soon. So don't lose heart.