Budget SAN Storage on LSI Syncro, Part 1


So, I am continuing my occasional articles on the topic "how to avoid paying HP/EMC/IBM many kilo- (or even mega-) dollars and still build storage that is no worse." I never brought the previous series to a victorious end, but I did manage to put about 90% of my thoughts into text.

Our current goal is fault-tolerant All-Flash storage (that is, SSDs only, no hard drives, although this is not essential) for a vSphere cluster, several times cheaper than branded counterparts and with very good performance. We will connect to it via Fibre Channel, but nothing prevents you from using iSCSI, FCoE, or even, horror of horrors, InfiniBand.

Syncro

As the title implies, the foundation of this whole setup is a rather unique product on the market: Syncro CS from LSI (now Avago).

What is it, and what makes it remarkable?

Essentially, it is a kit of two ordinary LSI 9286-8e controllers (or 9271-8i if you need internal ports) plus two supercapacitor modules that let each controller save its cache to onboard flash in case of power loss. The kit costs several times more than a similar kit without HA functionality. But compared with DRBD-based solutions, this difference is more than offset by not needing a duplicate set of drives.

The most interesting part, though, is the firmware. Thanks to it, when these controllers are connected to the same SAS fabric (for example, a disk enclosure with expanders), they establish communication with each other over it and work as a failover cluster.

What matters to us here:

- The ability to create RAID arrays accessible from two servers at once

- Fault tolerance at the controller level: if one of them (or the entire server) dies, the other continues to work and serve I/O

Over the SAS fabric, the controllers synchronize their write caches (so-called cache coherency), exchange heartbeats to tell each other they are alive and well, and so on.

They also have some important peculiarities:

- Because of the fault-tolerant nature of the solution, LSI Syncro supports only dual-port SAS drives. So no SATA SSDs, unfortunately, which noticeably increases the cost of the storage.

- As far as I understand, the controllers support no more than 96 SAS drives. The documentation also contains the phrase "Maximum of 120 dual-ported SAS devices in the HA storage domain", which contradicts "Up to 96 ea 6G SAS and/or NearLine-SAS dual-ported HDDs and SSDs" two lines above it. So we will go with the lower number.

- When an array is created on one of the controllers, that controller becomes its owner (master) until, for example, the server it is installed in reboots. Ownership then passes to the second controller.

- When the first controller comes back, ownership of the array does not return to it but stays with the second.

- The controllers use the I/O Shipping mechanism: when an I/O request arrives at the controller that is not currently the owner of the array, it forwards the request over the SAS bus to the owner controller, which executes it and sends the result back.

- As a result, performance through the non-owner (slave) controller is several times lower than through the owner: roughly 2 times lower for sequential throughput and 7 (!) times lower for IOPS.

- Currently there is no way to make the owner controller voluntarily hand ownership of the array over to the second controller, which is very strange. Especially since during a soft reset (controlled failover) of the owner, the log messages on the second controller show that the owner announces its reboot and asks the peer to take over the arrays. LSI promised to add something to its utilities, but for now... only a reboot, only hardcore.

- The OS on the server with the (currently) non-owner controller does see the owner's arrays, but the controller management utilities (StorCLI, MegaCLI, ...) will not show those arrays (or the disks they consist of). As soon as ownership of the arrays passes to this controller, the arrays and disks immediately appear in the utilities' output and can be managed.
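The ownership rules above can be summarized as a small state model. The following is a toy bash sketch, not LSI firmware logic: the controller names A and B, the array names and all functions are hypothetical, and the model encodes only what is listed above (the creating controller becomes the owner, the peer takes over on reboot, there is no failback, and I/O arriving at the non-owner is shipped to the owner).

```bash
#!/usr/bin/env bash
# Toy model of the Syncro CS ownership rules (NOT LSI firmware logic).
# Controller names A/B, array names and all functions are hypothetical.

declare -A owner=()   # array name -> controller that currently owns it

peer() {              # the other controller of the pair
  if [[ $1 == A ]]; then echo B; else echo A; fi
}

create_array() {      # $1 = array, $2 = controller it is created on
  owner[$1]=$2        # the creating controller becomes the owner
}

reboot_ctrl() {       # $1 = controller that reboots or dies
  local arr
  for arr in "${!owner[@]}"; do
    if [[ ${owner[$arr]} == "$1" ]]; then
      owner[$arr]=$(peer "$1")   # the peer takes the array over
    fi
  done
  # when $1 comes back, nothing is handed back: there is no failback
}

io() {                # $1 = array, $2 = controller the request arrives at
  if [[ ${owner[$1]} == "$2" ]]; then
    echo "local"                    # the owner serves I/O at full speed
  else
    echo "shipped to ${owner[$1]}"  # I/O Shipping over the SAS link
  fi
}

create_array r10 A
io r10 B        # prints: shipped to A
reboot_ctrl A
io r10 B        # prints: local
io r10 A        # prints: shipped to B
```

The last line shows the practical consequence: after the first node returns, it stays on the slow, shipped path until the other node is rebooted.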

ALUA

Since array performance differs between the owner and the non-owner controller, we have to build the storage around ALUA (Asymmetric Logical Unit Access), which was invented precisely for such cases. It has many features, but what matters to us is that some storage ports can be marked as Optimized (i.e., preferred) and others as Non-Optimized (not preferred). All of these ports can handle I/O at any time, but as long as there are live paths to Optimized ports, the initiator uses those and keeps the rest in reserve. If all Optimized ports fail, the initiator (ESXi in our case), after a short pause, starts sending I/O through the Non-Optimized ports.

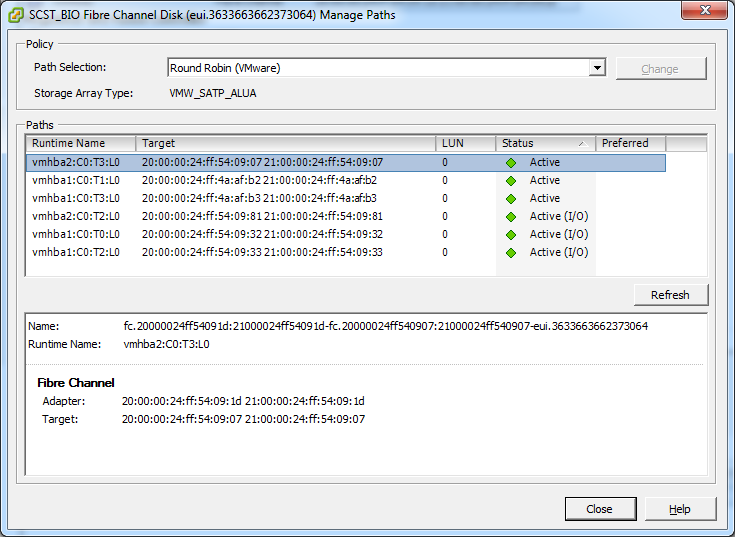

From a VMWare ESXi point of view, it looks like this:

The first three paths lead us to the subordinate storage, and the second three - to the main one, which can serve I / O at full speed.

If we had not used ALUA, then I / O requests (in Round-Robin mode) would go to both storages, which would lead to uneven delays and speed, essentially resting on the performance of the sub-repository.
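The path-selection behavior described above boils down to a simple rule. The following toy bash sketch is not ESXi's actual path selection code; the path names and the `name:state:link` format are hypothetical. It keeps only live paths and prefers Active/Optimized (AO) ones, falling back to Active/Non-Optimized (ANO) paths only when no optimized path is alive. The initiator would then round-robin across whatever set it returns.

```bash
#!/usr/bin/env bash
# Toy sketch of ALUA-aware path selection (not ESXi code).
# Each path is "name:state:link": state is AO (active/optimized) or
# ANO (active/non-optimized), link is up or down. Names are hypothetical.

select_paths() {
  local optimized=() fallback=() p name state link
  for p in "$@"; do
    IFS=: read -r name state link <<< "$p"
    [[ $link == up ]] || continue        # dead paths are never used
    if [[ $state == AO ]]; then
      optimized+=("$name")
    else
      fallback+=("$name")
    fi
  done
  # Use optimized paths while any are alive; otherwise fall back.
  if (( ${#optimized[@]} > 0 )); then
    echo "${optimized[*]}"
  else
    echo "${fallback[*]}"
  fi
}

# Paths 1-3 lead to the non-owner node, 4-6 to the owner node.
select_paths p1:ANO:up p2:ANO:up p3:ANO:up p4:AO:up p5:AO:up p6:AO:up
# prints: p4 p5 p6
select_paths p1:ANO:up p2:ANO:up p4:AO:down
# prints: p1 p2
```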

Achtung!

Many applications that work with a SAN (for example, Microsoft Cluster Services) rely on SCSI Persistent Reservations, which let an initiator lock a LUN and use it exclusively in various ways. These SCSI PRs are not replicated to the other server.

That is, if the initiator places a reservation on the LUN through a path leading to the first server, that reservation will not be visible through a path leading to the second server. This matters especially in Round Robin mode, since paths change constantly and you cannot know in advance through which path the reservation will be set. For VMware ESXi this is, in principle, not a problem: with VMFS5, SCSI PR is not used; the Atomic Test and Set (ATS) primitive is used instead (it is not replicated either, but it locks only the required region of the LUN, and only briefly, rather than the whole LUN). In addition, ALUA should tell ESXi not to use the paths to the second server while the main one is alive.

There are commercial SCST-based solutions that implement SCSI PR synchronization, but I have not tried them. Rumor has it that an open solution may also become publicly available soon.

Okay, with the theory done, let's get down to practice.

Hard & soft

Hardware

Controller servers:

- LSI Syncro CS 9286-8e kit x 1

  Syncro is now also available in a 12Gb/s version, but I haven't tried it. Besides, its MegaRAID features were cut even further (CacheCade was removed completely, whereas the previous version had it, albeit only in read-cache mode).

- Supermicro SC216 2U chassis x 2

  You could certainly use 1U, but those are very tight on expansion slots, and saving rack space was not particularly necessary.

- Supermicro X10SRL-F motherboard x 2

  Chosen for its large number of PCI-E slots.

- Intel Xeon E5-1650 v3 processor x 2

  Chosen for its high clock speed.

- DDR4-2133 16GB ECC Registered memory x 8

  Our setup will hardly need much memory (although if you add an enclosure with hard drives, it is quite useful as a read cache), so you could take less; this amount was chosen with the 4-channel memory controller and module mirroring in mind.

- Dual-port Fibre Channel QLogic 2562 controllers x 4

  16Gbit QLogic 2672 cards also auditioned for this role, but they ran into driver compatibility problems, and I don't have 16Gbit switches anyway. So a magic wand was waved and, for the time being, they turned into dual-port 10Gb network cards with an astronomical price... :)

- Intel DC S3500 80GB x 4

  A pair in each server (RAID1) for the OS. Yes, you could use USB sticks or SATA DOMs instead, but the former have poor reliability (they sometimes drop off) and the latter are not hot-swappable.

Disk shelf:

- Supermicro SC417E26 chassis for 72 2.5" drives x 1

  Inside it has three identical backplanes for 24 drives each, with dual expanders for fault tolerance. There is an even more monstrous model, the SC417E16-RJBOD1 for 88 drives, but it has only single expanders, which hurts reliability, and not enough expansion slots: ideally we need at least three (for brackets with external SAS ports: two for connecting to the servers and one for cascading enclosures).

- Hitachi S842E400M2 400GB SAS SSD x 48

  At the time of purchase, these were among the most reliable and affordable drives on the market (from the Syncro compatibility list). The manufacturer promises an endurance of 30 petabytes written, or 40 full drive writes per day for 5 years. Well, time will tell...

- Miscellaneous small items: a Supermicro CSE-PTJBOD-CB2 power board (so that the chassis powers on without a motherboard, plus monitoring of the chassis power supply via I2C/SAS), internal and external SAS cables (for linking the backplanes to each other and connecting the servers to the shelf), etc.
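A quick sanity check shows that the two endurance figures quoted for these SSDs are consistent with each other. The sketch below assumes decimal units (1 PB = 1,000,000 GB) and ignores leap days.

```bash
#!/usr/bin/env bash
# Do 40 drive writes per day for 5 years on a 400 GB drive add up to ~30 PB?
# Decimal units assumed (1 PB = 1,000,000 GB); leap days ignored.

endurance_gb() {   # $1 = capacity in GB, $2 = drive writes per day, $3 = years
  echo $(( $1 * $2 * 365 * $3 ))
}

total=$(endurance_gb 400 40 5)
echo "$total GB written, ~$(( total / 1000000 )) PB"
# prints: 29200000 GB written, ~29 PB
```

About 29.2 PB, which the vendor rounds to 30 PB.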

FC switches:

- Cisco MDS9148 x 2

Relatively inexpensive, high-quality devices (I took the version with 16 activated ports).

They now seem to be end-of-sale, replaced by the 16Gbps 9148S.

Cost & performance

All this happiness (including the switches) cost about 4 million rubles at the time of purchase (November 2014), which is very, very inexpensive for 19.2 TB of raw flash capacity. For comparison: for the low-end dual-controller MSA 2040 with 24 x 800GB SSDs on board, HP currently asks about 18 million (yes, I know about all kinds of discounts, and the dollar has risen since, but I think the order of magnitude is about right).
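Both configurations happen to have the same raw capacity (48 x 400GB and 24 x 800GB are both 19.2 TB), so a per-terabyte comparison is straightforward. A small bash sketch using only the approximate prices quoted above; note that the DIY total also includes the FC switches, so this is only a rough comparison.

```bash
#!/usr/bin/env bash
# Rough price per raw terabyte from the figures quoted in the text
# (approximate prices in rubles). Decimal units assumed: 1 TB = 1000 GB.

raw_gb() {       # $1 = number of drives, $2 = drive size in GB
  echo $(( $1 * $2 ))
}

rub_per_tb() {   # $1 = price in rubles, $2 = raw capacity in GB
  echo $(( $1 * 1000 / $2 ))
}

diy=$(rub_per_tb 4000000 "$(raw_gb 48 400)")    # 48 x 400GB = 19200 GB
msa=$(rub_per_tb 18000000 "$(raw_gb 24 800)")   # 24 x 800GB = 19200 GB
echo "DIY: $diy RUB/TB, MSA 2040: $msa RUB/TB"
# prints: DIY: 208333 RUB/TB, MSA 2040: 937500 RUB/TB  (about 4.5x apart)
```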

As for speed, HP promises no more than 85k IOPS for the MSA, while ours will be somewhat faster: from one node I got 720k IOPS at 75% CPU load with the vdisk_nullio backend (it returns zeros on reads and discards writes, a kind of /dev/null analogue).

Oddly, as IOPS grow from 600k to 720k, the CPU load jumps sharply from 40% to 75%; below that, it grows evenly. Sequential throughput is, as expected, limited by the FC interfaces: about 3 GB/s (in theory 4 ports of 800 MB/s each, given the 8b/10b encoding of 8Gbit FC).
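The ~3 GB/s ceiling follows from simple arithmetic. A sketch, assuming the standard 8GFC line rate of 8.5 GBaud: 8b/10b encoding leaves 8 data bits per 10 line bits, i.e. about 850 MB/s per port per direction before frame overhead, which is commonly quoted as 800 MB/s.

```bash
#!/usr/bin/env bash
# Back-of-the-envelope FC ceiling for one node with 4 x 8Gbit FC ports.
# Assumes the 8GFC line rate of 8.5 GBaud and 8b/10b encoding
# (8 data bits per 10 bits on the wire); frame overhead is ignored here.

fc_port_mbs() {   # $1 = line rate in MBaud -> MB/s per port, per direction
  echo $(( $1 * 8 / 10 / 8 ))
}

raw=$(fc_port_mbs 8500)   # 850 MB/s before frame overhead
nominal=800               # the commonly quoted 8GFC figure used in the text
echo "per port: $raw MB/s encoded limit, ~$nominal MB/s usable"
echo "4 ports: ~$(( 4 * nominal )) MB/s"
# prints ~3200 MB/s for 4 ports, in line with the ~3 GB/s measured
```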

From Syncro I managed to squeeze out 420k IOPS, which is close to the theoretical limit of 450k IOPS promised by LSI. When working with SSDs, it is very important to disable the write cache on the array (write-through mode) and enable Direct I/O; otherwise performance tops out at 150k IOPS, even for reads. This is how LSI FastPath technology works: it is activated only when both of these conditions are met.
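The two FastPath prerequisites can be set per virtual drive with StorCLI. A minimal sketch, assuming StorCLI's `/cX/vY set wrcache=WT` and `/cX/vY set iopolicy=Direct` syntax (check it against your StorCLI version's help). Controller and VD numbers are placeholders, and, given the ownership quirk described earlier, the commands must be run on the node whose controller currently owns the array. The wrapper function and the APPLY switch are hypothetical conveniences: by default the script only prints the commands.

```bash
#!/usr/bin/env bash
# Sketch: switch a virtual drive to write-through + Direct I/O so FastPath can engage.
# /c0 and /v0 are placeholders: look up the real numbers with
# "storcli64 /c0/vall show" on the node whose controller owns the array.
# Dry run by default; set APPLY=1 to actually execute the commands.

STORCLI=${STORCLI:-storcli64}

fastpath_settings() {   # $1 = controller index, $2 = virtual drive index
  local target="/c$1/v$2" setting
  for setting in wrcache=WT iopolicy=Direct; do
    if [[ ${APPLY:-0} == 1 ]]; then
      "$STORCLI" "$target" set "$setting"
    else
      echo "$STORCLI $target set $setting"
    fi
  done
}

fastpath_settings 0 0
# prints:
# storcli64 /c0/v0 set wrcache=WT
# storcli64 /c0/v0 set iopolicy=Direct
```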

Sequential read speed from the array reaches 3.8 GB/s. Here we are most likely limited by the performance of the controller itself (the PCI-E 3.0 x8 bus can move about 8 GB/s).
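For reference, the PCI-E 3.0 x8 figure works out as follows: 8 GT/s per lane with 128b/130b encoding gives roughly 985 MB/s per lane per direction, before protocol overhead, so real throughput is lower still. A small sketch:

```bash
#!/usr/bin/env bash
# Rough PCI-E 3.0 bandwidth ceiling: 8 GT/s per lane with 128b/130b encoding,
# per direction, ignoring packet/protocol overhead (real throughput is lower).

pcie3_mbs() {   # $1 = number of lanes -> MB/s (decimal, integer)
  echo $(( 8000 * 128 / 130 * $1 / 8 ))
}

echo "x8: $(pcie3_mbs 8) MB/s"
# prints: x8: 7876 MB/s  (~7.9 GB/s, vs. the 3.8 GB/s measured from the array)
```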

More detailed benchmarks, I think, will be in the second part.

Server assembly

There is nothing special to tell.

In each server we install a motherboard, processor, memory, two FC controllers and one Syncro.

I also installed a small two-drive cage (Supermicro MCP-220-82609-0N) at the rear of the chassis and put the OS SSDs in it. The chassis's main drive bay is unused for now; in the future it could also be connected to Syncro, although that would break the fault tolerance of the solution (shutting down one node would take that backplane down with it).

An important reliability tip: enable memory mirroring in the BIOS; it is a kind of RAID1 for RAM.

You get half the usable memory, but reliability increases dramatically. Also run memtest for a week, to be sure. Check the BIOS logs for ECC errors; if there are any, replace the faulty module and run the test again. Even if these are only correctable errors for now, they can later become fatal; there have been such cases.
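Besides the BIOS logs, Linux can report ECC errors through the EDAC subsystem, which the kernel config later in this article enables. A minimal sketch, assuming an EDAC driver for your memory controller is loaded: it prints the corrected (`ce_count`) and uncorrected (`ue_count`) counters of each memory controller under `/sys/devices/system/edac/mc` and returns non-zero if any counter is above zero. EDAC_ROOT is a hypothetical override so the logic can be tried on a fake directory.

```bash
#!/usr/bin/env bash
# Sketch: report ECC error counters exposed by the Linux EDAC subsystem.
# Returns 1 if any memory controller shows corrected or uncorrected errors.
# EDAC_ROOT is a hypothetical override for testing.

EDAC_ROOT=${EDAC_ROOT:-/sys/devices/system/edac/mc}

ecc_report() {
  local mc ce ue status=0
  for mc in "$EDAC_ROOT"/mc*; do
    [[ -r $mc/ce_count ]] || continue   # no EDAC driver / no such controller
    ce=$(<"$mc/ce_count")
    ue=$(<"$mc/ue_count")
    echo "$(basename "$mc"): corrected=$ce uncorrected=$ue"
    (( ce > 0 || ue > 0 )) && status=1
  done
  return $status
}

ecc_report || echo "ECC errors detected: find and replace the failing module"
```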

Wiring diagram

SAS

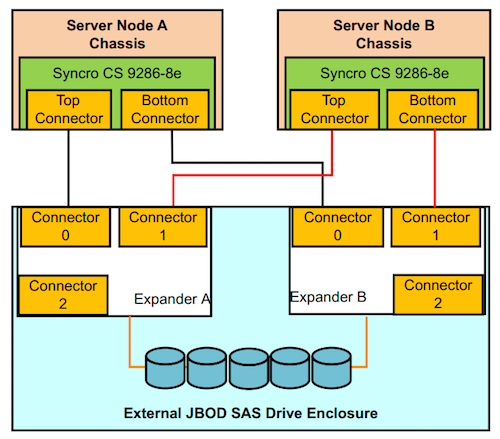

The following scheme is brazenly stolen from the documentation for Syncro: The

deep meaning of this connection is that if one of the disk shelves fails, the

second will continue to work. If you connect the shelves one after the other (daisy chain),

then if the first shelf in the chain fails, communication with all the others will also be lost.

And here we have something like a “ring” topology, when additional shelves are connected

between the two.

In our case, there is only one shelf (even though it has three backplanes), so we will connect it

as usual, something like this:

Inside the shelf, backplanes are connected in series.

FC

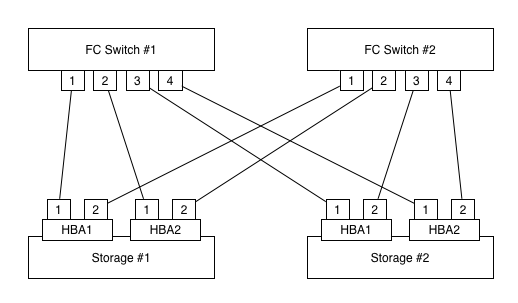

We connect the servers to FC switches as follows:

Thus, if any of the HBA cards and / or one switch fails, we will have connectivity.

Memorize this! The switches must not be interconnected by trunks or any other ISLs!

They must form two independent fabrics.

That way, if we make a mistake configuring one of them (or its software fails), the other one is not affected (in FC, zoning and other settings apply to all switches in a fabric).

I did the zoning on a "one initiator port, one storage port" basis.

With many initiators this is laborious, since the number of zones equals [number of initiator ports] x [number of storage ports]. But given the lists of storage and initiator ports, the zones are easy to generate with a script :) Laziness is the engine of progress.
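Such a script might look like the following minimal bash sketch (my own illustration, not the author's script). It emits Cisco MDS NX-OS zoning commands (`zone name ... vsan ...`, `member pwwn ...`, `zoneset name ...`, `zoneset activate ...`) for every initiator/storage port pair. The VSAN number, zoneset name, aliases and WWNs are hypothetical placeholders; since the two fabrics are independent, you would run it once per fabric with that fabric's ports and review the output before pasting it into the switch.

```bash
#!/usr/bin/env bash
# Sketch: single-initiator/single-target zones in Cisco MDS NX-OS syntax.
# Arguments after VSAN and zoneset name: i:ALIAS=WWN (initiator ports)
# and t:ALIAS=WWN (storage ports). All names/WWNs below are hypothetical.

gen_zones() {
  local vsan=$1 zoneset=$2; shift 2
  local -a inits=() tgts=() zones=()
  local arg i t zone
  for arg in "$@"; do
    case $arg in
      i:*) inits+=("${arg#i:}") ;;
      t:*) tgts+=("${arg#t:}") ;;
    esac
  done
  for i in "${inits[@]}"; do            # one zone per initiator x target pair
    for t in "${tgts[@]}"; do
      zone="z_${i%%=*}__${t%%=*}"
      zones+=("$zone")
      echo "zone name $zone vsan $vsan"
      echo "  member pwwn ${i#*=}"
      echo "  member pwwn ${t#*=}"
    done
  done
  echo "zoneset name $zoneset vsan $vsan"
  for zone in "${zones[@]}"; do echo "  member $zone"; done
  echo "zoneset activate name $zoneset vsan $vsan"
}

# Example: 2 initiator ports x 2 storage ports -> 4 zones (all WWNs made up)
gen_zones 10 zs_fabric_a \
  i:esx1_p1=21:00:00:24:ff:00:00:01 i:esx2_p1=21:00:00:24:ff:00:00:02 \
  t:stor1_p1=21:00:00:24:ff:00:10:01 t:stor2_p1=21:00:00:24:ff:00:20:01
```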

Software part

- Debian Linux 7 x86-64

- Kernel 3.14.xx LTS

  If extremely high performance is required, turn your bright eyes to 3.18+ kernels, which include SCSI-MQ support, allowing 1M+ IOPS on a single LUN.

  But in our case, with many initiators connecting to several LUNs, it is even harmful: according to benchmarks, SCSI-MQ is somewhat slower than the regular stack in this scenario. Another downside is that SCSI-MQ has no I/O schedulers (deadline, cfq). That is logical in general: an SSD does not need a scheduler, and on hard drives SCSI-MQ makes no sense, since their IOPS are far below the software limits. But in mixed configurations (SSD + HDD), this is likely to hurt the performance of the HDD arrays.

- SCST Target Framework 3.0.1
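The scheduler trade-off mentioned in the kernel item above can be handled per device on the classic (non-MQ) block layer used here. A minimal sketch, assuming noop for SSDs and deadline for rotational disks (that split is my assumption; the article itself only makes deadline the kernel default). It reads each device's `queue/rotational` flag in sysfs and can write the chosen name to `queue/scheduler`. SYS_BLOCK is a hypothetical override so the logic can be tried on a fake directory; on a real system, writing requires root.

```bash
#!/usr/bin/env bash
# Sketch: choose an I/O scheduler per device on the legacy block layer.
# Assumption: noop for SSDs (rotational=0), deadline for spinning disks.
# SYS_BLOCK is a hypothetical override for testing.

SYS_BLOCK=${SYS_BLOCK:-/sys/block}

pick_scheduler() {    # $1 = device name, e.g. sdb
  if [[ $(<"$SYS_BLOCK/$1/queue/rotational") == 1 ]]; then
    echo deadline
  else
    echo noop
  fi
}

apply_scheduler() {   # $1 = device name; needs root on a real system
  pick_scheduler "$1" > "$SYS_BLOCK/$1/queue/scheduler"
}

# Read-only overview: show what would be chosen for each sd* device.
for dev in "$SYS_BLOCK"/sd*; do
  [[ -r $dev/queue/rotational ]] || continue
  echo "$(basename "$dev"): $(pick_scheduler "$(basename "$dev")")"
done
```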

Everything is more or less standard here: we put the OS on Linux software RAID (mdraid), since these motherboards have no hardware RAID.

Next, we configure and build the kernel.

My config, in case anyone finds it useful:

CONFIG_64BIT=y

CONFIG_X86_64=y

CONFIG_X86=y

CONFIG_INSTRUCTION_DECODER=y

CONFIG_OUTPUT_FORMAT="elf64-x86-64"

CONFIG_ARCH_DEFCONFIG="arch/x86/configs/x86_64_defconfig"

CONFIG_LOCKDEP_SUPPORT=y

CONFIG_STACKTRACE_SUPPORT=y

CONFIG_HAVE_LATENCYTOP_SUPPORT=y

CONFIG_MMU=y

CONFIG_NEED_DMA_MAP_STATE=y

CONFIG_NEED_SG_DMA_LENGTH=y

CONFIG_GENERIC_ISA_DMA=y

CONFIG_GENERIC_BUG=y

CONFIG_GENERIC_BUG_RELATIVE_POINTERS=y

CONFIG_GENERIC_HWEIGHT=y

CONFIG_ARCH_MAY_HAVE_PC_FDC=y

CONFIG_RWSEM_XCHGADD_ALGORITHM=y

CONFIG_GENERIC_CALIBRATE_DELAY=y

CONFIG_ARCH_HAS_CPU_RELAX=y

CONFIG_ARCH_HAS_CACHE_LINE_SIZE=y

CONFIG_ARCH_HAS_CPU_AUTOPROBE=y

CONFIG_HAVE_SETUP_PER_CPU_AREA=y

CONFIG_NEED_PER_CPU_EMBED_FIRST_CHUNK=y

CONFIG_NEED_PER_CPU_PAGE_FIRST_CHUNK=y

CONFIG_ARCH_HIBERNATION_POSSIBLE=y

CONFIG_ARCH_SUSPEND_POSSIBLE=y

CONFIG_ARCH_WANT_HUGE_PMD_SHARE=y

CONFIG_ARCH_WANT_GENERAL_HUGETLB=y

CONFIG_ZONE_DMA32=y

CONFIG_AUDIT_ARCH=y

CONFIG_ARCH_SUPPORTS_OPTIMIZED_INLINING=y

CONFIG_ARCH_SUPPORTS_DEBUG_PAGEALLOC=y

CONFIG_X86_64_SMP=y

CONFIG_X86_HT=y

CONFIG_ARCH_HWEIGHT_CFLAGS="-fcall-saved-rdi -fcall-saved-rsi -fcall-saved-rdx -fcall-saved-rcx -fcall-saved-r8 -fcall-saved-r9 -fcall-saved-r10 -fcall-saved-r11"

CONFIG_ARCH_SUPPORTS_UPROBES=y

CONFIG_DEFCONFIG_LIST="/lib/modules/$UNAME_RELEASE/.config"

CONFIG_IRQ_WORK=y

CONFIG_BUILDTIME_EXTABLE_SORT=y

CONFIG_INIT_ENV_ARG_LIMIT=32

CONFIG_CROSS_COMPILE=""

CONFIG_LOCALVERSION=""

CONFIG_HAVE_KERNEL_GZIP=y

CONFIG_HAVE_KERNEL_BZIP2=y

CONFIG_HAVE_KERNEL_LZMA=y

CONFIG_HAVE_KERNEL_XZ=y

CONFIG_HAVE_KERNEL_LZO=y

CONFIG_HAVE_KERNEL_LZ4=y

CONFIG_KERNEL_XZ=y

CONFIG_DEFAULT_HOSTNAME="(none)"

CONFIG_SYSVIPC=y

CONFIG_SYSVIPC_SYSCTL=y

CONFIG_POSIX_MQUEUE=y

CONFIG_POSIX_MQUEUE_SYSCTL=y

CONFIG_FHANDLE=y

CONFIG_GENERIC_IRQ_PROBE=y

CONFIG_GENERIC_IRQ_SHOW=y

CONFIG_GENERIC_PENDING_IRQ=y

CONFIG_IRQ_FORCED_THREADING=y

CONFIG_SPARSE_IRQ=y

CONFIG_CLOCKSOURCE_WATCHDOG=y

CONFIG_ARCH_CLOCKSOURCE_DATA=y

CONFIG_GENERIC_TIME_VSYSCALL=y

CONFIG_GENERIC_CLOCKEVENTS=y

CONFIG_GENERIC_CLOCKEVENTS_BUILD=y

CONFIG_GENERIC_CLOCKEVENTS_BROADCAST=y

CONFIG_GENERIC_CLOCKEVENTS_MIN_ADJUST=y

CONFIG_GENERIC_CMOS_UPDATE=y

CONFIG_TICK_ONESHOT=y

CONFIG_NO_HZ_COMMON=y

CONFIG_NO_HZ_IDLE=y

CONFIG_HIGH_RES_TIMERS=y

CONFIG_TICK_CPU_ACCOUNTING=y

CONFIG_TASKSTATS=y

CONFIG_TASK_DELAY_ACCT=y

CONFIG_TASK_XACCT=y

CONFIG_TASK_IO_ACCOUNTING=y

CONFIG_TREE_RCU=y

CONFIG_RCU_STALL_COMMON=y

CONFIG_RCU_FANOUT=64

CONFIG_RCU_FANOUT_LEAF=16

CONFIG_IKCONFIG=y

CONFIG_IKCONFIG_PROC=y

CONFIG_LOG_BUF_SHIFT=18

CONFIG_HAVE_UNSTABLE_SCHED_CLOCK=y

CONFIG_ARCH_SUPPORTS_NUMA_BALANCING=y

CONFIG_ARCH_SUPPORTS_INT128=y

CONFIG_ARCH_WANTS_PROT_NUMA_PROT_NONE=y

CONFIG_ARCH_USES_NUMA_PROT_NONE=y

CONFIG_NUMA_BALANCING_DEFAULT_ENABLED=y

CONFIG_NUMA_BALANCING=y

CONFIG_CGROUPS=y

CONFIG_CGROUP_SCHED=y

CONFIG_FAIR_GROUP_SCHED=y

CONFIG_CFS_BANDWIDTH=y

CONFIG_RT_GROUP_SCHED=y

CONFIG_BLK_CGROUP=y

CONFIG_NAMESPACES=y

CONFIG_SCHED_AUTOGROUP=y

CONFIG_SYSCTL=y

CONFIG_ANON_INODES=y

CONFIG_HAVE_UID16=y

CONFIG_SYSCTL_EXCEPTION_TRACE=y

CONFIG_HAVE_PCSPKR_PLATFORM=y

CONFIG_UID16=y

CONFIG_KALLSYMS=y

CONFIG_PRINTK=y

CONFIG_BUG=y

CONFIG_ELF_CORE=y

CONFIG_PCSPKR_PLATFORM=y

CONFIG_BASE_FULL=y

CONFIG_FUTEX=y

CONFIG_EPOLL=y

CONFIG_SIGNALFD=y

CONFIG_TIMERFD=y

CONFIG_EVENTFD=y

CONFIG_SHMEM=y

CONFIG_AIO=y

CONFIG_PCI_QUIRKS=y

CONFIG_HAVE_PERF_EVENTS=y

CONFIG_PERF_EVENTS=y

CONFIG_VM_EVENT_COUNTERS=y

CONFIG_SLUB_DEBUG=y

CONFIG_SLUB=y

CONFIG_SLUB_CPU_PARTIAL=y

CONFIG_HAVE_OPROFILE=y

CONFIG_OPROFILE_NMI_TIMER=y

CONFIG_JUMP_LABEL=y

CONFIG_HAVE_EFFICIENT_UNALIGNED_ACCESS=y

CONFIG_ARCH_USE_BUILTIN_BSWAP=y

CONFIG_HAVE_IOREMAP_PROT=y

CONFIG_HAVE_KPROBES=y

CONFIG_HAVE_KRETPROBES=y

CONFIG_HAVE_OPTPROBES=y

CONFIG_HAVE_KPROBES_ON_FTRACE=y

CONFIG_HAVE_ARCH_TRACEHOOK=y

CONFIG_HAVE_DMA_ATTRS=y

CONFIG_GENERIC_SMP_IDLE_THREAD=y

CONFIG_HAVE_REGS_AND_STACK_ACCESS_API=y

CONFIG_HAVE_DMA_API_DEBUG=y

CONFIG_HAVE_HW_BREAKPOINT=y

CONFIG_HAVE_MIXED_BREAKPOINTS_REGS=y

CONFIG_HAVE_USER_RETURN_NOTIFIER=y

CONFIG_HAVE_PERF_EVENTS_NMI=y

CONFIG_HAVE_PERF_REGS=y

CONFIG_HAVE_PERF_USER_STACK_DUMP=y

CONFIG_HAVE_ARCH_JUMP_LABEL=y

CONFIG_ARCH_HAVE_NMI_SAFE_CMPXCHG=y

CONFIG_HAVE_ALIGNED_STRUCT_PAGE=y

CONFIG_HAVE_CMPXCHG_LOCAL=y

CONFIG_HAVE_CMPXCHG_DOUBLE=y

CONFIG_ARCH_WANT_COMPAT_IPC_PARSE_VERSION=y

CONFIG_ARCH_WANT_OLD_COMPAT_IPC=y

CONFIG_HAVE_ARCH_SECCOMP_FILTER=y

CONFIG_SECCOMP_FILTER=y

CONFIG_HAVE_CC_STACKPROTECTOR=y

CONFIG_CC_STACKPROTECTOR_NONE=y

CONFIG_HAVE_CONTEXT_TRACKING=y

CONFIG_HAVE_VIRT_CPU_ACCOUNTING_GEN=y

CONFIG_HAVE_IRQ_TIME_ACCOUNTING=y

CONFIG_HAVE_ARCH_TRANSPARENT_HUGEPAGE=y

CONFIG_HAVE_ARCH_SOFT_DIRTY=y

CONFIG_MODULES_USE_ELF_RELA=y

CONFIG_HAVE_IRQ_EXIT_ON_IRQ_STACK=y

CONFIG_OLD_SIGSUSPEND3=y

CONFIG_COMPAT_OLD_SIGACTION=y

CONFIG_SLABINFO=y

CONFIG_RT_MUTEXES=y

CONFIG_BASE_SMALL=0

CONFIG_MODULES=y

CONFIG_MODULE_UNLOAD=y

CONFIG_STOP_MACHINE=y

CONFIG_BLOCK=y

CONFIG_BLK_DEV_BSG=y

CONFIG_PARTITION_ADVANCED=y

CONFIG_MSDOS_PARTITION=y

CONFIG_EFI_PARTITION=y

CONFIG_BLOCK_COMPAT=y

CONFIG_IOSCHED_NOOP=y

CONFIG_IOSCHED_DEADLINE=y

CONFIG_IOSCHED_CFQ=y

CONFIG_CFQ_GROUP_IOSCHED=y

CONFIG_DEFAULT_DEADLINE=y

CONFIG_DEFAULT_IOSCHED="deadline"

CONFIG_PADATA=y

CONFIG_INLINE_SPIN_UNLOCK_IRQ=y

CONFIG_INLINE_READ_UNLOCK=y

CONFIG_INLINE_READ_UNLOCK_IRQ=y

CONFIG_INLINE_WRITE_UNLOCK=y

CONFIG_INLINE_WRITE_UNLOCK_IRQ=y

CONFIG_ARCH_SUPPORTS_ATOMIC_RMW=y

CONFIG_MUTEX_SPIN_ON_OWNER=y

CONFIG_ZONE_DMA=y

CONFIG_SMP=y

CONFIG_X86_SUPPORTS_MEMORY_FAILURE=y

CONFIG_SCHED_OMIT_FRAME_POINTER=y

CONFIG_NO_BOOTMEM=y

CONFIG_MCORE2=y

CONFIG_X86_INTERNODE_CACHE_SHIFT=6

CONFIG_X86_L1_CACHE_SHIFT=6

CONFIG_X86_INTEL_USERCOPY=y

CONFIG_X86_USE_PPRO_CHECKSUM=y

CONFIG_X86_P6_NOP=y

CONFIG_X86_TSC=y

CONFIG_X86_CMPXCHG64=y

CONFIG_X86_CMOV=y

CONFIG_X86_MINIMUM_CPU_FAMILY=64

CONFIG_X86_DEBUGCTLMSR=y

CONFIG_CPU_SUP_INTEL=y

CONFIG_CPU_SUP_AMD=y

CONFIG_CPU_SUP_CENTAUR=y

CONFIG_HPET_TIMER=y

CONFIG_HPET_EMULATE_RTC=y

CONFIG_DMI=y

CONFIG_SWIOTLB=y

CONFIG_IOMMU_HELPER=y

CONFIG_NR_CPUS=32

CONFIG_SCHED_SMT=y

CONFIG_SCHED_MC=y

CONFIG_PREEMPT_NONE=y

CONFIG_X86_UP_APIC_MSI=y

CONFIG_X86_LOCAL_APIC=y

CONFIG_X86_IO_APIC=y

CONFIG_X86_MCE=y

CONFIG_X86_MCE_INTEL=y

CONFIG_X86_MCE_THRESHOLD=y

CONFIG_X86_THERMAL_VECTOR=y

CONFIG_X86_16BIT=y

CONFIG_X86_ESPFIX64=y

CONFIG_MICROCODE=y

CONFIG_MICROCODE_INTEL=y

CONFIG_MICROCODE_OLD_INTERFACE=y

CONFIG_X86_MSR=y

CONFIG_X86_CPUID=y

CONFIG_ARCH_PHYS_ADDR_T_64BIT=y

CONFIG_ARCH_DMA_ADDR_T_64BIT=y

CONFIG_DIRECT_GBPAGES=y

CONFIG_NUMA=y

CONFIG_X86_64_ACPI_NUMA=y

CONFIG_NODES_SPAN_OTHER_NODES=y

CONFIG_NODES_SHIFT=2

CONFIG_ARCH_SPARSEMEM_ENABLE=y

CONFIG_ARCH_SPARSEMEM_DEFAULT=y

CONFIG_ARCH_SELECT_MEMORY_MODEL=y

CONFIG_ILLEGAL_POINTER_VALUE=0xdead000000000000

CONFIG_SELECT_MEMORY_MODEL=y

CONFIG_SPARSEMEM_MANUAL=y

CONFIG_SPARSEMEM=y

CONFIG_NEED_MULTIPLE_NODES=y

CONFIG_HAVE_MEMORY_PRESENT=y

CONFIG_SPARSEMEM_EXTREME=y

CONFIG_SPARSEMEM_VMEMMAP_ENABLE=y

CONFIG_SPARSEMEM_ALLOC_MEM_MAP_TOGETHER=y

CONFIG_SPARSEMEM_VMEMMAP=y

CONFIG_HAVE_MEMBLOCK=y

CONFIG_HAVE_MEMBLOCK_NODE_MAP=y

CONFIG_ARCH_DISCARD_MEMBLOCK=y

CONFIG_MEMORY_ISOLATION=y

CONFIG_PAGEFLAGS_EXTENDED=y

CONFIG_SPLIT_PTLOCK_CPUS=4

CONFIG_ARCH_ENABLE_SPLIT_PMD_PTLOCK=y

CONFIG_COMPACTION=y

CONFIG_MIGRATION=y

CONFIG_ARCH_ENABLE_HUGEPAGE_MIGRATION=y

CONFIG_PHYS_ADDR_T_64BIT=y

CONFIG_ZONE_DMA_FLAG=1

CONFIG_BOUNCE=y

CONFIG_VIRT_TO_BUS=y

CONFIG_DEFAULT_MMAP_MIN_ADDR=0

CONFIG_ARCH_SUPPORTS_MEMORY_FAILURE=y

CONFIG_MEMORY_FAILURE=y

CONFIG_TRANSPARENT_HUGEPAGE=y

CONFIG_TRANSPARENT_HUGEPAGE_ALWAYS=y

CONFIG_CROSS_MEMORY_ATTACH=y

CONFIG_X86_RESERVE_LOW=64

CONFIG_MTRR=y

CONFIG_MTRR_SANITIZER=y

CONFIG_MTRR_SANITIZER_ENABLE_DEFAULT=1

CONFIG_MTRR_SANITIZER_SPARE_REG_NR_DEFAULT=1

CONFIG_X86_PAT=y

CONFIG_ARCH_USES_PG_UNCACHED=y

CONFIG_ARCH_RANDOM=y

CONFIG_X86_SMAP=y

CONFIG_SECCOMP=y

CONFIG_HZ_100=y

CONFIG_HZ=100

CONFIG_SCHED_HRTICK=y

CONFIG_PHYSICAL_START=0x1000000

CONFIG_PHYSICAL_ALIGN=0x1000000

CONFIG_ARCH_ENABLE_MEMORY_HOTPLUG=y

CONFIG_USE_PERCPU_NUMA_NODE_ID=y

CONFIG_ACPI=y

CONFIG_ACPI_FAN=y

CONFIG_ACPI_PROCESSOR=y

CONFIG_ACPI_THERMAL=y

CONFIG_ACPI_NUMA=y

CONFIG_X86_PM_TIMER=y

CONFIG_ACPI_HED=y

CONFIG_ACPI_APEI=y

CONFIG_ACPI_APEI_GHES=y

CONFIG_ACPI_APEI_PCIEAER=y

CONFIG_ACPI_APEI_MEMORY_FAILURE=y

CONFIG_ACPI_EXTLOG=y

CONFIG_CPU_FREQ=y

CONFIG_CPU_FREQ_GOV_COMMON=y

CONFIG_CPU_FREQ_DEFAULT_GOV_PERFORMANCE=y

CONFIG_CPU_FREQ_GOV_PERFORMANCE=y

CONFIG_CPU_FREQ_GOV_ONDEMAND=y

CONFIG_X86_ACPI_CPUFREQ=y

CONFIG_CPU_IDLE=y

CONFIG_CPU_IDLE_MULTIPLE_DRIVERS=y

CONFIG_CPU_IDLE_GOV_LADDER=y

CONFIG_CPU_IDLE_GOV_MENU=y

CONFIG_INTEL_IDLE=y

CONFIG_PCI=y

CONFIG_PCI_DIRECT=y

CONFIG_PCI_MMCONFIG=y

CONFIG_PCI_DOMAINS=y

CONFIG_PCIEPORTBUS=y

CONFIG_PCIEAER=y

CONFIG_PCIEASPM=y

CONFIG_PCIEASPM_PERFORMANCE=y

CONFIG_PCI_MSI=y

CONFIG_PCI_LABEL=y

CONFIG_ISA_DMA_API=y

CONFIG_AMD_NB=y

CONFIG_BINFMT_ELF=y

CONFIG_COMPAT_BINFMT_ELF=y

CONFIG_ARCH_BINFMT_ELF_RANDOMIZE_PIE=y

CONFIG_BINFMT_SCRIPT=y

CONFIG_COREDUMP=y

CONFIG_IA32_EMULATION=y

CONFIG_X86_X32=y

CONFIG_COMPAT=y

CONFIG_COMPAT_FOR_U64_ALIGNMENT=y

CONFIG_SYSVIPC_COMPAT=y

CONFIG_X86_DEV_DMA_OPS=y

CONFIG_IOSF_MBI=m

CONFIG_NET=y

CONFIG_PACKET=y

CONFIG_PACKET_DIAG=y

CONFIG_UNIX=y

CONFIG_UNIX_DIAG=y

CONFIG_INET=y

CONFIG_TCP_ZERO_COPY_TRANSFER_COMPLETION_NOTIFICATION=y

CONFIG_IP_MULTICAST=y

CONFIG_NET_IPIP=y

CONFIG_NET_IPGRE_DEMUX=y

CONFIG_NET_IP_TUNNEL=y

CONFIG_NET_IPGRE=y

CONFIG_NET_IPGRE_BROADCAST=y

CONFIG_INET_TUNNEL=y

CONFIG_INET_LRO=y

CONFIG_INET_DIAG=y

CONFIG_INET_TCP_DIAG=y

CONFIG_INET_UDP_DIAG=y

CONFIG_TCP_CONG_ADVANCED=y

CONFIG_TCP_CONG_HTCP=y

CONFIG_DEFAULT_HTCP=y

CONFIG_DEFAULT_TCP_CONG="htcp"

CONFIG_STP=y

CONFIG_BRIDGE=y

CONFIG_HAVE_NET_DSA=y

CONFIG_VLAN_8021Q=y

CONFIG_LLC=y

CONFIG_NETLINK_MMAP=y

CONFIG_NETLINK_DIAG=y

CONFIG_RPS=y

CONFIG_RFS_ACCEL=y

CONFIG_XPS=y

CONFIG_NET_RX_BUSY_POLL=y

CONFIG_BQL=y

CONFIG_NET_FLOW_LIMIT=y

CONFIG_HAVE_BPF_JIT=y

CONFIG_UEVENT_HELPER_PATH="/sbin/hotplug"

CONFIG_DEVTMPFS=y

CONFIG_DEVTMPFS_MOUNT=y

CONFIG_STANDALONE=y

CONFIG_PREVENT_FIRMWARE_BUILD=y

CONFIG_FW_LOADER=y

CONFIG_FIRMWARE_IN_KERNEL=y

CONFIG_EXTRA_FIRMWARE=""

CONFIG_FW_LOADER_USER_HELPER=y

CONFIG_CONNECTOR=y

CONFIG_PROC_EVENTS=y

CONFIG_ARCH_MIGHT_HAVE_PC_PARPORT=y

CONFIG_PNP=y

CONFIG_PNPACPI=y

CONFIG_BLK_DEV=y

CONFIG_BLK_DEV_LOOP=y

CONFIG_BLK_DEV_LOOP_MIN_COUNT=8

CONFIG_HAVE_IDE=y

CONFIG_SCSI_MOD=y

CONFIG_RAID_ATTRS=y

CONFIG_SCSI=y

CONFIG_SCSI_DMA=y

CONFIG_SCSI_NETLINK=y

CONFIG_BLK_DEV_SD=y

CONFIG_CHR_DEV_SG=y

CONFIG_SCSI_MULTI_LUN=y

CONFIG_SCSI_CONSTANTS=y

CONFIG_SCSI_SCAN_ASYNC=y

CONFIG_SCSI_FC_ATTRS=y

CONFIG_SCSI_SAS_ATTRS=y

CONFIG_SCSI_LOWLEVEL=y

CONFIG_MEGARAID_SAS=y

CONFIG_ATA=y

CONFIG_ATA_VERBOSE_ERROR=y

CONFIG_ATA_ACPI=y

CONFIG_SATA_PMP=y

CONFIG_SATA_AHCI=y

CONFIG_SATA_AHCI_PLATFORM=y

CONFIG_MD=y

CONFIG_BLK_DEV_MD=y

CONFIG_MD_AUTODETECT=y

CONFIG_MD_RAID0=y

CONFIG_MD_RAID1=y

CONFIG_MD_RAID10=y

CONFIG_MD_RAID456=y

CONFIG_BLK_DEV_DM_BUILTIN=y

CONFIG_BLK_DEV_DM=y

CONFIG_DM_CRYPT=y

CONFIG_DM_ZERO=y

CONFIG_DM_UEVENT=y

CONFIG_NETDEVICES=y

CONFIG_NET_CORE=y

CONFIG_BONDING=y

CONFIG_NET_FC=y

CONFIG_NETCONSOLE=y

CONFIG_NETCONSOLE_DYNAMIC=y

CONFIG_NETPOLL=y

CONFIG_NETPOLL_TRAP=y

CONFIG_NET_POLL_CONTROLLER=y

CONFIG_TUN=y

CONFIG_ETHERNET=y

CONFIG_MDIO=y

CONFIG_NET_VENDOR_INTEL=y

CONFIG_E1000E=y

CONFIG_IGB=y

CONFIG_IGB_HWMON=y

CONFIG_IGB_DCA=y

CONFIG_IXGBE=y

CONFIG_IXGBE_HWMON=y

CONFIG_IXGBE_DCA=y

CONFIG_PPP=y

CONFIG_PPP_DEFLATE=y

CONFIG_PPP_FILTER=y

CONFIG_PPP_MULTILINK=y

CONFIG_PPPOE=y

CONFIG_PPP_ASYNC=y

CONFIG_PPP_SYNC_TTY=y

CONFIG_SLHC=y

CONFIG_INPUT=y

CONFIG_INPUT_MOUSEDEV=y

CONFIG_INPUT_MOUSEDEV_PSAUX=y

CONFIG_INPUT_MOUSEDEV_SCREEN_X=1024

CONFIG_INPUT_MOUSEDEV_SCREEN_Y=768

CONFIG_INPUT_KEYBOARD=y

CONFIG_KEYBOARD_ATKBD=y

CONFIG_SERIO=y

CONFIG_ARCH_MIGHT_HAVE_PC_SERIO=y

CONFIG_SERIO_I8042=y

CONFIG_SERIO_SERPORT=y

CONFIG_SERIO_LIBPS2=y

CONFIG_TTY=y

CONFIG_VT=y

CONFIG_CONSOLE_TRANSLATIONS=y

CONFIG_VT_CONSOLE=y

CONFIG_HW_CONSOLE=y

CONFIG_VT_HW_CONSOLE_BINDING=y

CONFIG_UNIX98_PTYS=y

CONFIG_SERIAL_8250=y

CONFIG_SERIAL_8250_DEPRECATED_OPTIONS=y

CONFIG_SERIAL_8250_PNP=y

CONFIG_SERIAL_8250_CONSOLE=y

CONFIG_FIX_EARLYCON_MEM=y

CONFIG_SERIAL_8250_DMA=y

CONFIG_SERIAL_8250_PCI=y

CONFIG_SERIAL_8250_NR_UARTS=2

CONFIG_SERIAL_8250_RUNTIME_UARTS=2

CONFIG_SERIAL_CORE=y

CONFIG_SERIAL_CORE_CONSOLE=y

CONFIG_HPET=y

CONFIG_HPET_MMAP=y

CONFIG_HPET_MMAP_DEFAULT=y

CONFIG_DEVPORT=y

CONFIG_I2C=y

CONFIG_I2C_BOARDINFO=y

CONFIG_I2C_CHARDEV=y

CONFIG_I2C_HELPER_AUTO=y

CONFIG_I2C_ALGOBIT=y

CONFIG_I2C_I801=y

CONFIG_I2C_SCMI=y

CONFIG_PPS=y

CONFIG_PTP_1588_CLOCK=y

CONFIG_ARCH_WANT_OPTIONAL_GPIOLIB=y

CONFIG_HWMON=y

CONFIG_HWMON_VID=y

CONFIG_SENSORS_CORETEMP=y

CONFIG_SENSORS_JC42=y

CONFIG_SENSORS_W83627EHF=y

CONFIG_SENSORS_ACPI_POWER=y

CONFIG_THERMAL=y

CONFIG_THERMAL_HWMON=y

CONFIG_THERMAL_DEFAULT_GOV_STEP_WISE=y

CONFIG_THERMAL_GOV_STEP_WISE=y

CONFIG_SSB_POSSIBLE=y

CONFIG_BCMA_POSSIBLE=y

CONFIG_VGA_ARB=y

CONFIG_VGA_ARB_MAX_GPUS=16

CONFIG_VGA_CONSOLE=y

CONFIG_DUMMY_CONSOLE=y

CONFIG_HID=y

CONFIG_HIDRAW=y

CONFIG_HID_GENERIC=y

CONFIG_HID_A4TECH=y

CONFIG_HID_APPLE=y

CONFIG_HID_BELKIN=y

CONFIG_HID_CHERRY=y

CONFIG_HID_CHICONY=y

CONFIG_HID_CYPRESS=y

CONFIG_HID_EZKEY=y

CONFIG_HID_KENSINGTON=y

CONFIG_HID_LOGITECH=y

CONFIG_HID_MICROSOFT=y

CONFIG_HID_MONTEREY=y

CONFIG_USB_HID=y

CONFIG_USB_HIDDEV=y

CONFIG_USB_OHCI_LITTLE_ENDIAN=y

CONFIG_USB_SUPPORT=y

CONFIG_USB_COMMON=y

CONFIG_USB_ARCH_HAS_HCD=y

CONFIG_USB=y

CONFIG_USB_DEFAULT_PERSIST=y

CONFIG_USB_XHCI_HCD=y

CONFIG_USB_EHCI_HCD=y

CONFIG_USB_EHCI_ROOT_HUB_TT=y

CONFIG_USB_EHCI_TT_NEWSCHED=y

CONFIG_USB_EHCI_PCI=y

CONFIG_USB_EHCI_HCD_PLATFORM=y

CONFIG_USB_UHCI_HCD=y

CONFIG_USB_ACM=y

CONFIG_USB_WDM=y

CONFIG_USB_STORAGE=y

CONFIG_USB_SERIAL=y

CONFIG_USB_SERIAL_CONSOLE=y

CONFIG_USB_SERIAL_GENERIC=y

CONFIG_USB_SERIAL_FTDI_SIO=y

CONFIG_USB_SERIAL_PL2303=y

CONFIG_USB_SERIAL_WWAN=y

CONFIG_USB_SERIAL_OPTION=y

CONFIG_EDAC=y

CONFIG_EDAC_MM_EDAC=y

CONFIG_EDAC_GHES=y

CONFIG_EDAC_I7CORE=y

CONFIG_EDAC_SBRIDGE=y

CONFIG_RTC_LIB=y

CONFIG_RTC_CLASS=y

CONFIG_RTC_HCTOSYS=y

CONFIG_RTC_SYSTOHC=y

CONFIG_RTC_HCTOSYS_DEVICE="rtc0"

CONFIG_RTC_INTF_SYSFS=y

CONFIG_RTC_INTF_PROC=y

CONFIG_RTC_INTF_DEV=y

CONFIG_RTC_DRV_CMOS=y

CONFIG_DMADEVICES=y

CONFIG_INTEL_IOATDMA=y

CONFIG_DMA_ENGINE=y

CONFIG_DMA_ACPI=y

CONFIG_ASYNC_TX_DMA=y

CONFIG_DMA_ENGINE_RAID=y

CONFIG_DCA=y

CONFIG_CLKEVT_I8253=y

CONFIG_I8253_LOCK=y

CONFIG_CLKBLD_I8253=y

CONFIG_FIRMWARE_MEMMAP=y

CONFIG_DMIID=y

CONFIG_DMI_SCAN_MACHINE_NON_EFI_FALLBACK=y

CONFIG_UEFI_CPER=y

CONFIG_DCACHE_WORD_ACCESS=y

CONFIG_EXT4_FS=y

CONFIG_EXT4_USE_FOR_EXT23=y

CONFIG_JBD2=y

CONFIG_FS_MBCACHE=y

CONFIG_EXPORTFS=y

CONFIG_FILE_LOCKING=y

CONFIG_FSNOTIFY=y

CONFIG_INOTIFY_USER=y

CONFIG_FANOTIFY=y

CONFIG_FUSE_FS=y

CONFIG_CUSE=y

CONFIG_ISO9660_FS=y

CONFIG_JOLIET=y

CONFIG_ZISOFS=y

CONFIG_UDF_FS=y

CONFIG_UDF_NLS=y

CONFIG_FAT_FS=y

CONFIG_MSDOS_FS=y

CONFIG_VFAT_FS=y

CONFIG_FAT_DEFAULT_CODEPAGE=437

CONFIG_FAT_DEFAULT_IOCHARSET="iso8859-1"

CONFIG_PROC_FS=y

CONFIG_PROC_SYSCTL=y

CONFIG_PROC_PAGE_MONITOR=y

CONFIG_SYSFS=y

CONFIG_TMPFS=y

CONFIG_HUGETLBFS=y

CONFIG_HUGETLB_PAGE=y

CONFIG_CONFIGFS_FS=y

CONFIG_MISC_FILESYSTEMS=y

CONFIG_PSTORE=y

CONFIG_NLS=y

CONFIG_NLS_DEFAULT="utf8"

CONFIG_NLS_CODEPAGE_437=y

CONFIG_NLS_CODEPAGE_855=y

CONFIG_NLS_CODEPAGE_866=y

CONFIG_NLS_CODEPAGE_1251=y

CONFIG_NLS_ASCII=y

CONFIG_NLS_ISO8859_1=y

CONFIG_NLS_ISO8859_5=y

CONFIG_NLS_ISO8859_15=y

CONFIG_NLS_KOI8_R=y

CONFIG_NLS_UTF8=y

CONFIG_TRACE_IRQFLAGS_SUPPORT=y

CONFIG_PRINTK_TIME=y

CONFIG_DEFAULT_MESSAGE_LOGLEVEL=4

CONFIG_FRAME_WARN=1024

CONFIG_STRIP_ASM_SYMS=y

CONFIG_ARCH_WANT_FRAME_POINTERS=y

CONFIG_MAGIC_SYSRQ=y

CONFIG_MAGIC_SYSRQ_DEFAULT_ENABLE=0x1

CONFIG_HAVE_DEBUG_KMEMLEAK=y

CONFIG_DEBUG_MEMORY_INIT=y

CONFIG_HAVE_DEBUG_STACKOVERFLOW=y

CONFIG_HAVE_ARCH_KMEMCHECK=y

CONFIG_PANIC_ON_OOPS_VALUE=0

CONFIG_PANIC_TIMEOUT=0

CONFIG_DEBUG_BUGVERBOSE=y

CONFIG_RCU_CPU_STALL_TIMEOUT=60

CONFIG_ARCH_HAS_DEBUG_STRICT_USER_COPY_CHECKS=y

CONFIG_USER_STACKTRACE_SUPPORT=y

CONFIG_HAVE_FUNCTION_TRACER=y

CONFIG_HAVE_FUNCTION_GRAPH_TRACER=y

CONFIG_HAVE_FUNCTION_GRAPH_FP_TEST=y

CONFIG_HAVE_FUNCTION_TRACE_MCOUNT_TEST=y

CONFIG_HAVE_DYNAMIC_FTRACE=y

CONFIG_HAVE_DYNAMIC_FTRACE_WITH_REGS=y

CONFIG_HAVE_FTRACE_MCOUNT_RECORD=y

CONFIG_HAVE_SYSCALL_TRACEPOINTS=y

CONFIG_HAVE_FENTRY=y

CONFIG_HAVE_C_RECORDMCOUNT=y

CONFIG_TRACING_SUPPORT=y

CONFIG_HAVE_ARCH_KGDB=y

CONFIG_STRICT_DEVMEM=y

CONFIG_X86_VERBOSE_BOOTUP=y

CONFIG_EARLY_PRINTK=y

CONFIG_DOUBLEFAULT=y

CONFIG_HAVE_MMIOTRACE_SUPPORT=y

CONFIG_IO_DELAY_TYPE_0X80=0

CONFIG_IO_DELAY_TYPE_0XED=1

CONFIG_IO_DELAY_TYPE_UDELAY=2

CONFIG_IO_DELAY_TYPE_NONE=3

CONFIG_IO_DELAY_0X80=y

CONFIG_DEFAULT_IO_DELAY_TYPE=0

CONFIG_OPTIMIZE_INLINING=y

CONFIG_DEFAULT_SECURITY_DAC=y

CONFIG_DEFAULT_SECURITY=""

CONFIG_XOR_BLOCKS=y

CONFIG_ASYNC_CORE=y

CONFIG_ASYNC_MEMCPY=y

CONFIG_ASYNC_XOR=y

CONFIG_ASYNC_PQ=y

CONFIG_ASYNC_RAID6_RECOV=y

CONFIG_CRYPTO=y

CONFIG_CRYPTO_ALGAPI=y

CONFIG_CRYPTO_ALGAPI2=y

CONFIG_CRYPTO_AEAD=y

CONFIG_CRYPTO_AEAD2=y

CONFIG_CRYPTO_BLKCIPHER=y

CONFIG_CRYPTO_BLKCIPHER2=y

CONFIG_CRYPTO_HASH=y

CONFIG_CRYPTO_HASH2=y

CONFIG_CRYPTO_RNG2=y

CONFIG_CRYPTO_PCOMP2=y

CONFIG_CRYPTO_MANAGER=y

CONFIG_CRYPTO_MANAGER2=y

CONFIG_CRYPTO_USER=y

CONFIG_CRYPTO_GF128MUL=y

CONFIG_CRYPTO_PCRYPT=y

CONFIG_CRYPTO_WORKQUEUE=y

CONFIG_CRYPTO_CRYPTD=y

CONFIG_CRYPTO_ABLK_HELPER=y

CONFIG_CRYPTO_GLUE_HELPER_X86=y

CONFIG_CRYPTO_CBC=y

CONFIG_CRYPTO_LRW=y

CONFIG_CRYPTO_XTS=y

CONFIG_CRYPTO_CMAC=y

CONFIG_CRYPTO_HMAC=y

CONFIG_CRYPTO_XCBC=y

CONFIG_CRYPTO_VMAC=y

CONFIG_CRYPTO_CRC32C=y

CONFIG_CRYPTO_CRC32C_INTEL=y

CONFIG_CRYPTO_CRC32=y

CONFIG_CRYPTO_CRC32_PCLMUL=y

CONFIG_CRYPTO_CRCT10DIF=y

CONFIG_CRYPTO_CRCT10DIF_PCLMUL=y

CONFIG_CRYPTO_SHA1=y

CONFIG_CRYPTO_SHA1_SSSE3=y

CONFIG_CRYPTO_SHA256_SSSE3=y

CONFIG_CRYPTO_SHA512_SSSE3=y

CONFIG_CRYPTO_SHA256=y

CONFIG_CRYPTO_SHA512=y

CONFIG_CRYPTO_GHASH_CLMUL_NI_INTEL=y

CONFIG_CRYPTO_AES=y

CONFIG_CRYPTO_AES_X86_64=y

CONFIG_CRYPTO_AES_NI_INTEL=y

CONFIG_HAVE_KVM=y

CONFIG_RAID6_PQ=y

CONFIG_BITREVERSE=y

CONFIG_GENERIC_STRNCPY_FROM_USER=y

CONFIG_GENERIC_STRNLEN_USER=y

CONFIG_GENERIC_NET_UTILS=y

CONFIG_GENERIC_FIND_FIRST_BIT=y

CONFIG_GENERIC_PCI_IOMAP=y

CONFIG_GENERIC_IOMAP=y

CONFIG_GENERIC_IO=y

CONFIG_ARCH_USE_CMPXCHG_LOCKREF=y

CONFIG_CRC_CCITT=y

CONFIG_CRC16=y

CONFIG_CRC_T10DIF=y

CONFIG_CRC_ITU_T=y

CONFIG_CRC32=y

CONFIG_CRC32_SLICEBY8=y

CONFIG_LIBCRC32C=y

CONFIG_ZLIB_INFLATE=y

CONFIG_ZLIB_DEFLATE=y

CONFIG_GENERIC_ALLOCATOR=y

CONFIG_HAS_IOMEM=y

CONFIG_HAS_IOPORT=y

CONFIG_HAS_DMA=y

CONFIG_CHECK_SIGNATURE=y

CONFIG_CPU_RMAP=y

CONFIG_DQL=y

CONFIG_NLATTR=y

CONFIG_ARCH_HAS_ATOMIC64_DEC_IF_POSITIVE=y

CONFIG_DDR=y

Now build the kernel and package it as a .deb:

# cd /usr/src/linux-3.14.xx

# fakeroot make-kpkg clean

# CONCURRENCY_LEVEL=12 fakeroot make-kpkg --us --uc --jobs 12 --stem=kernel-scst --revision=1 kernel_image

Then we check out SCST, build it and install it (KDIR points to the kernel sources):

# svn checkout svn://svn.code.sf.net/p/scst/svn/branches/3.0.x scst-svn

# cd scst-svn

# BUILD_2X_MODULE=y CONFIG_SCSI_QLA_FC=y CONFIG_SCSI_QLA2XXX_TARGET=y KDIR="/usr/src/linux-3.14.xx" make all install

More detailed instructions for installing SCST with the QLogic driver are on the project website. There, however, the QLogic driver is built from QLogic's Git tree, which did not seem particularly stable to me, so we use the driver bundled with SCST instead.

The result is a kernel package plus the /lib/modules/3.14.xx/extra directory with the SCST modules. That directory has to be copied to each server by hand. You could, of course, find a way to bundle the modules into the .deb package, but I was too lazy.

For the FC cards to work you also need firmware. You can either flash it onto the adapter (strictly speaking, update it, since the adapter already has some) or simply put it in /lib/firmware, where the driver will pick it up when it loads. To be safe, and as the manufacturer recommends, I did both. You can flash it with QLogic's Linux utility, qaucli, or from FreeDOS (or EFI) using QLogic's own tools from their website.

Download the firmware (in our case it is ql2500_fw.bin) and put it in its place:

# mkdir -p /lib/firmware

# cd /lib/firmware

# wget http://ldriver.qlogic.com/firmware/ql2500_fw.bin

Next, we need the SCST management utility with the catchy name scstadmin, plus the Perl libraries it uses. Take the utility itself from the SCST source tree (scstadmin/scstadmin.sysfs/scstadmin) and put it somewhere in /usr/bin on our servers, so it is on everyone's PATH. Then take the directory scstadmin/scstadmin.sysfs/scst-0.9.10/lib/SCST and put it in /usr/lib/perl/<Perl version>.

Then install Pacemaker to manage our cluster; it will switch the ALUA states depending on the state of the nodes. Also generate the Corosync authentication key:

# apt-get -t wheezy-backports install pacemaker

# corosync-keygen

Copy the key file /etc/corosync/authkey to the same location on the second server, and on every other cluster node, including the quorum node described below.

To control SCST through Pacemaker we need a resource agent. I took one from the ESOS project and adapted it to my needs.

Resource

#! /bin/sh

#

# $Id$

#

# Resource Agent for managing the Generic SCSI Target Subsystem

# for Linux (SCST) and related daemons.

#

# License: GNU General Public License (GPL)

# (c) 2012-2014 Marc A. Smith

#

# Initialization

: ${OCF_FUNCTIONS_DIR=${OCF_ROOT}/lib/heartbeat}

. ${OCF_FUNCTIONS_DIR}/ocf-shellfuncs

MODULES="scst scst_vdisk qla2x00tgt"

SCST_CFG="/etc/scst.conf"

PRE_SCST_CONF="/etc/pre-scst_xtra_conf"

POST_SCST_CONF="/etc/post-scst_xtra_conf"

SCST_SYSFS="/sys/kernel/scst_tgt"

ALUA_STATES="active nonoptimized standby unavailable offline transitioning"

NO_CLOBBER="/tmp/scst_ra-no_clobber"

# For optional SCST modules

if [ -f "/lib/modules/$(uname -r)/extra/ocs_fc_scst.ko" ]; then

MODULES="${MODULES} ocs_fc_scst"

fi

if [ -f "/lib/modules/$(uname -r)/extra/chfcoe.ko" ]; then

MODULES="${MODULES} chfcoe"

fi

scst_start() {

# Exit immediately if configuration is not valid

scst_validate_all || exit ${?}

# If resource is already running, bail out early

if scst_monitor; then

ocf_log info "Resource is already running."

return ${OCF_SUCCESS}

fi

# If our pre-SCST file exists, run it

if [ -f "${PRE_SCST_CONF}" ]; then

ocf_log info "Pre-SCST user config. file found; running..."

ocf_run -warn sh "${PRE_SCST_CONF}"

fi

# Load all modules

ocf_log info "Loading kernel modules..."

for i in ${MODULES}; do

ocf_log debug "scst_start() -> Module: ${i}"

if [ -d /sys/module/${i} ]; then

ocf_log warn "The ${i} module is already loaded!"

else

ocf_run modprobe ${i} || exit ${OCF_ERR_GENERIC}

fi

done

# Configure SCST

if [ -f "${SCST_CFG}" ]; then

ocf_log info "Applying SCST configuration..."

ocf_run scstadmin -config "${SCST_CFG}"

# Prevent scst_stop() from clobbering the configuration file

if [ ${?} -ne 0 ]; then

ocf_log err "Something is wrong with the SCST configuration!"

ocf_run touch "${NO_CLOBBER}"

exit ${OCF_ERR_GENERIC}

else

if [ -f "${NO_CLOBBER}" ]; then

ocf_run rm -f "${NO_CLOBBER}"

fi

fi

fi

# If our post-SCST file exists, run it

if [ -f "${POST_SCST_CONF}" ]; then

ocf_log info "Post-SCST user config. file found; running..."

ocf_run -warn sh "${POST_SCST_CONF}"

fi

# If we are using ALUA, be sure we are using the "Slave" state initially

if ocf_is_true ${OCF_RESKEY_alua}; then

check_alua

# Set the local target group ALUA state

ocf_log debug "scst_start() -> Setting target group" \

"'${OCF_RESKEY_local_tgt_grp}' ALUA state to" \

"'${OCF_RESKEY_s_alua_state}'..."

ocf_run scstadmin -noprompt -set_tgrp_attr \

${OCF_RESKEY_local_tgt_grp} -dev_group \

${OCF_RESKEY_device_group} -attributes \

state\=${OCF_RESKEY_s_alua_state} || exit ${OCF_ERR_GENERIC}

# For now, we simply assume the other node is the Master

ocf_log debug "scst_start() -> Setting target group" \

"'${OCF_RESKEY_remote_tgt_grp}' ALUA state to" \

"'${OCF_RESKEY_m_alua_state}'..."

ocf_run scstadmin -noprompt -set_tgrp_attr \

${OCF_RESKEY_remote_tgt_grp} -dev_group \

${OCF_RESKEY_device_group} -attributes \

state\=${OCF_RESKEY_m_alua_state} || exit ${OCF_ERR_GENERIC}

fi

# Make sure the resource started correctly

while ! scst_monitor; do

ocf_log debug "scst_start() -> Resource has not started yet, waiting..."

sleep 1

done

# Only return $OCF_SUCCESS if _everything_ succeeded as expected

return ${OCF_SUCCESS}

}

scst_stop() {

# Exit immediately if configuration is not valid

scst_validate_all || exit ${?}

# Check the current resource state

scst_monitor

local rc=${?}

case "${rc}" in

"${OCF_SUCCESS}")

# Currently running; normal, expected behavior

ocf_log info "Resource is currently running."

;;

"${OCF_RUNNING_MASTER}")

# Running as a Master; need to demote before stopping

ocf_log info "Resource is currently running as Master."

scst_demote || ocf_log warn "Demote failed, trying to stop anyway..."

;;

"${OCF_NOT_RUNNING}")

# Currently not running; nothing to do

ocf_log info "Resource is already stopped."

return ${OCF_SUCCESS}

;;

esac

# Unload the modules (in reverse)

ocf_log info "Unloading kernel modules..."

for i in $(echo ${MODULES} | tr ' ' '\n' | tac | tr '\n' ' '); do

ocf_log debug "scst_stop() -> Module: ${i}"

if [ -d /sys/module/${i} ]; then

ocf_run rmmod -w ${i} || exit ${OCF_ERR_GENERIC}

else

ocf_log warn "The ${i} module is not loaded!"

fi

done

# Make sure the resource stopped correctly

while scst_monitor; do

ocf_log info "scst_stop() -> Resource has not stopped yet, waiting..."

sleep 1

done

# Only return $OCF_SUCCESS if _everything_ succeeded as expected

return ${OCF_SUCCESS}

}

scst_monitor() {

# Exit immediately if configuration is not valid

scst_validate_all || exit ${?}

# Check if SCST is loaded

local rc

if [ -e "${SCST_SYSFS}/version" ]; then

ocf_log debug "scst_monitor() -> SCST version:" \

"$(cat ${SCST_SYSFS}/version)"

ocf_log debug "scst_monitor() -> Resource is running."

crm_master -l reboot -v 100

rc=${OCF_SUCCESS}

else

ocf_log debug "scst_monitor() -> Resource is not running."

crm_master -l reboot -D

rc=${OCF_NOT_RUNNING}

return ${rc}

fi

# If we are using ALUA, then we can test if we are Master or not

if ocf_is_true ${OCF_RESKEY_alua}; then

dev_grp_path="${SCST_SYSFS}/device_groups/${OCF_RESKEY_device_group}"

tgt_grp_path="${dev_grp_path}/target_groups/${OCF_RESKEY_local_tgt_grp}"

tgt_grp_state="$(head -1 ${tgt_grp_path}/state)"

ocf_log debug "scst_monitor() -> SCST local target" \

"group state: ${tgt_grp_state}"

if [ "x${tgt_grp_state}" = "x${OCF_RESKEY_m_alua_state}" ]; then

rc=${OCF_RUNNING_MASTER}

fi

fi

return ${rc}

}

scst_validate_all() {

# Test for required binaries

check_binary scstadmin

# There can only be one instance of SCST running per node

if [ ! -z "${OCF_RESKEY_CRM_meta_clone_node_max}" ] &&

[ "${OCF_RESKEY_CRM_meta_clone_node_max}" -ne 1 ]; then

ocf_log err "The 'clone-node-max' parameter must equal '1'."

exit ${OCF_ERR_CONFIGURED}

fi

# If ALUA support is enabled, we need to check the parameters

if ocf_is_true ${OCF_RESKEY_alua}; then

# Make sure they are set to something

if [ -z "${OCF_RESKEY_device_group}" ]; then

ocf_log err "The 'device_group' parameter is not set!"

exit ${OCF_ERR_CONFIGURED}

fi

if [ -z "${OCF_RESKEY_local_tgt_grp}" ]; then

ocf_log err "The 'local_tgt_grp' parameter is not set!"

exit ${OCF_ERR_CONFIGURED}

fi

if [ -z "${OCF_RESKEY_remote_tgt_grp}" ]; then

ocf_log err "The 'remote_tgt_grp' parameter is not set!"

exit ${OCF_ERR_CONFIGURED}

fi

if [ -z "${OCF_RESKEY_m_alua_state}" ]; then

ocf_log err "The 'm_alua_state' parameter is not set!"

exit ${OCF_ERR_CONFIGURED}

fi

if [ -z "${OCF_RESKEY_s_alua_state}" ]; then

ocf_log err "The 's_alua_state' parameter is not set!"

exit ${OCF_ERR_CONFIGURED}

fi

# Currently, we only support using one Master with this RA

if [ ! -z "${OCF_RESKEY_CRM_meta_master_max}" ] &&

[ "${OCF_RESKEY_CRM_meta_master_max}" -ne 1 ]; then

ocf_log err "The 'master-max' parameter must equal '1'."

exit ${OCF_ERR_CONFIGURED}

fi

if [ ! -z "${OCF_RESKEY_CRM_meta_master_node_max}" ] &&

[ "${OCF_RESKEY_CRM_meta_master_node_max}" -ne 1 ]; then

ocf_log err "The 'master-node-max' parameter must equal '1'."

exit ${OCF_ERR_CONFIGURED}

fi

fi

return ${OCF_SUCCESS}

}

scst_meta_data() {

cat <<-EOF

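<?xml version="1.0"?>
<!DOCTYPE resource-agent SYSTEM "ra-api-1.dtd">
<resource-agent name="scst">
<version>0.1</version>
<longdesc lang="en">The SCST OCF resource agent for ESOS; includes SCST ALUA support.</longdesc>
<shortdesc lang="en">SCST OCF RA script for ESOS.</shortdesc>
<parameters>
<parameter name="alua">
<longdesc lang="en">Use to enable/disable updating ALUA status in SCST.</longdesc>
<shortdesc lang="en">The 'alua' parameter.</shortdesc>
<content type="boolean" />
</parameter>
<parameter name="device_group">
<longdesc lang="en">The name of the SCST device group (used with ALUA support).</longdesc>
<shortdesc lang="en">The 'device_group' parameter.</shortdesc>
<content type="string" />
</parameter>
<parameter name="local_tgt_grp">
<longdesc lang="en">The name of the SCST local target group (used with ALUA support).</longdesc>
<shortdesc lang="en">The 'local_tgt_grp' parameter.</shortdesc>
<content type="string" />
</parameter>
<parameter name="remote_tgt_grp">
<longdesc lang="en">The name of the SCST remote target group (used with ALUA support).</longdesc>
<shortdesc lang="en">The 'remote_tgt_grp' parameter.</shortdesc>
<content type="string" />
</parameter>
<parameter name="m_alua_state">
<longdesc lang="en">The ALUA state (eg, active) for a Master node (used with ALUA support).</longdesc>
<shortdesc lang="en">The 'm_alua_state' parameter.</shortdesc>
<content type="string" />
</parameter>
<parameter name="s_alua_state">
<longdesc lang="en">The ALUA state (eg, nonoptimized) for a Slave node (used with ALUA support).</longdesc>
<shortdesc lang="en">The 's_alua_state' parameter.</shortdesc>
<content type="string" />
</parameter>
</parameters>
<actions>
<action name="start" />
<action name="stop" />
<action name="promote" />
<action name="demote" />
<action name="monitor" />
<action name="validate-all" />
<action name="meta-data" />
</actions>
</resource-agent>
EOF
}

Save this file as /usr/lib/ocf/resource.d/esos/scst and make it executable.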
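In scst_start(), the ALUA switching itself comes down to two scstadmin calls per node: one for the local target group and one for the remote one. The dry-run helper below is hypothetical (alua_switch_cmds is not part of SCST or ESOS). It only prints those calls for a given role. The group names (default, local, remote) and the states (active, nonoptimized) are assumptions taken from the /etc/scst.conf example in this article.

```shell
# Hypothetical dry-run helper (not part of SCST or ESOS): prints the scstadmin
# commands that would set the ALUA states for a node taking the given role.
# Device/target group names and states are assumed from the example scst.conf.
alua_switch_cmds() {
    dev_grp="default"
    case "$1" in
        master) local_state="active";       remote_state="nonoptimized" ;;
        slave)  local_state="nonoptimized"; remote_state="active" ;;
        *)      echo "usage: alua_switch_cmds master|slave" >&2; return 1 ;;
    esac
    # Same scstadmin options the resource agent uses in scst_start()
    echo "scstadmin -noprompt -set_tgrp_attr local -dev_group ${dev_grp} -attributes state=${local_state}"
    echo "scstadmin -noprompt -set_tgrp_attr remote -dev_group ${dev_grp} -attributes state=${remote_state}"
}
```

Printing the commands rather than running them lets you review what a failover would change. You can then run them by hand to test how ESXi reacts, without involving Pacemaker.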

Two nodes are not enough for a stable cluster: if the link between them breaks, you get split-brain. So we add a third node that only provides quorum and never runs any resources. Here a Pacemaker peculiarity comes into play: it checks the state of every resource on every cluster node, whether or not the resource can run there at all. (Newer versions seem to have added an option to turn this off, but the version in the Debian repository does not appear to have it yet.) Therefore the quorum node needs a dummy resource agent that simply says "all is calm in Baghdad", i.e. reports that the resource is not running there.
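Here is the arithmetic behind the third node, as a minimal sketch. It assumes a simple majority rule with one vote per node; the actual Corosync quorum setup can be configured differently. In a two-node cluster that loses its link, each half holds 1 of 2 votes, below the majority of 2, so neither side may safely continue. With three nodes, the partition that still holds two of them keeps quorum. The function name is hypothetical.

```shell
# Simple majority quorum, one vote per node (an assumption about the setup):
# a partition keeps quorum only if it sees more than half of all votes.
has_quorum() {
    present="$1"                 # nodes visible in this partition
    total="$2"                   # nodes configured in the cluster
    needed=$(( total / 2 + 1 ))  # strict majority
    [ "$present" -ge "$needed" ]
}
```

For example, has_quorum 1 2 fails (two-node split), while has_quorum 2 3 succeeds (three-node cluster that lost one node).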

Fake resource

#! /bin/sh

#

# $Id$

#

# Resource Agent for managing the Generic SCSI Target Subsystem

# for Linux (SCST) and related daemons.

#

# License: GNU General Public License (GPL)

# (c) 2012-2014 Marc A. Smith

#

# Initialization

: ${OCF_FUNCTIONS_DIR=${OCF_ROOT}/lib/heartbeat}

. ${OCF_FUNCTIONS_DIR}/ocf-shellfuncs

MODULES="scst scst_vdisk qla2x00tgt"

SCST_CFG="/etc/scst.conf"

PRE_SCST_CONF="/etc/pre-scst_xtra_conf"

POST_SCST_CONF="/etc/post-scst_xtra_conf"

SCST_SYSFS="/sys/kernel/scst_tgt"

ALUA_STATES="active nonoptimized standby unavailable offline transitioning"

NO_CLOBBER="/tmp/scst_ra-no_clobber"

scst_monitor() {

return ${OCF_NOT_RUNNING}

}

scst_meta_data() {

cat <<-EOF

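<?xml version="1.0"?>
<!DOCTYPE resource-agent SYSTEM "ra-api-1.dtd">
<resource-agent name="scst">
<version>0.1</version>
<longdesc lang="en">The SCST OCF resource agent for ESOS; includes SCST ALUA support.</longdesc>
<shortdesc lang="en">SCST OCF RA script for ESOS.</shortdesc>
<parameters>
<parameter name="alua">
<longdesc lang="en">Use to enable/disable updating ALUA status in SCST.</longdesc>
<shortdesc lang="en">The 'alua' parameter.</shortdesc>
<content type="boolean" />
</parameter>
<parameter name="device_group">
<longdesc lang="en">The name of the SCST device group (used with ALUA support).</longdesc>
<shortdesc lang="en">The 'device_group' parameter.</shortdesc>
<content type="string" />
</parameter>
<parameter name="local_tgt_grp">
<longdesc lang="en">The name of the SCST local target group (used with ALUA support).</longdesc>
<shortdesc lang="en">The 'local_tgt_grp' parameter.</shortdesc>
<content type="string" />
</parameter>
<parameter name="remote_tgt_grp">
<longdesc lang="en">The name of the SCST remote target group (used with ALUA support).</longdesc>
<shortdesc lang="en">The 'remote_tgt_grp' parameter.</shortdesc>
<content type="string" />
</parameter>
<parameter name="m_alua_state">
<longdesc lang="en">The ALUA state (eg, active) for a Master node (used with ALUA support).</longdesc>
<shortdesc lang="en">The 'm_alua_state' parameter.</shortdesc>
<content type="string" />
</parameter>
<parameter name="s_alua_state">
<longdesc lang="en">The ALUA state (eg, nonoptimized) for a Slave node (used with ALUA support).</longdesc>
<shortdesc lang="en">The 's_alua_state' parameter.</shortdesc>
<content type="string" />
</parameter>
</parameters>
<actions>
<action name="start" />
<action name="stop" />
<action name="promote" />
<action name="demote" />
<action name="monitor" />
<action name="validate-all" />
<action name="meta-data" />
</actions>
</resource-agent>
EOF
}

Install it on the quorum node the same way as on the main nodes.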

Finally, let's create the arrays and configure SCST. That will probably be enough for the first part.

Let's see what devices we have:

# storcli64 /c0/eall/sall show

Controller = 0

Status = Success

Description = Show Drive Information Succeeded.

Drive Information :

=================

-------------------------------------------------------------------------

EID:Slt DID State DG Size Intf Med SED PI SeSz Model Sp

-------------------------------------------------------------------------

37:0 61 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

37:1 62 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

37:2 63 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

37:3 64 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

37:4 65 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

37:5 66 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

37:6 67 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

37:7 68 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

37:8 69 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

37:9 70 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

37:10 71 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

37:11 72 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

37:12 73 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

37:13 74 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

37:14 75 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

37:15 76 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

37:16 77 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

37:17 78 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

37:18 15 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

37:19 19 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

37:20 79 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

37:21 80 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

37:22 81 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

37:23 82 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

59:0 8 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

59:1 9 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

59:2 10 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

59:3 11 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

59:4 12 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

59:5 14 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

59:6 21 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

59:7 22 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

59:8 23 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

59:9 24 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

59:10 18 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

59:11 17 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

59:12 25 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

59:13 26 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

59:14 16 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

59:15 27 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

59:16 28 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

59:17 29 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

59:18 30 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

59:19 31 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

59:20 32 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

59:21 33 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

59:22 34 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

59:23 35 UGood - 372.093 GB SAS SSD N N 512B S842E400M2 U

-------------------------------------------------------------------------
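A listing this long is easier to check with a small filter that counts drives per enclosure. This is a minimal sketch with a hypothetical function name. It assumes that drive rows start with an "EID:Slt" field such as 37:0, as in the output above; header and separator lines are skipped.

```shell
# Counts drives per enclosure (EID) in "storcli64 /c0/eall/sall show" output
# read from stdin. Assumption: drive rows begin with an "EID:Slot" field.
drives_per_enclosure() {
    awk '$1 ~ /^[0-9]+:[0-9]+$/ { split($1, f, ":"); count[f[1]]++ }
         END { for (e in count) print e, count[e] }' | sort -n
}
```

Fed the listing above (storcli64 /c0/eall/sall show | drives_per_enclosure), it should report 24 drives for enclosure 37 and 24 for enclosure 59.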

So each of the two backplanes (enclosures 37 and 59) holds 24 identical SSDs. We will create two RAID6 arrays, one per backplane, each from 23 drives with the 24th as a hot spare:

# storcli /c0 add vd r6 name=SSD-RAID6-1 drives=37:0-22 WT nora direct Strip=64

# storcli /c0 add vd r6 name=SSD-RAID6-2 drives=59:0-22 WT nora direct Strip=64

These are the options LSI recommends for SSDs: write-through (WT), no read-ahead (nora) and direct I/O (direct). In my tests, strip sizes from 8 KB to 128 KB made almost no difference to performance.
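Here is a quick sanity check of the capacity this layout yields. The helper name is made up. It applies only the standard RAID6 rule that two drives' worth of space per array goes to parity (P and Q), and ignores any space the controller keeps for its own metadata.

```shell
# RAID6 spends two drives' worth of capacity on parity (P and Q),
# so an array of N drives yields (N - 2) * drive size of usable space.
raid6_usable() {
    drives="$1"   # drives in the array, hot spares excluded
    size="$2"     # capacity of one drive, in any unit
    awk -v n="$drives" -v s="$size" 'BEGIN { printf "%.3f\n", (n - 2) * s }'
}
```

For our arrays, raid6_usable 23 372.093 prints 7813.953, i.e. about 7814 GB per array in the units storcli reports, or 15627.906 GB for both.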

SCST Configuration: /etc/scst.conf

Server-1 (with comments)

# List the devices

## blockio mode accesses the devices bypassing the kernel page cache, which is exactly what you want for SSDs

HANDLER vdisk_blockio {

## Keep in mind that SCST generates the t10_dev_id and usn fields from the device name,

## and ESXi identifies the LUN by them, so the names must be identical on both servers.

DEVICE SSD-RAID6-1 {

## Persistent path to the device (independent of /dev/sdX naming)

filename /dev/disk/by-id/scsi-3600605b008b4be401c91ac4abce21c9b

## Disable write-back caching

write_through 1

## Report the device as an SSD (non-rotational)

rotational 0

}

DEVICE SSD-RAID6-2 {

filename /dev/disk/by-id/scsi-3600605b008b4be401c91ac53bd668eda

write_through 1

rotational 0

}

}

# Target configuration

TARGET_DRIVER qla2x00t {

## Port WWN; look up your own in /sys/kernel/scst_tgt/targets/qla2x00t

TARGET 21:00:00:24:ff:54:09:32 {

HW_TARGET

enabled 1

# Relative port number within the ALUA group (1-4 on the first server, 5-8 on the second)

rel_tgt_id 1

## Add our devices to it

LUN 0 SSD-RAID6-1

LUN 1 SSD-RAID6-2

}

TARGET 21:00:00:24:ff:54:09:33 {

HW_TARGET

enabled 1

rel_tgt_id 2

LUN 0 SSD-RAID6-1

LUN 1 SSD-RAID6-2

}

TARGET 21:00:00:24:ff:54:09:80 {

HW_TARGET

enabled 1

rel_tgt_id 3

LUN 0 SSD-RAID6-1

LUN 1 SSD-RAID6-2

}

TARGET 21:00:00:24:ff:54:09:81 {

HW_TARGET

enabled 1

rel_tgt_id 4

LUN 0 SSD-RAID6-1

LUN 1 SSD-RAID6-2

}

}

# ALUA group; the name is arbitrary

DEVICE_GROUP default {

## Add the devices to the group

DEVICE SSD-RAID6-1

DEVICE SSD-RAID6-2

## Port group on Server-1; the name is arbitrary

TARGET_GROUP local {

## Group ID; arbitrary, but it must match on both servers

group_id 256

## ALUA state

state active

## Server-1 ports

TARGET 21:00:00:24:ff:54:09:32

TARGET 21:00:00:24:ff:54:09:33

TARGET 21:00:00:24:ff:54:09:80

TARGET 21:00:00:24:ff:54:09:81

}

## Server-2 port group

TARGET_GROUP remote {

group_id 257

## ALUA state

state nonoptimized

## Server-2 ports and their relative port numbers

TARGET 21:00:00:24:ff:4a:af:b2 {

rel_tgt_id 5

}

TARGET 21:00:00:24:ff:4a:af:b3 {

rel_tgt_id 6

}

TARGET 21:00:00:24:ff:54:09:06 {

rel_tgt_id 7

}

TARGET 21:00:00:24:ff:54:09:07 {

rel_tgt_id 8

}

}

}
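The WWNs in this config are written with colons. If you read them from /sys/class/fc_host/host*/port_name instead (assuming the QLogic ports also show up there, as FC HBAs usually do on Linux), they appear in a compact form such as 0x21000024ff540932. Below is a minimal conversion sketch; wwn_colon is a hypothetical name.

```shell
# Converts a WWPN in the compact form shown by /sys/class/fc_host/host*/port_name
# (e.g. 0x21000024ff540932) into the colon-separated form used in scst.conf.
wwn_colon() {
    hex=$(printf '%s' "$1" | sed 's/^0x//' | tr 'A-F' 'a-f')
    # Reject anything that is not purely hex digits
    case "$hex" in
        *[!0-9a-f]*|'') return 1 ;;
    esac
    # A WWPN is 8 bytes, i.e. exactly 16 hex digits
    [ "${#hex}" -eq 16 ] || return 1
    # Insert a colon after every byte, then drop the trailing one
    printf '%s\n' "$hex" | sed 's/../&:/g; s/:$//'
}
```

Usage: for h in /sys/class/fc_host/host*; do wwn_colon "$(cat "$h/port_name")"; done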

Server-2 (symmetrical)

HANDLER vdisk_blockio {

DEVICE SSD-RAID6-1 {

filename /dev/disk/by-id/scsi-3600605b008b4be401c91ac4abce21c9b

write_through 1

rotational 0

}

DEVICE SSD-RAID6-2 {

filename /dev/disk/by-id/scsi-3600605b008b4be401c91ac53bd668eda

write_through 1

rotational 0

}

}

TARGET_DRIVER qla2x00t {

TARGET 21:00:00:24:ff:4a:af:b2 {

HW_TARGET

enabled 1

rel_tgt_id 5

LUN 0 SSD-RAID6-1

LUN 1 SSD-RAID6-2

}

TARGET 21:00:00:24:ff:4a:af:b3 {

HW_TARGET

enabled 1

rel_tgt_id 6

LUN 0 SSD-RAID6-1

LUN 1 SSD-RAID6-2

}

TARGET 21:00:00:24:ff:54:09:06 {

HW_TARGET

enabled 1

rel_tgt_id 7

LUN 0 SSD-RAID6-1

LUN 1 SSD-RAID6-2

}

TARGET 21:00:00:24:ff:54:09:07 {

HW_TARGET

enabled 1

rel_tgt_id 8

LUN 0 SSD-RAID6-1

LUN 1 SSD-RAID6-2

}

}

DEVICE_GROUP default {

DEVICE SSD-RAID6-1

DEVICE SSD-RAID6-2

TARGET_GROUP local {

group_id 257

state nonoptimized

TARGET 21:00:00:24:ff:4a:af:b2

TARGET 21:00:00:24:ff:4a:af:b3

TARGET 21:00:00:24:ff:54:09:06

TARGET 21:00:00:24:ff:54:09:07

}

TARGET_GROUP remote {

group_id 256

state active

TARGET 21:00:00:24:ff:54:09:32 {

rel_tgt_id 1

}

TARGET 21:00:00:24:ff:54:09:33 {

rel_tgt_id 2

}

TARGET 21:00:00:24:ff:54:09:80 {

rel_tgt_id 3

}

TARGET 21:00:00:24:ff:54:09:81 {

rel_tgt_id 4

}

}

}
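Because the two configs are mirror images, the remote TARGET_GROUP blocks are easy to get wrong by hand. The generator below is a hypothetical helper, not part of scstadmin. It prints such a block from a list of WWNs and numbers rel_tgt_id consecutively from a given start value, using the same syntax as the configs above.

```shell
# Hypothetical helper: prints a TARGET_GROUP block in the scst.conf syntax
# used above, numbering rel_tgt_id consecutively from FIRST_ID.
# Usage: tgt_group_stanza NAME GROUP_ID STATE FIRST_ID WWN...
tgt_group_stanza() {
    [ "$#" -ge 5 ] || return 1
    name=$1; gid=$2; state=$3; id=$4
    shift 4
    printf 'TARGET_GROUP %s {\n\tgroup_id %s\n\tstate %s\n' "$name" "$gid" "$state"
    for wwn in "$@"; do
        printf '\tTARGET %s {\n\t\trel_tgt_id %s\n\t}\n' "$wwn" "$id"
        id=$((id + 1))
    done
    printf '}\n'
}
```

On Server-1, tgt_group_stanza remote 257 nonoptimized 5 21:00:00:24:ff:4a:af:b2 21:00:00:24:ff:4a:af:b3 21:00:00:24:ff:54:09:06 21:00:00:24:ff:54:09:07 reproduces the remote group shown above. On Server-2, the mirror-image call is tgt_group_stanza remote 256 active 1 21:00:00:24:ff:54:09:32 21:00:00:24:ff:54:09:33 21:00:00:24:ff:54:09:80 21:00:00:24:ff:54:09:81.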

That's it for the first part, I think. I hope I'll manage to finish the second :)