Testing flash storage. EMC XtremIO

In mid-2012, EMC paid $430 million for an Israeli startup founded three years earlier. The deal was made while the product was still in development, almost six months before the first XtremIO device was expected to appear. The first devices became available to order only at the end of 2013.

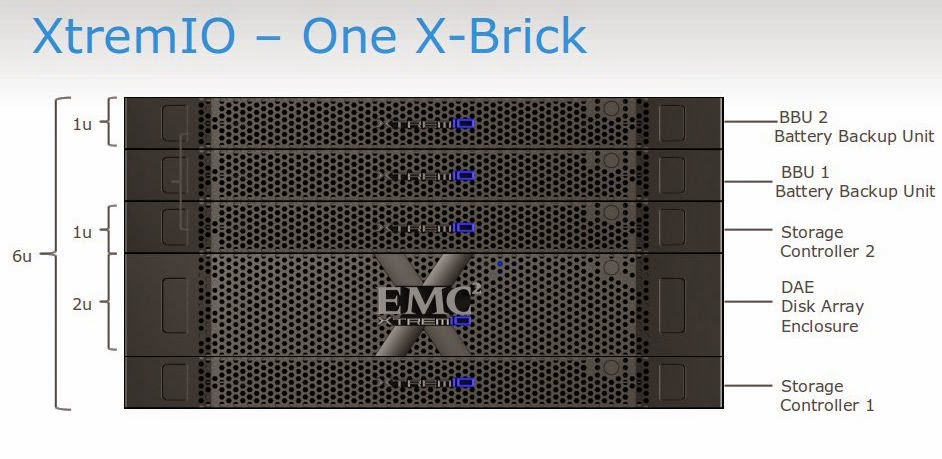

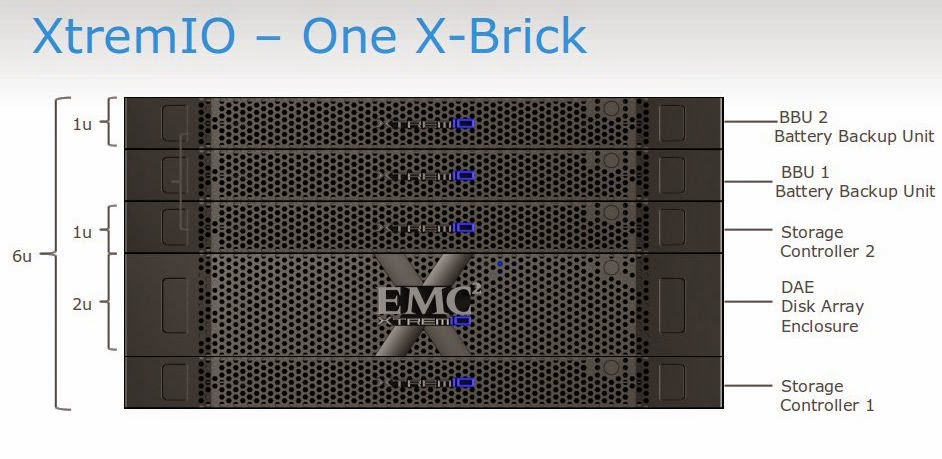

The main distinguishing features of XtremIO are its architecture and functionality. First, the architecture was designed from the start around always-on services that cannot be disabled, such as inline deduplication, compression and thin provisioning, which save space on the SSDs. Second, XtremIO is a horizontally scalable cluster of modules (X-Bricks) across which data and load are automatically and evenly distributed. It uses standard x86 hardware and SSDs, with the functionality implemented in software. The result is not just a fast disk but an array that saves capacity through deduplication and compression, especially in workloads such as server virtualization, VDI, or databases with multiple copies.

A fondness for tests of all kinds is not EMC's strong point. Nevertheless, thanks to the initiative of the local office, a test bench with 2 X-Bricks was assembled for us in a remote laboratory. This allowed us to run a series of tests as close as possible to our own methodology.

Testing was conducted on code version 2.4. Version 3.0 is now available, for which the vendor claims half the latency.


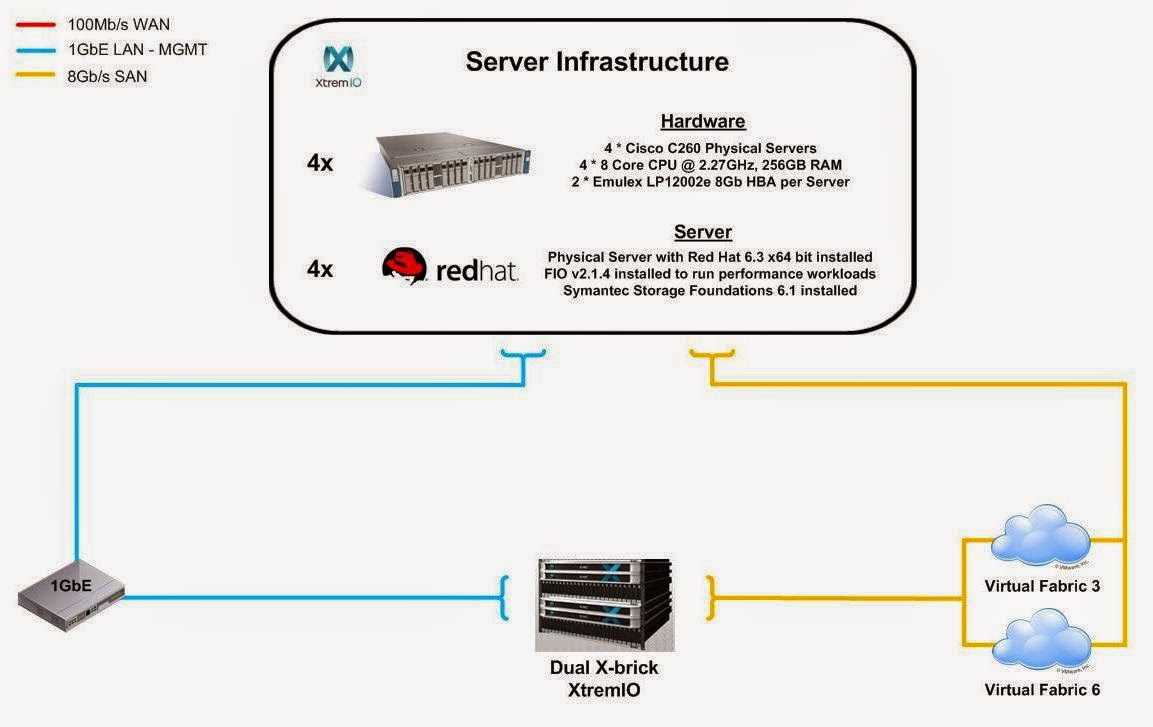

The test bench consists of 4 servers, each connected by four 8 Gb links to 2 FC switches. Each switch has four 8 Gb FC connections to the EMC XtremIO storage system. Zoning on the FC switches places each initiator in a zone with each storage port.


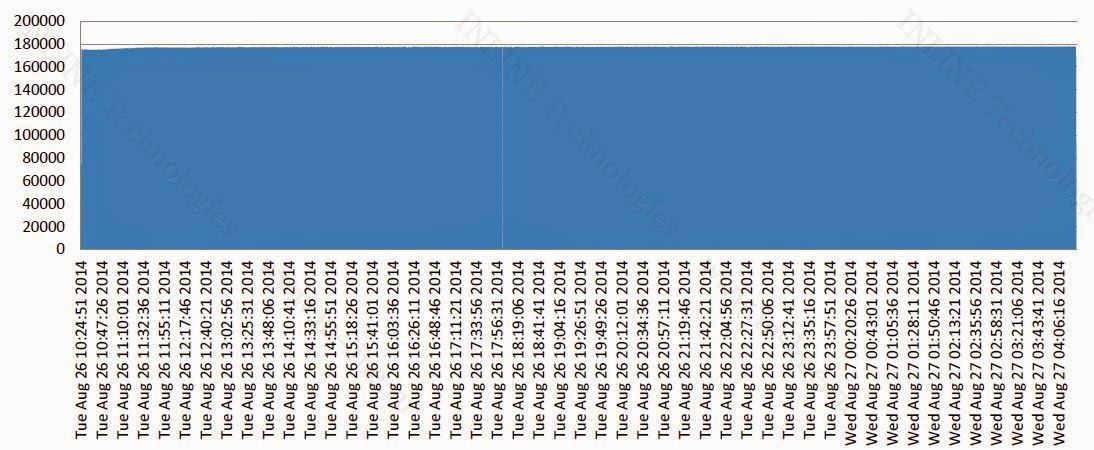

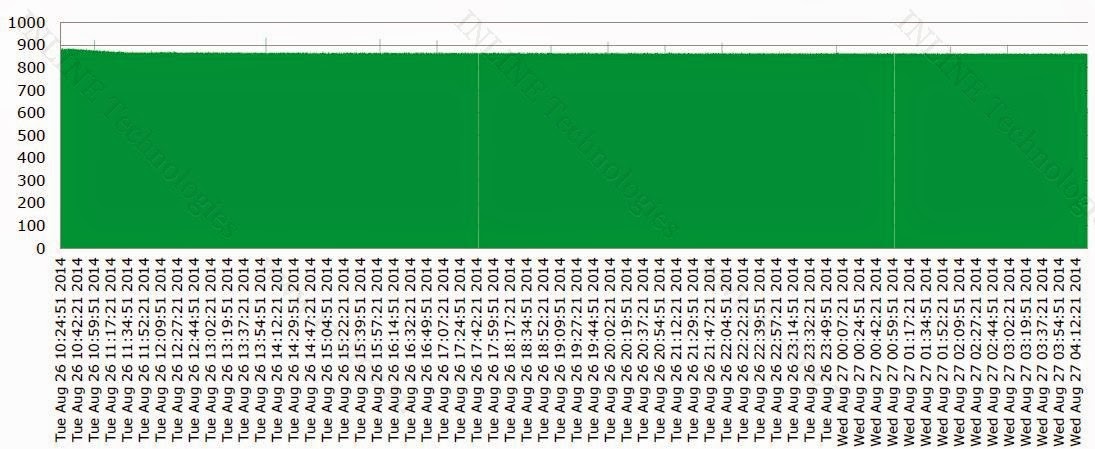



Testing methodology

During testing, the following tasks were solved:

- study of storage performance degradation under a long write load (Write Cliff);

- study of EMC XtremIO storage performance under various load profiles.

Testbed configuration

Figure 1. Block diagram of the test bench.

Symantec Storage Foundation 6.1 is installed on the test server as additional software. It provides:

- logical volume manager functionality (Veritas Volume Manager);

- fault-tolerant connection to disk arrays (Dynamic Multi Pathing).

The following settings were made on the test server to reduce disk I/O latency:

- The I/O scheduler is changed from "cfq" to "noop" by writing noop to `/sys/<path_to_Symantec_VxVM_device>/queue/scheduler`;

- The following parameter is added to `/etc/sysctl.conf` to minimize the queue size at the level of the Symantec logical volume manager: `vxvm.vxio.vol_use_rq = 0`;

- The limit of simultaneous I/O requests to the device is raised to 1024 by writing 1024 to `/sys/<path_to_Symantec_VxVM_device>/queue/nr_requests`;

- Checking whether I/O operations can be merged (iomerge) is disabled by writing 1 to `/sys/<path_to_Symantec_VxVM_device>/queue/nomerges`;

- Read-ahead is disabled by writing 0 to `/sys/<path_to_Symantec_VxVM_device>/queue/read_ahead_kb`;

- The default queue size for the FC HBA is used (30).
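The block-queue settings listed above can be expressed as a small script. This is a minimal sketch rather than the procedure the authors used: the attribute names and values come from the list, while the device directory `/sys/block/sdX/queue` and the function names are hypothetical placeholders. By default it only reports what it would write.

```python
from pathlib import PurePosixPath

# Attribute names and values are taken from the settings list above.
QUEUE_SETTINGS = {
    "scheduler": "noop",
    "nr_requests": "1024",
    "nomerges": "1",
    "read_ahead_kb": "0",
}


def plan_queue_settings(queue_dir):
    """Return (path, value) pairs for one device's sysfs queue directory."""
    base = PurePosixPath(queue_dir)
    return [(base / name, value) for name, value in QUEUE_SETTINGS.items()]


def apply_queue_settings(queue_dir, dry_run=True):
    """Write the settings; with dry_run=True nothing is written."""
    plan = plan_queue_settings(queue_dir)
    if not dry_run:
        for path, value in plan:
            # Writing to sysfs requires root privileges on a real system.
            with open(str(path), "w") as handle:
                handle.write(value)
    return plan


if __name__ == "__main__":
    # Hypothetical device directory, for illustration only.
    for path, value in apply_queue_settings("/sys/block/sdX/queue"):
        print(f"{path} <- {value}")
```

Settings written to sysfs this way do not survive a reboot, so a real setup would also need to persist them by some other means.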

The following disk space partitioning settings are made on the storage system:

- By default, all space on the storage system is already laid out, so only logical partitioning of the physical capacity is possible.

- 32 LUNs of equal size are created on the storage system, together occupying 80% of its capacity; 8 LUNs are presented to each server.

Testing Software

The Flexible IO Tester (fio) utility, version 2.1.4, is used to generate the synthetic load on the storage system. All synthetic tests use the following parameters in the [global] section of the fio configuration:

- direct = 1

- size = 3T

- ioengine = libaio

- group_reporting = 1

- norandommap = 1

- time_based = 1

- randrepeat = 0
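As an illustration, the [global] parameters above can be rendered into a fio job file. This is a sketch under assumptions: the global values are taken from the list, but the job name, the volume path `/dev/vx/dsk/testdg/testvol` and the runtime are hypothetical and were not given in the article.

```python
# [global] values as listed above.
GLOBAL_OPTIONS = {
    "direct": "1",
    "size": "3T",
    "ioengine": "libaio",
    "group_reporting": "1",
    "norandommap": "1",
    "time_based": "1",
    "randrepeat": "0",
}


def render_job_file(job_name, job_options, global_options=None):
    """Render a fio job file with one [global] section and one job section."""
    if global_options is None:
        global_options = GLOBAL_OPTIONS
    lines = ["[global]"]
    lines += [f"{key}={value}" for key, value in global_options.items()]
    lines += ["", f"[{job_name}]"]
    lines += [f"{key}={value}" for key, value in job_options.items()]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical job: the device path and runtime are placeholders.
    example_job = {
        "filename": "/dev/vx/dsk/testdg/testvol",
        "runtime": "600",
    }
    print(render_job_file("example", example_job))
```

Because time_based is set, each job also needs a runtime; the value shown here is only a placeholder.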

The following tools are used to collect performance metrics under synthetic load:

- the storage system's own monitoring and diagnostic interface and tools;

- fio version 2.1.4, to generate a summary report for each load profile.

Testing program

The tests were performed by generating a synthetic load simultaneously from four servers with fio against a block device: a logical volume of type stripe, 8 column, stripewidth=1MiB, created with Veritas Volume Manager from the 8 LUNs presented to each server by the system under test. The created volumes are pre-populated with data. Testing consisted of 2 groups of tests:


Group 1: Tests that implement a continuous load of type random write.

When creating a test load, the following additional parameters of the fio program are used:

- rw = randwrite

- blocksize = 4K

- numjobs = 10

- iodepth = 8

Testing duration: 18 hours.

Based on the output of the vxstat command, charts are built that combine the test results:

- IOPS as a function of time;

- Latency as a function of time.

The collected data is analyzed and conclusions are drawn about:

- The presence of performance degradation under prolonged write and read load;

- The performance of the storage system's service processes (Garbage Collection), which limit the array's write performance under long peak load.
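One simple way to check an IOPS-over-time series for a write cliff is to compare the average of the first samples with the average of the last ones. The sketch below is not the authors' analysis: it assumes the per-interval IOPS values have already been extracted from the monitoring output into a list, and the window size and the 20% threshold are arbitrary illustrative choices.

```python
from statistics import mean


def relative_drop(iops_samples, window=10):
    """Fractional drop of mean IOPS from the first window to the last one."""
    if len(iops_samples) < 2 * window:
        raise ValueError("need at least two full windows of samples")
    head = mean(iops_samples[:window])
    tail = mean(iops_samples[-window:])
    return (head - tail) / head


def has_write_cliff(iops_samples, window=10, threshold=0.2):
    """True if IOPS fell by more than `threshold` (an assumed cut-off)."""
    return relative_drop(iops_samples, window) > threshold


if __name__ == "__main__":
    # Synthetic series for illustration only, not measured data.
    steady = [160_000] * 100
    degraded = [160_000] * 50 + [60_000] * 50
    print(has_write_cliff(steady), has_write_cliff(degraded))
```

The same comparison can be applied to the latency series by checking for a rise instead of a drop.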

Group 2: Disk array performance tests for different types of load.

During testing, the following types of loads are investigated:

- load profiles (fio parameters varied: rw, rwmixread):

  - random write 100%;

  - random write 30%, random read 70%;

  - random read 100%.

- block sizes: 1KB, 8KB, 16KB, 32KB, 64KB, 1MB (fio parameter varied: blocksize);

- I/O processing method: asynchronous (fio parameter varied: ioengine);

Tests are performed in 3 stages:

- For each combination of the load types listed above, the fio parameters numjobs and iodepth are varied to find the saturation point of the storage system, that is, the combination of numjobs and iodepth at which maximum IOPS is reached with the lowest latency. IOPS, latency, numjobs and iodepth are recorded.

- The tests are then repeated as in the previous stage, this time to find the point at which approximately half of that performance is reached.

- Similar tests are then run to find the point at which performance is halved again.

This algorithm determines the maximum performance of the disk array for a given load profile, as well as the dependence of latency on load.

Based on the test results, charts and tables of the measured IOPS and latency are built, the results are analyzed, and conclusions are drawn about the performance of the storage system.
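The selection of the three operating points can be sketched as follows. This is a simplified illustration, not the authors' tooling: it picks the points from an already collected set of samples instead of running new tests at each stage, and the 5% tolerance used to decide that IOPS is "at maximum" is an assumption. The sample values are invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    numjobs: int
    iodepth: int
    iops: float
    latency_ms: float


def saturation_point(samples, tolerance=0.05):
    """Lowest-latency sample whose IOPS is within `tolerance` of the peak."""
    peak = max(s.iops for s in samples)
    near_peak = [s for s in samples if s.iops >= peak * (1 - tolerance)]
    return min(near_peak, key=lambda s: s.latency_ms)


def closest_to(samples, target_iops):
    """Sample whose IOPS is nearest to the requested target."""
    return min(samples, key=lambda s: abs(s.iops - target_iops))


def three_stage_points(samples, tolerance=0.05):
    """Return the saturation, half-load and quarter-load samples."""
    full = saturation_point(samples, tolerance)
    half = closest_to(samples, full.iops / 2)
    quarter = closest_to(samples, full.iops / 4)
    return full, half, quarter


if __name__ == "__main__":
    # Invented measurements, for illustration only.
    runs = [
        Sample(1, 1, 20_000, 0.2),
        Sample(2, 4, 100_000, 0.3),
        Sample(4, 8, 210_000, 0.6),
        Sample(8, 8, 400_000, 0.9),
        Sample(10, 16, 410_000, 1.5),
        Sample(16, 16, 412_000, 2.4),
    ]
    for point in three_stage_points(runs):
        print(point)
```

Using a tolerance band rather than the strict maximum avoids choosing a heavily queued configuration that adds latency for a negligible gain in IOPS.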

Test results

Study of disk array performance on synthetic tests.

Group 1: Tests that implement a continuous load of type random write.

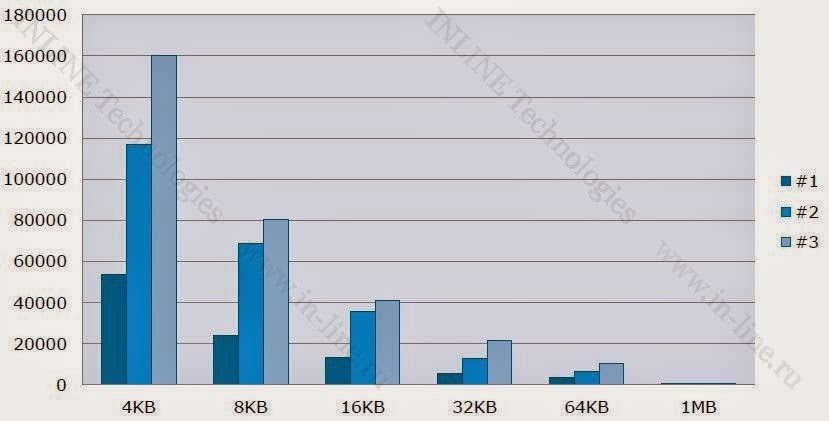

The test results are presented in graphs (Figures 2 and 3).


Key findings:

Under prolonged load, no drop in performance over time was recorded. The "Write Cliff" phenomenon was not observed. Therefore, when sizing a disk subsystem, you can count on stable performance regardless of how long the load lasts (the disk usage history).

Group 2: Disk array performance tests for different types of load.

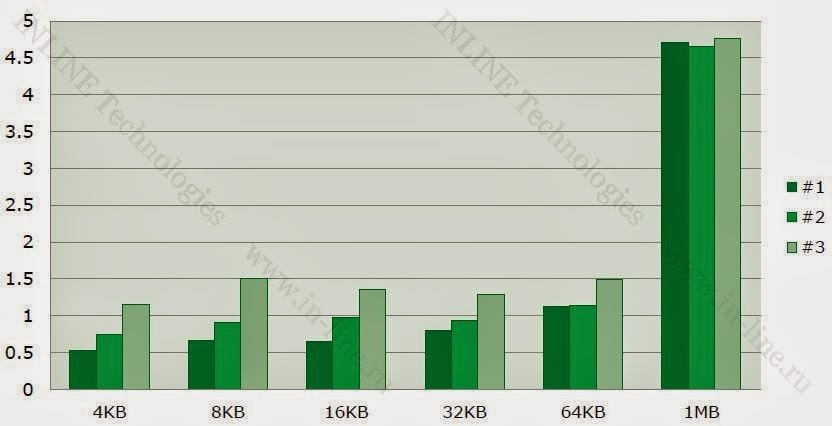

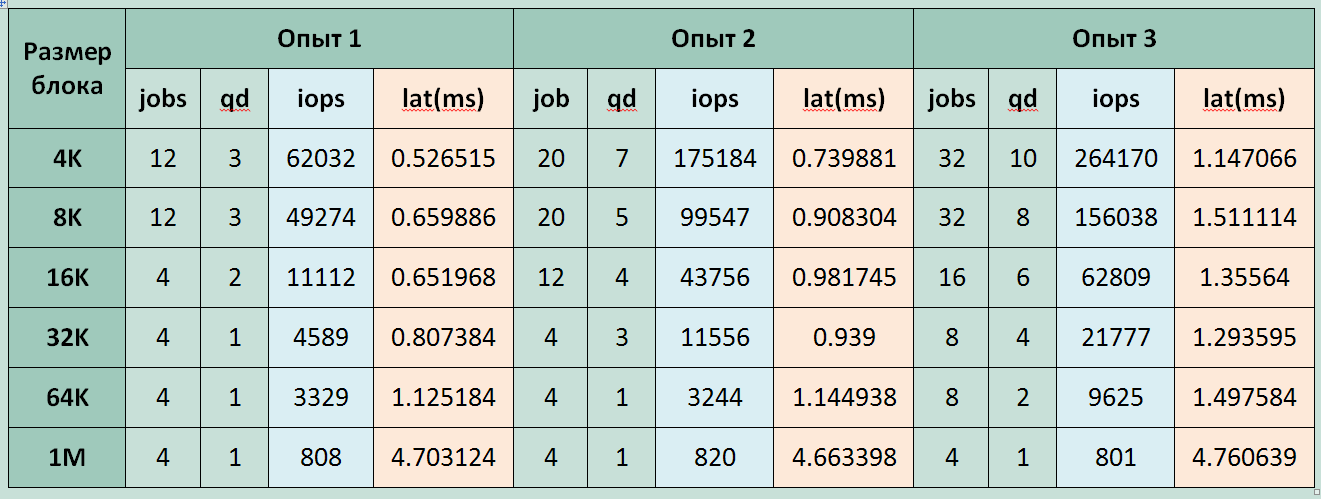

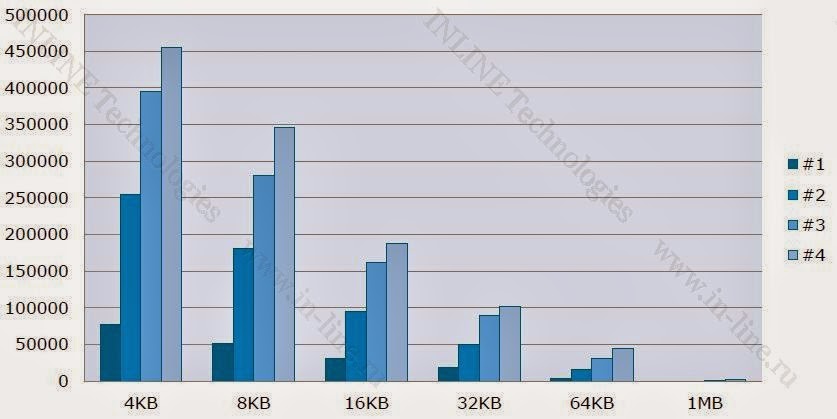

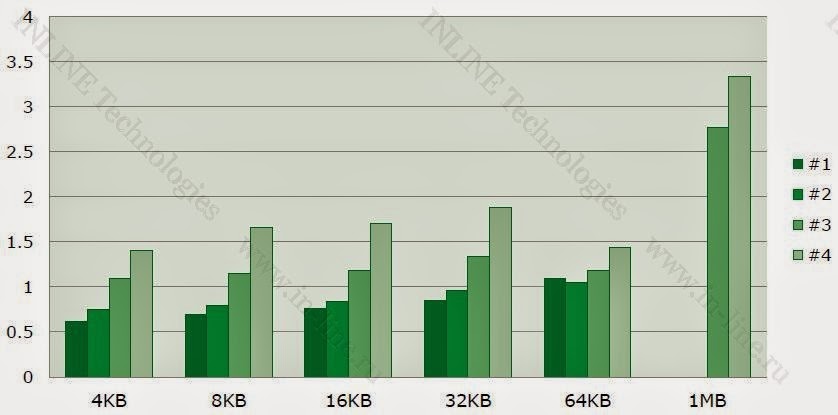

The test results are shown in graphs (Figures 4-9) and summarized in Tables 1-3.

Figure 4. IOPS for random write.

Figure 5. Latency for random write (ms).

Table 1. Random write performance.

Figure 6. IOPS for mixed I/O (70% read, 30% write).

Figure 7. Latency for mixed I/O (70% read, 30% write) (ms).

Table 2. Performance for mixed I/O (70% read, 30% write).

Figure 8. IOPS for random read.

Figure 9. Latency for random read (ms).

Table 3. Random read performance.

Maximum recorded storage performance parameters:

Write:

- 160,000 IOPS at a latency of 1.2 ms (4K block);

Read:

- 455,000 IOPS at a latency of 1.4 ms (4K block, jobs = 10, qd = 16);

Mixed load (70/30 rw):

- 264,000 IOPS at a latency of 1.15 ms (4K block, jobs = 8, qd = 10);

Key findings:

- The storage system delivers the declared performance with acceptable latency.

- The measured latency values cannot be considered the minimum achievable, because only asynchronous I/O with a queue depth greater than 1 was tested.

- With a 1MB block, performance is lower than expected. Further investigation showed that during the 1MB-block tests the storage system was receiving 256KB operations. This means the data blocks were split on the server before being sent, which reduced performance. The cause was the use of the swidth = 1m parameter instead of stripeunit = 1m when the Symantec VxVM volume was created.

- For random write, performance changes linearly as the block size increases, which suggests that the storage system's bandwidth is limited to about 700-750 MB/s.
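The reported maxima can be sanity-checked with Little's law (requests in flight = IOPS × latency) and with the relation throughput = IOPS × block size. The sketch below assumes that the jobs and qd values are per-server settings and that all four servers ran them at once; the article states the load came from four servers but does not spell this out for each result.

```python
def outstanding_ios(iops, latency_ms):
    """Little's law: average number of I/O requests in flight."""
    return iops * latency_ms / 1000.0


def configured_concurrency(servers, numjobs, iodepth):
    """Total queue slots offered by the load generators (assumed per-server values)."""
    return servers * numjobs * iodepth


def throughput_mb_s(iops, block_bytes):
    """Throughput in decimal megabytes per second."""
    return iops * block_bytes / 1_000_000


if __name__ == "__main__":
    # Random read maximum: 455,000 IOPS at 1.4 ms, jobs = 10, qd = 16.
    print(outstanding_ios(455_000, 1.4), configured_concurrency(4, 10, 16))
    # Mixed 70/30 maximum: 264,000 IOPS at 1.15 ms, jobs = 8, qd = 10.
    print(outstanding_ios(264_000, 1.15), configured_concurrency(4, 8, 10))
    # Random write maximum with a 4 KiB block.
    print(throughput_mb_s(160_000, 4096))
```

Under that assumption the figures are self-consistent: the read result implies about 637 requests in flight against 640 configured, and the mixed result about 304 against 320. The random write maximum with a 4 KiB block corresponds to roughly 655 MB/s, which is in the same range as the 700-750 MB/s ceiling estimated above.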

As an added bonus, we were shown snapshot performance. 32 snapshots taken simultaneously from 32 LUNs under maximum write load had no visible impact on performance, which cannot fail to impress and speaks well of the system architecture.

Conclusions

To sum up, the array made a favorable impression and delivered the IOPS declared by the manufacturer. It compares favorably with other flash solutions in the following ways:

- No performance degradation on write operations (write cliff).

- Inline deduplication, which not only saves capacity but also improves write speed.

- Snapshot functionality and its impressive performance.

- Scalability from 1 to 4 nodes (X-Bricks).

The array's unique architecture and data processing algorithms make excellent functionality possible (deduplication, snapshots, thin LUNs).

Unfortunately, the testing was not complete. Maximum performance is expected from a system of 4 X-Bricks. In addition, we were given version 2.4, although version 3.0, for which half the latency is claimed, had already been released. Also still unexplored are the array's behavior with large blocks (its maximum bandwidth) and with synchronous I/O, where latency is critical. We hope to close all these blank spots soon with additional research.

P.S. The author thanks Pavel Katasonov, Yuri Rakitin and all other company employees who took part in preparing this material.