A short course in computer graphics: writing a simplified DIY OpenGL, article 4b of 6

- Tutorial

Course content

- Article 1: Bresenham's algorithm

- Article 2: rasterize a triangle + trim the back faces

- Article 3: Removing invisible surfaces: z-buffer

- Article 4: Required Geometry: Matrix Festival

- 4a: Building perspective distortion

- 4b: we move the camera and what follows from this

- 4c: new rasterizer and perspective distortion correction

- Article 5: We write shaders under our library

- Article 6: A little more than just a shader: rendering shadows

Code improvement

Official translation (with a bit of polishing) is available here.

Today we finish with educational program on geometry, next time there will be fun with shaders!

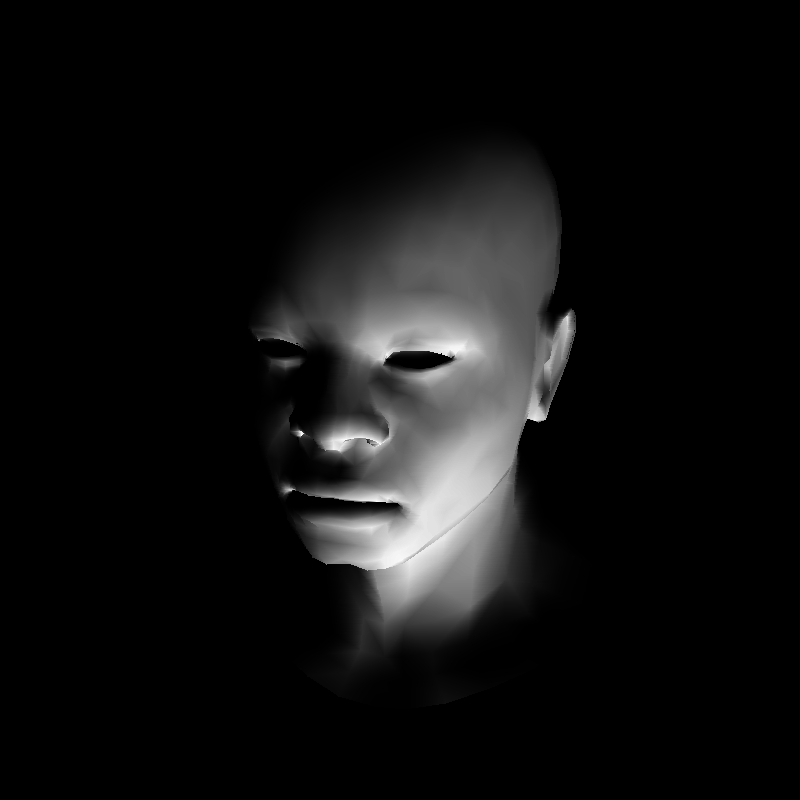

In order not to be completely bored, here is Guro's tint for you:

I removed the textures to make it more visible. Guro ’s tinting is very simple: the good uncle modeller gave us normal vectors to each vertex of the object, they are stored in the lines vn xyz of the .obj file. We calculate the light intensity for each vertex of the triangle and simply interpolate the intensity inside. Just like we did for depth z or for texture coordinates uv!

By the way, if the uncle-modeler were not so kind, then we could calculate the normals to the vertex as the average of the normals of the faces adjacent to this vertex.

The current code that generated this image is here .

Likbez: change of basis in three-dimensional space

In Euclidean space, the coordinate system (frame) is defined by a reference point and the basis of space. What does it mean that in the frame (O, i, j, k) the point P has coordinates (x, y, z)? This means that the vector OP is defined as follows:

Now imagine that we have a second frame (O ', i', j ', k'). How do we convert the coordinates of a point given in one frame to another frame? To begin with, we note that since (i, j, k) and (i ', j', k ') are bases, then there exists a non-degenerate matrix M such that:

Let's draw an illustration to make it clearer:

Let's write a representation of the vector OP:

We substitute the expression for the replacement of the basis in the second part:

And this will give us the formula for the replacement of coordinates for two bases.

Writing your gluLookAt

OpenGL and, as a result, our small renderer can only draw scenes with a camera located on the z axis . If we need to move the camera, it's okay, we just move the whole scene, leaving the camera motionless.

Let's set the task as follows: we want the camera to be at the point e (eye), look at the point c (center) and that the given vector u (up) in our final picture would be vertical.

Here is an illustration:

It just means we render in the frame (c, x'y'z '). But the model is set in the frame (O, xyz), which means that we need to calculate the frame x'y'z 'and the corresponding transition matrix. Here is the code that returns the matrix we need:

update: carefully, the code in the repository is incorrect, the one shown here is correct

void lookat(Vec3f eye, Vec3f center, Vec3f up) {

Vec3f z = (eye-center).normalize();

Vec3f x = cross(up,z).normalize();

Vec3f y = cross(z,x).normalize();

Matrix Minv = Matrix::identity();

Matrix Tr = Matrix::identity();

for (int i=0; i<3; i++) {

Minv[0][i] = x[i];

Minv[1][i] = y[i];

Minv[2][i] = z[i];

Tr[i][3] = -center[i];

}

ModelView = Minv*Tr;

}

To begin with, z 'is just a ce vector (don't forget to normalize it, it's easier to work this way). How to count x '? Just a vector product between u and z ' . Then we assume y ', which will be orthogonal to the already calculated x' and z '(I recall that, by the assumption of the problem, the vector ce and u are not necessarily orthogonal). The last chord is doing a parallel translation in c , and our coordinate matrix is ready. It is enough to take any point with coordinates (x, y, z, 1) in the old basis, multiply it by this matrix, and we will get the coordinates in the new basis! In OpenGL, this matrix is called a view matrix.

Viewport

If you remember, in my code there were similar constructions:

screen_coords [j] = Vec2i ((v.x + 1.) * width / 2., (v.y + 1.) * height / 2.);

What does this mean? I have a Vec2f v point that belongs to the square [-1,1] * [- 1,1]. I want to draw it in a picture of size (width, height). The vector (v.x + 1) varies from 0 to 2, (v.x + 1.) / 2. ranging from zero to one, well, and (v.x + 1.) * width / 2. sweeps the whole picture, which is what I need.

But we move on to the matrix representation of affine mappings, so let's look at the following code:

Matrix viewport (int x, int y, int w, int h) {

Matrix m = Matrix :: identity (4);

m [0] [3] = x + w / 2.f;

m [1] [3] = y + h / 2.f;

m [2] [3] = depth / 2.f;

m [0] [0] = w / 2.f;

m [1] [1] = h / 2.f;

m [2] [2] = depth / 2.f;

return m;

}

He builds such a matrix:

This means that the cube of world coordinates [-1,1] * [- 1,1] * [- 1,1] is mapped into a cube of screen coordinates (yes, a cube, because we have z-buffer!) [x, x + w] * [y, y + h] * [0, d], where d is the resolution of the z-buffer (I have 255, because I store it directly in black and white picture).

In the OpenGL world, this matrix is called the viewport matrix.

Transformation chain

So, we summarize. Models (for example, presages) are made in their local coordinate system (object coordinates). They are inserted into the scene, which is expressed in world coordinates. The transition from one to another is carried out by the Model matrix. Further, we want to express this matter in the frame of the camera (eye coordinates), the matrix of the transition from world to camera is called View. Then, we carry out perspective distortion using the Projection matrix (see article 4a), it translates the scene into the so-called clip coordinates. Well, then we display this whole thing on the screen, the matrix of transition to screen coordinates is Viewport.

That is, if we read point v from a file, then to do it on the screen, we do the multiplication

Viewport * Projection * View * Model * v.

If you look at the code on the github , we will see the following lines:

Vec3f v = model-> vert (face [j]); screen_coords [j] = Vec3f (ViewPort * Projection * ModelView * Matrix (v));

Since I draw only one object, the Model matrix is just the unit, I combined it with the View matrix.

Normal vectors conversion

The following fact is widely known:

If we have a model given and normal vectors for this model have already been calculated (or, for example, given by hands), and this model undergoes an (affine) transformation M, then normal vectors undergo a transformation inverse to the transposed M.

What what?!

This moment remains magical for many, but in fact, there is nothing magical here. Consider the triangle and the vector a , which is normal to its inclined face. If we just stretch our space twice vertically, then the transformed vector a will cease to be normal to the transformed face.

To remove all the plaque of magic, you need to understand one simple thing:we need not only to convert normal vectors, we need to calculate normal vectors to the transformed model.

So, we have a normal vector a = (A, B, C). We know that a plane passing through the origin and having the normal vector a (in our illustration this is the inclined edge of the left triangle) is given by the equation Ax + By + Cz = 0. Let's write this equation in matrix form, and immediately in homogeneous coordinates: I

recall that (A, B, C) is a vector, therefore it gets zero in the last component when immersed in four-dimensional space, and (x, y, z) is a point, so

we attribute it to it 1. Let's add the identity matrix (M times the inverse to it) in the middle of this entry:

The expression in the right brackets is the converted points. In the left - a normal vector! Since in the standard convention in linear mapping we write vectors (and points) in a column (I hope we won’t kindle a holivar about co- and contravariant vectors), the previous expression can be written as follows:

Which even leads us to the above fact, that the normal to the transformed object is obtained by transforming the original normal inverse to the transposed M.

Note that if M is a composition of parallel transfers, rotations and uniform stretching, then the transposed M is equal to the inverse of M, and they annul each other. But since our transformation matrices will include perspective distortion, this will not help us much.

In the current code, we don’t use normal transformation, but in the next article about shaders this will be very important.

Happy programming!