Exploring the Internal Mechanisms of Hyper-V: Part 2

Since the first part of this article was published, nothing has changed globally: the Earth has not hit the celestial axis, cloud services keep growing in popularity, no new holes have been found in the Microsoft hypervisor, and researchers still don't want to spend their time hunting for bugs in a poorly documented, little-studied technology. So I suggest you refresh your memory with the first part in the previous issue, restock your bar and start reading. Today we will create a driver that interacts with the hypervisor interface and monitors the messages the hypervisor sends, and we will also examine how the Data Exchange integration services component works.

Handling Hypervisor Messages

We have posted the driver, written with Visual Studio 2013, on dvd.xakep.ru. It must be loaded into the root OS, for example with OSRLoader. IOCTL codes are sent with a simple program, SendIOCTL.exe. After receiving the INTERRUPT_CODE IOCTL, the driver begins processing the data that the hypervisor passes through slot zero of the SIM.

Unfortunately, the HvlpInterruptCallback variable, which holds the address of the array of message handler pointers, is not exported by the kernel. To locate it, you have to analyze the code of HvlRegisterInterruptCallback, an exported kernel function that references the address we need. Also, unfortunately, you can't simply call HvlRegisterInterruptCallback to register your own message handler: at the very beginning, the function checks the HvlpFlags variable. If the variable equals 1 (and it is set to 1 at an early stage of kernel loading), the function stops, returns error code 0xC00000BB (STATUS_NOT_SUPPORTED), and the handler is not registered. Therefore, to replace the handlers, you have to write your own version of HvlRegisterInterruptCallback. In the hyperv4 driver, this is done by the RegisterInterrupt function:

```c
int RegisterInterrupt()
{
    UNICODE_STRING uniName;
    PVOID pvHvlRegisterAddress = NULL;
    PHYSICAL_ADDRESS pAdr = {0};
    ULONG i, ProcessorCount;
    KAFFINITY OldAffinity;

    // Get the number of active processor cores
    ProcessorCount = KeQueryActiveProcessorCount(NULL);
    DbgLog("Active processor count", ProcessorCount);

    // Find the address of the exported function HvlRegisterInterruptCallback
    RtlInitUnicodeString(&uniName, L"HvlRegisterInterruptCallback");
    pvHvlRegisterAddress = MmGetSystemRoutineAddress(&uniName);
    if (pvHvlRegisterAddress == NULL) {
        DbgPrintString("Cannot find HvlRegisterInterruptCallback!");
        return 1;
    }
    DbgLog16("HvlRegisterInterruptCallback address ", pvHvlRegisterAddress);

    // Find the address of the HvlpInterruptCallback variable
    FindHvlpInterruptCallback((unsigned char *)pvHvlRegisterAddress);

    // Replace the original handlers with our own
    ArchmHvlRegisterInterruptCallback((uintptr_t)&ArchmWinHvOnInterrupt, (uintptr_t)pvHvlpInterruptCallbackOrig, WIN_HV_ON_INTERRUP_INDEX);
    ArchmHvlRegisterInterruptCallback((uintptr_t)&ArchXPartEnlightenedIsr, (uintptr_t)pvHvlpInterruptCallbackOrig, XPART_ENLIGHTENED_ISR0_INDEX);
    ArchmHvlRegisterInterruptCallback((uintptr_t)&ArchXPartEnlightenedIsr, (uintptr_t)pvHvlpInterruptCallbackOrig, XPART_ENLIGHTENED_ISR1_INDEX);
    ArchmHvlRegisterInterruptCallback((uintptr_t)&ArchXPartEnlightenedIsr, (uintptr_t)pvHvlpInterruptCallbackOrig, XPART_ENLIGHTENED_ISR2_INDEX);
    ArchmHvlRegisterInterruptCallback((uintptr_t)&ArchXPartEnlightenedIsr, (uintptr_t)pvHvlpInterruptCallbackOrig, XPART_ENLIGHTENED_ISR3_INDEX);

    // The SIMP value differs for each core, so get the physical addresses of all
    // SIM pages, make each page accessible by mapping it with MmMapIoSpace, and
    // save the resulting virtual addresses in arrays for later use
    for (i = 0; i < ProcessorCount; i++)
    {
        // Run on processor i so that the MSR reads return that core's values
        OldAffinity = KeSetSystemAffinityThreadEx((KAFFINITY)1 << i);
        DbgLog("Current processor number", KeGetCurrentProcessorNumberEx(NULL));

        pAdr.QuadPart = ArchReadMsr(HV_X64_MSR_SIMP) & 0xFFFFFFFFFFFFF000;
        pvSIMP[i] = MmMapIoSpace(pAdr, PAGE_SIZE, MmCached);
        if (pvSIMP[i] == NULL) {
            DbgPrintString("Error during pvSIMP MmMapIoSpace");
            KeRevertToUserAffinityThreadEx(OldAffinity);
            return 1;
        }
        DbgLog16("pvSIMP[i] address", pvSIMP[i]);

        pAdr.QuadPart = ArchReadMsr(HV_X64_MSR_SIEFP) & 0xFFFFFFFFFFFFF000;
        pvSIEFP[i] = MmMapIoSpace(pAdr, PAGE_SIZE, MmCached);
        if (pvSIEFP[i] == NULL) {
            DbgPrintString("Error during pvSIEFP MmMapIoSpace");
            KeRevertToUserAffinityThreadEx(OldAffinity);
            return 1;
        }
        DbgLog16("pvSIEFP address", pvSIEFP[i]);

        // Restore the thread's original affinity
        KeRevertToUserAffinityThreadEx(OldAffinity);
    }
    return 0;
}
```

WARNING

During experiments with heavily loaded virtual machines, it is better to replace only one handler in the HvlpInterruptCallback array: replacing all of them at once makes the system unstable (at least with a large stream of debug messages through KdPrint and WPP).
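The article does not list FindHvlpInterruptCallback itself. As a rough illustration of the idea, here is a user-mode sketch that scans a copy of a function's bytes for a RIP-relative lea and computes the address it references. It assumes, without having checked it against the kernel build used here, that HvlRegisterInterruptCallback loads the HvlpInterruptCallback address with a lea r64, [rip+disp32] instruction; find_rip_relative_lea is a hypothetical helper, not part of the hyperv4 driver.

```c
#include <stddef.h>
#include <stdint.h>

/*
 * Hypothetical helper: scan `len` bytes of machine code for the first
 * "lea r64, [rip+disp32]" (REX.W prefix, opcode 8D, ModRM with mod=00
 * and rm=101) and return the absolute address it references.
 * `code_va` is the virtual address the bytes were copied from.
 * Returns 0 if no such instruction is found.
 */
uint64_t find_rip_relative_lea(const uint8_t *code, size_t len, uint64_t code_va)
{
    for (size_t i = 0; i + 7 <= len; i++) {
        int rex_w  = (code[i] & 0xF8) == 0x48;      /* 0x48..0x4F: REX with W=1 */
        int lea    = code[i + 1] == 0x8D;
        int riprel = (code[i + 2] & 0xC7) == 0x05;  /* mod=00, rm=101 */
        if (rex_w && lea && riprel) {
            /* disp32 is stored little-endian right after the ModRM byte */
            uint32_t raw = (uint32_t)code[i + 3]
                         | (uint32_t)code[i + 4] << 8
                         | (uint32_t)code[i + 5] << 16
                         | (uint32_t)code[i + 6] << 24;
            int64_t disp = (int32_t)raw;
            /* RIP-relative operands are relative to the next instruction (7 bytes on) */
            return code_va + i + 7 + (uint64_t)disp;
        }
    }
    return 0;
}
```

In a driver, the bytes would come from the address returned by MmGetSystemRoutineAddress, with code_va set to that same address; the scan length is an arbitrary bound that would have to be chosen after inspecting the function in a disassembler.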

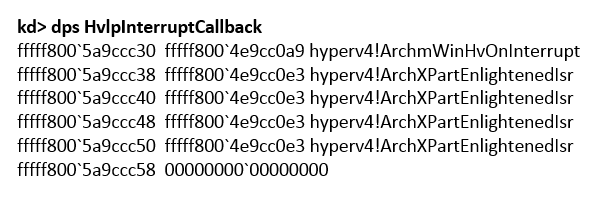

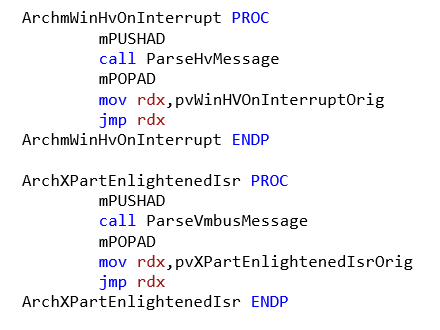

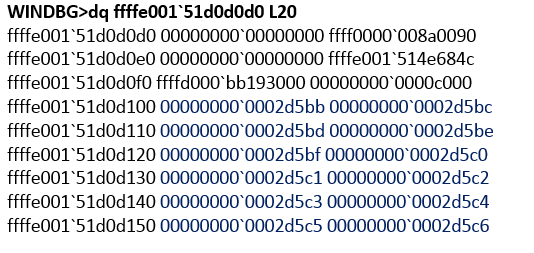

Fig. 1. HvlpInterruptCallback array with modified handlers

After RegisterInterrupt has run (with all handlers replaced at once), the HvlpInterruptCallback array looks as shown in Fig. 1. The replacement mirrors the original layout: one handler for messages from the hypervisor and four for messages from VMBus. The ArchmWinHvOnInterrupt and ArchXPartEnlightenedIsr procedures save all registers on the stack and pass control to the message parsing functions ParseHvMessage and ParseVmbusMessage, respectively (mPUSHAD and mPOPAD are macros that save and restore the registers on the stack; see Fig. 2).

Fig. 2. ArchmWinHvOnInterrupt and ArchXPartEnlightenedIsr
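Both parsers read fixed-size message slots from the per-processor SIM page. The user-mode sketch below shows that addressing. The constants (16 slots of 256 bytes, a 16-byte header with MessageType at offset 0 and PayloadSize at offset 4, the SIMP MSR layout with the enable bit in bit 0 and the page address in bits 12-63, and the intercept message type codes) follow the Hyper-V TLFS; the helper functions are hypothetical and are not part of the hyperv4 driver. The SIMP layout is why RegisterInterrupt masks the MSR value with 0xFFFFFFFFFFFFF000.

```c
#include <stddef.h>
#include <stdint.h>

#define HV_SIM_SLOT_COUNT 16    /* one slot per SINT */
#define HV_MESSAGE_SIZE   256   /* 16-byte header + 240-byte payload */

#define HvMessageTypeNone                  0x00000000u
#define HvMessageTypeX64IoPortIntercept    0x80010000u
#define HvMessageTypeX64MsrIntercept       0x80010001u
#define HvMessageTypeX64CpuidIntercept     0x80010002u
#define HvMessageTypeX64ExceptionIntercept 0x80010003u

/* SIMP MSR: bit 0 enables the page, bits 12..63 hold its page-aligned address */
int simp_enabled(uint64_t simp_msr) { return (int)(simp_msr & 1); }
uint64_t simp_page_address(uint64_t simp_msr) { return simp_msr & 0xFFFFFFFFFFFFF000ull; }

/* Address of the slot for a given SINT inside a mapped SIM page */
const uint8_t *sim_slot(const uint8_t *sim_page, unsigned sint)
{
    if (sim_page == NULL || sint >= HV_SIM_SLOT_COUNT)
        return NULL;
    return sim_page + (size_t)sint * HV_MESSAGE_SIZE;
}

/* Header.MessageType: little-endian 32-bit value at offset 0 of the slot */
uint32_t slot_message_type(const uint8_t *slot)
{
    return (uint32_t)slot[0] | (uint32_t)slot[1] << 8 |
           (uint32_t)slot[2] << 16 | (uint32_t)slot[3] << 24;
}

/* Header.PayloadSize: byte at offset 4 (at most 240, i.e. 0xF0) */
uint8_t slot_payload_size(const uint8_t *slot) { return slot[4]; }
```

A slot whose MessageType is HvMessageTypeNone (0) is empty, which is what ParseHvMessage relies on when it checks slot 1.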

After parsing, control is transferred to the original WinHvOnInterrupt and XPartEnlightenedIsr procedures. The hypervisor message parsing function is as follows:

void ParseHvMessage()

{

PHV_MESSAGE phvMessage, phvMessage1;

// Получаем номер активного логического процессора

ULONG uCurProcNum = KeGetCurrentProcessorNumberEx(NULL);

Unlock+0x162

vmbkmcl!VmbChannelEnable+0x231

vmbus!PipeStartChannel+0x9e

vmbus!PipeAccept+0x81

vmbus!InstanceCreate+0x90

..................................

nt!IopParseDevice+0x7b3

nt!ObpLookupObjectName+0x6d8

nt!ObOpenObjectByName+0x1e3

nt!IopCreateFile+0x372

nt!NtCreateFile+0x78

nt!KiSystemServiceCopyEnd+0x13

ntdll!NtCreateFile+0xa

KERNELBASE!CreateFileInternal+0x30a

KERNELBASE!CreateFileW+0x66

vmbuspipe!VmbusPipeClientOpenChannel+0x44

icsvc!ICTransportVMBus::ClientNotification+0x60

vmbuspipe!VmbusPipeClientEnumeratePipes+0x1ac

icsvc!ICTransportVMBusClient::Open+0xe5

icsvc!ICEndpoint::Connect+0x66

icsvc!ICChild::Run+0x65

icsvc!ICKvpExchangeChild::Run+0x189

icsvc!ICChild::ICServiceWork+0x137

icsvc!ICChild::ICServiceMain+0x8f

..................................

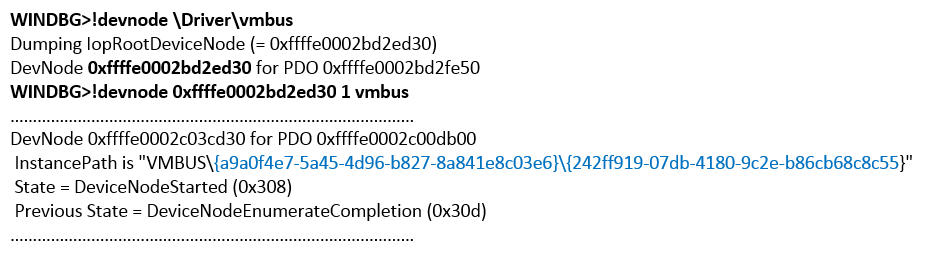

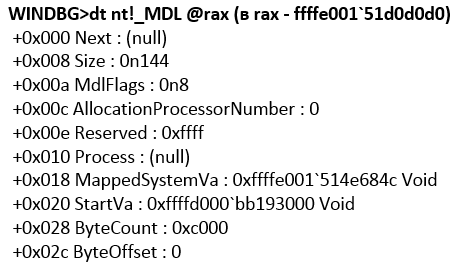

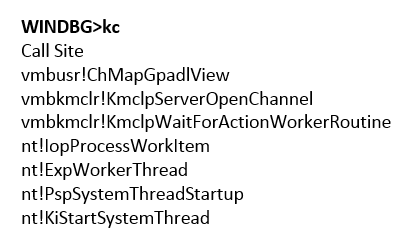

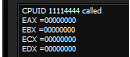

The Data Exchange component is activated on the virtual machine. After activating the Data Exchange component in the properties of the virtual machine and clicking the Apply root button, the OS sends a message with the CHANNELMSG_OFFERCHANNEL code via the HvPostMessage hyperlink (see Figure 9). The transferred data contains the GUID of the device that is a child of VMBUS (see Figure 10). Next, the guest OS processes the data and calls the vmbus! InstanceDeviceControl function.

Fig. 9. The device GUID in the message

Fig. 10. Output! Devnode for VMBus device

Part of the stack:

WINDBG>kс

Call Sitent!IoAllocateMdl vmbus!InstanceCloseChannel+0x22d (адрес возврата для функции, имя которой отсутствует в символах)

vmbus!InstanceDeviceControl+0x118

..................................

vmbkmcl!KmclpSynchronousIoControl+0xa7

vmbkmcl!KmclpClientOpenChannel+0x2a6

vmbkmcl!KmclpClientFindVmbusAnd

if (pvSIMP[uCurProcNum] != NULL){

phvMessage = (PHV_MESSAGE)pvSIMP[uCurProcNum];

} else{

DbgPrintString("pvSIMP is NULL");

return;

}

// Уведомление об отправке сообщения через 1-й слот SIM phvMessage1 = (PHV_MESSAGE)((PUINT8)pvSIMP[uCurProcNum]+ HV_MESSAGE_SIZE); // for SINT1

if (phvMessage1->Header.MessageType != 0){

DbgPrintString("SINT1 interrupt");

}

// В зависимости от типа сообщения вызываем процедуры обработчики

// Структуры для каждого типа сообщения описаны в TLFS

switch (phvMessage->Header.MessageType)

{

case HvMessageTypeX64IoPortIntercept:

PrintIoPortInterceptMessage(phvMessage);

break;

case HvMessageTypeNone:DbgPrintString("HvMessageTypeNone");

break;

case HvMessageTypeX64MsrIntercept:PrintMsrInterceptMessage(phvMessage);

break;

case HvMessageTypeX64CpuidIntercept:PrintCpuidInterceptMessage(phvMessage);

break;

case HvMessageTypeX64ExceptionIntercept:PrintExceptionInterceptMessage(phvMessage);

break;

default:

DbgLog("Unknown MessageType", phvMessage->

Header.MessageType);

break;

}

}

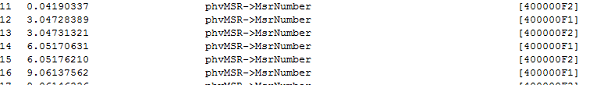

The function receives the number of the active logical processor, the address of the SIM page and reads the value of the SIM zero slot. First, an analysis of the message type phvMessage-> Header.MessageType is performed, since the message body is different for each type. In DbgView you can see the following picture (see. Fig. 3).

Fig. 3. DbgView output when the hypervisor processes calls to MSR registers

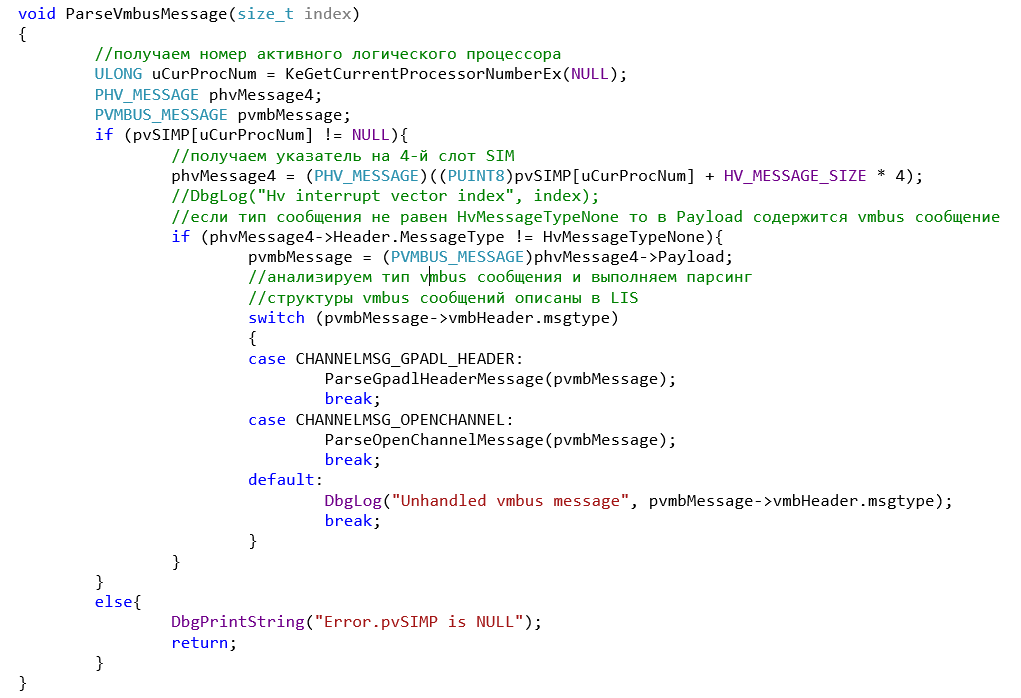

. ParseVmbusMessage function (Fig. 4).

The function receives the number of the active logical processor, the address of the SIM page and reads the value of the fourth SIM slot. For example, the messages CHANNELMSG_OPENCHANNEL and CHANNELMSG_GPADL_HEADER are analyzed, but in the LIS source code you can see the format of all types of messages and easily add the necessary handlers. Messages for VMBus are usually generated when the virtual machine or one of the Integration Services components is turned on / off. For example, when you turn on the Data Exchange component, the debugger or DbgView will display the information shown in Fig. 5.

Fig. 5. Debugging messages when turning on the Data Exchange component

Integration Services - Data Exchange

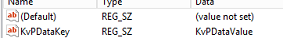

Next, we will examine how data is exchanged between the guest and root OS using the example of one of the components of the integration services - Data Exchange. This component allows the root OS to read data from a specific registry branch of the guest OS. For verification in the guest OS, create a key with the value KvPDataValue in the HKEY_LOCAL_MACHINE \\ SOFTWARE \\ Microsoft \\ Virtual Machine \\ Guest branch (see Figure 6).

Fig. 6. Key KvPDataValue

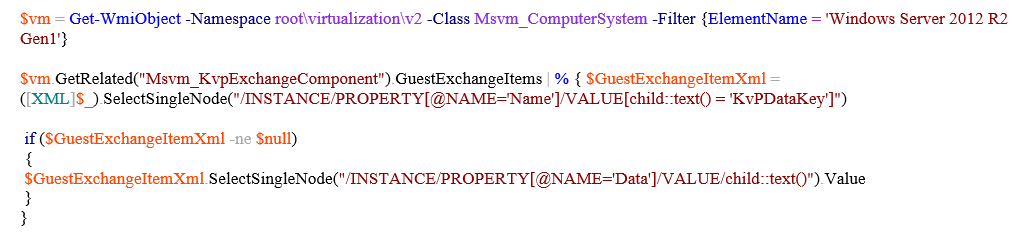

To get the key value in the root OS, the following PowerShell script was used (see Fig. 7).

Fig. 7. PowerShell script to request registry values from a guest OS

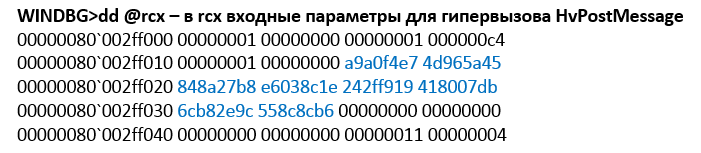

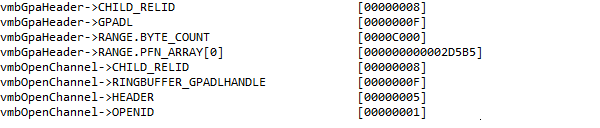

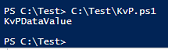

The script will return the value of the KvPDataKey key (see Figure 8). The script receives the entire available set of values using $ vm.GetRelated ("Msvm_KvpExchangeComponent"). GuestExchangeItems and only after that it parses each received object for the search for the KvPDataKey key. Accordingly, the script will only work if nt! IoAllocateMdl is called in the properties of this function with the size of the allocated buffer 0xC000. The result of the function execution is the formed MDL structure (see Fig. 11). Next, nt! MmProbeAndLockPages is called, after completion of which the MDL structure is supplemented by PFN elements (see Figure 12). In this example, a contiguous region of physical memory was allocated, although this condition is optional. Next, vmbus! ChCreateGpadlFromNtmdl is called (the MDL address is passed as the second parameter), which calls vmbus! ChpCreateGpaRanges, passing it the same MDL as the first parameter. Next, the PFN elements are copied from the MDL structure to a separate buffer (see Figure 13), which will become the body of the CHANNELMSG_GPADL_HEADER message sent by the guest OS to the root OS by calling vmbus! ChSendMessage. hv! HvPostMessage or in winhv! WinHvPostMessage you can see the message (Fig. 14).

Fig. 8. The result of the script execution

Fig. 11. Field values of the MDL structure

Fig. 12. PFN in the MDL structure

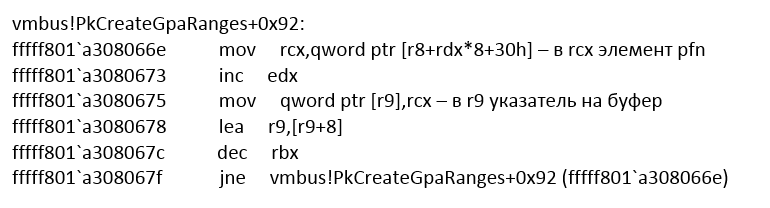

Fig. 13. Code fragment that copies the PFNs into a separate buffer

Fig. 14. PFN array processed by the hypervisor
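The PFN array that ends up in the GPADL message has one entry per physical page spanned by the buffer. A small user-mode sketch of that arithmetic, assuming 4 KB pages: pages_spanned computes the same value as the WDK's ADDRESS_AND_SIZE_TO_SPAN_PAGES macro, and pfns_contiguous checks the optional property mentioned above (a physically contiguous allocation). Both functions are illustrative and are not taken from vmbus.sys.

```c
#include <stddef.h>
#include <stdint.h>

#define PAGE_4K_SHIFT 12
#define PAGE_4K_SIZE  ((uint64_t)1 << PAGE_4K_SHIFT)

/* Pages touched by `size` bytes that start at virtual address `va`
   (what ADDRESS_AND_SIZE_TO_SPAN_PAGES computes for 4 KB pages). */
uint64_t pages_spanned(uint64_t va, uint64_t size)
{
    if (size == 0)
        return 0;
    return ((va & (PAGE_4K_SIZE - 1)) + size + PAGE_4K_SIZE - 1) >> PAGE_4K_SHIFT;
}

/* 1 if each PFN follows the previous one, i.e. the pages are physically contiguous */
int pfns_contiguous(const uint64_t *pfn, size_t count)
{
    for (size_t i = 1; i < count; i++)
        if (pfn[i] != pfn[i - 1] + 1)
            return 0;
    return 1;
}
```

For the page-aligned 0xC000-byte buffer in this example, the MDL therefore carries 12 PFNs; the same buffer starting 0x10 bytes into a page would need 13.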

The first 16 bytes are the generic message header, in which, for example, 0xF0 is the size of the message body. The body contains a VMBus packet whose header specifies the packet type, 8 (CHANNELMSG_GPADL_HEADER); rangecount is 1, and all the data that had to be transmitted fit into a single message. With a larger amount of data, the guest OS driver would split it into parts and send them in separate messages. Next, the root OS replies with a CHANNELMSG_GPADL_CREATED message, and then the guest OS sends CHANNELMSG_OPENCHANNEL. After that, the root OS runs a work item (see Fig. 15).
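The layout of that message can be modelled on the Linux Integration Services definitions already mentioned above (vmbus_channel_gpadl_header and gpa_range). The sketch below works under that assumption: the structs are simplified copies rather than Windows headers, fields are stored in host byte order (little-endian on x64), and build_gpadl_header is a hypothetical helper. It also shows why the 12 PFNs of a 0xC000-byte buffer fit into a single 240-byte (0xF0) message body.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define HV_MESSAGE_PAYLOAD_SIZE 240   /* 0xF0, as in the message header */
#define CHANNELMSG_GPADL_HEADER 8

#pragma pack(push, 1)
struct gpadl_header {            /* modelled on vmbus_channel_gpadl_header */
    uint32_t msgtype;            /* vmbus_channel_message_header.msgtype */
    uint32_t padding;
    uint32_t child_relid;
    uint32_t gpadl;
    uint16_t range_buflen;       /* bytes of gpa_range data that follow */
    uint16_t rangecount;
};
struct gpa_range {               /* followed by uint64_t pfn_array[] */
    uint32_t byte_count;
    uint32_t byte_offset;
};
#pragma pack(pop)

/* PFNs that fit in one GPADL_HEADER message carrying a single range */
size_t gpadl_header_pfn_capacity(void)
{
    return (HV_MESSAGE_PAYLOAD_SIZE - sizeof(struct gpadl_header)
            - sizeof(struct gpa_range)) / sizeof(uint64_t);
}

/* Serialize a one-range GPADL header into `payload` (HV_MESSAGE_PAYLOAD_SIZE bytes).
   Returns the number of bytes used, or 0 if the PFNs do not fit. */
size_t build_gpadl_header(uint8_t *payload, uint32_t relid, uint32_t gpadl,
                          uint32_t byte_count, const uint64_t *pfns, size_t npfn)
{
    struct gpadl_header hdr = {0};
    struct gpa_range range = {0};

    if (npfn > gpadl_header_pfn_capacity())
        return 0;   /* the rest would have to go into follow-up messages */

    hdr.msgtype = CHANNELMSG_GPADL_HEADER;
    hdr.child_relid = relid;
    hdr.gpadl = gpadl;
    hdr.range_buflen = (uint16_t)(sizeof range + npfn * sizeof(uint64_t));
    hdr.rangecount = 1;
    range.byte_count = byte_count;
    range.byte_offset = 0;

    memcpy(payload, &hdr, sizeof hdr);
    memcpy(payload + sizeof hdr, &range, sizeof range);
    memcpy(payload + sizeof hdr + sizeof range, pfns, npfn * sizeof(uint64_t));
    return sizeof hdr + sizeof range + npfn * sizeof(uint64_t);
}
```

Under this layout, one message holds (240 - 20 - 8) / 8 = 26 PFNs, so buffers larger than 26 pages would have to be split.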

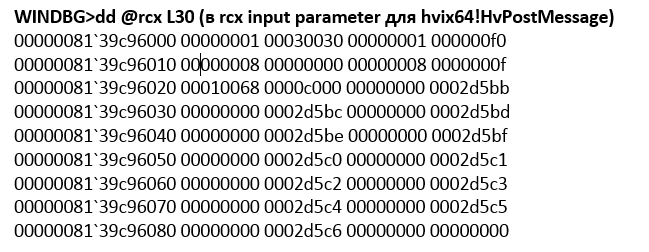

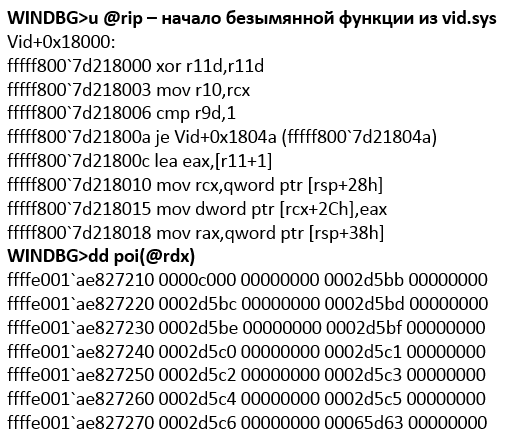

Fig. 15. Call to ChMapGpadlView

During its execution, vmbusr!ChMapGpadlView -> vmbusr!PkParseGpaRanges is called; PkParseGpaRanges receives a pointer to the part of the message that contains the buffer size 0xC000 and the PFNs sent in the CHANNELMSG_GPADL_HEADER message. Next, vmbusr!XPartLockChildPagesSynchronous -> vmbusr!XPartLockChildPages is called, after which a function from the vid.sys driver runs (its name is unknown because there are no symbols for the driver). As its second parameter, it receives the PFN block that the guest OS sent earlier in the message (see Fig. 16).

Fig. 16. Processing guest PFNs by the vid.sys driver

Immediately after returning from the function, [rsp + 30h] contains a pointer to the newly created MDL structure (see Figure 17).

Fig. 17. MDL structure returned by vid.sys driver

The size of the allocated buffer is also 0xC000. After that, the root OS sends the CHANNELMSG_OPENCHANNEL_RESULT message, which completes the activation of the Data Exchange component. The result is a shared buffer visible to both the guest and the root OS. You can verify this by writing arbitrary data to the buffer in the guest OS, for example with these commands:

```
WINDBG>!ed 2d5bb000 aaaaaaaa
WINDBG>!db 2d5bb000
#2d5bb000 aa aa aa aa 10 19 00
```

And in the root OS, look at the contents of the page corresponding to the PFN returned by the vid.sys driver function:

```
WINDBG>!db 1367bb000
#1367bb000 aa aa aa aa 10 19
```

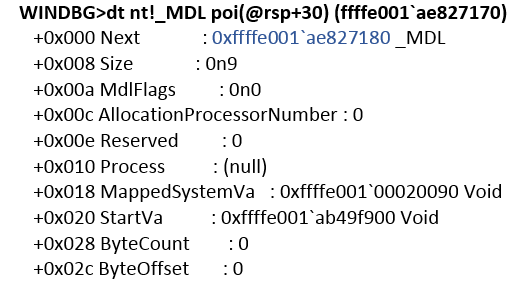

As you can see, the values match, so this really is the same region of physical memory. Recall that earlier we established that activating the Data Exchange component creates a port of type HvPortTypeEvent with TargetSint = 5. Accordingly, all operations on this port in the root OS are handled by KiVmbusInterrupt1, which calls vmbusr!XPartEnlightenedIsr, which in turn calls KeInsertQueueDpc with the DPC parameter (its value is shown in Fig. 19).

Fig. 19. The DPC value queued by XPartEnlightenedIsr

After several nested calls, vmbusr!ParentRingInterruptDpc leads to the execution of vmbusr!PkGetReceiveBuffer:

```
WINDBG>k
Child-SP          RetAddr           Call Site
fffff800`fcc1ea38 fffff800`6cdc440c vmbusr!PkGetReceiveBuffer+0x2c
fffff800`fcc1ea40 fffff800`6cdc41a7 vmbusr!PipeTryReadSingle+0x3c
fffff800`fcc1eaa0 fffff800`6cdc4037 vmbusr!PipeProcessDeferredReadWrite+0xe7
fffff800`fcc1eaf0 fffff800`6c96535e vmbusr!PipeEvtChannelSignalArrived+0x63
fffff800`fcc1eb30 fffff800`6cdc4e3d vmbkmclr!KmclpVmbusManualIsr+0x16
fffff800`fcc1eb60 fffff800`fb2d31e0 vmbusr!ParentRingInterruptDpc+0x5d
```

If you examine this memory area, the guest OS parameters become visible:

```
WINDBG> dc ffffd0016fe33000 L1000
...
ffffd001`6fe35b30  0065004e 00770074 0072006f 0041006b  N.e.t.w.o.r.k.A.
ffffd001`6fe35b40  00640064 00650072 00730073 00500049  d.d.r.e.s.s.I.P.
ffffd001`6fe35b50  00340076 00000000 00000000 00000000  v.4.............
...
ffffd001`6fe35d20  00000000 00000000 00000000 00000000  ................
ffffd001`6fe35d30  00300031 0030002e 0030002e 0033002e  1.0...0...0...3.
ffffd001`6fe35d40  00000000 00000000 00000000 00000000  ................
```

```
WINDBG>!pte ffffd001`6fe35b30
VA ffffd0016fe35b30
PXE at FFFFF6FB7DBEDD00    PPE at FFFFF6FB7DBA0028    PDE at FFFFF6FB74005BF8    PTE at FFFFF6E800B7F1A8
contains 0000000000225863  contains 00000000003B7863  contains 000000010FB12863  contains 80000001367BD963
pfn 225       ---DA--KWEV  pfn 3b7       ---DA--KWEV  pfn 10fb12    ---DA--KWEV  pfn 1367bd    -G-DA--KW-V
```

PFN 1367bd is the PFN of the third page of the converted MDL.
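The flag column of the !pte output is a decoding of the hardware page-table entry bits (V valid, W writable, K kernel, i.e. the user bit is clear, A accessed, D dirty, G global, and E shown only when the no-execute bit is clear). A minimal sketch that decodes the PTE value shown above using the standard x64 four-level paging bit positions; the struct and function names are hypothetical.

```c
#include <stdint.h>

struct pte_info {
    uint64_t pfn;       /* bits 12..51 */
    int valid;          /* bit 0  (V) */
    int writable;       /* bit 1  (W) */
    int user;           /* bit 2  (clear = K) */
    int accessed;       /* bit 5  (A) */
    int dirty;          /* bit 6  (D) */
    int global;         /* bit 8  (G) */
    int no_execute;     /* bit 63 (E is shown only when clear) */
};

struct pte_info decode_pte(uint64_t pte)
{
    struct pte_info info;
    info.pfn        = (pte >> 12) & 0xFFFFFFFFFFull;   /* 40-bit frame number */
    info.valid      = (int)(pte & 1);
    info.writable   = (int)((pte >> 1) & 1);
    info.user       = (int)((pte >> 2) & 1);
    info.accessed   = (int)((pte >> 5) & 1);
    info.dirty      = (int)((pte >> 6) & 1);
    info.global     = (int)((pte >> 8) & 1);
    info.no_execute = (int)(pte >> 63);
    return info;
}

/* Physical address of a byte: frame base plus the low 12 bits of the VA */
uint64_t pte_physical_address(uint64_t pte, uint64_t va)
{
    return (decode_pte(pte).pfn << 12) | (va & 0xFFF);
}
```

Applied to the PTE above, this gives PFN 0x1367BD and places the bytes at ffffd001`6fe35b30 at physical address 0x1367BDB30.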

The same function also receives in rdx a pointer to the offset (4448h in this example), relative to the start of the pages shared with the guest OS, from which the data must be read:

```
vmbusr!PkGetReceiveBuffer+0x4e:
mov r8,r10                    ; r10d was previously loaded with the offset from rdx
add r8,qword ptr [rcx+20h]    ; [rcx+20h] holds a pointer to one of the pages shared with the guest OS
```

```
WINDBG>!pte @r8
VA ffffd0016ff22448
PXE at FFFFF6FB7DBEDD00    PPE at FFFFF6FB7DBA0028    PDE at FFFFF6FB74005BF8    PTE at FFFFF6E800B7F910
contains 0000000000225863  contains 00000000003B7863  contains 000000010FB12863  contains 80000001367C0963
pfn 225       ---DA--KWEV  pfn 3b7       ---DA--KWEV  pfn 10fb12    ---DA--KWEV  pfn 1367c0    -G-DA--KW-V
```

Set a breakpoint at the beginning of vmbusr!PkGetReceiveBuffer and run the PowerShell script. When the breakpoint is hit, you can see that a structure is passed to the function (pointer in rcx) and that rcx+18h holds a pointer to the memory block:

```
WINDBG>? poi(@rcx+18)
Evaluate expression: -52770386006016 = ffffd001`6fe33000
WINDBG>!pte ffffd001`6fe33000
VA ffffd0016fe33000
PXE at FFFFF6FB7DBEDD00    PPE at FFFFF6FB7DBA0028    PDE at FFFFF6FB74005BF8    PTE at FFFFF6E800B7F198
contains 0000000000225863  contains 00000000003B7863  contains 000000010FB12863  contains 80000001367BB963
pfn 225       ---DA--KWEV  pfn 3b7       ---DA--KWEV  pfn 10fb12    ---DA--KWEV  pfn 1367bb    -G-DA--KW-V
WINDBG>r cr3
cr3=00000000001ab000
WINDBG>!vtop 1ab000 ffffd0016fe33000
Amd64VtoP: Virt ffffd001`6fe33000, pagedir 1ab000
Amd64VtoP: PML4E 1abd00
Amd64VtoP: PDPE 225028
Amd64VtoP: PDE 3b7bf8
Amd64VtoP: PTE 00000001`0fb12198
Amd64VtoP: Mapped phys 00000001`367bb000
Virtual address ffffd0016fe33000 translates to physical address 1367bb000.
```
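The !vtop output is the standard x64 four-level walk: each level uses nine bits of the virtual address as an index into a table of 8-byte entries, and each entry supplies the physical base of the next table. The sketch below recomputes the entry addresses printed above from CR3 and the entry values, plus the virtual address of the PTE itself through the recursive self-map. The self-map base 0xFFFFF68000000000 is an assumption that matches the addresses in these dumps and Windows versions of that period (later versions randomize it); the function names are hypothetical.

```c
#include <stdint.h>

#define ENTRY_ADDR_MASK 0x000FFFFFFFFFF000ull   /* bits 12..51: next table */
#define PTE_BASE        0xFFFFF68000000000ull    /* assumed fixed self-map base */

/* Index into the table for a level: 4 = PML4, 3 = PDPT, 2 = PD, 1 = PT */
unsigned table_index(uint64_t va, int level)
{
    return (unsigned)((va >> (12 + 9 * (level - 1))) & 0x1FF);
}

/* Physical address of the entry consulted at `level`, given the previous
   level's entry (or CR3 for the PML4) */
uint64_t entry_address(uint64_t parent_entry_or_cr3, uint64_t va, int level)
{
    return (parent_entry_or_cr3 & ENTRY_ADDR_MASK) + 8ull * table_index(va, level);
}

/* Virtual address of the PTE that maps `va` through the self-map */
uint64_t pte_virtual_address(uint64_t va)
{
    return PTE_BASE + ((va >> 9) & 0x7FFFFFFFF8ull);
}
```

For ffffd001`6fe33000 this reproduces the PML4E 1abd00, PDPE 225028, PDE 3b7bf8 and PTE 1`0fb12198 lines, as well as the "PTE at FFFFF6E800B7F198" line from !pte.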

INFO

Information about the KVP technology can be found on MSDN blogs:

goo.gl/R0U52l

goo.gl/UeZRK2

If you set a breakpoint on the add r8,qword ptr [rcx+20h] instruction, then after several iterations you can see the name and value of the KvPDataKey key at r8:

```
WINDBG>dc @r8
ffffd001`6ff21d10  00020006 00000148 00000000 00000000  ....H...........
ffffd001`6ff21d20  00000001 00000a28 00000003 00050002  ....(...........    <- 0xa28: size of the transferred block
ffffd001`6ff21d30  0a140000 00000000 00000515 00000103  ................
ffffd001`6ff21d40  00000004 00000001 00000016 0000001a  ................
ffffd001`6ff21d50  0076004b 00440050 00740061 004b0061  K.v.P.D.a.t.a.K.
ffffd001`6ff21d60  00790065 00000000 00000000 00000000  e.y.............
...
ffffd001`6ff21f50  0076004b 00440050 00740061 00560061  K.v.P.D.a.t.a.V.
ffffd001`6ff21f60  006c0061 00650075 00000000 00000000  a.l.u.e.........
```

```
WINDBG>!pte ffffd001`6ff21f50
VA ffffd0016ff21f50
PXE at FFFFF6FB7DBEDD00    PPE at FFFFF6FB7DBA0028    PDE at FFFFF6FB74005BF8    PTE at FFFFF6E800B7F908
contains 0000000000225863  contains 00000000003B7863  contains 000000010FB12863  contains 80000001367BF963
pfn 225       ---DA--KWEV  pfn 3b7       ---DA--KWEV  pfn 10fb12    ---DA--KWEV  pfn 1367bf    -G-DA--KW-V
```
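The dump lines up with the key/value record that the Linux Integration Services sources define as hv_kvp_exchg_msg_value: a value type (1, REG_SZ), the key and value sizes in bytes including the terminating null (0x16 and 0x1a), then a 512-byte key field and a 2048-byte value field, which is why the value starts exactly 0x200 bytes after the key (1d50 vs 1f50). Reading the record as starting at ffffd001`6ff21d44 is our interpretation of the dump; it is consistent with the block size, since a 0xA0C-byte record ending at 0x1d44 + 0xA0C = 0x2750 ends exactly where the 0xA28-byte block that follows its size field at 1d28 ends. A user-mode sketch of that layout with UTF-16LE helpers follows; the struct and helper names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

#define KVP_MAX_KEY_SIZE   512
#define KVP_MAX_VALUE_SIZE 2048
#define KVP_REG_SZ         1

/* Modelled on hv_kvp_exchg_msg_value from the LIS sources */
struct kvp_record {
    uint32_t value_type;                /* 1 = REG_SZ */
    uint32_t key_size;                  /* bytes, including the UTF-16 null */
    uint32_t value_size;
    uint8_t  key[KVP_MAX_KEY_SIZE];     /* UTF-16LE */
    uint8_t  value[KVP_MAX_VALUE_SIZE]; /* UTF-16LE for REG_SZ */
};

/* Encode an ASCII string as UTF-16LE with a terminator.
   Returns the number of bytes written, or 0 if it does not fit. */
size_t ascii_to_utf16le(const char *s, uint8_t *dst, size_t dstsz)
{
    size_t n = 0;
    for (;; s++) {
        if (n + 2 > dstsz)
            return 0;
        dst[n++] = (uint8_t)*s;
        dst[n++] = 0;
        if (*s == '\0')
            return n;
    }
}

/* Decode up to `nbytes` of UTF-16LE into ASCII ('?' for non-ASCII).
   Stops at the terminator; returns the number of characters written. */
size_t utf16le_to_ascii(const uint8_t *src, size_t nbytes, char *out, size_t outsz)
{
    size_t n = 0;
    if (outsz == 0)
        return 0;
    for (size_t i = 0; i + 1 < nbytes && n + 1 < outsz; i += 2) {
        uint16_t ch = (uint16_t)(src[i] | src[i + 1] << 8);
        if (ch == 0)
            break;
        out[n++] = ch < 0x80 ? (char)ch : '?';
    }
    out[n] = '\0';
    return n;
}
```

Encoding "KvPDataKey" and "KvPDataValue" this way yields exactly the 0x16 and 0x1a sizes visible at ffffd001`6ff21d48.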

Then, after PkGetReceiveBuffer completes, the PipeTryReadSingle function copies the data from the shared buffer with memmove.

The block size (here A28h) is stored in the block itself, and if it is greater than 4000h, the copy is not performed. Thus, data exchange between the root OS and the guest OS goes through a shared buffer, and the hypervisor interface is used only to notify the root OS that it needs to read data from that buffer. In principle, the same could be done by sending multiple messages with winhv!HvPostMessage, but that would significantly reduce performance.
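The size check matters because the size field lives in memory the guest can write to, as the !ed experiment above showed. Below is a hypothetical user-mode model of such a bounded read. It is not vmbusr's code, and it simplifies the layout by placing a 32-bit little-endian size at the very start of the block, whereas in the dump above the size sits further into the packet.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define MAX_BLOCK_SIZE 0x4000u   /* larger blocks are not copied */

/* Copy a length-prefixed block out of a shared buffer.
   Returns the number of bytes copied, or 0 if the block is rejected. */
size_t read_block(const uint8_t *shared, size_t available, uint8_t *dst, size_t dstsz)
{
    uint32_t size;

    if (available < 4)
        return 0;
    /* Read the size once into a local so that the checks and the copy use the same value */
    size = (uint32_t)shared[0] | (uint32_t)shared[1] << 8 |
           (uint32_t)shared[2] << 16 | (uint32_t)shared[3] << 24;

    if (size > MAX_BLOCK_SIZE || size > available - 4 || size > dstsz)
        return 0;
    memmove(dst, shared + 4, size);
    return size;
}
```

Besides the 4000h limit, the sketch also bounds the size by the data actually available and by the destination buffer, since a limit alone does not guarantee either.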

Using the Hypervisor Interception Interface

Let's configure the hypervisor to send a notification to the root OS whenever the cpuid instruction is executed with the parameter 0x11114444 in one of the guest OSs. For this purpose, Hyper-V provides the HvInstallIntercept hypercall. The hyperv4 driver implements the SetupIntercept function, which enumerates the identifiers of all active guest partitions and calls WinHvInstallIntercept for each of them.

```c
int SetupIntercept()
{
    HV_INTERCEPT_DESCRIPTOR Descriptor;
    HV_INTERCEPT_PARAMETERS Parameters = {0};
    HV_STATUS hvStatus = 0;
    HV_PARTITION_ID PartID = 0x0, NextPartID = 0;

    // If 0x11114444 is passed in RAX as the parameter of the CPUID instruction,
    // the hypervisor intercepts it and sends a message to the parent partition
    // so that the parent can process the result
    DbgPrintString("SetupInterception was called");
    Parameters.CpuidIndex = 0x11114444;
    Descriptor.Type = HvInterceptTypeX64Cpuid;
    Descriptor.Parameters = Parameters;

    // ID of the current (parent) partition
    hvStatus = WinHvGetPartitionId(&PartID);

    // Walk all child partitions and install the intercept in each of them
    do {
        hvStatus = WinHvGetNextChildPartition(PartID, NextPartID, &NextPartID);
        if (NextPartID != 0) {
            DbgLog("Child partition id", NextPartID);
            hvStatus = WinHvInstallIntercept(NextPartID,
                                             HV_INTERCEPT_ACCESS_MASK_EXECUTE, &Descriptor);
            DbgLog("hvstatus of WinHvInstallIntercept = ", hvStatus);
        }
    } while ((NextPartID != HV_PARTITION_ID_INVALID) && (hvStatus == 0));

    return 0;
}
```
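The do/while loop follows the iteration protocol of the "get next child partition" call: pass the previous child ID (0 to start) and get the next one back, until the invalid partition ID is returned. In the sketch below, the hypervisor call is replaced by a hypothetical mock over a fixed array so the loop can run in user mode; the value 0 for the invalid ID follows the TLFS definition as we understand it.

```c
#include <stddef.h>
#include <stdint.h>

typedef uint64_t partition_id;
#define PARTITION_ID_INVALID ((partition_id)0)   /* assumed TLFS value */

/* Hypothetical stand-in for WinHvGetNextChildPartition over a fixed child list */
static const partition_id mock_children[] = {3, 5, 9};

int mock_get_next_child(partition_id parent, partition_id previous, partition_id *next)
{
    size_t count = sizeof mock_children / sizeof mock_children[0];
    size_t i = 0;

    (void)parent;
    if (previous != PARTITION_ID_INVALID) {
        while (i < count && mock_children[i] != previous)
            i++;
        i++;                                  /* step past the previous child */
    }
    *next = i < count ? mock_children[i] : PARTITION_ID_INVALID;
    return 0;                                 /* success status */
}

/* Same loop shape as SetupIntercept: collect every child ID into `out` */
size_t enumerate_children(partition_id parent, partition_id *out, size_t max)
{
    partition_id next = PARTITION_ID_INVALID;
    size_t n = 0;
    int status;

    do {
        status = mock_get_next_child(parent, next, &next);
        if (next != PARTITION_ID_INVALID && n < max)
            out[n++] = next;     /* SetupIntercept installs the intercept at this point */
    } while (next != PARTITION_ID_INVALID && status == 0);
    return n;
}
```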

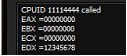

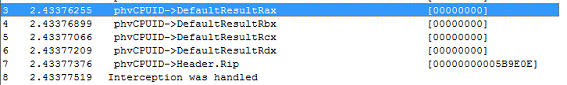

We also modify the PrintCpuidInterceptMessage function: if the guest OS executes CPUID with 0x11114444 in EAX (or in RAX, if the code executing CPUID runs in long mode), the value 0x12345678 is written into the DefaultResultRdx field of the HV_X64_CPUID_INTERCEPT_MESSAGE structure located in slot zero of the SIM:

```c
void PrintCpuidInterceptMessage(PHV_MESSAGE hvMessage)
{
    PHV_X64_CPUID_INTERCEPT_MESSAGE phvCPUID =
        (PHV_X64_CPUID_INTERCEPT_MESSAGE)hvMessage->Payload;

    DbgLog(" phvCPUID->DefaultResultRax", phvCPUID->DefaultResultRax);
    DbgLog(" phvCPUID->DefaultResultRbx", phvCPUID->DefaultResultRbx);
    DbgLog(" phvCPUID->DefaultResultRcx", phvCPUID->DefaultResultRcx);
    DbgLog(" phvCPUID->DefaultResultRdx", phvCPUID->DefaultResultRdx);

    // The guest executed CPUID with our marker leaf: substitute the RDX result
    if (phvCPUID->Rax == 0x11114444) {
        phvCPUID->DefaultResultRdx = 0x12345678;
        DbgLog16(" phvCPUID->Header.Rip", phvCPUID->Header.Rip);
        DbgPrintString(" Interception was handled");
    }
}
```

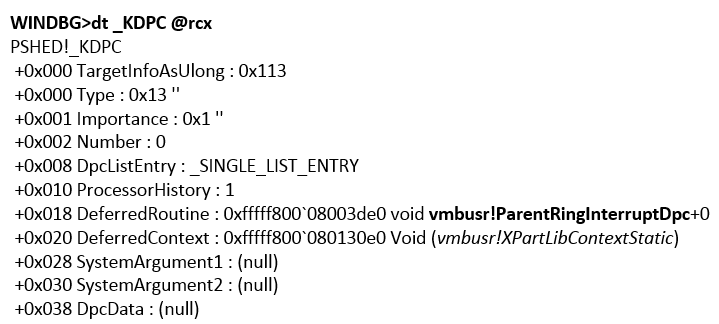

To test this, run a utility in the guest OS that executes CPUID with EAX equal to 0x11114444. Before the intercept is installed, the utility displays the result shown in Fig. 20.

Fig. 20. The result of the CPUID instruction on a regular guest OS

After the intercept is activated, the result is as follows (see Fig. 21).

Fig. 21. The result of the CPUID instruction after setting the interception

At the same time, the root OS displays a message (see Fig. 22).

Fig. 22. Debug output of hypervisor message processing with interception set
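The article does not list the guest-side test utility. Below is a minimal sketch of one, assuming a GCC or Clang build for x86 (it uses the __cpuid macro from the compiler's <cpuid.h>; an MSVC build would use __cpuid from <intrin.h> instead). The function names are hypothetical; with the intercept from SetupIntercept active and the modified PrintCpuidInterceptMessage in place, EDX is expected to contain 0x12345678.

```c
#include <stdint.h>

#if (defined(__GNUC__) || defined(__clang__)) && (defined(__x86_64__) || defined(__i386__))
#include <cpuid.h>
#define HAVE_X86_CPUID 1
#else
#define HAVE_X86_CPUID 0
#endif

#define PROBE_LEAF   0x11114444u   /* EAX value the intercept is installed for */
#define PROBE_MARKER 0x12345678u   /* value the root-side handler puts into RDX */

/* Execute CPUID with EAX = leaf and store EAX, EBX, ECX, EDX in regs.
   Returns 0 when this build has no x86 CPUID support. */
int run_cpuid(uint32_t leaf, uint32_t regs[4])
{
#if HAVE_X86_CPUID
    unsigned int a, b, c, d;
    __cpuid(leaf, a, b, c, d);
    regs[0] = a; regs[1] = b; regs[2] = c; regs[3] = d;
    return 1;
#else
    (void)leaf;
    regs[0] = regs[1] = regs[2] = regs[3] = 0;
    return 0;
#endif
}

/* 1 if EDX carries the marker written by the root-side handler */
int intercept_marker_seen(const uint32_t regs[4])
{
    return regs[3] == PROBE_MARKER;
}
```

Running run_cpuid(PROBE_LEAF, regs) in the guest before and after SetupIntercept is called should show the difference between the results in Fig. 20 and Fig. 21.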

Note right away that this trick works only if the root OS has not already set intercepts for the same conditions. Otherwise, after the hyperv4 driver replaces the value, control passes to the original WinHvOnInterrupt, which calls a handler from the vid.sys driver (this handler is the fourth parameter of the winhvr!WinHvCreatePartition function, which the root OS calls to create a child partition when a virtual machine is turned on), and that handler can change the result. In our case, of course, no such handler was installed, so the hypervisor analyzed the data in SIM slot zero and corrected the result of the CPUID instruction accordingly.

Finally

Even though after reading this your brain has probably twisted itself into the pose of a river scorpion (and if you read it at all, you have the respect of our entire editorial staff)... anyway, I digress. This article turned out to be more of an overview, demonstrating how some functions and components of the Microsoft virtualization system work. Still, I hope the examples will help you better understand how these components operate and allow a deeper analysis of their security.

Posted by gerhart

First published in Hacker Magazine 12/2014.
