Comparison of data processing speed (ETS, DETS, Memcached, MongoDB) in Erlang (a spherical cow in a vacuum)

Good day, dear %habrauser%!

Below is a small set of tests with statistics on inserting records into, and reading them from, a table or collection. Obviously, no test will show the real picture you will see in your own application. Still, out of sheer curiosity, I decided to take some measurements. The results and the conclusions I came to are under the cut.

What we tested on:

OS name: Windows 7 Professional

OS architecture: AMD64

Processor name: Intel Core(TM) i5-3340 CPU @ 3.10GHz

Processor manufacturer: GenuineIntel

Processor architecture: AMD64 / EM64T

Physical cores: 2

Logical processors: 2

Total RAM: 8139 MB (7.95 GB)

For MongoDB, I used the latest version of the official driver.

For Memcached, I used the client that could handle the load. I tried 10 different clients. Some simply would not start, and others tried to work asynchronously and crashed.

MongoDB version: 2.6.3

Memcached version: 1.4.4-14-g9c660c0 (the last version that could be built for Windows)

Erlang version: ERTS 5.10.4 / OTP R16B03

A small remark before starting.

I expect a question like this: “What does MongoDB have to do with it? How can you compare in-memory storage with on-disk storage?!” It is a fair question. The answer is simple: I was curious. So I compared storage systems that are popular and have Erlang drivers.

Let me remind you that all of these tests are a spherical cow in a vacuum: they are idealized and detached from any real workload.

So let's get started.

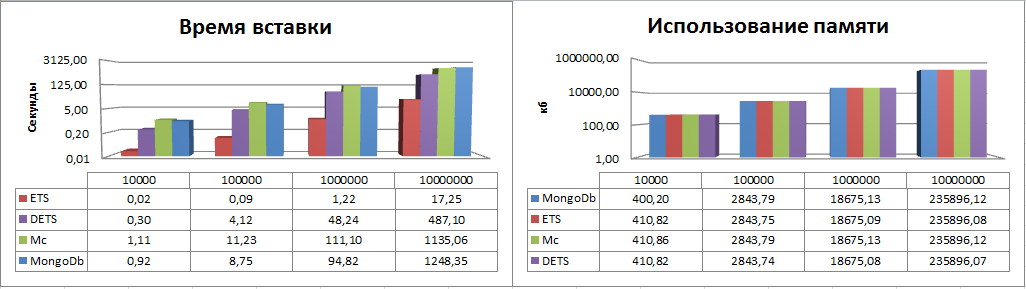

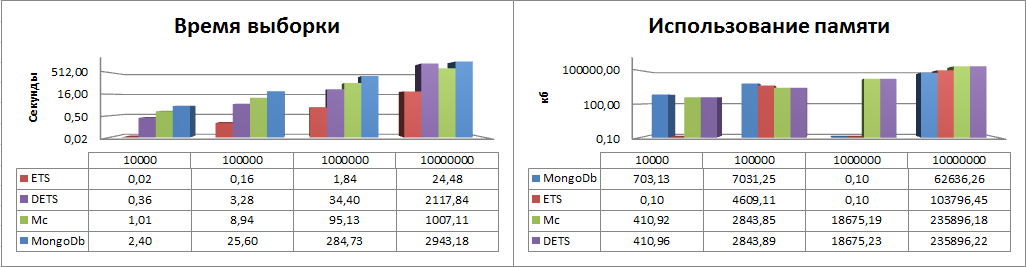

The first thing I checked was insert speed and the memory it consumes. The result was as expected:

Native ETS tables left everyone else far behind. They beat their closest competitor, DETS, by more than 10x. That is logical, since ETS works in memory and DETS works on disk. Memcached also works in memory, but it fell behind by a factor of almost 70, apparently because of the driver (results I found on Google show roughly twice that speed). MongoDB turned out to be a little faster than Memcached.

While inserting 10k records, mongod consumed 2.7 GB of RAM, ETS took 2.87 GB, and Memcached took 1.6 GB.
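No benchmark code is shown here. Below is a minimal sketch of how the ETS and DETS insert measurements could be reproduced. It assumes small `{Key, Value}` records, and the module name `insert_bench`, its functions and the file name are all hypothetical. It times inserts with `timer:tc/1`, which reports microseconds, and turns the result into records per second. It also reads ETS memory with `ets:info(Tab, memory)`, which is reported in machine words, so the value is multiplied by the word size to get bytes.

```erlang
-module(insert_bench).
-export([run/1, ets_insert/1, dets_insert/2, per_second/2]).

%% Insert records {N, <<"value">>}, {N-1, ...}, ..., {1, ...} via Insert.
fill(_Insert, 0) ->
    ok;
fill(Insert, N) ->
    Insert({N, <<"value">>}),
    fill(Insert, N - 1).

%% Records per second from a record count and elapsed microseconds
%% (the unit timer:tc/1 reports).
per_second(_N, 0) ->
    infinity;
per_second(N, Micros) ->
    N * 1000000 div Micros.

%% Time N inserts into a fresh ETS set; also report its size in bytes
%% (ets:info/2 gives memory in words, so multiply by the word size).
ets_insert(N) ->
    Tab = ets:new(bench, [set, public]),
    {Micros, ok} = timer:tc(fun() -> fill(fun(R) -> ets:insert(Tab, R) end, N) end),
    Bytes = ets:info(Tab, memory) * erlang:system_info(wordsize),
    true = ets:delete(Tab),
    {Micros, Bytes}.

%% Time N inserts into a fresh DETS set stored in File.
dets_insert(N, File) ->
    _ = file:delete(File),                      % start from an empty file
    {ok, Tab} = dets:open_file(bench_dets, [{file, File}, {type, set}]),
    {Micros, ok} = timer:tc(fun() -> fill(fun(R) -> ok = dets:insert(Tab, R) end, N) end),
    ok = dets:close(Tab),
    ok = file:delete(File),
    Micros.

run(N) ->
    {EtsUs, EtsBytes} = ets_insert(N),
    DetsUs = dets_insert(N, "insert_bench.dets"),
    io:format("ETS:  ~p us, ~p records/s, ~p bytes~n",
              [EtsUs, per_second(N, EtsUs), EtsBytes]),
    io:format("DETS: ~p us, ~p records/s~n", [DetsUs, per_second(N, DetsUs)]),
    ok.
```

For example, `insert_bench:run(100000).` in the shell prints both timings. Memcached and MongoDB are left out because their client calls depend on the driver you choose.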

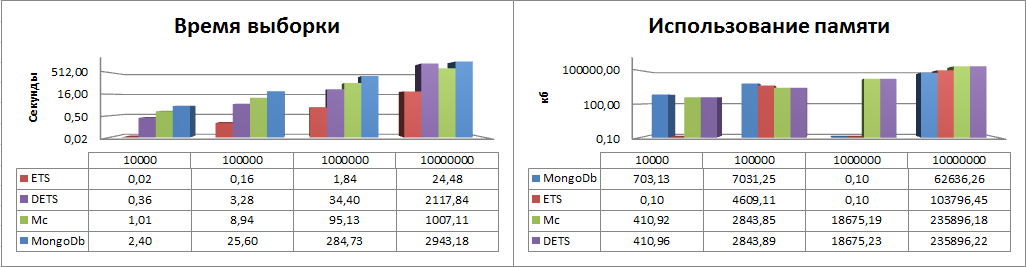

No revelations here either. ETS was the fastest, followed by DETS, then Memcached, then MongoDB. Look closely at the last result, though. With a large number of records DETS becomes inefficient, and the more records there are, the worse it gets, so here Memcached overtakes it. For MongoDB, an index was created on the field used for lookups.
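A similar hypothetical sketch for the read test (module and file names are illustrative only) fills an ETS set and a DETS set with the same records and times key lookups in each. Lookups here are by key, which is the ETS/DETS counterpart of the indexed field used for MongoDB. A search on a non-key field would instead need a full table scan.

```erlang
-module(read_bench).
-export([run/1, time_lookups/2]).

%% Look up keys N, N-1, ..., 1 and crash if any record is missing.
lookup_all(_Lookup, 0) ->
    ok;
lookup_all(Lookup, N) ->
    [{N, _}] = Lookup(N),
    lookup_all(Lookup, N - 1).

%% Elapsed microseconds for N key lookups through Lookup.
time_lookups(Lookup, N) ->
    {Micros, ok} = timer:tc(fun() -> lookup_all(Lookup, N) end),
    Micros.

%% Fill an ETS set and a DETS set with the same N records, then time
%% key lookups in each. Returns {EtsMicros, DetsMicros}.
run(N) ->
    Records = [{K, <<"value">>} || K <- lists:seq(1, N)],
    Ets = ets:new(read_bench, [set]),
    true = ets:insert(Ets, Records),
    File = "read_bench.dets",
    _ = file:delete(File),
    {ok, Dets} = dets:open_file(read_bench_dets, [{file, File}]),
    ok = dets:insert(Dets, Records),
    EtsUs = time_lookups(fun(K) -> ets:lookup(Ets, K) end, N),
    DetsUs = time_lookups(fun(K) -> dets:lookup(Dets, K) end, N),
    true = ets:delete(Ets),
    ok = dets:close(Dets),
    ok = file:delete(File),
    {EtsUs, DetsUs}.
```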

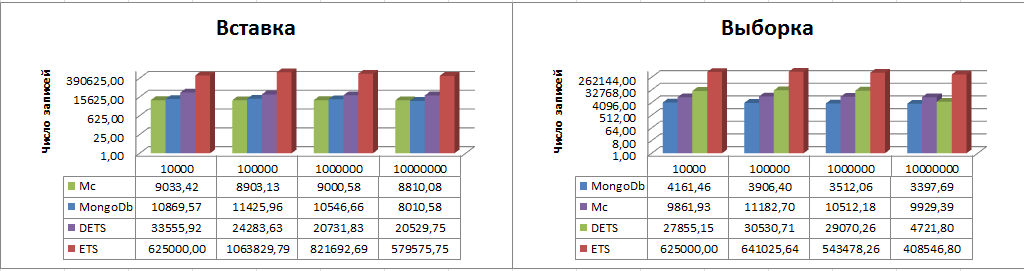

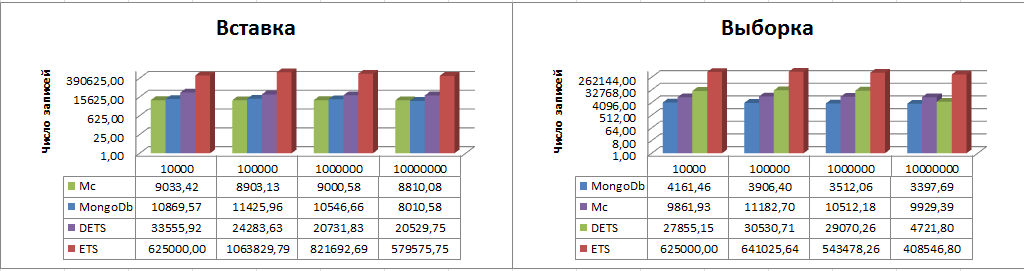

And finally, the number of records that can be processed in one second:

Some conclusions

A moment with Captain Obvious: use the built-in tools to cache data.

So what, really, stops us from using ETS tables? Conveniently, any Erlang term can be stored in them. The main drawback is that the data lives in memory. If the process that created the table crashes, the table disappears with it. That also crashes any process accessing the table at that moment, unless it handles the resulting badarg error. Yes, the supervisor will restart the process, but the data is gone for good. OK, let's use DETS: everything is stored on disk and nothing is lost. Yes, but (and what a “but” it is) re-opening a large table after a crash, when it has to be checked for damage, takes a very long time. Will your processes wait? That leaves Memcached, which, incidentally, can also crash for some reason, or MongoDB, or, or...
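Both caveats can be seen in a small sketch; the module `owner_demo`, the table names and the file name are hypothetical.

* `ets_dies_with_owner/0` creates a named table in a short-lived process. Once that process exits, the table is deleted, and any further access raises `badarg`, which crashes callers that do not catch it.
* `open_dets/1` shows the relevant `dets:open_file/2` options. With `{repair, true}` (the default), a file that was not closed properly is repaired on open, which is the slow part for large files. With `{repair, false}`, the call returns `{error, {needs_repair, File}}` instead. `{ram_file, true}` keeps the table in RAM while it is open and writes it to the file when it is closed.

```erlang
-module(owner_demo).
-export([ets_dies_with_owner/0, open_dets/1]).

%% Create a named public ETS table in a short-lived owner process,
%% let the owner exit, and report whether the table still exists.
ets_dies_with_owner() ->
    Parent = self(),
    Owner = spawn(fun() ->
                      demo_cache = ets:new(demo_cache, [named_table, public, set]),
                      true = ets:insert(demo_cache, {answer, 42}),
                      Parent ! table_ready,
                      receive stop -> ok end
                  end),
    receive table_ready -> ok end,
    [{answer, 42}] = ets:lookup(demo_cache, answer),  % readable while the owner lives
    Ref = erlang:monitor(process, Owner),
    Owner ! stop,
    receive {'DOWN', Ref, process, Owner, _} -> ok end,
    %% The owner is gone, so the table was deleted with it.
    try ets:lookup(demo_cache, answer) of
        _ -> still_there
    catch
        error:badarg -> table_gone
    end.

%% Open (or create) a DETS table kept in RAM while open. An improperly
%% closed file is repaired on open because {repair, true} is set.
open_dets(File) ->
    dets:open_file(cache_dets, [{file, File}, {repair, true}, {ram_file, true}]).
```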

The harsh reality is that anything can crash. Memcached and MongoDB, however, can be deployed as clusters, so if one machine goes down you can always turn to another. But they are the slowest of the systems tested. So you have to choose what to sacrifice: performance or data.

Then again, maybe you don't have to choose or sacrifice anything. Here are the conclusions I came to for myself:

1) Keep the “hot” data cache in ETS: the data that is needed constantly and whose loss would hurt. The documentation also tells us that DETS tables can be opened and used in memory and written to disk when they are closed. But, as the tests show, DETS is efficient only with small amounts of data. Otherwise the time spent on disk I/O becomes too long.

2) All data that is critical to you must be kept in reliable storage. Whether it is SQL or NoSQL does not matter, as long as you can be confident the data is safe there.

3) We need a module like Memcached but built on ETS tables. It would write the required data to the “hot” cache so that it is immediately available, and pass it on to other storage synchronously or asynchronously.
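Conclusion 3 could be sketched as a small `gen_server` that owns an ETS table. Writes go into ETS first, so readers see them immediately, and are then handed to a durable store either synchronously (`gen_server:call`) or asynchronously (`gen_server:cast`). The module name `hot_cache` and the `Persist` callback are hypothetical. `Persist` is a `fun((Key, Value) -> ok)` standing in for whatever SQL/NoSQL driver you use.

```erlang
-module(hot_cache).
-behaviour(gen_server).

%% Public API (hypothetical)
-export([start_link/1, insert/3, lookup/1, stop/0]).
%% gen_server callbacks
-export([init/1, handle_call/3, handle_cast/2, handle_info/2,
         terminate/2, code_change/3]).

-define(TAB, hot_cache_tab).

%% Persist is a fun((Key, Value) -> ok) that writes one entry to the
%% durable store; it stands in for a real SQL/NoSQL client.
start_link(Persist) when is_function(Persist, 2) ->
    gen_server:start_link({local, ?MODULE}, ?MODULE, Persist, []).

%% Write to ETS first so the value is visible immediately, then persist:
%% sync waits for the store's reply, async returns at once.
insert(Key, Value, sync) ->
    true = ets:insert(?TAB, {Key, Value}),
    gen_server:call(?MODULE, {persist, Key, Value});
insert(Key, Value, async) ->
    true = ets:insert(?TAB, {Key, Value}),
    gen_server:cast(?MODULE, {persist, Key, Value}).

%% Reads hit ETS directly, without a round trip through the server.
lookup(Key) ->
    case ets:lookup(?TAB, Key) of
        [{Key, Value}] -> {ok, Value};
        [] -> not_found
    end.

stop() ->
    gen_server:call(?MODULE, stop).

%% The server owns the table, so the cache lives exactly as long as
%% the server does; only persisted entries survive a crash.
init(Persist) ->
    ?TAB = ets:new(?TAB, [named_table, public, set, {read_concurrency, true}]),
    {ok, Persist}.

handle_call({persist, Key, Value}, _From, Persist) ->
    {reply, Persist(Key, Value), Persist};
handle_call(stop, _From, Persist) ->
    {stop, normal, ok, Persist}.

handle_cast({persist, Key, Value}, Persist) ->
    ok = Persist(Key, Value),
    {noreply, Persist}.

handle_info(_Msg, Persist) ->
    {noreply, Persist}.

terminate(_Reason, _Persist) ->
    ok.

code_change(_OldVsn, Persist, _Extra) ->
    {ok, Persist}.
```

Reads never pass through the server, so each one costs a single ETS lookup. The sketch does not batch, retry or reconcile writes. A `Persist` fun that crashes (or, on the async path, returns anything but `ok`) takes down the server, and the cache with it.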

The conclusions turned out to be Captain Obvious material. Then again, conclusions drawn from measurements like these are probably always roughly the same and have already been voiced somewhere before.

Thank you for your attention.
