Your Own AWS. Part 1

Hello again!

No, this is not Fez

Last time I briefly covered what the open-source market offers for building a pocket-sized cloud.

Now it's time to tell you about OpenStack itself and its implementation in the form of Mirantis OpenStack 5.1.

OpenStack

Instead of a thousand words

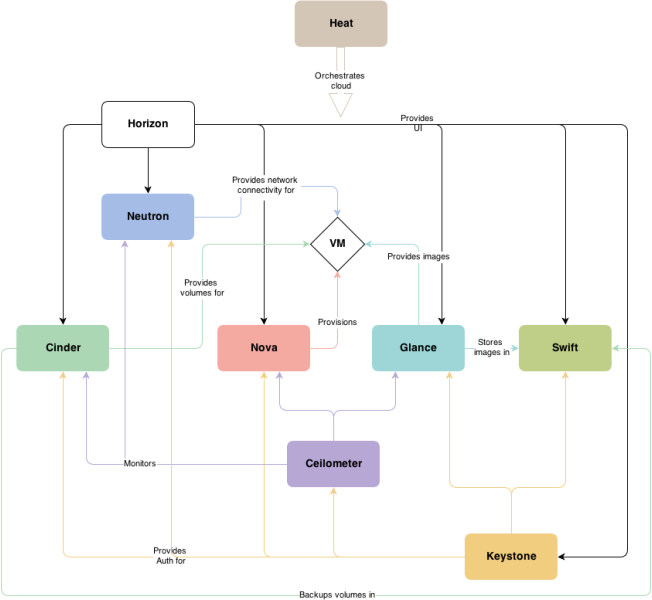

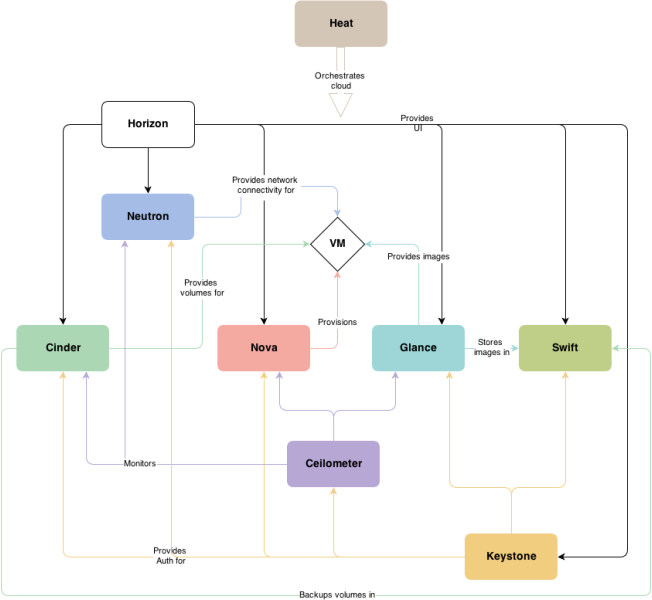

To begin with, I will briefly describe what OpenStack consists of.

- Horizon: the web interface itself. Most of the work with the cloud goes through it; only in rare cases will you need to do something from the console.
- Nova: handles the provisioning of your virtual machines. Everything related to configuring libvirt, distributing quotas, and choosing the physical host lives in Nova. This component is also responsible for one of the network options, nova-network.
- Neutron (formerly Quantum): responsible for everything related to the network: interfaces, virtual routers, DHCP, routing. In this setup it is built on Open vSwitch. If something cannot be done through the web interface, it is most likely available from the console.
- Cinder: responsible for block devices. Every request to give your virtual machine an additional disk ends up here.
- Glance: stores the source images of virtual machines. Snapshots of operating systems are stored here as well.
- Swift: object storage. It has an extensive API for working with files, with Amazon S3 compatibility available.
- Ceilometer: monitoring of everything and everyone. It collects data from all components of the cloud, then stores and analyzes it. It works through a REST API.
- Keystone: the most important component. It authenticates and authorizes all other components as well as users, so how quickly you get access to the other components depends directly on how fast it runs. It can integrate with LDAP.
- Heat: lets you automate the installation of virtual machines of any configuration. It uses HOT (Heat Orchestration Template), essentially a YAML file describing the main parameters, with a separate user data section that can hold shell scripts as well as batch files.
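To give a feel for what a HOT file contains, here is a minimal sketch that builds one as a Python dictionary and prints it as JSON. HOT is normally written in YAML; JSON is used here only because it needs no third-party library and is itself valid YAML. The resource name, image name, and flavor name are placeholders, not values from this setup.

```python
import json


def build_hot_template(image, flavor, user_data):
    """Return a minimal HOT document describing a single Nova server."""
    return {
        "heat_template_version": "2013-05-23",
        "description": "Single server with a first-boot script (illustrative)",
        "resources": {
            # "demo_server" is a hypothetical resource name
            "demo_server": {
                "type": "OS::Nova::Server",
                "properties": {
                    "image": image,
                    "flavor": flavor,
                    # the user data section: a shell script run on first boot
                    "user_data": user_data,
                },
            }
        },
    }


template = build_hot_template(
    image="ubuntu-12.04-raw",  # hypothetical image name
    flavor="m1.small",         # assumed flavor name
    user_data="#!/bin/sh\necho hello > /tmp/hello.txt\n",
)
print(json.dumps(template, indent=2))
```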

OpenStack Juno will also add a couple of new components. Ironic: work with bare metal. Essentially what Fuel does now: PXE boot, IPMI, and other goodies. Trove: database as a service (DBaaS). More details here.

Fuel

For what Fuel is, see the link at the beginning of the article. Here I will cover what gets configured and how. The first thing you see after installation is the login window, followed by the button for creating an environment. The environment describes the basic parameters of your cloud: whether you are building a fault-tolerant (HA) cloud or not, what to use as the storage backend (LVM or Ceph), which network type you want, and whether you are building on real hardware (KVM), on virtual machines (QEMU), or just want to manage an existing vSphere ESXi installation. For the latter you must have vCenter installed. As the OS you can choose either CentOS 6.5 or Ubuntu 12.04.4. My configuration is non-HA / Ceph / KVM / Neutron GRE, so some of the things described in this article may not apply to other schemes. The choice of network can raise a lot of questions, and some later settings depend on it.

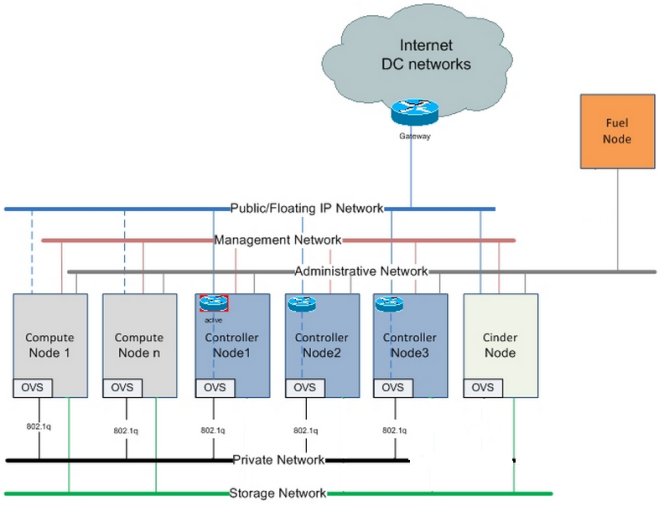

Disclaimer: OpenStack splits the network into segments. Management is the network for OpenStack service traffic; your servers also receive their IP addresses from it. Storage is the network that carries all traffic from your storage. Public is your real network: it is routed "to the world", and you hand out addresses from it to the virtual machines in the cloud. Networks can be separated either physically or with VLANs. Fuel needs one more, untagged network: DHCP + PXE live in it, and Fuel also uses it to check server availability.

Nova-network has been moved to deprecated status. It is only suitable for very home-grown use, when you do not need to share the cloud between different clients. It works only at layer 3 of the OSI model.

Neutron with VLAN requires 802.1Q support on the router. This is the only option that works with the Mellanox driver with the SR-IOV plugin and iSER. It assumes that you know the number of clients in advance, because you have to specify the final number of VLAN IDs to use. There may be problems with CentOS; to work around them, Fuel has “VLAN splinters”.
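Because the VLAN option needs the ID range fixed up front, it can help to check a planned range before installing. The sketch below assumes that each tenant network consumes one VLAN ID; the range 1000-1030 and the figure of 25 networks are made-up numbers for illustration.

```python
def vlan_range_capacity(first_id, last_id):
    """Return how many VLAN IDs an inclusive range holds, validating 802.1Q limits."""
    # 802.1Q reserves IDs 0 and 4095, so usable IDs are 1..4094
    if not (1 <= first_id <= last_id <= 4094):
        raise ValueError("VLAN range must satisfy 1 <= first <= last <= 4094")
    return last_id - first_id + 1


planned_networks = 25                        # hypothetical number of tenant networks
capacity = vlan_range_capacity(1000, 1030)   # hypothetical VLAN ID range
print(capacity, capacity >= planned_networks)
```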

Neutron with GRE is the alternative network option. Connectivity between nodes is provided by GRE tunnels. This puts additional load on the CPU, but removes the need to count clients in advance and to configure VLANs on routers. Also, unlike the previous option, it does not need a separate network interface.

Disclaimer 2: The Fuel UI currently has a restriction when setting up a Neutron network: the Public and Floating IP ranges must come from the same network segment (CIDR). In theory, this can be changed after all the cloud components are installed, through the Open vSwitch CLI. You can find more information about the difference between the network types, along with configuration examples for some switch models, here. I also recommend reading a great article about VxLAN.
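The same-CIDR restriction is easy to check before filling in the form. This is a small sketch using Python's standard `ipaddress` module; the addresses come from documentation ranges and are not the ones used in this setup.

```python
import ipaddress


def floating_range_in_public(public_cidr, floating_start, floating_end):
    """Check that a floating IP range lies entirely inside the public CIDR."""
    network = ipaddress.ip_network(public_cidr)
    start = ipaddress.ip_address(floating_start)
    end = ipaddress.ip_address(floating_end)
    return start <= end and start in network and end in network


# Example addresses are placeholders from documentation ranges
print(floating_range_in_public("203.0.113.0/24", "203.0.113.130", "203.0.113.254"))
print(floating_range_in_public("203.0.113.0/24", "198.51.100.10", "198.51.100.20"))
```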

Ceph

I would describe it as “network RAID”. It is a distributed storage system that provides both block devices and object storage. The system is sensitive to timing, so the clocks on all servers must be synchronized. It has very good documentation, so I will not translate it here. Fuel does the setup quite well*, so in a basic configuration you do not need to file anything down by hand.

Disk settings window in Fuel

A disk can be divided into 4 logical parts. The first three are more or less self-explanatory, but Virtual Storage puzzled me for a long time. As it turned out, it cannot be made smaller than 5 GB, but that is only half the trouble. The main problem surfaced when I tried to use qcow2 images to create virtual machines: OpenStack needed this partition to convert the image to raw and put it into Cinder. It was also required for creating snapshots with qcow2. In the end, I spent several days catching non-obvious errors in the logs and reading the documentation before switching to raw system images exclusively.
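If you go the raw-only route, images can be converted ahead of time instead of letting OpenStack do it on the fly. Below is a minimal sketch that wraps `qemu-img convert`; the file names are placeholders, and it assumes `qemu-img` is installed on the machine where you run it.

```python
import subprocess


def build_convert_command(source, target):
    """Build the qemu-img command line that converts a qcow2 image to raw."""
    return ["qemu-img", "convert", "-f", "qcow2", "-O", "raw", source, target]


def convert_to_raw(source, target):
    """Run the conversion; raises CalledProcessError if qemu-img fails."""
    subprocess.run(build_convert_command(source, target), check=True)


# Only print the command here; call convert_to_raw() on a real image file.
print(" ".join(build_convert_command("cirros.qcow2", "cirros.raw")))
```

Keep in mind that a raw image takes up its full virtual size, so the target location needs that much free space.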

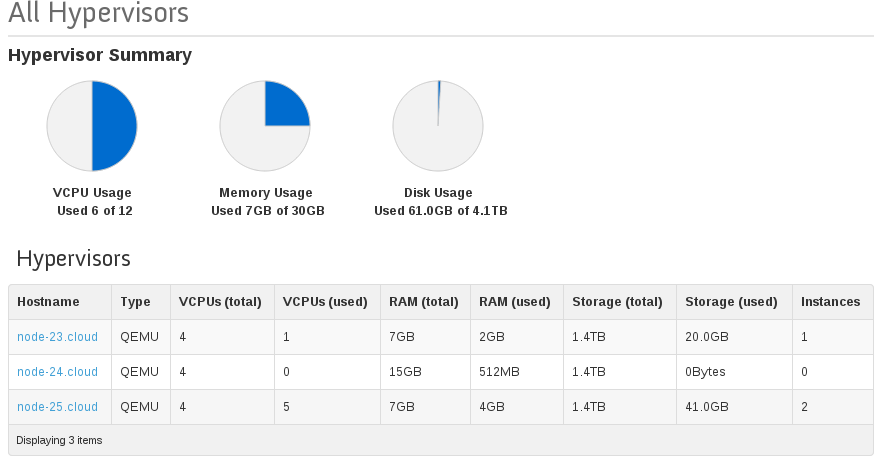

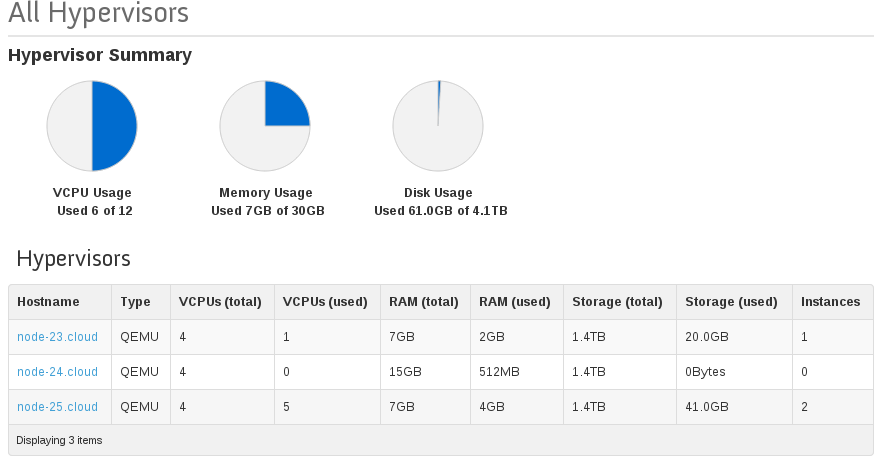

Note the (total) columns

And finally, a little about resource allocation. By default Fuel sets cpu_allocation_ratio = 8.0, which means you can allocate 8 virtual CPUs per 1 real CPU of your server. You can change this parameter in /etc/nova/nova.conf, and after restarting openstack-nova-api you should see the new value in the web interface, but due to a bug in the API this does not work yet. The same problem arises if you change ram_allocation_ratio (1 by default). I have yet to find out how applications inside virtual machines behave when memory is overcommitted twofold (ram_allocation_ratio = 2). But remembering how this worked in OpenVZ, I do not advise changing this parameter much.
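The arithmetic behind these ratios can be sketched as follows. The snippet parses a nova.conf-style fragment and multiplies the physical resources by the ratios; the 16 cores and 64 GB of RAM are made-up figures, and the fragment contains only the two options discussed here.

```python
import configparser

# Fragment in the style of /etc/nova/nova.conf with the defaults mentioned above
NOVA_CONF_SAMPLE = """
[DEFAULT]
cpu_allocation_ratio = 8.0
ram_allocation_ratio = 1.0
"""


def schedulable_capacity(conf_text, physical_cores, physical_ram_gb):
    """Return (virtual CPUs, RAM in GB) that can be handed out to instances."""
    parser = configparser.ConfigParser()
    parser.read_string(conf_text)
    cpu_ratio = parser.getfloat("DEFAULT", "cpu_allocation_ratio")
    ram_ratio = parser.getfloat("DEFAULT", "ram_allocation_ratio")
    return physical_cores * cpu_ratio, physical_ram_gb * ram_ratio


# Hypothetical compute node: 16 cores, 64 GB of RAM
vcpus, ram_gb = schedulable_capacity(NOVA_CONF_SAMPLE, 16, 64)
print(vcpus, ram_gb)
```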

In the next, and most likely final, article I will try to explain how to run several hypervisors (KVM + Docker) within the same cloud, how to automate the configuration of virtual machines with Heat and Murano, and a little about monitoring and hunting for bottlenecks in your cloud.

No, this is not Fez

. Last time I briefly talked about what there is in the open-source market for creating a pocket cloud.

Now it's time to tell you about OpenStack itself and its implementation in the form of Mirantis OpenStack 5.1.

Openstack

Instead of a thousand words

To begin with, I will briefly tell you what OpenStack consists of. So, Horizon is directly a web interface, most of the work with the cloud is through it. In rare cases, you will need to do something from the console. Nova - deals with the provisioning of your virtual machines. Everything related to setting up libvirt, distributing quotas, choosing physical hardware to host is located in nova, and this component is also responsible for one of the network options - nova-network. Neutron (formerly Quantum) - is responsible for everything related to the network - interfaces, virtual routers, dhcp, routing. It is based on Open vSwitch . If something cannot be done through the web interface, then most likely it is implemented in the console.Cinder - is responsible for working with block devices, all questions about issuing additional disks to your virtual machine are sent here. Glance - the original images of virtual machines are stored here. Snapshots of operating systems are also stored here. Swift - object storage. It has an extensive file API that is compatible with Amazon S3. Cellometr - monitoring everything and everyone. It collects data from all components of the cloud, stores, analyzes. It works through the REST API. Keystone- The last and most important component. It authorizes and authenticates all other components, as well as users, and how quickly you will gain access to other components directly depends on the speed of its operation. Able to integrate with LDAP. Heat - with it, you can automate the installation of virtual machines of any configuration. Uses HOT (Heat orchestration template) - essentially a yaml file with a description of the main parameters and a separate userdata section into which you can write both sh-scripts and bat.

OpenStack Juno will also add a couple of new components. Ironic - work with bare metal. In fact, what Fuel is doing now is PXE boot, IPMI, and other goodies. Trove- database as a service (DBaaS). More details here .

Fuel

What is it you can look at the link at the beginning of the article. Now I’ll tell you what is configured and how. The first thing you see after installation is the login window, followed by the create environment button. It describes the basic parameters of your cloud, such as whether we build a fail-safe cloud or not, what to use as a backend - LVM or Ceph, which network we want, are we building on real hardware (KVM), virtual (QEMU) or just want to manage an already working one vSphere ESXi. For the latter, you must have vCenter installed. As an OS, you can choose either CentOS 6.5 or Ubuntu 12.04.4. My configuration looks like non-HA / Ceph / KVM / Neutron GRE, respectively, some of the things described in this article may not be suitable for another scheme. A lot of questions can be caused by the choice of network, some settings in the future depend on the same parameter.

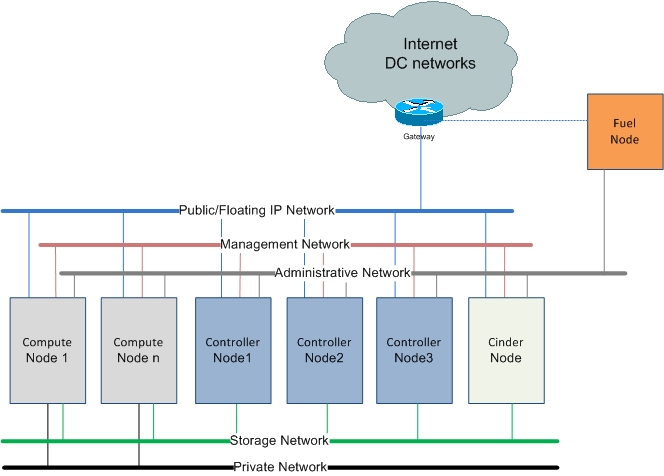

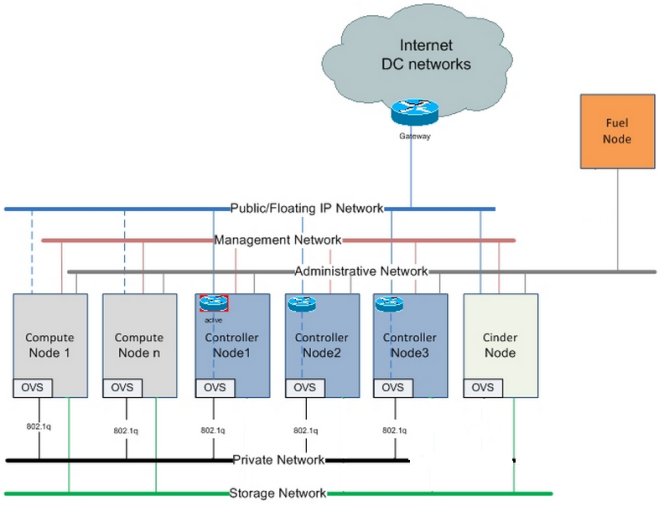

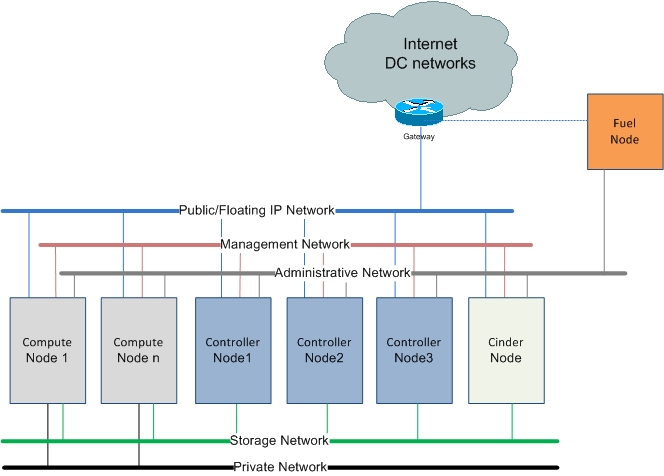

Disclaimer: openstack splits the network into segments. Management network - for OpenStack service teams to work, your servers will receive IP addresses from the same network. Storage is the network that drives all traffic from your storage. Public is your real network, it is routed "to the world" and from it you issue addresses for virtualka in the cloud. You can separate networks both physically and using VLAN. Fuel needs another, non-tagged network. DHCP + PXE will live in it and it will also check the availability of servers. Clickable Nova-network - transferred to deprecated status. Only suitable for very home use, when you do not need to share the cloud between different clients. Able to work only with layer 3 OSI models. Neutron with VLAN

- Requires 802.1Q support on the router. Just only with this option works melanox'ovy driver with SR-IOV plugin and iSER . It is assumed that you know the number of clients in advance, because it requires you to specify the final number of VLAN IDs used. There may be problems with CentOS; to resolve them, Fuel has “VLAN splinters”.

Neutron with GRE is an alternative network option. Connectivity between nodes is carried out using GRE tunnels, this creates an additional load on the processor, but eliminates the need to pre-count the number of clients and configure VLANs on routers. Also, unlike the previous version, there is no need for a separate network interface.

Disclaimer 2: Currently there is a restriction in the Fuel UI when setting up a neutron network - Public and Floating IP must be from the same network segment (CIDR). Theoretically, this can be done already after installing all the cloud components through the Open vSwitch CLI. You can find more information about the difference between networks and examples of setting up some switch models here . I also recommend reading a great article about VxLAN.

Ceph

I would describe it as “network RAID”. This is a distributed file system that provides both block devices and object storage. The system is critical to timings, so the time on all servers must be synchronized. It has very good documentation , therefore I will not deal with its translation here. Fuel is quite good * doing the setup, so in the basic configuration you don’t need to finish anything with a file.

Disk settings window in Fuel

A disk can be divided into 4 logical parts. And if with the first three everything seems to be understandable, then Virtual Storage has been causing questions for me for a long time. As it turned out, it can not be made less than 5GB, but this is still half the trouble. The main problem got out when I tried to use qcow2 images to create virtual machines - OpenStack needed this section to convert the image to raw and put it in cinder. It was also required to create snapshots with qcow2. As a result, I had to spend several days catching non-obvious errors from the logs and reading the documentation in order to switch to working exclusively with raw system images.

Hidden text

* - There are some problems with Ceph as a backend for Glance / Cinder. Some are caused by errors in Fuel's own Puppet scripts, others are quirks of OpenStack's logic. For example, Ceph only works properly with RAW images. If you use qcow2 (which, by the way, supports copy-on-write out of the box, unlike raw), OpenStack calls 'qemu-img convert' to work with the image, which puts a noticeable load on the disk subsystem as well as the CPU. Also, when working with raw there are currently problems with creating snapshots: instead of using that same CoW, Ceph has to copy the whole image, which dramatically increases space consumption. Admittedly, this was promised to be fixed in Mirantis OpenStack 6. It is also worth carefully monitoring free space on the controllers, where Fuel places the Ceph monitor. As soon as free space on the root partition drops below 5%, ceph-mon goes down and problems with access to ceph-osd begin.
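A simple guard against the free-space problem is to check the root partition periodically and raise an alarm well before the 5% mark. The sketch below uses only the standard library; the 10% warning threshold is an arbitrary choice, and how the alert gets delivered is left out.

```python
import shutil


def free_percent(total_bytes, free_bytes):
    """Percentage of the partition that is still free."""
    return 100.0 * free_bytes / total_bytes


def root_is_low(path="/", threshold_percent=5.0):
    """Return True when free space on the given path drops below the threshold."""
    usage = shutil.disk_usage(path)
    return free_percent(usage.total, usage.free) < threshold_percent


# Warn at 10% so there is time to react before the 5% mark is reached
print(root_is_low("/", threshold_percent=10.0))
```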

There is another unpleasant quirk, this time in Horizon itself: it miscalculates the space available on the storage. For example, if you have 5 servers that together hold 1 TB of Ceph storage, the dashboard will show 5 TB of available space. The reason is that Ceph reports the total storage volume from each node, and Horizon simply sums these figures.
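The double counting is easy to reproduce on paper. The following is a toy model of the behaviour described above, not Horizon's actual code: every node reports the cluster-wide total, and summing those reports multiplies it by the number of nodes.

```python
def horizon_style_total(per_node_reports_tb):
    """Sum the per-node reports, as the dashboard appears to do."""
    return sum(per_node_reports_tb)


def actual_cluster_total(per_node_reports_tb):
    """Every node reports the same cluster-wide figure, so count it once."""
    return max(per_node_reports_tb)


# 5 servers, each reporting the shared 1 TB of Ceph storage
reports = [1.0] * 5
print(horizon_style_total(reports), actual_cluster_total(reports))
```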
