Practical Guide to Unicode'ization

We finally did it! For a long time, the shameful legacy of CP1251 nagged at our developers: how could this be? The Unicode era arrived long ago, yet we were still using a single-byte encoding and scattering crutches around the code for compatibility with external systems. The reason was quite rational, though: moving a project as large as My World to Unicode is very laborious. We estimated the work at half a year and were not ready to spend that many resources on a feature that would bring no substantial benefit to the Russian-speaking audience.

But history makes its own adjustments, often very unexpected ones. It is no secret that My World, the most popular social network in this country, is very popular in Kazakhstan. We had always wanted our Kazakh users to be able to use the letters of the Kazakh alphabet from the extended Cyrillic set, which, unfortunately, have no place in CP1251. An additional incentive, which finally let us justify the long development effort, was the project's growing popularity outside our country. We realized it was time to take a step toward foreign users.

Of course, the first requirement for internationalizing the project was to start receiving, transmitting, processing, and storing data in UTF-8. For a large project this is neither simple nor quick, and along the way we had to solve several rather interesting problems, which we will try to describe here.

Database Transcoding

The first choice we faced was fairly standard: which end to start from, page rendering or data storage. We decided to start with the storages, because converting them is the longest and most laborious part and requires coordinated work by developers and administrators.

The situation was complicated by the fact that our social network, for the sake of speed, uses a large number of specialized storages. Not all of them contained text fields subject to internationalization, of course, but there were still plenty. So the first thing to do was a complete inventory of all our storages to find out which ones held text strings. Admittedly, we learned a lot.

MySQL

We started converting the storages to UTF-8 with MySQL, because changing the encoding of this database is, in general, supported natively. In practice, everything turned out to be not so simple.

Firstly, the database had to be converted without any downtime for the duration of the conversion.

Secondly, it turned out that running alter table `my_table` convert to character set utf8; on every table was irrational and, moreover, impossible. Irrational, because an index on a UTF-8 field always takes 3 * length_in_characters bytes, even if the field contains only ASCII characters. We had many such fields, including indexed ones, especially fields containing hex strings. Impossible, because the maximum index key length in MySQL is 767 bytes, and indexes (especially multi-column ones) no longer fit. In addition, we found that in some places binary data had mistakenly been stored in text fields and vice versa, so every field had to be checked carefully.
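The arithmetic behind both objections can be sketched in a few lines. Python is used here purely for illustration; the 3 bytes per character and the 767-byte limit are the figures quoted above, while the helper names and the sample column lengths are made up.

```python
MAX_KEY_BYTES = 767  # index key limit quoted in the text
BYTES_PER_CHAR = {"utf8": 3, "cp1251": 1, "ascii": 1}


def index_key_bytes(column_lengths, charset):
    """Bytes reserved in an index key for varchar columns of the given
    lengths (in characters). Length-prefix bytes are ignored for simplicity."""
    return sum(n * BYTES_PER_CHAR[charset] for n in column_lengths)


def fits(column_lengths, charset):
    return index_key_bytes(column_lengths, charset) <= MAX_KEY_BYTES


# A hypothetical two-column index over varchar(255) and varchar(64):
cols = [255, 64]
print(index_key_bytes(cols, "cp1251"), fits(cols, "cp1251"))  # 319 True
print(index_key_bytes(cols, "utf8"), fits(cols, "utf8"))      # 957 False
```

Under these assumptions a single utf8 column can be indexed in full only up to 255 characters, which is why ASCII-only fields were better kept in a one-byte character set.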

After collecting information about the tables in our databases, it became clear that the lion's share of them was most likely unused. That is how it turned out: in the end we removed about half of all the tables. To find unused tables, we used the following technique: with tcpdump we captured all queries to our databases over one day, then intersected the list of tables from this dump with the current database schema and, just in case, searched the code for the unused tables (cleaning up the code at the same time). We used tcpdump because, unlike logging all queries with MySQL's own tools, it does not require a database restart and does not affect query processing speed. Of course, deleting tables right away was scary, so at first we simply renamed the tables with a special suffix,

Then we started writing the DDL for the database conversion. We used several standard patterns:

- if the table had no text fields, then (just in case one is added later) we simply ran: alter table `my_table` default character set utf8;

- if the table had only varchar text fields requiring internationalization: alter table `my_table` convert to character set utf8;

- fields containing only ASCII characters were converted to ASCII: alter table `my_table` modify `my_column` varchar(n) character set ascii ...;

- fields requiring internationalization were converted the standard way: alter table `my_table` modify `my_column` varchar(n) character set utf8 ...;

- but for some fields with a unique index we had to resort to a crutch, because the utf8_general_ci collation (unlike cp1251_general_ci) treats the letters е and ё as equal: alter table `my_table` modify `my_column` varchar(n) character set utf8 collate utf8_bin ...;

- for indexed fields that no longer fit into the index after conversion we needed another crutch: alter table `my_table` drop index `my_index`, modify `my_column` varchar(n) character set utf8 ..., add index `my_index` (`my_column`(m)); (where m < n, and the index, as a rule, spans several fields);

- text fields containing binary data were converted to binary and varbinary;

- binary fields containing text strings in CP1251 were converted in two steps: alter table `my_table` modify `my_column` varchar(n) character set cp1251; alter table `my_table` modify `my_column` varchar(n) character set utf8; The first query is needed so that MySQL understands that the data is encoded in cp1251, and the second converts it to utf8;

- text blobs had to be handled separately, because with convert to character set utf8 MySQL widens the column to the smallest type that can hold a maximum-length text in which every character is three bytes long. That is, text is automatically widened to mediumtext. In a number of cases this was not quite what we wanted, so we set the type explicitly: alter table `my_table` modify `my_column` text character set utf8;

- and, of course, the final chord, for the future: alter database `my_database` default character set utf8;
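With dozens of schemas, per-column patterns like these lend themselves to generation from an inventory. The following is only a sketch of that idea in Python, not the tooling we actually used: the `kind` labels and the function name are hypothetical, and the remaining column attributes (the "..." in the statements above, such as nullability and defaults) are deliberately left out and would have to be repeated in real DDL.

```python
def ddl_for_column(table, column, length, kind):
    """Return the ALTER statements for one varchar column.

    kind is a hypothetical label assigned during the manual field review:
      "ascii"        - hex strings and other ASCII-only data
      "text"         - text that needs internationalization
      "unique_text"  - text under a unique index (е and ё must stay distinct)
      "cp1251_bytes" - binary column that actually holds CP1251 text
    """
    col = f"alter table `{table}` modify `{column}` varchar({length})"
    if kind == "ascii":
        return [f"{col} character set ascii;"]
    if kind == "text":
        return [f"{col} character set utf8;"]
    if kind == "unique_text":
        return [f"{col} character set utf8 collate utf8_bin;"]
    if kind == "cp1251_bytes":
        # Two steps: first declare the bytes as cp1251, then convert to utf8.
        return [f"{col} character set cp1251;", f"{col} character set utf8;"]
    raise ValueError(f"unknown kind: {kind}")


for stmt in ddl_for_column("my_table", "my_column", 64, "cp1251_bytes"):
    print(stmt)
```

Raising on an unknown label is intentional in this sketch: a column that has not been reviewed should stop the run rather than fall through to a default conversion.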

We solved the task of converting the database to UTF-8 without downtime in our usual way: through a replica. It was not without peculiarities, though. Firstly, for strings to be converted automatically while a replica catches up with the master, replication must be in statement mode; in row mode no conversion is performed. Secondly, to switch to statement replication, you also need to change the transaction isolation level from the default repeatable read to read committed.

The conversion itself went as follows:

- We switch the master to statement replication mode.

- We bring up a temporary copy of the database and run the conversion on it.

- When the conversion finishes, we make the copy a replica of the main database; the data catches up, and strings are converted on the fly.

- For each database replica:

  - move the load from the replica to the temporary UTF-8 replica;

  - re-copy the replica from scratch from the temporary database and enable replication from it;

  - return the load to the replica.

- We make the temporary database the master and redirect requests from the old master to the temporary one using NAT.

- We re-copy the old master from the temporary database and let replication catch up.

- We switch the master back, remove the NAT, and return replication to mixed mode.

- We shut down the temporary database.

As a result, over three months of painstaking work we managed to convert all 98 masters (plus a bunch of replicas) with fifteen different database schemas (one especially large 750 GB database took almost two weeks of machine time to convert). The admins cried and did not sleep at night (and sometimes did not let the developers sleep either), but the process moved forward, though not as fast as we wanted. Initially we wanted to do things properly: we performed the conversion according to the scheme above and used machines with SSDs to speed it up. But at the end of the third month, realizing that at this rate two more months would be needed, we lost patience, moved the entire load from the replicas to the masters, and began converting directly on the old replicas. Fortunately, no emergencies occurred on the masters during this time, and in a week (basically,

Besides converting the databases themselves, we also had to support UTF-8 in the code and ensure a smooth, unnoticeable transition. With MySQL, however, this is simple. It has one encoding in which it stores data and a separate encoding in which it returns data to the client. Historically, our servers were configured with character_set_* = cp1251. We did not change character_set_client, character_set_connection, and character_set_results, so as not to break old clients, and left them at cp1251. The rest were changed to utf8. As a result, old clients working in cp1251 still receive data in cp1251 regardless of whether the database has been converted, while new clients working in UTF-8 run set names utf8; immediately after connecting and start enjoying all the benefits of this encoding.

Tarantool

We probably no longer need to explain what Tarantool is. This brainchild of My World has already gained a fair amount of fame and has grown into a good open source project.

Over the years of use we accumulated a huge amount of data in it, and when it turned out that we had 400 Tarantool instances, it was, frankly, scary to think how long the conversion would take. Fortunately for us, only 60 of them turned out to contain text fields (mainly user profiles).

Admittedly, transcoding Tarantool turned out to be a really interesting task, and the solution was quite elegant, though of course not quite out of the box. A caveat first: after Tarantool began to develop as an open source project, it turned out that the needs of the community and our own needs diverged somewhat. The community needs an understandable product, a key-value storage that works out of the box, whereas we need a product with a modular architecture (a framework for writing storages), additional highly specialized features, and performance optimizations. So in some places we kept using Tarantool, and in others we started using its fork, octopus, which is developed by the author of the very first Tarantool. This greatly simplified the conversion. In octopus you can write replication filters in Lua, that is, send the replica not the original commands from the master's snapshot and xlog but commands modified by a Lua function. This feature was added long ago to make it possible to bring up partial replicas that contain not all of the master's data but only certain tuple fields. That gave us the idea that texts could be transcoded on the fly during replication in the same way.

Still, octopus had to be extended slightly for this task. Although the feeder (the master-side process that feeds xlogs to a replica) had long been implemented as a separate octopus module, mod_feeder, it still could not be run on its own without a storage (in this case the key-value storage implemented by the mod_box module), and we needed that so that changes to the replication mechanism would not require restarting the master. And, of course, we had to write the replication filters in Lua, which for each namespace converted the necessary fields from CP1251 to UTF-8.
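The core of such a filter is small. The real filters were written in Lua against the octopus API, which is not reproduced here; the sketch below only illustrates the per-namespace field recoding in Python, and the namespace numbers, field indexes, and function name are all invented for the example.

```python
# Hypothetical map: namespace number -> indexes of tuple fields that hold text.
TEXT_FIELDS = {0: (1, 2), 3: (4,)}


def recode_tuple(namespace, fields):
    """Return a copy of the tuple with its text fields recoded from CP1251
    to UTF-8.

    Fields are raw byte strings. Fields not listed for the namespace
    (numeric keys, binary blobs) are passed through untouched.
    """
    text_idx = TEXT_FIELDS.get(namespace, ())
    return tuple(
        f.decode("cp1251").encode("utf-8") if i in text_idx else f
        for i, f in enumerate(fields)
    )


row = (b"\x01\x00\x00\x00", "Привет".encode("cp1251"), b"abc")
print(recode_tuple(0, row))
```

Listing the text fields explicitly, rather than guessing from the content, is what keeps packed integers and other binary fields from being corrupted by the conversion.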

Besides converting the data in Tarantool and octopus, we had to make the code work transparently with shards that had already been converted and shards that had not, and to provide an atomic switch from CP1251 to UTF-8. So we decided to put a special transcoding proxy in front of the storages, which, depending on a flag in the client's request, converted data from the storage encoding to the client encoding. Here octopus came to our aid again, or rather its mod_colander module, which lets you write fast proxy servers, including in Lua (since octopus uses luajit and ffi, the result is really fast).
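The decision the proxy makes for every text value can be sketched as follows. This is a Python illustration rather than the mod_colander code; the function names are hypothetical, and replacing characters that CP1251 cannot represent with "?" is an assumption of the sketch, since the text does not say how such cases were handled.

```python
def recode(value: bytes, src: str, dst: str) -> bytes:
    """Recode bytes from src to dst; pass through when they already match."""
    if src == dst:
        return value
    # Assumption: characters missing from the target encoding become "?".
    return value.decode(src).encode(dst, errors="replace")


def to_client(stored: bytes, storage_enc: str, client_utf8: bool) -> bytes:
    """Response path: storage encoding -> encoding requested by the client."""
    return recode(stored, storage_enc, "utf-8" if client_utf8 else "cp1251")


def to_storage(sent: bytes, storage_enc: str, client_utf8: bool) -> bytes:
    """Request path: client encoding -> encoding of this shard."""
    return recode(sent, "utf-8" if client_utf8 else "cp1251", storage_enc)


stored = "Мир".encode("cp1251")  # shard not converted yet
print(to_client(stored, "cp1251", client_utf8=True).decode("utf-8"))
```

Because the storage encoding is a per-shard setting and the client encoding is a per-request flag, converting a shard only requires changing the proxy's configuration, not the clients.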

In total, the scheme for converting tarantool / octopus to UTF-8 looks like this:

- We set up utf8proxy on the master and the replicas. We bring it up on the port that Tarantool used to listen on and move Tarantool itself to another port. From this point on, clients can make requests in both CP1251 and UTF-8.

- On the master's server we start the converting utf8feeder and configure it to read snapshots and xlogs from the same directories where the master writes them.

- On a separate server we bring up a temporary replica of the master and configure it to replicate from the converting feeder. Data arrives in the temporary replica already encoded in UTF-8.

- We point the replicas' utf8proxy at the temporary replica, re-copy the old replica from the temporary one, then return the load.

- We firewall the port to the masters' utf8proxy (so that there are no update conflicts), reconfigure utf8proxy to the temporary replica, promote the temporary replica to temporary master, shut down the old master, and reopen the port to utf8proxy.

- We re-copy the new master from the temporary one and switch the replicas to replicate from it.

- We make the new master the master in utf8proxy and turn off the temporary master. At this point all instances contain data in UTF-8, and clients can start writing non-Cyrillic texts.

- After all clients have moved to UTF-8, we remove utf8proxy.

The entire process of transcoding tarantool / octopus took about a month. Unfortunately, it did not go without mishaps: since we were converting several shards in parallel, we managed to swap two shards when switching the masters back. By the time the problem was discovered, a significant number of data changes had already been made. We had to analyze the xlogs from both shards and restore justice.

Memcached

At first glance it seems (at least it seemed so to us at first) that caches would be the easiest to convert: either write UTF-8 data under keys with different names, or write it to other instances. In practice this does not work, for two reasons: firstly, you need twice as many caches, and secondly, at the moment the encoding is switched the caches are cold. The second problem can be fought by switching smoothly, a few servers at a time, but the first, given the large number of caches, is much harder.

So we chose to mark each key with a flag indicating the encoding in which its value is stored. Moreover, the Perl memcached client Cache::Memcached::Fast already has this feature: when saving a string to memcached, it writes into one of the key's flags (F_UTF8 = 0x4) Perl's internal string flag SVf_UTF8, which is set if the string contains multibyte characters. Thus, if the flag is set, the string is unambiguously UTF-8; if not, things are a little more complicated: the string is either text in CP1251 or binary data. Text strings we convert when necessary, of course, but binary data was a difficulty: to avoid corrupting it with unnecessary conversion, we had to split the set / get (and so on) methods into text and binary variants, find every place where binary strings are saved to or fetched from memcached, and replace those calls with the methods that do no automatic transcoding. We did the same in the C code and added support for the F_UTF8 flag there.
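The split into text and binary accessors can be illustrated with a toy in-memory cache. This is a Python sketch, not the Cache::Memcached::Fast implementation: the class and method names are hypothetical, and unlike the Perl client described above it sets the flag on every text write for simplicity.

```python
F_UTF8 = 0x4  # flag bit named in the text


class FlaggedCache:
    """Toy in-memory stand-in for memcached: key -> (flags, raw bytes)."""

    def __init__(self):
        self.store = {}

    def set_text(self, key, text):
        # New writers always store UTF-8 and say so in the flags.
        self.store[key] = (F_UTF8, text.encode("utf-8"))

    def get_text(self, key):
        flags, raw = self.store[key]
        # No flag on a text key means a legacy CP1251 value.
        return raw.decode("utf-8" if flags & F_UTF8 else "cp1251")

    def set_binary(self, key, blob):
        self.store[key] = (0, blob)

    def get_binary(self, key):
        return self.store[key][1]  # returned as is, never transcoded


cache = FlaggedCache()
cache.store["old"] = (0, "Привет".encode("cp1251"))  # written before the switch
cache.set_text("new", "Сәлем")
cache.set_binary("blob", b"\xff\xfe\x00\x01")
print(cache.get_text("old"), cache.get_text("new"), cache.get_binary("blob"))
```

Old and new values coexist in the same instances, so the caches stay warm during the switch and no second set of servers is needed.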

Other storages

Besides the standard storages mentioned above, we use a huge number of home-grown storages for the “what's new” feed, comments, message queues, dialogs, search, and more. We will not dwell on each of them; we will only note the main cases and how we handled them.

- Converting the storage without downtime is difficult, or we plan to move the data to a new storage soon, or the data is short-lived. In such cases the existing data was not converted, and new records were marked with their encoding in one of two ways: either with a flag indicating the encoding of the entire record, or with a BOM marker at the beginning of each string field that is in UTF-8.

- The storage holds not the strings themselves but hash sums of them. We use this for search. Here we simply walked the entire storage with a script that recomputed the hash sums from the original strings converted to UTF-8. During the conversion, each search request required two queries to the database: one in CP1251, the other in UTF-8.

- A proxy already sits in front of the storage, and all requests go through it. In this case we did the conversion in the proxy, much as we did for Tarantool, with the only difference that for Tarantool this is temporary functionality, whereas here it will remain for as long as the data stored in the database stays relevant.
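For the first case, the BOM-marker variant is easy to sketch. The Python below is only an illustration with hypothetical function names; it assumes that every field without the marker is legacy CP1251 text.

```python
import codecs

BOM = codecs.BOM_UTF8  # the three bytes EF BB BF


def encode_field(text: str) -> bytes:
    """New records: always UTF-8, prefixed with the marker."""
    return BOM + text.encode("utf-8")


def decode_field(raw: bytes) -> str:
    """Fields without the marker are assumed to be legacy CP1251."""
    if raw.startswith(BOM):
        return raw[len(BOM):].decode("utf-8")
    return raw.decode("cp1251")


legacy = "Привет".encode("cp1251")
fresh = encode_field("Сәлем")
print(decode_field(legacy), decode_field(fresh))
```

The marker costs three bytes per field, and a legacy CP1251 string that happens to start with the bytes EF BB BF would be misread, which is improbable in real text but worth knowing about.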

UTF-8 support in code

While our administrators were converting the databases, the developers adapted the code to work with UTF-8. Our entire code base is conventionally divided into three parts: Perl, C, and templates.

When designing the procedure for switching the project to UTF-8, one of our key requirements was the ability to switch one server at a time. Above all, this was needed so that the project could be tested in UTF-8 against production databases, first by our testers and then by a few percent of our users.

Perl and UTF-8

To adapt the Perl code for UTF-8, we had to solve several basic problems:

- convert the Cyrillic strings scattered around the code;

- take the server encoding into account when connecting to every storage and service;

- take into account that HTTP request parameters may arrive in an encoding different from the one the server is running in;

- serve content in the server encoding and use the correct templates;

- clearly separate byte strings from character strings, decoding UTF-8 (from bytes to characters) on input and encoding it on output.

We solved the task of converting the Perl code from CP1251 to UTF-8 in a somewhat non-trivial way: we started by converting modules on the fly at compile time using source filters (see perlfilter and Filter::Util::Call; Perl lets you modify source code between reading it from disk and compiling it). This was necessary to avoid the numerous merge conflicts that would have arisen if we had converted the repository in a separate branch and kept it aside throughout development and testing. During the entire testing period and the first week after launch, the sources remained in CP1251 and were converted directly on the production servers when the daemons started, if the server was configured as UTF-8. A week after launch we converted the repository and immediately merged the result into master. As a result, merge conflicts arose only for the branches that were in development at that moment.

The most tedious part was adding automatic string conversion in all the necessary places for the storages that we did not convert to UTF-8 as a whole. But even where no string conversion was needed in Perl, we still had to take into account that Perl makes a (significant) distinction between byte strings and character strings. Naturally, we wanted all text strings to become character strings automatically after being read from the database. That required analyzing all input / output to determine whether binary data or text was being transferred, and going through every call to pack / unpack in order to mark the relevant strings as character strings after unpacking (or, conversely, to turn a string into bytes before packing so that its length is counted in bytes, not characters).
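The length pitfall around pack / unpack is the same in any language that separates bytes from characters. Here is a small Python illustration, not our Perl code, using a made-up wire format (a 4-byte little-endian byte count followed by the UTF-8 bytes):

```python
import struct


def pack_string(text: str) -> bytes:
    """Length-prefixed field: 4-byte little-endian byte count, then UTF-8."""
    raw = text.encode("utf-8")  # make it a byte string before measuring
    return struct.pack("<I", len(raw)) + raw


def unpack_string(buf: bytes) -> str:
    (n,) = struct.unpack_from("<I", buf)
    return buf[4:4 + n].decode("utf-8")  # turn it back into characters


s = "Мир"
print(len(s), len(s.encode("utf-8")))  # 3 6
print(unpack_string(pack_string(s)))
```

Writing the character count (3) into the prefix instead of the byte count (6) would make the reader cut the field in the middle of a character.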

HTTP request parameters can arrive either in CP1251 or in UTF-8 (depending on the encoding in which the referring page was loaded). At first we wanted to solve this by passing an additional parameter in the request. But after analyzing how CP1251 and UTF-8 are encoded, we concluded that we can reliably tell Cyrillic in CP1251 from Cyrillic in UTF-8 by checking whether the string is valid UTF-8 (it is almost impossible to compose valid UTF-8 out of Russian letters in CP1251 alone).
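The check itself is a strict UTF-8 decode with a CP1251 fallback. The sketch below shows the idea in Python rather than in our Perl code; the function name is hypothetical, and replacing undecodable bytes in the fallback branch is an assumption made so that the function never raises.

```python
def decode_param(raw: bytes) -> str:
    """Decode a request parameter that is either UTF-8 or CP1251."""
    try:
        return raw.decode("utf-8")  # strict: fails on any invalid sequence
    except UnicodeDecodeError:
        # Assumption: bytes undefined in CP1251 are replaced, not rejected.
        return raw.decode("cp1251", errors="replace")


print(decode_param("Привет".encode("utf-8")))
print(decode_param("Привет".encode("cp1251")))
```

It works because the basic Russian letters in CP1251 occupy 0xC0 to 0xFF, which UTF-8 treats as lead bytes that must be followed by continuation bytes in the range 0x80 to 0xBF. The "almost" is real, though: the CP1251 bytes CF B8 ("Пё") are also a valid UTF-8 sequence, so very short strings with ё can be misdetected.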

In general, the way Perl handles UTF-8, although quite convenient, often feels like magic, so keep the following in mind:

- forget that strings have the SVf_UTF8 flag (it is useful only for debugging) and instead think of strings as either byte strings or character strings; forget that the internal representation of a Perl string with the SVf_UTF8 flag is UTF-8;

- forget about the functions Encode::_utf8_on(), Encode::_utf8_off(), utf8::upgrade(), utf8::downgrade(), utf8::is_utf8(), utf8::valid();

- use utf8::encode() to convert a Unicode character string to UTF-8;

- keep in mind that for Perl, UTF-8 and utf8 are slightly different encodings: in the first, only code points <= 0x10FFFF (as defined by the Unicode standard) are valid, while in the second any IV (int32 or int64, depending on the architecture) encoded with the UTF-8 algorithm is valid;

- accordingly, use utf8::decode() only for data from trusted sources (your own databases) that cannot contain invalid UTF-8, and when decoding external input always use Encode::decode('UTF-8', $_) to protect against code points that are invalid from the Unicode point of view;

- remember that it is sometimes useful to check the return value of utf8::decode() to see whether the byte string was valid utf8; for the same purpose of validating UTF-8 you can use the third parameter of Encode::decode();

- keep in mind that the upper half of the latin1 table contains the same characters as the Unicode code points with the same numbers, but they are encoded differently in UTF-8. This affects the result of calling utf8::decode() twice: for strings that contain only ASCII code points, or at least one character with a code point > 0xff, everything will be fine, but if the string contains only ASCII characters and characters from the upper half of latin1, the latin1 characters will be mangled;

- use the latest Perl. On perl 5.8.8 we ran into a wonderful bug: the combination of use locale and certain regular expressions sends the regex engine into an infinite loop on perfectly valid input. We had to restrict the scope of use locale as much as possible, to the strictly necessary set of functions: sort, cmp, lt, le, gt, ge, lc, uc, lcfirst, ucfirst.
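The double-decoding pitfall with latin1 is easier to see on a concrete string. The Python below only emulates the effect described above and is not how Perl implements utf8::decode(): the helper `decode_again` is hypothetical and models "decoding" a string that already consists of characters by viewing its code points as bytes.

```python
def decode_again(s: str) -> str:
    """Emulate UTF-8 decoding applied to an already decoded string."""
    if any(ord(ch) > 0xFF for ch in s):
        return s  # cannot be viewed as bytes at all: left alone
    try:
        return s.encode("latin-1").decode("utf-8")
    except UnicodeDecodeError:
        return s  # not valid UTF-8 when viewed as bytes: left alone


ascii_only = "plain ascii"
cyrillic = "\u041f\u0440\u0438\u0432\u0435\u0442"  # "Привет", code points > 0xFF
latin1_only = "\u00c3\u00a9"                        # "Ã©", upper half of latin1

print(decode_again(ascii_only) == ascii_only)  # True
print(decode_again(cyrillic) == cyrillic)      # True
print(decode_again(latin1_only))               # é
```

In this emulation only the third string changes: its two characters, viewed as bytes C3 A9, form a valid UTF-8 sequence and silently collapse into a single é. Keeping a strict rule of decoding exactly once, at the input boundary, avoids this class of bug.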

C and UTF-8

In our C code there were, fortunately, not as many strings as in Perl, so we took the classical route: we moved all Cyrillic strings into a separate file. This let us confine potential merge conflicts to a single file and also simplified subsequent localization. While converting the repositories to UTF-8 we found something funny: the Russian-language comments in the code were in all 4 Cyrillic encodings: cp1251, cp866, koi8-r, and iso8859-5. During conversion we had to auto-detect the encoding of each individual line.

Besides converting the repositories, the C code also needed support for basic string functions: length in characters, case conversion, truncating a string to a given length, and so on. There is a wonderful library for working with Unicode in C, libicu, but it has one inconvenience: it uses UTF-16 as its internal representation. We naturally wanted to avoid the overhead of transcoding between UTF-8 and UTF-16, so for the most frequently used simple functions we had to implement analogues that work directly on UTF-8 without transcoding.
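Functions of this kind rely on one property of UTF-8: every continuation byte has the form 10xxxxxx, so character boundaries can be found without decoding anything. The sketch below shows the idea in Python over raw bytes; it is not our C implementation, the function names are hypothetical, and it assumes the input is already valid UTF-8 and counts code points rather than user-perceived characters.

```python
def utf8_length(raw: bytes) -> int:
    """Number of characters: count the bytes that are not continuation bytes."""
    return sum(1 for b in raw if (b & 0xC0) != 0x80)


def utf8_truncate(raw: bytes, max_chars: int) -> bytes:
    """Cut to at most max_chars characters without splitting a sequence."""
    seen = 0
    for i, b in enumerate(raw):
        if (b & 0xC0) != 0x80:  # first byte of a new character
            if seen == max_chars:
                return raw[:i]
            seen += 1
    return raw


raw = "Привет, мир".encode("utf-8")
print(len(raw), utf8_length(raw))            # 20 11
print(utf8_truncate(raw, 6).decode("utf-8"))  # Привет
```

Both functions make a single pass over the bytes and allocate nothing beyond the result, which is the point of skipping the UTF-16 round trip.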

Templates, javascript and UTF-8

With templates, fortunately, everything turned out to be quite simple. In production they are deployed as rpm packages, so the logical solution was to build the transcoding into the rpm build process. We added one more package containing the templates in UTF-8, installed into a neighboring directory, and the code (both Perl and C) then simply picked the template from the appropriate directory.

With javascript it did not work out of the box. Most browsers take a script's Content-Type into account when loading it, but there are still some old ones that ignore it and go by the page encoding instead. So we added a crutch: when building the javascript package, we replaced all non-ASCII characters with escape sequences containing their code point numbers. This makes the js larger, but any browser loads it correctly.
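A build step of this kind can be sketched in a few lines. The Python below is an illustration, not our packaging script: the function name is hypothetical, and the \uXXXX form (with surrogate pairs for code points above U+FFFF) is an assumption, since the exact escape format is not specified above.

```python
import re


def escape_non_ascii(js_source: str) -> str:
    """Replace every non-ASCII character with a \\uXXXX escape."""

    def repl(match):
        cp = ord(match.group())
        if cp > 0xFFFF:
            # JavaScript strings are UTF-16: emit a surrogate pair.
            cp -= 0x10000
            return "\\u%04x\\u%04x" % (0xD800 + (cp >> 10), 0xDC00 + (cp & 0x3FF))
        return "\\u%04x" % cp

    return re.sub(r"[^\x00-\x7f]", repl, js_source)


print(escape_non_ascii('var s = "Мир";'))  # var s = "\u041c\u0438\u0440";
```

The output is pure ASCII, so it is read identically whether the browser assumes windows-1251 or UTF-8; the price is six bytes per Cyrillic letter instead of one or two.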

The result

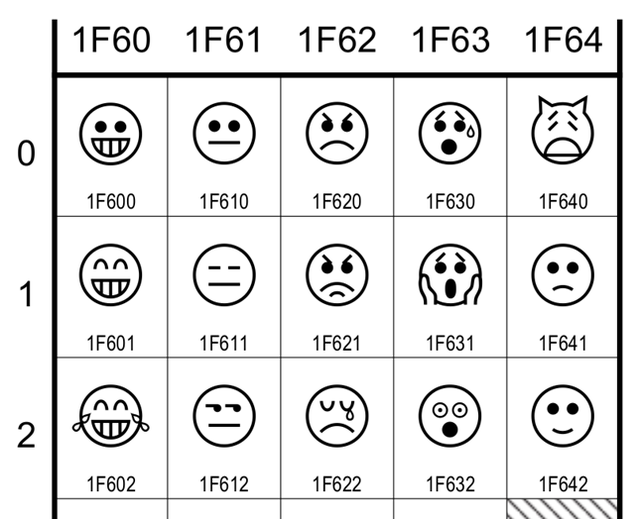

In the end, after six months, everything fell into place. The admins had just finished converting a couple of hundred databases, the developers had finished the code, and testing was complete as well. We gradually turned the knobs on the My World control panel: first all of our colleagues' accounts were switched to UTF-8, then one percent of the users, after which we began switching the backend servers and the frontends, 10 servers at a time. Visually nothing changed, neither the project's pages nor the load graphs, which was a joy to see. The only external change showing that the half year had not been spent in vain is the charset in the Content-Type header: windows-1251 became UTF-8.

Three months have passed since then. Our Russian-speaking users have already come to appreciate the ability to insert emoji and other small things into text, while Kazakh users have started corresponding in their native language and, more recently, gained the ability to use the web interface and mobile applications in it. The internationalization and localization that followed the Unicode-ization also brought plenty of interesting problems; we will try to devote a separate article to them.