Why you should never use MongoDB

- Transfer

Disclaimer from the author (author - girl): I do not develop database engines. I am creating web applications. I participate in 4-6 different projects every year, that is, I create many web applications. I see many applications with different requirements and different storage needs. I deployed most of the vaults you’ve heard of, and several that you don’t even suspect.

Several times I made the wrong choice of DBMS. This story is about one such choice - why we made such a choice, as if we knew that the choice was wrong and how we struggled with it. It all happened on an open source project called Diaspora.

Diaspora is a distributed social network with a long history. A long time ago, in 2010, four New York University students posted a video on Kickstarter asking them to donate $ 10,000 to develop a distributed alternative to Facebook. They sent the link to friends, family and hoped for the best.

But they hit the nail on the head. Another scandal just happened due to privacy on Facebook, and when the dust calmed down they received $ 200,000 investment from 6,400 people for a project that has not yet written a single line of code.

Diaspora was one of the first projects on Kickstarter, which managed to significantly exceed the goal. As a result, they were written about them in the New York Times, which turned into a scandal, because an indecent joke was written on the blackboard against the photo of the team, but no one noticed it until the photo was printed ... in the New York Times. So I found out about this project.

As a result of the success at Kickstarter, the guys quit studying and moved to San Francisco to start writing code. So they ended up in my office. At that time I worked at Pivotal Labs and one of the older brothers of the Diaspora developers also worked at this company, so Pivotal offered the guys jobs, Internet, and, of course, access to the beer fridge. I worked with official clients of the company, and in the evenings I hung out with guys and wrote the code on weekends.

It ended up staying at Pivotal for more than two years. However, by the end of the first summer they had a minimal, but working (in a sense) implementation of a distributed social network on Ruby on Rails, using MongoDB to store data.

Quite a lot of baszvorozov - let's understand.

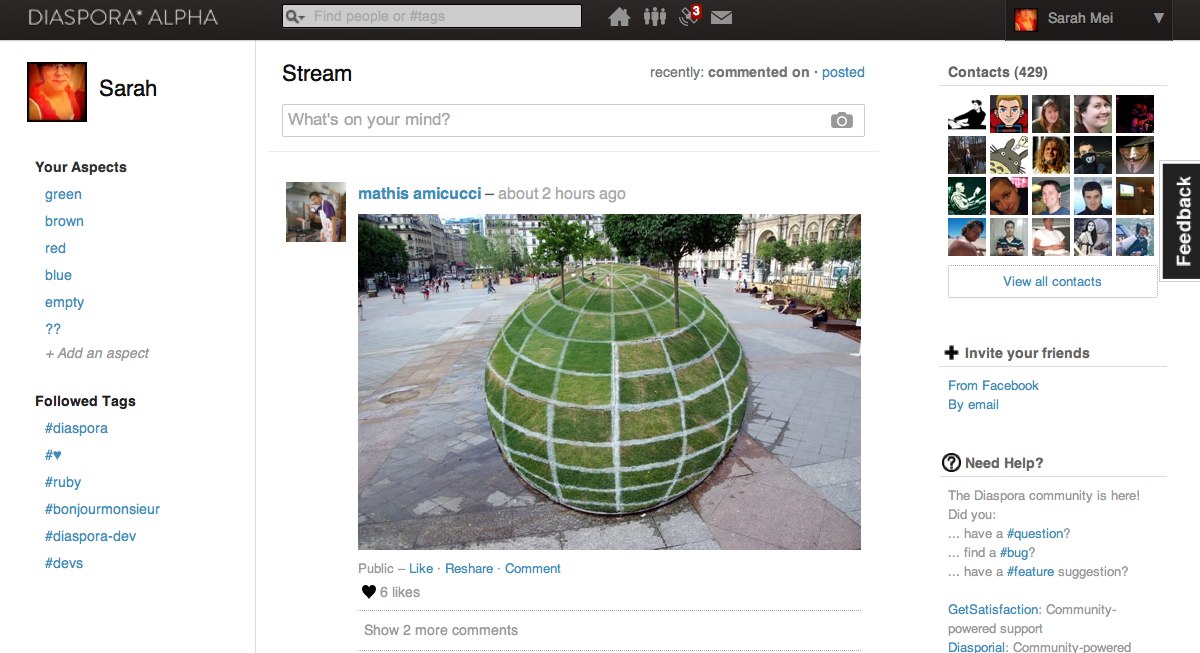

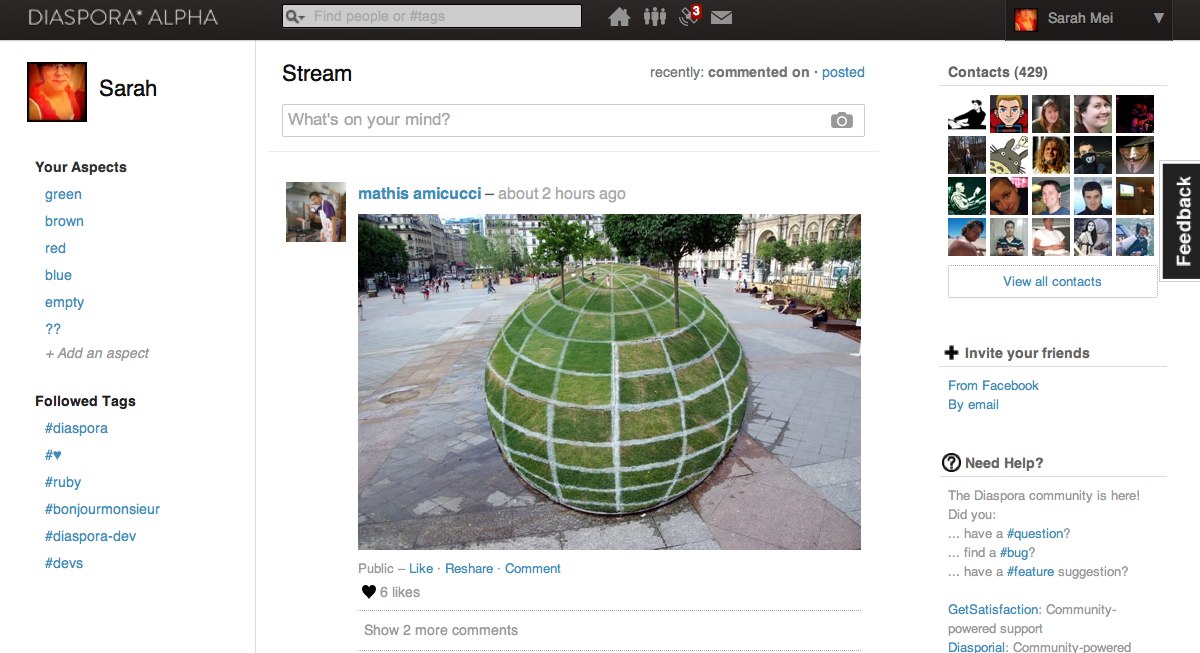

If you've seen Facebook, then you know everything you need to know about Facebook. This is a web application, it exists in a single copy and allows you to chat with people. Diaspora's interface looks almost the same as Facebook.

The message line in the middle shows the posts of all your friends, and around it there is a lot of garbage that nobody pays attention to. The main difference between Diaspora and Facebook is invisible to users, this is the "distributed" part.

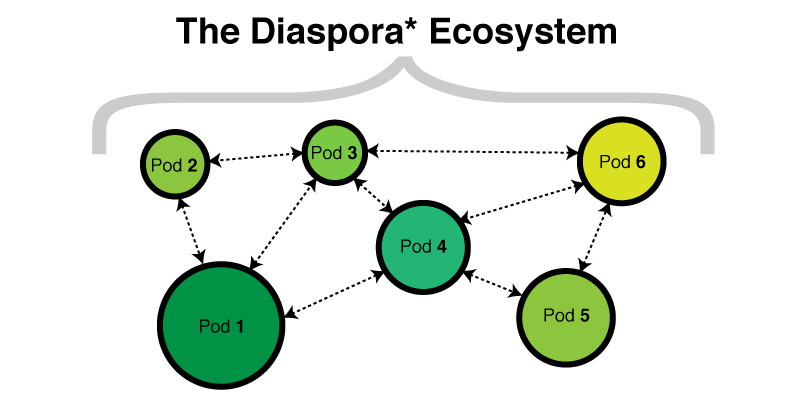

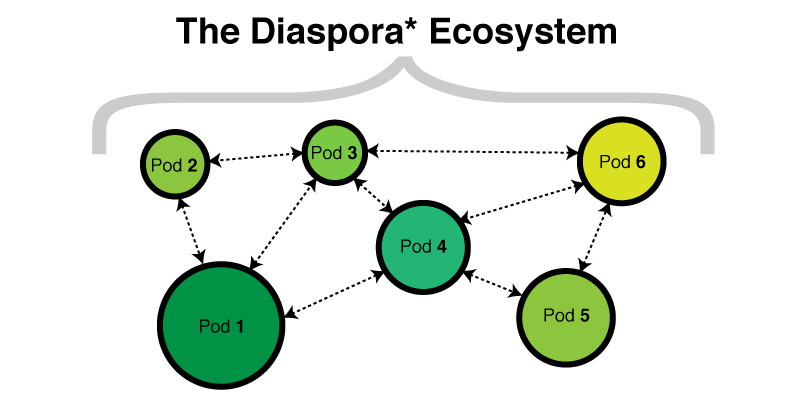

Disapora infrastructure is not located at the same web address. There are hundreds of independent instances of Diaspora. The code is open, so you can deploy your servers. Each instance is called Pod. It has its own database and its set of users. Each Pod interacts with other Pods, which also have their own base and users.

Pods communicate using an API based on the HTTP protocol (now it is fashionable to call the REST API - approx. Per. ). When you deployed your Pod, it will be pretty boring until you add friends. You can add friends as friends in your Pod, or in other Pods. When someone publishes something, this is what happens:

Comments work the same way. Each message can be commented on by users from the same Pod, as well as people from other Pods. Anyone who has permission to view the message will also see all comments. As if everything is happening in one application.

There are technical and legal advantages to this architecture. The main technical advantage is fault tolerance.

(such a fault-tolerant system must be in every office)

If one of the Pods falls, then all the others continue to work. The system causes, and even expects, network sharing. The political consequences of this are, for example, if your country does not allow access to Facebook or Twitter, your local Pod will be available to other people in your country, even if all the others are inaccessible.

The main legal advantage is server independence. Each Pod is a legally independent entity governed by the laws of the country where the Pod is located. Each Pod can also set its own conditions; on most, you do not give away the right to all content, such as on Facebook or Twitter. Diaspora is free software, both in the sense of "free" and in the sense of "independently." Most of those who launch their Pods are very concerned.

This is the system architecture, let's look at the architecture of a single Pod.

Each Pod is a Ruby on Rails application with its own base on MongoDB. In a sense, this is a “typical” Rails application — it has a user interface, a programming API, Ruby logic, and a database. But in all other senses it is not at all typical.

The API is used for mobile clients and for “federation”, that is, for the interaction between the Pods. Distribution adds several layers of code that are not available in a typical application.

And, of course, MongoDB is far from the typical choice for web applications. Most Rails applications use PostgreSQL or MySQL. (as of 2010 - approx. per. )

So here is the code. Consider what data we store.

“Social Data” is information about our network of friends, their friends and their activities. Conceptually, we think of it as a network - an undirected graph in which we are in the center and our friends are around us.

(Photos from rubyfriends.com. Thanks to Matt Rogers, Steve Klabnik, Nell Shamrell, Katrina Owen, Sam Livingston Gray, Josh Sasser, Akshay Khole, Pradyumna Dandwate and Hefzib Watharkar for contributing to # rubyfriends!)

When we store social data, we save both topology and actions.

For several years now, we have known that social data is not relational; if you store social data in a relational database, then you are doing it wrong.

But what are the alternatives? Some argue that graph databases are best suited, but I will not consider them, since they are too niche for mass projects. Others say documentaries are ideal for social data, and they are mainstream enough for real-world use. Let's look at why people think that MongoDB, rather than PostgreSQL, is much better for social data.

MongoDB is a document database. Instead of storing data in tables consisting of separate rows , as in relational databases, MongoDB saves data in collections consisting of documents . A document is a large JSON object without a predefined format and scheme.

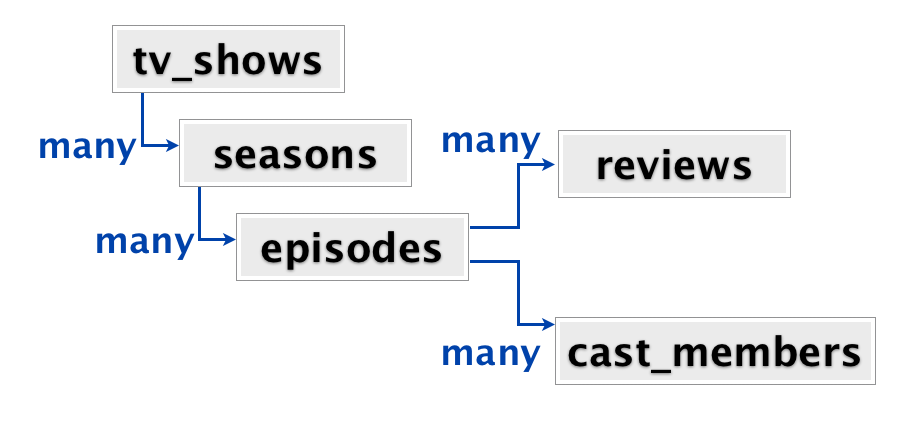

Let's look at a set of relationships that you need to model. This is very similar to the Pivotal projects for which MongoDB was used, and this is the best use case for a documentary DBMS I have ever seen.

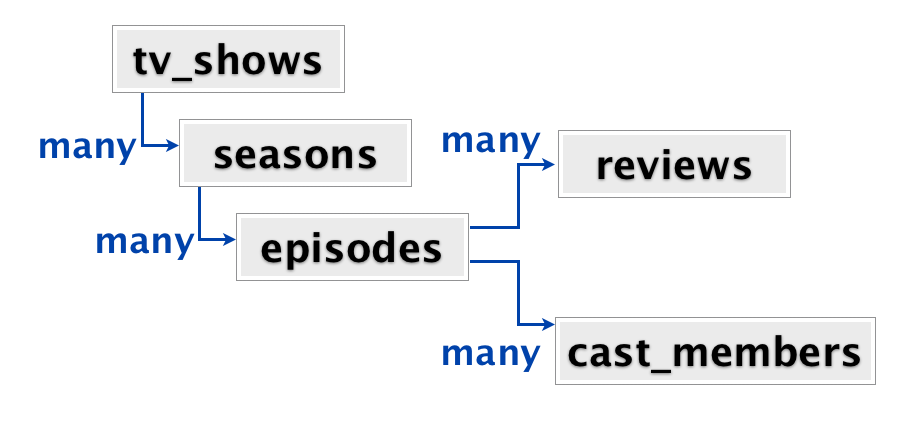

At the root, we have a set of series. Each series can have many seasons, each season has many episodes, each episode has many reviews and many actors. When a user comes to the site, usually he gets to the page of a particular series. The page displays all seasons, episodes, reviews and actors, all on one page. From the point of view of the application, when the user lands on the page we want to get all the information related to the series.

This data can be modeled in several ways. In a typical relational store, each of the rectangles will be a table. You will have a tv_shows table, a seasons table with a foreign key in tv_shows, an episodes table with a foreign key in seasons, reviews and a cast_members table with foreign keys in episodes. Thus, in order to get all the information about the series, you need to join five tables.

We could also model this data as a set of nested objects (a set of key-value pairs). A lot of information about a particular series is one big structure of nested key-value sets. Inside the series, there are many seasons, each of which is also an object (a set of key-value pairs). Within each season, an array of episodes, each of which is an object, and so on. This is how data is modeled in MongoDB. Each series is a document that contains all the information about one series.

Here is an example of a document from one series, Babylon 5:

The series has a name and an array of seasons. Each season is an object with metadata and an array of episodes. In turn, each episode has metadata and arrays of reviews and actors.

It looks like a huge fractal data structure.

(A lot of sets of sets of sets. Delicious fractals.)

All the data needed for the series is stored in one document, so you can very quickly get all the information at once, even if the document is very large. There is a series called “General Hospital”, which has already 12,000 episodes over 50+ seasons. On my laptop, PostgreSQL works for about a minute to get denormalized data for 12,000 episodes, while retrieving a document by ID in MongoDB takes a split second.

So in many ways, this application implements an ideal use case for a document base.

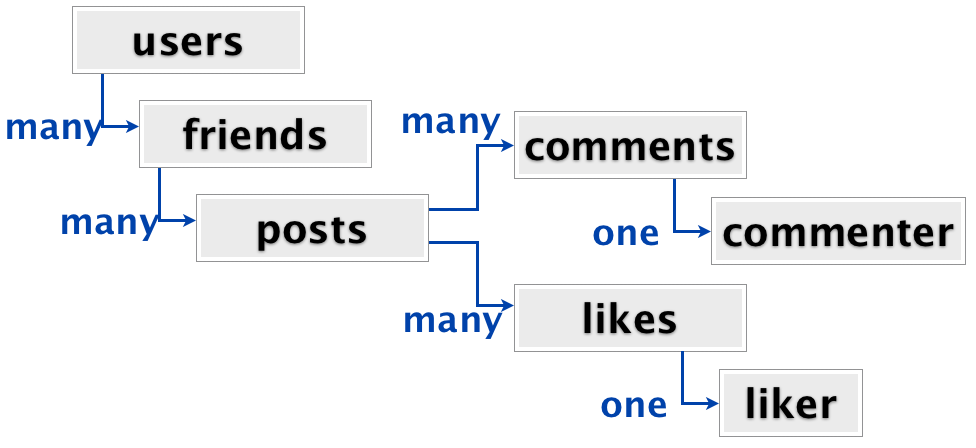

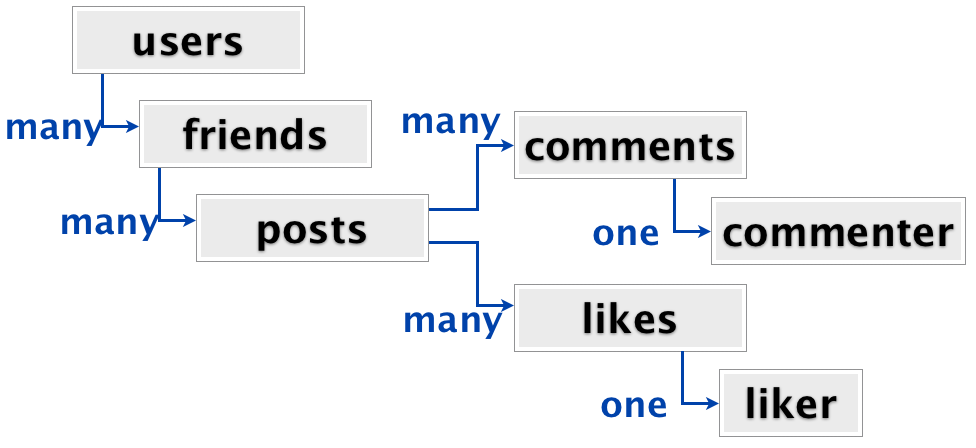

Right. When you get to a social network, there is only one important part of the page: your activity feed. The activity feed request receives all posts from your friends, sorted by date. Each post contains attachments, such as photos, likes, reposts and comments.

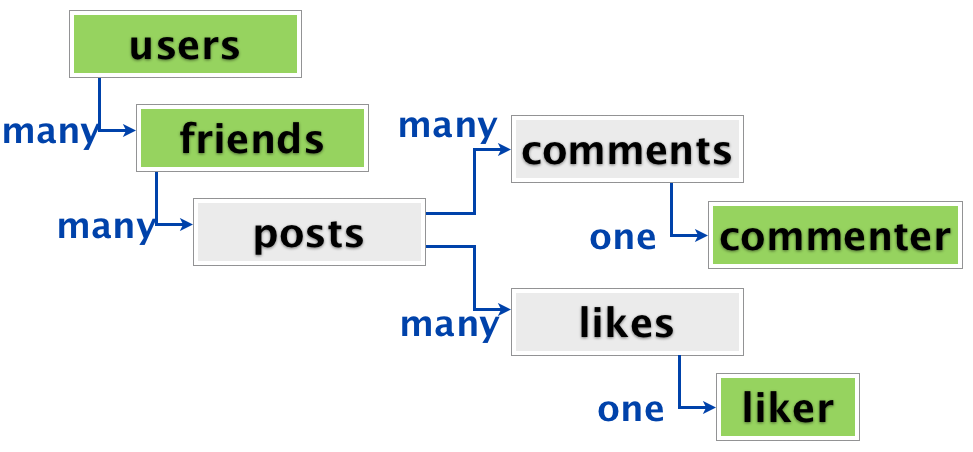

The nested structure of the activity tape looks very much like TV shows.

Users have friends, friends have posts, posts have comments and likes, each of which has a single commentator or liker. From the point of view of connections, this is not much more complicated than the structure of television series. And as in the case of series, we want to get the whole structure at once as soon as the user enters the social network. In a relational DBMS, this would be a join of seven tables to pull all the data.

Joining seven tables, uh. Suddenly saving the entire user feed as a single denormalized data structure, instead of executing joins, looks very attractive. (In PostgreSQL, such joins really work slowly cause pain - approx. Per. )

In 2010, the Diaspora team made such a decision, Esty's articles on the use of documentary DBMSs turned out to be very convincing, even though they publicly abandoned MongoDB later. In addition, at this time, the use of Cassandra on Facebook generated a lot of talk about abandoning relational DBMSs. Choosing MongoDB for Disapora was in the spirit of the time. This was not an unreasonable choice at that time, given the knowledge they had.

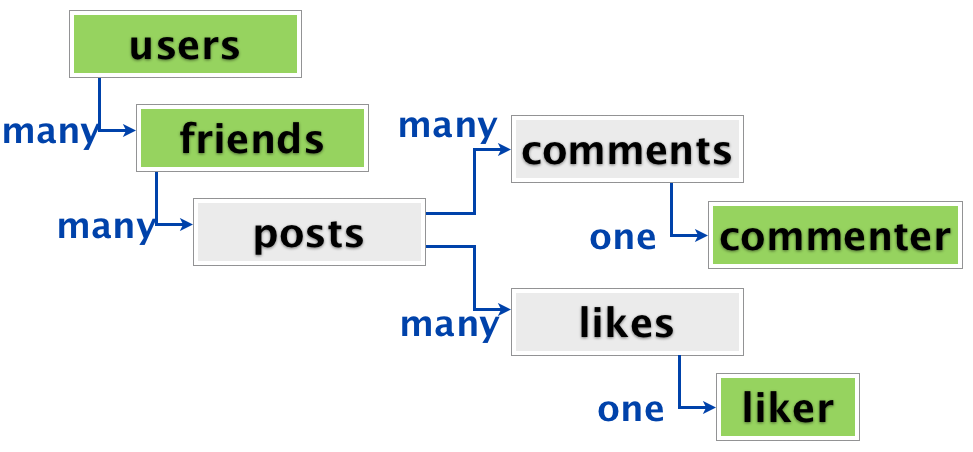

There is a very important difference between the social data of Diaspora and the Mongo-ideal data about the series, which no one noticed at first glance.

With TV shows, each rectangle in the relationship diagram has a different type. Series differ from seasons, differ from episodes, differ from reviews, differ from actors. None of them are even a subtype of another type.

But with social data, some of the rectangles in the relationship diagram are of the same type. In fact, all these green rectangles are of the same type - they are all users of the diaspora.

The user has friends, and each friend can be a user themselves. And it may not be, because it is a distributed system. (This is a whole layer of complexity that I will miss today.) In the same way, commentators and likers can also be users.

Such duplication of types significantly complicates the denormalization of the activity ribbon into a single document. This is because in different places in the document, you can refer to the same entity - in this case, the same user. The user who liked the post can also be the user who commented on other activity.

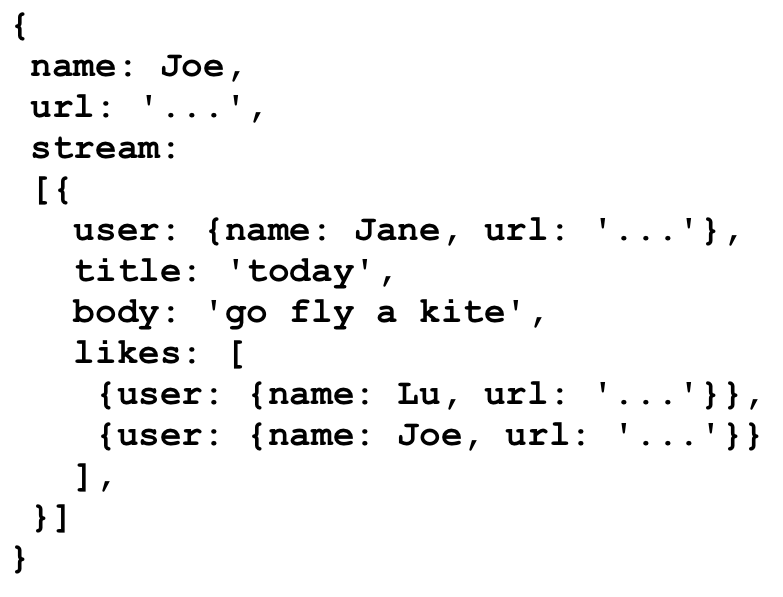

We can model this differently in MongoDB. The easiest way is to duplicate data. All information about the user is copied is saved in the like for the first post, and then a separate copy is saved in the comment for the second post. The advantage is that all the data is present wherever you need it, and you can still pull out the entire activity stream in one more document.

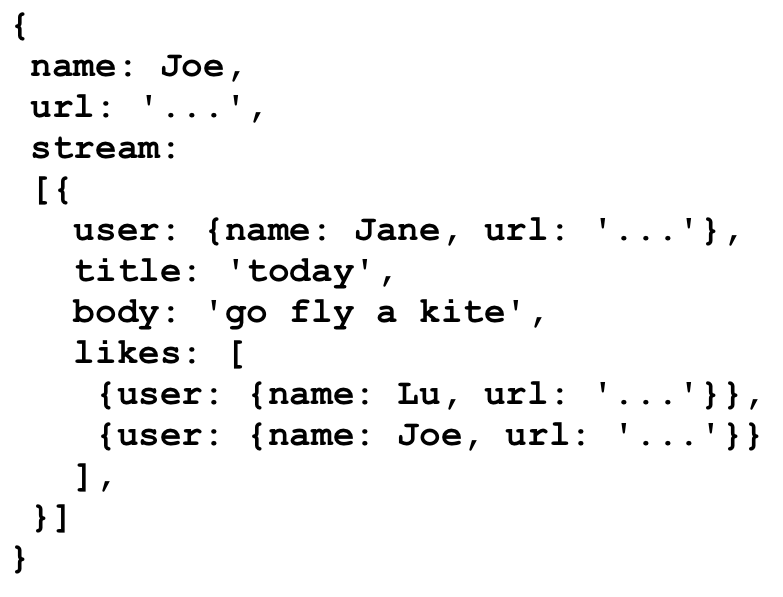

This is how denormalized activity ribbon looks like density.

All copies of user data are embedded in the document. This is Joe’s feed and has top-level copies of user data, including his name and URL. His tape contains a post by Jane. Joe liked Jane's post, so that in the varnishes to Jane's message, a separate copy of Joe's data was saved.

You can understand why this is attractive: all the data you need is already located where you need it.

You can also see why this is dangerous. Updating user data means bypassing all activity feeds to change data in all places where it is stored. This is very error prone, and often leads to data inconsistencies and cryptic errors, especially when working with deletions.

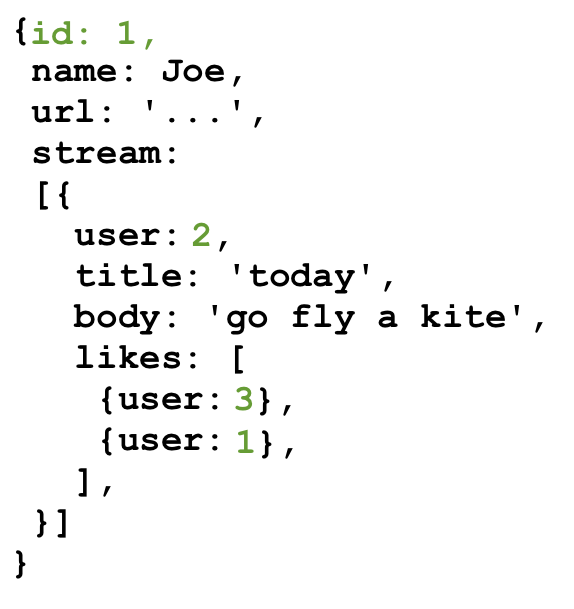

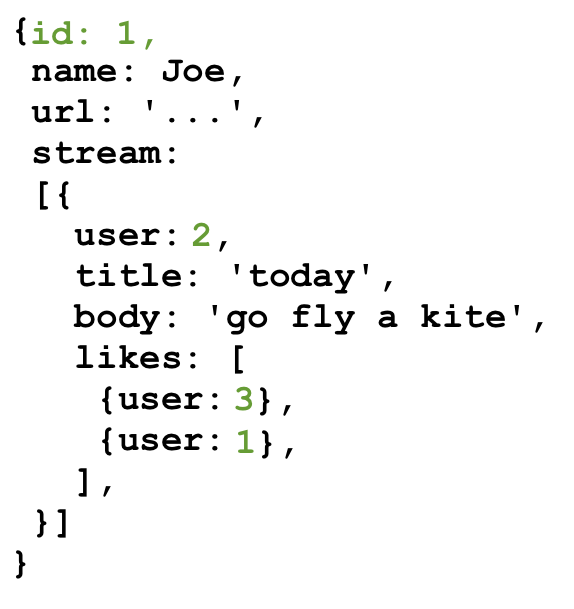

There is another approach to solving the problem in MongoDB, which will be familiar to those who have experience with relational DBMS. Instead of duplicating data, you can save links to users in the activity stream.

With this approach, instead of embedding the data where it is needed, you give each user an ID. After that, instead of embedding user data, you only save links to users. In the picture, the IDs are highlighted in green:

(MongoDB actually uses BSON identifiers - strings similar to GUIDs. The numbers in the picture are for easier reading.)

This eliminates our duplication problem. When changing user data, there is only one document that needs to be changed. However, we have created a new problem for ourselves. Because we can no longer build activity feeds from one document. This is a less efficient and more complex solution. Building an activity feed currently requires us to 1) get an activity feed document, and then 2) get all user documents to fill out names and avatars.

What MongoDB lacks is a join operation as in SQL, which allows you to write a single query that combines the activity feed and all users that have links from the feed. In the end, you have to manually make joins in the application code.

Back to the series for a second. A lot of relationships for TV shows do not have many difficulties. Because all the rectangles in the relationship diagram are different entities, the entire query can be denormalized into one document, without duplication and without links. In this database, there are no links between documents. Therefore, no joins are required.

There are no self-sufficient entities in a social network. Each time you see a username or avatar, you expect that you can click and see the user profile, its posts. TV shows do not work that way. If you are on episode 1 season 1 of the Babylon 5 series, you do not expect that there will be an opportunity to go on episode 1 season 1 of General Hospital.

After we started making ugly joins manually in Diaspora code, we realized that this is only the first sign of problems. It was a signal that our data is actually relational, that there is value in this connection structure, and we are moving against the basic idea of documentary DBMSs.

Whether you duplicate important data (pah), or use links and make joins in the application code (twice pah), if you need links between documents, then you have outgrown MongoDB. When MongoDB apologists say “documents,” they mean things that you can print on paper and work that way. Documents have an internal structure - headings and subheadings, paragraphs and footers, but do not have links to other documents. Self-contained element of poorly structured data.

If your data looks like a set of paper documents - congratulations! This is a good case for Mongo. But if you have links between documents, then you actually do not have documents. MongoDB in this case is a bad solution. For social data, this is a really bad decision, as the most important part is the relationship between the documents.

Therefore, social data is not documentary. Does that mean that social data is ... relational?

When people say "social data is not relational," this does not mean what they mean. They mean one of two things:

This is absolutely true. But there are actually very few concepts in the world that, of course, can be modeled as normalized tables. We use this structure because it is effective because it avoids duplication, and because when it really becomes slow, we know how to fix it.

This is also absolutely true. When your social data is in relational storage, you need to make a connection of many tables to get a feed of activity for a specific user, and that is slower with the growth of the table. However, we have a well-understood solution to this problem. It is called caching.

At the All Your Base Conf conference in Oxford, where I gave a talk on the topic of this post, Neha Narula presented a great caching talk, which I recommend watching. In short, caching normalized data is a complex but well-studied problem. I saw projects in which the activity tape was denormalized in a documentary DBMS, like MongoDB, which allowed me to get data much faster. The only problem is cache invalidation.

It turns out that invalidating the cache is actually quite difficult. Phil Carleton has written most of SSL for version 3, X11, and OpenGL, so he knows a little about computer science.

But what is cache invalidation, and why is it so complicated?

Cache invalidation is knowing when your cache data is outdated and you need to update or replace it. Here is a typical example that I see every day in web applications. We have long-term storage, usually PostgreSQL or MySQL, and in front of them we have a caching layer based on Memcached or Redis. The request to read the user's activity feed is processed from the cache, and not directly from the database, which makes the query execution very fast.

Record- a much more complex process. Suppose a user with two friends creates a new post. The first thing that happens is the post is written to the database. After that, the background thread writes the post to the cached activity stream of both users who are friends of the author.

This is a very common pattern. The tweeter holds in the in-memory cache the tapes of the last active users, to which posts are added when one of the followers creates a new post. Even small applications that use something like activity feeds do this (see: joining seven tables).

Let's get back to our example. When an author changes an existing post, the update is processed in the same way as the creation, except that the item in the cache is updated and not added to the existing one.

What happens if a background thread updating the cache breaks in the middle? The server may crash, the network cables will disconnect, the application will restart. Instability is the only stable fact in our work. When this happens, the data in the cache becomes inconsistent. Some copies of the posts have the old name, while others have a new one. This is not an easy task, but with a cache, there is always a nuclear option.

You can always completely remove an item from the cache and rebuild it from the agreed long-term storage.

But what if there is no long-term storage? What if cache is the only thing you have?

This is the case with MongoDB. This is a cache, without long-term consistent storage. And he will definitely becomeinconsistent. Not “eventually consistent ,” but simply inconsistent all the time (This is not so difficult to achieve, it is enough that updates occur more often than the average time to reach a consistent state - approx. Per. ). In this case, you have no options, not even a “nuclear” one. You have no way to rebuild the cache in a consistent state.

When Diaspora decided to use MongoDB, they combined the base with the cache. Database and cache are very different things. They are based on a different concept of stability, speed, duplication, relationships and data integrity.

Once we realized that we accidentally chose a cache for the database, what could we do?

Well, that is a million dollar question. But I already answered the billion dollar question. In this post, I talked about how we used MongoDB compared to what it was designed for. I talked about it as if all the information was obvious, and the Dispora team was simply not able to conduct the research before choosing.

But it was not at all obvious. The MongoDB documentation says that is good, and generally does not say that is not good. This is natural. Everyone does it. But as a result, it took about 6 months and a lot of user complaints and a lot of investigation to find out that we used MongoDB for other purposes.

There was nothing to do, except for extracting data from MongoDB and placing it in a relational DBMS, solving problems of data inconsistency on the go. The process of extracting data from MongoDB and putting it into MySQL was straightforward. More details in the report at All Your Base Conf .

We had data for 8 months of work, which turned into 1.2 million rows in MySQL. We spent eight weeks developing code for migration, and when we started the process, the main site went into downtime for 2 hours. This was more than acceptable for a pre-alpha project. We could reduce downtime, but we laid 8 hours, so two hours looked fantastic.

(NOT BAD)

Remember the TV show app? This was an ideal use case for MongoDB. Each series was one self-contained document. There are no references to other documents, there is no duplication, and there is no way to make data inconsistent.

After three months in development, everything worked great with MongoDB. But once on Monday at a planning meeting, a client said that one of the investors wants a new feature. He wants to be able to click on the actor’s name and watch his career in television series. He wants a list of all the episodes in all the series in chronological order in which this actor starred.

We stored each series as a document in MongoDB, containing all the data, including the actors. If this actor met in two episodes, even in one series, the information was stored in two places. We could not even find out that this is one and the same actor, except by comparing the names. To implement the feature, it was necessary to go around all the documents, find and deduplicate all instances of the actors. Uh ... It was necessary to do this at least once, and then maintain the external index of all actors, which will experience the same problems with consistency as any other cache.

The client expects the feature to be trivial. If the data were in relational storage, then it would be true. First of all, we tried to convince the manager that this feature is not needed. But the manager did not bend over and we came up with several cheaper alternatives, such as links to IMDB by the name of the actor. But the company made money from advertising, so they needed the client not to leave the site.

This feature pushed the project to switch to PosgreSQL. After talking with the customer, it turned out that the business sees a lot of value in linking episodes to each other. They envisioned watching series shot by one director and episodes released in one week and much more.

It was ultimately a communication problem, not a technical problem. If this talk that happened earlier, if we took the time to really understand how the client wants to see the data and what they want to do with it, then we probably would have switched to PostgreSQL earlier when there was less data and it was easier.

I learned something from experience: the perfect MongoDB case is narrower than our show data. The only thing that is convenient to store in MongoDB is arbitrary JSON fragments. " Arbitrary " in this context means that you absolutely do not care what's inside JSON. You don’t even look there. there is no scheme, not even an implicit scheme, as was the case in our data on the series. Each document is a set of bytes, and you make no assumptions about what's inside.

At RubyConf, I came across Conrad Irwin , who suggested this scenario. It saved arbitrary data coming from clients as JSON. It is reasonable. The CAP theorem does not matter when your data makes no sense. But in any interesting application, data makes sense.

I heard from many people that MongoDB is used as a replacement for PostgreSQL or MySQL. There are no circumstances in which this might be a good idea. The flexibility of the circuit (in fact the lack of a circuit - approx. Per. ) Looks like a good idea, but in fact it is only useful when your data does not carry value. If you have an implicit scheme, that is, you expect some structure in JSON, then MongoDB is the wrong choice. I suggest taking a look at hstore in PostgreSQL (anyway faster than MongoDB), and learning how to make schema changes. They really aren't that complicated, even in large tables.

When you choose a data warehouse, the most important thing is to understand where the value for the client is in the data and relationships. If you still do not know, then you need to choose what does not drive you into a corner. Pushing arbitrary JSON data into the database seems like a flexible solution, but the real flexibility is simply adding features to the business.

Keep valuables simple.

Thank you for reading here.

Several times I made the wrong choice of DBMS. This story is about one such choice - why we made such a choice, as if we knew that the choice was wrong and how we struggled with it. It all happened on an open source project called Diaspora.

Project

Diaspora is a distributed social network with a long history. A long time ago, in 2010, four New York University students posted a video on Kickstarter asking them to donate $ 10,000 to develop a distributed alternative to Facebook. They sent the link to friends, family and hoped for the best.

But they hit the nail on the head. Another scandal just happened due to privacy on Facebook, and when the dust calmed down they received $ 200,000 investment from 6,400 people for a project that has not yet written a single line of code.

Diaspora was one of the first projects on Kickstarter, which managed to significantly exceed the goal. As a result, they were written about them in the New York Times, which turned into a scandal, because an indecent joke was written on the blackboard against the photo of the team, but no one noticed it until the photo was printed ... in the New York Times. So I found out about this project.

As a result of the success at Kickstarter, the guys quit studying and moved to San Francisco to start writing code. So they ended up in my office. At that time I worked at Pivotal Labs and one of the older brothers of the Diaspora developers also worked at this company, so Pivotal offered the guys jobs, Internet, and, of course, access to the beer fridge. I worked with official clients of the company, and in the evenings I hung out with guys and wrote the code on weekends.

It ended up staying at Pivotal for more than two years. However, by the end of the first summer they had a minimal, but working (in a sense) implementation of a distributed social network on Ruby on Rails, using MongoDB to store data.

Quite a lot of baszvorozov - let's understand.

Distributed social network

If you've seen Facebook, then you know everything you need to know about Facebook. This is a web application, it exists in a single copy and allows you to chat with people. Diaspora's interface looks almost the same as Facebook.

The message line in the middle shows the posts of all your friends, and around it there is a lot of garbage that nobody pays attention to. The main difference between Diaspora and Facebook is invisible to users, this is the "distributed" part.

Disapora infrastructure is not located at the same web address. There are hundreds of independent instances of Diaspora. The code is open, so you can deploy your servers. Each instance is called Pod. It has its own database and its set of users. Each Pod interacts with other Pods, which also have their own base and users.

Pods communicate using an API based on the HTTP protocol (now it is fashionable to call the REST API - approx. Per. ). When you deployed your Pod, it will be pretty boring until you add friends. You can add friends as friends in your Pod, or in other Pods. When someone publishes something, this is what happens:

- The message will be saved in the author’s database.

- Your Pod will be notified through the API.

- The message will be saved in your Pod database.

- In your stream you will see a message along with messages from other friends.

Comments work the same way. Each message can be commented on by users from the same Pod, as well as people from other Pods. Anyone who has permission to view the message will also see all comments. As if everything is happening in one application.

Who cares?

There are technical and legal advantages to this architecture. The main technical advantage is fault tolerance.

(such a fault-tolerant system must be in every office)

If one of the Pods falls, then all the others continue to work. The system causes, and even expects, network sharing. The political consequences of this are, for example, if your country does not allow access to Facebook or Twitter, your local Pod will be available to other people in your country, even if all the others are inaccessible.

The main legal advantage is server independence. Each Pod is a legally independent entity governed by the laws of the country where the Pod is located. Each Pod can also set its own conditions; on most, you do not give away the right to all content, such as on Facebook or Twitter. Diaspora is free software, both in the sense of "free" and in the sense of "independently." Most of those who launch their Pods are very concerned.

This is the system architecture, let's look at the architecture of a single Pod.

This is a Rails application.

Each Pod is a Ruby on Rails application with its own base on MongoDB. In a sense, this is a “typical” Rails application — it has a user interface, a programming API, Ruby logic, and a database. But in all other senses it is not at all typical.

The API is used for mobile clients and for “federation”, that is, for the interaction between the Pods. Distribution adds several layers of code that are not available in a typical application.

And, of course, MongoDB is far from the typical choice for web applications. Most Rails applications use PostgreSQL or MySQL. (as of 2010 - approx. per. )

So here is the code. Consider what data we store.

I do not think this word means what you think it means

“Social Data” is information about our network of friends, their friends and their activities. Conceptually, we think of it as a network - an undirected graph in which we are in the center and our friends are around us.

(Photos from rubyfriends.com. Thanks to Matt Rogers, Steve Klabnik, Nell Shamrell, Katrina Owen, Sam Livingston Gray, Josh Sasser, Akshay Khole, Pradyumna Dandwate and Hefzib Watharkar for contributing to # rubyfriends!)

When we store social data, we save both topology and actions.

For several years now, we have known that social data is not relational; if you store social data in a relational database, then you are doing it wrong.

But what are the alternatives? Some argue that graph databases are best suited, but I will not consider them, since they are too niche for mass projects. Others say documentaries are ideal for social data, and they are mainstream enough for real-world use. Let's look at why people think that MongoDB, rather than PostgreSQL, is much better for social data.

How MongoDB stores data

MongoDB is a document database. Instead of storing data in tables consisting of separate rows , as in relational databases, MongoDB saves data in collections consisting of documents . A document is a large JSON object without a predefined format and scheme.

Let's look at a set of relationships that you need to model. This is very similar to the Pivotal projects for which MongoDB was used, and this is the best use case for a documentary DBMS I have ever seen.

At the root, we have a set of series. Each series can have many seasons, each season has many episodes, each episode has many reviews and many actors. When a user comes to the site, usually he gets to the page of a particular series. The page displays all seasons, episodes, reviews and actors, all on one page. From the point of view of the application, when the user lands on the page we want to get all the information related to the series.

This data can be modeled in several ways. In a typical relational store, each of the rectangles will be a table. You will have a tv_shows table, a seasons table with a foreign key in tv_shows, an episodes table with a foreign key in seasons, reviews and a cast_members table with foreign keys in episodes. Thus, in order to get all the information about the series, you need to join five tables.

We could also model this data as a set of nested objects (a set of key-value pairs). A lot of information about a particular series is one big structure of nested key-value sets. Inside the series, there are many seasons, each of which is also an object (a set of key-value pairs). Within each season, an array of episodes, each of which is an object, and so on. This is how data is modeled in MongoDB. Each series is a document that contains all the information about one series.

Here is an example of a document from one series, Babylon 5:

The series has a name and an array of seasons. Each season is an object with metadata and an array of episodes. In turn, each episode has metadata and arrays of reviews and actors.

It looks like a huge fractal data structure.

(A lot of sets of sets of sets. Delicious fractals.)

All the data needed for the series is stored in one document, so you can very quickly get all the information at once, even if the document is very large. There is a series called “General Hospital”, which has already 12,000 episodes over 50+ seasons. On my laptop, PostgreSQL works for about a minute to get denormalized data for 12,000 episodes, while retrieving a document by ID in MongoDB takes a split second.

So in many ways, this application implements an ideal use case for a document base.

Good. But what about social data?

Right. When you get to a social network, there is only one important part of the page: your activity feed. The activity feed request receives all posts from your friends, sorted by date. Each post contains attachments, such as photos, likes, reposts and comments.

The nested structure of the activity tape looks very much like TV shows.

Users have friends, friends have posts, posts have comments and likes, each of which has a single commentator or liker. From the point of view of connections, this is not much more complicated than the structure of television series. And as in the case of series, we want to get the whole structure at once as soon as the user enters the social network. In a relational DBMS, this would be a join of seven tables to pull all the data.

Joining seven tables, uh. Suddenly saving the entire user feed as a single denormalized data structure, instead of executing joins, looks very attractive. (In PostgreSQL, such joins really work slowly cause pain - approx. Per. )

In 2010, the Diaspora team made such a decision, Esty's articles on the use of documentary DBMSs turned out to be very convincing, even though they publicly abandoned MongoDB later. In addition, at this time, the use of Cassandra on Facebook generated a lot of talk about abandoning relational DBMSs. Choosing MongoDB for Disapora was in the spirit of the time. This was not an unreasonable choice at that time, given the knowledge they had.

What could have gone wrong?

There is a very important difference between the social data of Diaspora and the Mongo-ideal data about the series, which no one noticed at first glance.

With TV shows, each rectangle in the relationship diagram has a different type. Series differ from seasons, differ from episodes, differ from reviews, differ from actors. None of them are even a subtype of another type.

But with social data, some of the rectangles in the relationship diagram are of the same type. In fact, all these green rectangles are of the same type - they are all users of the diaspora.

The user has friends, and each friend can be a user themselves. And it may not be, because it is a distributed system. (This is a whole layer of complexity that I will miss today.) In the same way, commentators and likers can also be users.

Such duplication of types significantly complicates the denormalization of the activity ribbon into a single document. This is because in different places in the document, you can refer to the same entity - in this case, the same user. The user who liked the post can also be the user who commented on other activity.

Duplication of data Duplication of data

We can model this differently in MongoDB. The easiest way is to duplicate data. All information about the user is copied is saved in the like for the first post, and then a separate copy is saved in the comment for the second post. The advantage is that all the data is present wherever you need it, and you can still pull out the entire activity stream in one more document.

This is how denormalized activity ribbon looks like density.

All copies of user data are embedded in the document. This is Joe’s feed and has top-level copies of user data, including his name and URL. His tape contains a post by Jane. Joe liked Jane's post, so that in the varnishes to Jane's message, a separate copy of Joe's data was saved.

You can understand why this is attractive: all the data you need is already located where you need it.

You can also see why this is dangerous. Updating user data means bypassing all activity feeds to change data in all places where it is stored. This is very error prone, and often leads to data inconsistencies and cryptic errors, especially when working with deletions.

Is there really no hope?

There is another approach to solving the problem in MongoDB, which will be familiar to those who have experience with relational DBMS. Instead of duplicating data, you can save links to users in the activity stream.

With this approach, instead of embedding the data where it is needed, you give each user an ID. After that, instead of embedding user data, you only save links to users. In the picture, the IDs are highlighted in green:

(MongoDB actually uses BSON identifiers - strings similar to GUIDs. The numbers in the picture are for easier reading.)

This eliminates our duplication problem. When changing user data, there is only one document that needs to be changed. However, we have created a new problem for ourselves. Because we can no longer build activity feeds from one document. This is a less efficient and more complex solution. Building an activity feed currently requires us to 1) get an activity feed document, and then 2) get all user documents to fill out names and avatars.

What MongoDB lacks is a join operation as in SQL, which allows you to write a single query that combines the activity feed and all users that have links from the feed. In the end, you have to manually make joins in the application code.

Simple denormalized data

Back to the series for a second. A lot of relationships for TV shows do not have many difficulties. Because all the rectangles in the relationship diagram are different entities, the entire query can be denormalized into one document, without duplication and without links. In this database, there are no links between documents. Therefore, no joins are required.

There are no self-sufficient entities in a social network. Each time you see a username or avatar, you expect that you can click and see the user profile, its posts. TV shows do not work that way. If you are on episode 1 season 1 of the Babylon 5 series, you do not expect that there will be an opportunity to go on episode 1 season 1 of General Hospital.

Not. It is necessary. Refer. On the. Documents.

After we started making ugly joins manually in Diaspora code, we realized that this is only the first sign of problems. It was a signal that our data is actually relational, that there is value in this connection structure, and we are moving against the basic idea of documentary DBMSs.

Whether you duplicate important data (pah), or use links and make joins in the application code (twice pah), if you need links between documents, then you have outgrown MongoDB. When MongoDB apologists say “documents,” they mean things that you can print on paper and work that way. Documents have an internal structure - headings and subheadings, paragraphs and footers, but do not have links to other documents. Self-contained element of poorly structured data.

If your data looks like a set of paper documents - congratulations! This is a good case for Mongo. But if you have links between documents, then you actually do not have documents. MongoDB in this case is a bad solution. For social data, this is a really bad decision, as the most important part is the relationship between the documents.

Therefore, social data is not documentary. Does that mean that social data is ... relational?

This word again

When people say "social data is not relational," this does not mean what they mean. They mean one of two things:

1. “Conceptually, social data is more graph than a set of tables.”

This is absolutely true. But there are actually very few concepts in the world that, of course, can be modeled as normalized tables. We use this structure because it is effective because it avoids duplication, and because when it really becomes slow, we know how to fix it.

2. “It is much faster to get all social data when they are denormalized into one document”

This is also absolutely true. When your social data is in relational storage, you need to make a connection of many tables to get a feed of activity for a specific user, and that is slower with the growth of the table. However, we have a well-understood solution to this problem. It is called caching.

At the All Your Base Conf conference in Oxford, where I gave a talk on the topic of this post, Neha Narula presented a great caching talk, which I recommend watching. In short, caching normalized data is a complex but well-studied problem. I saw projects in which the activity tape was denormalized in a documentary DBMS, like MongoDB, which allowed me to get data much faster. The only problem is cache invalidation.

“There are only two difficult tasks in the field of computer science: cache invalidation and inventing names.”

Phil Carleton

It turns out that invalidating the cache is actually quite difficult. Phil Carleton has written most of SSL for version 3, X11, and OpenGL, so he knows a little about computer science.

cache invalidation as a service

But what is cache invalidation, and why is it so complicated?

Cache invalidation is knowing when your cache data is outdated and you need to update or replace it. Here is a typical example that I see every day in web applications. We have long-term storage, usually PostgreSQL or MySQL, and in front of them we have a caching layer based on Memcached or Redis. The request to read the user's activity feed is processed from the cache, and not directly from the database, which makes the query execution very fast.

Record- a much more complex process. Suppose a user with two friends creates a new post. The first thing that happens is the post is written to the database. After that, the background thread writes the post to the cached activity stream of both users who are friends of the author.

This is a very common pattern. The tweeter holds in the in-memory cache the tapes of the last active users, to which posts are added when one of the followers creates a new post. Even small applications that use something like activity feeds do this (see: joining seven tables).

Let's get back to our example. When an author changes an existing post, the update is processed in the same way as the creation, except that the item in the cache is updated and not added to the existing one.

What happens if a background thread updating the cache breaks in the middle? The server may crash, the network cables will disconnect, the application will restart. Instability is the only stable fact in our work. When this happens, the data in the cache becomes inconsistent. Some copies of the posts have the old name, while others have a new one. This is not an easy task, but with a cache, there is always a nuclear option.

You can always completely remove an item from the cache and rebuild it from the agreed long-term storage.

But what if there is no long-term storage? What if cache is the only thing you have?

This is the case with MongoDB. This is a cache, without long-term consistent storage. And he will definitely becomeinconsistent. Not “eventually consistent ,” but simply inconsistent all the time (This is not so difficult to achieve, it is enough that updates occur more often than the average time to reach a consistent state - approx. Per. ). In this case, you have no options, not even a “nuclear” one. You have no way to rebuild the cache in a consistent state.

When Diaspora decided to use MongoDB, they combined the base with the cache. Database and cache are very different things. They are based on a different concept of stability, speed, duplication, relationships and data integrity.

Conversion

Once we realized that we accidentally chose a cache for the database, what could we do?

Well, that is a million dollar question. But I already answered the billion dollar question. In this post, I talked about how we used MongoDB compared to what it was designed for. I talked about it as if all the information was obvious, and the Dispora team was simply not able to conduct the research before choosing.

But it was not at all obvious. The MongoDB documentation says that is good, and generally does not say that is not good. This is natural. Everyone does it. But as a result, it took about 6 months and a lot of user complaints and a lot of investigation to find out that we used MongoDB for other purposes.

There was nothing to do, except for extracting data from MongoDB and placing it in a relational DBMS, solving problems of data inconsistency on the go. The process of extracting data from MongoDB and putting it into MySQL was straightforward. More details in the report at All Your Base Conf .

Damage

We had data for 8 months of work, which turned into 1.2 million rows in MySQL. We spent eight weeks developing code for migration, and when we started the process, the main site went into downtime for 2 hours. This was more than acceptable for a pre-alpha project. We could reduce downtime, but we laid 8 hours, so two hours looked fantastic.

(NOT BAD)

Epilogue

Remember the TV show app? This was an ideal use case for MongoDB. Each series was one self-contained document. There are no references to other documents, there is no duplication, and there is no way to make data inconsistent.

After three months in development, everything worked great with MongoDB. But once on Monday at a planning meeting, a client said that one of the investors wants a new feature. He wants to be able to click on the actor’s name and watch his career in television series. He wants a list of all the episodes in all the series in chronological order in which this actor starred.

We stored each series as a document in MongoDB, containing all the data, including the actors. If this actor met in two episodes, even in one series, the information was stored in two places. We could not even find out that this is one and the same actor, except by comparing the names. To implement the feature, it was necessary to go around all the documents, find and deduplicate all instances of the actors. Uh ... It was necessary to do this at least once, and then maintain the external index of all actors, which will experience the same problems with consistency as any other cache.

See what happens?

The client expects the feature to be trivial. If the data were in relational storage, then it would be true. First of all, we tried to convince the manager that this feature is not needed. But the manager did not bend over and we came up with several cheaper alternatives, such as links to IMDB by the name of the actor. But the company made money from advertising, so they needed the client not to leave the site.

This feature pushed the project to switch to PosgreSQL. After talking with the customer, it turned out that the business sees a lot of value in linking episodes to each other. They envisioned watching series shot by one director and episodes released in one week and much more.

It was ultimately a communication problem, not a technical problem. If this talk that happened earlier, if we took the time to really understand how the client wants to see the data and what they want to do with it, then we probably would have switched to PostgreSQL earlier when there was less data and it was easier.

Study, study and study again

I learned something from experience: the perfect MongoDB case is narrower than our show data. The only thing that is convenient to store in MongoDB is arbitrary JSON fragments. " Arbitrary " in this context means that you absolutely do not care what's inside JSON. You don’t even look there. there is no scheme, not even an implicit scheme, as was the case in our data on the series. Each document is a set of bytes, and you make no assumptions about what's inside.

At RubyConf, I came across Conrad Irwin , who suggested this scenario. It saved arbitrary data coming from clients as JSON. It is reasonable. The CAP theorem does not matter when your data makes no sense. But in any interesting application, data makes sense.

I heard from many people that MongoDB is used as a replacement for PostgreSQL or MySQL. There are no circumstances in which this might be a good idea. The flexibility of the circuit (in fact the lack of a circuit - approx. Per. ) Looks like a good idea, but in fact it is only useful when your data does not carry value. If you have an implicit scheme, that is, you expect some structure in JSON, then MongoDB is the wrong choice. I suggest taking a look at hstore in PostgreSQL (anyway faster than MongoDB), and learning how to make schema changes. They really aren't that complicated, even in large tables.

Find value

When you choose a data warehouse, the most important thing is to understand where the value for the client is in the data and relationships. If you still do not know, then you need to choose what does not drive you into a corner. Pushing arbitrary JSON data into the database seems like a flexible solution, but the real flexibility is simply adding features to the business.

Keep valuables simple.

the end

Thank you for reading here.