Google announces a contest of attacks on machine vision algorithms

Image recognition with neural networks keeps improving, but researchers have not yet overcome some of its fundamental flaws. Where a person clearly sees, say, a bicycle, even an advanced, well-trained AI may see a bird.

Often the cause is so-called adversarial examples, also described as "harmful data" or "malicious instances" (the term has no generally accepted Russian translation). These are inputs that deceive a neural-network classifier by slipping in features of other classes: information that is unimportant and invisible to human perception but significant for machine vision.

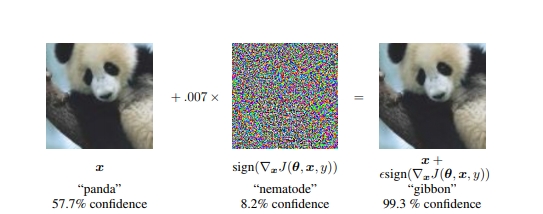

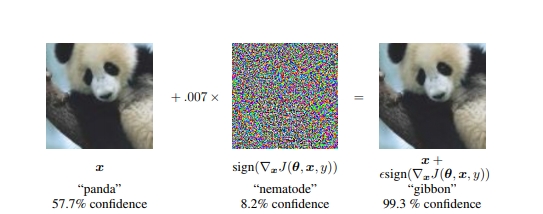

In 2015, Google researchers published a paper that illustrates this problem:

A "harmful" gradient-based perturbation is superimposed on an image of a panda. A person looking at the result still sees a panda, but the neural network classifies it as a gibbon, because features of another class were deliberately blended into exactly those parts of the image that the network learned to use for identifying pandas.
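The idea of a gradient-based perturbation can be sketched with the fast gradient sign method (FGSM), which moves every input feature by a fixed step ε in the direction that increases the classifier's loss. To stay self-contained, this sketch attacks a toy three-feature logistic classifier with hand-picked weights (our assumption), not the image network from the paper; for a deep network the input gradient would come from automatic differentiation rather than the closed form used here.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def score(w: List[float], b: float, x: List[float]) -> float:
    # Linear score s = w.x + b; positive means class +1.
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict(w: List[float], b: float, x: List[float]) -> int:
    return 1 if score(w, b, x) > 0 else -1


def loss_grad_x(w: List[float], b: float, x: List[float], y: int) -> List[float]:
    # Logistic loss L = log(1 + exp(-y*s)); its gradient w.r.t. the input is
    # dL/dx_i = -y * sigmoid(-y*s) * w_i.
    s = score(w, b, x)
    coeff = -y * sigmoid(-y * s)
    return [coeff * wi for wi in w]


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(w: List[float], b: float, x: List[float], y: int, eps: float) -> List[float]:
    # Step each feature by eps in the direction that increases the loss for the true label y.
    g = loss_grad_x(w, b, x, y)
    return [xi + eps * sign(gi) for xi, gi in zip(x, g)]
```

For example, with `w = [1.0, -2.0, 0.5]`, `b = 0.0` and `x = [0.3, -0.1, 0.2]`, the model predicts class 1; `fgsm(w, b, x, 1, 0.2)` changes no feature by more than 0.2, yet the prediction flips to -1. The budget ε is what keeps the change small, which is why the perturbed panda still looks like a panda to a human.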

In areas where machine vision must be extremely accurate and where errors, hacking or deliberate attacks can have serious consequences, adversarial examples are a major obstacle. Progress in defending against them is slow, so Google AI (Google's AI research division) decided to enlist the community and organize a competition.

The company invites everyone to build their own defenses against adversarial examples or, conversely, to craft perfectly corrupted images that no algorithm classifies correctly. The best performers will win a large cash prize (its size has not yet been announced).

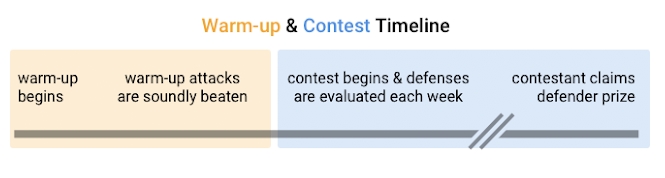

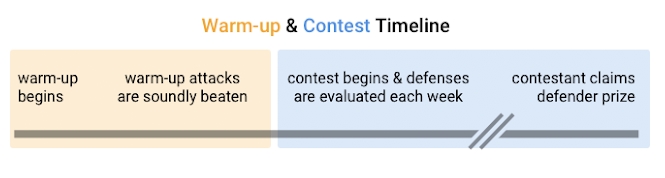

The competition starts with a warm-up in which the first algorithms are tested against simple adversarial attacks. Google chose three datasets with common, well-studied types of attack. Participants must build algorithms that classify every image in them without a single error and without answering "undefined".

Because the attacks behind the warm-up datasets are known and publicly available, the organizers expect participants to build narrowly tailored defenses against them with little effort. They therefore warn that the most obvious existing solutions stand no chance in the second round. That round follows the warm-up and is the competitive part, in which participants split into attackers and defenders.

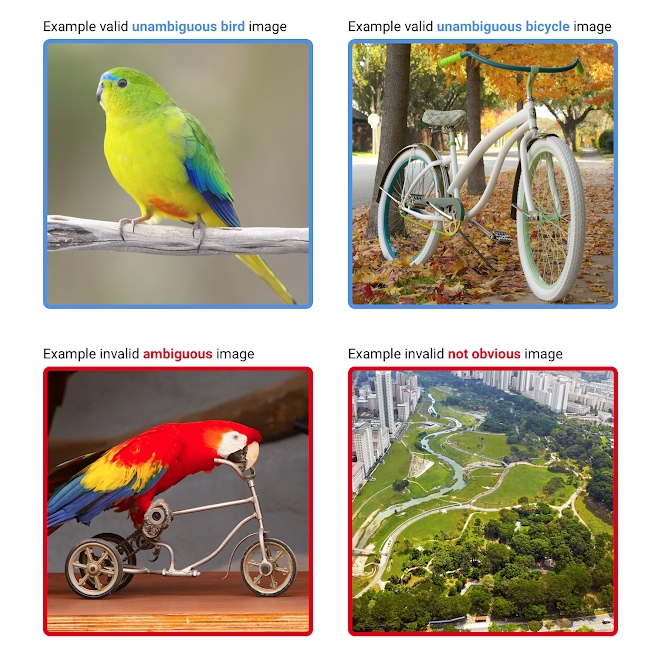

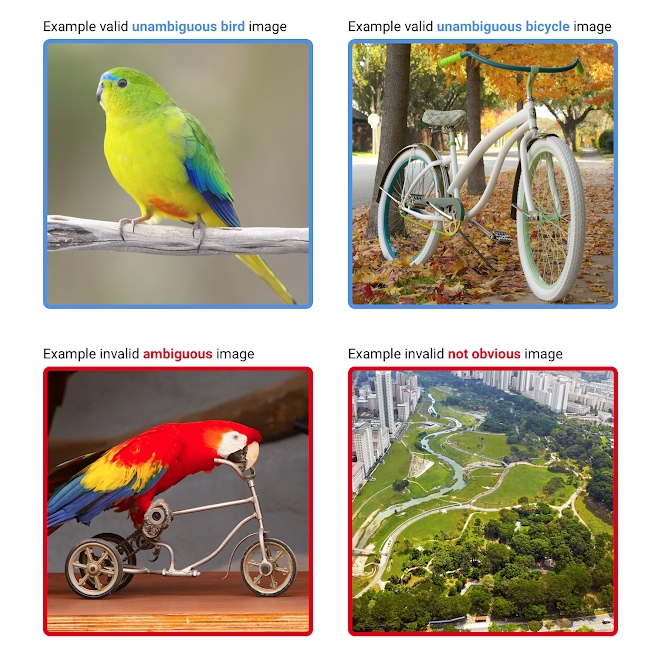

The competition is built around classifying images of birds and bicycles. First, every submitted picture is reviewed by human judges, who give their verdict on what it shows. A picture enters the dataset only if all judges agree that it clearly shows either a bird or a bicycle and there is no obvious ambiguity (for example, birds on bicycles, or abstract patterns and photographs showing neither).
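The inclusion rule amounts to a unanimity filter. A minimal sketch, assuming each judge's verdict is recorded as a string and that any verdict other than "bird" or "bicycle" (for instance a hypothetical "ambiguous") rejects the image; the function name `dataset_label` is illustrative, not part of the challenge code.

```python
from typing import Optional, Sequence

# Hypothetical label vocabulary; any other verdict (e.g. "ambiguous") is not accepted.
ALLOWED_LABELS = ("bird", "bicycle")


def dataset_label(judge_labels: Sequence[str]) -> Optional[str]:
    """Return the agreed label if every judge chose the same allowed class, else None."""
    if not judge_labels:
        return None  # no verdicts, nothing to include
    first = judge_labels[0]
    if first not in ALLOWED_LABELS:
        return None  # e.g. a bird on a bicycle, or an abstract pattern
    if all(label == first for label in judge_labels):
        return first  # unanimous: the image enters the dataset with this label
    return None  # any disagreement rejects the image
```

So `dataset_label(["bird", "bird", "bird"])` yields `"bird"`, while a single dissenting or "ambiguous" verdict yields `None` and the image stays out of the dataset.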

Top: examples of suitable images; bottom: unsuitable ones.

Defending participants must create an algorithm that, without a single error, sorts the pictures into three categories: "bird", "bicycle" and "undefined".

In other words, unlike in the warm-up, at the competitive stage the algorithm may abstain, but over the whole dataset no more than 20% of the images may end up as "undefined".
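One simple way a defense could produce the three categories is to abstain whenever the model's confidence is low. This is a hypothetical sketch: `decide`, `abstention_rate` and the 0.9 threshold are our own illustrative choices, and the input is assumed to be a probability for each of the two classes.

```python
from typing import Dict, List


def decide(probs: Dict[str, float], threshold: float = 0.9) -> str:
    """Map class probabilities to "bird", "bicycle" or "undefined".

    `probs` holds a model's probabilities for "bird" and "bicycle"; `threshold`
    is a hypothetical confidence cut-off below which the defense abstains.
    """
    best = max(probs, key=probs.get)
    return best if probs[best] >= threshold else "undefined"


def abstention_rate(decisions: List[str]) -> float:
    """Fraction of images answered with "undefined"; the rules cap this at 0.2."""
    return sum(d == "undefined" for d in decisions) / len(decisions)
```

Here `decide({"bird": 0.97, "bicycle": 0.03})` returns `"bird"` and `decide({"bird": 0.6, "bicycle": 0.4})` returns `"undefined"`. Raising the threshold trades more abstentions for fewer errors, but thresholding alone is not a robust defense, because adversarial examples can be misclassified with high confidence.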

The technical requirements for the algorithms are as follows:

- At least 80% of the pictures must be classified, and no errors are allowed. Participants who join during the competitive stage must also successfully process the two previous datasets.

- Throughput must be at least 1 image per minute on a Tesla P100.

- The system must be easy to read and written in TensorFlow, PyTorch, Caffe or NumPy. The judges may exclude overly convoluted systems or ones that are hard to build.
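The accuracy, coverage and throughput rules can be combined into one compliance check. This is a hypothetical sketch: `Result` and `meets_requirements` are illustrative names, throughput is passed in as measured seconds per image on the reference GPU, and the rule about processing the two previous datasets is not modeled. Setting `allow_abstain=False` gives the stricter warm-up rule, where every image must be classified correctly.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Result:
    label: str       # label the judges agreed on: "bird" or "bicycle"
    prediction: str  # defense output: "bird", "bicycle" or "undefined"


def meets_requirements(results: List[Result], seconds_per_image: float,
                       allow_abstain: bool = True) -> bool:
    if not results:
        return False
    # An error is any answer that is neither "undefined" nor the correct label.
    errors = sum(1 for r in results if r.prediction not in ("undefined", r.label))
    abstained = sum(1 for r in results if r.prediction == "undefined")
    if errors > 0:
        return False  # no errors allowed in either stage
    if abstained > 0 and not allow_abstain:
        return False  # warm-up: "undefined" is not permitted
    if abstained * 5 > len(results):
        return False  # more than 20% abstained, i.e. fewer than 80% classified
    return seconds_per_image <= 60.0  # at least 1 image per minute
```

Integer arithmetic (`abstained * 5 > len(results)`) is used for the 80% rule so that an exact 80/20 split is not rejected by floating-point rounding.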

If a defensive algorithm survives 90 days without errors, its creators will receive half of the prize pool.

Attackers get access to the untrained models and to the full source code of the algorithms.

Their task is to create a picture that all judges accept as an unambiguous image of a bicycle or a bird, but on which the algorithm makes a wrong decision. Google will collect all submitted images each week, send them for review, and only then add them to the datasets.
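Scoring one weekly batch of attack submissions could look like the following minimal sketch. It assumes each submission carries an id, the judges' verdicts and the image, that the defense is a callable returning "bird", "bicycle" or "undefined", and that an "undefined" answer does not count as a wrong decision (the article does not say how abstentions are treated). The names `unanimous_label` and `successful_attacks` and the tuple layout are hypothetical.

```python
from typing import Callable, List, Optional, Sequence, Tuple

# Hypothetical submission layout: (submission id, judge verdicts, image).
Submission = Tuple[str, Sequence[str], object]


def unanimous_label(judge_labels: Sequence[str]) -> Optional[str]:
    # Accepted only if every judge chose the same class among "bird" and "bicycle".
    if judge_labels and judge_labels[0] in ("bird", "bicycle") and len(set(judge_labels)) == 1:
        return judge_labels[0]
    return None


def successful_attacks(batch: List[Submission],
                       defender: Callable[[object], str]) -> List[str]:
    hits = []
    for sub_id, judge_labels, image in batch:
        truth = unanimous_label(judge_labels)
        if truth is None:
            continue  # rejected by the judges, so it never enters the dataset
        prediction = defender(image)
        if prediction != "undefined" and prediction != truth:
            hits.append(sub_id)  # judges agree, but the defense answered wrongly
    return hits
```

With a trivial defense that always answers "bird", a batch containing a unanimously judged bicycle (`"a"`), a unanimously judged bird (`"b"`) and a disputed image (`"c"`) yields `["a"]`: only the bicycle fools the defense, and the disputed image is discarded before the defense is queried.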

If an attacker manages to fool an algorithm that has passed the previous tasks, they receive money from the second half of the prize pool. If several teams succeed, that money is split among them.

The competition has no fixed dates and will last until the best defensive algorithm emerges. According to the organizers, their goal is not just a system that is hard to circumvent, but a neural network that is completely immune to any attack. Participation guidelines are available on the project page on GitHub.