Migrating from MongoDB Full Text to ElasticSearch

In my last post, announcing the Google Chrome extension for Likeastore, I mentioned that we had started using ElasticSearch as our search index. ElasticSearch gave us quite good performance and search quality, and after that we decided to release the Chrome extension.

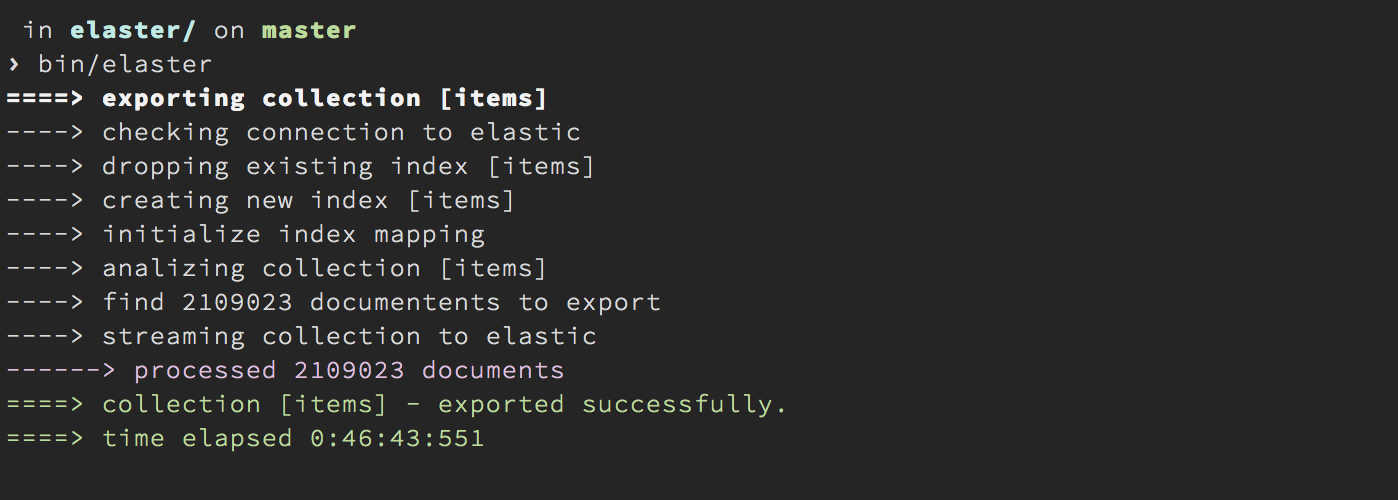

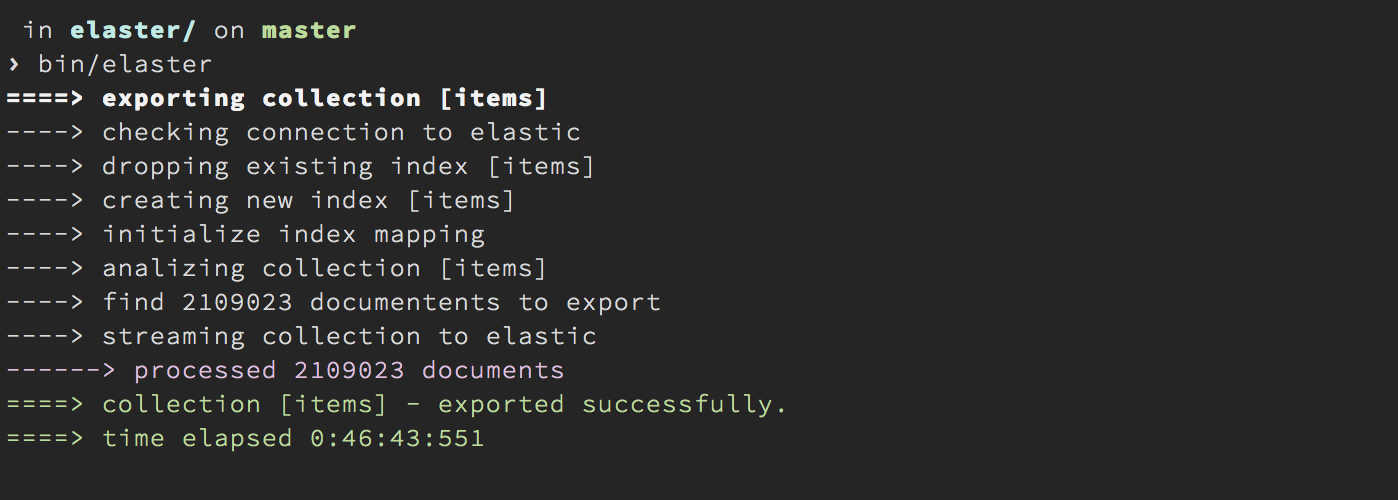

In this post, I'll explain why the MongoDB + ElasticSearch combination is an extremely effective NoSQL solution, and how to move to ElasticSearch if you already use MongoDB.

A bit of history

Search is the heart of our application. Being able to quickly find something among thousands of our likes is the reason we started this project.

We had no deep knowledge of full-text search theory, so as a first approach we decided to try MongoDB's full-text search. Although full-text search was still an experimental feature in version 2.4, it worked pretty well, so we left it alone for a while and moved on to more urgent tasks.

Time passed and the database grew. The indexed collection started to get large. Once it reached about 2 million documents, I began to notice a general drop in application performance: the first page took a long time to open, and search was extremely slow.

All this time I had been eyeing specialized search engines such as ElasticSearch, Solr and Sphinx. But, as often happens in a startup, “until the thunder strikes, the peasant won't rewrite” (a twist on the Russian proverb about not crossing yourself until the thunder strikes).

The thunder “struck” two weeks ago: after a publication on one of the websites, we saw a sharp increase in traffic and user activity. New Relic sent scary emails saying that the application wasn't responding, and my own attempts to open it showed that the patient was more alive than dead, but everything was extremely slow.

A quick analysis showed that most HTTP requests were failing with 504 (Gateway Timeout) errors after hitting MongoDB. Our database is hosted on MongoHQ, but my first attempts to open its monitoring console went nowhere: the database was loaded to the limit. When I finally got the console open, I saw that Locked% had shot up to a sky-high 110-140% and was staying there.

The service that collects user likes performs a lot of inserts, and each insert triggers an update of the full-text index. This is an expensive operation, and once we hit certain limits (including server resources), we simply ran into the ceiling.

We had to disable data collection and drop the full-text index. After the restart, Locked% stayed below 0.7%, but when users tried to search for something, we had to answer with an awkward “sorry, search is under maintenance”...

Trying ElasticSearch locally

I decided to see what Elastic is like by trying it on my own machine. For this kind of experiment there has always been, is, and will be Vagrant.

ElasticSearch is written in Java and requires a Java runtime.

> sudo apt-get update

> sudo apt-get install openjdk-6-jre

> sudo add-apt-repository ppa:webupd8team/java

> sudo apt-get update

> sudo apt-get install oracle-java7-installer

You can then check that everything is in order by running

> java -version

Elastic itself is extremely easy to install. I recommend installing it from the Debian package, since that makes it easier to run it as a service rather than as a standalone process.

> wget https://download.elasticsearch.org/elasticsearch/elasticsearch/elasticsearch-1.1.1.deb

> sudo dpkg -i elasticsearch-1.1.1.deb

After that, it is ready to launch:

> sudo update-rc.d elasticsearch defaults 95 10

> sudo /etc/init.d/elasticsearch start

Open the node's HTTP endpoint in a browser (by default ElasticSearch listens on port 9200), and you should get a response along these lines:

{
    "ok" : true,
    "status" : 200,
    "name" : "Xavin",
    "version" : {
        "number" : "1.1.1",
        "build_hash" : "36897d07dadcb70886db7f149e645ed3d44eb5f2",
        "build_timestamp" : "2014-05-05T12:06:54Z",
        "build_snapshot" : false,
        "lucene_version" : "4.5.1"
    },
    "tagline" : "You Know, for Search"
}

Full deployment takes about 10 minutes.
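If you prefer to check the node from node.js rather than a browser, here is a minimal sketch using only Node's built-in `http` module. It assumes the node listens on the default port 9200; `fetchNodeInfo` and `summarizeNodeInfo` are hypothetical helper names, not part of any library.

```javascript
const http = require('http');

// Hypothetical helper: extract the useful fields from the JSON returned
// by the root endpoint (GET /) of an ElasticSearch node.
function summarizeNodeInfo(info) {
  const version = info.version || {};
  return {
    node: info.name,
    esVersion: version.number,
    luceneVersion: version.lucene_version
  };
}

// Hypothetical helper: GET / on the given host (default port 9200 assumed)
// and pass either an error or the summary to callback(err, summary).
function fetchNodeInfo(host, callback) {
  http.get({ host: host, port: 9200, path: '/' }, function (res) {
    let raw = '';
    res.setEncoding('utf8');
    res.on('data', function (chunk) { raw += chunk; });
    res.on('end', function () {
      let info;
      try {
        info = JSON.parse(raw);
      } catch (err) {
        return callback(err);
      }
      callback(null, summarizeNodeInfo(info));
    });
  }).on('error', callback);
}
```

Calling `fetchNodeInfo('localhost', function (err, summary) { ... })` should report the same node name and version numbers that the browser shows.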

Now let's store and search something

ElasticSearch is built on top of Apache Lucene. Lucene is, without exaggeration, a highly sophisticated technology, honed over many years, into which thousands of hours of work by highly skilled engineers have gone. Elastic makes this technology accessible to mere mortals, and does it very well.

I see some parallels between Elastic and CouchDB: both offer an HTTP API, both are schemaless, and both are document-oriented.

After the quick installation, I spent a lot of time reading the manual and watching relevant videos until I understood how to save a document to the index and how to run the simplest search query.

At this point, I used bare curl, just as shown in the documentation.
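For reference, those two curl calls (index a document, then search for it) are plain HTTP requests. The sketch below only builds them as request descriptions; the index name `items`, the type `item`, the sample fields and the choice of a `match` query are assumptions for illustration, and the results can be sent with curl or any HTTP client.

```javascript
// Describe an "index this document" call: PUT /{index}/{type}/{id}.
// Roughly: curl -XPUT 'http://localhost:9200/items/item/1' -d '{...}'
function buildIndexRequest(index, type, id, doc) {
  return {
    method: 'PUT',
    path: '/' + index + '/' + type + '/' + encodeURIComponent(id),
    body: JSON.stringify(doc)
  };
}

// Describe the simplest full-text search: a match query on one field.
// Roughly: curl -XPOST 'http://localhost:9200/items/_search' -d '{...}'
function buildSearchRequest(index, field, text) {
  const match = {};
  match[field] = text;
  return {
    method: 'POST',
    path: '/' + index + '/_search',
    body: JSON.stringify({ query: { match: match } })
  };
}

const indexReq = buildIndexRequest('items', 'item', '1', {
  title: 'Elasticsearch tutorial',
  user: 'someone@example.com'
});
const searchReq = buildSearchRequest('items', 'title', 'tutorial');
console.log(indexReq.method, indexReq.path);   // PUT /items/item/1
console.log(searchReq.method, searchReq.path); // POST /items/_search
```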

But practicing on toy examples for long gets boring. I took a dump of the production MongoDB database, and now I needed to move the entire collection from MongoDB into an ElasticSearch index.

For this migration I wrote a small tool, elaster. Elaster is a node.js application that streams a given MongoDB collection into ElasticSearch, first creating the target index and initializing it with a mapping.
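elaster's actual mapping isn't shown in this post, so the following is only a sketch of what an ElasticSearch 1.x index body for this collection might look like. The field names are assumptions taken from the fields the queries in this post use (`user`, `title`, `description`, `source`). The detail that matters is `user`: a `term` filter is not analyzed, so it only reliably matches a whole e-mail address if the field is mapped as `not_analyzed`; otherwise the standard analyzer splits the address into several tokens. Such a body could be sent as `PUT /items`, or via the official client's `indices.create({index: 'items', body: ...})`.

```javascript
// Sketch of an index-creation body in ElasticSearch 1.x syntax
// ("string" + "not_analyzed"; later versions use "keyword" instead).
// The field list is an assumption, not elaster's real mapping.
function buildItemsIndexBody(type) {
  const mappings = {};
  mappings[type] = {
    properties: {
      // Exact-match field for the per-user term filter: keep the e-mail intact.
      user: { type: 'string', index: 'not_analyzed' },
      // Full-text fields, analyzed with the default (standard) analyzer.
      title: { type: 'string' },
      description: { type: 'string' },
      source: { type: 'string' }
    }
  };
  return { mappings: mappings };
}

const body = buildItemsIndexBody('item');
console.log(JSON.stringify(body.mappings.item.properties.user));
// {"type":"string","index":"not_analyzed"}
```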

This process is not very fast (I have a couple of ideas for improving elaster), but after about 40 minutes all the records from MongoDB were in ElasticSearch, and I could start trying out searches.
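The core of such a migration is turning a stream of MongoDB documents into bulk requests of bounded size. Below is a minimal sketch of that step, not elaster's actual code: `bulkBatches` is a hypothetical generator that accepts any iterable of documents (in a real script, the documents read from a MongoDB cursor) and yields one bulk body per batch, ready for a call like `elastic.bulk({body: batch}, ...)`. The batch size is an assumption to tune.

```javascript
// Hypothetical helper: group documents into ElasticSearch bulk bodies.
// Each document becomes two entries: an action line and the source itself.
function* bulkBatches(docs, index, type, batchSize) {
  let batch = [];
  for (const doc of docs) {
    // Copy the document without _id: the id goes into the action metadata,
    // and newer ElasticSearch versions reject _id inside the source.
    const source = Object.assign({}, doc);
    delete source._id;
    batch.push({ index: { _index: index, _type: type, _id: String(doc._id) } });
    batch.push(source);
    if (batch.length >= batchSize * 2) {
      yield batch;
      batch = [];
    }
  }
  // Flush the remainder; a bulk request with no actions fails validation.
  if (batch.length > 0) {
    yield batch;
  }
}

const docs = [1, 2, 3, 4, 5].map(function (n) {
  return { _id: 'id' + n, title: 'item ' + n };
});
const batches = Array.from(bulkBatches(docs, 'items', 'item', 2));
console.log(batches.length);    // 3
console.log(batches[2].length); // 2
```

Bounding the batch size keeps each HTTP request and the memory held by the script small, regardless of how large the collection is.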

Create a search query

Queries to ElasticSearch are written in the Query DSL. Syntactically it is ordinary JSON, but writing an effective query takes experience, practice and knowledge. I honestly admit I don't have them yet, so my first attempt looked like this:

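// Assumes the official "elasticsearch" npm client, pointed at a local node.
var elasticsearch = require('elasticsearch');
var elastic = new elasticsearch.Client({host: 'localhost:9200'});

function fullTextItemSearch (user, query, paging, callback) {
    // An empty query has no results; skip the round trip to ElasticSearch.
    if (!query) {
        return callback(null, { data: [], nextPage: false });
    }

    var page = paging.page || 1;

    elastic.search({
        index: 'items',
        from: (page - 1) * paging.pageSize,
        size: paging.pageSize,
        body: {
            query: {
                // ElasticSearch 1.x "filtered" query: full-text search,
                // restricted to the current user's items by a term filter.
                filtered: {
                    query: {
                        'query_string': {
                            query: query
                        }
                    },
                    filter: {
                        term: {
                            user: user.email
                        }
                    }
                }
            }
        }
    }, function (err, resp) {
        if (err) {
            return callback(err);
        }

        // Return the stored documents of the matching hits.
        var items = resp.hits.hits.map(function (hit) {
            return hit._source;
        });

        callback(null, {data: items, nextPage: items.length === paging.pageSize});
    });
}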

This is a filtered query with paging that returns results for a single user. When I ran it, I was amazed at how good Elastic is: the query took 30-40 ms, and even without any fine-tuning I was pleased with the results!
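The paging in this function maps a 1-based page number onto ElasticSearch's `from`/`size`, and assumes there is a next page whenever a full page came back. That assumption reports a next page that turns out empty when the number of matches is an exact multiple of the page size. Every search response also carries the total match count in `resp.hits.total` (a plain number in 1.x; newer versions return an object), so a slightly more precise variant is sketched below. `pageWindow` and `hasNextPage` are hypothetical helpers.

```javascript
// Translate a 1-based page number into ElasticSearch's from/size window.
function pageWindow(page, pageSize) {
  const p = Math.max(1, page || 1);
  return { from: (p - 1) * pageSize, size: pageSize };
}

// There is another page only if this page did not reach the total hit count.
// `total` is resp.hits.total (a plain number in ElasticSearch 1.x).
function hasNextPage(from, returnedCount, total) {
  return from + returnedCount < total;
}

const w = pageWindow(3, 10);
console.log(w);                       // { from: 20, size: 10 }
console.log(hasNextPage(20, 10, 30)); // false: exactly 30 hits, page 3 is the last
console.log(hasNextPage(20, 10, 31)); // true
```

In `fullTextItemSearch`, `nextPage` would then be computed from `resp.hits.total` instead of comparing `items.length` with `paging.pageSize`.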

ElasticSearch also includes a highlighting API for marking up matches in the results. Extend the request like this:

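elastic.search({
    index: 'items',
    from: (page - 1) * paging.pageSize,
    size: paging.pageSize,
    body: {
        query: {
            filtered: {
                query: {
                    'query_string': {
                        query: query
                    }
                },
                filter: {
                    term: {
                        user: user.email
                    }
                }
            }
        },
        // Ask for highlighted fragments of these fields (default highlighter settings).
        highlight: {
            fields: {
                description: { },
                title: { },
                source: { }
            }
        }
    }
}, function (err, resp) {
    // Same handling as in fullTextItemSearch; each hit now also carries
    // a "highlight" object next to "_source".
});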

In the response, each hit will contain a nested highlight object with HTML fragments ready to use on the front end, so matches can be shown highlighted in the search results.
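Each hit's `highlight` object maps a field name to an array of fragments, with matches wrapped in `<em>` tags by default. A front end typically shows the highlighted fragments where they exist and falls back to the stored value otherwise. A minimal sketch of that merge, with `toDisplayItem` as a hypothetical name and made-up sample data:

```javascript
// Merge highlighted fragments into a copy of the stored document.
// hit._source holds the original fields; hit.highlight (if present) maps
// field names to arrays of HTML fragments such as "an <em>elastic</em> search".
function toDisplayItem(hit, separator) {
  const item = Object.assign({}, hit._source);
  const highlight = hit.highlight || {};
  Object.keys(highlight).forEach(function (field) {
    item[field] = highlight[field].join(separator || ' ... ');
  });
  return item;
}

const hit = {
  _id: '42',
  _source: { title: 'Intro to search engines', description: 'Lucene basics', source: 'example.com' },
  highlight: { title: ['Intro to <em>search</em> engines'] }
};
console.log(toDisplayItem(hit).title);       // Intro to <em>search</em> engines
console.log(toDisplayItem(hit).description); // Lucene basics
```

For long fields the fragments are excerpts rather than the whole value. Since they are raw HTML, it may be worth looking at the highlighter's `encoder: 'html'` option, which escapes the surrounding text, before inserting fragments into a page.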

Modifying the application code

Once basic search was working, I had to make sure that all new data arriving in MongoDB (our primary storage) also "flows" into ElasticSearch.

There are special plugins for this, the so-called rivers, and there are quite a lot of them for different databases. For MongoDB, the most widely used one is here.

The MongoDB river works by tailing the oplog in the local database and translating oplog events into ElasticSearch commands. In theory, it's all simple. In practice, I couldn't get the plugin working with MongoHQ (most likely my own clumsy hands were to blame, since the Internet is full of accounts of people using it successfully).
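To make the river idea concrete: each oplog entry records an operation `op` (`'i'` insert, `'u'` update, `'d'` delete), a namespace `ns` (`database.collection`) and the affected document `o`; for updates, `o2` holds the `_id` selector. The sketch below translates such entries into bulk commands. It is a simplified illustration, not the river plugin's implementation: it skips updates that use operators like `$set`, which would require re-reading the full document from MongoDB, and the namespace `likeastore.items` and the index/type names are hypothetical.

```javascript
// Translate one MongoDB oplog entry into ElasticSearch bulk commands.
// Returns an array (possibly empty) of bulk body entries.
function oplogToBulk(entry, ns, index, type) {
  if (entry.ns !== ns) {
    return []; // an operation on some other collection
  }
  if (entry.op === 'i') {
    return [{ index: { _index: index, _type: type, _id: String(entry.o._id) } }, withoutId(entry.o)];
  }
  if (entry.op === 'd') {
    return [{ delete: { _index: index, _type: type, _id: String(entry.o._id) } }];
  }
  if (entry.op === 'u' && !Object.keys(entry.o).some(isOperator)) {
    // Full-document replacement: the new document can be indexed as-is.
    return [{ index: { _index: index, _type: type, _id: String(entry.o2._id) } }, withoutId(entry.o)];
  }
  // Operator updates ($set, $inc, ...) and other ops are skipped in this sketch.
  return [];
}

function isOperator(key) {
  return key.charAt(0) === '$';
}

function withoutId(doc) {
  const copy = Object.assign({}, doc);
  delete copy._id;
  return copy;
}

const entries = [
  { op: 'i', ns: 'likeastore.items', o: { _id: 'a1', title: 'first' } },
  { op: 'u', ns: 'likeastore.items', o2: { _id: 'a1' }, o: { $set: { title: 'changed' } } },
  { op: 'd', ns: 'likeastore.items', o: { _id: 'a1' } }
];
const commands = entries.reduce(function (acc, e) {
  return acc.concat(oplogToBulk(e, 'likeastore.items', 'items', 'item'));
}, []);
console.log(commands.length); // 3: index action + source, then a delete action
```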

In my case, it turned out to be much easier to go another way. Since I have only one collection, and it only sees inserts and finds, it was simpler to modify the application code so that right after inserting into MongoDB it issues a bulk command to ElasticSearch.

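var async = require('async');

// Collection pipeline: each step passes its results on to the next one.
async.waterfall([
    readUser,
    executeConnector,
    findNew,
    saveToMongo,
    saveToElastic,
    saveState
], function (err, results) {
    // An error from any step ends up here; handle or log it.
});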

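The saveToElastic function:

function saveToElastic(items, callback) {
    // Assumes saveToMongo passes the inserted items to this step.
    // Each item becomes two bulk entries: an "index" action carrying the
    // MongoDB _id as the ElasticSearch id, followed by the document itself.
    // Note: ElasticSearch rejects a bulk request with no actions, so this
    // expects items to be non-empty.
    var commands = [];

    items.forEach(function (item) {
        commands.push({'index': {'_index': 'items', '_type': 'item', '_id': item._id.toString()}});
        commands.push(item);
    });

    elastic.bulk({body: commands}, callback);
}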

I don't rule out that in more complex scenarios a river would be the better choice.

Deploying to production

Once the local experiment was complete, it was time to deploy all of this to production.

I created a new droplet on DigitalOcean (2 CPUs, 2 GB RAM, 40 GB SSD) and repeated essentially all the steps described above on it: installed ElasticSearch, node.js and git, installed elaster, and started the data migration.

As soon as the new instance was up and loaded with data, I restarted the data collection services and the Likeastore API with the code modified for Elastic. Everything went very smoothly, with no surprises in production.

Results

To say that I'm pleased with the switch to ElasticSearch is an understatement. It is truly one of the few technologies that works out of the box.

Elastic made it possible to build a browser extension for quick search, and to offer advanced search (by date, content type, etc.).
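As an illustration of such advanced search, the ElasticSearch 1.x `filtered` query used earlier can combine several filters in a `bool` filter: the existing per-user `term` filter, a `term` filter on content type and a `range` filter on date. The field names `type` and `created`, the sample values and the helper `buildAdvancedSearchBody` are hypothetical; they assume such fields exist in the index (and that `term`-filtered fields are mapped as `not_analyzed`).

```javascript
// Build a filtered query: full-text search plus optional type/date filters.
// Field names "type" and "created" are assumptions about the indexed documents.
function buildAdvancedSearchBody(options) {
  const filters = [{ term: { user: options.user } }];
  if (options.type) {
    filters.push({ term: { type: options.type } });
  }
  if (options.since || options.until) {
    const range = {};
    if (options.since) range.gte = options.since;
    if (options.until) range.lte = options.until;
    filters.push({ range: { created: range } });
  }
  return {
    query: {
      filtered: {
        query: { query_string: { query: options.text } },
        filter: { bool: { must: filters } }
      }
    }
  };
}

const searchBody = buildAdvancedSearchBody({
  user: 'someone@example.com',
  text: 'mongodb',
  type: 'github',
  since: '2014-01-01'
});
console.log(JSON.stringify(searchBody.query.filtered.filter.bool.must));
// [{"term":{"user":"someone@example.com"}},{"term":{"type":"github"}},{"range":{"created":{"gte":"2014-01-01"}}}]
```

The resulting object would be passed as `body` to `elastic.search`, just like the body in the search function above.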

That said, I'm still a complete beginner with this technology. The feedback we've received since switching to Elastic and releasing the extension clearly shows that improvements are needed. If anyone is willing to share their experience, I'd be very happy.

MongoDB can finally breathe freely: Locked% stays at 0.1% and doesn't climb, which keeps the application truly responsive.

If you are still using MongoDB full-text search, I hope this post inspires you to move to ElasticSearch.